Abstract

This study investigates the impact of introducing a mandatory practical programming exam on student learning outcomes in introductory programming courses. To facilitate structured coding practice and scalable automated feedback, we developed Programmers’ Interactive Virtual Onboarding (PIVO), a novel Automated Programming Assessment System (APAS). Traditional programming curricula often prioritize theoretical concepts, limiting practical coding opportunities and immediate feedback, which results in poor skill retention and proficiency. By integrating mandatory practical assessments with voluntary, self-driven programming tasks through PIVO, we aimed to enhance student engagement, programming proficiency, and overall academic performance. Results show a substantial reduction in failure rates following the introduction of the practical exam, and statistical analyses revealed moderate positive correlations between students’ voluntary engagement in non-mandatory coding exercises and their performance in both theoretical and practical examinations. These findings indicate an association among engagement in structured, automated practice assessments, algorithmic thinking, and problem-solving capabilities.

1. Introduction

Freshmen in science, technology, engineering, mathematics, and arts often struggle to succeed in programming courses, primarily due to misconceptions about the learning process (Gomes & Mendes, 2007). Traditional university and school courses typically emphasize skills such as memorization, data analysis, and information extraction. Programming, however, requires these abilities in addition to strong skills in problem fragmentation and solution synthesis (Sabarinath & Quek, 2020; Wang et al., 2016).

Computer programming is a skill that can, and should, be learned, because it enables learners to break down complex problems and build effective engineering, scientific, or organizational solutions (Sabarinath & Quek, 2020). Programming is not merely a technical goal; it is a key to unlocking unforeseen intellectual abilities.

Universities worldwide incorporate computer programming courses into their curricula (Belmar, 2022; Sobral, 2021). Mastery of programming fosters the development of intellectual skills, such as complex problem solving, pattern recognition, building different layers of abstraction, (programming) language learning, algorithm (procedure) design, and others. These skills benefit both students and instructors, complementing traditional academic disciplines (Belmar, 2022; Grover & Pea, 2018).

The introduction of computer science courses into traditional engineering and arts programs often attempts to fit this multidimensional field into outdated teaching structures. Although programming instructors are typically experts, they are often constrained to two-hour weekly lectures and two to three hours of exercises. Programming, however, cannot be learned effectively with such limited practice, especially when students only passively follow someone else’s practice. Algorithmic thinking and programming require interactive learning processes with extensive trial-and-error feedback.

2. Study Aim and Research Questions

2.1. Scalable Feedback Systems

Establishing an effective learning feedback system on a large scale is challenging, particularly when serving approximately 500 students in a single semester. Many universities rely on only 1–2 full-time instructors and 2–3 part-time instructors for practical courses and examination, a situation common in underfunded technical faculties.

To provide students with more frequent feedback on their progress, Automated Programming Assessment Systems (APAS) are increasingly being introduced into algorithms and programming courses. APAS are widely used for homework assessments, lab assignments, exercises, examinations, competitions, and job or position selection.

Many teachers have already reported success in using various APAS for improving programming education (Luxton-Reilly et al., 2018; Mekterović et al., 2020, 2023; Meža et al., 2017; Rajesh et al., 2024; Restrepo-Calle et al., 2019). Some teachers developed their own APAS-class systems to fit their cases and curriculum (Brkić et al., 2024; De Souza et al., 2015; Hellín et al., 2023; Lokar & Pretnar, 2015).

Students in science, technology, engineering, mathematics, and arts disciplines frequently transition into roles as software engineers, whether in large, multi-level corporate environments or dynamic start-ups. The process of creating software in these contexts often involves interpreting documentation (e.g., assignment texts), developing usage examples and tests, crafting solutions, performing extensive testing, and finalizing the product for delivery (e.g., code submission). Instead of building entire solutions independently, engineers are typically responsible for developing and testing small components of larger, more complex projects. These components, often referred to as functions, methods, or subroutines, must be rigorously tested before integration into the larger system (Gao et al., 2016). This divide-and-conquer approach, fragmenting complex problems into manageable pieces, is a cornerstone of engineering practice.

At the Faculty of Electrical Engineering, University of Ljubljana, we embraced this methodology to develop our own APAS, designed to simulate real-world business environments. Named the Programmers’ Interactive Virtual Onboarding (PIVO, originally in Slovene as Programerjevo Interaktivno Vadbeno Okolje), this system provides a practical and industry-relevant platform for teaching and assessing programming skills.

Programmers’ Interactive Virtual Onboarding (PIVO) was developed in 2017, and it was used as a homework assessment system until 2023. In 2024, the system became an integral part of the final examination process.

2.2. Scope of the Study

This case study examines the measured impacts of introducing a compulsory practical exam using PIVO in the subject of Algorithms and Data Structures (Osnove programiranja and Programiranje I) for first-year Bachelor students in Electrical Engineering at the University of Ljubljana.

It is important to note that freshmen at the Faculty of Electrical Engineering are not primarily oriented toward computer science, as dedicated programs are offered by another member faculty of the university. Consequently, these students often approach programming courses with less initial interest or background knowledge in the field, making effective teaching strategies and evaluation methods particularly critical.

Introducing a mandatory practical exam was not a decision taken lightly. The instructional team initially feared that this intervention might significantly increase the course failure rate, potentially overwhelming students and affecting morale. However, it was also clear that encouraging deeper engagement with programming through hands-on practice was a moral and pedagogical necessity. Ultimately, the decision to proceed with the practical exam appears justified, as student performance and involvement improved during the period following its introduction. This case study presents the outcomes of that decision.

The findings from this study provide valuable insights into improving programming education for non-computer-science focused students and offer practical implications for both academic and industry audiences.

2.3. Research Questions

The methodological framework of this study is designed to address two key research questions as follows:

- RQ1: Does the introduction of a mandatory practical exam improve student learning outcomes?

- RQ2: Does engagement in non-mandatory programming assignments correlate with student performance in written and practical exams?

To systematically explore these questions, we define the following research objectives:

- Evaluate the impact of a mandatory practical exam on student performance by comparing success rates before and after its implementation.

- Analyze the relationship between voluntary programming practice and exam performance by measuring correlations between engagement in non-mandatory assignments and results in both theoretical (written) and applied (practical) assessments.

- Assess the effectiveness of self-directed learning opportunities by investigating whether students who voluntarily engage in additional exercises demonstrate stronger problem-solving abilities.

Based on these objectives, we propose the following hypotheses:

- H1: The introduction of a mandatory practical exam is expected to be associated with improved learning outcomes.

- H2: There exists a positive correlation between engagement in non-mandatory programming assignments and exam performance, suggesting that students who practice more tend to achieve higher scores.

These research questions, objectives, and hypotheses guide the structure of our analysis in the subsequent sections.

3. Materials and Methods

3.1. Programmers’ Interactive Virtual Onboarding (PIVO)

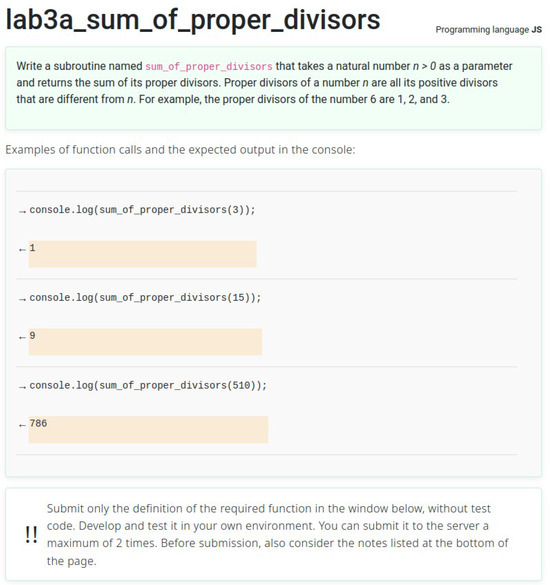

The Programmers’ Interactive Virtual Onboarding (PIVO) platform is an online system that provides registered users with computational problems to solve in a specified programming language (e.g., JavaScript, C). Each assignment comprises two key components: a comprehensive problem description and accompanying test or usage examples (see Figure 1). Users develop their solutions in a development environment of their choice. Typically, a task asks the practitioner to construct a function (also called a method or subroutine) and supplies all the surrounding infrastructure (helper functions, library inclusions, function prototypes), together with typical usage and tests, in the task description. The main part to be constructed is therefore the definition of the requested function(s), which contains the core of the algorithm.

Figure 1.

An example of a simple assignment in the PIVO system. The solution is a JavaScript subroutine that performs the task and passes the given tests.

By design, PIVO does not include built-in editing or debugging tools, as the platform emphasizes the importance of developers becoming proficient with their own development tools. Once a solution is complete, users submit the resulting source code to PIVO for evaluation, simulating the process of shipping code. To encourage careful preparation, submissions are limited to a maximum of two attempts per problem, so that users thoroughly test their code before submitting. After a second failed attempt, no further submissions are accepted and the assignment receives a score of zero.
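The two-attempt rule can be read as a small state machine. The following is a minimal sketch under stated assumptions: the class `SubmissionTracker`, its `submit` method, and the boolean `passesAllTests` verdict are hypothetical names chosen for illustration, not part of the PIVO backend.

```javascript
// Hypothetical sketch of the two-attempt submission policy.
// Names are illustrative; this is not PIVO's actual implementation.
const MAX_ATTEMPTS = 2;

class SubmissionTracker {
  constructor() {
    this.attempts = 0;   // submissions made so far
    this.solved = false; // true once a submission passes all tests
  }

  // Records one submission; `passesAllTests` is the grader's verdict.
  submit(passesAllTests) {
    if (this.solved) return "already solved";
    if (this.attempts >= MAX_ATTEMPTS) return "locked (score 0)";
    this.attempts += 1;
    if (passesAllTests) {
      this.solved = true;
      return "accepted";
    }
    return this.attempts < MAX_ATTEMPTS
      ? "rejected, one attempt left"
      : "locked (score 0)";
  }

  // Full points only for an accepted submission, otherwise zero.
  score(maxPoints) {
    return this.solved ? maxPoints : 0;
  }
}

const t = new SubmissionTracker();
console.log(t.submit(false)); // rejected, one attempt left
console.log(t.submit(false)); // locked (score 0)
console.log(t.submit(true));  // locked (score 0): no third attempt
console.log(t.score(10));     // 0
```

Keeping the counter per problem (one tracker per assignment) matches the per-problem limit described above.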

This submission limit reflects real-world scenarios in which developers often face constraints on revising or resubmitting their work after deployment. The first attempt typically surfaces common errors, such as mistakes made when copy-pasting code into the PIVO interface. The second attempt offers an opportunity for correction but underscores the importance of meticulous testing and preparation before finalizing the code. Over years of PIVO use, we observed that a smaller number of available attempts forces students to think, test, and re-evaluate before submitting. Students tend to test their code more (locally), read debugger output, and discuss problems with peers and teachers during exercises. Submission to PIVO is then treated as the final shipping of the code, with the system acting as our target client or customer.

From a technical perspective, PIVO was validated through extensive internal testing before deployment. All assignments were automatically compiled, executed, and graded in a sandboxed environment identical to the one used in the practical exam. Each test case was manually verified and peer-reviewed by course instructors to ensure deterministic evaluation and to avoid false positives or negatives in scoring. System logs were continuously monitored during both academic years and showed no grading errors or downtime affecting students’ results. Thus, the reliability of the collected engagement and performance data is considered high.

Submitted code is always treated as a serious potential security threat to the system. The system backend (Rojec, 2021) performs an initial analysis of the submitted source code, identifying and removing any prohibited system expressions. It then prepares the test environment by integrating and initializing the input data needed for code execution. The testing process involves initializing variables and objects, executing function calls, and analyzing output directed to the standard console.
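The initial screening step can be illustrated for JavaScript submissions with a simple pattern check. This is a hypothetical sketch: the `PROHIBITED` list and `screenSource` function are assumptions made for illustration, not the rule set of Rojec (2021), and the sketch only flags matches, whereas the backend described above removes them.

```javascript
// Hypothetical pre-execution screen. Patterns are illustrative only and are
// not PIVO's actual rules. A text filter is no substitute for the sandbox.
const PROHIBITED = [
  { label: "require", pattern: /\brequire\s*\(/ },   // loading Node.js modules
  { label: "import", pattern: /\bimport\b/ },        // static or dynamic imports
  { label: "process", pattern: /\bprocess\s*\./ },   // access to the host process
  { label: "eval", pattern: /\beval\s*\(/ },         // evaluating strings as code
  { label: "Function", pattern: /\bFunction\s*\(/ }, // building functions from strings
];

// Returns the labels of all prohibited patterns found in `source`.
function screenSource(source) {
  return PROHIBITED.filter(({ pattern }) => pattern.test(source)).map(
    ({ label }) => label
  );
}

const clean = "function add(a, b) { return a + b; }";
const risky = "const fs = require('fs'); fs.readFileSync('/etc/passwd');";
console.log(JSON.stringify(screenSource(clean))); // []
console.log(JSON.stringify(screenSource(risky))); // ["require"]
```

Because such filters are easy to evade (for example through string concatenation), the container isolation described next remains the actual security boundary.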

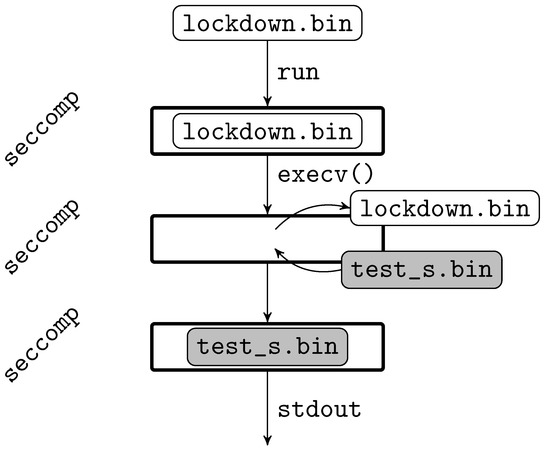

The submitted code is then compiled into machine code (where the programming language requires compilation) and executed in a secure, isolated container. Using technologies such as Docker and Linux seccomp, this containerized environment ensures that the code cannot negatively affect the server’s operation by disabling specific system calls and restricting access to system resources and files. Figure 2 shows the process lockdown steps for ahead-of-time compiled languages such as C: a program containing seccomp rules locks down the process, and execv() then replaces that program with the student’s executable. The platform evaluates the submitted code by monitoring its standard output and comparing the results against predefined expected values.

Figure 2.

Process Locking—The program lockdown.c locks the permissions for the process, while the execv() command replaces the currently running program with the user’s executable code test_s.bin, maintaining the same (parent) process identity. During the execution of test_s.bin, we monitor the contents of the standard output (Rojec, 2021).
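The final evaluation step, comparing printed output with expected values, can be sketched as follows. This is a simplified, hypothetical illustration: `captureOutput` and `compareOutput` are made-up names, and, unlike PIVO, which reads the standard output of a separate sandboxed process, this sketch runs the code in-process and temporarily redirects `console.log`.

```javascript
// Hypothetical, simplified grading step (in-process, for illustration only).
function captureOutput(run) {
  const lines = [];
  const originalLog = console.log;
  // Simplification: String() formatting differs from console.log's own
  // formatting for arrays and objects.
  console.log = (...args) => lines.push(args.map(String).join(" "));
  try {
    run();
  } finally {
    console.log = originalLog; // always restore the real console.log
  }
  return lines;
}

// Compares printed lines with the expected lines of a test case.
function compareOutput(actualLines, expectedLines) {
  const perLine = expectedLines.map((expected, i) => actualLines[i] === expected);
  const allPassed =
    actualLines.length === expectedLines.length && perLine.every(Boolean);
  return { perLine, allPassed };
}

// Usage: a student function and the test calls from a task description.
function square(x) {
  return x * x;
}
const actual = captureOutput(() => {
  console.log(square(3));
  console.log(square(-4));
});
console.log(compareOutput(actual, ["9", "16"]).allPassed); // true
```

Requiring equal line counts prevents a program that prints extra output from passing by accident.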

The system’s test cases are designed to provide a comprehensive and rigorous evaluation of submitted solutions. These include both public test cases, which are visible to the user and provide immediate feedback, and hidden test cases, which remain concealed to ensure the robustness and correctness of the solution. Public cases allow students to verify the basic functionality of their code, while hidden cases prevent superficial or hard-coded solutions.

Without hidden test cases, students could rely on simplistic if–else constructs or other hard-coded logic to satisfy only the provided test cases, rather than developing a generalized, algorithmically sound solution. By incorporating hidden cases, the system compels students to fully analyze the problem, anticipate edge cases, and design solutions that work under a variety of conditions—ultimately reinforcing problem-solving skills and deeper comprehension of programming concepts.
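The effect of hidden test cases can be shown with a toy task. In this hypothetical example (the task, tests, and function names are invented for illustration), a solution hard-coded with `if`–`else` against the public examples passes every public test but none of the hidden ones, while a general solution passes both.

```javascript
// Hypothetical task: return the largest element of a non-empty array.
const publicTests = [
  { input: [3, 7, 2], expected: 7 },
  { input: [5, 1], expected: 5 },
];
const hiddenTests = [
  { input: [-4, -9, -1], expected: -1 }, // all negative
  { input: [42], expected: 42 },         // single element
  { input: [1, 8, 8, 3], expected: 8 },  // repeated maximum
];

// A "solution" hard-coded to the visible examples.
function maxHardCoded(a) {
  if (a[0] === 3) return 7;
  else return 5;
}

// A general solution using only indexing and comparison.
function maxGeneral(a) {
  let best = a[0];
  for (let i = 1; i < a.length; i++) {
    if (a[i] > best) best = a[i];
  }
  return best;
}

// Counts how many tests the function `f` passes.
function countPassed(f, tests) {
  return tests.filter((t) => f(t.input) === t.expected).length;
}

console.log(countPassed(maxHardCoded, publicTests), countPassed(maxHardCoded, hiddenTests)); // 2 0
console.log(countPassed(maxGeneral, publicTests), countPassed(maxGeneral, hiddenTests));     // 2 3
```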

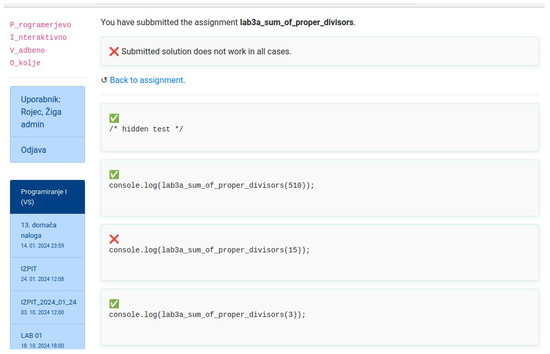

Feedback on the submission’s correctness is provided almost instantaneously, allowing users to determine whether their code has passed the required test cases (see Figure 3). The user interface reports success on each test and provides a simple but clear status bar showing the individual’s progress in the course. According to prior comprehensive studies (Calles-Esteban et al., 2024; Iosup & Epema, 2014; Paiva et al., 2023), such small feedback features improve student motivation and add an element of gamification.

Figure 3.

Feedback after submitting a simple assignment given in the PIVO system.

PIVO was used by over 2,000 students, who collectively submitted more than 36,000 programs for evaluation.

The effects and acceptance of PIVO usage are discussed further in Section 4.

3.2. Curriculum

In this section, we provide a brief overview of the organizational structure of the curriculum and the role of the PIVO system within it.

The curriculum for the introductory programming course (covering introductory topics in algorithms and data structures) during the winter semesters of 2022/2023 and 2023/2024 followed the weekly schedule outlined below:

- two hours of lectures,

- one hour of tutorial sessions, and

- one hour of lab sessions (conducted as two-hour sessions every two weeks).

3.2.1. Exam Enrollment Requirements

To be eligible for the final exam, students were required to fulfill the following conditions:

Laboratory Component

- Attendance at all five laboratory sessions (5/5), and

- Successful submission of assignments for all five sessions (5/5) via the PIVO system.

- Lab assignments were standardized across all students.

Homework Component

- Completion of 12 multiple-choice (MCQ) theoretical questions, and

- Completion of five introductory programming tasks, submitted to the PIVO system.

- Homework assignments were individualized for each student. To pass the homework component, students were required to obtain a minimum of 12 out of 20 available points.
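The eligibility conditions above can be summarized as a single predicate. This is a minimal sketch: the function `isEligibleForExam` and its field names are hypothetical, and only the thresholds (5/5 lab sessions, 5/5 lab submissions, at least 12 of 20 homework points) come from the text.

```javascript
// Hypothetical encoding of the exam-eligibility rules; field names are
// illustrative, thresholds are taken from the course requirements.
function isEligibleForExam(student) {
  const labOk =
    student.labSessionsAttended === 5 && student.labAssignmentsSubmitted === 5;
  const homeworkOk = student.homeworkPoints >= 12; // out of 20 available points
  return labOk && homeworkOk;
}

console.log(isEligibleForExam({ labSessionsAttended: 5, labAssignmentsSubmitted: 5, homeworkPoints: 14 })); // true
console.log(isEligibleForExam({ labSessionsAttended: 5, labAssignmentsSubmitted: 4, homeworkPoints: 20 })); // false
console.log(isEligibleForExam({ labSessionsAttended: 5, labAssignmentsSubmitted: 5, homeworkPoints: 11 })); // false
```

Both components are conjunctive: a perfect homework score cannot compensate for a missed laboratory session.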

3.3. Exercising and Mentoring Environment

In addition to the obligatory exercises, which were a mandatory prerequisite for exam enrollment, students were provided with an extensive set of non-mandatory homework assignments. During the winter semester of 2023/2024, more than 50 programming tasks of moderate to high complexity were made available through the PIVO system. These tasks were designed to comprehensively cover the majority of the methods and approaches discussed in the lectures. Importantly, students were neither explicitly encouraged nor discouraged from engaging with these additional assignments, allowing for a self-regulated learning environment.

To ensure that students became well-acquainted with the PIVO system and its functionalities, exposure was facilitated through multiple avenues as follows:

- Laboratory sessions: Hands-on practice sessions where students worked on practical exercises under supervision

- Obligatory homework assignments: Four structured assignments comprising easy to moderately difficult tasks, forming part of the required coursework

- Non-mandatory homework assignments: A set of over 50 programming tasks ranging from moderate to high difficulty, aimed at reinforcing students’ understanding of advanced concepts.

This multi-faceted approach provided students with ample opportunities to familiarize themselves with the practical aspects of the course. The availability of a large number of voluntary assignments ensured that motivated students could deepen their understanding beyond the core curriculum.

Further analysis of student engagement and its correlation with academic success is discussed in Section 4. Additionally, throughout the semester, particularly during laboratory sessions, mentors were available to assist students, guiding them through the problem-solving process and addressing any technical or conceptual difficulties they encountered.

3.4. Exam Format Semester 2022/2023

While the curriculum framework and examination standards were identical in both years to ensure a fair comparison, the minimum requirements for passing the exam differed. The final examination for the winter semester 2022/2023 was structured as follows:

- Probing Exam (Optional): Students completed two simple programming assignments during the probing exam (on the PIVO system, offline, and without any aids). Successfully completing these assignments awarded extra points, which raised the final score if the student passed the written exam.

- Written Exam: The main component of the final exam, consisting of 15 multiple-choice questions focused on code analysis (strictly on paper). The exam had a maximum duration of 50 min. Students scoring more than 50% on this component passed, and in that case any points earned in the probing exam were added on top.

3.5. Exam Format Semester 2023/2024

The final examination for the winter semester 2023/2024 included both written and practical components, structured as follows:

- Part I—Written Exam: The first part consisted of 15 multiple-choice questions focused on code analysis (strictly on paper), with a maximum duration of 50 min. Students scoring more than 50% on this component were eligible to proceed to the practical exam.

- Part II—Practical Exam: The second part involved a unique set of three programming assignments for each student, at three difficulty levels (easy, medium, and hard). To pass, students were required to score more than 50% on the written exam and, in addition, to successfully complete at least one of the practical assignments. Completing more assignments yielded a higher final score.
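The difference between the two years’ pass conditions can be stated compactly. In this hypothetical sketch, the function names and parameters are illustrative; the thresholds (written score above 50%, at least one solved practical task) come from Sections 3.4 and 3.5. Final grading beyond pass or fail is not modelled.

```javascript
// Hypothetical sketch of the pass conditions in the two academic years.
// `writtenPercent`: written-exam score in percent.
// `solvedTasks`: practical tasks solved (0 to 3 in 2023/2024).
function passed2022_23(writtenPercent) {
  // Probing-exam bonus points were added only after a pass,
  // so they never change the pass/fail decision itself.
  return writtenPercent > 50;
}

function passed2023_24(writtenPercent, solvedTasks) {
  // The written exam unlocks the practical part, where at least
  // one of the three tasks must be solved.
  return writtenPercent > 50 && solvedTasks >= 1;
}

console.log(passed2022_23(60));    // true
console.log(passed2023_24(60, 0)); // false
console.log(passed2023_24(60, 1)); // true
console.log(passed2023_24(45, 3)); // false
```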

An important note on the practical examination: the laboratory room used for the exam is under staff control, with a minimum of two supervisors and up to 20 candidates. Candidates work on laboratory computers (Debian Linux with KDE Plasma) connected through a central Ethernet switch and a firewall that whitelists only the IP address and port of the PIVO APAS server. Internet access is therefore completely blocked, and file sharing is disabled. No other aids are allowed except a blank A4 sheet of paper for sketching. Under these rules, candidates have no access to external servers or plugins.

Examples of assignments given in the practical part of the exam (Part II) are shown below.

(Easy assignment) Write a subroutine named binaryRepresentation that takes an array b as a parameter, which contains the binary representation of a non-negative integer. The array b consists of a sequence of ones (1) and zeros (0), ending with a sentinel value of −1. The subroutine should return the decimal value of the number represented in the array b. Examples:

```javascript
let bin = [1, 0, 0, -1];
console.log(binaryRepresentation(bin)); // expected: 4
bin = [1, 0, 0, 1, 1, 0, -1];
console.log(binaryRepresentation(bin)); // expected: 38
bin = [0, 0, -1];
console.log(binaryRepresentation(bin)); // expected: 0
bin = [1, 1, 0, 1, -1];
console.log(binaryRepresentation(bin)); // expected: 13
```
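One possible solution to the easy assignment uses Horner’s rule: each new digit shifts the accumulated value one binary place to the left. This is a sketch, not the course’s reference solution; it uses only indexing and arithmetic, in keeping with the restrictions in Section 3.6.

```javascript
// Possible solution sketch (not the official reference solution).
function binaryRepresentation(b) {
  let value = 0;
  // Read digits until the sentinel -1; doubling the running value and
  // adding the current digit evaluates the binary number (Horner's rule).
  for (let i = 0; b[i] !== -1; i++) {
    value = value * 2 + b[i];
  }
  return value;
}

console.log(binaryRepresentation([1, 0, 0, 1, 1, 0, -1])); // 38
```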

(Intermediate assignment) Write a subroutine named search, which takes two arrays of natural numbers, haystack and needle, as parameters. Both arrays are terminated with a sentinel value of 0. The subroutine should search the haystack array for a sequence of elements that match the needle array and return the index of the first element of the found sequence. If there are multiple such sequences, the subroutine should return the smallest index among them. If no such sequence exists in the haystack array, the subroutine should return the value −1. Examples:

```javascript
let sn = [2, 3, 4, 5, 6, 7, 2, 3, 4, 0];
let ig = [2, 3, 4, 0];
console.log(search(sn, ig)); // expected: 0

sn = [1, 2, 3, 4, 5, 6, 0];
ig = [3, 4, 5, 0];
console.log(search(sn, ig)); // expected: 2

sn = [1, 2, 3, 4, 5, 6, 0];
ig = [5, 6, 7, 0];
console.log(search(sn, ig)); // expected: -1

sn = [2, 3, 4, 5, 6, 0];
ig = [1, 2, 3, 0];
console.log(search(sn, ig)); // expected: -1

sn = [2, 3, 4, 5, 6, 0];
ig = [2, 3, 4, 5, 6, 0];
console.log(search(sn, ig)); // expected: 0
```
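A straightforward approach to the intermediate assignment tries every starting position in the haystack and compares element by element until the needle’s sentinel is reached. This is a sketch, not the course’s reference solution; because the positions are tried in increasing order, the first match found is automatically the smallest index.

```javascript
// Possible solution sketch (not the official reference solution).
// Both arrays contain natural numbers and end with the sentinel 0.
function search(haystack, needle) {
  for (let i = 0; haystack[i] !== 0; i++) {
    let j = 0;
    // Advance while elements match and the needle has not ended. The haystack
    // sentinel 0 never equals a needle element, so we never read past it.
    while (needle[j] !== 0 && haystack[i + j] === needle[j]) {
      j++;
    }
    // Reaching the needle's sentinel means every element matched.
    if (needle[j] === 0) return i;
  }
  return -1;
}
```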

(Hard assignment) Write a subprogram named extractsnake that takes as parameters a two-dimensional array m with its number of rows and columns, as well as an empty array snake. The array m contains only zeros and one continuous sequence of ones in either the vertical or the horizontal direction. The subprogram should record the row and column indices of the start and end of this sequence of ones in the array snake, then count and return the number of ones in the identified sequence. The array snake should be a two-dimensional array, where the first row stores the start indices and the second row stores the end indices of the sequence of ones in m. The beginning of the sequence is considered to be the one that has either the smallest row index or the smallest column index. Assume that there is always at least one occurrence of a one in m. Examples:

```javascript
const m1 = [[0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 0],
            [0, 0, 1, 1, 1, 1],
            [0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 0]];
const k1 = [];
const d1 = extractsnake(m1, 5, 6, k1);
console.log(d1, JSON.stringify(k1)); // expected: 4 [[2,2],[2,5]]

const m2 = [[0, 0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 0, 0],
            [0, 0, 0, 0, 0, 1, 0]];
const k2 = [];
const d2 = extractsnake(m2, 3, 7, k2);
console.log(d2, JSON.stringify(k2)); // expected: 1 [[2,5],[2,5]]

const m3 = [[0],
            [1],
            [1],
            [1],
            [0],
            [0]];
const k3 = [];
const d3 = extractsnake(m3, 6, 1, k3);
console.log(d3, JSON.stringify(k3)); // expected: 3 [[1,0],[3,0]]
```
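A possible approach to the hard assignment is to scan the matrix in row-major order for the first one (which is, by the task’s definition, the start of the sequence) and then follow the run either to the right or downward. This is a sketch, not the course’s reference solution, and it assumes the input meets the specification: exactly one straight run of ones and at least one 1 in m.

```javascript
// Possible solution sketch (not the official reference solution).
// snake receives [[startRow, startCol], [endRow, endCol]].
function extractsnake(m, rows, cols, snake) {
  // The first 1 in row-major order has the smallest row index and,
  // within that row, the smallest column index: it starts the sequence.
  let r0 = -1;
  let c0 = -1;
  for (let r = 0; r < rows && r0 === -1; r++) {
    for (let c = 0; c < cols; c++) {
      if (m[r][c] === 1) {
        r0 = r;
        c0 = c;
        break;
      }
    }
  }
  // Horizontal if the right neighbour is also 1; otherwise vertical
  // (this branch also handles a sequence consisting of a single 1).
  const horizontal = c0 + 1 < cols && m[r0][c0 + 1] === 1;
  let r1 = r0;
  let c1 = c0;
  let count = 1;
  if (horizontal) {
    while (c1 + 1 < cols && m[r0][c1 + 1] === 1) {
      c1++;
      count++;
    }
  } else {
    while (r1 + 1 < rows && m[r1 + 1][c0] === 1) {
      r1++;
      count++;
    }
  }
  snake[0] = [r0, c0];
  snake[1] = [r1, c1];
  return count;
}
```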

3.6. Accepted Answers

To promote a deeper understanding of core algorithmic principles, only fundamental built-in functions are permitted. Students are encouraged to implement solutions using low-level operations, such as direct value or reference assignments, array indexing, and basic arithmetic. The use of high-level built-in methods (e.g., sorting functions or array manipulation utilities) is intentionally restricted. This constraint is particularly feasible in JavaScript, where the standard library is minimal and most advanced functionality is exposed only through built-in methods, such as those of the Math object. Similarly, in the C programming language, only the system libraries pre-included in the assignment are available. Students are therefore expected to develop their own implementations of core algorithms and are encouraged to write custom helper subroutines when appropriate. This pedagogical strategy reinforces algorithmic thinking by requiring students to understand and construct the underlying logic of their solutions rather than relying on abstracted functionality.
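As an illustration of such a helper subroutine, a student who needs sorted data would write something like the insertion sort below instead of calling `Array.prototype.sort`. This is a hypothetical example, not an actual course assignment.

```javascript
// Illustrative helper: in-place insertion sort using only indexing,
// comparison, and assignment (no Array.prototype.sort).
function insertionSort(a) {
  for (let i = 1; i < a.length; i++) {
    const key = a[i];
    let j = i - 1;
    // Shift larger elements one place to the right to open a slot for key.
    while (j >= 0 && a[j] > key) {
      a[j + 1] = a[j];
      j--;
    }
    a[j + 1] = key;
  }
  return a;
}

console.log(JSON.stringify(insertionSort([5, 2, 9, 1, 5]))); // [1,2,5,5,9]
```

Writing the loop by hand also makes students confront details that a library call hides, such as the fact that `sort()` without a comparator orders numbers as strings in JavaScript.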

3.7. Threats to Validity and Limitations

This study employed a quasi-experimental year-to-year comparison design without random assignment. Consequently, cohort differences may have influenced outcomes independently of the introduced practical exam. Factors such as prior experience, student motivation, or variations in secondary-school preparation cannot be ruled out as alternative explanations for the observed differences.

Given that the analysis involves an entire freshman cohort within a mandatory bachelor-level curriculum, implementing randomized or control-group experimentation would have raised ethical and administrative concerns. The intervention was introduced as a legitimate curriculum reform rather than a research experiment, and all students were informed of the examination changes in advance. The intent was to ensure transparency and fairness rather than to manipulate conditions for research purposes.

A potential Hawthorne effect remains possible, as awareness of the new mandatory assessment may have influenced engagement independently of actual skill development. Selection bias may also exist, as only students who reached the exam stage were included in the analysis. Minor variations in exam difficulty or grading thresholds between years could further act as confounding variables.

External validity is limited by the single-institution context and by the profile of participants, who were primarily engineering students for whom programming is not the main field of study. The analysis is therefore exploratory and descriptive rather than causal; correlations reported in Section 4.1 indicate associations but do not establish direct cause-and-effect relationships. Future research should employ controlled or longitudinal designs to account for such confounding factors and to assess the generalization of these findings in broader educational contexts.

4. Results

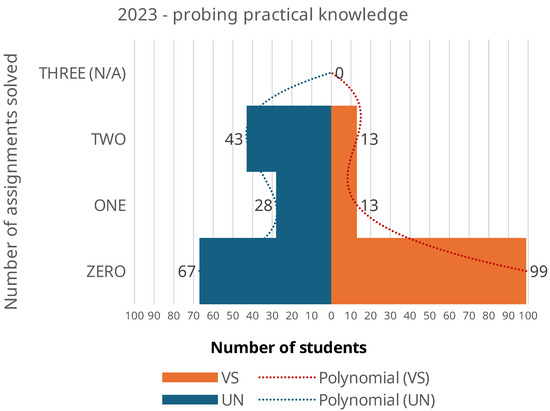

This section provides an in-depth analysis of the effects of introducing a mandatory practical examination during the winter semester of the 2023/2024 academic year. The decision to implement this exam was partly motivated by the outcomes of an earlier, non-mandatory, exam-like assessment that took place in January 2023. The primary objective of that initial assessment was to gauge the practical programming abilities of incoming freshmen, offering them an opportunity to earn bonus points toward their subsequent written examination.

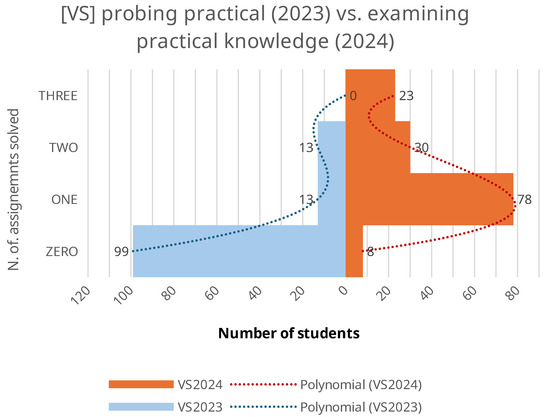

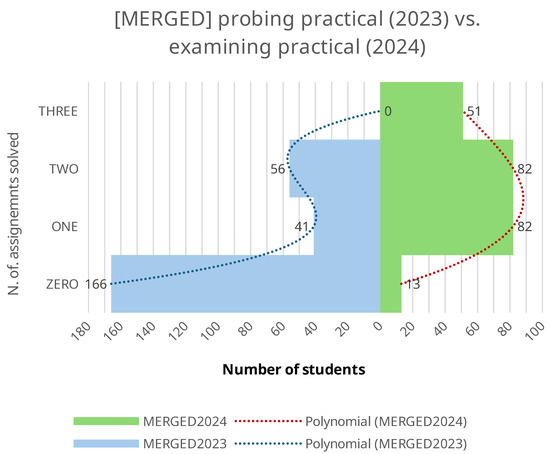

However, the results from January 2023 were both surprising and concerning. As illustrated in Figure 4, 166 out of 263 students (63%) were unable to solve even the simplest of the assigned programming tasks in under two hours in a controlled environment. A closer examination revealed significant discrepancies between the two programs under consideration: the University (academic) study program (UN) and the Short-cycle higher vocational (professional) program (VS). The overall failure rate in the UN program was 49%, whereas it reached 79% in the VS program. In more specific terms, within UN, 28 students (20%) managed to solve one of the two tasks, while 43 (31%) successfully completed both. In contrast, the VS program had only 13 students (10%) complete one task, and an additional 13 (10%) solve both tasks. These results raised concerns about whether a compulsory practical exam would further exacerbate the already high failure rates.

Figure 4.

Results of the 2023 probing of practical programming knowledge. Only about 37% of participating students completed at least one task. Students in the university study program performed better than those in the short-cycle higher vocational (professional) program.

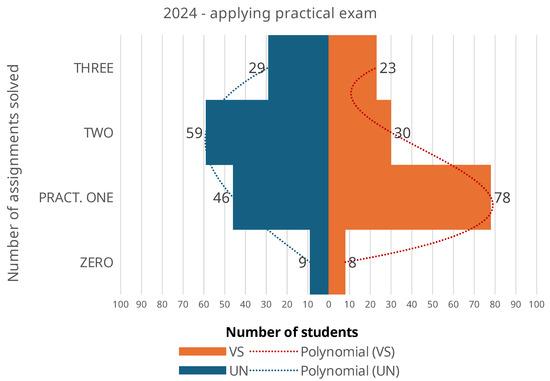

Despite these apprehensions, the obligatory practical examination implemented in January 2024 under strict supervision, which prohibited any form of external assistance, yielded unexpectedly positive results. As depicted in Figure 5, only 13 of the 228 participating students (6%) failed this mandatory assessment. Notably, in the UN program, 46 students (32%) completed only one task, 59 (41%) managed two tasks, and 29 (25%) accomplished all three. The VS program showed a similarly positive pattern, although more students (78, 56%) completed only one task, with 30 (22%) mastering two tasks and 23 (17%) successfully solving all three. The results are also summarized in Table 1.

Figure 5.

Results of the 2024 mandatory practical programming exam. About 94% of participating students completed at least one programming task. With the obligatory practical exam, success shifted towards a larger number of completed tasks.

Table 1.

Results of the mandatory practical exam in 2024: number of students who completed zero, one, two, or all three programming tasks within two hours in a controlled environment using the PIVO platform.

Figures 6, 7, and 8 show the shift in practical knowledge from 2023 to 2024 in the UN study program, the VS study program, and both programs combined, respectively.

Figure 6.

Shift in practical knowledge from year 2023 (left) to 2024 (right) in the UN study program.

Figure 7.

Shift in practical knowledge from year 2023 (left) to 2024 (right) in the VS study program.

Figure 8.

Shift in practical knowledge from year 2023 (left) to 2024 (right) in both study programs.

Overall, the data suggest that the introduction of a mandatory practical exam did not cause the drastic decline in pass rates that had initially been feared. On the contrary, students in both the UN and VS programs appear to have achieved higher levels of proficiency in practical programming tasks than in the previous year.

We are able to compare student success in winter semester 2023/2024 to 2022/2023 on the following basis:

- curriculum format was identical (same basic study resources and references, same time-schedule, same physical location…),

- staff was identical,

- staff-to-student ratio was identical,

- written exam was identical,

- number of students was large in both years, and

- no known socially disrupted cohort was involved (the special circumstances of the COVID-19 pandemic had already faded out).

The two known, intentional, and key differences were the offer of non-obligatory practical assignments through PIVO and the announcement of an obligatory practical exam required to pass the course.

4.1. Correlation Between Engagement and Success

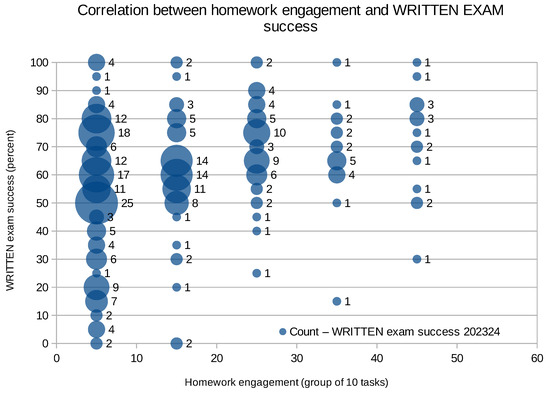

As described in Section 3.7, the analysis is exploratory and does not control for potential cohort differences or confounding factors. However, a noticeable correlation was observed between students who actively engaged in programming practice before the exam and their performance across different exam components. To quantitatively assess this relationship, three datasets were analyzed. The primary dataset consisted of the number of non-mandatory homework assignments that each student successfully completed and submitted to PIVO during the semester. This dataset was correlated with (i) performance in the written exam component, which focused on code analysis and algorithmic reasoning (15 multiple-choice questions, 50 min, paper-based), and (ii) performance in the practical exam component, which required students to develop functional code and implement algorithms under exam conditions.

The analysis revealed a Pearson correlation coefficient (Freedman et al., 2007) of with a statistically significant p-value of 3.17 × 10⁻²³ for the written exam, indicating a moderate positive correlation between semester-long engagement with non-mandatory programming tasks and performance in theoretical algorithmic assessments. The confidence interval ranged from 0.40 to 0.55; because it lies entirely above zero, it confirms a statistically significant positive relationship between engagement and written exam performance. This relationship is depicted in Figure 9. These findings suggest that students who engaged more actively in non-mandatory programming tasks tended to perform better on the written exam (code and algorithm analysis).
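The coefficient reported above is the standard Pearson product-moment correlation. As a minimal illustration of how it and its significance test are computed, the sketch below uses plain Python; the function names and the sample values are hypothetical and are neither the study's data nor its analysis code. Under the null hypothesis of zero correlation, the statistic t = r·√((n − 2)/(1 − r²)) follows a Student t distribution with n − 2 degrees of freedom, from which a statistics package would derive the p-value.

```python
import math
from statistics import fmean


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson product-moment correlation coefficient of two paired samples."""
    if len(xs) != len(ys) or len(xs) < 3:
        raise ValueError("need two paired samples of equal length with n >= 3")
    mx, my = fmean(xs), fmean(ys)
    # Co-deviation of the pairs and the spread of each sample around its mean
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """Statistic for H0: rho = 0, t-distributed with n - 2 degrees of freedom.

    Undefined for |r| = 1 (perfect correlation).
    """
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical example: submitted PIVO homework tasks vs. written-exam score (%)
homework = [0, 2, 5, 8, 12, 20]
written = [35, 40, 55, 50, 70, 80]
r = pearson_r(homework, written)
t = t_statistic(r, len(homework))
print(f"r = {r:.3f}, t = {t:.2f} with {len(homework) - 2} degrees of freedom")
```

With real cohorts of several hundred students, even a moderate r yields a very large t, which is consistent with the very small p-values reported in the text.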

Figure 9.

Correlation between non-mandatory homework engagement and success in the written exam during the academic year 2023/2024. As the number of submitted non-obligatory homework tasks increases, the probability that a student fails the theoretical (written) exam (score below 50%) decreases.

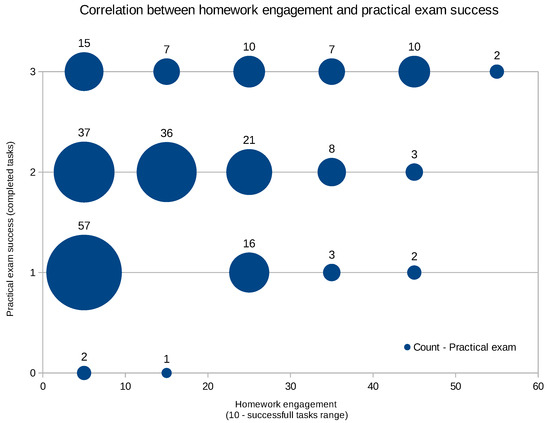

A slightly stronger correlation was observed for the practical exam, where the Pearson coefficient was , with a statistically significant p-value of 4.88 × 10⁻²¹. This suggests that students who engaged more frequently with the non-mandatory homework assignments tended to perform better in hands-on coding and algorithm development tasks. The confidence interval ranged from 0.41 to 0.58, reinforcing a positive association between non-mandatory programming practice and students' ability to complete hands-on coding assessments. The corresponding correlation graph is presented in Figure 10.
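The confidence intervals for both coefficients (0.40 to 0.55 and 0.41 to 0.58) are reported without the method or confidence level being stated. A common way to obtain such an interval, shown here only as an assumed illustration, is Fisher's z-transformation: z = artanh(r) is approximately normal with standard error 1/√(n − 3), so the interval is built on the z scale and mapped back with tanh. The function name and the inputs below are hypothetical.

```python
import math
from statistics import NormalDist


def fisher_ci(r: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Approximate confidence interval for Pearson's r via Fisher's z-transformation."""
    if not -1 < r < 1 or n <= 3:
        raise ValueError("need |r| < 1 and n > 3")
    z = math.atanh(r)                              # roughly normal on this scale
    se = 1 / math.sqrt(n - 3)                      # standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for level = 0.95
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Hypothetical input: r = 0.5 observed on 103 students
low, high = fisher_ci(0.5, 103)
print(f"95% CI: {low:.3f} to {high:.3f}")
```

Because tanh compresses values near ±1, the resulting interval is not symmetric around r: it extends further toward zero than away from it, so its midpoint need not equal the point estimate.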

Figure 10.

Correlation between non-mandatory homework engagement and success in the practical exam during the academic year 2023/2024. As the number of submitted non-obligatory homework tasks increases, the probability that a student fails the practical (coding) exam (zero completed tasks) decreases.

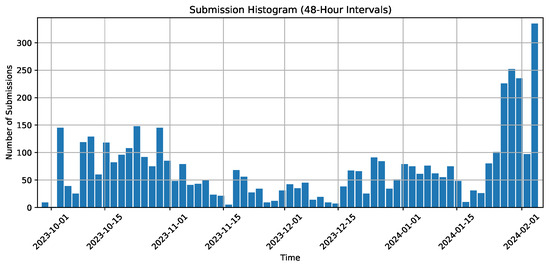
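The captions of Figures 9 and 10 describe how the probability of failing changes with the number of submitted tasks, using the failure criteria stated there (below 50% on the written exam; zero completed tasks on the practical exam). One simple way to tabulate such a trend is to group students into bins of submitted tasks and compute the share of failures in each bin. The sketch below assumes a hypothetical (tasks_submitted, failed) record per student and a bin width of five tasks; neither reflects the study's actual data format or binning.

```python
from collections import defaultdict
from collections.abc import Iterable


def failure_rate_by_bin(records: Iterable[tuple[int, bool]],
                        bin_width: int = 5) -> dict[int, float]:
    """Share of failing students per bin of submitted non-mandatory tasks.

    Each record is (tasks_submitted, failed). Keys of the result are the lower
    edges of the bins [0, w), [w, 2w), ... for bin width w.
    """
    totals: dict[int, int] = defaultdict(int)
    fails: dict[int, int] = defaultdict(int)
    for tasks, failed in records:
        lower = tasks // bin_width * bin_width   # lower edge of this record's bin
        totals[lower] += 1
        fails[lower] += int(failed)
    return {b: fails[b] / totals[b] for b in sorted(totals)}


def failed_written(score_percent: float) -> bool:
    return score_percent < 50          # written-exam failure criterion (Figure 9)


def failed_practical(tasks_completed: int) -> bool:
    return tasks_completed == 0        # practical-exam failure criterion (Figure 10)


# Hypothetical students: (submitted homework tasks, written-exam score in %)
students = [(0, 30), (2, 45), (3, 60), (7, 55), (9, 48), (14, 72)]
print(failure_rate_by_bin((t, failed_written(s)) for t, s in students))
```

Empty bins simply do not appear in the result, which avoids reporting a meaningless 0/0 rate for engagement levels that no student reached.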

It is essential to clarify that these correlations were derived using non-mandatory homework engagement as the primary dataset. These assignments were not part of any formal enrollment requirement; they were provided as optional challenges through the PIVO system, offering students an opportunity to reinforce their programming skills. Notably, students were neither encouraged nor discouraged from submitting these assignments. As a result, our dataset does not capture individual practice that took place without submission: some students may have solved problems independently and tested their code locally without submitting their solutions to PIVO. If data on such activities were available, the observed correlations might have been even stronger. Figure 11 shows engagement with the tasks over time during the winter semester. Students engaged actively at the start of the semester, reduced their engagement during midterms in other courses (15 November and 15 December), and increased their activity again just before the exam dates of the programming course (end of January).

Figure 11.

Distribution of student engagement over time during the winter semester. The figure shows the frequency of non-mandatory homework submissions to the PIVO system, providing insight into engagement trends. Since students were neither encouraged nor discouraged from submitting, this distribution reflects self-motivated participation. Note that the data do not account for independent problem solving without submission. Cramming before the exam is visible, but its volume is comparable to the engagement at the beginning of the semester.
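A distribution like the one in Figure 11 can be produced by counting submissions per calendar week. Because the winter semester runs across the new year, the sketch below keys each week by its ISO (year, week) pair, which sorts correctly from autumn into January. The input, a list of hypothetical submission dates, is an assumption; the study does not describe how the PIVO logs were aggregated.

```python
from collections import Counter
from datetime import date


def submissions_per_week(days: list[date]) -> list[tuple[tuple[int, int], int]]:
    """Number of submissions per ISO calendar week, in chronological order."""
    # isocalendar()[:2] is (ISO year, ISO week), so weeks in January sort after
    # the preceding autumn weeks even though their week numbers are smaller.
    counts = Counter(tuple(d.isocalendar()[:2]) for d in days)
    return sorted(counts.items())


# Hypothetical submission dates near the semester start and the exam period
log = [date(2023, 10, 2), date(2023, 10, 4), date(2023, 10, 9),
       date(2024, 1, 22), date(2024, 1, 23), date(2024, 1, 24)]
for (year, week), n in submissions_per_week(log):
    print(f"{year}-W{week:02d}: {n}")
```

Keying by ISO week rather than by month keeps bins of equal length, so dips such as the mid-November and mid-December midterm periods are not blurred by uneven month boundaries.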

In conclusion, the availability of more than 50 non-mandatory, moderate-to-difficult programming tasks provided students with substantial opportunities to engage in practical problem-solving. These associations suggest that voluntary programming challenges may support learning outcomes.

5. Discussion

This study examined the impact of introducing a mandatory practical exam on student learning outcomes in an introductory programming course, as well as the relationship between engagement in non-mandatory programming assignments and student performance. The findings indicate that hands-on coding assessments are associated with higher measured skill levels, reinforcing the importance of practice-based learning in computer science education.

Contrary to initial concerns that the mandatory practical exam might increase the failure rate, the results show improved student outcomes. Students not only adapted to the new requirement but also demonstrated increased proficiency in programming tasks, as reflected in the higher number of successfully completed assignments. Moreover, a moderate correlation was observed between engagement in non-mandatory assignments and success in both written and practical exams, suggesting that self-directed practice may play a meaningful role in skill development.

Although the overall trend is positive, several alternative explanations for the improvement should be considered. The practical exam did not replace the written assessment but was added on top of it: students were still required to achieve at least 50% on the written exam and to complete at least one practical task successfully in order to pass the course. Therefore, the lower failure rate cannot be attributed to a relaxed grading policy or an easier exam format. Instead, awareness of the forthcoming practical exam may have motivated students to engage more actively in preparatory exercises throughout the semester. This anticipatory effect, combined with transparent communication about exam requirements, may have contributed to higher engagement and reduced exam anxiety. Cohort-level differences, such as variation in prior programming experience or changes in secondary-school curricula, may also have influenced performance, as discussed in Section 3.7. While these factors could have interacted with the new assessment format, the consistency of improvement across multiple indicators suggests that structured, feedback-driven practice coincided with better outcomes, though causal inferences cannot be made.
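The pass rule described above is a conjunction of two conditions, which is why it cannot be looser than the written-exam condition alone. The sketch below encodes it in Python; the ExamResult record and the function names are hypothetical, and the written score is assumed to be expressed as a percentage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExamResult:
    written_percent: float        # written-exam score, 0-100 (assumed scale)
    practical_tasks_solved: int   # successfully completed practical-exam tasks


def passes_written_only(r: ExamResult) -> bool:
    """Written-exam condition on its own: at least 50 %."""
    return r.written_percent >= 50


def passes_course(r: ExamResult) -> bool:
    """Rule stated in the text: at least 50 % written AND at least one practical task."""
    return passes_written_only(r) and r.practical_tasks_solved >= 1


results = [ExamResult(50, 1), ExamResult(49.5, 3), ExamResult(90, 0)]
print([passes_course(r) for r in results])
```

Every result accepted by passes_course is also accepted by passes_written_only, so adding the practical component can only keep the pass criterion the same or make it stricter.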

These results highlight the importance of regular coding practice, timely feedback, and structured evaluation in cultivating algorithmic thinking and problem-solving abilities. The integration of automated programming assessment, real-world tasks, and a compulsory practical exam created an environment that fostered both accountability and active learning. This multifaceted approach appears to support the internalization of core programming concepts—particularly among students who are not primarily focused on computer science.

5.1. Student Feedback (Qualitative Observations)

Although this study focused primarily on quantitative indicators of engagement and success, open comments collected through the PIVO feedback form provide additional qualitative insight. Several students (original comments in Slovenian, translated by the authors) emphasized that the assignment format encouraged creative problem solving and a deeper understanding compared with traditional multiple-choice questions. Typical remarks included: “This format of homework feels ideal, especially because it stimulates creativity and requires real problem solving instead of guessing. It helps us find our own approach to the solution.” Others appreciated the challenge and structure of the tasks while suggesting minor improvements such as allowing more submission attempts or clarifying edge cases: “The assignments are well designed and promote algorithmic thinking, though three attempts instead of two would be helpful.” These testimonials qualitatively support the quantitative finding that practice-oriented, feedback-driven assignments were well received by students and perceived as beneficial for learning.

5.2. Implications for Practice/Transferability

Although this study was carried out within an engineering faculty where programming is not a primary field of study, the observed improvements suggest that introducing a mandatory practical assessment may also strengthen programming competence in comparable introductory courses. For broader adoption, institutions should ensure that automated feedback systems are stable and well integrated, that evaluation criteria remain consistent with course objectives, and that students have structured opportunities for preparation before assessment. Further work could explore how such an approach transfers to computer science major cohorts or to other disciplines that include programming as a supporting skill.

5.3. Cost–Benefit and Scalability Considerations

The implementation of PIVO required minimal financial and technical resources. The platform operates on standard university hardware and can be hosted either on an existing departmental server or on a low-cost workstation. After initial configuration, ongoing maintenance involves only periodic task updates and automated log review, requiring less than one staff hour per week. Compared with manual assessment, automated grading substantially reduces instructor workload while ensuring consistent and transparent evaluation across large student cohorts. The system has been used successfully with more than 500 students per year without performance issues, indicating strong scalability for similar institutional settings.
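To make the workload argument concrete, the sketch below shows the basic idea behind output-comparison grading in an APAS: a submission is run on predefined inputs and its output is compared with the expected output. This is a hypothetical, deliberately minimal illustration, not PIVO's implementation. In particular, it runs the submitted Python code without any sandboxing, whereas untrusted student code must be isolated (safe execution of unverified code in PIVO is the subject of Rojec, 2021).

```python
import subprocess
import sys


def grade(source: str, tests: list[tuple[str, str]], timeout: float = 2.0) -> float:
    """Fraction of (stdin, expected stdout) cases that a Python submission passes.

    WARNING: executes the submission directly; real systems must sandbox it.
    """
    if not tests:
        raise ValueError("at least one test case is required")
    passed = 0
    for stdin_text, expected in tests:
        try:
            run = subprocess.run([sys.executable, "-c", source], input=stdin_text,
                                 capture_output=True, text=True, timeout=timeout)
        except subprocess.TimeoutExpired:
            continue                      # a time-out counts as a failed case
        # Accept only a clean exit with matching output (outer whitespace ignored)
        if run.returncode == 0 and run.stdout.strip() == expected.strip():
            passed += 1
    return passed / len(tests)


# Hypothetical task: read two integers and print their sum
cases = [("1 2\n", "3"), ("10 -4\n", "6")]
correct = "a, b = map(int, input().split())\nprint(a + b)"
wrong = "a, b = map(int, input().split())\nprint(a - b)"
print(grade(correct, cases), grade(wrong, cases))
```

Once the test cases for a task are written, grading another submission costs only machine time, which is what allows a single system to serve hundreds of students per year.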

5.4. Future Work

While this study demonstrates the benefits of integrating a practical programming exam, further research is needed to explore additional pedagogical strategies. One promising approach is Test-Driven Learning (TDL), where students write test cases before implementing code solutions. This method has been shown to improve both conceptual understanding and coding accuracy (Erdogmus et al., 2005).

Additionally, as AI-generated code (e.g., ChatGPT-based solutions) becomes more prevalent in education, future research should investigate how assessment systems can be adapted to ensure that students develop independent problem-solving skills rather than relying solely on automated code generation (Savelka et al., 2023). A potential solution is enforcing a test-first approach, where students must write test cases before submitting their implementation, thereby requiring them to demonstrate an understanding of the problem before coding.
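The test-first requirement suggested above could be enforced in several ways. One hypothetical design, sketched below and resembling mutation testing, accepts a student's test cases only if (1) they all agree with the instructor's reference solution and (2) each of several deliberately buggy variants fails at least one of them; only then would the student's own implementation be accepted for grading. The function names and the absolute-value task are illustrative assumptions and are not part of PIVO.

```python
from typing import Callable

Case = tuple[int, int]          # (argument, expected result) for a one-argument task
Solution = Callable[[int], int]


def accept_tests(cases: list[Case], reference: Solution,
                 buggy_variants: list[Solution]) -> bool:
    """Accept student-written test cases only if they are correct and discriminating."""
    if not cases:
        return False
    # (1) Every case must agree with the instructor's reference solution.
    if any(reference(arg) != expected for arg, expected in cases):
        return False
    # (2) Every known-buggy variant must fail at least one of the cases.
    return all(any(bug(arg) != expected for arg, expected in cases)
               for bug in buggy_variants)


# Hypothetical task: implement absolute value
reference = abs
bugs = [lambda x: x, lambda x: -x]        # "forgot negatives", "always negates"

print(accept_tests([(3, 3)], reference, bugs))            # identity bug slips through
print(accept_tests([(3, 3), (-2, 2)], reference, bugs))   # both bugs are caught
print(accept_tests([(-2, -2)], reference, bugs))          # wrong expectation
```

Condition (2) is what ties the tests to problem understanding: a student who only checks positive inputs has not yet identified the edge case that the task is about.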

Overall, this study reinforces the principle that practice, practice, and more practice is key to developing algorithmic thinking and programming proficiency. By continuously refining assessment methods and leveraging automated feedback systems, educators can further enhance student learning experiences and better prepare them for real-world software development challenges.

Author Contributions

Conceptualization, Ž.R.; methodology, Ž.R. and I.F.; software, Ž.R. and J.P.; validation, I.F.; formal analysis, J.P.; data curation, J.P.; writing—original draft, Ž.R.; writing—review and editing, J.P. and I.F.; visualization, I.F.; supervision, I.F.; project administration, I.F.; funding acquisition, I.F. All authors have read and agreed to the published version of the manuscript.

Funding

This work is sponsored in part by the Slovenian Research and Innovation Agency within the research program ICT4QoL-Information and Communications Technologies for Quality of Life (research core funding no. P2-0246).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

This study is based solely on the analysis of anonymized exam results and assignment scores recorded in the course grading system during the academic years 2022/23 and 2023/24. No additional testing or interventions were introduced for research purposes. The introduction of the mandatory practical exam was a regular curricular update, implemented independently of this study. The research evaluates its educational impact retrospectively. No individuals or groups were directly involved in experimental procedures. The study did not involve any form of discrimination, and all data handling complied with institutional privacy and academic integrity guidelines.

Data Availability Statement

The dataset supporting the conclusions of this article is available in the FigShare repository, https://doi.org/10.6084/m9.figshare.30041788.v1.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| APAS | Automated Programming Assessment System |
| PIVO | Programmers’ Interactive Virtual Onboarding |

References

- Belmar, H. (2022). Review on the teaching of programming and computational thinking in the world. Frontiers in Computer Science, 4, 997222.

- Brkić, L., Mekterović, I., Fertalj, M., & Mekterović, D. (2024). Peer assessment methodology of open-ended assignments: Insights from a two-year case study within a university course using novel open source System. Computers & Education, 213, 105001.

- Calles-Esteban, F., Hellín, C. J., Tayebi, A., Liu, H., López-Benítez, M., & Gómez, J. (2024). Influence of gamification on the commitment of the students of a programming course: A case study. Applied Sciences, 14(8), 3475.

- De Souza, D. M., Isotani, S., & Barbosa, E. F. (2015). Teaching novice programmers using ProgTest. International Journal of Knowledge and Learning, 10, 60–77.

- Erdogmus, H., Morisio, M., & Torchiano, M. (2005). On the effectiveness of the test-first approach to programming. IEEE Transactions on Software Engineering, 31(3), 226–237.

- Freedman, D., Pisani, R., & Purves, R. (2007). Statistics (international student edition). W. W. Norton & Company.

- Gao, J., Pang, B., & Lumetta, S. S. (2016, July 9–13). Automated feedback framework for introductory programming courses. 2016 ACM Conference on Innovation and Technology in Computer Science Education (pp. 53–58), Arequipa, Peru.

- Gomes, A., & Mendes, A. J. (2007, September 3–7). Learning to program–difficulties and solutions. International Conference on Engineering Education (ICEE) (Vol. 7, pp. 1–5), Coimbra, Portugal.

- Grover, S., & Pea, R. (2018). Computational thinking: A competency whose time has come. Computer Science Education: Perspectives on Teaching and Learning in School, 19(1), 19–38.

- Hellín, C. J., Calles-Esteban, F., Valledor, A., Gómez, J., Otón-Tortosa, S., & Tayebi, A. (2023). Enhancing student motivation and engagement through a gamified learning environment. Sustainability, 15(19), 14119.

- Iosup, A., & Epema, D. (2014, March 5–8). An experience report on using gamification in technical higher education. 45th ACM Technical Symposium on Computer Science Education (pp. 27–32), Atlanta, GA, USA.

- Lokar, M., & Pretnar, M. (2015, November 19–22). A low overhead automated service for teaching programming. 15th Koli Calling Conference on Computing Education Research (pp. 132–136), Koli, Finland.

- Luxton-Reilly, A., Simon, Albluwi, I., Becker, B. A., Giannakos, M., Kumar, A. N., Ott, L., Paterson, J., Scott, M. J., Sheard, J., & Szabo, C. (2018, July 2–4). Introductory programming: A systematic literature review. 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education (pp. 55–106), Larnaca, Cyprus.

- Mekterović, I., Brkić, L., & Horvat, M. (2023). Scaling automated programming assessment systems. Electronics, 12(4), 942.

- Mekterović, I., Brkić, L., Milašinović, B., & Baranović, M. (2020). Building a comprehensive automated programming assessment system. IEEE Access, 8, 81154–81172.

- Meža, M., Košir, J., Strle, G., & Košir, A. (2017). Towards automatic real-time estimation of observed learner’s attention using psychophysiological and affective signals: The touch-typing study case. IEEE Access, 5, 27043–27060.

- Paiva, J. C., Figueira, Á., & Leal, J. P. (2023). Bibliometric analysis of automated assessment in programming education: A deeper insight into feedback. Electronics, 12(10), 2254.

- Rajesh, S., Rao, V. V., & Thushara, M. (2024, April 5–7). Comprehensive investigation of code assessment tools in programming courses. 2024 IEEE 9th International Conference for Convergence in Technology (I2CT), Pune, India. Available online: https://www.semanticscholar.org/paper/Comprehensive-Investigation-of-Code-Assessment-in-Rajesh-Rao/cffa6f4234607988c9418a89412ac2ca6cc08db1 (accessed on 20 September 2025).

- Restrepo-Calle, F., Echeverry, J. J. R., & González, F. A. (2019). Continuous assessment in a computer programming course supported by a software tool. Computer Applications in Engineering Education, 27(1), 80–89.

- Rojec, Ž. (2021). Varen zagon nepreverjene programske kode v sistemu PIVO [Safe execution of unverified program code in the PIVO system]. Elektrotehniški Vestnik, 88(1/2), 54–60.

- Sabarinath, R., & Quek, C. L. G. (2020). A case study investigating programming students’ peer review of codes and their perceptions of the online learning environment. Education and Information Technologies, 25(5), 3553–3575.

- Savelka, J., Agarwal, A., Bogart, C., Song, Y., & Sakr, M. (2023, June 7–12). Can generative pre-trained transformers (GPT) pass assessments in higher education programming courses? 2023 Conference on Innovation and Technology in Computer Science Education V (pp. 117–123), Turku, Finland.

- Sobral, S. R. (2021). Teaching and learning to program: Umbrella review of introductory programming in higher education. Mathematics, 9(15), 1737.

- Wang, H.-Y., Huang, I., & Hwang, G.-J. (2016). Comparison of the effects of project-based computer programming activities between mathematics-gifted students and average students. Journal of Computers in Education, 3(1), 33–45.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).