Abstract

Traditional systematic reviews provide high-quality evidence but are labor-intensive and error-prone, especially during the abstract screening phase. This paper investigates machine learning-assisted systematic reviewing in the context of Learning Analytics (LA) in higher education. We evaluate two approaches to automating abstract screening: ASReview, an active learning tool built on traditional machine learning classifiers, and GPT-4o, a large language model. By comparing sensitivity, specificity, accuracy, precision, and F1-score, we assess the effectiveness of these tools against traditional manual screening. Our findings demonstrate the potential of machine learning to enhance the efficiency and accuracy of systematic reviews in learning analytics.

1. Introduction

Systematic reviews and meta-analyses provide high-quality evidence, but they are often time-consuming and costly, largely because titles, abstracts, and full texts must be screened manually (Chai et al., 2021). The growing volume of academic publications forces reviewers to screen ever larger numbers of papers. This process is prone to human error, and traditional methods can easily overlook relevant studies. Machine learning has shown promise in aiding systematic reviews, but its application has not been widely tested across domains. In this study, we use Learning Analytics (LA) in higher education as a case context to examine how machine learning can improve the review process.

This paper addresses a critical gap by providing a domain-specific evaluation of machine learning (ML) and large language model (LLM) tools for systematic reviews in LA, with a focus on higher education. Prior work has examined the general potential of ML or LLMs for screening. The novelty of this study lies in its direct performance comparison between ASReview and GPT-4o within the domain of LA. Applying standardized evaluation metrics (precision, recall, accuracy, and F1-score), we establish concrete benchmarks for these two frontier approaches relative to traditional manual screening. This case study thus contributes both methodological insights and practical guidance for educational researchers seeking to integrate advanced tools into their review workflows. Specifically, we address the following research questions: (1) How does machine learning-assisted abstract screening compare with manual systematic review in terms of efficiency? (2) How do GPT-4o and ASReview perform relative to one another and to manual screening in terms of precision, recall, accuracy, and F1-score? (3) What insights do standardized evaluation metrics provide about the effectiveness of manual, ASReview-assisted, and GPT-4o-assisted screening approaches?

The application of machine learning (ML) to systematic reviews began gaining attention in 2018, when Gates et al. (2018) and Xiong et al. (2018) explored the approach. Researchers have identified both potential benefits and limitations, such as the risk of missing relevant records and variable reliability. For instance, Gates et al. (2018) used ML tools such as Abstrackr to semi-automate citation screening and predict relevant records in systematic reviews. Across four screening projects at the Alberta Research Centre for Health Evidence, Abstrackr's reliability varied: sensitivity was above 0.75, and specificity ranged from 0.19 to 0.90. Abstrackr provided substantial workload savings (median 67.20%). However, the risk of missing relevant records meant that its impact on systematic review outcomes, and its role as a secondary reviewer, needed further evaluation. In a subsequent study, Gates et al. (2019) evaluated three ML tools (Abstrackr, DistillerSR, and RobotAnalyst) for title and abstract screening. The results echoed their earlier findings: substantial workload reductions alongside a higher risk of missing relevant records. Performance varied across systematic reviews, but most studies reported benefits such as reduced workload and improved accuracy.

Xiong et al. (2018) demonstrated that ML-assisted screening identified the same 29 studies for meta-analysis as manual screening. It also reduced the number of articles requiring manual review from 4177 to 556. This approach made study selection for a medical meta-analysis both more efficient and more objective.

Bannach-Brown et al. (2019) evaluated two independently developed ML approaches for screening in a systematic review of preclinical animal studies. After learning from a training set of 5749 records, these methods achieved a sensitivity of 98.70%, confirming the effectiveness and applicability of ML algorithms in preclinical animal research. Chai et al. (2021) introduced Research Screener, a semi-automated ML tool that reduced workload by 60–96% and saved 12.53 days in a real-world analysis. Their findings suggest that Research Screener effectively reduces the burden on researchers while maintaining the scientific rigor of systematic reviews.

Zimmerman et al. (2021) explored using ML and text analytics to streamline the review process by pre-screening titles and abstracts, guided by previously conducted systematic reviews and human confirmation. This approach achieved 99.50% sensitivity, identifying 213 out of 214 relevant articles while requiring human review of only 31% of the total articles, demonstrating its potential to enhance efficiency while maintaining rigor.

Pijls (2023) tested ASReview, an ML-assisted systematic reviewing tool, in orthopedics. It retrieved relevant papers efficiently, identifying most of them after screening just 10% of the records. All relevant papers were found after screening 30–40% of the records, potentially saving 60–70% of the workload. Muthu (2023) reported that an ML platform used as an assistive tool for article screening in orthopedic surgery significantly outperformed traditional methods. Quan et al. (2024) presented ASReview as an AI tool that streamlines screening. They provided a step-by-step tutorial on data preparation, import, labeling, and saving results, and they addressed essential considerations and potential limitations to promote efficient and transparent meta-research. In recent years, ChatGPT has also been used to assist systematic reviews (Alshami et al., 2023; Issaiy et al., 2024). Compared with machine learning-assisted review, GPT-based models were slightly less accurate in title and abstract screening (Scherbakov et al., 2024). Most studies used machine learning-assisted tools or platforms, such as ASReview, that embed ML algorithms. Some applied ML techniques directly, such as clustering (Xiong et al., 2018) or classification (Bannach-Brown et al., 2019; Zimmerman et al., 2021). Table 1 presents an overview of the machine learning-assisted tools used. In summary, ML-assisted systematic reviews can substantially reduce workload and have proven accurate across various fields and review topics.

Table 1.

Machine Learning-Assisted Tools.

2. Materials and Methods

2.1. Data

A comprehensive search strategy was developed using three concepts: learning analytics, academic achievement, and college students. For each concept, synonyms, related terms, and database subject headings were identified and combined with the Boolean operator OR to create search clusters. The search was first developed in the ERIC (EBSCO) database and then adapted for Education Source (EBSCO), APA PsycInfo (EBSCO), Web of Science Core Collection, IEEE Xplore, and Applied Science and Technology Source Ultimate (EBSCO). The searches were limited to publications from 2011 to 2023 and were conducted between February and May 2023.

Studies were included if they examined the impact of learning analytics on academic performance in higher education. They also had to be published in peer-reviewed journals, conference proceedings, or dissertations between January 2011 and January 2023, and be available in English. We excluded works that did not address learning analytics, lacked a focus on academic achievement, were situated outside formal higher education, or focused solely on model development without application to learning outcomes. Studies from open universities or MOOCs that indicated informal education settings were also excluded. After removing 1603 duplicates, 7016 unique references were screened. We manually identified 5930 irrelevant references, leaving 1086 articles eligible for full-text screening. During full-text screening, we manually excluded 913 articles for the following reasons:

- not LA in higher education (n = 466)
- not STEM (n = 152)
- no LMS/digital data (n = 110)
- full text unavailable (n = 67)
- wrong study design (n = 40)
- incorrect learning outcomes (n = 47)
- not in English (n = 14)
- duplicates (n = 10)
- wrong publication type (n = 7)

This left 173 articles for coding. We excluded 14 articles during the coding phase. The remaining 159 articles yielded 161 independent studies for the final review and data analysis.
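The screening flow above can be cross-checked with simple arithmetic. The short Python sketch below uses only the counts reported in this section, and the variable names are illustrative. The 1086 records that passed abstract screening correspond to the records labeled relevant in the manual ground truth used later.

```python
# Screening-flow counts as reported in Section 2.1 (variable names are illustrative).
unique_records = 7016
excluded_at_abstract = 5930
full_text_exclusions = {
    "not LA in higher education": 466,
    "not STEM": 152,
    "no LMS/digital data": 110,
    "full text unavailable": 67,
    "wrong study design": 40,
    "incorrect learning outcomes": 47,
    "not in English": 14,
    "duplicates": 10,
    "wrong publication type": 7,
}
excluded_at_coding = 14

eligible_full_text = unique_records - excluded_at_abstract   # passed abstract screening
excluded_full_text = sum(full_text_exclusions.values())       # sum of exclusion reasons
coded = eligible_full_text - excluded_full_text               # articles entering coding
final_articles = coded - excluded_at_coding                   # articles in the final review

print(eligible_full_text, excluded_full_text, coded, final_articles)
```

The exclusion reasons sum exactly to the 913 full-text exclusions, so the reported flow is internally consistent.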

In machine learning and text classification, ground truth labels are the correct categories assigned to each sample (e.g., “relevant” vs. “irrelevant” in abstract screening). They are usually established by human annotators or domain experts. This study used a previously completed systematic review as a case study, so our research team's manual title and abstract screening decisions were treated as the ground truth labels. The predictions from ASReview and ChatGPT were compared with these manual decisions, which served as the basis for evaluating model performance.

2.2. Models

Among the machine learning-assisted review tools currently available, ASReview and ChatGPT stand out for complementary strengths. ASReview's active learning capability has delivered excellent performance, including high recall. This makes it suitable for large datasets containing many irrelevant studies, and it substantially reduces workload (Quan et al., 2024). Its reliability has been shown across disciplines (Adamse et al., 2024; Quan et al., 2024; Spedener, 2023). ChatGPT has shown high sensitivity and accuracy (Guo et al., 2024). Its sensitivity to linguistic nuance and its adaptability support detailed evaluation of inclusion and exclusion criteria. ASReview follows an iterative human-in-the-loop design that incorporates reviewer judgments and offers efficiency. ChatGPT, by contrast, automates screening and offers adaptability. These complementary characteristics led us to include both, providing a robust, hybrid approach to systematic reviews.

Machine learning and generative AI are transforming systematic reviews by automating the traditionally manual abstract screening process. This study evaluated ASReview and ChatGPT within the context of higher education, focusing on LA. We compared key metrics such as specificity, precision, and accuracy scores to assess the consistency and effectiveness of these tools in enhancing the systematic review process.

2.2.1. Traditional Machine Learning

This study employed ASReview (Active Learning for Systematic Reviews, version 1.6.2; Van De Schoot et al., 2021), an open-source ML tool for screening records in systematic reviews, applied here to education research. ASReview is built on active learning algorithms. Empirical tests have shown it to be less error-prone and to deliver high-quality results, especially with imbalanced or large literature datasets (Kempeneer et al., 2023; Van De Schoot et al., 2021). ASReview has been applied in various fields, such as orthopedics (Pijls, 2023) and second language acquisition (Quan et al., 2024).

ASReview's default configuration combines three components: a Naive Bayes classifier, a maximum (certainty-based) query strategy, and term frequency-inverse document frequency (TF-IDF) feature extraction. Researchers interact with an active ML model to classify papers as relevant or irrelevant. The process starts with the researcher identifying at least one relevant and one irrelevant paper as prior knowledge. The software then ranks the remaining papers by predicted relevance and presents the most likely relevant papers first. After each decision by the researcher, the model recalculates the predicted relevance of the remaining papers and updates their order. Relevant papers are therefore likely to be identified early in the screening process, which can reduce the need to review irrelevant ones.
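The screening loop described above can be illustrated with a small, self-contained Python sketch. It is not ASReview's implementation, and it simplifies in three ways:

- It uses raw word counts instead of TF-IDF features.
- It uses a hand-written multinomial Naive Bayes model with Laplace smoothing.
- A simulated reviewer (`oracle`) returns known labels, much as a simulation study does.

The function names `train_nb` and `active_learning_screen` are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train_nb(docs, labels):
    """Fit a multinomial Naive Bayes model (Laplace smoothing) on labeled texts."""
    counts = {0: Counter(), 1: Counter()}
    n_docs = Counter(labels)
    for doc, y in zip(docs, labels):
        counts[y].update(tokenize(doc))
    vocab = set(counts[0]) | set(counts[1])
    totals = {y: sum(counts[y].values()) for y in (0, 1)}

    def log_odds(doc):
        # Log posterior odds of relevance; words unseen in training are ignored.
        score = math.log(n_docs[1] / n_docs[0])
        for tok in tokenize(doc):
            if tok not in vocab:
                continue
            p1 = (counts[1][tok] + 1) / (totals[1] + len(vocab))
            p0 = (counts[0][tok] + 1) / (totals[0] + len(vocab))
            score += math.log(p1 / p0)
        return score

    return log_odds


def active_learning_screen(records, oracle, prior_relevant, prior_irrelevant):
    """Return the order in which records are screened.

    records: list of title/abstract strings
    oracle: callable index -> 0/1 standing in for the human reviewer (hypothetical)
    prior_*: indices of the initially labeled records (at least one of each)
    """
    labeled = {i: 1 for i in prior_relevant}
    labeled.update({i: 0 for i in prior_irrelevant})
    order = list(labeled)
    while len(labeled) < len(records):
        idxs = list(labeled)
        # Retrain on every decision made so far.
        model = train_nb([records[i] for i in idxs], [labeled[i] for i in idxs])
        pool = [i for i in range(len(records)) if i not in labeled]
        # Maximum query strategy: present the record most likely to be relevant.
        best = max(pool, key=lambda i: model(records[i]))
        labeled[best] = oracle(best)
        order.append(best)
    return order
```

Consider six toy abstracts, with the first relevant and the first irrelevant record as prior knowledge. The loop screens records 0 and 2 (the priors), then the two remaining learning-analytics abstracts (4, then 1), and only then the off-topic ones. In a real review, the reviewer would stop at some point rather than label every record.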

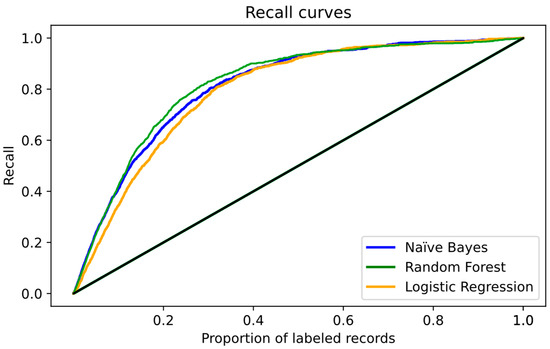

The classifiers tested in this study were Naive Bayes, Random Forest, and Logistic Regression. We compared them using two performance metrics: recall and work saved over sampling.

Recall is the proportion of relevant records identified at a specific point during screening. When it is reported after screening a given percentage of the total records, it is also called Relevant Records Found (RRF). For example, RRF@10 is the proportion of all relevant records identified after screening 10% of the dataset.

Work Saved over Sampling (WSS) measures the proportion of screening effort that active learning saves, relative to random sampling, at a given level of recall. WSS is typically calculated at a recall of 0.95 (WSS@95). It reflects screening efficiency while accepting the loss of 5% of relevant records. Our primary comparison focuses on WSS@95 because 95% recall is a widely used benchmark for acceptable screening performance, which makes it a meaningful basis for assessing the model.
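Given the labels of records in the order the model presented them, RRF@10 and WSS@95 can be computed as in the Python sketch below. It assumes the standard formulation WSS@r = (TN + FN)/N - (1 - r), where TN + FN is the number of records left unscreened once recall r is reached. The function name and toy data are hypothetical, and at least one relevant record is assumed.

```python
import math


def rrf_and_wss(labels_in_order, recall_target=0.95, pct_screened=0.10):
    """Compute RRF@pct and WSS@recall from 0/1 labels in screening order."""
    n = len(labels_in_order)
    n_relevant = sum(labels_in_order)
    # RRF: share of all relevant records found within the first pct of records.
    k = math.ceil(round(pct_screened * n, 9))  # round() guards against float noise
    rrf = sum(labels_in_order[:k]) / n_relevant
    # Count records screened until recall first reaches the target.
    needed = math.ceil(round(recall_target * n_relevant, 9))
    screened = 0
    found = 0
    for y in labels_in_order:
        screened += 1
        found += y
        if found >= needed:
            break
    # WSS@r: share left unscreened minus the (1 - r) share random screening also skips.
    wss = (n - screened) / n - (1 - recall_target)
    return rrf, wss, screened
```

Take 20 records with 4 relevant ones appearing at positions 1, 2, 4, and 8. RRF@10 is 0.5 (2 of 4 found in the first 2 records). Reaching 95% recall requires finding all 4, which takes 8 records, so WSS@95 = 12/20 - 0.05 = 0.55.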

To ensure comparability and assess run-to-run variability, ASReview was evaluated using a Monte Carlo simulation design consisting of 10 independent runs per classifier (Naïve Bayes, Random Forest, and Logistic Regression). Each run was initialized with the same amount of prior knowledge—five relevant and five irrelevant studies. This design maintained a consistent starting condition across simulations while capturing stochastic variation inherent in active learning. All other parameters, including classifier type, query strategy, and feature-extraction method, were held constant. Results across the 10 runs were summarized using the mean, standard deviation, and 95% confidence intervals for key metrics, including WSS@95, RRF@10, and records required to reach 95% recall.
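A run-level summary of the kind described above can be sketched in Python with the standard library. The source does not state how the 95% confidence intervals were constructed. This sketch assumes a t-based interval with 9 degrees of freedom (ten runs), and the per-run values in the usage example are hypothetical.

```python
import statistics

# 97.5th percentile of Student's t with 9 degrees of freedom (assumes n = 10 runs).
T_CRIT_DF9 = 2.262


def summarize_runs(values, t_crit=T_CRIT_DF9):
    """Return mean, sample SD, and a t-based 95% CI for per-run metric values."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    half_width = t_crit * sd / n ** 0.5
    return mean, sd, (mean - half_width, mean + half_width)


# Hypothetical WSS@95 values from ten simulation runs.
wss_runs = [0.30, 0.32, 0.28, 0.31, 0.29, 0.33, 0.27, 0.30, 0.32, 0.28]
mean, sd, (ci_low, ci_high) = summarize_runs(wss_runs)
print(f"mean={mean:.3f} sd={sd:.3f} 95% CI=[{ci_low:.3f}, {ci_high:.3f}]")
```

The t critical value must be changed if the number of runs differs from ten.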

2.2.2. Large Language Models (ChatGPT)

In addition to the active learning approach, we implemented a ChatGPT-based approach using the GPT-4o model to automate abstract screening for a PRISMA-guided systematic review on LA in higher education. ChatGPT, developed by OpenAI, is a large language model that can understand and generate human-like text. GPT-4o is a recent iteration of this technology. It was chosen for its ability to process and analyze large volumes of text and to handle complex language contexts. It can therefore evaluate articles against predefined inclusion and exclusion criteria, which can streamline the review process and reduce the potential for human error. To assess stability and quantify uncertainty, we conducted ten independent runs of the identical prompt (see Appendix A: Prompt used to evaluate each article using the OpenAI API). This multi-run protocol allowed us to evaluate the consistency of results across runs, and it provided a more reliable basis for comparison with manual screening. The GPT-4o model was configured with the following parameters, which were held constant across all ten runs to ensure comparability and to suit the abstract screening task:

- Temperature Setting: The temperature was set to 0.50 to balance creativity with coherence, enabling the model to capture nuanced aspects of complex abstracts while maintaining consistent decision-making. Using this setting also introduced controlled stochasticity across the ten runs, allowing us to evaluate the model’s reliability under realistic variability.

- Max Tokens: The maximum number of tokens was set to 1, which restricted output to a single token and enforced a strictly binary response (“0” = exclude, “1” = include). This design follows evidence from Yu et al. (2024), who showed that in classification tasks, additional generated tokens are often redundant and do not improve decision accuracy. The constraint ensured concise and consistently formatted classifications and prevented extraneous text.

- Top-p Value: The top-p value was set to 1.00, so no nucleus truncation was applied and the model sampled from its full probability distribution over possible outputs. In line with standard practice for binary classification tasks, leaving top-p unconstrained avoids artificially narrowing the model's response space. The temperature setting then governs how strongly sampling favors the most probable tokens.

- Frequency and Presence Penalties: Both frequency and presence penalties were set to zero, so no penalty was applied to tokens that had already appeared. Because each response consisted of a single token, these penalties would have had little or no practical effect on the screening decisions. Leaving them at zero keeps the output determined by the prompt and the sampling settings alone.
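The parameter settings above map directly onto a Chat Completions request. The Python sketch below assembles the request and parses the single-token reply. It targets the `client.chat.completions.create` method of the `openai` Python SDK but does not import the SDK, so any client object exposing that method can be passed in. The sketch rests on three assumptions:

- The screening criteria go in a system message, and the title and abstract go in a user message.
- `criteria_prompt` is a placeholder for the Appendix A prompt, which is not reproduced here.
- The helper names are hypothetical.

```python
# Decoding settings from Section 2.2.2, held constant across all ten runs.
SCREENING_PARAMS = {
    "model": "gpt-4o",
    "temperature": 0.5,
    "max_tokens": 1,
    "top_p": 1.0,
    "frequency_penalty": 0,
    "presence_penalty": 0,
}


def build_request(criteria_prompt, title, abstract):
    """Assemble keyword arguments for one screening call (message layout assumed)."""
    messages = [
        {"role": "system", "content": criteria_prompt},
        {"role": "user", "content": f"Title: {title}\nAbstract: {abstract}"},
    ]
    return {**SCREENING_PARAMS, "messages": messages}


def parse_decision(reply_text):
    """Map the single-token reply to 1 (include) or 0 (exclude); None if malformed."""
    token = (reply_text or "").strip()
    return int(token) if token in {"0", "1"} else None


def screen(client, criteria_prompt, title, abstract):
    """Screen one record with an OpenAI-style client, e.g. openai.OpenAI()."""
    response = client.chat.completions.create(
        **build_request(criteria_prompt, title, abstract)
    )
    return parse_decision(response.choices[0].message.content)
```

Returning `None` for malformed replies lets a run flag records for manual follow-up instead of silently coding them as exclusions.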

Together, these settings determined the model's screening behavior. To further improve the sensitivity and specificity of GPT-4o, key parameters can be adjusted, and each adjustment changes how the model screens abstracts (details are given in Appendix B: Fine-Tune GPT Model).

2.2.3. Model Comparison

To ensure a fair evaluation, the results of our original manual screening were used as the benchmark. For ASReview, the reported Work Saved over Sampling (WSS) values were converted into standard evaluation metrics. The performance of both ASReview and ChatGPT was then assessed using sensitivity, specificity, precision, accuracy, and F1-score derived from the confusion matrix. The confusion matrix consists of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), and it summarizes agreement between actual and predicted values in classification (Alshami et al., 2023; Zeng, 2020). In binary classification tasks, each data instance is assigned either a positive or a negative label. In clinical applications, a positive label typically indicates the presence of illness, abnormality, or another deviation, whereas a negative label indicates no deviation from the baseline. Each prediction falls into one of four categories (Rainio et al., 2024; Zhu et al., 2010):

- a TP is a positive instance correctly predicted as positive;
- a TN is a negative instance correctly predicted as negative;
- an FP is a negative instance incorrectly predicted as positive;
- an FN is a positive instance incorrectly predicted as negative.

In our study, manual screening results served as the benchmark, with “positive” referring to inclusion and “negative” to exclusion.

- A true positive (TP) occurs when the ML tool includes a study that manual screening also included.
- A false negative (FN) occurs when the tool excludes a study that manual screening included.
- A true negative (TN) occurs when both the tool and manual screening exclude a study.
- A false positive (FP) occurs when the tool includes a study that manual screening excluded.
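Tallying the four confusion-matrix cells from paired decisions is straightforward. The Python sketch below treats manual screening as ground truth and codes inclusion as 1 and exclusion as 0. The function name and toy labels are illustrative.

```python
from collections import Counter


def confusion_counts(manual, predicted):
    """Tally TP, FP, TN, FN with manual screening as ground truth (1 = include)."""
    if len(manual) != len(predicted):
        raise ValueError("label lists must be the same length")
    cells = Counter()
    for truth, pred in zip(manual, predicted):
        if pred == 1:
            cells["TP" if truth == 1 else "FP"] += 1  # tool included the study
        else:
            cells["FN" if truth == 1 else "TN"] += 1  # tool excluded the study
    return {k: cells[k] for k in ("TP", "FP", "TN", "FN")}


manual = [1, 1, 0, 0, 1, 0]
tool = [1, 0, 1, 0, 1, 0]
print(confusion_counts(manual, tool))
```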

These evaluation metrics and the confusion matrix components provide a standardized way to evaluate the performance of different screening methods, making them useful for comparing the results of ASReview and other models. Work Saved at 95% Recall (WSS@95) represents the proportion of effort saved while maintaining a 95% recall rate. In this context, recall is fixed at 95% (0.95). The following formulas were used to calculate the standard evaluation metrics:

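Specificity:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

Sensitivity (Recall):

$$\text{Sensitivity} = \text{Recall} = \frac{TP}{TP + FN}$$

Precision:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Accuracy:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

F1-Score:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$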
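The formulas above translate directly into code. This Python sketch computes all five metrics from confusion-matrix counts and assumes every denominator is non-zero. The counts used to exercise it are hypothetical.

```python
def screening_metrics(tp, fp, tn, fn):
    """Compute the five screening metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # recall: share of relevant records included
    specificity = tn / (tn + fp)  # share of irrelevant records excluded
    precision = tp / (tp + fp)    # share of included records that are relevant
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


print(screening_metrics(tp=80, fp=170, tn=700, fn=50))
```

Algebraically, the F1-score also equals 2TP / (2TP + FP + FN). It therefore ignores true negatives, which is why it is informative when irrelevant records dominate the corpus.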

The F1-score is the harmonic mean of precision and recall, so neither metric is considered in isolation. This matters in systematic review screening. High recall minimizes the chance of missing relevant studies, whereas low precision creates unnecessary workload through false positives. The F1-score balances these considerations, making it a critical supplement to WSS, accuracy, and specificity when evaluating model performance. Converting WSS values into these metrics lets us evaluate and compare ASReview and ChatGPT on a common basis. Both tools were evaluated through repeated runs to assess stability. ASReview was run 10 times as Monte Carlo simulations, and GPT-4o was run 10 times under identical prompts and parameters. Mean values and 95% confidence intervals across runs were used for comparative analysis.
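The exact procedure used to convert WSS values into confusion-matrix counts is not detailed here. One plausible conversion, sketched below in Python, assumes the standard definition WSS@r = (TN + FN)/N - (1 - r). It treats the records a reviewer must screen to reach recall r as predicted inclusions, and the unscreened records as predicted exclusions. The function name and the round-number inputs are hypothetical.

```python
def wss_to_confusion(n_records, n_relevant, wss, recall=0.95):
    """Reconstruct approximate confusion-matrix counts from a WSS@recall value.

    Assumes WSS@r = (TN + FN) / N - (1 - r), so the number of records screened
    to reach recall r is N * (r - WSS).
    """
    screened = round((recall - wss) * n_records)  # predicted "include"
    tp = round(recall * n_relevant)               # relevant records found by then
    fn = n_relevant - tp
    fp = screened - tp
    tn = (n_records - n_relevant) - fp
    if fp < 0 or tn < 0:
        raise ValueError("inputs are inconsistent with the assumed WSS definition")
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}


print(wss_to_confusion(n_records=1000, n_relevant=100, wss=0.30))
```

With 1000 records, 100 of them relevant, and WSS@95 = 0.30, a reviewer screens 650 records. This gives 95 TP, 555 FP, 345 TN, and 5 FN, which can then be passed to the metric formulas above.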

3. Results and Discussion

3.1. Traditional Machine Learning

We conducted a 10-run Monte Carlo simulation using ASReview to evaluate three traditional machine learning classifiers: Naïve Bayes, Random Forest, and Logistic Regression. In all runs, the same initial prior knowledge set (five relevant and five irrelevant records) was used to ensure consistency across simulations. Random variation was introduced only through different random seeds controlling the active-learning sampling process, which determined the subsequent order in which unlabeled records were presented to the model.

Table 2 summarizes mean performance and 95% confidence intervals (CIs) across runs for three key indicators: the number of records required to reach 95% recall, work saved over sampling (WSS@95), and the proportion of relevant records found after screening 10% of records (RRF@10).

Table 2.

Traditional Machine Learning Algorithms (ASReview) Monte Carlo summary.

The WSS statistic represents the percentage of screening workload saved by using active learning instead of random manual screening once a defined level of recall is achieved. In this study, WSS@95% quantifies the proportion of records that can be skipped while still identifying 95% of all relevant articles. In the 10-run Monte Carlo simulation summarized in Table 2, Logistic Regression achieved the highest mean workload reduction, saving approximately 31.10% of the screening effort (95% CI [18.30–43.90]). It was followed by Naïve Bayes at 27.30% (95% CI [13.90–40.60]) and Random Forest at 20.40% (95% CI [9.10–31.70]). These results indicate that, on average, reviewers would need to examine roughly 4800–5600 records to capture nearly all relevant studies, substantially fewer than the full set of abstracts originally screened.

The differences in WSS values among classifiers reflect their underlying algorithmic characteristics. Naïve Bayes relies on conditional probability and assumes feature independence, so it is fast and efficient but may miss subtle contextual cues in the text. Random Forest, an ensemble of decision trees, produces stable predictions and requires more computation, yet in this corpus it ranked relevant records less efficiently than the other classifiers. Logistic Regression models inclusion decisions as a linear combination of text features. It performs especially well when relationships are approximately linear, which is consistent with its higher WSS@95% and RRF@10% results. Overall, these patterns align with prior findings that linear models often deliver strong baseline accuracy in text classification tasks used for systematic reviews (Pranckevičius & Marcinkevičius, 2017).

Figure 1 illustrates the average recall trajectories of the three classifiers. All models follow similar patterns, but Logistic Regression reaches high recall slightly earlier on average. This is consistent with its superior mean WSS@95% and RRF@10% performance reported in Table 2.

Figure 1.

Performance Evaluation of ASReview.

Table 3 presents the performance of ASReview in abstract screening for the three traditional machine learning classifiers (Naïve Bayes, Random Forest, and Logistic Regression), evaluated at the 95% recall level (WSS@95%). All classifiers achieved consistently high sensitivity (mean = 0.950 [0.946–0.954]), correctly identifying about 95% of the relevant studies (≈1032 true positives). However, specificity remained relatively low (0.346–0.409), meaning that only about 35–41% of irrelevant records were correctly excluded. Consequently, each model produced a substantial number of false positives (3400–3980). This directly limited precision (0.204–0.233) and yielded modest F1-scores (0.337–0.373). Accuracy also remained moderate (0.444–0.488), reflecting the large number of false positives relative to true negatives. Overall, these results highlight a fundamental trade-off in active learning–based systematic reviewing. The models are highly effective at identifying nearly all relevant studies, which is essential for comprehensive recall. However, this comes with lower specificity and precision, so a considerable number of irrelevant records are flagged for review. ASReview substantially improves the efficiency of the initial screening phase, but further refinement or integration with human review remains necessary to enhance overall screening effectiveness.

Table 3.

Performance Evaluation of ASReview in Abstract Screening at WSS@95% (10 Monte Carlo Runs).

3.2. Large Language Model

The performance of the GPT-4o model was assessed over 10 independent runs. Table 4 reports the mean confusion matrix counts (TP, FP, TN, FN) together with the corresponding mean evaluation metrics (sensitivity, specificity, accuracy, precision, and F1-score) and their 95% confidence intervals.

Table 4.

Performance Evaluation of GPT-4o Over Ten Independent Runs.

The GPT-4o model's sensitivity of 79.50% indicates that it correctly identified nearly 80% of the relevant articles, a strong capability for capturing the pertinent studies a comprehensive review needs. Specificity, the model's ability to correctly exclude irrelevant articles, was 68.80%. Precision was 0.314, meaning that just under one-third of the records identified as relevant were truly relevant. The F1-score of 0.449 summarizes the balance between recall and precision in a single value. The overall accuracy of 70.40% indicates that GPT-4o distinguished reasonably well between relevant and irrelevant articles. However, accuracy is dominated by the large majority of irrelevant records.
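The low precision despite moderately high specificity follows from class imbalance. Relevant records are a small minority, so even a modest false-positive rate among the many irrelevant records outnumbers the true positives. The Python sketch below makes this explicit by deriving precision and accuracy from sensitivity, specificity, and prevalence. It assumes a prevalence of 1086/7016, the share of unique records in Section 2.1 retained at the abstract stage. It yields values of roughly 0.32 and 0.70, close to the reported 0.314 and 0.704.

```python
def implied_precision_accuracy(sensitivity, specificity, prevalence):
    """Precision and accuracy implied by sensitivity, specificity, and prevalence."""
    tp_share = sensitivity * prevalence              # share of all records that are TP
    fp_share = (1 - specificity) * (1 - prevalence)  # share of all records that are FP
    tn_share = specificity * (1 - prevalence)        # share of all records that are TN
    precision = tp_share / (tp_share + fp_share)
    accuracy = tp_share + tn_share
    return precision, accuracy


prevalence = 1086 / 7016  # assumed share of records labeled relevant
precision, accuracy = implied_precision_accuracy(0.795, 0.688, prevalence)
print(round(precision, 3), round(accuracy, 3))
```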

From the perspective of systematic review practice, these results illustrate the strengths and limitations of GPT-4o. Its moderately high sensitivity reduces the risk of overlooking important studies, which is essential for comprehensive evidence synthesis. At the same time, its relatively higher specificity compared with traditional active learning approaches helps limit the volume of irrelevant articles that need to be screened, thereby saving reviewer time. The modest precision and mid-level F1-score indicate that some false positives remain, but the overall balance of metrics suggests that GPT-4o can substantially reduce human workload while still capturing the majority of relevant literature.

3.3. Model Comparison

To compare the ASReview machine learning algorithms (traditional ML) and the GPT-4o large language model comprehensively, we examine the results in Table 3 and Table 4. Both tables report sensitivity, specificity, accuracy, precision, and F1-score, and they show that both methods perform reasonably well in identifying relevant studies. However, a formal paired statistical comparison (e.g., McNemar's test) could not be conducted. The ASReview and GPT-4o results came from separate simulation runs rather than from paired record-level predictions. Performance comparisons therefore relied on run-level means and 95% confidence intervals across repeated simulations. This approach provides a reasonable basis for comparing overall trends and the relative strengths of both methods, although it does not constitute a formal test of differences.
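A paired comparison would require both tools' decisions on the same records, for example the include/exclude status of every record in one ASReview run and in one GPT-4o run. Given such data, an exact McNemar test could be computed as in the Python sketch below. It counts discordant records (one tool correct, the other wrong) and doubles the binomial tail probability. The function name and toy data are hypothetical.

```python
from math import comb


def mcnemar_exact(truth, pred_a, pred_b):
    """Exact two-sided McNemar test for two tools scored on the same records.

    Returns (b, c, p_value): b counts records only tool A classified correctly,
    c counts records only tool B classified correctly.
    """
    b = c = 0
    for y, pa, pb in zip(truth, pred_a, pred_b):
        a_ok, b_ok = pa == y, pb == y
        if a_ok and not b_ok:
            b += 1
        elif b_ok and not a_ok:
            c += 1
    n = b + c
    if n == 0:
        return b, c, 1.0  # no discordant records, so no evidence of a difference
    k = min(b, c)
    # Under H0, discordant records split Binomial(n, 0.5); double the smaller tail.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)
```

Only discordant records enter the test, so records that both tools classified identically, however numerous, do not affect the p-value.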

3.3.1. Sensitivity, Specificity, and Precision

Sensitivity measures the proportion of relevant records correctly identified by the model. In ASReview, recall was fixed at 0.95 for the WSS@95 comparison, yielding a mean sensitivity of 0.950 (95% CI 0.946 to 0.954) across classifiers (Table 3). Achieving this recall required screening approximately 4833 to 5585 records, which represents about 68 to 80% of the 7020 total records, indicating a substantial human workload to ensure comprehensive coverage. In contrast, GPT-4o achieved a mean sensitivity of 0.795 (95% CI 0.790 to 0.800) without interactive labeling during runs. Although its recall was lower than ASReview’s fixed 95%, GPT-4o correctly identified roughly 80% of relevant studies while greatly reducing the number of records requiring manual review. ASReview’s specificity ranged from 0.346 to 0.409, whereas GPT-4o reached 0.688 (95% CI 0.684 to 0.692); corresponding precision values were 0.204 to 0.233 for ASReview and 0.314 (95% CI 0.311 to 0.317) for GPT-4o. These results indicate that while ASReview maximizes recall through active learning, GPT-4o achieves a better balance between recall and precision, reducing false positives and improving overall screening efficiency.

Specificity measures the proportion of irrelevant records correctly excluded. ASReview demonstrated moderate performance, with specificity values ranging from 0.346 to 0.409 across classifiers, resulting in several thousand false positives. In contrast, GPT-4o achieved a much higher mean specificity of 0.688 (95% CI 0.684 to 0.692), reflecting stronger capability in excluding irrelevant articles. This difference in specificity directly affected precision, which was considerably lower for ASReview (0.204 to 0.233) than for GPT-4o (0.314 [95% CI 0.311 to 0.317]). The higher precision of GPT-4o indicates that a larger proportion of the records it identified as relevant were truly relevant, thereby reducing the manual burden associated with reviewing false positives in later stages of the screening process.

3.3.2. Accuracy, F1-Score and Overall Performance

Accuracy reflects the overall proportion of correct classifications, combining both true positives and true negatives. Across classifiers in ASReview, accuracy ranged from 0.461 (95% CI 0.444 to 0.478) for Random Forest to 0.471 (95% CI 0.454 to 0.488) for Naïve Bayes, with Logistic Regression showing an intermediate value of 0.465 (95% CI 0.448 to 0.482). These values indicate moderate overall performance when balancing correct inclusions and exclusions. In contrast, GPT-4o achieved a substantially higher mean accuracy of 0.704 (95% CI 0.701 to 0.707), demonstrating stronger capability in correctly distinguishing between relevant and irrelevant studies.

Beyond accuracy, the F1-score offers a balanced measure of precision and recall. ASReview's F1-scores were modest, ranging from 0.353 (95% CI 0.337 to 0.369) for Random Forest to 0.357 (95% CI 0.341 to 0.373) for Naïve Bayes, with Logistic Regression at 0.355 (95% CI 0.339 to 0.371). These results reflect the trade-off inherent in this active learning setup: very high recall (0.950 [95% CI 0.946 to 0.954]) paired with relatively low precision (0.204 to 0.233). In comparison, GPT-4o reached a higher mean F1-score of 0.449 (95% CI 0.445 to 0.453), showing a more balanced performance that captures most relevant studies while reducing false positives. This improvement in balance underscores GPT-4o's efficiency advantage: it lowers reviewer workload while still retaining roughly four in five relevant studies.
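The F1-score is the harmonic mean of precision and recall, so it is pulled toward the weaker of the two. The short sketch below, with hypothetical helper names, checks that GPT-4o's reported mean precision (0.314) and recall (0.795) are consistent with its mean F1-score of 0.449. It also uses two made-up runs to show why a mean of per-run F1-scores need not equal the F1-score computed from mean precision and recall.

```python
from statistics import mean


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


# F1 from GPT-4o's reported mean precision and recall.
gpt4o_f1 = f1_score(0.314, 0.795)
print(round(gpt4o_f1, 3))  # 0.45

# Two hypothetical runs: averaging F1 per run vs. F1 of the averages.
runs = [(0.20, 0.90), (0.30, 0.70)]
mean_of_f1 = mean(f1_score(p, r) for p, r in runs)
f1_of_means = f1_score(mean(p for p, _ in runs), mean(r for _, r in runs))
print(round(mean_of_f1, 3), round(f1_of_means, 3))
```

For ASReview, the same calculation with recall 0.95 and precision between 0.204 and 0.233 gives values of roughly 0.34 to 0.37, bracketing the reported 0.353 to 0.357.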

Overall, the results highlight distinct roles for the two approaches. ASReview remains well suited for contexts where exhaustive inclusion and minimal omission of relevant studies are essential, despite the additional human effort required to address false positives. GPT-4o, by contrast, provides a more efficient option, achieving a better equilibrium between recall and precision and reducing the manual screening burden when time and resources are limited.

Figure 2 visualizes these differences by comparing mean sensitivity, specificity, accuracy, precision, and F1-score between ASReview (averaged across classifiers at WSS@95) and GPT-4o.

Figure 2.

Comparative performance of ASReview and GPT-4o.

Several factors may explain the performance differences between ASReview and GPT-4o, even though both address the same binary classification task. Fundamentally, their algorithmic foundations differ. ASReview relies on supervised machine learning classifiers such as Naïve Bayes, Random Forest, or Logistic Regression, whose performance depends heavily on the quality of the labeled training data and on researcher expertise during the interactive training process (Quan et al., 2024). In contrast, GPT-4o is a large language model that draws on general reasoning, natural language inference, and prior linguistic knowledge to evaluate abstracts without task-specific training. Because large language models rely on semantic interpretation, their performance may be influenced by textual ambiguity or by the framing of prompts, with prompt quality directly shaping model outputs (Alshami et al., 2023). The most effective prompts clearly state exclusion criteria and avoid negative examples, but they should also be tailored to the specific features of the corpus (Syriani et al., 2024). Adjusting standard settings, such as modifying the prompt or expanding a Likert rating scale from 1 to 5 to 1 to 10, has also been shown to significantly affect LLM screening performance (Dennstädt et al., 2024).
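To make the contrast concrete, the sketch below shows the general shape of an active learning screening loop of the kind ASReview supports. A classifier, here a hand-written multinomial Naïve Bayes over raw word counts, is retrained after each decision, and the unlabeled record with the highest predicted probability of relevance is shown to the reviewer next. This is a simplified illustration under stated assumptions, not ASReview's implementation, which uses its own feature extraction and query strategies. The function names, toy records, and simulated `oracle` labels are hypothetical, and the seed set must contain at least one relevant and one irrelevant record.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def train_nb(docs, labels, alpha=1.0):
    """Fit a multinomial Naive Bayes model with Laplace smoothing."""
    counts = {0: Counter(), 1: Counter()}
    for doc, y in zip(docs, labels):
        counts[y].update(tokenize(doc))
    vocab_size = len(set(counts[0]) | set(counts[1])) + 1  # +1 slot for unseen words
    return {
        "counts": counts,
        "totals": {y: sum(counts[y].values()) for y in (0, 1)},
        "docs": Counter(labels),
        "vocab_size": vocab_size,
        "alpha": alpha,
    }


def prob_relevant(model, doc: str) -> float:
    """Posterior probability that `doc` belongs to the relevant class (1)."""
    a, n = model["alpha"], sum(model["docs"].values())
    log_post = {}
    for y in (0, 1):
        lp = math.log((model["docs"][y] + a) / (n + 2 * a))  # smoothed prior
        denom = model["totals"][y] + a * model["vocab_size"]
        for tok in tokenize(doc):
            lp += math.log((model["counts"][y][tok] + a) / denom)
        log_post[y] = lp
    shift = max(log_post.values())  # subtract the max for numerical stability
    e0, e1 = (math.exp(log_post[y] - shift) for y in (0, 1))
    return e1 / (e0 + e1)


def active_screen(records, oracle, seed_ids, budget):
    """Return the order in which records are shown to the (simulated) reviewer."""
    labeled = {i: oracle[i] for i in seed_ids}
    order = list(seed_ids)
    while len(labeled) < min(budget, len(records)):
        ids = list(labeled)
        model = train_nb([records[i] for i in ids], [labeled[i] for i in ids])
        pool = [i for i in range(len(records)) if i not in labeled]
        nxt = max(pool, key=lambda i: prob_relevant(model, records[i]))
        labeled[nxt] = oracle[nxt]  # reviewer's decision, simulated from labels
        order.append(nxt)
    return order


records = [
    "learning analytics predicts student grades",
    "protein folding simulation in cells",
    "soil erosion rainfall model",
    "learning analytics dashboard improves grades",
    "student grades and lms logs learning analytics",
    "volcano ash dispersion forecast",
]
oracle = [1, 0, 0, 1, 1, 0]
print(active_screen(records, oracle, seed_ids=[0, 1], budget=4))  # [0, 1, 4, 3]
```

Because the model depends entirely on the labels supplied so far, poor or inconsistent seed labels propagate into the ranking, which is the dependence on training data quality described above.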

Previous studies have reported that ChatGPT and similar LLMs can function as fully automated systematic review tools, showing strong performance in filtering irrelevant articles while sometimes being overly inclusive by retaining borderline or ambiguous records (Alshami et al., 2023). Some studies have noted exceptionally high sensitivity, while others emphasize that zero-shot robustness allows LLMs to generalize without pretraining or fine-tuning on specific review datasets (Issaiy et al., 2024). Variability in findings has also been attributed to default settings and model choices: different machine learning classifiers (e.g., logistic regression, neural networks, or random forests) yield varying outcomes in ASReview, and parameter optimization can strongly affect both ASReview and LLM results (Muthu, 2023; Pijls, 2023). Yao et al. (2024) examined four machine learning tools for assisting systematic reviews in the medical field and found that the tools varied in accuracy and efficiency. Similarly, Li et al. (2024) found comparable variation in performance when using ChatGPT. Notably, the accuracy of these tools was shown to depend heavily on the proportion of citations that were manually screened and used to train them, highlighting the importance of the training process for ML performance. However, another review noted that it is difficult to determine the correct stopping point for training (Ofori-Boateng et al., 2024), and the absence of a unified or standard guideline for the training process may also affect model performance. Review goals and parameter settings can likewise influence performance. Yao et al. (2024) pointed out a trade-off between sensitivity and specificity, and Ofori-Boateng et al. (2024) indicated a trade-off between precision and recall: improving one typically reduces the other.

Techniques that can enhance precision while maintaining high recall include feature enrichment, resampling methods, and query expansion. Which performance metrics matter most therefore depends on how much weight a review places on precision relative to recall. Dennstädt et al. (2024) stated that it is still uncertain how these systems will perform in prospective use and what this means for conducting systematic literature reviews (SLRs). Caution is advised given the limited evidence (Yao et al., 2024). Why different approaches yield different sensitivity and specificity trade-offs therefore remains unknown and warrants further research.
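The precision and recall trade-off discussed above can be illustrated with any screener that outputs a relevance score. Lowering the inclusion threshold admits more records, raising recall at the cost of precision. The sketch below uses made-up scores and labels, together with the F-beta score (a standard generalization of F1 in which beta > 1 weights recall more heavily and beta < 1 weights precision more heavily), to show how the preferred threshold depends on that weighting. The function names are hypothetical.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when records scoring >= threshold are included."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f_beta(precision, recall, beta):
    """F-beta score; beta > 1 favours recall, beta < 1 favours precision."""
    b2 = beta ** 2
    den = b2 * precision + recall
    return (1 + b2) * precision * recall / den if den else 0.0


# Hypothetical relevance scores (higher = more likely relevant) and true labels.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0, 1, 0]

for t in (0.75, 0.55, 0.25):
    p, r = precision_recall(scores, labels, t)
    print(f"threshold {t}: precision {p:.2f}, recall {r:.2f}, "
          f"F2 {f_beta(p, r, 2):.2f}, F0.5 {f_beta(p, r, 0.5):.2f}")
```

With these toy values, F2 is highest at the most lenient threshold (recall 1.00), whereas F0.5 is highest at the strictest threshold (precision 1.00).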

Despite these limitations, prior studies and our findings suggest that GPT outperforms traditional active learning methods in terms of time efficiency (Alshami et al., 2023). The choice between ASReview and GPT-4o should therefore depend on the specific goals of the systematic review. ASReview may be more appropriate when reviewers can provide high-quality training examples, and the priority is to capture as many relevant studies as possible with minimal risk of omission. In contrast, GPT-4o may serve as an efficient complementary tool for broader screening tasks, particularly when the aim is to reduce reviewer workload and achieve a more balanced overall performance.

4. Conclusions

In the rapidly evolving field of higher education, the need for efficient and accurate systematic reviews is critical. This study provides one of the first direct, domain-specific comparisons of ASReview and GPT-4o for abstract screening within LA. Our results show that ASReview's machine learning classifiers can identify nearly all relevant records (95% recall at the WSS@95 stopping point), which is crucial for comprehensive systematic reviews. However, this high sensitivity comes with the trade-off of low precision, modest F1-scores, and a substantial human workload, as about 68 to 80% of the 7020 records still had to be screened manually to reach this recall.

On the other hand, GPT-4o offers more balanced performance, with substantially higher specificity, overall accuracy, and F1-score. This makes GPT-4o more effective at reducing false positives and minimizing human intervention. The choice between ASReview and GPT-4o depends on the specific priorities of the review process: whether the goal is to maximize coverage of relevant studies (favoring ASReview) or to reduce workload by improving efficiency (favoring GPT-4o).

Looking ahead, integrating these machine learning tools could make systematic reviews more reliable and adaptable. A hybrid approach that uses ASReview to identify a broad range of studies and GPT-4o to refine the results could combine the strengths of both methods. In addition, Motzfeldt Jensen et al. (2025) found that ChatGPT-4o could serve as a second rater for data extraction in systematic reviews. The effects of hybrid combinations of AI and human effort therefore merit further exploration.
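How a combination of two screeners shifts sensitivity (Se) and specificity (Sp) can be sketched with simple identities. These rely on the strong, probably unrealistic assumption that the two screeners err independently given a record's true status; in practice, both may stumble on the same ambiguous abstracts. Let A and G denote ASReview and GPT-4o. In a sequential design, a record is kept only if both include it (AND rule). In a second-reviewer design, a record is kept if either includes it (OR rule):

```latex
\begin{aligned}
\text{AND rule:}\quad & \mathrm{Se} = \mathrm{Se}_A\,\mathrm{Se}_G,
  & \mathrm{Sp} &= 1 - (1 - \mathrm{Sp}_A)(1 - \mathrm{Sp}_G) \\
\text{OR rule:}\quad & \mathrm{Se} = 1 - (1 - \mathrm{Se}_A)(1 - \mathrm{Se}_G),
  & \mathrm{Sp} &= \mathrm{Sp}_A\,\mathrm{Sp}_G
\end{aligned}
```

Under this assumption, chaining the reported mean sensitivities (0.950 and 0.795) in an AND design would retain only about 0.76 of relevant records while raising specificity, and an OR design would move in the opposite direction. This suggests that the ordering and combination rule of any hybrid workflow should be tested empirically.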

From a practical perspective, our findings suggest a decision framework for researchers. ASReview is best suited for early-phase scoping reviews or contexts where comprehensiveness is paramount, while GPT-4o is more appropriate for teams that face time or personnel constraints and need to minimize false positives. For projects where both recall and efficiency are critical, a sequential hybrid approach may provide the best balance. In terms of reviewer training, ASReview requires domain-specific expertise to curate and label high-quality training datasets, while GPT-4o requires familiarity with coding and prompt design.

However, there are limitations to consider. The effectiveness of both tools relies on the quality of the data, and biases in input data can affect outcomes. ChatGPT's output is particularly sensitive to prompt phrasing, and there is no consensus on which prompt characteristics work best. Additionally, these tools require technical expertise and may incur costs that limit accessibility for some researchers. For example, effective use of ChatGPT often requires familiarity with coding and prompt engineering, whereas ASReview requires domain-specific expertise to curate high-quality training datasets. Beyond these practical considerations, several methodological limitations remain. We did not include learning curve analyses for ASReview to show how performance evolves with training set size, nor did we conduct a systematic prompt sensitivity analysis for GPT-4o (beyond the single alternative prompt described in Appendix B) or a detailed error analysis of failure cases and boundary conditions. These aspects are important for understanding edge-case performance and model robustness but were beyond the scope of this study. Future research should address these elements to provide a fuller picture of when and how each method performs best.

This study is also limited by its single-case focus on higher education and by its evaluation of only two tools (GPT-4o and ASReview) due to resource constraints. For GPT-4o, we sought to strengthen statistical rigor by conducting ten independent runs and reporting mean performance metrics with confidence intervals. While this approach reduces the variance associated with single-run outcomes, only ten runs were conducted; future research could run additional iterations to further confirm robustness. In addition, paired record-level decisions were not retained, which prevents the use of paired statistical tests such as McNemar's test. Furthermore, alternative tools (e.g., Abstrackr, DistillerSR, RobotAnalyst, Research Screener) were not tested, nor were results validated across multiple datasets or domains. These factors constrain the generalizability of our findings beyond the learning analytics context.
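For future studies that retain paired record-level decisions, McNemar's test compares two screeners on the same records using only the discordant pairs, that is, records on which exactly one screener agrees with the reference standard. The minimal sketch below implements the exact (binomial) two-sided version in plain Python. The function name and toy decisions are hypothetical, and this is one common variant; a chi-square approximation with continuity correction is also widely used.

```python
from math import comb


def mcnemar_exact(decisions_a, decisions_b, truth):
    """Exact two-sided McNemar test for two screeners judged on the same records.

    Returns (b, c, p_value): b counts records only screener A got right,
    c counts records only screener B got right.
    """
    b = sum(1 for a, g, t in zip(decisions_a, decisions_b, truth) if a == t and g != t)
    c = sum(1 for a, g, t in zip(decisions_a, decisions_b, truth) if a != t and g == t)
    n = b + c
    if n == 0:
        return b, c, 1.0  # no discordant pairs: no evidence of a difference
    # Under H0 each discordant pair is equally likely to favour A or B.
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)


truth = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
screener_a = truth[:]                                  # agrees with the reference everywhere
screener_b = [1 - t for t in truth[:6]] + truth[6:]    # wrong on the first six records
print(mcnemar_exact(screener_a, screener_b, truth))   # (6, 0, 0.03125)
```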

Moreover, because LLMs predict word sequences from past data, they can inherit biases (e.g., ethnic or gender biases), which may make them unsuitable as fair and reliable evaluators (Dennstädt et al., 2024). ML models can produce unfair outcomes due to biases arising from data, development, and interaction factors, including training data, algorithms, feature selection, institutional practices, reporting, and temporal shifts (Hanna et al., 2025). Such biases may also contribute to performance differences. Future studies should extend the analysis to additional tools, datasets, and robustness checks to strengthen both statistical validity and practical applicability. Even with these limitations, this study provides an early effort to compare ASReview and GPT-4o for abstract screening in systematic reviews, offering methodological insights with practical implications. By acknowledging both the potential and the constraints, our work helps lay the groundwork for future research on ML-assisted screening.

ASReview and GPT-4o each provide distinct advantages in supporting systematic reviews. ASReview is most effective when the priority is to include as many relevant studies as possible, ensuring comprehensive coverage of the literature. In contrast, GPT-4o is better suited for improving efficiency by reducing the number of irrelevant articles and minimizing the workload required from reviewers. When applied together, these tools can complement one another, helping researchers conduct reviews that are both thorough and efficient, thereby better aligning with the evolving demands of educational research.

Author Contributions

Conceptualization, Z.X. and S.M.; methodology, Z.X. and X.Z.; software, X.Z., Z.X. and S.M.; validation, X.Z. and Z.X.; formal analysis, X.Z. and S.M.; investigation, Z.X. and S.M.; resources, Z.X.; data curation, Z.X.; writing—original draft preparation, Z.X., X.Z. and S.M.; writing—review and editing, Z.X.; visualization, X.Z.; supervision, Z.X.; project administration, Z.X.; funding acquisition, Z.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research is based upon work supported by the U.S. Department of Homeland Security under Grant Award No. 18STCBT00001 through the Cross-Border Threat Screening and Supply Chain Defense Center of Excellence.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available in Texas Data Repository at https://doi.org/10.18738/T8/WDFJOG (accessed on 4 November 2025).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LA | Learning Analytics |
| ML | Machine Learning |
| TP | True Positives |
| FP | False Positives |
| TN | True Negatives |
| FN | False Negatives |
| WSS | Work Saved over Sampling |

Appendix A. Prompt Used to Evaluate Each Article Using the OpenAI API

You are assisting a PRISMA abstract screening for:

- “Learning Analytics (LA) in Higher Education”.

Your task is to decide if the record should be included for full-text screening.

Be liberal and maximize sensitivity:

- If in doubt or information is missing, INCLUDE (1).
- Only EXCLUDE (0) if the abstract/title clearly and explicitly meets an exclusion criterion.

Output: ONLY one character

- 0 = Exclude
- 1 = Include
- No explanations. No extra characters.

Record

- Title: {title}
- Abstract: {abstract}
- Year: {year}

Inclusion criteria (all should be satisfied, but missing/unclear info = still include):

- I1. Focus on Learning Analytics (e.g., LMS logs, interaction/behavior data, at-risk prediction, study behaviors linked to outcomes).
- I2. Evaluates students’ academic performance or related outcomes (grades, GPA, course completion, exam scores).
- I3. Higher education setting (undergraduate/graduate, for-credit). MOOCs are NOT higher ed.
- I4. Empirical study using learner-level primary data (institutional logs, course records). If unclear, assume primary.
- I5. Participants are formal learners (enrolled in credit-bearing programs).
- I6. Publication is peer-reviewed journal, peer-reviewed conference, or dissertation. (Exclude book chapters, reports.)
- I7. English language.
- I8. Published Jan 2011–Jan 2023.

Exclusion criteria (EXCLUDE ONLY if clearly stated in abstract/title):

- E1. Not Learning Analytics.
- E2. No focus on academic performance/outcomes.
- E3. No learning impact or outcome evaluation.
- E4. No empirical evidence (e.g., reviews without data, purely secondary/aggregate data).
- E5. Not higher education (K-12, workforce training, MOOCs).
- E6. Method development only, no application to outcomes.
- E7. Literature review, meta-analysis, commentary, editorial.
- E8. Not English.
- E9. Published outside Jan 2011–Jan 2023.

Decision rules:

- If an exclusion criterion is explicitly and unambiguously met → 0.
- Otherwise (unclear, missing, or ambiguous) → 1.
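The prompt above uses `{title}`, `{abstract}`, and `{year}` placeholders. One plausible way to fill them and to enforce the single-character output contract is sketched below in Python. The template is abbreviated, and the helper names and the choice to treat any malformed reply as an include (mirroring the prompt's "if in doubt, INCLUDE" rule) are assumptions rather than the authors' code.

```python
# Hypothetical, abbreviated template: the full Appendix A text would replace
# the "..." line. Only {title}, {abstract} and {year} are placeholders.
PROMPT_TEMPLATE = (
    "You are assisting a PRISMA abstract screening for:\n"
    "- Learning Analytics (LA) in Higher Education.\n"
    "...\n"
    "Record\n"
    "- Title: {title}\n"
    "- Abstract: {abstract}\n"
    "- Year: {year}\n"
)


def build_prompt(title, abstract, year) -> str:
    """Fill the placeholders; missing fields become empty strings so that the
    prompt's 'missing/unclear info = still include' rule can apply."""
    return PROMPT_TEMPLATE.format(
        title=title or "",
        abstract=abstract or "",
        year="" if year is None else year,
    )


def parse_decision(reply: str) -> int:
    """Map the model's reply to 0 (exclude) or 1 (include).

    Assumption: anything other than a bare "0" counts as include, mirroring
    the prompt's "if in doubt, INCLUDE" rule so that malformed replies never
    silently drop a record.
    """
    return 0 if reply.strip() == "0" else 1


prompt = build_prompt("Predicting GPA from LMS logs", None, 2019)
print(prompt.splitlines()[-1])  # - Year: 2019
```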

Appendix B. Fine-Tune GPT Model

To further adjust the sensitivity and specificity of the GPT-4o model, several key generation parameters can be tuned. These settings change the model's response characteristics at inference time; unlike fine-tuning, they do not retrain the model. The four main adjustments are described below.

Temperature Setting. The default temperature was set at 0.5 to balance consistency with some variability in responses. Lowering the temperature (e.g., to 0.2–0.3) makes outputs more deterministic, which can improve specificity by reducing random false positives but may reduce sensitivity by excluding borderline cases. Raising the temperature (e.g., to 0.6–0.7) increases variability, which can enhance sensitivity by capturing more relevant articles but may lower specificity by introducing additional false positives. Temperature tuning therefore adjusts the trade-off between sensitivity and specificity, depending on whether the goal is to maximize inclusiveness or limit misclassifications.

Max Tokens. By default, the maximum output length was set to 1 token to enforce a binary decision (“0” = exclude, “1” = include). Increasing this limit (e.g., to 10–50 tokens) allows the model to produce short explanations in addition to the label. These longer outputs can specify which inclusion or exclusion criteria are met, making the decision process more transparent. This adjustment influences interpretability rather than the underlying classification accuracy.

Top-p Value. The default top-p was set to 1.0, meaning the model considered the full probability distribution of possible outputs. Lowering this value (e.g., to 0.8–0.9) restricts sampling to a narrower set of high-probability tokens, making outputs more conservative and consistent. This adjustment can reduce false positives and improve specificity but may also lower sensitivity by excluding some borderline relevant studies. Top-p tuning thus provides a mechanism to shift the balance between inclusiveness and strictness in classification.

Frequency and Presence Penalties. Both frequency and presence penalties were set to their default values of 0.0. At this setting, the model generates outputs without additional constraints on repetition or novelty. Adjusting these penalties upward (e.g., frequency = 0.5, presence = 0.6) can reduce repetitive outputs and encourage more varied responses, which may improve interpretability when longer outputs are used. However, in the default binary classification setting with a single-token output, these parameters have minimal effect on model performance.

These parameter adjustments can collectively tailor the model's screening behavior to the needs of researchers in the systematic review process. When tuned toward sensitivity, they can provide a more comprehensive sample for the next round of full-text screening, supporting a thorough and reliable literature review.
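The parameter settings described above correspond to arguments of the OpenAI Chat Completions endpoint. The sketch below assumes the official `openai` Python package (v1 interface, `client.chat.completions.create`). The model identifier `"gpt-4o"`, sending the whole prompt as a single user message, and the helper names are assumptions, since the exact request used in the study is not reported here. Keeping request construction separate from the network call makes it easy to vary one parameter at a time.

```python
# Values mirror the settings reported in this appendix; the model name and
# message layout are assumptions.
DEFAULT_PARAMS = {
    "model": "gpt-4o",
    "temperature": 0.5,        # moderate randomness
    "max_tokens": 1,           # forces a single-token "0"/"1" answer
    "top_p": 1.0,              # sample from the full probability mass
    "frequency_penalty": 0.0,
    "presence_penalty": 0.0,
}


def build_request(prompt: str, **overrides) -> dict:
    """Keyword arguments for client.chat.completions.create()."""
    params = {**DEFAULT_PARAMS, **overrides}  # copy, so defaults stay unchanged
    params["messages"] = [{"role": "user", "content": prompt}]
    return params


def screen_record(client, prompt: str, **overrides) -> str:
    """Send one screening request and return the raw reply text.

    `client` is expected to be an openai.OpenAI() instance, which reads the
    API key from the OPENAI_API_KEY environment variable.
    """
    response = client.chat.completions.create(**build_request(prompt, **overrides))
    return response.choices[0].message.content
```

For example, `build_request(prompt, temperature=0.2)` yields a more deterministic configuration. Raising `max_tokens` only helps if the prompt is also changed to ask for a short explanation, because the Appendix A prompt forbids one.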

In addition to parameter tuning, we also examined how prompt design influences model performance. The main analyses in this paper were conducted using a shorter, liberal prompt that emphasized inclusiveness, reflecting the priority in systematic reviews to minimize the risk of excluding relevant studies. For robustness, we also tested a longer, more comprehensive prompt that explicitly enumerated inclusion and exclusion criteria. This alternative prompt increased specificity and precision but substantially lowered sensitivity, illustrating the trade-off between strictness and coverage. These findings highlight that, alongside parameter settings, the formulation of the prompt itself is a critical factor in shaping GPT-4o’s classification behavior.

References

- Adamse, I., Eichelsheim, V., Blokland, A., & Schoonmade, L. (2024). The risk and protective factors for entering organized crime groups and their association with different entering mechanisms: A systematic review using ASReview. European Journal of Criminology, 21(6), 859–886.

- Alshami, A., Elsayed, M., Ali, E., Eltoukhy, A. E., & Zayed, T. (2023). Harnessing the power of ChatGPT for automating systematic review process: Methodology, case study, limitations, and future directions. Systems, 11(7), 351.

- Bannach-Brown, A., Przybyła, P., Thomas, J., Rice, A. S., Ananiadou, S., Liao, J., & Macleod, M. R. (2019). Machine learning algorithms for systematic review: Reducing workload in a preclinical review of animal studies and reducing human screening error. Systematic Reviews, 8, 23.

- Chai, K. E., Lines, R. L., Gucciardi, D. F., & Ng, L. (2021). Research screener: A machine learning tool to semi-automate abstract screening for systematic reviews. Systematic Reviews, 10, 93.

- Dennstädt, F., Zink, J., Putora, P. M., Hastings, J., & Cihoric, N. (2024). Title and abstract screening for literature reviews using large language models: An exploratory study in the biomedical domain. Systematic Reviews, 13(1), 158.

- Gates, A., Guitard, S., Pillay, J., Elliott, S. A., Dyson, M. P., Newton, A. S., & Hartling, L. (2019). Performance and usability of machine learning for screening in systematic reviews: A comparative evaluation of three tools. Systematic Reviews, 8, 278.

- Gates, A., Johnson, C., & Hartling, L. (2018). Technology-assisted title and abstract screening for systematic reviews: A retrospective evaluation of the abstrackr machine learning tool. Systematic Reviews, 7, 45.

- Guo, E., Gupta, M., Deng, J., Park, Y. J., Paget, M., & Naugler, C. (2024). Automated paper screening for clinical reviews using large language models: Data analysis study. Journal of Medical Internet Research, 26, e48996.

- Hanna, M. G., Pantanowitz, L., Jackson, B., Palmer, O., Visweswaran, S., Pantanowitz, J., Deebajah, M., & Rashidi, H. H. (2025). Ethical and bias considerations in artificial intelligence/machine learning. Modern Pathology, 38(3), 100686.

- Issaiy, M., Ghanaati, H., Kolahi, S., Shakiba, M., Jalali, A. H., Zarei, D., Kazemian, S., Avanaki, M. A., & Firouznia, K. (2024). Methodological insights into ChatGPT’s screening performance in systematic reviews. BMC Medical Research Methodology, 24(1), 78.

- Kempeneer, S., Pirannejad, A., & Wolswinkel, J. (2023). Open government data from a legal perspective: An AI-driven systematic literature review. Government Information Quarterly, 40(3), 101823.

- Li, M., Sun, J., & Tan, X. (2024). Evaluating the effectiveness of large language models in abstract screening: A comparative analysis. Systematic Reviews, 13(1), 219.

- Motzfeldt Jensen, M., Brix Danielsen, M., Riis, J., Assifuah Kristjansen, K., Andersen, S., Okubo, Y., & Jørgensen, M. G. (2025). ChatGPT-4o can serve as the second rater for data extraction in systematic reviews. PLoS ONE, 20(1), e0313401.

- Muthu, S. (2023). The efficiency of machine learning-assisted platform for article screening in systematic reviews in orthopaedics. International Orthopaedics, 47(2), 551–556.

- Ofori-Boateng, R., Aceves-Martins, M., Wiratunga, N., & Moreno-Garcia, C. F. (2024). Towards the automation of systematic reviews using natural language processing, machine learning, and deep learning: A comprehensive review. Artificial Intelligence Review, 57(8), 200.

- Pijls, B. G. (2023). Machine Learning assisted systematic reviewing in orthopaedics. Journal of Orthopaedics, 48, 103–106.

- Pranckevičius, T., & Marcinkevičius, V. (2017). Comparison of naive bayes, random forest, decision tree, support vector machines, and logistic regression classifiers for text reviews classification. Baltic Journal of Modern Computing, 5(2), 221.

- Quan, Y., Tytko, T., & Hui, B. (2024). Utilizing ASReview in screening primary studies for meta-research in SLA: A step-by-step tutorial. Research Methods in Applied Linguistics, 3(1), 100101.

- Rainio, O., Teuho, J., & Klén, R. (2024). Evaluation metrics and statistical tests for machine learning. Scientific Reports, 14(1), 6086.

- Scherbakov, D., Hubig, N., Jansari, V., Bakumenko, A., & Lenert, L. A. (2024). The emergence of large language models (llm) as a tool in literature reviews: An llm automated systematic review. arXiv, arXiv:2409.04600.

- Spedener, L. (2023). Towards performance comparability: An implementation of new metrics into the ASReview active learning screening prioritization software for systematic literature reviews [Unpublished Master’s Thesis, Utrecht University]. Available online: https://studenttheses.uu.nl/handle/20.500.12932/45187 (accessed on 1 November 2025).

- Syriani, E., David, I., & Kumar, G. (2024). Screening articles for systematic reviews with ChatGPT. Journal of Computer Languages, 80, 101287.

- Van De Schoot, R., De Bruin, J., Schram, R., Zahedi, P., De Boer, J., Weijdema, F., Kramer, B., Huijts, M., Hoogerwerf, M., Ferdinands, G., Harkema, A., Willemsen, J., Ma, Y., Fang, Q., Hindriks, S., Tummers, L., & Oberski, D. L. (2021). An open source machine learning framework for efficient and transparent systematic reviews. Nature Machine Intelligence, 3(2), 125–133.

- Xiong, Z., Liu, T., Tse, G., Gong, M., Gladding, P. A., Smaill, B. H., Stiles, M. K., Gillis, A. M., & Zhao, J. (2018). A machine learning aided systematic review and meta-analysis of the relative risk of atrial fibrillation in patients with diabetes mellitus. Frontiers in Physiology, 9, 835.

- Yao, X., Kumar, M. V., Su, E., Miranda, A. F., Saha, A., & Sussman, J. (2024). Evaluating the efficacy of artificial intelligence tools for the automation of systematic reviews in cancer research: A systematic review. Cancer Epidemiology, 88, 102511.

- Yu, Y. C., Kuo, C. C., Ye, Z., Chang, Y. C., & Li, Y. S. (2024). Breaking the ceiling of the llm community by treating token generation as a classification for ensembling. arXiv, arXiv:2406.12585.

- Zeng, G. (2020). On the confusion matrix in credit scoring and its analytical properties. Communications in Statistics-Theory and Methods, 49(9), 2080–2093.

- Zhu, W., Zeng, N., & Wang, N. (2010, November 14–17). Sensitivity, specificity, accuracy, associated confidence interval and ROC analysis with practical SAS implementations. NESUG Proceeding: Health Care and Life Sciences (Vol. 19, p. 67), Baltimore, MD, USA.

- Zimmerman, J., Soler, R. E., Lavinder, J., Murphy, S., Atkins, C., Hulbert, L., Lusk, R., & Ng, B. P. (2021). Iterative guided machine learning-assisted systematic literature reviews: A diabetes case study. Systematic Reviews, 10(1), 97.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).