Learning Course Improvement Tools: Search Has Led to the Development of a Maturity Model

Abstract

1. Introduction

2. Description of the Course “Algorithmization and Programming of Solutions”

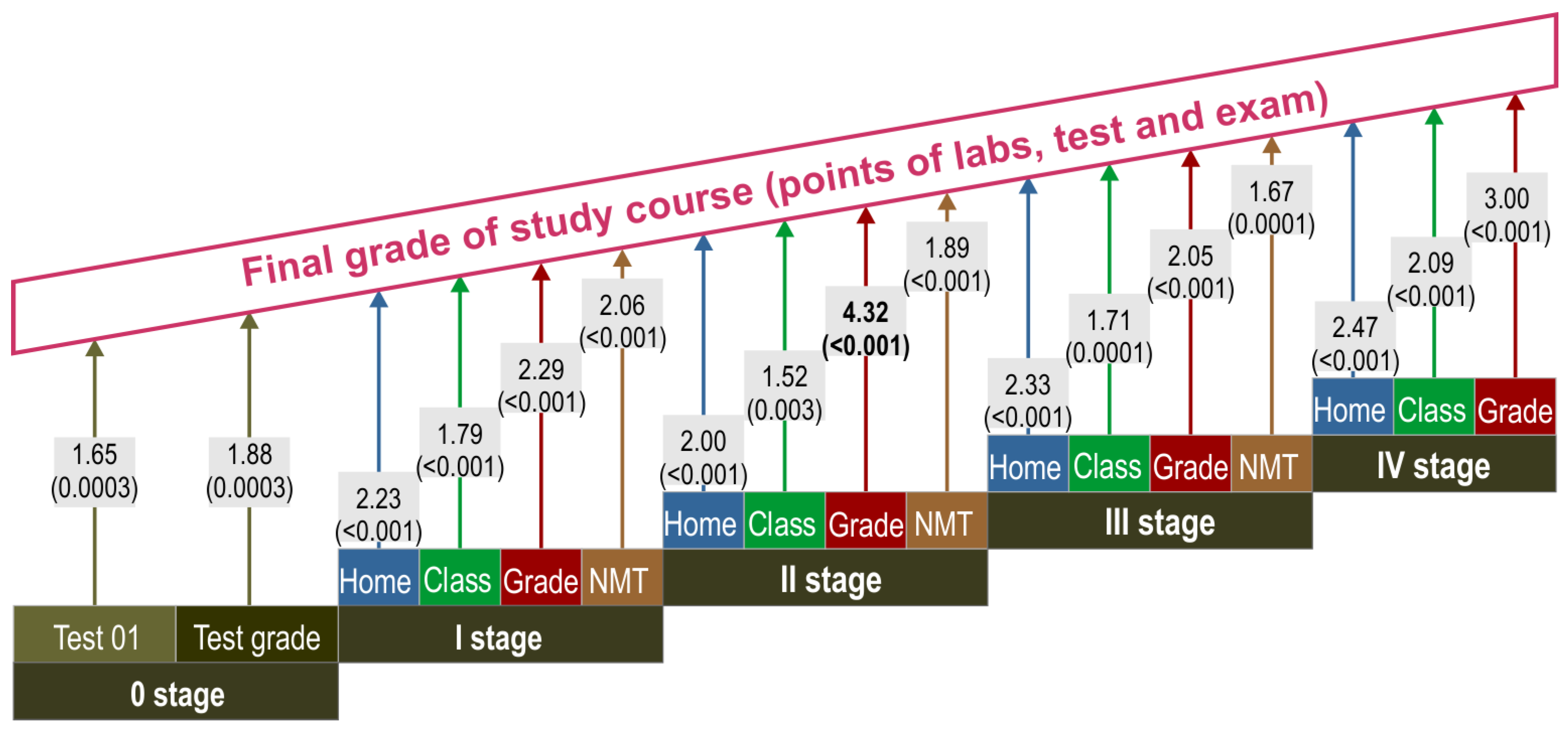

3. Predicting Student Performance Using Risk of Underachievement

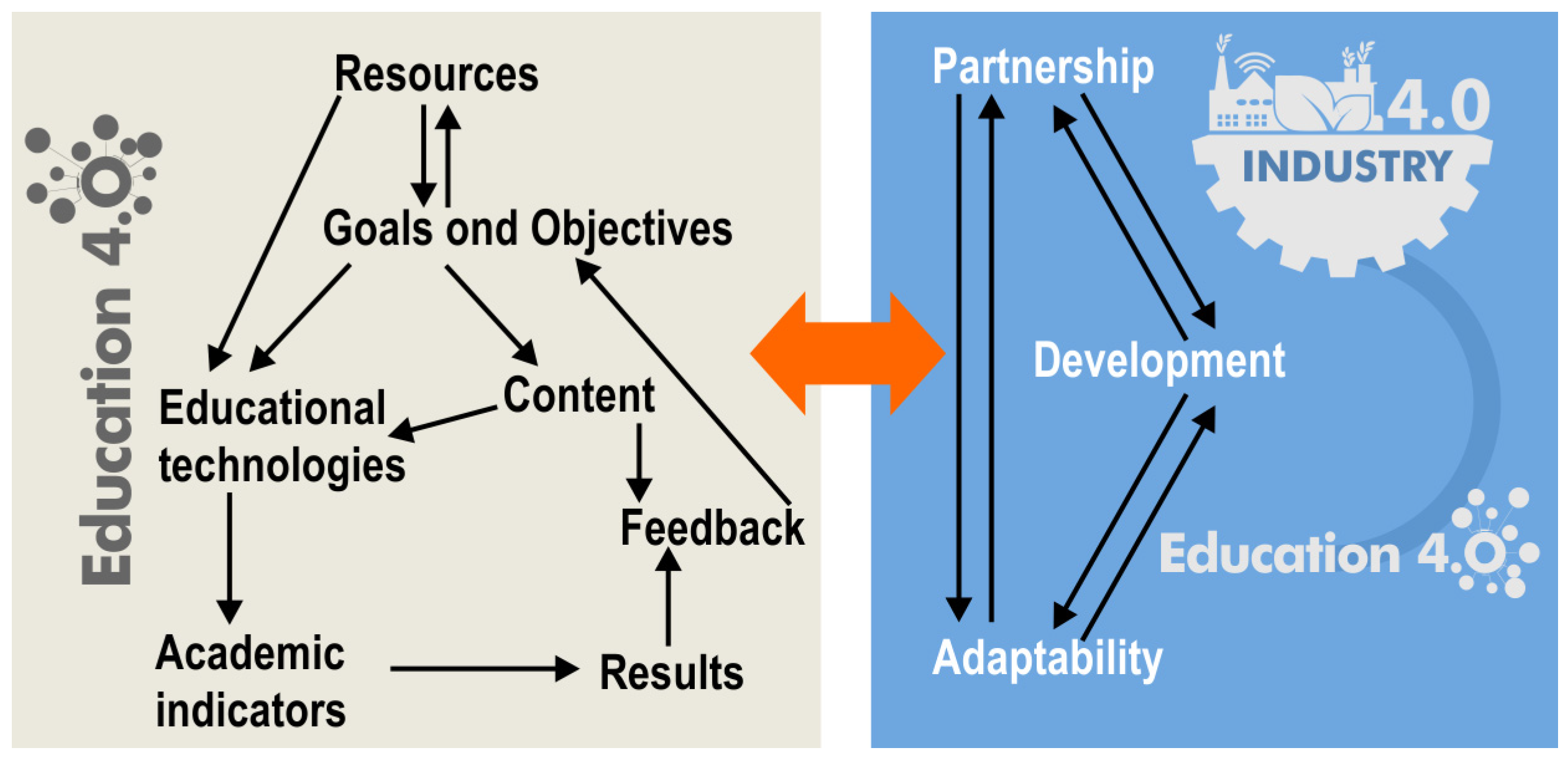

4. Maturity Model TTIC: Ten Tools for Improving Course

- Alignment of course content with labor market demands and educational standards.

- Student learning outcomes, alignment with the educational goals of the course and institution, and current market demands.

- Relevance, novelty, and timely updating of course materials.

- Effectiveness of teaching methods and technologies, including personalization.

- Alignment of instructors' practice with established trends in the educational process.

- Quality of the educational process organization from the perspectives of administration, instructors, and students.

- Level of student engagement, including encouragement of their independence and involvement in the educational process.

- Objectives and Goals: The course objectives and goals should be clearly defined and understood by all participants in the educational process. They must align with labor market demands and educational standards.

- Content: The course content should be current and reflect modern programming trends. It should cover programming fundamentals as well as more advanced topics to provide students with in-depth knowledge and skills.

- Educational Technologies: Teaching methods should foster critical thinking, problem-solving, and teamwork skills. They should include practical sessions, lab work, projects, and other learning formats.

- Academic indicators (knowledge assessment, attendance, completion of assignments): Student knowledge should be assessed regularly and objectively, using tests, exams, practical assignments, and projects.

- Feedback: Feedback from instructors and other educational participants should be timely and constructive, aiding students in improving their knowledge and skills.

- Outcomes: Course outcomes should be evaluated based on criteria set at the beginning of the course, including not only knowledge assessment but also students’ ability to apply it in practice.

- Development: To remain relevant and effective, the course should continually evolve and improve, drawing on feedback from students and instructors and aligning with the current development priorities of industry and higher education as a whole, namely Industry 4.0 and Education 4.0. In this context, the university can serve as the resource that ensures the necessary communication to translate the needs of Industry 4.0 into advances in education at all levels within the lifelong learning paradigm.

- Partnerships: Collaborations with industry and other educational institutions can enhance course quality and relevance. Partnerships can strengthen students’ motivation to acquire the required competencies and their involvement in the educational process. They can also support students’ financial self-sufficiency, reducing the risk of students leaving the university due to financial hardship while increasing their professionalism.

- Resources: The availability of essential resources, such as teaching staff, textbooks, software, overall intellectual capital, equipment, and university infrastructure, is crucial for the successful implementation of the course.

- Adaptability: The course should be tailored to meet students’ needs and preparation levels, helping them better grasp the material and develop their skills.

5. Link Between TTIC and Empirical Data

- Academic Indicators: regular monitoring of performance, attendance, and assignment completion;

- Feedback: students and instructors are informed of risks in time to adjust the learning trajectory.

- Students with a weak background are not “poor performers”—they need a different trajectory;

- Stage 2 is a critical point where the workload can be adjusted, additional materials offered, and mentoring arranged.

6. Limitations

7. Future Research

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Reason | Opportunities of the Maturity Model (MM) |
|---|---|
| Enhancing the quality of education. | MM allows for assessing how well a course aligns with current requirements and standards, and identifies areas for improvement. This contributes to enhancing the quality of education and the training of professionals. |
| Improving interaction between instructors and students. | The model helps identify weaknesses in the educational process and develop strategies to address them. Instructors can adapt their teaching methods to meet students’ needs, which promotes more effective interaction. |
| Development of professional competencies. | The model enables the identification of competencies that students acquire through the course. This helps instructors design assignments and projects that foster the development of professional skills. |
| Assessment of the educational process’s effectiveness. | The model provides tools for evaluating the effectiveness of the educational process. Instructors can track student progress and make adjustments to the curriculum as needed. |
| Attracting investments and partners. | Developing a maturity model can attract the attention of investors and partners to the academic course. This may foster collaboration and experience sharing with other universities and organizations. |
| Alignment with labor market demands. | The maturity model takes labor market demands into account and helps adapt the educational process to meet employer needs. This enhances graduates’ competitiveness in the job market. |
| Providing feedback. | The model includes feedback from instructors, students, and other participants in the educational process. This enables timely responses to changes and improvements in the quality of education. |
| Integration with other courses. | The model can be integrated with other courses and programs, ensuring a more systematic and comprehensive development of students’ professional competencies. |

| Steps/Stage | Description |
|---|---|
| Scope | Defining the scope and focus of the MM, which determines the direction for all subsequent development. |
| Design | Defining the structure/architecture of the MM. |
| Populate | Defining the content of the MM, including the domain’s components and subcomponents; external and internal actors (individual specialists and organizations), processes, interrelationships, and the system for their evaluation, etc. |
| Test | Verification of the relevance, rigor, validity, and reliability of the MM, in both its design and its content. |
| Deploy | Phased implementation of the MM; expansion and scaling; selection and optimization of elements, processes, and stakeholders. |
| Maintain | Ensuring the capability to process large data volumes and to scale and extend the MM; creating a repository to track the model’s evolution and development. |

| Exam Pass Rates | | Final Project Success Rates | | Test Success Rates | |
|---|---|---|---|---|---|
| Exam Scores | Number of Students | Project Total Scores (Max 15) | Number of Students | Test Scores (Max 20) | Number of Students |
| From 1 to 4 | 71 (18.83%) | 0–3 | 80 (21.22%) | 5–8 | 3 (0.80%) |
| From 5 to 7 | 206 (54.64%) | 4–6 | 40 (10.61%) | 9–12 | 14 (3.71%) |
| From 8 to 10 | 100 (26.53%) | 7–9 | 50 (13.26%) | 13–16 | 173 (45.89%) |
| Total | 377 (100.0%) | 10–12 | 63 (16.71%) | 17–20 | 171 (45.36%) |
| | | 13–15 | 144 (38.20%) | No Grade | 16 (4.24%) |
| | | Total | 377 (100.0%) | Total | 377 (100.0%) |
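As a quick consistency check, the percentages in the exam columns can be recomputed from the student counts. The snippet below is only an illustrative sketch: the dictionary keys and variable names are assumptions introduced here, while the counts are taken from the table.

```python
# Student counts per exam score band, copied from the table above.
exam_counts = {"1-4": 71, "5-7": 206, "8-10": 100}

# Total number of students who received an exam score.
total = sum(exam_counts.values())

# Share of each band as a percentage, rounded to two decimals as in the table.
shares = {band: round(100 * n / total, 2) for band, n in exam_counts.items()}

print(total)   # 377
print(shares)  # {'1-4': 18.83, '5-7': 54.64, '8-10': 26.53}
```

The same calculation applies to the project and test columns, each of which also sums to 377 students.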

| Panel | Stage | Group 1 | Group 2 | Test |
|---|---|---|---|---|
| (a) | Stage 1 (Labs 1–3) | 276 (18.3%) | 480 (31.7%) | Yates corrected Chi-square = 141.42, p < 0.001 |
| (a) | Stage 3 (Labs 7–9) | 79 (5.2%) | 677 (44.8%) | |
| (b) | Stage 1 (Labs 1–3) | 122 (16.4%) | 256 (34.3%) | Yates corrected Chi-square = 22.09, p < 0.001 |
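The Yates-corrected chi-square reported for panel (a) can be reproduced directly from the four cell counts. The sketch below uses the standard formula for a 2×2 table with continuity correction and only the Python standard library. The function names are hypothetical, and this is not necessarily the software the authors used.

```python
import math


def yates_chi_square(a, b, c, d):
    """Yates-corrected chi-square statistic for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Continuity correction: shrink |ad - bc| by n/2, but never below zero.
    diff = max(abs(a * d - b * c) - n / 2, 0.0)
    return n * diff ** 2 / (row1 * row2 * col1 * col2)


def p_value_df1(chi2):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))


# Panel (a): rows are Stage 1 and Stage 3, columns are Group 1 and Group 2.
chi2_a = yates_chi_square(276, 480, 79, 677)
p_a = p_value_df1(chi2_a)

print(f"chi2 = {chi2_a:.2f}")        # chi2 = 141.42
print(f"p < 0.001: {p_a < 0.001}")   # p < 0.001: True
```

With one degree of freedom the p-value reduces to a complementary error function, which avoids a dependency on a statistics package.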

| TTIC Component | Empirical Findings | Practical Application |
|---|---|---|
| 1. Objectives and Goals | Stage 2 (labs 4–6) is a critical point for predicting failure. The course objectives should include early risk detection. | The course objectives are adjusted: the task “identify and support students at Stage 2” is added, which reduces attrition and improves performance. |
| 2. Content | Stage-2 topics (loops and arrays) are crucial for developing programming skills. Insufficient mastery of these topics creates a risk of failure. | The Stage 2 content is strengthened by adding additional examples, visualizations, step-by-step guides, and micro-lectures. |
| 3. Educational Technologies | Students pass the theoretical test (syntax) but struggle with the exam/project; therefore, practice-oriented methods are required. | The share of practical, project-based, and problem-oriented assignments is increased. The emphasis is on application rather than memorization. |
| 4. Academic indicators | Stage-wise analysis of laboratory work enables the prediction of final outcomes (p < 0.001). | A monitoring system is implemented: automatic alerts to the instructor for low activity/performance at Stage 2. |
| 5. Feedback | The risk of failure is statistically significant already at Stage 2, so there is still time to intervene. | A mechanism for timely feedback is implemented: personalized recommendations, tutor meetings, and individual roadmaps. |
| 6. Outcomes | Success in the course ≠ success on a test. What matters is not only scores but the ability to apply knowledge (projects, exams). | Assessment is revised: greater weight is assigned to projects and practical tasks. Evaluation of “progress” and “mastery,” not just “completion,” is introduced. |
| 7. Development | By Stage 3, more students complete the assignments, but the quality declines; therefore, the content or methodology needs refinement. | The course is continuously reviewed: Stage-3 assignments are adapted, with added clarifications, checklists, and sample solutions. |
| 8. Partnerships | Financial difficulties are a driver of attrition. Stage-2 “at-risk” students are a target group for support. | Partners are engaged: IT companies offer scholarships, hackathons, and short-term internships to provide motivation and financial support. |
| 9. Resources | Insufficient support at Stage 2 poses a high risk of failure. Resources are needed: tutors, materials, and time. | Resources are allocated: a pool of teaching assistants is created, supplementary materials are developed, and time is reserved for consultations. |
| 10. Adaptability | Students with different levels of preparation handle Stage 2 differently, so personalization is required. | A differentiated approach is introduced: “basic” and “advanced” assignment tracks, the option to choose difficulty, and flexible deadlines. |
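The monitoring idea in row 4 (automatic alerts for low activity or performance at Stage 2) could be prototyped roughly as follows. This is a minimal sketch under stated assumptions: the `StudentRecord` type, the 0 to 1 score scale, and both thresholds are hypothetical and are not values from the study. Only the mapping of Stage 2 to labs 4–6 comes from the table.

```python
from dataclasses import dataclass
from typing import Dict, List

# Stage 2 covers labs 4-6, as stated in the table above.
STAGE2_LABS = (4, 5, 6)


@dataclass
class StudentRecord:
    student_id: str
    # Lab number -> score on a hypothetical 0..1 scale; missing key = not submitted.
    lab_scores: Dict[int, float]


def stage2_at_risk(record: StudentRecord,
                   min_submitted: int = 2,
                   min_mean: float = 0.5) -> bool:
    """Flag a student for low activity or low performance at Stage 2.

    Both thresholds are illustrative assumptions, not values from the study.
    """
    scores = [record.lab_scores[lab] for lab in STAGE2_LABS
              if lab in record.lab_scores]
    if len(scores) < min_submitted:
        return True  # low activity: too few Stage 2 labs submitted
    return sum(scores) / len(scores) < min_mean  # low performance


def students_to_alert(records: List[StudentRecord]) -> List[str]:
    """Return the IDs of students the instructor should be alerted about."""
    return [r.student_id for r in records if stage2_at_risk(r)]


records = [
    StudentRecord("s1", {1: 0.9, 2: 0.8, 3: 0.9, 4: 0.8, 5: 0.7, 6: 0.9}),
    StudentRecord("s2", {1: 0.6, 2: 0.5, 3: 0.4, 4: 0.3}),
    StudentRecord("s3", {1: 0.7, 2: 0.6, 3: 0.7, 4: 0.4, 5: 0.3, 6: 0.5}),
]
print(students_to_alert(records))  # ['s2', 's3']
```

In practice the thresholds would need to be calibrated against the course's own stage-wise data rather than fixed in advance.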

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zagulova, D.; Uhanova, M.; Jurenoks, A.; Prokofyeva, N.; Jansone, A.; Katalnikova, S.; Ziborova, V. Learning Course Improvement Tools: Search Has Led to the Development of a Maturity Model. Educ. Sci. 2025, 15, 1302. https://doi.org/10.3390/educsci15101302
