Pure Question-Based Learning

Abstract

1. Introduction

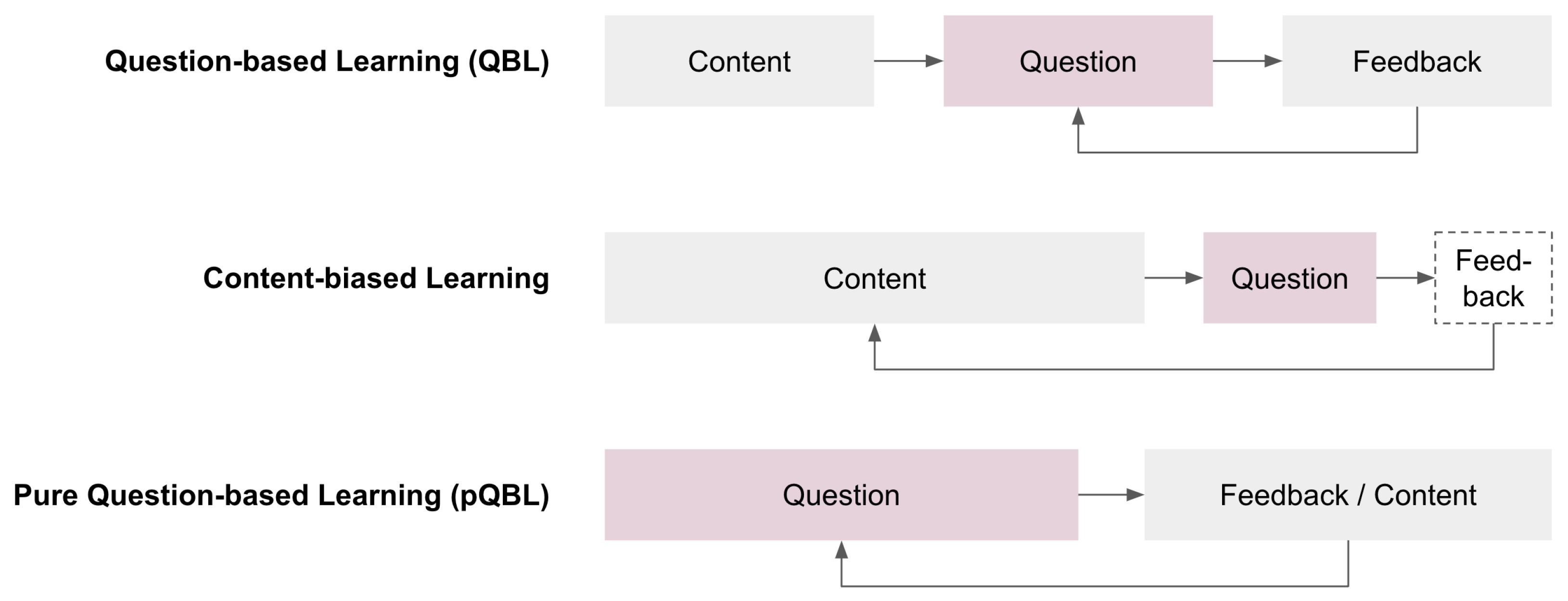

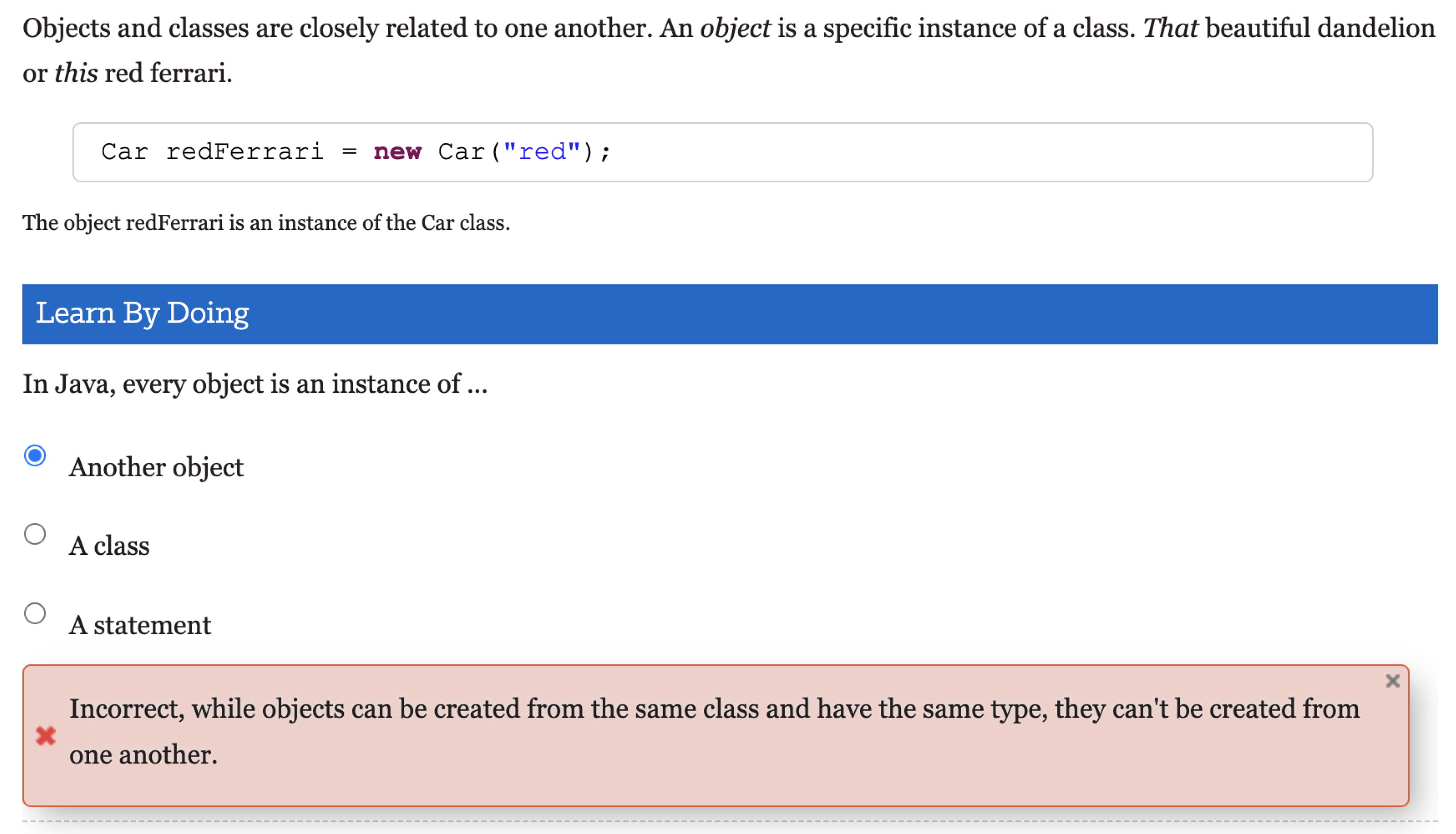

Pure question-based learning: learning material that consists solely of questions with constructive formative feedback.

1.1. Question-Based Learning

1.2. Testing and Testing First

Kapur distinguished between different outcomes. Both groups did equally well on items measuring “procedural fluency” (i.e., in computing the variance). On items measuring data analysis, conceptual insight and transfer, the “finding out” group did better than the “being told” group. ([25], p. 219)

1.3. Feedback

1.4. Design-Based Research

How do higher education students and teachers, and researchers in technology-enhanced learning perceive pQBL when testing it for the first time?

2. Methods

2.1. Data Collection

- What was your first impression?

- What kind of challenges do you see?

- What kind of advantages do you see?

2.1.1. Round 1: University of Macedonia

2.1.2. Round 2: University of Gävle

2.1.3. Round 3: East African Course Participants

- What was your impression of this course that had no reading material besides the questions?

- What do you think needs to be improved (or what are the disadvantages with this type of methodology)?

- What were the best parts of the experience?

2.1.4. Round 4: Workshop at the NLASI ’22

2.2. Data Analysis

3. Results

3.1. Round 1: University of Macedonia

3.1.1. First Impression

3.1.2. Challenges

3.1.3. Advantages

3.1.4. Improvements to the Course

3.2. Round 2: University of Gävle

3.2.1. First Impression

3.2.2. Challenges

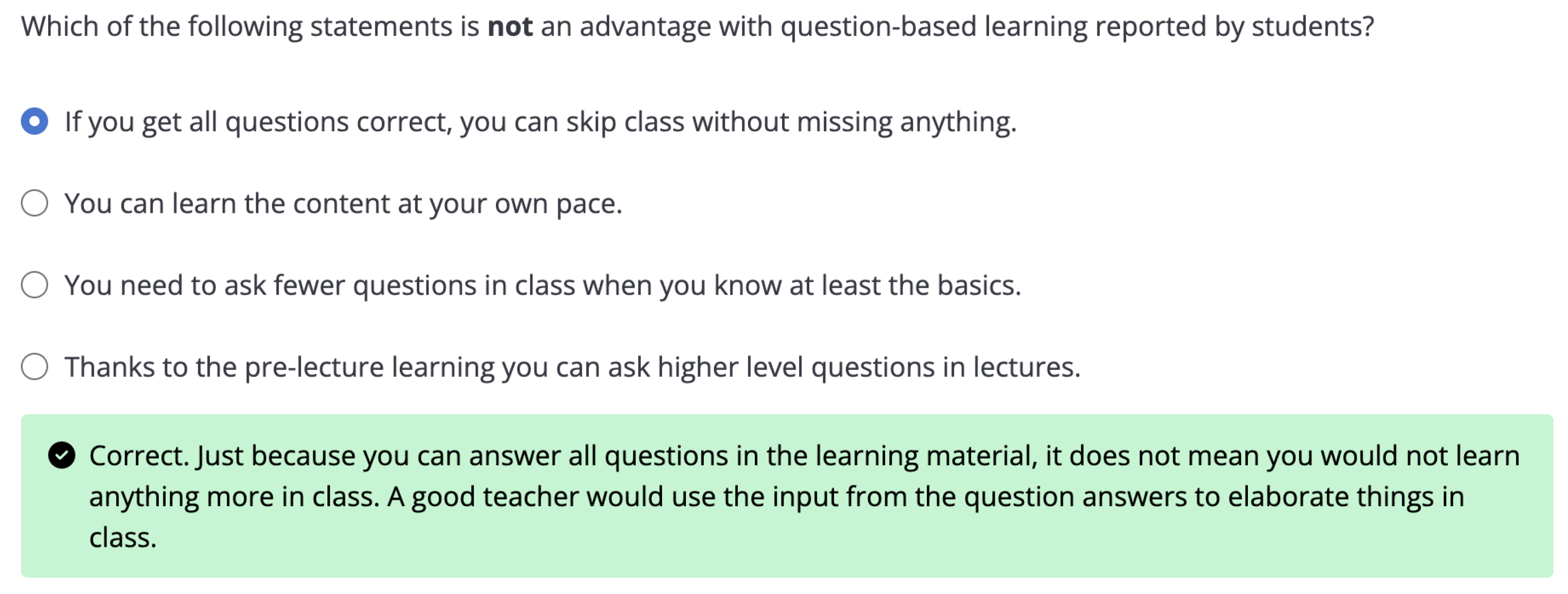

3.2.3. Advantages

3.2.4. Improvements to the Course

3.3. Round 3: East African Course Participants

3.3.1. First Impression

3.3.2. Challenges

3.3.3. Advantages

- It prompt you to develop deep thinking and focus.

- We have been impressed by how we can answer questions by guessing.

- The feedback was very constructive and helpful.

- It promotes focused reading towards the answer to the specific question.

- It allows learner to test him/herself by answering questions [until he/she] gives correct answer. This is the best way of effective academic learning.

- Not boring as there is no many texts to read.

- It is smart and time saving.

3.3.4. Improvements to the Course

3.4. Round 4: Workshop at the NLASI ’22

3.4.1. First Impression

3.4.2. Challenges

3.4.3. Advantages

3.4.4. Improvements to the Course

4. Discussion

In a typical Japanese lesson, on the other hand, the teacher introduced a rather complex problem and invited the students to have a go at it—individually or together in small groups. After a while the teacher asked the students to show their solutions, their attempts were compared and teacher and students might conclude that one way of solving the problem is more powerful than the others, and in such a case the students could practice that particular way of solving this kind of problem on some other, but related, problems. ([25], p. 181)

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| ADD | Attention Deficit Disorder |
| DBR | Design-based research |
| GDPR | General Data Protection Regulation |
| NGO | Non-Governmental Organisation |
| OLI | Open Learning Initiative |
| pQBL | Pure question-based learning |
| QBL | Question-based learning |

References

1. Lovett, M.; Meyer, O.; Thille, C. The Open Learning Initiative: Measuring the Effectiveness of the OLI Statistics Course in Accelerating Student Learning. J. Interact. Media Educ. 2008, 1, 13.

2. Kaufman, J.; Ryan, R.; Thille, C.; Bier, N. Open Learning Initiative Courses in Community Colleges: Evidence on Use and Effectiveness; Carnegie Mellon University: Pittsburgh, PA, USA, 2013; Available online: https://www.researchgate.net/publication/344726610_Open_Learning_Initiative_Courses_in_Community_Colleges_Evidence_on_Use_and_Effectiveness?channel=doi&linkId=5f8c1f2e458515b7cf8825ed&showFulltext=true (accessed on 9 August 2024).

3. Koedinger, K.R.; McLaughlin, E.A.; Jia, J.Z.; Bier, N.L. Is the doer effect a causal relationship? How can we tell and why it’s important. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; pp. 388–397.

4. Yarnall, L.; Means, B.; Wetzel, T. Lessons Learned from Early Implementations of Adaptive Courseware; SRI Education: Menlo Park, CA, USA, 2016.

5. Glassey, R.; Bälter, O. Put the students to work: Generating Questions with Constructive Feedback. In Proceedings of the IEEE Frontiers in Education Conference (FIE), Uppsala, Sweden, 21–24 October 2020; pp. 1–8.

6. Glassey, R.; Bälter, O. Sustainable Approaches for Accelerated Learning. Sustainability 2021, 13, 11994.

7. Székely, L. Productive processes in learning and thinking. Acta Psychol. 1950, 7, 388–407.

8. Bransford, J.D.; Schwartz, D.L. Chapter 3: Rethinking transfer: A simple proposal with multiple implications. Rev. Res. Educ. 1999, 24, 61–100.

9. Kapur, M. Productive failure in learning the concept of variance. Instr. Sci. 2012, 40, 651–672.

10. Little, J.L.; Bjork, E.L. Multiple-Choice Pretesting Potentiates Learning of Related Information. Mem. Cogn. 2016, 44, 1085–1101.

11. Duggal, S. Factors impacting acceptance of e-learning in India: Learners’ perspective. Asian Assoc. Open Univ. J. 2022, 17, 101–119.

12. Brown, A.L. Design experiments: Theoretical and methodological challenges in creating complex interventions in classroom settings. J. Learn. Sci. 1992, 2, 141–178.

13. Anderson, T.; Shattuck, J. Design-based research: A decade of progress in education research? Educ. Res. 2012, 41, 16–25.

14. Bier, N.; Moore, S.; Van Velsen, M. Instrumenting courseware and leveraging data with the Open Learning Initiative (OLI). In Proceedings of the Companion Proceedings 9th International Learning Analytics & Knowledge Conference, Tempe, AZ, USA, 4–8 March 2019.

15. What Works Clearinghouse. What Works Clearinghouse Standards Handbook, Version 4.1. US Department of Education, Institute of Education Sciences. National Center for Education Evaluation and Regional Assistance. 2020. Available online: https://ies.ed.gov/ncee/wwc/Docs/referenceresources/WWC-Procedures-Handbook-v4-1-508.pdf (accessed on 9 August 2024).

16. Pane, J.F.; Griffin, B.A.; McCaffrey, D.F.; Karam, R. Effectiveness of cognitive tutor algebra I at scale. Educ. Eval. Policy Anal. 2014, 36, 127–144.

17. Bälter, O.; Glassey, R.; Wiggberg, M. Reduced learning time with maintained learning outcomes. In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, Virtual, 13–20 March 2021; pp. 660–665.

18. Deng, R.; Yang, Y.; Shen, S. Impact of question presence and interactivity in instructional videos on student learning. Educ. Inf. Technol. 2024, 1–29.

19. Deslauriers, L.; McCarty, L.S.; Miller, K.; Callaghan, K.; Kestin, G. Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proc. Natl. Acad. Sci. USA 2019, 116, 19251–19257.

20. Bälter, O.; Zimmaro, D.; Thille, C. Estimating the minimum number of opportunities needed for all students to achieve predicted mastery. SpringerOpen Smart Learn. Environ. 2018, 5, 1–19.

21. Glassey, R.; Bälter, O.; Haller, P.; Wiggberg, M. Addressing the double challenge of learning and teaching enterprise technologies through peer teaching. In Proceedings of the IEEE/ACM 42nd International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET), Seoul, Republic of Korea, 27 June–19 July 2020; pp. 130–138.

22. Alsufyani, A.A. “Scie-losophy” a teaching and learning framework for the reconciliation of the P4C and the scientific method. MethodsX 2023, 11, 102417.

23. Kapur, M. Productive failure. Cogn. Instr. 2008, 26, 379–424.

24. Kapur, M. Productive failure in mathematical problem solving. Instr. Sci. 2010, 38, 523–550.

25. Marton, F. Necessary Conditions of Learning; Routledge: London, UK, 2015.

26. Little, J.; Bjork, E. Pretesting with Multiple-choice Questions Facilitates Learning. Proc. Annu. Meet. Cogn. Sci. Soc. 2011, 33, 294–299.

27. Roediger, H.L.; Karpicke, J.D. Test-enhanced learning: Taking memory tests improves long-term retention. Psychol. Sci. 2006, 17, 249–255.

28. Adesope, O.O.; Trevisan, D.A.; Sundararajan, N. Rethinking the use of tests: A meta-analysis of practice testing. Rev. Educ. Res. 2017, 87, 659–701.

29. Schwieren, J.; Barenberg, J.; Dutke, S. The testing effect in the psychology classroom: A meta-analytic perspective. Psychol. Learn. Teach. 2017, 16, 179–196.

30. Van der Meij, H.; Böckmann, L. Effects of embedded questions in recorded lectures. J. Comput. High. Educ. 2021, 33, 235–254.

31. Bälter, O.; Enström, E.; Klingenberg, B. The effect of short formative diagnostic web quizzes with minimal feedback. Comput. Educ. 2013, 60, 234–242.

32. Shute, V.J. Focus on Formative Feedback. Rev. Educ. Res. 2008, 78, 153–189.

33. Martinez, C.; Serra, R.; Sundaramoorthy, P.; Booij, T.; Vertegaal, C.; Bounik, Z.; Van Hastenberg, K.; Bentum, M. Content-Focused Formative Feedback Combining Achievement, Qualitative and Learning Analytics Data. Educ. Sci. 2023, 13, 1014.

34. Pashler, H.; Cepeda, N.J.; Wixted, J.T.; Rohrer, D. When Does Feedback Facilitate Learning of Words? J. Exp. Psychol. Learn. Mem. Cogn. 2005, 31, 3–8.

35. Marsh, E.J.; Lozito, J.P.; Umanath, S.; Bjork, E.L.; Bjork, R.A. Using verification feedback to correct errors made on a multiple-choice test. Memory 2012, 20, 645–653.

36. Ahmed, M.M.; Rahman, A.; Hossain, M.K.; Tambi, F.B. Ensuring learner-centred pedagogy in an open and distance learning environment by applying scaffolding and positive reinforcement. Asian Assoc. Open Univ. J. 2022, 17, 289–304.

37. Cividatti, L.N.; Moralles, V.A.; Bego, A.M. Incidence of design-based research methodology in science education articles: A bibliometric analysis. Revista Brasileira de Pesquisa em Educação em Ciências 2021, e25369.

38. Schleiss, J.; Laupichler, M.C.; Raupach, T.; Stober, S. AI course design planning framework: Developing domain-specific AI education courses. Educ. Sci. 2023, 13, 954.

39. McKenney, S.; Reeves, T.C. Educational design research. In Handbook of Research on Educational Communications and Technology; Springer: New York, NY, USA, 2014; pp. 131–140.

40. Majgaard, G.; Misfeldt, M.; Nielsen, J. How design-based research and action research contribute to the development of a new design for learning. Des. Learn. 2011, 4, 8–27.

41. Kim, P.; Suh, E.; Song, D. Development of a design-based learning curriculum through design-based research for a technology-enabled science classroom. Educ. Technol. Res. Dev. 2015, 63, 575–602.

42. Reinmann, G. Outline of a holistic design-based research model for higher education. EDeR EDucational Des. Res. 2020, 4, 1–16.

43. Wang, Y.H. Design-based research on integrating learning technology tools into higher education classes to achieve active learning. Comput. Educ. 2020, 156, 103935.

44. Silva-Díaz, F.; Marfil-Carmona, R.; Narváez, R.; Silva Fuentes, A.; Carrillo-Rosúa, J. Introducing Virtual Reality and Emerging Technologies in a Teacher Training STEM Course. Educ. Sci. 2023, 13, 1044.

45. Bälter, K.; Abraham, F.J.; Mutimukwe, C.; Mugisha, R.; Osowski, C.P.; Bälter, O. A web-based program about sustainable development goals focusing on digital learning, digital health literacy, and nutrition for professional development in Ethiopia and Rwanda: Development of a pedagogical method. JMIR Form. Res. 2022, 6, e36585.

46. Haladyna, T.M.; Downing, S.M. A taxonomy of multiple-choice item-writing rules. Appl. Meas. Educ. 1989, 2, 37–50.

47. Martin Nyling, M.P. Självhjälpsguiden-För dig med ADHD på Jobbet. 2019. Available online: https://attention.se/wp-content/uploads/2021/03/attention_ADHD-pa-jobbet-_sjalvhjalpsguide.pdf (accessed on 19 August 2022).

48. Butler, K.A. Usability engineering turns 10. Interactions 1996, 3, 58–75.

49. Gurung, R.A. Pedagogical aids and student performance. Teach. Psychol. 2003, 30, 92–95.

50. Jemstedt, A.; Bälter, O.; Gavel, A.; Glassey, R.; Bosk, D. Less to produce and less to consume: The advantage of pure question-based learning. Interact. Learn. Environ. 2024, 1–22.

51. Swedish Ethical Review Authority (SERA) About the Authority. 2019. Available online: https://etikprovningsmyndigheten.se/en/about-the-authority/ (accessed on 23 October 2023).

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bälter, O.; Glassey, R.; Jemstedt, A.; Bosk, D. Pure Question-Based Learning. Educ. Sci. 2024, 14, 882. https://doi.org/10.3390/educsci14080882
