Generative AI for Culturally Responsive Science Assessment: A Conceptual Framework

Abstract

1. Introduction

“Sometimes it’s not the student who is failing the assessment—it might be that the assessment is failing to fully assess the abilities of the student”—[1]

2. A Literature Review

2.1. Impact of Standardized Assessment

2.2. Culturally Responsive Assessments in Science Education

2.3. Challenges of Implementing Culturally Responsive Assessments in Science Education

2.4. Generative AI and Culturally Responsive Assessment

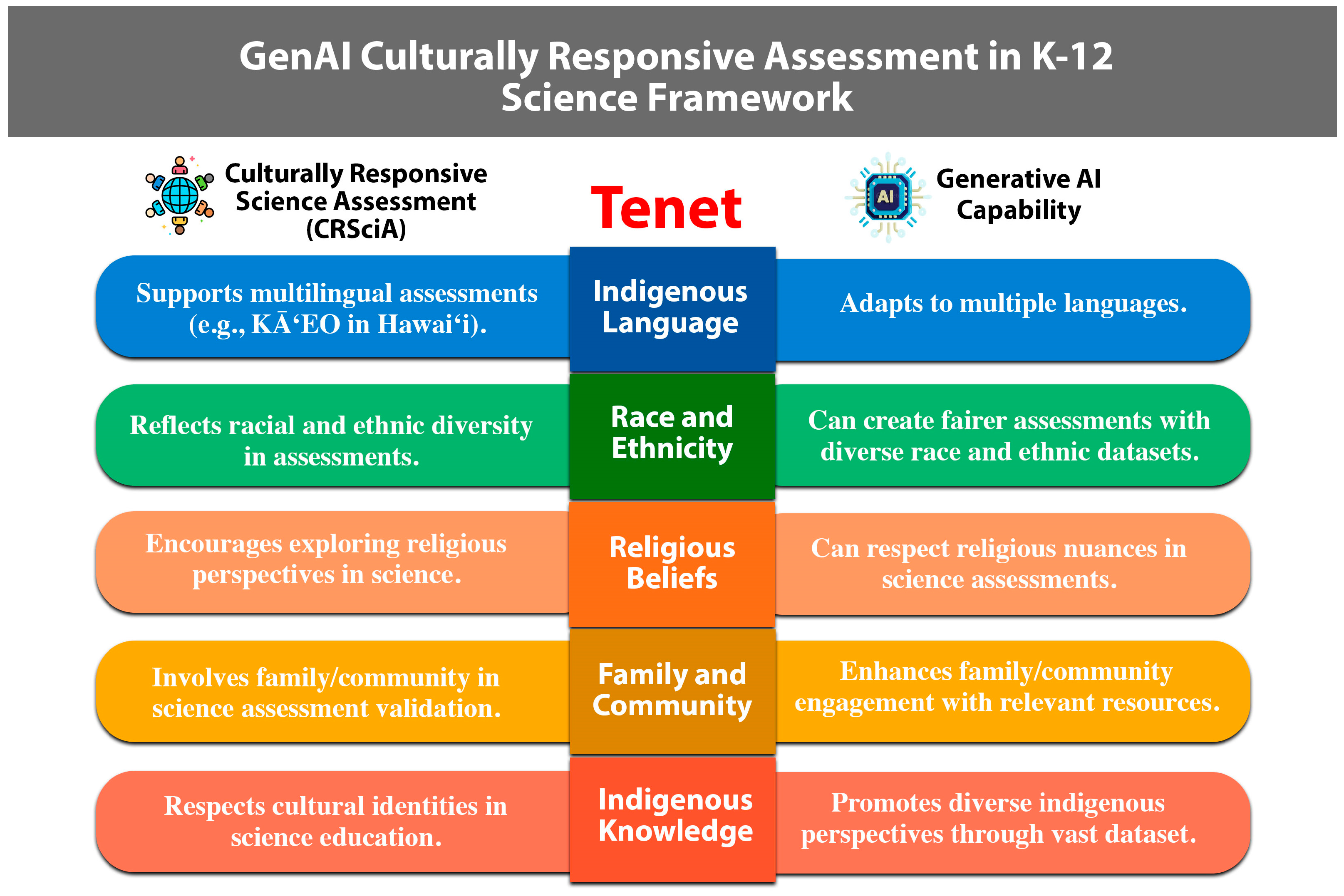

3. Generative AI Framework for Culturally Responsive Assessments in Science

3.1. Indigenous Language

3.2. Religion

3.3. Indigenous Knowledge

3.4. Race and Ethnicity

3.5. Family and Community Engagement

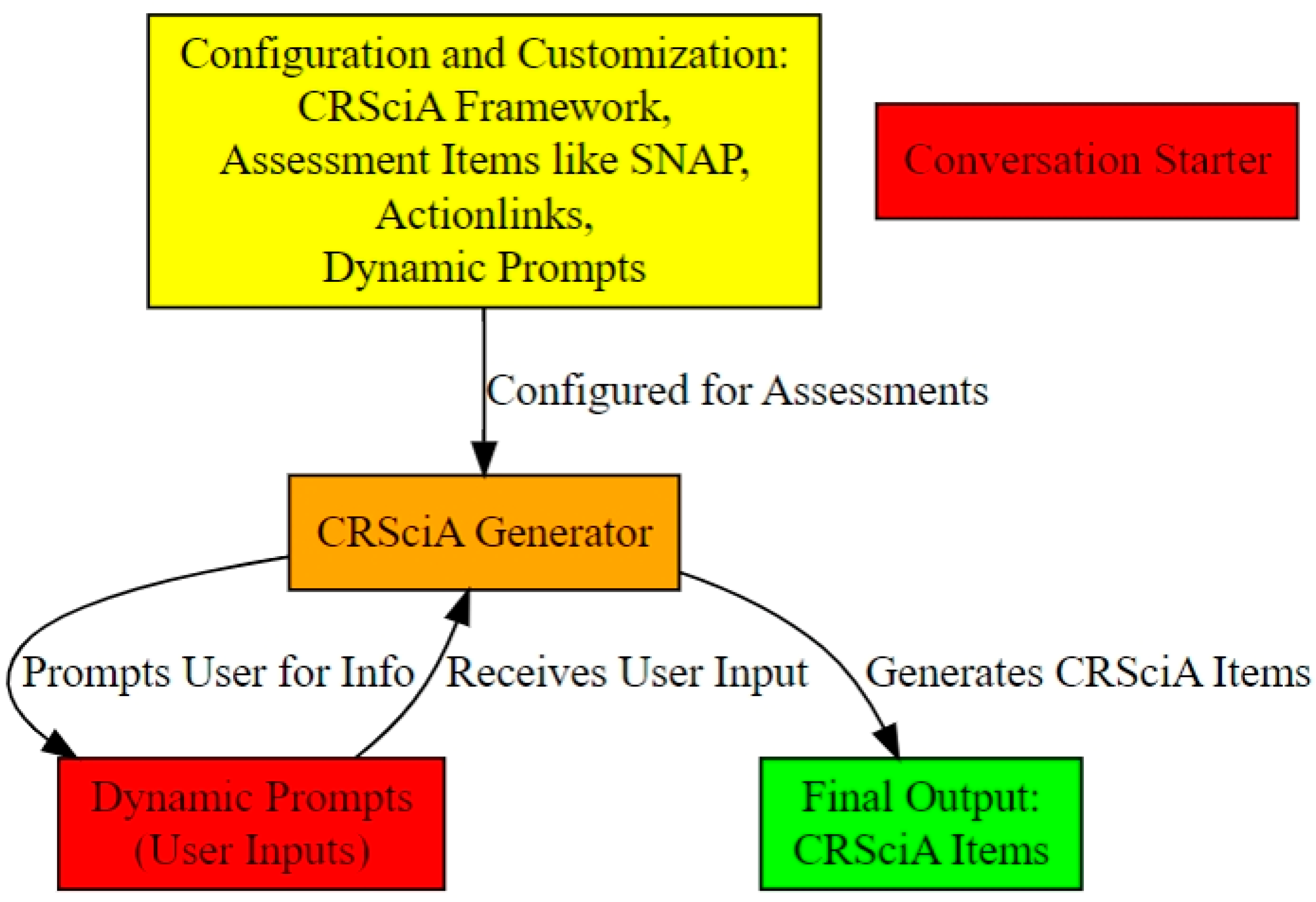

4. Developing the CRSciA-Generator

4.1. Configuration and Customization

4.2. Prompt Engineering

“Welcome! I am your culturally responsive science assessment generator (CRSciA-Generator). I am here to help you develop science assessment items that meet the diverse cultural and context-specific needs of your students. Would you like assistance in developing a culturally responsive science assessment for your students that aligns with the NGSS? Please type ‘Yes’ or ‘No’ to proceed.”

“Great! I can help you create an assessment aligned with NGSS standards. Would you like me to use the SNAP questions from the Stanford NGSS Assessment Project? Please type ‘Yes’ or ‘No’.” (See Appendix A)
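The two opening turns above form a simple yes/no decision chain. The sketch below shows one way that branching could be handled, assuming the generator normalizes free-text replies before acting on them and re-asks when a reply is neither “Yes” nor “No”. The function names (`parse_yes_no`, `next_step`) and the step labels are hypothetical and are not taken from the CRSciA-Generator itself.

```python
from typing import Optional


def parse_yes_no(reply: str) -> Optional[bool]:
    """Map a free-text reply to True/False, or None if it is neither yes nor no."""
    cleaned = reply.strip().lower().rstrip(".!")
    if cleaned in {"yes", "y"}:
        return True
    if cleaned in {"no", "n"}:
        return False
    return None


def next_step(step: str, reply: str) -> str:
    """Return the next (hypothetical) conversation step for a reply at the current step."""
    answer = parse_yes_no(reply)
    if answer is None:
        return step  # unrecognized reply: ask the same question again
    if step == "welcome":
        # Turn 1: does the teacher want help building an NGSS-aligned assessment?
        return "offer_snap" if answer else "end"
    if step == "offer_snap":
        # Turn 2: start from a SNAP item, or build a custom item instead?
        return "use_snap_item" if answer else "custom_item"
    raise ValueError(f"unknown step: {step}")


if __name__ == "__main__":
    step = "welcome"
    for reply in ["Yes", "maybe", "no"]:
        step = next_step(step, reply)
        print(step)
```

Keeping the parsing separate from the branching means the accepted spellings of “Yes” and “No” can be widened without touching the conversation logic.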

4.3. Piloting the CRSciA-Generator

4.4. Use Cases of the CRSciA-Generator and Prompts

5. Discussion

6. Conclusions and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Demonstration of the CRSciA-Generator

Appendix B. Python Code Snippet of the Interactive Guided Dynamic Prompt (Screenshot)

References

- Frommert, C. Creating an Environment Where All Students See That They Can Be Good at Math. 27 January 2023. Available online: https://www.edutopia.org/article/helping-students-see-themselves-good-math (accessed on 5 August 2024).

- Kouo, J.L. Don’t Assess a Fish by Its Ability to Climb a Tree: Considerations and Strategies to Ensure Equitable Formative Assessment Practices for All Learners. In Research Anthology on Physical and Intellectual Disabilities in an Inclusive Society; IGI Global: Hershey, PA, USA, 2022; pp. 873–889. [Google Scholar] [CrossRef]

- NRC. A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas; National Academies Press: Washington, DC, USA, 2012. [Google Scholar]

- Ashreef, S.Y.; Asowayan, A.A.; Omar, S.H. A Systematic Review: The Next Generation Science Standards and the Increased Cultural Diversity. Engl. Lang. Teach. 2017, 10, 63–76. [Google Scholar] [CrossRef]

- Mullis, I.V.S.; Martin, M.O.; von Davier, M. (Eds.) TIMSS 2023 assessment frameworks. TIMSS & PIRLS International Study Center, Lynch School of Education and Human Development, Boston College, & International Association for the Evaluation of Educational Achievement (IEA). 2021. Available online: https://timssandpirls.bc.edu/timss2023/frameworks/index.html (accessed on 1 September 2024).

- NGSS Lead States. Next Generation Science Standards: For States, by States; National Academies Press: Washington, DC, USA, 2013. [Google Scholar]

- Harris, K.; Sithole, A.; Kibirige, J.; McCarthy, P. The Next Generation Science Standards and the quest towards culturally responsive pedagogy: Perceptions of K-12 educators. Am. Acad. Sci. Res. J. Eng. Technol. Sci. 2018, 49, 192–208. [Google Scholar]

- Câmara, J.N. Funds of knowledge: Towards an asset-based approach to refugee education and family engagement in England. Br. Educ. Res. J. 2024, 50, 876–904. [Google Scholar] [CrossRef]

- O’Leary, E.S.; Shapiro, C.; Toma, S.; Sayson, H.W.; Levis-Fitzgerald, M.; Johnson, T.; Sork, V.L. Creating inclusive classrooms by engaging STEM faculty in culturally responsive teaching workshops. Int. J. Stem Educ. 2020, 7, 32. [Google Scholar] [CrossRef]

- Idrus, F.; Sohid, M. Teachers’ expectations and challenges in using culturally responsive teaching (CRT) strategies in the ESL classroom. J. Lang. Teach. Res. 2023, 14, 629–635. [Google Scholar] [CrossRef]

- Nortvedt, G.A.; Wiese, E.; Brown, M.; Burns, D.; McNamara, G.; O’Hara, J.; Altrichter, H.; Fellner, M.; Herzog-Punzenberger, B.; Nayir, F.; et al. Aiding culturally responsive assessment in schools in a globalising world. Educ. Assess. Eval. Account. 2020, 32, 5–27. [Google Scholar] [CrossRef]

- Preston, J.P.; Claypool, T.R. Analyzing assessment practices for Indigenous students. In Frontiers in Education; Frontiers Media SA: Lausanne, Switzerland, 2021; p. 679972. [Google Scholar]

- Wu, X.; He, X.; Liu, T.; Liu, N.; Zhai, X. Matching Exemplar as Next Sentence Prediction (MeNSP): Zero-Shot Prompt Learning for Automatic Scoring in Science Education; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 401–413. [Google Scholar]

- Lee, G.-G.; Shi, L.; Latif, E.; Gao, Y.; Bewersdorf, A.; Nyaaba, M.; Guo, S.; Wu, Z.; Liu, Z.; Wang, H. Multimodality of ai for education: Towards artificial general intelligence. arXiv 2023, arXiv:2312.06037. [Google Scholar]

- Li, X.; Li, B.; Cho, S.-J. Empowering Chinese language learners from low-income families to improve their Chinese writing with ChatGPT’s assistance afterschool. Languages 2023, 8, 238. [Google Scholar] [CrossRef]

- Bewersdorff, A.; Hartmann, C.; Hornberger, M.; Seßler, K.; Bannert, M.; Kasneci, E.; Kasneci, G.; Zhai, X.; Nerdel, C. Taking the Next Step with Generative Artificial Intelligence: The Transformative Role of Multimodal Large Language Models in Science Education. arXiv 2024, arXiv:2401.00832. [Google Scholar]

- Zhai, X. ChatGPT for next generation science learning. XRDS Crossroads ACM Mag. Stud. 2023, 29, 42–46. [Google Scholar] [CrossRef]

- Tan, B.; Armoush, N.; Mazzullo, E.; Bulut, O.; Gierl, M. A Review of Automatic Item Generation in the Large Language Model Era. Conference Poster Presented at the Faculty of Education Celebrating Socially Transformative Research and Teaching Event, 28 March 2024. Available online: https://era.library.ualberta.ca/items/2338861e-e0d5-44ac-9fd9-3552f69c255a (accessed on 28 June 2024).

- Hwang, K.; Challagundla, S.; Alomair, M.; Chen, K.; Choa, F.-S. Towards AI-Assisted Multiple Choice Question Generation and Quality Evaluation at Scale: Aligning with Bloom’s Taxonomy. Poster Presented at the Workshop: Generative AI for Education (GAIED): Advances, Opportunities, and Challenges, NeurIPS Conference. 2023. Available online: https://neurips.cc/virtual/2023/79098 (accessed on 22 April 2024).

- Richards, M.; Waugh, K.; Slaymaker, M.; Petre, M.; Woodthorpe, J.; Gooch, D. Bob or Bot: Exploring ChatGPT’s Answers to University Computer Science Assessment. ACM Trans. Comput. Educ. 2024, 24, 5. [Google Scholar] [CrossRef]

- Andreadis, K.; Newman, D.R.; Twan, C.; Shunk, A.; Mann, D.M.; Stevens, E.R. Mixed methods assessment of the influence of demographics on medical advice of ChatGPT. J. Am. Med. Inform. Assoc. 2024, 31, ocae086. [Google Scholar] [CrossRef]

- Kıyak, Y.S.; Emekli, E. ChatGPT Prompts for Generating Multiple-Choice Questions in Medical Education and Evidence on Their Validity: A Literature Review. Postgrad. Med. J. 2024, 100, 858–865. [Google Scholar] [CrossRef] [PubMed]

- Chan, W.; An, A.; Davoudi, H. A Case Study on ChatGPT Question Generation. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), Sorrento, Italy, 15–18 December 2023; pp. 1647–1656. [Google Scholar]

- Sembok, T.M.T.; Wani, S. Is ChatGPT not Appropriate for Religious Use? Springer Nature Singapore: Singapore, 2024; pp. 595–605. [Google Scholar]

- Nyaaba, M.; Wright, A.L.; Choi, G.L. Generative AI and Digital Neocolonialism in Global Education: Towards an Equitable Framework. arXiv 2024, arXiv:2406.02966. [Google Scholar]

- Jaakkola, E. Designing conceptual articles: Four approaches. AMS Rev. 2020, 10, 18–26. [Google Scholar] [CrossRef]

- McKenney, S.R.; Thomas, C. Conducting Educational Design Research; Routledge: London, UK, 2012. [Google Scholar]

- Ladson-Billings, G. From the achievement gap to the education debt: Understanding achievement in US schools. Educ. Res. 2006, 35, 3–12. [Google Scholar] [CrossRef]

- Yerrick, R.; Ridgeway, M. Culturally Responsive Pedagogy, Science Literacy, and Urban Underrepresented Science Students. In Inclusive Principles and Practices in Literacy Education; Milton, M., Ed.; Emerald Publishing Limited: Bingley, UK, 2017; Volume 11, pp. 87–103. [Google Scholar]

- Morgan, P.L.; Hu, E.H.; Farkas, G.; Hillemeier, M.M.; Oh, Y.; Gloski, C.A. Racial and Ethnic Disparities in Advanced Science and Mathematics Achievement During Elementary School. Gift. Child Q. 2023, 67, 151–172. [Google Scholar] [CrossRef]

- Muhammad, G.E.; Ortiz, N.A.; Neville, M.L. A Historically Responsive Literacy Model for Reading and Mathematics. Read. Teach. 2021, 75, 73–81. [Google Scholar] [CrossRef]

- Hoosain, M.S.; Paul, B.S.; Ramakrishna, S. The impact of 4IR digital technologies and circular thinking on the United Nations sustainable development goals. Sustainability 2020, 12, 10143. [Google Scholar] [CrossRef]

- Johnston-Goodstar, K.; Roholt, R.V. “Our kids aren’t dropping out; they’re being pushed out”: Native American students and racial microaggressions in schools. In Microaggressions and Social Work Research, Practice and Education; Routledge: London, UK, 2020; pp. 31–48. [Google Scholar]

- Apple, M.W. Can Education Change Society? Routledge: London, UK, 2012. [Google Scholar]

- Baker, D. The Schooled Society: The Educational Transformation of Global Culture; Stanford University Press: Redwood City, CA, USA, 2020. [Google Scholar]

- Fink, D.; Stoll, L. Educational change: Easier said than done. In International Handbook of Educational Change: Part One; Springer: Berlin/Heidelberg, Germany, 1998; pp. 297–321. [Google Scholar]

- Benediktsson, A.I.; Ragnarsdóttir, H. Immigrant students’ experiences of assessment methods used in Icelandic universities. Multicult. Educ. Rev. 2020, 12, 98–116. [Google Scholar] [CrossRef]

- Maaruf, S.Z.; Mohamad, S.N.A.; Mohamed, A.M.D.; Shukor, N. Examining Knowledge, Awareness and Action Orientation Through Multicultural Assessment Proficiency (MAP) for Cultural Responsive Pedagogy in Higher Education Practices. In Proceedings of the 2nd International Research Conference on Business and Economics (IRCBE), Semarang, Indonesia, 3–4 August 2016. [Google Scholar]

- Ladson-Billings, G. Three decades of culturally relevant, responsive, & sustaining pedagogy: What lies ahead. In The Educational Forum; Taylor & Francis: Abingdon, UK, 2021; pp. 351–354. [Google Scholar]

- Ghattas, N.I.; Carver, J.S. Cultural responsiveness of the next generation science standards. J. Stem Teach. Educ. 2017, 52, 5. [Google Scholar] [CrossRef]

- Brown, M.; Burns, D.; McNamara, G.; O’Hara, J. Culturally responsive classroom-based assessment: A case study of secondary schools in Ireland. Rie-Rev. Investig. Educ. 2022, 40, 15–32. [Google Scholar] [CrossRef]

- Edwards, F.; Edwards, R. A story of culture and teaching: The complexity of teacher identity formation. Curric. J. 2017, 28, 190–211. [Google Scholar] [CrossRef]

- Rosheim, K.M.; Tamte, K.G.; Froemming, M.J. Reducing Inequalities Inherent in Literacy Assessment of Multilingual Learners. Read. Psychol. 2024, 45, 735–751. [Google Scholar] [CrossRef]

- Nayir, F.; Brown, M.; Burns, D.; O’Hara, J.; McNamara, G.; Nortvedt, G.; Skedsmo, G.; Gloppen, S.K.; Wiese, E.F. Assessment with and for Migration Background Students-Cases from Europe. Eurasian J. Educ. Res. 2019, 19, 39–68. [Google Scholar] [CrossRef]

- Muñiz, J. Culturally Responsive Teaching: A 50-State Survey of Teaching Standards; New America: Washington, DC, USA, 2019; Available online: http://files.eric.ed.gov/fulltext/ED594599.pdf (accessed on 26 July 2024).

- Stepp, Z.A.; Brown, J.C. The (lack of) relationship between secondary science teachers’ self-efficacy for culturally responsive instruction and their observed practices. Int. J. Sci. Educ. 2021, 43, 1504–1523. [Google Scholar] [CrossRef]

- Ionescu, V.M.; Enescu, M.C. Using ChatGPT for Generating and Evaluating Online Tests. In Proceedings of the 2023 15th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Bucharest, Romania, 29–30 June 2023; pp. 1–6. [Google Scholar]

- Yi, G.; Chen, Z.; Chen, Z.; Wong, W.E.; Chau, N. Exploring the Capability of ChatGPT in Test Generation. In Proceedings of the 2023 IEEE 23rd International Conference on Software Quality, Reliability, and Security Companion (QRS-C), Chiang Mai, Thailand, 22–26 October 2023; pp. 72–80. [Google Scholar]

- Pandey, M.K.; Upadhyay, J. Overview of ChatGPT Model Architecture. In Advanced Applications of Generative AI and Natural Language Processing Models; IGI Global: Hershey, PA, USA, 2024; pp. 87–107. [Google Scholar]

- Lee, U.; Jung, H.; Jeon, Y.; Sohn, Y.; Hwang, W.; Moon, J.; Kim, H. Few-shot is enough: Exploring ChatGPT prompt engineering method for automatic question generation in english education. Educ. Inf. Technol. 2023, 29, 11483–11515. [Google Scholar] [CrossRef]

- Alasadi, E.A.; Baiz, C.R. Multimodal Generative Artificial Intelligence Tackles Visual Problems in Chemistry. J. Chem. Educ. 2024, 101, 2716–2729. [Google Scholar] [CrossRef]

- Gupta, N.; Choudhuri, S.S.; Hamsavath, P.N.; Varghese, A. Fundamentals of Chat GPT for Beginners Using AI; Academic Guru Publishing House: Bhopal, India, 2024. [Google Scholar]

- Bevilacqua, M.; Oketch, K.; Qin, R.; Stamey, W.; Zhang, X.; Gan, Y.; Yang, K.; Abbasi, A. When Automated Assessment Meets Automated Content Generation: Examining Text Quality in the Era of GPTs. arXiv 2023, arXiv:2309.14488. [Google Scholar] [CrossRef]

- Kartal, G. Contemporary Language Teaching and Learning with ChatGPT. In Contemporary Research in Language and Linguistics; Galip KARTAL: Konya, Turkey, 2023; Volume 1, ISSN 2980-2253. [Google Scholar] [CrossRef]

- Arifianto, M.L.; Thoriquttyas, T.; Izzudin, I.F. Inquiring Arabic-Islamic Debatable Terms to ChatGPT: Will It Take a Side? Digit. Muslim Rev. 2023, 1, 18–35. [Google Scholar] [CrossRef]

- Shabbir, A.; Rizvi, S.; Alam, M.M.; Su’ud, M.M. Beyond boundaries: Navigating the positive potential of ChatGPT, empowering education in underdeveloped corners of the world. Heliyon 2024, 10, e35845. [Google Scholar] [CrossRef] [PubMed]

- Zheng, Y.D.; Stewart, N. Improving EFL students’ cultural awareness: Reframing moral dilemmatic stories with ChatGPT. Comput. Educ. Artif. Intell. 2024, 6, 100223. [Google Scholar] [CrossRef]

- Oxenberg, R. A Dialogue on the Existence and Nature of God with ChatGPT (Part I). PhilArchive. Available online: https://philarchive.org/rec/OXEADO (accessed on 28 June 2024).

- Jayaweera, M. From Jargon to Clarity: Enhancing Science Communication with ChatGPT. Vidyodaya J. Sci. 2023, 26. [Google Scholar]

- Elu, J. Gender and science education in sub-Saharan Africa. J. Afr. Dev. 2018, 20, 105–110. [Google Scholar] [CrossRef]

- Wright, T.S.; Domke, L.M. The role of language and literacy in K-5 science and social studies standards. J. Lit. Res. 2019, 51, 5–29. [Google Scholar] [CrossRef]

- Kūkea Shultz, P.; Englert, K. Cultural validity as foundational to assessment development: An indigenous example. In Frontiers in Education; Frontiers Media SA: Lausanne, Switzerland, 2021; p. 701973. [Google Scholar]

- Latif, E.; Lee, G.-G.; Neuman, K.; Kastorff, T.; Zhai, X. G-SciEdBERT: A Contextualized LLM for Science Assessment Tasks in German. arXiv 2024, arXiv:2402.06584. [Google Scholar]

- Mantelas, N.; Mavrikaki, E. Religiosity and students’ acceptance of evolution. Int. J. Sci. Educ. 2020, 42, 3071–3092. [Google Scholar] [CrossRef]

- Black, P. Christian beliefs and values in science and religious education: An essay to assist the work of teachers of both subjects. Int. Stud. Cathol. Educ. 2017, 9, 206–222. [Google Scholar] [CrossRef]

- Barnes, M.E.; Supriya, K.; Dunlop, H.M.; Hendrix, T.M.; Sinatra, G.M.; Brownell, S.E. Relationships between the religious backgrounds and evolution acceptance of Black and Hispanic biology students. CBE—Life Sci. Educ. 2020, 19, ar59. [Google Scholar] [CrossRef]

- Owens, D.C.; Pear, R.S.; Alexander, H.A.; Reiss, M.J.; Tal, T. Scientific and religious perspectives on evolution in the curriculum: An approach based on pedagogy of difference. Res. Sci. Educ. 2018, 48, 1171–1186. [Google Scholar] [CrossRef]

- Sumarni, W.; Faizah, Z.; Subali, B.; Wiyanto, W. The Urgency of Religious and Cultural Science in STEM Education: A Meta Data Analysis. Int. J. Eval. Res. Educ. 2020, 9, 1045–1054. [Google Scholar] [CrossRef]

- Cheong, P.H. Robots, religion and communication: Rethinking piety, practices and pedagogy in the era of artificial intelligence. In Religion in the Age of Digitalization; Routledge: London, UK, 2020; pp. 86–96. [Google Scholar]

- Ashraf, C. Exploring the impacts of artificial intelligence on freedom of religion or belief online. Int. J. Hum. Rights 2022, 26, 757–791. [Google Scholar] [CrossRef]

- Black, A.; Tylianakis, J.M. Teach Indigenous knowledge alongside science. Science 2024, 383, 592–594. [Google Scholar] [CrossRef]

- Trumbull, E.; Nelson-Barber, S. The Ongoing Quest for Culturally-Responsive Assessment for Indigenous Students in the U.S. Front. Educ. 2019, 4, 40. [Google Scholar] [CrossRef]

- Azam, S.; Goodnough, K. Learning Together About Culturally Relevant Science Teacher Education: Indigenizing a Science Methods Course. Int. J. Innov. Sci. Math. Educ. 2018, 26, 74–88. Available online: https://www.researchgate.net/publication/324971384 (accessed on 28 June 2024).

- Jin, Q. Supporting indigenous students in science and STEM education: A systematic review. Educ. Sci. 2021, 11, 555. [Google Scholar] [CrossRef]

- Washburn, J.; McCutchen, J.M. AI Meets AI: ChatGPT as a Pedagogical Tool to Teach American Indian History. Crit. Humanit. 2024, 2, 2. [Google Scholar] [CrossRef]

- Castro Nascimento, C.M.; Pimentel, A.S. Do Large Language Models Understand Chemistry? A Conversation with ChatGPT. J. Chem. Inf. Model. 2023, 63, 1649–1655. [Google Scholar] [CrossRef]

- Atwater, M.M.; Lance, J.; Woodard, U.; Johnson, N.H. Race and ethnicity: Powerful cultural forecasters of science learning and performance. Theory Pract. 2013, 52, 6–13. [Google Scholar] [CrossRef]

- Wells, A.S. Racial, ethnic, and cultural diversity across K–12 and higher education sectors: Challenges and opportunities for cross-sector learning. Chang. Mag. High. Learn. 2020, 52, 56–61. [Google Scholar] [CrossRef]

- Riegle-Crumb, C.; Morton, K.; Nguyen, U.; Dasgupta, N. Inquiry-based instruction in science and mathematics in middle school classrooms: Examining its association with students’ attitudes by gender and race/ethnicity. AERA Open 2019, 5, 2332858419867653. [Google Scholar] [CrossRef] [PubMed]

- Lesseig, K.; Firestone, J.; Morrison, J.; Slavit, D.; Holmlund, T. An analysis of cultural influences on STEM schools: Similarities and differences across K-12 contexts. Int. J. Sci. Math. Educ. 2019, 17, 449–466. [Google Scholar] [CrossRef]

- Choudhary, T. Reducing Racial and Ethnic Bias in AI Models: A Comparative Analysis of ChatGPT and Google Bard. Preprints 2024, 2024062016. [Google Scholar] [CrossRef]

- Warr, M.; Pivovarova, M.; Mishra, P.; Oster, N.J. Is ChatGPT Racially Biased? The Case of Evaluating Student Writing. In The Case of Evaluating Student Writing; Elsevier: Amsterdam, The Netherlands, 2024. [Google Scholar]

- Denton, M.; Borrego, M.; Boklage, A. Community cultural wealth in science, technology, engineering, and mathematics education: A systematic review. J. Eng. Educ. 2020, 109, 556–580. [Google Scholar] [CrossRef]

- Gerde, H.K.; Pikus, A.E.; Lee, K.; Van Egeren, L.A.; Huber, M.S.Q. Head Start children’s science experiences in the home and community. Early Child. Res. Q. 2021, 54, 179–193. [Google Scholar] [CrossRef]

- Soto-Lara, S.; Simpkins, S.D. Parent support of Mexican-descent high school adolescents’ science education: A culturally grounded framework. J. Adolesc. Res. 2022, 37, 541–570. [Google Scholar] [CrossRef]

- Garbacz, S.A.; Herman, K.C.; Thompson, A.M.; Reinke, W.M. Family engagement in education and intervention: Implementation and evaluation to maximize family, school, and student outcomes. J. Sch. Psychol. 2017, 62, 1–10. [Google Scholar] [CrossRef]

- Said, Z.; Al-Amadi, A. The Role of Family Engagement in Students’ Science Learning in Qatari Schools. Eurasia Proc. Educ. Soc. Sci. 2018, 11, 142–152. [Google Scholar]

- Shahin, M.; Chen, F.F.; Hosseinzadeh, A. Harnessing customized AI to create voice of customer via GPT3.5. Adv. Eng. Inform. 2024, 61, 102462. [Google Scholar] [CrossRef]

- Gallifant, J.; Fiske, A.; Levites Strekalova, Y.A.; Osorio-Valencia, J.S.; Parke, R.; Mwavu, R.; Martinez, N.; Gichoya, J.W.; Ghassemi, M.; Demner-Fushman, D. Peer review of GPT-4 technical report and systems card. PLOS Digit. Health 2024, 3, e0000417. [Google Scholar] [CrossRef] [PubMed]

- Bast, H.; Korzen, C. A benchmark and evaluation for text extraction from PDF. In Proceedings of the 2017 ACM/IEEE joint conference on digital libraries (JCDL), Toronto, ON, Canada, 19–23 June 2017; pp. 1–10. [Google Scholar]

- Siregar, A.Z. Implementation Of ADFGVX Cipher And RSA On TXT And Doc File Security. J. Basic Sci. Technol. 2021, 10, 105–110. [Google Scholar] [CrossRef]

- Janssens, R.; Wolfert, P.; Demeester, T.; Belpaeme, T. Integrating Visual Context into Language Models for Situated Social Conversation Starters. IEEE Trans. Affect. Comput. 2024. Available online: https://ieeexplore.ieee.org/document/10599883 (accessed on 1 September 2024). [CrossRef]

- Spasić, A.J.; Janković, D.S. Using ChatGPT standard prompt engineering techniques in lesson preparation: Role, instructions and seed-word prompts. In Proceedings of the 2023 58th International Scientific Conference on Information, Communication and Energy Systems and Technologies (ICEST), Nis, Serbia, 29 June–1 July 2023; pp. 47–50. [Google Scholar]

- Yang, F.; Yang, S.; Butt, M.A.; van de Weijer, J. Dynamic prompt learning: Addressing cross-attention leakage for text-based image editing. Adv. Neural Inf. Process. Syst. 2023, 36, 26291–26303. [Google Scholar]

- Mo, W.; Zhang, T.; Bai, Y.; Su, B.; Wen, J.-R.; Yang, Q. Dynamic Prompt Optimizing for Text-to-Image Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 26627–26636.

- Concord Consortium. Wolves and moose interactions (ID# 116-04-Y03). NGSS standard for MS-LS2-2 2024. Available online: https://scienceeducation.stanford.edu/assessments (accessed on 18 February 2024).

- Erling, E.J.; Adinolfi, L.; Hultgren, A.K.; Buckler, A.; Mukorera, M. Medium of instruction policies in Ghanaian and Indian primary schools: An overview of key issues and recommendations. In English as a Medium of Instruction in Postcolonial Contexts; Routledge: London, UK, 2018; pp. 18–34. [Google Scholar]

- Loh, E.K.; Tam, L.C.; Lau, K.-c. Moving between language frontiers: The challenges of the medium of instruction policy for Chinese as a second language. Lang. Policy 2019, 18, 131–153. [Google Scholar] [CrossRef]

- Zhang, Z. English-medium instruction policies in China: Internationalisation of higher education. J. Multiling. Multicult. Dev. 2018, 39, 542–555. [Google Scholar] [CrossRef]

- Yu, S.; Wang, Y.; Jiang, L.; Wang, B. Coping with EMI (English as a medium of instruction): Mainland China students’ strategies at a university in Macau. Innov. Educ. Teach. Int. 2021, 58, 462–472. [Google Scholar] [CrossRef]

- Owu-Ewie, C.; Eshun, E.S. The Use of English as Medium of Instruction at the Upper Basic Level (Primary Four to Junior High School) in Ghana: From Theory to Practice. J. Educ. Pract. 2015, 6, 72–82. [Google Scholar]

- Owu-Ewie, C.; Eshun, E.S. Language representation in the Ghanaian lower primary classroom and its implications: The case of selected schools in the Central and Western Regions of Ghana. Curr. Issues Lang. Plan. 2019, 20, 365–388. [Google Scholar] [CrossRef]

- Akyeampong, O.A. Aboakyer: Traditional festival in decline. Ghana Soc. Sci. J. 2019, 16, 97. [Google Scholar]

- Lumor, F. Significance of Animal Symbolism Among the Akans of Akyem Abuakwa Traditional Area; Kwame Nkrumah University of Science and Technology: Kumasi, Ghana, 2009. [Google Scholar]

- Li, Y.; Powell, J.; Jin, A.; Ryoo, H.K.; Li, H.; Pandey, P.; Zhu, W.; Li, D.; Lee, H. Community attitudes towards Amur tigers (Panthera tigris altaica) and their prey species in Yanbian, Jilin province, a region of northeast China where tigers are returning. PLoS ONE 2022, 17, e0276554. [Google Scholar] [CrossRef] [PubMed]

- Rudenko, S.V.; Sobolievskyi, Y.A. Philosophical Ideas in Spiritual Culture of the Indigenous Peoples of North America. Anthropol. Meas. Philos. Res. 2020, 18, 168–182. [Google Scholar] [CrossRef]

- Kosoe, E.A.; Adjei, P.O.-W.; Diawuo, F. From sacrilege to sustainability: The role of indigenous knowledge systems in biodiversity conservation in the Upper West Region of Ghana. GeoJournal 2020, 85, 1057–1074. [Google Scholar] [CrossRef]

- Tavor, O. the Land of Tigers and Snakes: Living with Animals in Medieval Chinese Religions; Chen, H., Ed.; Oxford University Press US: Oxford, UK, 2024. [Google Scholar]

- Owoseni, A.; Kolade, O.; Egbetokun, A. Applications of Generative AI in Summative Assessment. In Generative AI in Higher Education: Innovation Strategies for Teaching and Learning; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 97–122. [Google Scholar]

- Knoth, N.; Tolzin, A.; Janson, A.; Leimeister, J.M. AI literacy and its implications for prompt engineering strategies. Comput. Educ. Artif. Intell. 2024, 6, 100225. [Google Scholar] [CrossRef]

- Arvidsson, S.; Axell, J. Prompt engineering guidelines for LLMs in Requirements Engineering. Bachelor’s Thesis, Software Engineering and Management, Gothenburg University, Göteborg, Sweden, 2023. [Google Scholar]

- Zhai, X. Transforming Teachers’ Roles and Agencies in the Era of Generative AI: Perceptions, Acceptance, Knowledge, and Practices. J. Sci. Educ. Technol. 2024, 1–11. [Google Scholar] [CrossRef]

- Nyaaba, M. Transforming Teacher Education in Developing Countries: The Role of Generative AI in Bridging Theory and Practice. arXiv 2024, arXiv:2411.10718. [Google Scholar]

| Component | Code Snippet |
|---|---|
| Import Libraries | import openai |
| API Key Setup | openai.api_key = "API Key" |
| Get Response Function | def get_openai_response(prompt, model="gpt-4"): response = openai.chat.completions.create(model=model, messages=[{"role": "user", "content": prompt}], max_tokens=150, temperature=0.7, n=1, stop=None) |
| Return Response | return response.choices[0].message.content.strip() |
| Conversation Starter Function | def conversation_starter(): starter_prompt = ("Welcome! I am your culturally responsive science assessment generator (CRSciA-Generator). " "I am here to help you create assessment items that meet the diverse cultural and context-specific needs of your class " "that align with the NGSS. Let's begin with a few questions to tailor the assessment for your class.\n" "What science topic or NGSS standard would you like to cover?") |
| User Topic Input | user_topic = input(get_openai_response(starter_prompt) + "\n") |
| Return User Topic | return user_topic |
| User-Prompted Pathway Function | def user_prompted_pathway(): language_prompt = "What are the dominant languages your students can read and write in for science?" cultural_prompt = "Would you like to include any culturally specific knowledge or context in the assessment? (Yes/No)" |
| Get Responses from User | language = input(get_openai_response(language_prompt) + "\n") cultural_relevance = input(get_openai_response(cultural_prompt) + "\n") |
| Cultural Context Check | if cultural_relevance.strip().lower() == "yes": context_prompt = "Please provide some details about the cultural context you'd like to include." context = input(get_openai_response(context_prompt) + "\n") return language, context |
| Return Language and Context | else: return language, None |
| Main Function to Generate Assessment | def generate_assessment(): topic = conversation_starter() language, context = user_prompted_pathway() |
| Display Summary | print("\n--- Assessment Summary ---") print(f"Science Topic: {topic}") print(f"Language: {language}") if context: print(f"Cultural Context: {context}") else: print("No specific cultural context included.") |
| Run the Generator | print("\nYour assessment will be tailored based on the information provided.") generate_assessment() |
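Because the script in the table calls the API and `input()` directly, it cannot be exercised without a live API key and a person at the keyboard. The sketch below restructures the same topic, language, and cultural-context pathway so that the model call and the input function are passed in as parameters. `collect_assessment_inputs`, `AssessmentInputs`, and `summarize` are hypothetical names introduced for illustration, the long welcome message is shortened to its closing topic question, and the stubs in the `__main__` block stand in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AssessmentInputs:
    topic: str
    language: str
    context: Optional[str]  # None when the teacher declines cultural context


def collect_assessment_inputs(
    ask_model: Callable[[str], str],
    read_input: Callable[[str], str],
) -> AssessmentInputs:
    """Run the topic -> language -> cultural-context pathway with injected I/O."""

    def ask(prompt: str) -> str:
        # ask_model turns the fixed prompt into the text shown to the teacher;
        # read_input returns the teacher's reply, trimmed of surrounding whitespace.
        return read_input(ask_model(prompt) + "\n").strip()

    topic = ask("What science topic or NGSS standard would you like to cover?")
    language = ask(
        "What are the dominant languages your students can read and write in for science?"
    )
    wants_context = ask(
        "Would you like to include any culturally specific knowledge or context "
        "in the assessment? (Yes/No)"
    )
    context = None
    if wants_context.lower() == "yes":
        context = ask(
            "Please provide some details about the cultural context you'd like to include."
        )
    return AssessmentInputs(topic, language, context)


def summarize(inputs: AssessmentInputs) -> str:
    """Build the same summary text the original script prints."""
    lines = [
        "--- Assessment Summary ---",
        f"Science Topic: {inputs.topic}",
        f"Language: {inputs.language}",
    ]
    if inputs.context:
        lines.append(f"Cultural Context: {inputs.context}")
    else:
        lines.append("No specific cultural context included.")
    return "\n".join(lines)


if __name__ == "__main__":
    # Offline stubs: echo the prompt instead of calling an LLM, and replay scripted answers.
    answers = iter(["MS-LS2-2", "English", "Yes", "Akan stories about predators"])
    result = collect_assessment_inputs(
        ask_model=lambda prompt: prompt,
        read_input=lambda _shown: next(answers),
    )
    print(summarize(result))
```

Replacing the `ask_model` stub with a function that wraps the chat-completion call, and `read_input` with `input`, gives back the interactive behaviour of the original script.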

| NGSS standard for MS-LS2-2 [96]: MS-LS2-2_024_ Wolves and moose interactions. Ecosystem Interactions: Construct an explanation that predicts patterns of interactions among organisms across multiple ecosystems. Activity: Wolves and moose interactions (ID# 116-04-Y03). Construct an evidence-based argument about whether there exists a predator and prey relationship between wolves and moose. From: Wolves and moose interactions (ID# 116-04-Y03) (concord.org). Source: This resource is part of the Concord Consortium’s Middle Grades (6–8) project. Task: Wolves and moose interactions (ID# 116-04-Y03). Wolf and moose images are licensed under the public domain. |

| Standard Prompt:
Prompt: Create a culturally responsive science assessment question based on the NGSS standard MS-LS2-2, which involves ecosystem interactions and predator–prey relationships. The question should be suitable for middle school students in Ghana, the USA, and China. The question should ask students to construct an evidence-based argument about a predator and prey relationship, using examples that are relevant to their cultural context.
Output: Culturally Responsive Science Assessment Question
NGSS Standard: MS-LS2-2
Concept: Ecosystem Interactions
Grade Level: Middle School
Target Regions: Ghana, the USA, China
Question: In ecosystems around the world, animals interact as predators and prey. Think about a predator and prey relationship that is familiar in your cultural context or from your region (Ghana, the USA, or China). Construct an evidence-based argument about the relationship between these two organisms. Consider the following in your response:

|

| Prompt: Following the CRSciA-Generator Conversation Starter and Interactive Guided Dynamic Prompt Strategies
Objective: Create a culturally responsive science assessment question that is scientifically rigorous and tailored to the cultural, linguistic, and educational contexts of students from [insert country/region, e.g., Ghana, the USA, China]. The question should be based on the [insert specific scientific concept or standard, e.g., ecosystem interactions, photosynthesis, forces and motion].
Instructions: Use the following guidelines to structure the assessment question:

- Construct a question that requires the student to engage with the scientific concept by analyzing data, making predictions, or constructing an evidence-based argument.
- Ensure the question aligns with the [insert specific scientific standard or concept, e.g., NGSS standard MS-LS2-2].
- Provide clear instructions for the student on how to incorporate cultural elements into their response. |

| CRSciA-Generator Questions |

Ghana:
Language: English
Context: Consider how local knowledge and traditional stories in Ghana describe predator–prey relationships, such as those between lions and antelopes in the savanna ecosystem. Think about how the knowledge passed down through your family or community elders might help you understand similar relationships in other parts of the world.
Task:

USA:
Language: English
Context: Reflect on how Native American perspectives describe wolves as spiritual beings and key regulators in ecosystems. Consider how this Indigenous knowledge might influence your understanding of the predator–prey relationships shown in the graph.
Task:

China:
Language: Mandarin
Context: Consider how traditional Chinese views on predators like the Amur tiger can help you understand predator–prey relationships in ecosystems. Reflect on how your family’s stories or the teachings from Taoism or Buddhism might influence your interpretation of the graph.
Task (任务):

(Translation) Task:

|
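The CRSciA-Generator prompt in the table above is essentially a fill-in-the-blank template (country/region, scientific concept, standard) that yields one culturally situated item per region, as in the Ghana, USA, and China questions. A minimal sketch of that substitution step follows, assuming a plain `string.Template`; the template wording is a paraphrase, and `RegionProfile`, `build_prompt`, and the profile values are illustrative, condensed from the three example items rather than drawn from the generator’s actual configuration.

```python
from dataclasses import dataclass
from string import Template

# Hypothetical template paraphrasing the CRSciA-Generator prompt structure.
PROMPT_TEMPLATE = Template(
    "Objective: Create a culturally responsive science assessment question that is "
    "scientifically rigorous and tailored to the cultural, linguistic, and educational "
    "contexts of students from $region. The question should be based on $concept.\n"
    "Language: $language\n"
    "Cultural context: $context\n"
    "Ensure the question aligns with $standard and asks students to construct an "
    "evidence-based argument."
)


@dataclass
class RegionProfile:
    region: str
    language: str
    context: str


def build_prompt(profile: RegionProfile, concept: str, standard: str) -> str:
    """Fill every placeholder; Template.substitute raises KeyError if one is missing."""
    return PROMPT_TEMPLATE.substitute(
        region=profile.region,
        language=profile.language,
        context=profile.context,
        concept=concept,
        standard=standard,
    )


# Illustrative profiles condensed from the three example items.
PROFILES = [
    RegionProfile("Ghana", "English", "local stories about lions and antelopes in the savanna"),
    RegionProfile("the USA", "English", "Native American perspectives on wolves as ecosystem regulators"),
    RegionProfile("China", "Mandarin", "traditional Chinese views on the Amur tiger"),
]

if __name__ == "__main__":
    for profile in PROFILES:
        print(build_prompt(profile, "ecosystem interactions", "NGSS standard MS-LS2-2"))
        print("---")
```

Using `substitute` rather than `safe_substitute` makes a forgotten field fail loudly instead of sending a prompt with a literal `$placeholder` to the model.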

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nyaaba, M.; Zhai, X.; Faison, M.Z. Generative AI for Culturally Responsive Science Assessment: A Conceptual Framework. Educ. Sci. 2024, 14, 1325. https://doi.org/10.3390/educsci14121325
