Abstract

Ambitious approaches to science teaching feature collaborative learning environments and engage students in rich discourse to make sense of their own and their peers’ ideas. Classroom assessment must cohere with and mutually reinforce these kinds of learning experiences. This paper explores how teachers’ enactment of formative assessment tasks can support such an ambitious vision of learning. We draw on video data collected through a year-long investigation to explore the ways that co-designing formative assessments linked to a learning progression for modeling energy in systems could help teachers coordinate classroom practices across high school physics, chemistry, and biology. Our analyses show that while there was some alignment of routines within content areas, students had differential opportunities to share and work on their ideas. Though the tasks were constructed for surfacing students’ ideas, they were not always facilitated to create space for teachers to take up and work with those ideas. This paper suggests the importance of designing and enacting formative assessment tasks to support ambitious reform efforts, as well as ongoing professional learning to support teachers in using those tasks in ways that will center discourse around students’ developing ideas.

1. Introduction

The Framework for K-12 Science Education (Framework) [1] posed an ambitious three-dimensional vision for science learning and teaching in the U.S. In this vision, science practices, disciplinary core ideas, and crosscutting concepts form three intertwined strands that guide students’ learning experiences.

Crosscutting concepts are a new element of science education reform and consist of concepts that span the science disciplines such as stability and change, patterns, and energy and matter [2]. For example, the crosscutting concept of patterns could guide a student to pay attention to which kinds of plants grow in different places, such as in the shade versus sun, or in wet versus well-drained soils. Crosscutting concepts can serve as guideposts for students when they encounter a novel scenario or phenomenon and create footholds by which students can make sense of those phenomena [3].

With 48 states having now adapted standards based on the Framework [4], teachers are faced with making substantial shifts to their teaching practices. Tools and routines are necessary to support teachers in making these shifts [5,6]. Research is just beginning to emerge about how crosscutting concepts can serve as foundations not only for classroom learning experiences but also for assessment, and about how assessment can inform these learning experiences.

Crosscutting concepts create a new opportunity in science education to provide organizing frameworks across historically siloed science disciplines, particularly in high school science, where there is often little vertical alignment between ideas taught in core classes such as biology, chemistry, and physics. In addition, organizing frameworks based on the crosscutting concepts have the potential to help teachers develop and enact assessments across an academic year, in contrast to tools, like content-only rubrics, that may be ‘single-use’. This paper explores how a research–practice partnership used the crosscutting concept of energy and matter in systems as a connective thread for the design and enactment of formative assessment tasks with science teachers at three high schools. We explore how the tasks guided classroom instruction, and the ways in which teachers’ facilitation afforded students opportunities to make sense of energy-related phenomena.

2. Background

The Next Generation Science Standards (NGSS) [7], which were developed from the Framework, consist of three-dimensional performance expectations. In a departure from previous science standards (e.g., [8]), which focused on what students should know related to canonical science content and inquiry separately, the NGSS performance expectations combine science and engineering practices and crosscutting concepts to figure out disciplinary core ideas.

We ground our exploration in frameworks for NGSS task design, learning progressions, classroom assessment practices, and professional learning for formative assessment in secondary science.

2.1. Ambitious Teaching Reforms and Science Classroom Assessment

Larger movements in educational reform toward teaching and learning have been called ambitious (e.g., [9,10]). Ambitious approaches to teaching and learning involve rigorous expectations for students, ample space for students’ intellectual engagement, and connections to students’ daily lives. These learning environments are collaborative and engage students in rich discourse in which they make sense of their own ideas, as well as those of others.

In such an ambitious environment, students focus on making sense of phenomena or solving design problems. Phenomena are puzzling scenarios based on real-world, scientific occurrences or events [11]. Teachers and students engage in authentic dialogue aimed at understanding the phenomenon, drawing upon each other’s ideas and experiences to develop their understanding. Teachers guide the class as students build knowledge together to ultimately make sense of those phenomena to support students’ learning of not only disciplinary core ideas and science and engineering practices, but also crosscutting concepts. These complex and three-dimensional learning objectives are best supported by curriculum materials and instruction with high cognitive demand; that is, tasks that involve doing science, as well as content and practices combined [12]. The development of student ideas has been shown to be supported when teachers draw out student thinking in dialogue with their ideas [13], and press students to provide evidence and better explain their ideas [10].

It follows that classroom assessment must also cohere with and mutually reinforce these kinds of learning experiences [14]. Upon its publication in 2012, the NRC Framework [1] had already identified the importance of classroom assessment aligned with three-dimensional learning in order for its vision to succeed. Later reports (e.g., [15,16]) followed up with more specifications for what these assessments might look like, including that they consist of multiple components and question types in order to fully tap the three dimensions; that they be oriented around accessible scenarios and/or phenomena; that they include deliberate uncertainty in order to compel students to make sense of those scenarios [3]; and that they incorporate multiple scaffolds in order to encourage students to show what they know [17]. Such novel approaches to assessment, meant to cohere with rather than conflict with curriculum and instruction, represent another learning demand for teachers.

While assessment in schools takes many forms, in this paper we focus upon classroom assessment and, more specifically, formative assessment, or the assessment that teachers carry out with their students on an ongoing basis to inform subsequent learning [18]. Formative assessment has several features that make it distinct from summative assessment, which is used to assign grades at the end of an instructional unit and is generally more consequential for students’ learning. These features of formative assessment include situating learning goals within progressions of learning, drawing out and interpreting student ideas, peer and self-assessment, providing feedback, and making adjustments to support student learning going forward [19]. Formative assessment has been described both as a process by which teachers attend to and support the development of student thinking, as described above, and as an object or artifact, that is, the task that teachers use to organize student activity (e.g., [20]). This distinction between formative assessment tasks and the enactment of those tasks is important because prior studies have indicated that even when tasks are of high quality, they may not be sufficient to support teachers and students in maintaining their high cognitive demand [21]. The enactment of any classroom task is subject to local variations and adaptations (e.g., [22]), and yet classroom and formative assessments in particular are susceptible to interpretations that may reduce their intended function in supporting learning and broadening participation. Even when tasks are constructed with high cognitive demand [23], they can be oversimplified in their launching and enactment with students, focusing students on correct answers [21]. These approaches to formative assessment limit students’ ability to access the full potential the tasks were designed to provide (e.g., [24]). Nuances in design and enactment further emphasize the need for adequate professional learning opportunities and resources to support making these shifts to classroom assessment practices [25].

2.2. Learning Progressions to Support Development of Understanding across Grade Bands

A key element of both the definition of formative assessment given above and the NGSS is their emphasis on learning progressions, which are representations or hypothesized trajectories of how student understandings can develop over time [26]. At their top anchors, learning progressions represent the ultimate goals for learning; at their lower anchors, they include the emerging ideas that students will develop at the beginning of learning sequences. In the middle are various intermediate understandings through which students may progress.

Learning progressions have served as the foundations for curriculum and classroom assessment design, as well as for professional development [27]. In the NGSS, underlying learning progressions have been created for disciplinary core ideas, science and engineering practices, and crosscutting concepts independently; individual performance expectations, then, are constructed by intertwining grade-level-appropriate understandings from each of the three dimensions.

Many efforts to support curriculum, learning, and instruction have been based on bundles of three-dimensional performance expectations (e.g., [28]). This approach links together related performance expectations at a given grade level and connects them to a compelling phenomenon or scenario that is the target for a curriculum unit or assessment.

An alternative approach to assessment design, however, could be to take a learning progression for a single dimension of the NGSS, such as a crosscutting concept (CCC), and then follow that CCC into different performance expectations of the NGSS. While many learning progressions are designed within specific disciplinary spaces or for scientific practices (e.g., [29]), we took the approach of founding our project on a three-dimensional progression that followed the crosscutting concept of energy and the science practice of modeling into different disciplinary core ideas (Table 1). This resulted in a ‘core’ learning progression describing increasingly sophisticated conceptions associated with applying the science and engineering practice of modeling to the crosscutting concept of energy flows, with extension progressions for target core ideas in physics (e.g., force and motion), chemistry (e.g., chemical reactions), and biology (e.g., carbon cycling) (see Figure 1).

Table 1.

Learning progression for modeling energy flows in systems [30].

Figure 1.

Organizing assessment across classrooms with a learning progression for a crosscutting concept.

This learning progression created the opportunity to understand how everyday assessment practices could be organized across, rather than within, high school science courses. That is, by using a core learning progression for the crosscutting concept, and then adapting it for particular disciplinary core ideas in high school physics, chemistry, and biology, we sought to understand how this representation might organize formative assessment task design and students’ opportunities to learn about energy.

In this context, we define formative assessment as pre-planned activities in which teachers and students pause during the course of a sequence of learning to draw out and work with student ideas; in a sense, assessment conducted while learning is in progress to further develop students’ understandings of modeling energy flows in systems. This definition of formative assessment acknowledges that the term incorporates tasks that organize student activity, the practices that teachers and students engage in as they learn, and multiple participants [31].

We have studied the ways in which long-term, school-based professional learning experiences can support teachers in designing, enacting, and taking instructional action on the basis of formative assessment tasks embedded in their regular curriculum materials (e.g., [32]). These studies have taken the perspective that particular tools and routines can support teachers and coordinate their practice across multiple settings (e.g., [33]).

While studies have examined learning progressions from multiple perspectives, as described above, the field does not yet have an understanding of how such representations might organize formative assessment not only within, but across, grade bands in order to support students as they participate in three-dimensional learning. Our prior work has led us to form the high-level conjecture [34] that a progression that serves as the orienting framework for long-term, school-embedded professional learning can organize teachers in surfacing and supporting student thinking across grade levels. To that end, we pose the following research questions:

- What routines do teachers use as they enact formative assessment tasks to support students in modeling energy in systems across high school science courses?

- What variations do we see in the ways teachers enact the tasks to surface and work with students’ ideas?

- How did students’ ideas expressed in the task enactments demonstrate their understanding of energy as represented in the learning progressions?

3. Materials and Methods

We draw on video data collected as part of a larger, long-term research–practice partnership [35] between researchers at a public university located in the Mountain West of the United States and a linguistically, ethnically, and socioeconomically diverse public school district located near a large city in the U.S. Within this larger partnership, we conducted a year-long investigation into the ways that engaging in cycles of co-designing formative assessments linked to the learning progression for modeling energy in systems could help organize learning and teaching across high school physics, chemistry, and biology.

3.1. Design for Professional Learning: The Formative Assessment Design Cycle

We engaged teachers from three high schools in our partner district in bimonthly, school-based professional learning community (PLC) meetings. These three schools were identified in cooperation with district science curriculum leaders as sites where PLCs were already in place, and we then recruited teachers within the content areas from each school. These meetings were organized around the Formative Assessment Design Cycle (FADC), an established set of routines developed to support teachers in using a learning progression as an orienting framework for formative assessment [36]. Teachers also worked with sets of tools to support their planning and enactment of formative assessment to support the crosscutting concept of energy. The steps of the FADC, with accompanying routines and tools, are summarized in Table 2.

Table 2.

Formative assessment design cycle tools and routines.

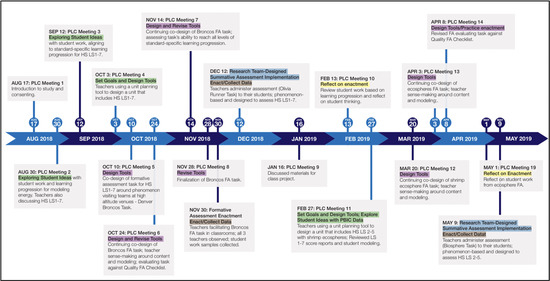

Figure 2.

Timeline of PLC meetings for the Fox Hill High School Biology PLC, 2018–2019.

Across the three partner schools, teachers participated in between 11 and 16 meetings, approximately bimonthly, through the 2018–2019 academic year (mean = 14 meetings). Meetings took place during teachers’ collaborative planning time during the regular school day.

Co-designed formative assessment tasks. To enable analysis across physics, chemistry, and biology PLCs that participated in the FADC, we selected a subset of tasks that shared common features of engaging students in creating models to represent flows, transfers, and transformation of energy around different phenomena (see Table 3 for descriptions of each task). Each of the tasks involved higher cognitive demand by engaging students in science practices and crosscutting concepts to demonstrate their understanding of disciplinary core ideas (e.g., [37]), and used multiple forms of representation to engage students in the practice of modeling (see [38] for a detailed analysis of the tasks).

Table 3.

Modeling energy tasks, by discipline and performance expectation.

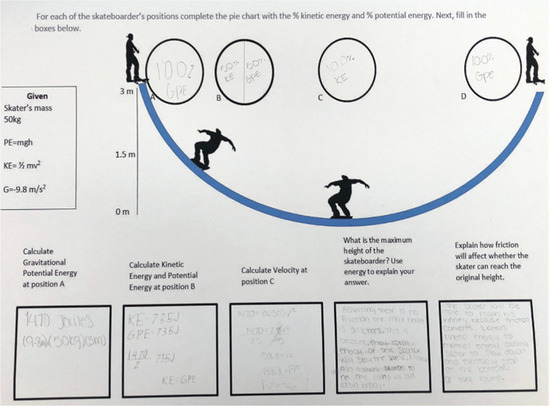

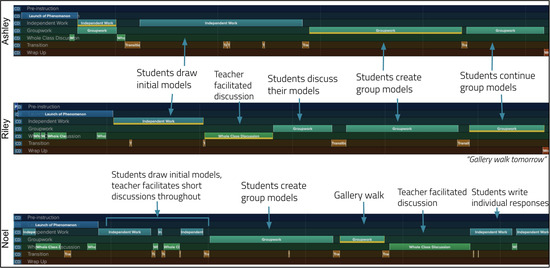

Physics. Teachers co-designed a task for 9th grade physics that showed a skateboarder at four different positions on a halfpipe (Figure 3). The task was designed to be used at the end of a unit on energy, shortly before the unit exam. The task used multiple scaffolds to engage students in making models and performing calculations of kinetic and potential energy and velocity. For each position of the skateboarder, students were prompted to create a pie chart showing the relative proportions of kinetic and potential energy, and then to calculate kinetic energy, potential energy, and velocity at different points.
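The kinds of calculations the task prompts can be sketched as follows. This is an illustration only: the frictionless ramp, the starting condition, the symbols, and the numbers are assumptions introduced here and are not taken from the task materials.

```latex
% Assumptions (not from the task): frictionless halfpipe; skateboarder of
% mass m starts from rest at the top, at height h_top; h is the current height.
\begin{align*}
E_{\text{total}} &= m g h_{\text{top}} \\
PE &= m g h \\
KE &= \tfrac{1}{2} m v^{2} = E_{\text{total}} - PE = m g \,(h_{\text{top}} - h) \\
v &= \sqrt{2 g \,(h_{\text{top}} - h)} \\
\frac{KE}{E_{\text{total}}} &= \frac{h_{\text{top}} - h}{h_{\text{top}}}
\end{align*}
% Hypothetical numbers: h_top = 3 m, h = 1 m, g = 9.8 m/s^2.
% v = sqrt(2 * 9.8 * 2) = sqrt(39.2), about 6.3 m/s,
% and kinetic energy fills 2/3 of the pie chart at that position.
```

Under these assumptions, the last ratio is what a pie chart at each position would display: the kinetic share grows as the skateboarder descends, while the total stays fixed.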

Figure 3.

Skateboarder Task (Physics). See Appendix A for the typed-out student responses.

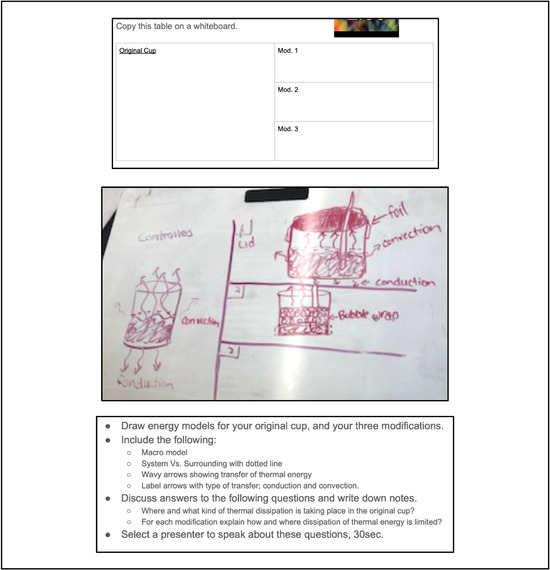

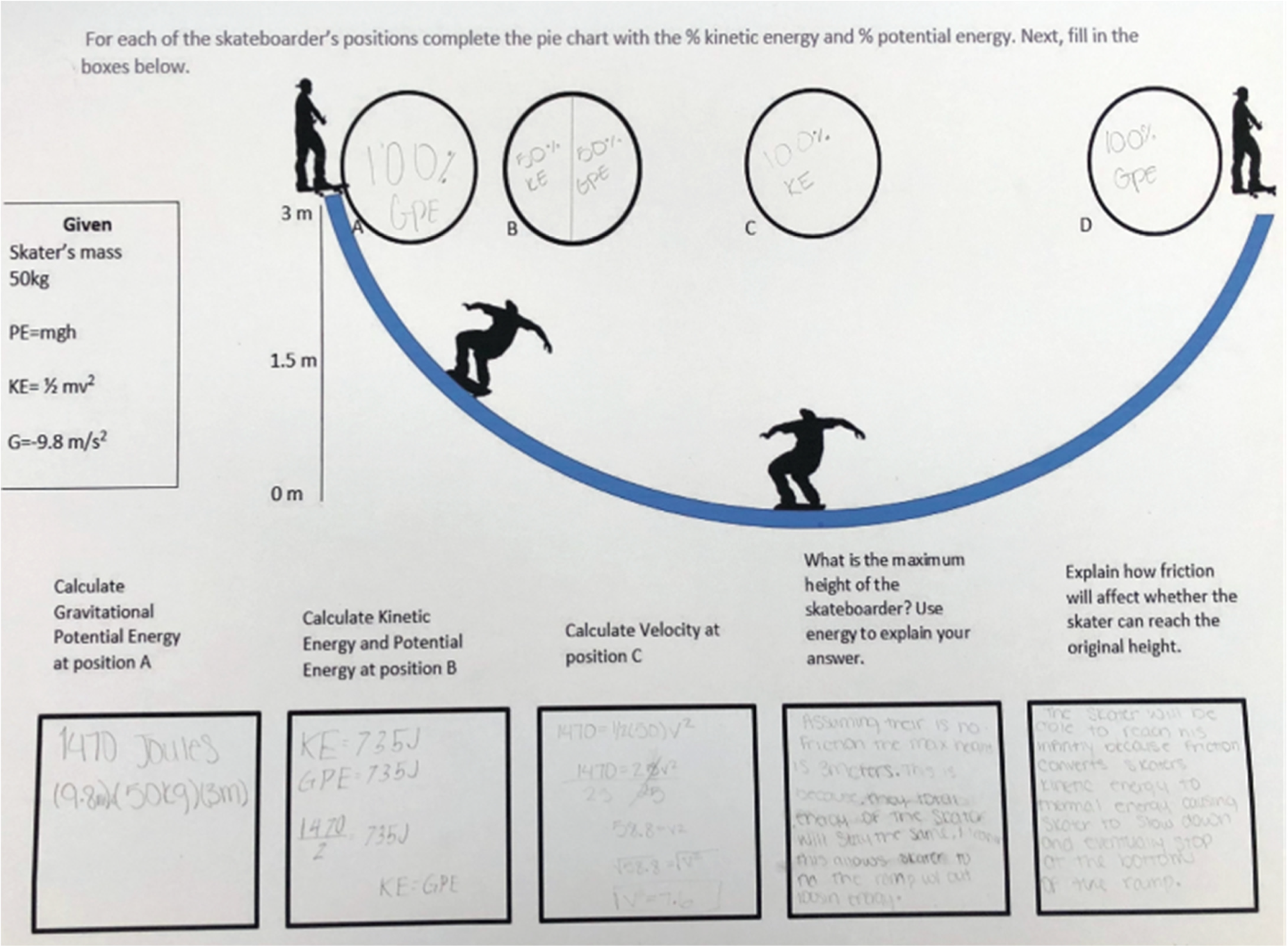

Chemistry. The Kinetic Cup task was a group modeling task designed by 10th grade chemistry teachers following a laboratory investigation in which students examined the thermal properties of multiple kinds of insulated cups. For the formative assessment, students were given a disposable paper cup and asked, based on what they had learned in the laboratory, to propose three modifications to the cup, and then to draw a model and explain how the modifications would limit thermal dissipation, before trying out and investigating their proposed modifications. Rather than providing a written task to students, teachers posed the task using PowerPoint slides, shown in Figure 4, which included a checklist for what to include in the model as well as framing questions. The task then directed students to copy the table on the first slide onto a whiteboard, to work in groups, and to select a presenter to give a summary of the model to the class.

Figure 4.

Teacher tools and resources, and a student model drawn for the Kinetic Cup task. See Appendix B for the typed-out student work on the model (center image).

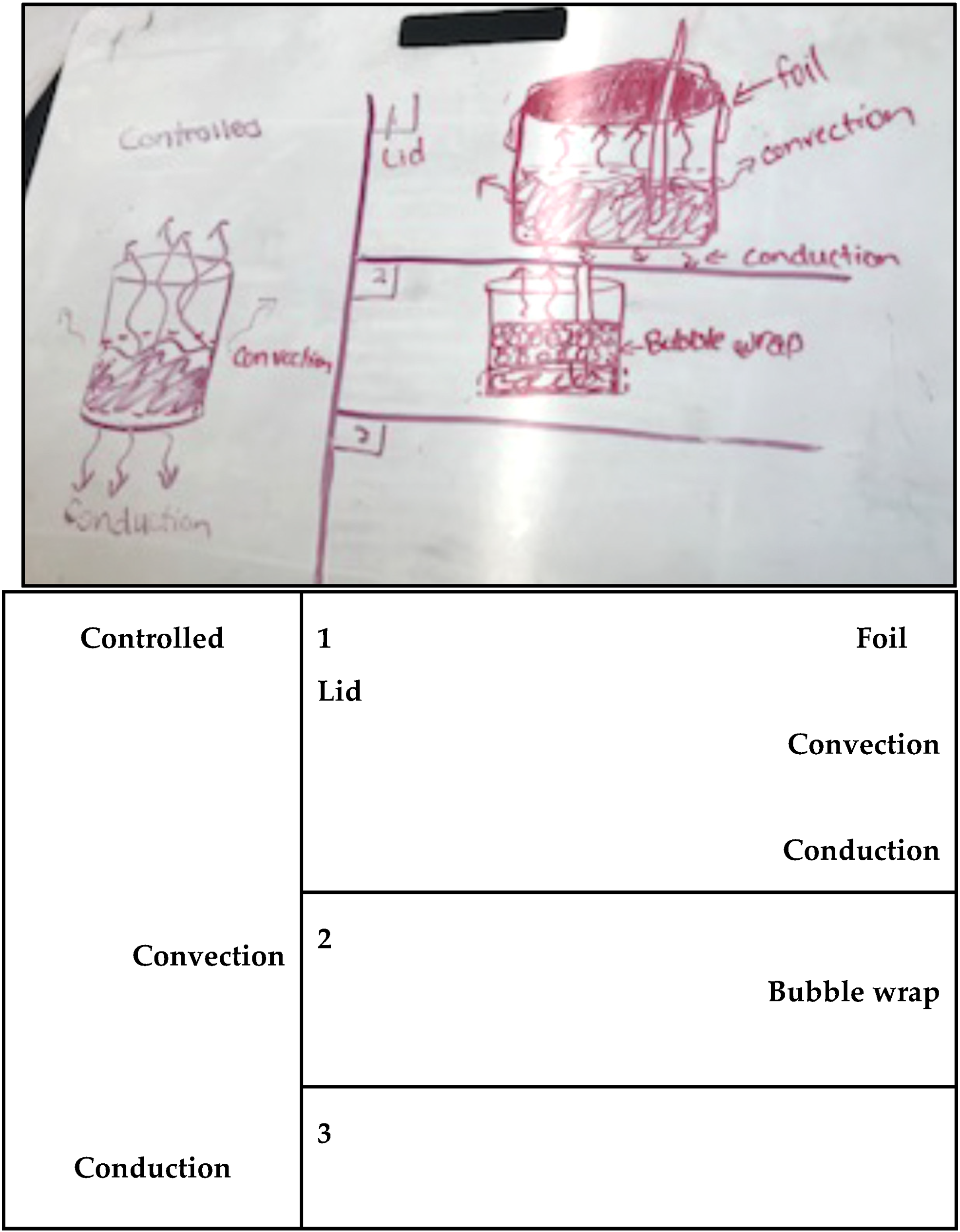

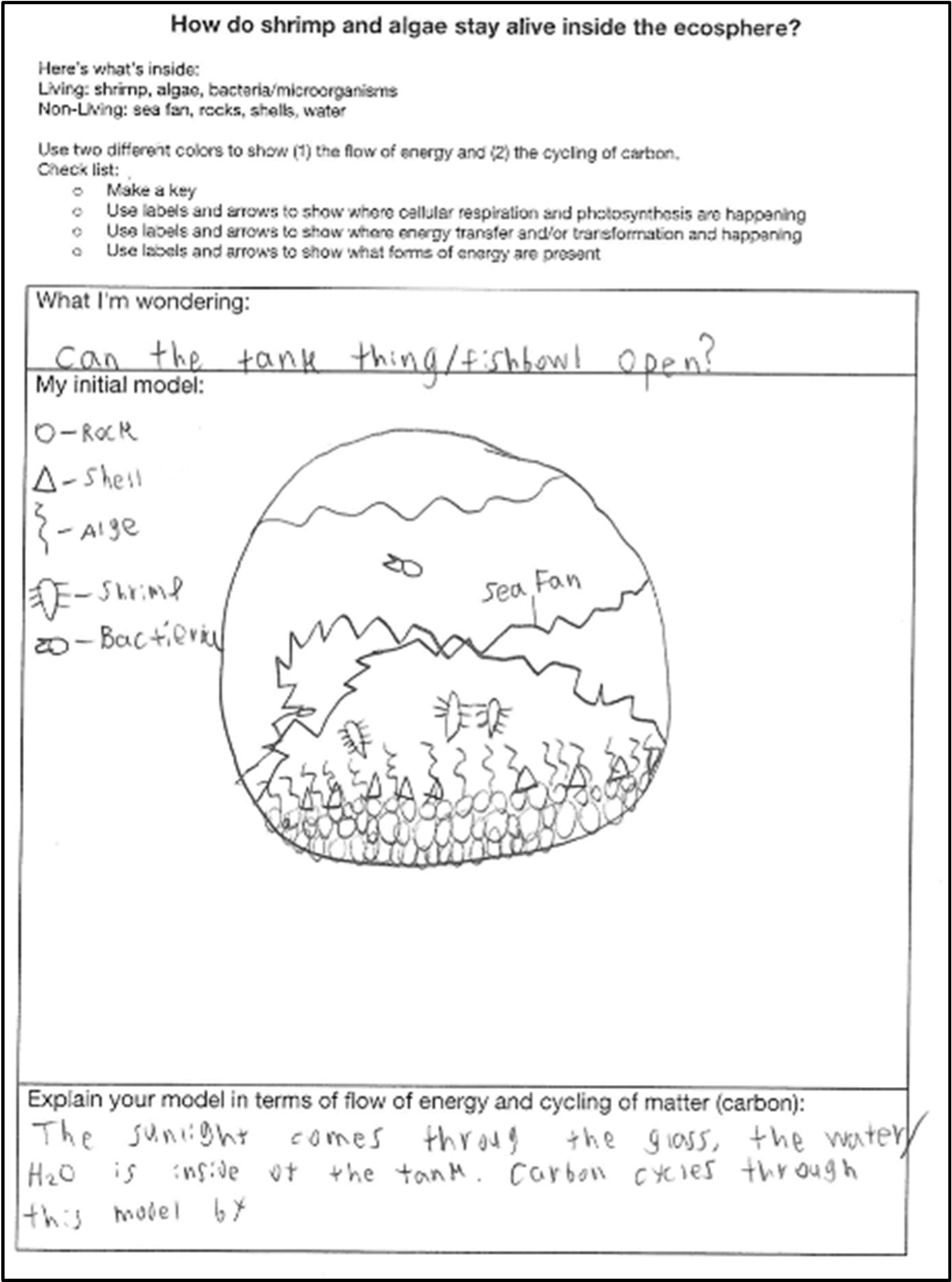

Biology. The Shrimp Ecosphere task was designed for 11th grade biology courses and was used as the introduction to a unit on carbon and energy cycling across the biosphere, atmosphere, hydrosphere, and geosphere. It began with teachers sharing with students a small, closed ecosystem that is entirely self-sustaining. The ecosphere includes brine shrimp, plants, and other microscopic organisms. Students were asked to draw initial models of how the shrimp stayed alive, showing how energy and matter cycled within the ecosphere, even without being able to open it and, for example, feed the brine shrimp inside. Students were invited to write what questions they had about the model, as well as to list additional information they would like to have to understand what was going on inside the ecospheres (Figure 5).

Figure 5.

Shrimp ecosphere task. See Appendix C for the typed-out student responses.

In addition to the task, teachers also wrote themselves guidance for how to use the task with students:

- Prior to formative assessment:
  - Have the ecosphere around: students start to ask what it is
- Day of formative assessment:
  - Introduce the ecosphere formally: we haven’t added anything or taken anything out, they are still moving around. We’re going to explore what’s happening.
  - Have a brief full class discussion of what is a model? Compare to other models students have worked with in the course or other science classes.
  - Students work alone to (1) create an initial model (2) write an initial explanation and (3) write what they’re wondering
  - Then students talk with their table groups to share information they need and as a table make a model on butcher paper. Groups can send someone to go around and come back with what they want to add to their model
  - Groups hang their model up and do a walk around
  - Full class discussion:
    - What did you notice about the different models that were different or similar to yours
    - What would you add to your model now?
    - What questions do you still have?
  - Students work with their tables to answer questions at the end

Participants. We drew on data collected in partnership with six professional learning communities at three high schools. For the current analysis, we purposively sampled from PLCs that had developed tasks that seemed most conducive to surfacing and working with students’ ideas about modeling energy transfers and transformation. This resulted in data from four PLCs and a total of nine teachers, shown in Table 4. All teachers are identified by gender-neutral pseudonyms.

Table 4.

Teacher participants, by PLC and by school.

3.2. Sources of Data

We analyzed a video recording of each of the teachers’ enactments of the formative assessment tasks. This resulted in a dataset consisting of observations of nine teachers across four PLCs enacting tasks from each content area: nine video-recorded enactments, for a total of 7 h and 53 min of video (Table 5). Given limitations related to the audio of the recordings, we focused our analysis on whole-class discussions in which teachers used the different elements of the tasks to structure conversations about student ideas.

Table 5.

Summary of video data.

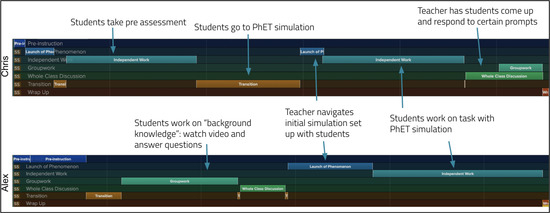

3.3. Analytic Approach

We developed a multifaceted coding system to understand the ways in which teachers’ facilitation of the co-designed tasks created opportunities for students to make sense of the phenomena framing the tasks, and for students to model and explain with the crosscutting concept of energy. We conducted four rounds of coding. First, to understand how teachers organized classroom participation structures around the tasks, we used the Vosaic video coding software (the version available in 2022) to identify episodes of classroom talk, such as whole-class discussion and groupwork, using categories derived from previous studies of classroom teaching [39]. This coding produced a visual timeline of each teacher’s enactment showing the episodes of participation structures across the class period (see Figure 6, for example). We annotated these timelines with the activities specific to each task to highlight similarities and differences across teachers’ enactments. Second, we segmented whole-class discussions into idea units, which we defined as units of interaction between teachers and students that developed one particular idea towards understanding the phenomenon. Third, we coded each idea unit according to the nature of teachers’ and students’ interactions around the task, using the codes presented in Table 6, to help us understand the opportunities students had to surface their ideas and how teachers worked with those ideas to move learning forward. More specifically, we coded exchanges as authoritative, in which particular answers were prioritized, or dialogic, in which teachers and students participated more equally in sharing and responding to ideas [13]. Finally, we coded each idea unit according to the corresponding level of the modeling energy learning progression (Table 1), using the extension learning progressions co-developed with teachers for each content area to code each task (see Appendix D). For example, we used the modeling energy learning progression adapted for the NGSS Performance Expectation HS-LS2-5, which focuses on the role of photosynthesis and cellular respiration in the cycling of carbon, to code the idea units in the enactment of the Shrimp Ecosphere task. To further elaborate, in a series of idea units taking place within a whole-class discussion, we determined the level of the learning progression developed by students and the teacher during each idea unit. After training on common videos, we divided the remaining videos and coded them independently, then adjudicated all disagreements as a group.
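The step from coded idea units to a per-teacher distribution of talk codes can be sketched in a few lines of Python. This is a minimal illustration, not the procedure or software used in the study: the teacher labels, the rows of data, and the function name `code_proportions` are all hypothetical, and only the four code labels echo the categories named in this paper.

```python
from collections import Counter, defaultdict

# Hypothetical coded idea units: (teacher label, talk code).
# These rows are invented for illustration and are NOT the study's data.
idea_units = [
    ("Teacher A", "authoritative"),
    ("Teacher A", "dialogic"),
    ("Teacher A", "authoritative"),
    ("Teacher A", "pressing for ideas"),
    ("Teacher B", "dialogic"),
    ("Teacher B", "students sharing ideas"),
]


def code_proportions(units):
    """Return {teacher: {code: share of that teacher's idea units}}."""
    counts = defaultdict(Counter)
    for teacher, code in units:
        counts[teacher][code] += 1
    return {
        teacher: {code: n / sum(tally.values()) for code, n in tally.items()}
        for teacher, tally in counts.items()
    }


proportions = code_proportions(idea_units)
for teacher, shares in proportions.items():
    # Round only for display; the stored shares stay exact fractions of 1.
    print(teacher, {code: round(share, 2) for code, share in shares.items()})
```

Reporting shares rather than raw counts makes enactments of different lengths comparable, since each teacher's idea units sum to 1.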

Table 6.

Video codes for the nature of teacher–student talk.

4. Results

We present our findings in relation to the three research questions posed above.

What routines do teachers use as they enact formative assessment tasks to support students in modeling energy in systems across high school science courses?

Current reforms in science education in general, and formative assessment in particular, emphasize how learning happens through dialogue and discourse, as well as the importance of students having opportunities to share ideas with their teacher and peers, and to be able to revise those ideas as they progress in their learning. When it comes to formative assessment, we acknowledge that students learn when tasks are organized within larger participation structures that include a combination of task launches, independent work, groupwork, and whole-class discussion.

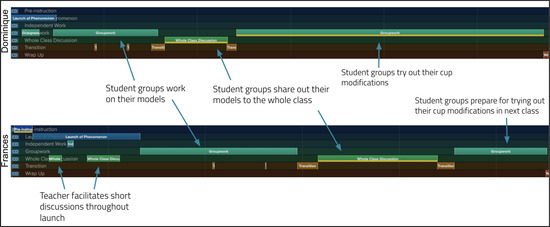

To better understand how the tasks organized participation across different classrooms, we used the coded timelines of the episodes of participation structures within each enactment to illustrate how teachers organized classroom activity (see Figure 6, for example).

Physics. Four teachers across two schools enacted the physics task with their students (Figure 6). At Carver, teachers had students interact with a similar PhET simulation and then complete the task. Alex additionally built students’ background knowledge of the phenomenon by showing a video that discussed different examples of energy. Students in Chris’ classes took a pre-assessment prior to completing the task, and the class period ended with students sharing their responses to different parts of the task. In contrast, at Mayfield, the majority of class time involved students working in small groups to respond to the questions on the task, with Perry facilitating a whole-class discussion of student responses at the end of class.

Chemistry. Two teachers at Carver enacted the Kinetic Cup task, shown in Figure 7. The class periods each began with teachers launching the task by describing the phenomenon at hand; Frances interspersed short whole-class discussions with the launch to achieve consensus around how to model energy flows and transfer with the cup. Students in both classes were then provided with time to work in small groups to create their models, which they then presented to the whole class. Both classes concluded with periods of time in which students prepared for, or actually tested, modifications to cup designs that they had proposed in their models.

Biology. Three teachers at Fox Hill enacted the Shrimp Ecosphere task, shown in Figure 8. The guidance teachers created for themselves led to similarities across the three classrooms. All three launched the phenomenon at the beginning of class, and then provided time for students to draw initial models before constructing group models. A key difference, however, in the ways the teachers enacted the tasks came in facilitating class discussions. Both Riley and Noel integrated whole-class discussions with students’ models; Riley integrated this after students drew their initial models, and Noel did so after students completed a gallery walk of other groups’ models.

Summary. Looking across the three different tasks, we see similar routines used by teachers that provided opportunities across classrooms for students to share and model their ideas about energy flows in systems. One such routine was the ‘launch of the phenomenon’, which introduced students to a real-world context for using and working with these ideas: a skateboarder on a halfpipe, a cooling cup of coffee, and a closed ecosystem. The three tasks had different kinds of scaffolds to encourage students to make their models, as follows: In the physics task, students were prompted to create models that illustrated shifts in the proportion of kinetic and potential energy at different points in the halfpipe ramps. In chemistry, students were provided with fewer scaffolds to create models of energy transfers and transformations as they proposed designs for coffee cups. In biology, the task and teachers supported students by including discussions around the characteristics of effective models along with a bulleted list of what to include in their ecosphere models (e.g., a key, using different colors, etc.).

Figure 6.

Enactment timelines for physics Skateboarder task for Carver (top) and Mayfield (bottom).

Figure 7.

Enactment timelines for chemistry Kinetic Cup task at Carver.

Figure 8.

Enactment timelines for biology Shrimp Ecosphere task at Fox Hill.

We also interpret these findings in the context of the placement of the three tasks within instructional units: the physics task was enacted near the end, before a summative assessment; the chemistry task was mid-unit, following a laboratory investigation; and the shrimp ecosphere task was enacted at the beginning of a unit on carbon and energy cycling. These unit placements help us to see that the tasks, by design, served different instructional purposes: late in a unit, teachers may have been more likely to check that students had particular understandings, whereas early in a unit, the assessment was intended to be expansive and to encourage students to share prior experiences and ideas that could be leveraged as the unit continued. The chemistry task served as an extension and application of the ideas students learned about in the lesson.

We observed similar routines around surfacing student ideas during formative assessment across the observed lessons and within the different content areas. In general, these routines were structured around the task that was co-designed by teachers; for example, chemistry teachers had students work in small groups to construct models of their coffee cup designs to reduce energy transfers and transformations, and students then presented their models to the whole class. In biology, teachers had students construct initial models of how shrimp stay alive in a sealed, glass ecosphere, discuss those models and their answers to questions as a whole class, and then revise their models following the discussion.

At the same time, there were differences in enactment within the different content areas for teachers enacting the same tasks. These involved the degree to which there were opportunities for students to discuss their models in whole-class conversations, as we saw with the shrimp ecosphere task. In addition, the physics teachers at two schools showed variations in how the task was used, as teachers at Carver integrated the task with a PhET simulation, whereas teachers at Mayfield did not.

What variations do we see in the ways teachers enact the tasks to surface and work with students’ ideas?

We next focused on the ways in which teachers created opportunities for students to make sense of their ideas in classroom discussions in each of the sampled classrooms. Each of the three tasks, as described above, was deliberately designed to elicit student thinking around an accessible phenomenon and create space in classrooms for students to talk about their models. Both of these elements are foundational to the practice of formative assessment.

To understand the ways that teachers’ enactment of these tasks created opportunities for students to share and work with their ideas, we explored the relative distribution of idea units coded as authoritative, dialogic, pressing for ideas, and students sharing ideas (see Table 7; for the nature of teacher–student talk codes, see Table 6). With respect to formative assessment, these distinctions helped us to differentiate among three kinds of exchanges: dialogic exchanges, in which students were engaged in authentic conversations that helped to refine ideas, sometimes referred to as ‘assessment conversations’ (e.g., [40]); occasions where students shared their ideas but those ideas were not taken up; and instances in which student ideas were evaluated without further discussion.
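The relative distribution described above can be tallied mechanically once idea units are coded. The following is a minimal sketch in Python, assuming each idea unit is recorded as a (teacher, code) pair; the teacher labels, code names, and sample data are hypothetical and are not drawn from the study's data set or from Table 7.

```python
from collections import Counter

# Hypothetical code labels standing in for the four talk codes named in the text.
CODES = ("authoritative", "dialogic", "pressing", "student_sharing")


def code_proportions(idea_units):
    """Return {teacher: {code: share of that teacher's idea units}}."""
    counts = {}
    for teacher, code in idea_units:
        counts.setdefault(teacher, Counter())[code] += 1
    result = {}
    for teacher, counter in counts.items():
        total = sum(counter.values())
        # Codes that never occur for a teacher get a share of 0.0.
        result[teacher] = {code: counter[code] / total for code in CODES}
    return result


# Illustrative placeholder data only, not study data.
sample = [
    ("Teacher A", "authoritative"),
    ("Teacher A", "authoritative"),
    ("Teacher A", "student_sharing"),
    ("Teacher A", "pressing"),
    ("Teacher B", "dialogic"),
    ("Teacher B", "dialogic"),
    ("Teacher B", "student_sharing"),
    ("Teacher B", "pressing"),
]

proportions = code_proportions(sample)
for teacher, shares in proportions.items():
    print(teacher, {code: round(share, 2) for code, share in shares.items()})
```

Normalizing within each teacher, rather than across all enactments, keeps lessons of different lengths comparable, which is one plausible reading of "relative distribution" here.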

Table 7.

Idea unit coding, by teacher.

We found more similarities between the two chemistry teachers’ enactments of the Kinetic Cup task than among the enactments of the Skateboarder and Shrimp Ecosphere tasks, although Riley’s and Noel’s enactments of the Shrimp Ecosphere task were more similar to each other than to Ashley’s.

Across the two schools where the Skateboarder task was enacted, we see an overall focus on authoritative coding, meaning that students shared ideas that were then evaluated by the teacher without further follow-up and development of ideas. That said, there were still opportunities for students to share their ideas in response to the task in Perry’s enactment, and three of the four teachers had idea units in which they took up and worked with student ideas. The findings for the biology and physics tasks indicate that although the tasks were the same, they were enacted differently, with either more dialogic enactments in which teachers and students talked about and developed ideas, or more authoritative enactments. This indicates that there were differential opportunities for students to engage in discourse about their ideas related to the phenomenon framing the task.

All but one of the enactments included segments that featured teachers pressing students on ideas they had shared. These follow-up questions encourage students to further develop and expand on their ideas, a form of providing feedback (e.g., [41,42]). This indicates that teachers selectively listened for and picked up on elements of student contributions to help them move toward particular understandings, a key element of formative assessment practice. In seven of the nine videos, a much smaller proportion of the lesson featured teachers in dialogue with students and their ideas in whole-class discussions; looking across the tasks, the Kinetic Cup task offered the most opportunities for teachers to engage students in dialogue about their ideas.

These findings indicate that, although the tasks may have structured classroom activity similarly across classrooms, some teachers differentially supported opportunities for making sense of the phenomena by opening up or constraining students’ opportunities for making connections between their prior experiences and understandings and interweaving those with a developing scientific understanding that explains the phenomena at hand. These differences have implications for how informed teachers will be in providing feedback and making instructional adjustments to support learning moving forward.

In the context of the placement of the three tasks within instructional units, we can see how the design of each task served a different function that then became apparent in the classroom enactment. In physics, teachers were focused on students coming away with particular responses, whereas chemistry teachers encouraged students to make connections between the lab they had just conducted and the modifications they proposed for designs. In biology, teachers used multiple levels of groupings to encourage students to make and discuss models.

Returning to our framing of ambitious learning and teaching, these findings indicate that there were unevenly distributed opportunities for students to engage with each other and in discourse-rich learning environments in which their teachers took up and responded to their ideas. Our coding indicates that teachers were for the most part supportive of students in their classroom, even when guiding them toward particular answers, and some, but not all, of the teachers engaged students in discourse about their ideas.

How did students’ ideas expressed in the task enactments demonstrate their understanding of energy as represented in the learning progressions?

Overall, we found that all of the task enactments featured students sharing their ideas about energy forms (associated with levels 1 and 2 of the learning progression), although there was considerable variation in the number of idea units coded according to different levels of the learning progression (mean = 11; S.D. = 6.7; min = 3, max = 21; see Table 8). This indicates that while students had opportunities to share their ideas about energy flows, these were not evenly distributed across classrooms. We interpret these findings carefully, since the tasks were embedded at different points within instructional units.
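Summary statistics of the kind reported above can be computed as follows. This sketch assumes per-enactment counts of idea units coded to a learning progression level; the counts shown are hypothetical placeholders rather than the values in Table 8, and the choice of the sample standard deviation (n - 1 denominator) is an assumption, since the text does not state which form was used.

```python
import statistics


def summarize(counts):
    """Return mean, sample standard deviation, minimum, and maximum of counts."""
    return {
        "mean": statistics.mean(counts),
        # Assumption: sample SD (n - 1); use statistics.pstdev for the population form.
        "sd": statistics.stdev(counts),
        "min": min(counts),
        "max": max(counts),
    }


# Hypothetical counts of coded idea units, one per enactment (not study data).
hypothetical_counts = [2, 4, 4, 4, 5, 5, 7, 9]

summary = summarize(hypothetical_counts)
print(summary)
```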

Table 8.

Learning progression coding, by teacher.

Looking across the coded data, we see that the teachers who held class discussions as part of their enactment of the formative assessment tasks had more overall idea units that were coded as corresponding with a learning progression level. This indicates that if students’ opportunities to learn through formative assessment tasks were related to their opportunities to share their thinking, then there were fewer opportunities to do so in the physics classrooms than in two of the three biology classrooms (Riley and Noel). Students had opportunities to share their ideas in the two chemistry classrooms as well, as the structure of the task created space for students to make and then share their models in whole-class conversations. At the same time, we note that in Jamie’s physics classroom, there were many opportunities for the teacher to ask questions and listen to students during small-group discussions, rather than in a whole-class setting.

We also note that only Noel’s classroom featured student ideas that were coded at the top level of the learning progression. In their enactment of the Shrimp Ecosphere task, Noel engaged students in a brief discussion about how the shrimp ecosphere model was similar to and different from the Earth. This allowed students to engage with making predictions and generalizations beyond the presented phenomenon. This is an unusual finding, as the biology teachers enacted the shrimp ecosphere task early in the unit.

5. Discussion

In this paper, we have explored the ways in which long-term, school-based professional learning based upon a three-dimensional learning progression can organize formative assessment across high school physics, chemistry, and biology classrooms. We analyzed task enactments from nine teachers at three schools and found that there was a certain amount of alignment of routines across enactments within content areas. At the same time, our analyses showed that students had differential opportunities to share and work with their ideas in whole-class discussions. Key to ambitious teaching reforms are discourse-based opportunities for sensemaking along with formative assessment, as prior studies show their importance for supporting student learning (e.g., [43,44]).

We also observed that students’ opportunities to reason with ideas in the learning progression organizing the study varied in relation to the way the tasks were structured; the more-structured physics task was related to fewer whole-class conversations, whereas the chemistry and biology tasks, which included less-structured space for students to make their models, included more discursive space for students to share their ideas. These findings suggest that even when tasks are designed to create high cognitive demand, which is a key element of ambitious approaches to teaching, they may still be enacted in ways that prevent the intended cognitive demand from being realized. These results are similar to those of Kang et al. (2014) [17], who found that in some instances, teachers lowered the cognitive demand of tasks when enacting them with students. Our findings also align with those of Buell (2020) [38], whose analysis of these and related tasks revealed that less-structured tasks created more ample space for students to create and share their models.

Ultimately, our analysis indicates that students’ opportunities to engage with ambitious learning in science classrooms are supported by, but not guaranteed by, the design of high-quality formative assessment tasks.

These findings, beyond coinciding with the emergent understanding of teaching in the current wave of science education reforms, may also support desired instructional shifts. Since the advent of the NGSS, it has been clear that assessment, both classroom- and large-scale, has the potential to serve as a barrier to changes in instruction if assessment does not emphasize the integrative—that is, highly cognitively demanding—nature of science [15,45,46]. This study suggests, in line with prior findings, that classroom assessments intended to engage students in complex scientific thinking are not guaranteed to achieve this goal ‘out-of-the-box’, and that attention must be paid to how tasks might be enacted in order to increase the likelihood that a given task will ‘land’ as its designer intends. Further, task design must engage with the complex and dynamic sociocultural nature of classrooms in which teachers navigate assessment practices [47] and the context-laden nature of assessment itself [48]. This suggests that there is no one-size-fits-all approach to task development.

Given that this paper is a small investigation of a limited number of formative assessment tasks, we draw these conclusions lightly. It is possible that another set of tasks, related to another underlying representation, might yield different findings, and future research should explore this possibility. That said, we acknowledge that the uniqueness of the design of this project—which allowed us to look at task designs across high school science classes usually treated separately—helped us to take a zoomed-out view of how tasks related to an underlying common representation created space for students to share their thinking. The in-depth coding we conducted was time-consuming but allowed us to take a high-level view of the different opportunities to reason in these different science classrooms.

We recognize that our study was conducted with a small sample size, and that a larger study may yield more robust findings about how formative assessment tasks can organize learning across classrooms. We also acknowledge that we are limited in our understanding of how formative assessment may have functioned on different levels, such as one-on-one feedback and self- and peer assessment, as the nature of our videorecorded classroom observations did not allow us to look at student–student interactions, or to reliably capture audio of teachers speaking with students individually or in small groups. Future studies may work with whole schools or districts to obtain larger samples of teachers and students, as well as to create recordings that would enable tracking students individually, as has been done in other studies (e.g., [49]).

A key finding of this study is that, even though tasks were designed in common, and with a common underlying learning framework, there was variation in the ways these tasks were enacted. Even tasks that were constructed for students to share their ideas were not always facilitated in ways that created space for teachers to take up and work with those ideas. As such, the paper suggests the importance of not only designing better formative assessment tasks to support students’ three-dimensional learning, but also ongoing professional learning to support teachers in using those tasks in ways that will center on students’ ideas about compelling science phenomena.

Author Contributions

Conceptualization, C.D.-R. and E.M.F.; Methodology, C.D.-R. and E.M.F.; Validation, E.M.F.; Formal analysis, C.D.-R., E.M.F., S.R.S. and A.B.; Investigation, C.D.-R., E.M.F., S.R.S. and A.B.; Resources, E.M.F.; Data curation, C.D.-R.; Writing—original draft, C.D.-R. and E.M.F.; Writing—review & editing, C.D.-R., E.M.F., S.R.S. and A.B.; Visualization, C.D.-R. and E.M.F.; Supervision, E.M.F.; Project administration, E.M.F.; Funding acquisition, E.M.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science Foundation under Grant No. 1561751. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of the University of Colorado Boulder (protocol code 14-0689, approved 8 August 2018).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Due to our IRB agreement, we are not able to share the original video or audio files, but we can share the learning progression, professional learning materials, and co-designed tasks, as well as the anonymized, coded data.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Typed Out Student Work for Figure 3, Skateboarder Task

| 1470 Joules (9.8)(50 kg)(3 m) | KE = 735 J GPE = 735 J = 735 J KE = GPE | $1470 = \tfrac{1}{2}(50)v^2$; $v^2 = 58.8$; $v = 7.6$ | Assuming there is no friction the max height is 3 m. This is because the total energy of the skater will stay the same. This allows skaters to … the ramp without losing energy. | The skater will be able to reach his … because friction converts skaters’ kinetic energy to thermal energy causing the skater to slow down and eventually stop at the bottom of the ramp. |

Appendix B. Typed Out Student Work for Kinetic Cup Task, Figure 4

Appendix C. Typed Out Student Work for Figure 5: Shrimp Ecosphere Task

- What I’m wondering:

- Can the tank thing/fishbowl open?

- Explain your model in terms of flow of energy and cycling of matter (carbon):

- The sunlight comes through the glass, the water/H2O is inside of the tank. Carbon cycles through this model by.

Appendix D. Extension Learning Progressions Developed for Each Content Area

| Level | A Learning Progression for Modeling Energy Flows (Buell et al., 2019) [30] | Extension Progression for HS-PS3-2: Skateboarder Task | Extension Progression for HS-PS3-4: Kinetic Cup Task | Extension Progression for HS-LS2-5: Shrimp Ecosphere Task |
| --- | --- | --- | --- | --- |
| 5 | | | | |
| 4 | | | | |
| 3 | | | | |
| 2 | | | | |
| 1 | | | | |

References

1. National Research Council. A Framework for K-12 Science Education: Practices, Crosscutting Concepts, and Core Ideas; National Academies Press: Washington, DC, USA, 2012.

2. Nordine, L.; Lee, O. Crosscutting Concepts: Strengthening Science and Engineering Learning; National Science Teachers’ Association Press: Arlington, VA, USA, 2021.

3. Furtak, E.M.; Badrinarayan, A.; Penuel, W.R.; Duwe, S.; Patrick-Stuart, R. Assessment of Crosscutting Concepts: Creating Opportunities for Sensemaking. In Crosscutting Concepts Strengthening Science and Engineering Learning; Nordine, L., Lee, O., Eds.; National Science Teachers’ Association Press: Arlington, VA, USA, 2021.

4. Schweingruber, H.; Quinn, H.; Pruitt, S.; Keller, T.E. Development of The Framework and Next Generation Science Standards: History and Reflections; National Academies Press: Washington, DC, USA, 2023; Available online: https://www.nationalacademies.org/documents/embed/link/LF2255DA3DD1C41C0A42D3BEF0989ACAECE3053A6A9B/file/D66AD07B7886BA0259D11FB90DF9A469D72BAFFD98A2?noSaveAs=1 (accessed on 15 May 2024).

5. Putnam, R.T.; Borko, H. What Do New Views of Knowledge and Thinking Have to Say About Research on Teacher Learning? Educ. Res. 2000, 29, 4–15.

6. Windschitl, M.; Thompson, J.; Braaten, M. Ambitious Pedagogy by Novice Teachers: Who Benefits From Tool-Supported Collaborative Inquiry into Practice and Why? Teach. Coll. Rec. 2011, 113, 1311–1360.

7. National Research Council. Next Generation Science Standards: For States, By States; The National Academies Press: Washington, DC, USA, 2013.

8. National Research Council. National Science Education Standards; The National Academies Press: Washington, DC, USA, 1996.

9. Smith, J.B.; Lee, V.E.; Newmann, F.M. Achievement in Chicago Elementary Schools; Consortium on Chicago School Research: Chicago, IL, USA, 2001; p. 52.

10. Windschitl, M.; Thompson, J.; Braaten, M. Ambitious Science Teaching; Harvard Education Press: Cambridge, MA, USA, 2018.

11. National Academies of Science, Engineering and Medicine. Science and Engineering for Grades 6–12: Investigation and Design at the Center; National Academies Press: Washington, DC, USA, 2019.

12. Tekkumru-Kisa, M.; Kisa, Z.; Hiester, H. Intellectual work required of students in science classrooms: Students’ opportunities to learn science. Res. Sci. Educ. 2021, 51, 1107–1121.

13. Scott, P.; Mortimer, E.F.; Aguiar Or, G. The tension between authoritative and dialogic discourse: A fundamental characteristic of meaning making interactions in high school science lessons. Sci. Educ. 2006, 90, 605–631.

14. Shepard, L.; Penuel, W.; Pellegrino, J. Using Learning and Motivation Theories to Coherently Link Formative Assessment, Grading Practices, and Large-Scale Assessment. Educ. Meas. Issues Pract. 2018, 37, 21–34.

15. National Research Council. Developing Assessments for the Next Generation Science Standards; National Academies Press: Washington, DC, USA, 2014.

16. National Academies of Sciences, Engineering, and Medicine. How People Learn II: Learners, Contexts, and Cultures; The National Academies Press: Washington, DC, USA, 2018.

17. Kang, H.; Thompson, J.; Windschitl, M. Creating Opportunities for Students to Show What They Know: The Role of Scaffolding in Assessment Tasks. Sci. Educ. 2014, 98, 674–704.

18. Black, P.; Wiliam, D. Assessment and classroom learning. Assess. Educ. Princ. Policy Pract. 1998, 5, 7–74.

19. Council of Chief State School Officers. Revising the Definition of Formative Assessment. 2018. Available online: https://ccsso.org/resource-library/revising-definition-formative-assessment (accessed on 15 May 2024).

20. Bennett, R.E. Formative assessment: A critical review. Assess. Educ. Princ. Policy Pract. 2011, 18, 5–25.

21. Kang, H.; Windschitl, M.; Stroupe, D.; Thompson, J. Designing, launching, and implementing high quality learning opportunities for students that advance scientific thinking. J. Res. Sci. Teach. 2016, 53, 1316–1340.

22. Dini, V.; Sevian, H.; Caushi, K.; Orduña Picón, R. Characterizing the formative assessment enactment of experienced science teachers. Sci. Educ. 2020, 104, 290–325.

23. Tekkumru-Kisa, M.; Stein, M.K. Learning to See Teaching in New Ways: A Foundation for Maintaining Cognitive Demand. Am. Educ. Res. J. 2015, 52, 105–136.

24. Otero, V.; Nathan, M.J. Preservice Elementary Teachers’ Views of Their Students’ Prior Knowledge of Science. J. Res. Sci. Teach. 2008, 45, 497–523.

25. Klinger, D.A.; McDivitt, P.R.; Howard, B.B.; Munoz, M.A.; Rogers, W.T.; Wylie, E.C. The Classroom Assessment Standards for PreK-12 Teachers; Kindle Direct Press: Seattle, WA, USA, 2015.

26. Corcoran, T.; Mosher, F.A.; Rogat, A. Learning Progressions in Science: An Evidence-Based Approach to Reform; Consortium for Policy Research in Education: Philadelphia, PA, USA, 2009.

27. Alonzo, A.C.; Gotwals, A.W. Learning Progressions in Science: Current Challenges and Future Directions; Sense Publishers: Rotterdam, The Netherlands, 2012.

28. Harris, C.J.; Krajcik, J.S.; Pellegrino, J.W.; DeBarger, A.H. Designing Knowledge-In-Use Assessments to Promote Deeper Learning. Educ. Meas. Issues Pract. 2019, 38, 53–67.

29. Duschl, R.; Maeng, S.; Sezen, A. Learning progressions and teaching sequences: A review and analysis. Stud. Sci. Educ. 2011, 47, 123–182.

30. Buell, J.Y.; Briggs, D.C.; Burkhardt, A.; Chattergoon, R.; Fine CG, M.; Furtak, E.M.; Henson, K.; Mahr, B.; Tayne, K. A Learning Progression for Modeling Energy Flows in Systems; Center for Assessment, Design, Research and Evaluation (CADRE): Boulder, CO, USA, 2019; Available online: https://www.colorado.edu/cadre/sites/default/files/attached-files/report_-_a_learning_progression_for_modeling_energy_flows_in_systems.pdf (accessed on 15 May 2024).

31. Furtak, E.M.; Heredia, S.C.; Morrison, D. Formative Assessment in Science Education: Mapping a Shifting Terrain. In Handbook of Formative Assessment in the Disciplines; Routledge: New York, NY, USA, 2019; pp. 97–125.

32. Furtak, E.M.; Kiemer, K.; Circi, R.K.; Swanson, R.; de León, V.; Morrison, D.; Heredia, S.C. Teachers’ formative assessment abilities and their relationship to student learning: Findings from a four-year intervention study. Instr. Sci. 2016, 44, 267–291.

33. Thompson, J.; Windschitl, M.; Braaten, M. Developing a Theory of Ambitious Early-Career Teacher Practice. Am. Educ. Res. J. 2013, 50, 574–615.

34. Sandoval, W. Conjecture Mapping: An Approach to Systematic Educational Design Research. J. Learn. Sci. 2014, 23, 18–36.

35. Penuel, W.R.; Fishman, B.J.; Haugan Cheng, B.; Sabelli, N. Organizing Research and Development at the Intersection of Learning, Implementation, and Design. Educ. Res. 2011, 40, 331–337.

36. Furtak, E.M.; Heredia, S.C. Exploring the influence of learning progressions in two teacher communities. J. Res. Sci. Teach. 2014, 51, 982–1020.

37. Tekkumru-Kisa, M.; Stein, M.K.; Schunn, C. A framework for analyzing cognitive demand and content-practices integration: Task analysis guide in science. J. Res. Sci. Teach. 2015, 52, 659–685.

38. Buell, J.Y. Designing for Relational Science Practices. Doctoral dissertation, University of Colorado Boulder, Boulder, CO, USA, 2020.

39. Lemke, J.L. Talking Science: Language, Learning, and Values; Ablex Publishing Corporation: Norwood, NJ, USA, 1990.

40. Duschl, R.A.; Gitomer, D.H. Strategies and challenges to changing the focus of assessment and instruction in science classrooms. Educ. Assess. 1997, 4, 37–73.

41. Ruiz-Primo, M.A.; Furtak, E.M. Informal formative assessment and scientific inquiry: Exploring teachers’ practices and student learning. Educ. Assess. 2006, 11, 237–263.

42. Ruiz-Primo, M.A.; Furtak, E.M. Exploring teachers’ informal formative assessment practices and students’ understanding in the context of scientific inquiry. J. Res. Sci. Teach. 2007, 44, 57–84.

43. Berland, L.K.; Reiser, B.J. Making sense of argumentation and explanation. Sci. Educ. 2009, 93, 26–55.

44. Warren, B.; Ballenger, C.; Ogonowski, M.; Rosebery, A.S.; Hudicourt-Barnes, J. Rethinking diversity in learning science: The logic of everyday sense-making. J. Res. Sci. Teach. 2001, 38, 529–552.

45. Alonzo, A.C.; Ke, L. Taking stock: Existing resources for assessing a new vision of science learning. Meas. Interdiscip. Res. Perspect. 2016, 14, 119–152.

46. Wertheim, J.; Osborne, J.; Quinn, H.; Pecheone, R.; Schultz, S.; Holthuis, N.; Martin, P. An Analysis of Existing Science Assessments and the Implications for Developing Assessment Tasks for the NGSS. 2016. Available online: https://scienceeducation.stanford.edu/sites/g/files/sbiybj25191/files/media/file/snap_landscape_analysis_of_assessments_for_ngss_1.pdf (accessed on 15 May 2024).

47. Willis, J.; Adie, L.; Klenowski, V. Conceptualising teachers’ assessment literacies in an era of curriculum and assessment reform. Aust. Educ. Res. 2013, 40, 241–256.

48. Mislevy, R.J. Sociocognitive Foundations of Educational Measurement; Routledge: New York, NY, USA, 2018.

49. Seidel, T.; Prenzel, M.; Kobarg, M. How to Run a Video Study: Technical Report of the IPN Video Study; Waxmann: München, Germany, 2005.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).