Collaboration Skills and Puzzles: Development of a Performance-Based Assessment—Results from 12 Primary Schools in Greece

Abstract

1. Introduction

Relevant Literature

2. The Proposed Methodology

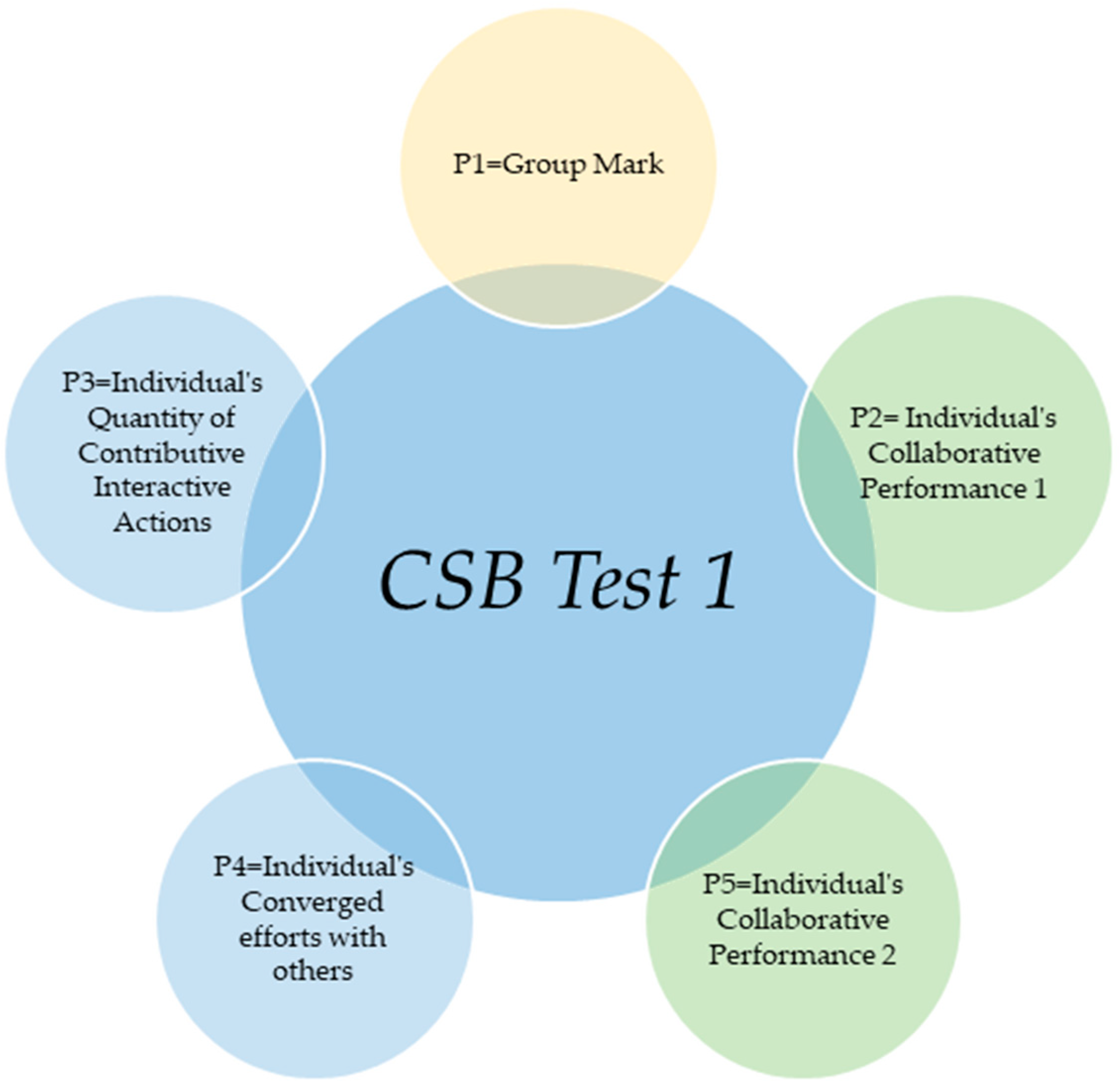

- Point 1 = TPAG% (Total Pieces Assembled by Group, %)

- If, for example, the group assembles 45% of the pieces, then 5 marks are awarded to each individual.

- Point 2 = IPPAG% − IPPAA% (Individual Puzzle Pieces % Assembled within Group − Individual Puzzle Pieces % Assembled Alone)

- If, for example, IPPAG = 45% and IPPAA = 30%, the student improved by 15 percentage points, so 7 marks are awarded.

- Point 3 = IPPAG% (Individual Puzzle Pieces % Assembled within Group)

- If, for example, IPPAG = 55%, then 6 marks are awarded.

- Point 4 = ICPG (Individual Convergence with Peers in the Group)

- If 45% of pieces are within efforts of 2, then 5 marks × 0.50 = 2.5 marks.

- If 45% of pieces are within efforts of 3, then 5 marks × 0.75 = 3.75 marks.

- If 45% of pieces are within efforts of 4, then 5 marks × 1 = 5 marks.

- Point 5 = Individual Performance Efficiency in Group = GCR − ICR (Group Completion Rate − Individual Completion Rate)

- If, for example, GCR = 2.6 pieces/min and ICR = 1.4 pieces/min, then the student's assembly rate within the group increased by 1.2 pieces/min, which is awarded 3 marks (Table 1).
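The five scoring rules above can be sketched as lookups against the bands in Table 1. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, values that fall between two printed bands (for example 10.5%) are assumed to go to the higher band, a value exactly on a shared boundary (for example 1.5 pieces/min) is assumed to go to the lower band, and "efforts of 2, 3 or 4" is read here as the number of group members involved.

```python
from bisect import bisect_left

# Upper bounds of the ten percentage bands in Table 1 (0-10%, 11-20%, ..., 91-100%).
PERCENT_UPPER_BOUNDS = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
# Upper bounds of the 10-point-wide change bands used for Point 2.
CHANGE_UPPER_BOUNDS = [10, 20, 30, 40]
# Upper bounds of the pieces/min bands used for Point 5 (the last band is open-ended).
RATE_UPPER_BOUNDS = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
# Weights from the Point 4 examples; reading the key as "members involved" is an assumption.
CONVERGENCE_WEIGHT = {2: 0.50, 3: 0.75, 4: 1.00}


def percent_mark(pct: float) -> int:
    """Marks (1-10) for a percentage band, as used by Points 1, 3 and 4."""
    if not 0 <= pct <= 100:
        raise ValueError("percentage must lie between 0 and 100")
    return bisect_left(PERCENT_UPPER_BOUNDS, pct) + 1


def change_mark(ippag: float, ippaa: float) -> int:
    """Marks for Point 2: change in percentage points from alone to within group."""
    change = ippag - ippaa
    if change >= 0:
        # 0-10 improvement -> 6, 11-20 -> 7, ..., 41+ -> 10
        return 6 + bisect_left(CHANGE_UPPER_BOUNDS, change)
    # 1-10 decline -> 5, 11-20 -> 4, ..., 41+ -> 1
    return 5 - bisect_left(CHANGE_UPPER_BOUNDS, -change)


def convergence_mark(pct: float, members_involved: int) -> float:
    """Marks for Point 4: band mark scaled by the convergence weight."""
    return percent_mark(pct) * CONVERGENCE_WEIGHT[members_involved]


def rate_mark(gcr: float, icr: float) -> int:
    """Marks for Point 5: gain in pieces/min from individual to group rate."""
    gain = gcr - icr
    if gain < 0:
        # Table 1 lists no band for a negative gain, so the sketch refuses to guess.
        raise ValueError("Table 1 has no band for a negative rate difference")
    return bisect_left(RATE_UPPER_BOUNDS, gain) + 1
```

With these assumptions the sketch reproduces the worked examples: `percent_mark(45)` gives 5, `change_mark(45, 30)` gives 7, `percent_mark(55)` gives 6, `convergence_mark(45, 3)` gives 3.75, and `rate_mark(2.6, 1.4)` gives 3. How the five marks are combined into a final score is not stated in this section, so no total is computed.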

3. Methods and Procedures

3.1. Context and Participants

3.1.1. Ethical Approvals

3.1.2. Study Location and Duration

3.1.3. Participant Selection

3.2. Procedures

3.2.1. Pilot Phase

3.2.2. Main Study

3.2.3. Experimental Conditions

3.2.4. Pre-Experiment Preparations

3.2.5. Individual Puzzle Process

- Setup: Each student was assigned a desk labeled with their code and holding their puzzle.

- Instructions:

- Students start upon the researcher’s signal;

- Students raise their hand upon completion;

- Assembly stops at the 45-min mark.

- Data Collection:

- Time of completion and photo of the completed puzzle;

- Photo of the puzzle at the 45-min mark.

3.2.6. Group Puzzle Process

- Setup: Each group table was assigned a six-digit code sticker. Students received colored bags with 30 puzzle pieces each (red, blue, yellow and green).

- Instructions:

- Every student within the group is assigned a colored bag (red, blue, yellow, green) of 30 puzzle pieces;

- The researcher will visit every group to assign the colored bag to the student;

- A puzzle piece may be placed on the assembly paper only if it connects to a piece that is already there. If the attempt fails, the piece returns to the individual's side;

- The students cannot attempt connecting the puzzle pieces outside the white assembly paper;

- The students cannot give their pieces to another group member;

- The students can communicate with verbal or non-verbal patterns, but touching or grabbing the pieces that are assigned to other group members is not allowed;

- If there are groups of pieces that need to be connected with other groups of pieces, then all the group members have to coordinate and move them collectively to be connected;

- When a group finishes the assembly, the students should raise their hand.

- Data Collection:

- Group puzzle completion time;

- Puzzle completion percentage;

- Individual pieces used;

- Photos of puzzle connections.
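The data items listed above could be held in a small record from which the percentages and the completion rate are derived. The sketch below is hypothetical: the class and field names are invented for illustration, and the denominators are assumptions (the group percentage is taken over all pieces handed out, the individual percentage over the 30 pieces in a student's bag, and the rate over the minutes elapsed). The sample counts in the usage note are made up and are not study data.

```python
from dataclasses import dataclass

PIECES_PER_BAG = 30      # each colored bag holds 30 pieces (from the setup above)
TIME_LIMIT_MIN = 45.0    # the group puzzle time limit in minutes


@dataclass
class GroupSession:
    """One group's recorded data (hypothetical structure)."""
    group_code: str                # six-digit table code
    minutes_elapsed: float         # completion time, or 45 if time ran out
    pieces_used: dict[str, int]    # bag color -> pieces that student placed

    def __post_init__(self) -> None:
        if not 0 < self.minutes_elapsed <= TIME_LIMIT_MIN:
            raise ValueError("elapsed time must be within the 45-min limit")
        for color, count in self.pieces_used.items():
            if not 0 <= count <= PIECES_PER_BAG:
                raise ValueError(f"invalid piece count for {color}: {count}")

    def total_assembled(self) -> int:
        """Pieces placed on the assembly paper by all group members."""
        return sum(self.pieces_used.values())

    def completion_pct(self) -> float:
        """Assumed group completion percentage: placed pieces over all pieces handed out."""
        return 100 * self.total_assembled() / (PIECES_PER_BAG * len(self.pieces_used))

    def individual_pct(self, color: str) -> float:
        """Assumed individual percentage: the student's placed pieces over their bag of 30."""
        return 100 * self.pieces_used[color] / PIECES_PER_BAG

    def completion_rate(self) -> float:
        """Assumed group completion rate in pieces per minute."""
        return self.total_assembled() / self.minutes_elapsed
```

For example, `GroupSession("123456", 45, {"red": 20, "blue": 25, "yellow": 15, "green": 30})` yields a completion percentage of 75.0, a completion rate of 2.0 pieces/min, and an individual percentage of 100.0 for the green bag.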

3.2.7. Data Recording and Retest Phase

4. Results

5. Discussion

5.1. Stakeholders’ Perspectives

5.2. Limitations and Future Research

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Point 1 | Marks | Point 2 | Marks | Point 3 | Marks | Point 4 | Marks | Point 5 | Marks |
|---|---|---|---|---|---|---|---|---|---|
| 0–10% | 1 | 41%+ Decline | 1 | 0–10% | 1 | 0–10% | 1 | 0–0.5 pieces/min | 1 |
| 11–20% | 2 | 31–40% Decline | 2 | 11–20% | 2 | 11–20% | 2 | 0.51–1 pieces/min | 2 |
| 21–30% | 3 | 21–30% Decline | 3 | 21–30% | 3 | 21–30% | 3 | 1–1.5 pieces/min | 3 |
| 31–40% | 4 | 11–20% Decline | 4 | 31–40% | 4 | 31–40% | 4 | 1.5–2 pieces/min | 4 |
| 41–50% | 5 | 1–10% Decline | 5 | 41–50% | 5 | 41–50% | 5 | 2–2.5 pieces/min | 5 |
| 51–60% | 6 | 0–10% Improvement | 6 | 51–60% | 6 | 51–60% | 6 | 2.5–3 pieces/min | 6 |
| 61–70% | 7 | 11–20% Improvement | 7 | 61–70% | 7 | 61–70% | 7 | 3–3.5 pieces/min | 7 |
| 71–80% | 8 | 21–30% Improvement | 8 | 71–80% | 8 | 71–80% | 8 | 3.5–4 pieces/min | 8 |
| 81–90% | 9 | 31–40% Improvement | 9 | 81–90% | 9 | 81–90% | 9 | 4–4.5 pieces/min | 9 |
| 91–100% | 10 | 41%+ Improvement | 10 | 91–100% | 10 | 91–100% | 10 | 4.5+ pieces/min | 10 |

| Settings/Instruments | Test Phase | Retest Phase |
|---|---|---|
| Number of Participants | 148 | 148 |
| Gender Breakdown | 76 Boys/72 Girls | 76 Boys/72 Girls |
| Age (years) | 11–12 | 11–12 |
| Groupings | Mixed-gender groups of 4 | Mixed-gender groups of 4 |
| Total Number of Groups | 37 | 37 |
| Educational Level | 6th Grade Elementary | 6th Grade Elementary |
| Individual Puzzle Time Limit | 45 min | 45 min |
| Group Puzzle Time Limit | 45 min | 45 min |
| Individual Puzzle | 120-Piece Puzzle (Age 6+) | 120-Piece Puzzle (Age 6+) |
| Group Puzzle | 120-Piece Puzzle (Age 6+) | 120-Piece Puzzle (Age 6+) |

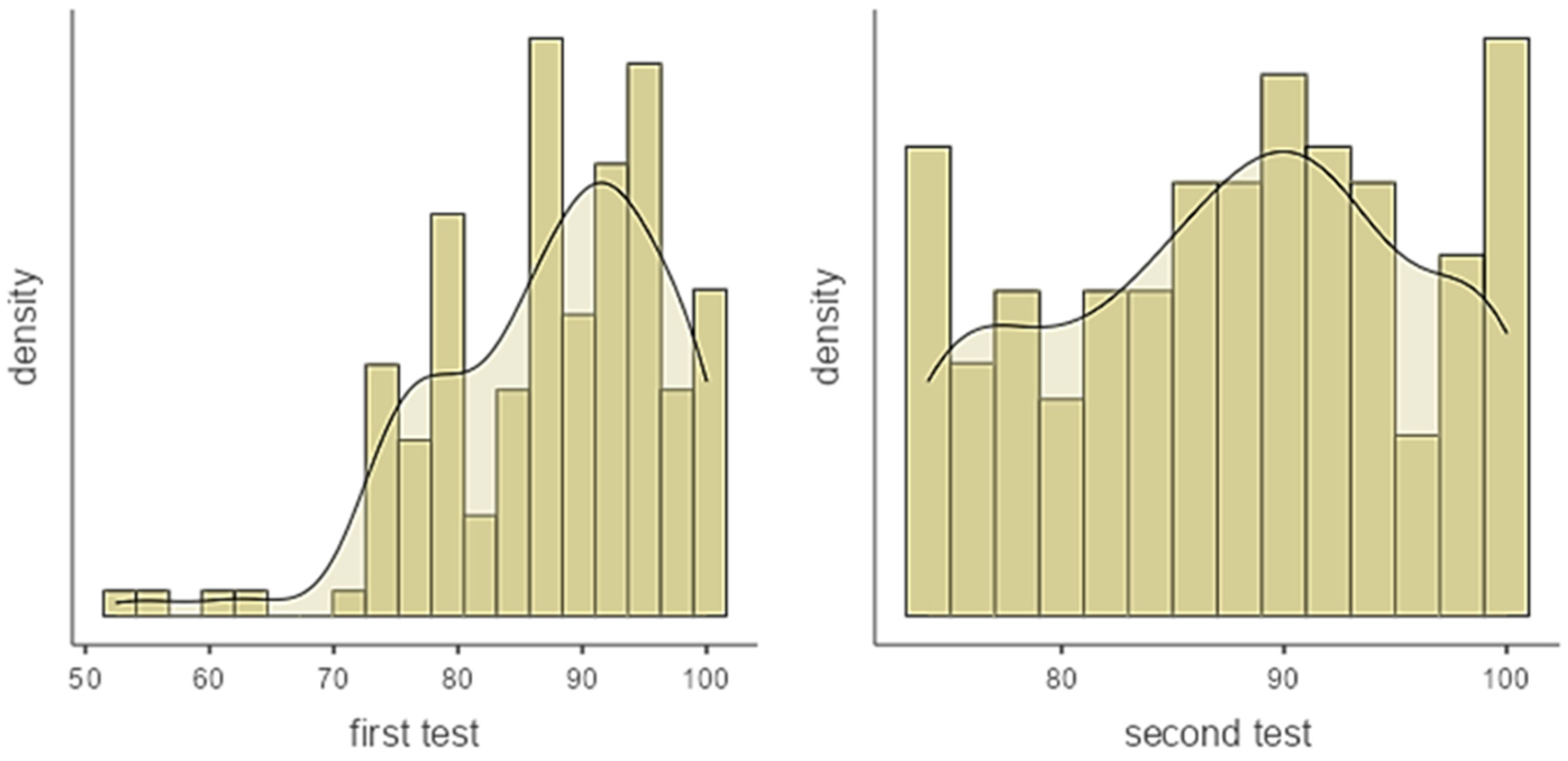

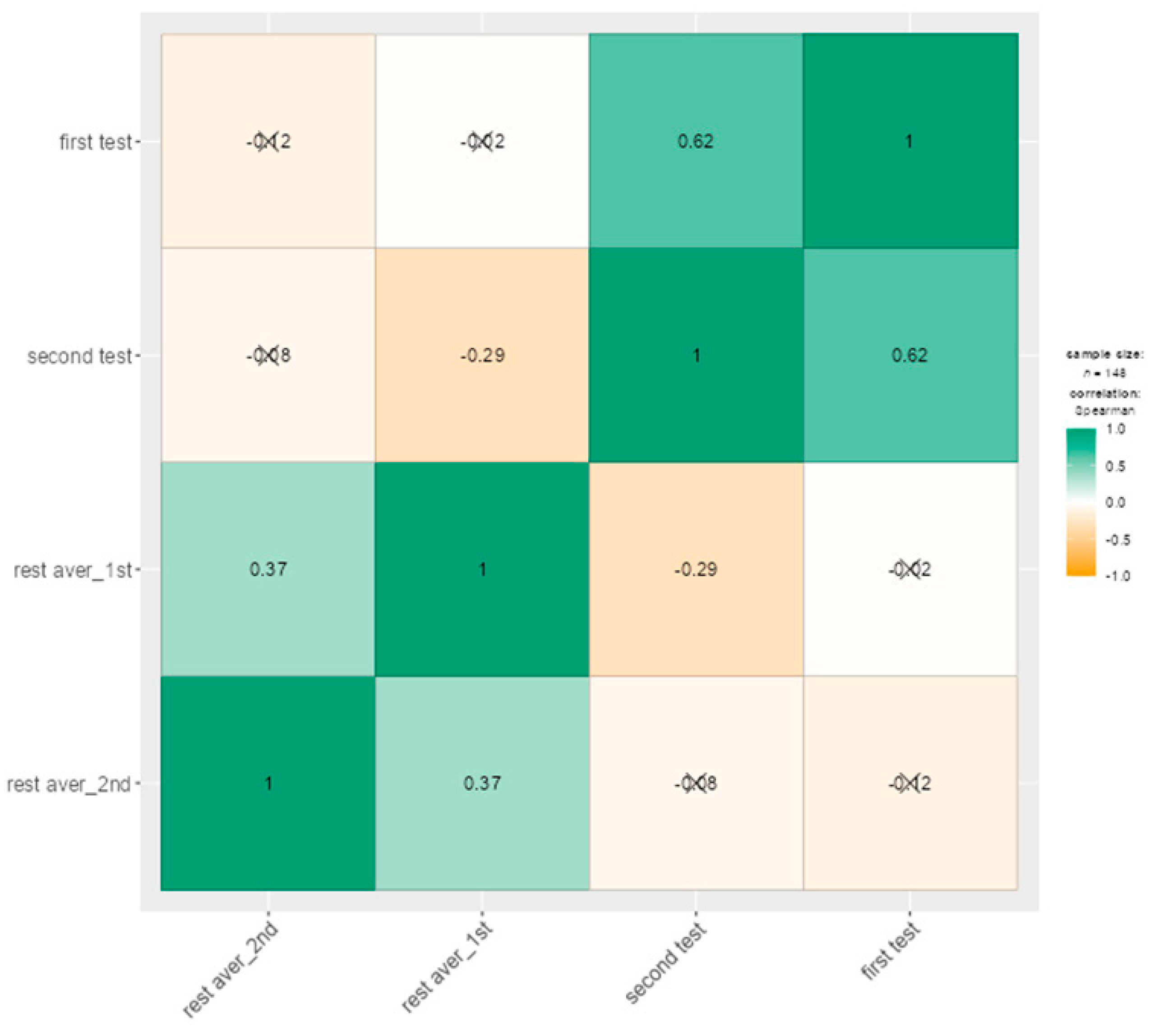

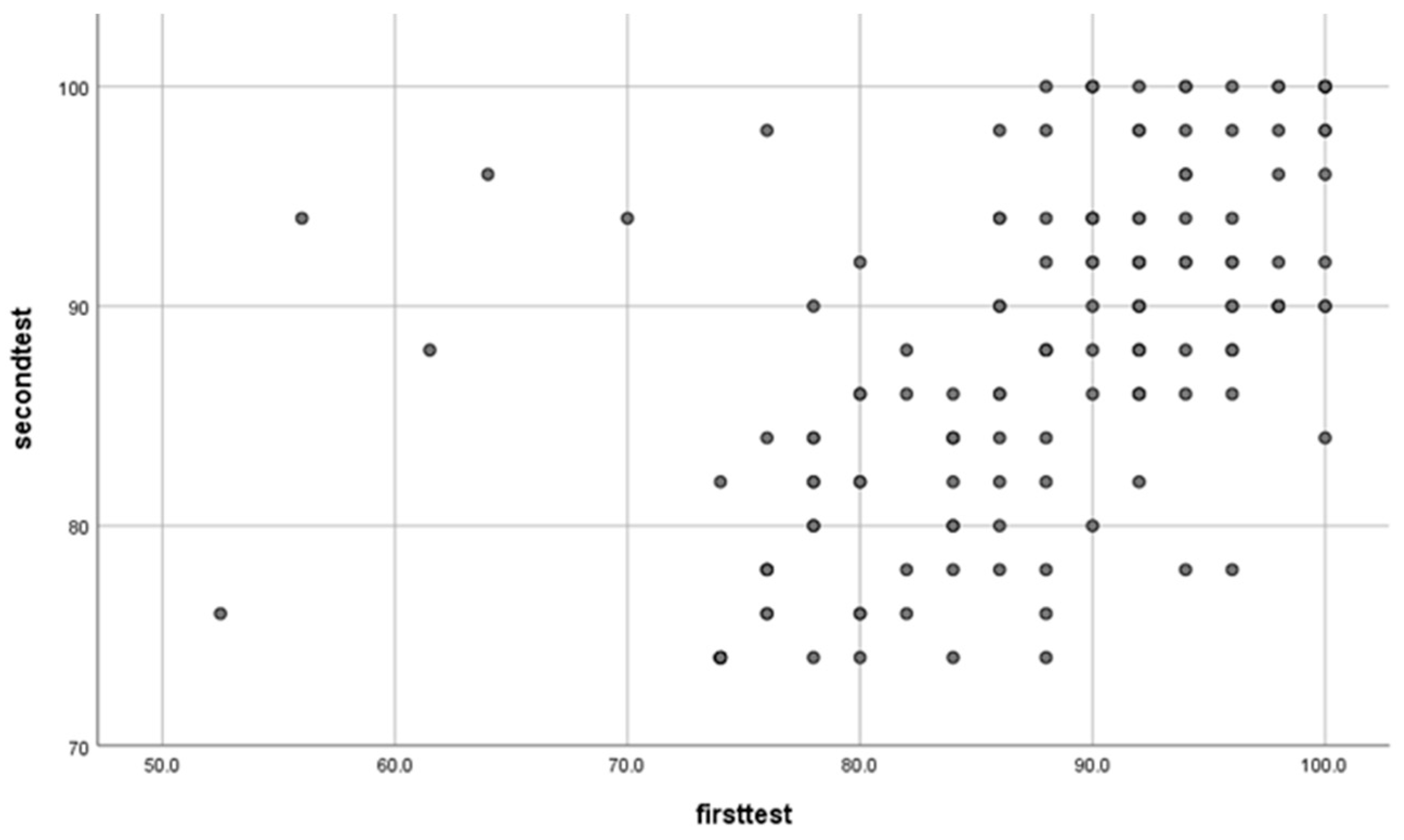

| | First Test | Second Test |
|---|---|---|
| N | 148 | 148 |
| Missing | 0 | 0 |
| Mean | 87.40 | 87.80 |
| Median | 89.00 | 88.00 |
| Standard deviation | 9.28 | 8.11 |
| Minimum | 52.50 | 74.00 |
| Maximum | 100.00 | 100.00 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Demetroulis, E.A.; Papadogiannis, I.; Wallace, M.; Poulopoulos, V.; Theodoropoulos, A.; Vasilopoulos, N.; Antoniou, A.; Dasakli, F. Collaboration Skills and Puzzles: Development of a Performance-Based Assessment—Results from 12 Primary Schools in Greece. Educ. Sci. 2024, 14, 1056. https://doi.org/10.3390/educsci14101056