Abstract

Geographic information system (GIS) education empowers engineering students to make informed decisions, integrate comprehensive data, and communicate effectively through maps and visualizations. In GIS education, it is common to employ problem-based learning, which can benefit from the advantages of peer assessment methods. Among the benefits of peer assessment are the enhancement of students’ capacity for analysis and synthesis, improvement in organizational and professional communication skills, and the development of critical judgement. However, a consequence of its application is that there may be variation in students’ final grades, with no consensus in the literature on this matter. This paper explores the extent to which the application of peer ratings among students can modify student grades in the field of GIS education. This was achieved by conducting an experiment in which undergraduate engineering students assessed two problem-based learning activities carried out by their peers in two different basic GIS courses. The ratings obtained after the peer assessment were compared with the grades given by the instructors. The results allowed us to debate whether the teaching benefits of this strategy compensate for the differences between the students’ grades and those given by instructors. Although no clear pattern was found in the mean ratings awarded by the two groups of evaluators, the results show that student engagement in peer assessment was high. This experience has demonstrated that the assessments of the two groups complement each other and allow students to gain a better understanding of their ratings and how to improve their skills.

1. Introduction

Geography, as an academic discipline within the spatial sciences, is devoted to the examination of the reciprocal influences between the environment and human societies. Geography investigates dimensions such as scale, movement, regions, human–environment interaction, location, and place. Essentially, geography functions as a comprehensive domain that addresses the interplay of environmental, social, and economic aspects [1]. For this reason, it becomes a fundamental discipline for any engineer who must solve problems related to territory and people during professional activities. The assimilation of geographical concepts by students is enhanced through the integration of computer tools based on geographic information systems (GISs) [2]. A GIS can be defined as a computer system used for the input, storage, transformation, visualization, mapping, and analysis of spatial and non-spatial data, which must necessarily have coordinates that position them at a location on the Earth [3]. Due to its ability to analyze spatial data and the associated quantitative and qualitative information and layer structure, the study of geographic information is based on the superimposition of layers in order to establish relationships between the information they contain [4,5]. These characteristics make GISs a tool that integrates a multitude of disciplines, and it is essential in territorial planning processes [6], environmental impact assessments [7], transport modeling and its effects [8], urban mobility [9], allocation of uses [10], and landscape [11], among others.

Processing, classifying, and mapping data using GISs, i.e., GIS knowledge and skills, spatial thinking, and problem-solving, are key competencies for technically trained bachelor’s and master’s students [12]. For this reason, engineering-related bachelor’s and master’s curricula incorporate GISs, data processing, and mapping as important training competencies for students. The acquisition of these competencies will enable students to solve a wide range of problems related to land management [6], such as those mentioned above. The use of GISs also motivates students to develop relevant skills as they recognize the contributions of spatial analysis and geographical perspectives in their training [13]. Ref. [14] found that learning GISs helped students improve their spatial thinking, which in turn strongly correlated with their performance in the GIS course. Today, GISs are taught in departments such as geodesy, geography, photogrammetry, ecology, natural resources, forestry, civil engineering, landscaping, and urban design and planning [15].

In summary, the utilization of geographic data and spatial analysis has become indispensable in the domain of engineering pertaining to territory and the environment. Notably, GISs have established themselves in recent years as a foundational tool for engineers in this field. Consequently, GIS education holds paramount significance in engineering and natural sciences as it imparts students with essential skills in spatial analysis, data integration, visualization, resource management, and infrastructure planning. It facilitates informed decision-making, comprehensive data integration, and effective communication through maps and visualizations. In this work, we have designed and implemented a peer assessment method that reinforces the acquisition of skills in communication with maps and geodatabase creation. The students, employing critical analysis of their peers’ work, quantitatively assess whether their peers have acquired such skills. The objective of this experiment is to evaluate the extent to which the application of peer ratings among students can modify student grades in the field of teaching GISs. The results of the peer assessment are compared with the grades given by the instructors, which allows us to discuss whether the teaching benefits of this strategy compensate for the differences in the grades given by the students and those given by the instructors, who have much more experience and knowledge.

The experience was conducted in the realm of teaching Information and Communication Technologies (ICTs) in engineering, specifically in GIS instruction. This domain offers various alternatives for problem-solving, fostering the development of a critical mindset and a deeper comprehension of the activity and its content. In particular, we examined differences in two assessable problem-based activities conducted in two undergraduate programs, considering the students’ grade levels. The subsequent sub-sections of this introduction describe the fundamental aspects of teaching GISs and peer student assessment. Section 2 describes the methods employed in both the implementation of peer review and the analysis, and Section 3 presents the results. Lastly, the main findings are discussed and conclusions are drawn in Section 4.

1.1. Teaching of Geographic Information Systems

The teaching of GISs has a markedly practical nature. The instructor guides the students by explaining the different tools available and their application to specific cases, which must then be resolved by the students. Teaching GISs is complex; instructors and students often struggle with technical obstacles, file management, and complex software operations [16]. This can lead to what [17] calls “buttonology”, i.e., students focusing on learning how to point and click with the mouse to complete certain functions rather than engaging in the intended reasoning. The course in which the subject is taught can be a barrier, as students must have knowledge of other subjects to enable them to use the power of GISs to solve problems. This difficulty can be solved by incorporating different GIS subjects throughout the bachelor’s or master’s degree, starting with basic levels and increasing in difficulty to show more specific applications. The instructor must also ensure that students do not simply copy what the instructor has done but are able to understand the complexity of the spatial relationships between the data and identify the appropriate tools to solve each problem. The main barrier to achieving this is the limited number of hours available for teaching these subjects [18], which require a very high practical use of software. One solution is to provide students with practical cases to solve outside the classroom that have a positive impact on their final grade.

1.2. New Rating Techniques: Student Peer Assessment

To guarantee that students take an active role in the teaching–learning process, the learning environment must be interactive and cooperative. The instructor is responsible for designing a methodology to optimize learning, in accordance with the established objectives. The teaching methodology consists of a set of methods and techniques used by the teaching staff to undertake training actions and transmit knowledge and competencies designed to achieve certain objectives, in which the instructor teaches the student to learn and to learn with a critical spirit throughout life [19,20]. The teaching–learning model requires aligning assessment methods and systems with competencies [21]. The rating or assessment system must therefore be useful both for students to learn and for instructors to improve their teaching [22,23]. Competence-oriented assessment involves four fundamental aspects [23]: (1) it must be a planned process that is coherent with the competencies to be achieved, which are in turn aligned with the professional activity; (2) it must specify the level of achievement or performance of competencies that is considered adequate; (3) it must be coherent with students’ active learning; and (4) it must be formative and continuous.

The assessment of ratings is a key aspect of any learning process [24]. It conditions what and how students learn and is the most useful tool instructors have to influence how students respond to the teaching and learning process [24,25,26]. The assessment process is based on collecting information by different means (written, oral, or observation), analyzing that information, making a judgement on it, and then reaching decisions based on that judgement. It is an action that continues throughout the teaching–learning process. Its functions are formative, regulatory, pedagogical, and communicative. Its communicative function is fundamental, because it contributes to the feedback of information between students and instructors and among the students themselves, i.e., it facilitates interaction and cooperation.

Some authors consider that traditional assessment methods have become obsolete as they promote passivity and should be replaced by others that encourage dynamic learning [27]. In this context, it is increasingly common for instructors to incorporate new methods for assessing courses, despite relinquishing their dominant role in a competence that was traditionally their exclusive domain [28]. Students’ participation in the assessment method gives them the necessary skills to objectively analyze other types of documents [29].

These new assessment methods include self-assessment, in which students assess their own work; peer assessment, where students assess their peers; and co-assessment, in which both students and instructors score [30]. Peer assessment consists of “a process by which students evaluate their classmates in a reciprocal manner, applying assessment criteria” [31], or can be considered “a specific form of collaborative learning in which learners make an assessment of the learning process or product of all or some students or group of students” [32]. Peer assessment is therefore perfectly adapted to the framework of the European Higher Education Area, where assessment has become another activity within the teaching–learning process and contributes to the development of competencies [33]. Its implementation is very open: it can be anonymous or not, students can be assessors and/or assessed, and it can be carried out quantitatively or qualitatively and with or without feedback [34].

1.3. Current Practice in Peer Rating Assessment

Effective peer assessment requires the use of instruments to guide the students. One of the most common assessment tools is rubrics [35]. Rubrics are scoring guides that describe the specific characteristics of a task at various levels of performance in order to assess its execution [36]. The rubric is similar to a list of specific and essential criteria against which knowledge and/or competencies are assessed [37] in order to establish a gradation of the different criteria or elements that make up the task, competence, or content. To be helpful to students, it is essential for the instructor to offer clear instructions, provide a list of aspects to consider when determining the overall grade, and use a numerical scoring system for assessment [35]. This rating method is particularly suitable for courses with predominantly practical content and which rely on active student participation. Peer assessment has been applied in many disciplines such as computer applications [38,39], developmental psychology, music didactics and the psychology of language and thought [30], physical chemistry [40], environmental education [33], linear algebra [41], and online training [42], to name a few examples. While engaged in this evaluation process, students increase their capacity for analysis and synthesis, their organizational and professional communication skills, and the development of critical judgement [43,44,45], which they will later transfer to their own work [46]. Studies suggest that this enhancement in critical judgement correlates with improved writing skills. Additionally, the more critical students were of their peers, the higher grades they achieved [43]. It can enhance students’ performance [47], engagement with the course [48], and the quality of work they present [45]. Ref. [49] reported that the integration of peer assessment enabled over 60% of students to become more aware and reflective and learn from their mistakes. 
This method allows students to receive more diverse feedback compared to feedback solely from the instructor. Its application in evaluating work carried out by students in a team also yields benefits. Ref. [50] concluded that the more abrupt the decrease in peer assessments, the more pronounced the rate of change in effort. Ref. [51] showed that including peer assessment in students’ assignments improves their perception of fairness in the rating process, as it is not solely in the hands of instructors. Other studies state that students find peer review useful [52,53] and they hold a consistent standard when giving scores to peers [54].

By receiving peer ratings, students also encountered distributive and procedural justice [55]; following the implementation of peer assessment, complaints from students about fairness in grading decreased, and their overall opinion about the course improved [30]. Instructors also obtain benefits from the application of peer review, in the form of greater student motivation and learning [40] and an increase in the competencies achieved with little cost in time and effort for instructors as the students carry out the task [30]. In addition, no significant differences were found between the ratings assigned by the instructors and those assigned by peers [55]. For all of these reasons, peer rating assessment is a useful and accurate tool that should be used as another learning aid [28], provided that the students feel comfortable and engaged in the learning process [56] and that it is designed and implemented in a thoughtful way to ensure its effectiveness [57].

However, it should be noted that the weaknesses of this assessment method may raise some doubts about its reliability and validity and cause some educators to have little confidence in its use [58]. These weaknesses may include the requirement for prior training, the time commitment by the student, and the choice of explicit, clear, and simple criteria [59]. Previous experience has shown that the application of these assessment methods poses several problems, mainly related to the objectivity of the grades assigned [29]. Some authors highlight students’ possible misgivings about being assessed by their peers due to bias in the rating [41]; the influence of personal relationships on the grades assigned [53], which often causes them to be high [60]; the time at which the evaluation is carried out [35], being lower if the evaluator has already made the presentation of his/her work [61]; the fact that some students consider that their peers do not have the skills required to evaluate them [62]; or that they themselves do not consider themselves to be experts [57]. The authors also argue that in the assessment of teamwork, individuals contribute to the effort to enhance others’ perceptions and, consequently, impact the ratings [63]. This all leads some authors to consider the technique to be inadequate, as the resulting scores differ greatly from those assigned by the instructor, who is kinder to the students [29] or the reverse [64]; however, other studies show the opposite, with no major differences between the two assessment sources [41,65]. Student participation in the assessment process must therefore be supervised [29] and blind in order to reduce pressure and lack of objectivity [34].

In short, peer assessment is a learning-oriented assessment strategy that helps students gain a better understanding of the activity and its content, develop critical thinking and argumentation, be more self-critical, and detect strengths and weaknesses. It may involve awarding marks other than those given by the instructor. Despite this, there are many areas in which this evaluation methodology is not widely applied, such as, to the best of our knowledge, in the field of geography and GISs. As a result, there is a lack of knowledge regarding generalizations across disciplines on how peer assessment works. We postulate that the results, benefits, and weaknesses of this methodology may be mostly context-specific, so experiences in specific disciplines are of major importance for effective deployment and for the seeking of common features in case they exist.

2. Method

In order to address our objective and evaluate the extent to which the application of peer ratings among students can modify student grades in the field of teaching GISs, a two-phase methodology is followed in this study. The first is the implementation of the peer assessment and the second is the comparison of the grades obtained by the students without peer assessment with those obtained with peer assessment. This comparison allows us to discuss if the possible differences in the grades given by the students and those given by the instructors are compensated by the teaching benefits of this strategy reported in the literature. The two phases are described after presenting the course and the activities involved in this work.

2.1. Course Presentation and Activities

The experience was carried out in two introductory GIS courses taught in the forestry engineering (FE) bachelor’s degree (second year) and the environmental technologies engineering (ETE) bachelor’s degree (third year), both taught at the Universidad Politécnica de Madrid and with a teaching load of three credits. They include the fundamentals of GISs and basic vector and raster analysis tools and applications. The learning goals of the course are divided into six groups: (i) understanding GIS core concepts, (ii) technical proficiency, (iii) data acquisition and integration, (iv) problem-solving and analysis, (v) communication, and (vi) critical thinking and evaluation. The students enrolled in these courses have no prior experience with GISs whatsoever. The preferred form of assessment is continuous, and the assessable problem-based activities include the following exercises, which account for 20% of the final mark:

Activity 1. Designing a map (A1). The objective of this problem-based activity is for students to effectively communicate results through maps and visualizations. The specific learning goal, within the fifth group, is to present findings and analyses using maps. The students must produce a map of a Spanish province from a series of vector files provided by the instructors. Students work on the symbology of the map, the legend, scales, the grid of coordinates, labels, the composition of the different elements on the page, etc. The instructors provide the students with the initial data, a statement with the objectives of the exercise, and the correction rubric to resolve the exercise.

Activity 2. Editing vector data (A2). The objective of this problem-based activity is to generate a comprehensive geodatabase that integrates environmental and geographic information. The specific learning goals, within the third group, are to produce/acquire diverse spatial data types, evaluate data quality, and perform data cleaning and preparation for analysis. The students must produce two vector layers by editing their entities from an orthophoto. The students are required to create layers representing the land uses of an area of 4 km². The instructors provide the students with the initial orthophoto, a statement with the objectives of the exercise, and the correction rubric to resolve the exercise.

By implementing peer evaluation, both activities also contribute to the sixth learning goals group, specifically to evaluate and critique GIS methodologies and approaches.

2.2. Implementation of Student Peer Evaluation

A blind peer assessment is carried out, in which each student receives the exercises completed by other students without knowing who they are. The student rates the exercises he/she has been given in a quantitative way.

The peer assessment methodology consists of several stages. In the first stage, the instructors created the specific assignment included in the Moodle Workshop 3.11 application [66]. It combines tasks for instructors and students, and the transition from one to the other can be controlled by the teaching staff. This process has five phases: configuration, submission, evaluation, grading of evaluations, and closed. In the configuration phase, instructors provide instructions for the submission, and the grading and rubric used are explained. The system randomly assigns the submissions to be assessed by each student in the submission phase. In the evaluation phase, the instructor grades the students’ exercises submitted, and each student evaluates the work assigned to him/her. Finally, the grades are automatically calculated. It is possible to award two separate grades: (i) for the student’s work, derived from the other students’ evaluations, and (ii) for the coincidence of the evaluations made by the student with the evaluations of the same work by the rest of the students.

At the same time, the case is presented in class, the students are guided in its resolution, and the evaluation process is explained to them. They have 14 days to complete the exercise and send it without any identifying data.

Once the exercise has been completed and the deadline has expired, in the next stage, the instructors randomly give each student the exercises completed by three other students. All students taking the subject were recruited and participation was voluntary. The students then grade each exercise quantitatively according to the rubric provided by the instructors and submit the rating given to each of the three cases to the instructors. The students have 7 days to complete the assessment.

Finally, the instructors review the ratings, compare them with the ones they themselves have given, and assign the final grade.
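The random allocation of three submissions to each student can be sketched as follows. This is only an illustration under stated assumptions, not a description of how Moodle Workshop allocates submissions internally: the function name `allocate_reviews`, the circular-shift scheme, and the `seed` parameter are all hypothetical.

```python
import random


def allocate_reviews(student_ids, reviews_per_student=3, seed=None):
    """Return {reviewer: [authors whose submissions the reviewer grades]}.

    Hypothetical scheme: shuffle the students, then let each one review the
    next `reviews_per_student` students in the shuffled circular order.
    Nobody reviews their own work, and every submission receives exactly
    `reviews_per_student` reviews.
    """
    ids = list(student_ids)
    if len(set(ids)) != len(ids):
        raise ValueError("student ids must be unique")
    if len(ids) <= reviews_per_student:
        raise ValueError("need more students than reviews per student")
    rng = random.Random(seed)
    rng.shuffle(ids)
    n = len(ids)
    return {
        ids[i]: [ids[(i + k) % n] for k in range(1, reviews_per_student + 1)]
        for i in range(n)
    }
```

Because submissions carry no identifying data, only the instructors would hold the mapping returned by such a function.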

2.2.1. Indications for the Evaluation of the Activities: Rubrics

In order for all of the students to assess each other’s work against the same criteria, a rubric is provided describing the assessment process applied by the instructors. The content of the rubric is as follows:

Activity 1 (A1)

From the starting mark, 10, one point will be deducted for each of the following errors, which are acknowledged as a partial failure in achieving the learning goal related to presenting findings and analyses using maps:

- Lack of visual balance between the different elements in the map.

- A map element is missing.

- Lack of visibility of a map layer.

- The hierarchy in the legend is not properly established.

- The coordinate grid has intervals not properly established.

- Intervals in a legend item are not properly set.

- The graphical scale is not properly customized.

- Misspelling in the legend, titles, or labels.

- Difficulty in the legibility of a map element.

An incorrect scale will result in a failing grade.

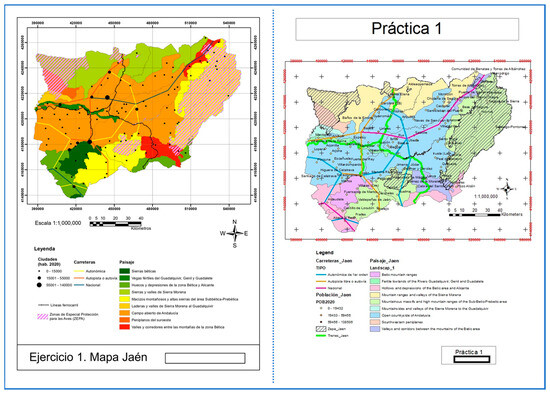

The errors detected should be indicated in a constructive way and briefly described. It is also necessary to highlight the positive points of the map. The student evaluator should focus on the work and make objective judgements. An example is shown in Figure 1, where in the right panel, the following errors are visible (in parentheses, the corresponding numbers in the rubric): linear elements (roads) are not visually balanced to convey the information (1), the margins of the map are lacking (2), items in the legend are not properly arranged and some item titles are missing, so the hierarchy is lost (e.g., the train line is within the population of cities item) (4), the intervals in the population of cities are the default, not set to meaningful ones (6), the titles and descriptions in the legend are the default ones, so they are in two different languages, without proper labels and with underscores between words in some cases (8), and the coordinate grid labels are in different colors and above the box of the geographic data (9).

Figure 1.

Examples of students’ exercises in A1 where they scored ten points (left) and four points (right).
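The A1 rubric amounts to a simple deduction rule, which the following sketch makes explicit. It assumes that each error type is counted at most once and that a submission passes when its score is above 5 (the threshold used in the Results). The rubric does not state which numeric mark an incorrect scale receives, so the sketch only flags the submission as failed and leaves the score untouched. The identifiers are hypothetical.

```python
# Error types in the order they appear in the A1 rubric (numbers 1 to 9).
A1_ERRORS = (
    "visual_balance",        # 1
    "missing_element",       # 2
    "layer_visibility",      # 3
    "legend_hierarchy",      # 4
    "grid_intervals",        # 5
    "legend_intervals",      # 6
    "graphical_scale",       # 7
    "misspelling",           # 8
    "legibility",            # 9
)

PASS_THRESHOLD = 5  # assumption: "rating > 5" counts as a pass


def score_a1(errors, incorrect_scale=False):
    """Return (score, passed) for an A1 map.

    One point is deducted from 10 per distinct error type (assumption:
    repeated occurrences of the same type are not penalized twice).
    """
    found = set(errors)
    unknown = found - set(A1_ERRORS)
    if unknown:
        raise ValueError(f"unknown error types: {sorted(unknown)}")
    score = 10 - len(found)
    passed = (not incorrect_scale) and score > PASS_THRESHOLD
    return score, passed


# Right-hand map in Figure 1: rubric errors 1, 2, 4, 6, 8, and 9.
figure1_right = [A1_ERRORS[i - 1] for i in (1, 2, 4, 6, 8, 9)]
print(score_a1(figure1_right))  # (4, False)
```

The six errors listed for the right-hand map reproduce the four points reported in the Figure 1 caption.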

Activity 2 (A2)

The starting mark is 10, and points are deducted for each error made in completing the exercise according to the following:

- The land use data are not a single layer, i.e., there are several layers for the different categories: buildings, arboretum, paths, etc. (six points).

- There are gaps and/or overlaps between the land use layer polygons (one error, one point; two to five errors, two points; six to ten errors, three points; eleven to fifteen errors, four points; sixteen to twenty errors, five points; more than twenty errors, six points).

- Poor digitizing accuracy, according to the measured difference in meters when zooming in on the screen, between the digitized feature and the orthophoto image (three to five meters, two points; five to ten meters, four points; more than ten meters, five points).

- Lack of categories or information in the table (identification of ten to eight categories, one point; seven to four categories, two points; fewer than four categories, three points; for every five features without data in the attribute table, one point, up to three).

- The limits of the study area are not properly digitized (one point).

- Lack of connectivity in areas of the road layer (two points).

- More than ten missing features (one point).

- The existence of other complementary layers (e.g., a point layer) (one point added).

The errors detected should be indicated in a constructive way and briefly described. It is also necessary to highlight the positive points of the map.

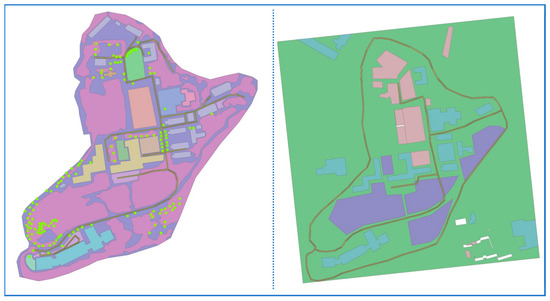

The student evaluator should focus on the work and make objective judgements. In Figure 2 an example is shown, presenting the following errors in the right panel (in parentheses, the corresponding numbers in the rubric): four gaps, two points (2); just four categories are identified, two points (4); the limits are not properly set, one point (5); missing features, one point (compared to the left panel) (7). By digitizing several layers, this activity is linked to the learning goal of producing diverse spatial data types. Also, from the list of the rubric, errors 1, 3, 4, 5, and 7 are linked to the learning goal devoted to evaluating data quality, and errors 2 and 6 (and 8, although not an error in itself) are linked to data cleaning and preparation for analyses.

Figure 2.

Examples of students’ exercises in A2 where they scored ten points (left) and four points (right).
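The tiered deductions of the A2 rubric can be written down as a small scoring function. This is a sketch under several assumptions that the rubric leaves open: offsets below three meters and more than ten categories carry no penalty, an offset of exactly five or ten meters falls in the lower tier, and the result is clamped to the 0 to 10 range. All function and parameter names are hypothetical.

```python
def gap_overlap_penalty(n_errors):
    """Rubric item 2: gaps and/or overlaps between land use polygons."""
    for upper, points in ((0, 0), (1, 1), (5, 2), (10, 3), (15, 4), (20, 5)):
        if n_errors <= upper:
            return points
    return 6


def accuracy_penalty(offset_m):
    """Rubric item 3: offset in meters between feature and orthophoto."""
    if offset_m < 3:       # assumption: below 3 m is not penalized
        return 0
    if offset_m <= 5:
        return 2
    if offset_m <= 10:
        return 4
    return 5


def table_penalty(n_categories, features_without_data):
    """Rubric item 4: missing categories or attribute data."""
    if n_categories > 10:  # assumption: more than ten categories is complete
        categories = 0
    elif n_categories >= 8:
        categories = 1
    elif n_categories >= 4:
        categories = 2
    else:
        categories = 3
    return categories + min(features_without_data // 5, 3)


def score_a2(*, single_layer, gap_overlap_errors, max_offset_m, n_categories,
             features_without_data, limits_ok, roads_connected,
             missing_features, has_extra_layers):
    """Return the A2 mark: 10 minus deductions, plus one bonus point."""
    penalty = 0 if single_layer else 6                         # item 1
    penalty += gap_overlap_penalty(gap_overlap_errors)         # item 2
    penalty += accuracy_penalty(max_offset_m)                  # item 3
    penalty += table_penalty(n_categories, features_without_data)  # item 4
    penalty += 0 if limits_ok else 1                           # item 5
    penalty += 0 if roads_connected else 2                     # item 6
    penalty += 1 if missing_features > 10 else 0               # item 7
    bonus = 1 if has_extra_layers else 0                       # item 8
    return max(0, min(10, 10 - penalty + bonus))  # clamping is an assumption


# Right-hand panel of Figure 2; the offset and the count of missing
# features are illustrative values, not taken from the paper.
figure2_right = score_a2(
    single_layer=True, gap_overlap_errors=4, max_offset_m=0.0,
    n_categories=4, features_without_data=0, limits_ok=False,
    roads_connected=True, missing_features=11, has_extra_layers=False,
)
print(figure2_right)  # 4
```

With the four errors described for the right-hand panel, the deductions add up to six points, which matches the four points reported in the Figure 2 caption.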

2.2.2. Final Rating

As indicated above, the instructors compare the marks assigned by peers with the marks they themselves awarded and assign the final mark according to the criteria described below. The final mark will have values between 0 and 10. These criteria are known by the students before they assess each other’s work and are intended to involve students in a positive way in the peer assessment process.

The criteria are as follows:

- The rating assigned to each student is the average of the three grades given by the students who assess their work.

- If the instructor’s rating coincides with the student’s rating, that rating will be assigned.

- If the instructor’s rating is higher than the student’s rating, the average of the two ratings is assigned as the grade.

- If the instructor’s rating is lower than the student’s rating, the average of the two ratings is assigned as the grade.

- If there is a significant discrepancy between the two grades, the instructor’s rating is assigned.

- Any student whose ratings of the other students’ work fall within ±1.0 points of the instructor’s ratings will obtain one extra point in his/her final grade.

The discrepancy between instructors’ and students’ ratings is used as an indicator of the level of achievement of the learning goal that involves the evaluation and critique of GIS methods by students.
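The grading criteria listed above can be summarized in a short sketch. The size of a "significant discrepancy" is not specified, so it is left as a required parameter (`discrepancy_threshold`) and the value 2.0 in the tests of this sketch would be purely hypothetical. The sketch also assumes that the extra point requires all of an evaluator's ratings to fall within the tolerance and that the final mark is capped at 10.

```python
from statistics import mean


def final_grade(instructor_rating, peer_grades, discrepancy_threshold):
    """Combine the instructor's rating with the mean of the peer grades.

    Equal ratings and moderately different ratings both resolve to the
    average of the two; a discrepancy above the (unspecified, hypothetical)
    threshold falls back to the instructor's rating.
    """
    peer_rating = mean(peer_grades)
    if abs(instructor_rating - peer_rating) > discrepancy_threshold:
        return instructor_rating
    return (instructor_rating + peer_rating) / 2


def evaluator_bonus(own_ratings, instructor_ratings, tolerance=1.0):
    """Return 1.0 if every rating is within `tolerance` of the instructor's.

    Assumption: the condition must hold for all of the works assessed.
    """
    agrees = all(
        abs(own - ref) <= tolerance
        for own, ref in zip(own_ratings, instructor_ratings)
    )
    return 1.0 if agrees else 0.0


def with_bonus(grade, bonus):
    """Add the bonus while keeping the final mark between 0 and 10."""
    return max(0.0, min(10.0, grade + bonus))
```

Note that the second, third, and fourth criteria collapse into a single averaging rule, since the average of two equal ratings is that same rating.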

2.3. Statistical Analysis

The analysis phase of the results was carried out using statistical tests to compare means and differences between the instructors’ and students’ ratings. These analyses took into consideration both groups of students, corresponding to two different degree programs, and the level of performance obtained in the two exercises (A1 and A2). The analyses were based on paired sample data, as each individual exercise was evaluated twice (by instructors and by students), so Student’s t-tests for paired samples were used. In cases where the differences between the instructors’ and students’ ratings for each individual exercise were analyzed, instead of the actual rating values themselves, Kruskal–Wallis tests with chi-squared distribution were used. A confidence level of 95% (significance level of 0.05) was applied in all cases.
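As an illustration of the paired comparison, the sketch below computes the paired t statistic from the per-exercise differences using only the Python standard library. The p-value uses a normal approximation to the t distribution, which is an assumption that is reasonable only for samples of roughly the size analyzed here; a statistics package would give the exact value. The Kruskal–Wallis test is not covered, and the function names and sample data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

ALPHA = 0.05  # significance level corresponding to the 95% level used here


def paired_t(instructor, students):
    """Return (t, degrees_of_freedom) for paired ratings of the same works."""
    if len(instructor) != len(students):
        raise ValueError("both raters must score the same exercises")
    diffs = [a - b for a, b in zip(instructor, students)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two paired ratings are required")
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical")
    return mean(diffs) / (sd / sqrt(n)), n - 1


def approx_two_sided_p(t):
    """Two-sided p-value from a normal approximation (large-sample only)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


# Made-up ratings for five exercises, for illustration only.
t_stat, dof = paired_t([7, 8, 6, 9, 5], [6, 8, 7, 7, 5])
```

A difference in means would be reported as significant when the p-value falls below `ALPHA`.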

3. Results

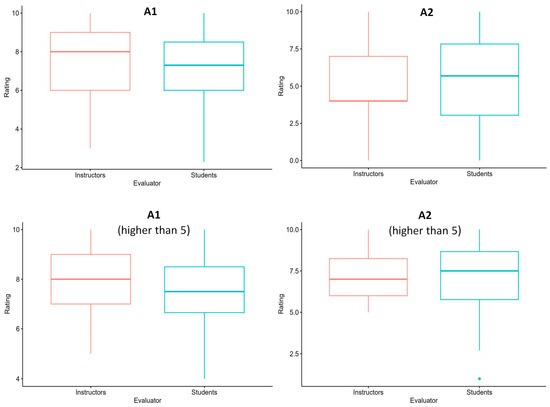

A total of 116 individual exercises from A1 and 114 from A2 were evaluated (Table 1). Of these, 35% were evaluated by women and 65% by men. In A1, the average rating given by instructors was 7.36 out of 10, and the average rating given by students was 7.20 out of 10. In contrast, the ratings were lower for A2, with averages of 4.65 and 5.15, respectively (Figure 3, top). The variability in ratings was lower in A1, with standard deviations of 1.83 for instructor ratings and 1.60 for student ratings. In A2, the standard deviations were 2.96 and 3.06, respectively. Similarly, the range of rating values in A1 was greater for the instructor ratings, while in A2 it was greater for student ratings. The p-values from the t-tests, assuming a confidence level of 95%, indicated that the mean ratings given by instructors and students did not differ significantly in A1 but did differ in A2.

Table 1.

Summary of results.

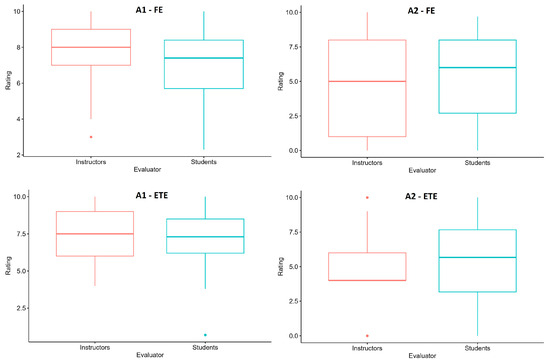

Figure 3.

Distribution of ratings by instructors and students in activities A1 and A2. The upper box plots correspond to submissions by all of the students, while the lower box plots correspond to submissions given a rating > 5 by the instructors.

Focusing on the ratings of students who passed the exercises (final rating > 5) (Table 1, Figure 3, bottom), the first notable point is that the pass rate was markedly higher in A1 (89.6%, n = 104) than in A2 (48.2%, n = 55). In the case of A1, the mean ratings given by instructors (7.75) and students (7.49) differed significantly, whereas in A2 they did not (instructors 7.13; students 7.05), and both A2 means were higher than when all of the students were considered.

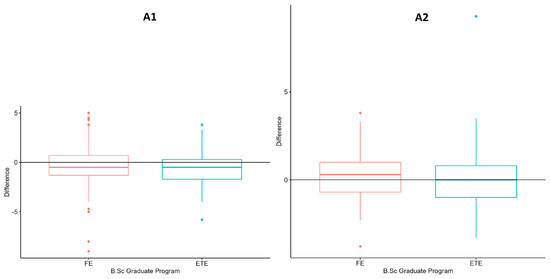

When comparing the results of the two groups for the two different graduate programs (Table 1, Figure 4), it can be seen that in A1, the mean differences are positive in the FE group and nearly zero in the ETE group, while both are slightly negative in A2 (the instructors rate below the students). In this case, the p-values from the Kruskal–Wallis tests indicate that the medians of the differences do not differ significantly between the groups in either A1 (FE 0.32, ETE 0.04) or A2 (FE −0.44, ETE −0.55). It can therefore be assumed that the effect of the graduate program is not notable, and the differences between instructor and student ratings do not generally exceed 0.6 points (out of 10).

Figure 4.

Distribution of the difference between instructor and student ratings (y-axis) in both activities A1 and A2. Box plots differentiated by the B.Sc. graduate programs studied are shown (x-axis).
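The comparison of rating differences between the two programs can be illustrated with a Kruskal–Wallis test on two groups. The following is a minimal sketch under stated assumptions: the function names and the example differences are hypothetical, and the p-value uses the chi-squared approximation with one degree of freedom, which applies only to the two-group case and is approximate for small samples.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda idx: values[idx])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # i and j are 0-based positions
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(group_a, group_b):
    """Return (H, p) for two groups, with the standard tie correction."""
    pooled = list(group_a) + list(group_b)
    n = len(pooled)
    ranks = average_ranks(pooled)
    sum_a = sum(ranks[:len(group_a)])
    sum_b = sum(ranks[len(group_a):])
    h = (12 / (n * (n + 1))
         * (sum_a ** 2 / len(group_a) + sum_b ** 2 / len(group_b))
         - 3 * (n + 1))
    # Tie correction: divide H by 1 - sum(t^3 - t) / (n^3 - n).
    counts = {}
    for value in pooled:
        counts[value] = counts.get(value, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all values are identical; H is undefined")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding
    # Chi-squared survival function for 1 degree of freedom.
    p = math.erfc(math.sqrt(h / 2))
    return h, p


# Hypothetical instructor-minus-student differences per program.
fe_diffs = [0.5, 0.3, -0.2, 0.8, 0.1, 0.4]
ete_diffs = [0.0, -0.1, 0.2, 0.1, -0.3, 0.05]
h_value, p_value = kruskal_wallis_two_groups(fe_diffs, ete_diffs)
print(f"H = {h_value:.3f}, p = {p_value:.3f}")
```

A p-value above 0.05 would be read, as in the text, as no significant difference between the programs in how far instructor and student ratings diverge.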

Although the group does not generally appear to be a differentiating factor, the analyses by exercise and graduate program (Table 1, Figure 5) yielded contradictory results (Student’s t-tests). For the FE group, the instructors’ and students’ means differed significantly in A1 (p-value 0.001) but not in A2 (p-value 0.32). Conversely, for the ETE group, the means did not differ significantly in A1 (p-value 0.85) but did in A2 (p-value 0.017). When using data from the entire group (FE + ETE), the variability in ratings was much higher in A2 compared to A1. In cases where equal means can be assumed, the standard deviations were 1.54 for instructors and 1.80 for students in A1 and 3.14 for instructors and 3.38 for students in A2. The heterogeneity in performance at the program level does not therefore appear to be a decisive factor in the similarity or dissimilarity of instructor and student ratings.

Figure 5.

Distribution of instructors’ and students’ ratings in activities A1 and A2. The upper box plots correspond to submissions by students in the FE B.Sc. program, while the lower box plots correspond to submissions by students in the ETE B.Sc. program.

4. Discussion and Conclusions

GISs have emerged in the last few decades as a vital tool in engineering focused on territory and environmental applications. GIS education facilitates informed decision-making, data integration, and effective communication through maps. All of the concepts related to the realm of geography are encompassed in the aspects that GISs enhance in geography training [2,67]: geographic inquiry-based learning, visual–spatial comprehension power, the definition of spatial relationships, and the development of spatial thinking skills.

This study introduces a peer assessment method that enhances communication skills in map creation and geodatabase development, with associated benefits in analytical capacity, organizational skills, and critical judgement [43,44,45]. Given that there is no consensus in the literature on this matter [29,41,55,64,65], our main objective was to assess the extent to which the application of peer assessment can modify students’ grades in the teaching environment of ICT in engineering, specifically in the teaching of GISs. We have focused on the results of two graded problem-based activities given to second- and third-year students of Forestry Engineering and Environmental Technologies, comparing the ratings given by the students with those assigned by the instructors. We believe that the evaluation presented in this article is a valuable contribution to the knowledge of the effects of participatory evaluation activities on teaching.

New learning techniques promote dynamic learning as opposed to traditional methods [27], and many instructors have incorporated new methods for this purpose. However, their implementation implies a less central role for the instructor [28], and it is therefore essential to be aware of both its positive and negative implications. Many authors argue that student participation in the assessment method allows them to acquire the necessary skills to analyze documents objectively [29] and develop critical judgement [30]. We verified that the students demonstrated this ability, as they all correctly interpreted the rubric provided and the entire evaluation process. We also noted that the students found the experience motivating, as participation reached 85%, which supports the finding that the implementation of peer assessment improves students’ overall opinion of the course [48]. However, these methods can cause problems with the reliability of the rating assigned [29]. To avoid or at least reduce this, this study was conducted blind and under supervision, as recommended by some authors [34], and with clear and simple criteria published at the beginning of the process [35].

The results comprise the scores of 116 and 114 submissions for two basic GIS and mapping exercises of differing difficulty. Exercise 1, on creating layouts, is less technically complex than exercise 2, on editing vector entities. The second exercise requires data management skills and the use of drawing tools that can be laborious to use. This could explain why the marks awarded in all cases are lower in exercise 2. The standard deviation is also higher in the distributions of marks in exercise 2 given by both instructors and students. Given that students make more errors in exercise 2, one would expect their ratings to differ from those of the instructors. However, the test results show that the means of the instructors’ marks are not significantly different from those awarded by the students in all cases, coinciding with [65]. We explored the marks given by the instructors and students in the two exercises, taking into account only passing scores and differentiating the degree courses in which the subject is framed. The instructors’ evaluation differs significantly from the students’ in half of the analyses in exercise 1 and also in half of the analyses in exercise 2 (Table 1). Nor did we find any significant results indicating that the marks given by the two groups differed in terms of the course they were studying (FE or ETE).

It was expected that as the students had access to rubrics and correction criteria that they themselves had to apply, they would be able to solve the exercises and obtain a better mark than in previous years when the rubric was available but when they did not have to use it to assess someone else. However, the average marks are similar to the average for previous years, with a maximum difference of ±0.5 points with respect to the average mark from 2011 to 2022. It is true that the experience is limited to one academic year and would need to be extended over more years to obtain more robust conclusions in this regard.

Although no clear pattern was found in the results, the experience was positive overall. As far as we are aware, there are no publications in the literature that use this type of collaborative assessment methodology in GIS learning. Given that the mapping exercises allow for a great diversity of solutions, their correction by means of peer assessment provides students with the chance to observe work other than their own, and also to learn from the assessment process, which is implemented as another training activity within the course. The results show that student involvement in the peer assessment process was high. In fact, 95% of the students who submitted the exercises also carried out the peer assessment. In general, there is little difference between the assessments of instructors and peers (Figure 2), indicating a high degree of involvement by the students, who undertook the assessment activity responsibly and in a critical and constructive spirit. Further evidence of this is that 27% of the students received an extra point for their good performance as markers. The experience has shown that the evaluation of the two groups is complementary and that although we do not believe that peer marking can replace marking by instructors, it does allow students to better understand their marks and how to improve them. We lecturers have noticed that the number of requests to review marks has decreased substantially, which opens up new lines of work.

Some authors consider that one of the benefits of peer review is that it reduces the instructors’ workload [30]. This benefit was not identified, as the instructors are also involved in the review process. It actually involved more work, since it was necessary to create the environment for submitting and correcting the exercises by both peers and instructors and for awarding incentives. Although the workload is greater, there are tools to make it easier, and they are increasingly available to the educational community (virtual classrooms such as Moodle [66]). The literature reports that student learning improves [40,43,53,68] when student peer assessment is implemented. We assessed whether its application can modify students’ grades; our focus was not on quantifying the benefits of these assessment techniques in terms of the increase in competencies in the analysis of documents, the development of critical judgement, and involvement in their learning. One possible line of work would be to carry out surveys before and after the activity in order to know the students’ opinions.

This study is intended to address the doubts about the application of peer assessment in the grading of students in the field of GISs. However, it has a number of limitations that could be addressed in future research. On the one hand, it is limited to a specific academic year (2022–2023); a longitudinal analysis of several years would provide more information. On the other hand, as has been said, the statistical results do not directly address the improvement in student learning engagement. It would be desirable to add student interviews or surveys in the future. However, the information we provide is sufficient to confirm that this type of evaluation is beneficial and will therefore be implemented in successive years.

Assessment is a fundamental aspect of the learning process as it conditions how students learn [24]. The new assessment methods favor the students’ incorporation into the process and increase their involvement and motivation. This situation reduces the relevance of the instructor and can lead to conflicts in the grading of students [29]. This work was unable to dispel the doubts about the possible replacement of instructor ratings by peer ratings. Further work is needed to confirm students’ improvement in the management of transversal competencies and encourage their widespread implementation, at least in the sphere of university engineering schools, where their application is limited to isolated experiences in certain subjects.

Author Contributions

Conceptualization, E.O. and B.M.; methodology, E.O., B.M. and S.G.-Á.; software, S.G.-Á.; validation, E.O., B.M. and S.G.-Á.; formal analysis, B.M.; investigation, E.O., B.M. and S.G.-Á.; data curation, E.O., B.M. and S.G.-Á.; writing—original draft preparation, E.O., B.M. and S.G.-Á.; writing—review and editing, E.O. and B.M.; visualization, B.M.; supervision, E.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Universidad Politécnica de Madrid in the framework of the project “Herramientas Cartográficas para el Desarrollo Urbano y Regional Sostenible” (RP220430C029).

Institutional Review Board Statement

Ethical review and approval were not requested for this study because it did not possess the characteristics that require an ethical assessment, as outlined by the Research Ethics Committee in the Humanities and Social and Behavioural Sciences of the University of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Acknowledgments

The authors would like to thank their students for their enthusiastic participation in the experience.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kerski, J.J. The role of GIS in Digital Earth education. Int. J. Digit. Earth 2008, 1, 326–346.

2. Artvinli, E. The Contribution of Geographic Information Systems (GIS) to Geography Education and Secondary School Students’ Attitudes Related to GIS. Educ. Sci. Theory Pract. 2010, 10, 1277–1292.

3. Burrough, P.A. Principles of Geographic Information Systems for Land Resources Assessment; Clarendon: Hong Kong, China, 1986.

4. Heywood, I.; Cornelius, S.; Carver, S. An Introduction to Geographical Information Systems; Prentice Hall: Hoboken, NJ, USA, 2002.

5. Longley, P.A.; Goodchild, M.F.; Maguire, D.J.; Rhind, D.W. Geographical Information Systems: Principles, Techniques, Applications, and Management; Wiley and Sons: Hoboken, NJ, USA, 1999.

6. Sikder, I.U. Knowledge-based spatial decision support systems: An assessment of environmental adaptability of crops. Expert Syst. Appl. 2009, 36, 5341–5347.

7. Rodriguez-Bachiller, A.; Glasson, J. Expert Systems and GIS for Impact Assessment; Taylor and Francis: Abingdon, UK, 2004.

8. Monzón, A.; López, E.; Ortega, E. Has HSR improved territorial cohesion in Spain? An accessibility analysis of the first 25 years: 1990–2015. Eur. Plan. Stud. 2019, 27, 513–532.

9. Ortega, E.; Martín, B.; De Isidro, Á.; Cuevas-Wizner, R. Street walking quality of the ‘Centro’ district, Madrid. J. Maps 2020, 16, 184–194.

10. Santé-Riveira, I.; Crecente-Maseda, R.; Miranda-Barrós, D. GIS-based planning support system for rural landuse allocation. Comput. Electron. Agric. 2008, 63, 257–273.

11. Martín, B.; Ortega, E.; Martino, P.; Otero, I. Inferring landscape change from differences in landscape character between the current and a reference situation. Ecol. Indic. 2018, 90, 584–593.

12. Schulze, W.; Kanwischer, D.; Reudenbach, C. Essential competences for GIS learning in higher education: A synthesis of international curricular documents in the GIS&T domain. J. Geogr. High. Educ. 2013, 37, 257–275.

13. Mkhongi, F.A.; Musakwa, W. Perspectives of GIS education in high schools: An evaluation of uMgungundlovu district, KwaZulu-Natal, South Africa. Educ. Sci. 2020, 10, 131.

14. Lee, J.; Bednarz, R. Effect of GIS Learning on Spatial Thinking. J. Geogr. High. Educ. 2009, 33, 183–198.

15. Demirci, A.; Kocaman, S. Türkiye’de coğrafya mezunlarının CBS ile ilgili alanlarda istihdam edilebilme durumlarının değerlendirilmesi [Evaluation of the employability of geography graduates in GIS-related fields in Turkey]. Marmara Coğraf. Derg. 2007, 16, 65–92.

16. Radinsky, J.; Hospelhorn, E.; Melendez, J.W.; Riel, J.; Washington, S. Teaching American migrations with GIS census web maps: A modified “backwards design” approach in middle-school and college classrooms. J. Soc. Stud. Res. 2014, 38, 143–158.

17. Marsh, M.J.; Golledge, R.G.; Battersby, S.E. Geospatial concept understanding and recognition in G6–College Students: A preliminary argument for minimal GIS. Ann. Assoc. Am. Geogr. 2009, 97, 696–712.

18. Johansson, T. GIS in Instructor Education – Facilitating GIS Applications in Secondary School Geography. In Proceedings of the ScanGIS’2003, The 9th Scandinavian Research Conference on Geographical Information Science, Espoo, Finland, 4–6 June 2003.

19. Fernández-March, A. Metodologías activas para la formación de competencias [Active methodologies for skills training]. Educ. Siglo XXI 2006, 24, 35–56.

20. Rodríguez-Jaume, M.J. Espacio Europeo de Educación Superior y Metodologías Docentes Activas: Dossier de Trabajo [European Higher Education Area and Active Teaching Methodologies: Work Dossier]; Universidad de Alicante: Alicante, Spain, 2009.

21. Castejón, F.J.; Santos, M.L. Percepciones y dificultades en el empleo de metodologías participativas y evaluación formativa en el Grado de Ciencias de la Actividad Física [Perceptions and difficulties in the use of participatory methodologies and formative assessment in the Degree of Physical Activity Sciences]. Rev. Electrón. Interuniv. Form. Profr. 2011, 14, 117–126.

22. Antón, M.A. Docencia Universitaria: Concepciones y Evaluación de los Aprendizajes. Estudio de Casos [Conceptions and Assessment of Learning. Case Study]. Ph.D. Thesis, Universidad de Burgos, Burgos, Spain, 2012.

23. San Martín Gutiérrez, S.; Torres, N.J.; Sánchez-Beato, E.J. La evaluación del alumnado universitario en el Espacio Europeo de Educación Superior [The assessment of university students in the European Higher Education Area]. Aula Abierta 2016, 44, 7–14.

24. Double, K.S.; McGrane, J.A.; Hopfenbeck, T.N. The impact of peer assessment on academic performance: A meta-analysis of control group studies. Educ. Psychol. Rev. 2020, 32, 481–509.

25. Brown, S.; Pickford, R. Evaluación de Habilidades y Competencias en Educación Superior [Assessment of Skills and Competences in Higher Education]; Narcea: Asturias, Spain, 2013.

26. Panadero, E.; Alqassab, M. An empirical review of anonymity effects in peer assessment, peer feedback, peer review, peer evaluation and peer grading. Assess. Eval. High. Educ. 2019, 44, 1253–1278.

27. Zmuda, A. Springing into active learning. Educ. Leadersh. 2008, 66, 38–42.

28. Rodríguez-Esteban, M.A.; Frechilla-Alonso, M.A.; Sáez-Pérez, M.P. Implementación de la evaluación por pares como herramienta de aprendizaje en grupos numerosos. Experiencia docente entre universidades [Implementation of peer assessment as a learning tool in large groups. Inter-university teaching experience]. Adv. Build. Educ. 2018, 2, 66–82.

29. Blanco, C.; Sánchez, P. Aplicando Evaluación por Pares: Análisis y Comparativa de distintas Técnicas [Applying Peer Evaluation: Analysis and Comparison of different Techniques]. In Proceedings of the Actas Simposio-Taller Jenui 2012, Ciudad Real, Spain, 1–8 July 2012.

30. Bernabé Valero, G.; Blasco Magraner, S. Actas de XI Jornadas de Redes de Investigación en Docencia Universitaria: Retos de Futuro en la Enseñanza Superior: Docencia e Investigación para Alcanzar la Excelencia Académica; Universidad de Alicante: Alicante, Spain, 2013; pp. 2057–2069.

31. Sanmartí, N. 10 Ideas Clave: Evaluar para Aprender [Key Ideas: Evaluate to Learn]; Graó: Castellón, Spain, 2007.

32. Ibarra, M.; Rodríguez, G.; Gómez, R. La evaluación entre iguales: Beneficios y estrategias para su práctica en la universidad [Peer evaluation: Benefits and strategies for its practice at the university]. Rev. Educ. 2012, 359, 206–231.

33. Bautista-Cerro, M.J.; Murga-Menoyo, M.A. La evaluación por pares: Una técnica para el desarrollo de competencias cívicas (autonomía y responsabilidad) en contextos formativos no presenciales. Estudio de caso [Peer evaluation: A technique for the development of civic competencies (autonomy and responsibility) in non-face-to-face training contexts. Case study]. In XII Congreso Internacional de Teoría de la Educación (CITE2011) [XII International Congress of Educational Theory (CITE2011)]; Universitat de Barcelona: Barcelona, Spain, 2011.

34. Arruabarrena, R.; Sánchez, A.; Blanco, J.M.; Vadillo, J.A.; Usandizaga, I. Integration of good practices of active methodologies with the reuse of student-generated content. Int. J. Educ. Technol. High. Educ. 2019, 16, 10.

35. Luaces, O.; Díez, J.; Bahamonde, A. A peer assessment method to provide feedback, consistent grading and reduce students’ burden in massive teaching settings. Comput. Educ. 2018, 126, 283–295.

36. Andrade, H. Teaching with rubrics. Coll. Teach. 2005, 53, 27–31.

37. Purchase, H.; Hamer, J. Peer-review in practice: Eight years of Aropä. Assess. Eval. High. Educ. 2018, 43, 1146–1165.

38. Chang, C.C.; Tseng, K.H.; Lou, S.J. A comparative analysis of the consistency and difference among instructor assessment, student self-assessment and peer-assessment in a web-based portfolio assessment environment for high school students. Comput. Educ. 2012, 58, 303–320.

39. Jaime, A.; Blanco, J.M.; Domínguez, C.; Sánchez, A.; Heras, J.; Usandizaga, I. Spiral and project-based learning with peer assessment in a computer science project management course. J. Sci. Educ. Technol. 2016, 25, 439–449.

40. Monllor-Satoca, D.; Guillén, E.; Lana-Villarreal, T.; Bonete, P.; Gómez, R. La evaluación por pares (“peer review”) como método de enseñanza aprendizaje de la Química Física [Peer review as a teaching-learning method of Physical Chemistry]. In Jornadas de Redes de Investigación en Docencia Universitaria X. Alicante [Conference on Research Networks in University Teaching X. Alicante]; Tortosa, M.T., Álvarez, J.D., Pellín, N., Eds.; Editorial Universitat Politècnica de València: Valencia, Spain, 2012.

41. Delgado, J.; Medina, N.; Becerra, M. La evaluación por pares. Una alternativa de evaluación entre estudiantes universitarios [Peer evaluation. An alternative evaluation among university students]. Rehuso Rev. Cienc. Humaníst. Soc. 2020, 5, 14–26.

42. Loureiro, P.; Gomes, M.J. Online peer assessment for learning: Findings from higher education students. Educ. Sci. 2023, 13, 253.

43. Yalch, M.M.; Vitale, E.M.; Fordand, J.K. Benefits of Peer Review on Students’ Writing. Psychol. Learn. Teach. 2019, 18, 317–325.

44. Aston, K.J. ‘Why is this hard, to have critical thinking?’ Exploring the factors affecting critical thinking with international higher education students. Act. Learn. High. Educ. 2023.

45. Väyrynen, K.; Lutovac, S.; Kaasila, R. Reflection on peer reviewing as a pedagogical tool in higher education. Act. Learn. High. Educ. 2023, 24, 291–303.

46. Boud, D.; Cohen, R.; Sampson, J. Peer Learning and Assessment. Assess. Eval. High. Educ. 1999, 24, 413–426.

47. Li, H.; Xiong, Y.; Hunter, C.V.; Guo, X.; Tywoniw, R. Does peer assessment promote student learning? A meta-analysis. Assess. Eval. High. Educ. 2020, 45, 193–211.

48. Shishavan, H.B.; Jalili, M. Responding to student feedback: Individualising teamwork scores based on peer assessment. Int. J. Educ. Res. Open 2020, 1, 100019.

49. Gómez, M.; Quesada, V. Coevaluación o Evaluación Compartida en el Contexto Universitario: La Percepción del Alumnado de Primer Curso [Co-evaluation or Shared Evaluation in the University Context: The Perception of First Year Students]. Rev. Iberoam. Eval. Educ. 2017, 10, 9–30.

50. Román-Calderón, J.P.; Robledo-Ardila, C.; Velez-Calle, A. Global virtual teams in education: Do peer assessments motivate student effort? Stud. Educ. Eval. 2021, 70, 101021.

51. Ion, G.; Díaz-Vicario, A.; Mercader, C. Making steps towards improved fairness in group work assessment: The role of students’ self- and peer-assessment. Act. Learn. High. Educ. 2023.

52. Joh, J.; Plakans, L. Peer assessment in EFL teacher preparation: A longitudinal study of student perception. Lang. Teach. Res. 2021.

53. Rød, J.K.; Nubdal, M. Double-blind multiple peer reviews to change students’ reading behaviour and help them develop their writing skills. J. Geogr. High. Educ. 2022, 46, 284–303.

54. Chang, C.C.; Tseng, J.S. Student rating consistency in online peer assessment from the perspectives of individual and class. Stud. Educ. Eval. 2023, 79, 101306.

55. Vander Schee, B.A.; Stovall, T.; Andrews, D. Using cross-course peer grading with content expertise, anonymity, and perceived justice. Act. Learn. High. Educ. 2022.

56. Rotsaert, T.; Panadero, E.; Schellens, T. Anonymity as an instructional scaffold in peer assessment: Its effects on peer feedback quality and evolution in students’ perceptions about peer assessment skills. Eur. J. Psychol. Educ. 2018, 33, 75–99.

57. Wanner, T.; Palmer, E. Formative self-and peer assessment for improved student learning: The crucial factors of design, instructor participation and feedback. Assess. Eval. High. Educ. 2018, 43, 1032–1047.

58. Agrawal, A.; Rajapakse, D.C. Perceptions and practice of peer assessments: An empirical investigation. Int. J. Educ. Manag. 2018, 32, 975–989.

59. Marín García, J.A. Los alumnos y los profesores como evaluadores. Aplicación a la calificación de presentaciones orales [Students and teachers as evaluators. Application to the qualification of oral presentations]. Rev. Esp. Pedagog. 2009, 242, 79–98.

60. Panadero, E.; Romero, M.; Strijbos, J.W. The impact of a rubric and friendship on peer assessment: Effects on construct validity, performance, and perceptions of fairness and comfort. Stud. Educ. Eval. 2013, 39, 195–203.

61. McMillan, A.; Solanelles, P.; Rogers, B. Bias in student evaluations: Are my peers out to get me? Stud. Educ. Eval. 2021, 70, 101032.

62. Barriopedro, M.; López, C.; Gómez, M.; Rivero, A. La coevaluación como estrategia para mejorar la dinámica del trabajo en grupo: Una experiencia en Ciencias del Deporte [Co-evaluation as a strategy to improve group work dynamics: An experience in Sports Sciences]. Rev. Complut. Educ. 2016, 27, 571–584.

63. Tavoletti, E.; Stephens, R.D.; Dong, L. The impact of peer evaluation on team effort, productivity, motivation and performance in global virtual teams. Team Perform. Manag. Int. J. 2019, 25, 334–347.

64. Raposo, M.; Martínez, M. Evaluación educativa utilizando rúbrica: Un desafío para docentes y estudiantes universitarios [Educational evaluation using rubric: A challenge for teachers and university students]. Educ. Educ. 2014, 17, 499–513.

65. Conde, M.; Sanchez-Gonzalez, L.; Matellan-Olivera, V.; Rodriguez-Lera, F.J. Application of Peer Review Techniques in Engineering Education. Int. J. Eng. Educ. 2017, 33, 918–926.

66. GATE UPM. Sección Telenseñanza [Telelearning Section]. In Manual de Moodle 3.11 para el Profesor [Moodle 3.11 Teacher's Manual]; Universidad Politécnica de Madrid: Madrid, Spain, 2022.

67. Biebrach, T. What Impact Has GIS Had on Geographical Education in Secondary Schools? 2007. Available online: www.geography.org.uk/download/GA_PRSSBiebrach.doc (accessed on 28 December 2023).

68. Serrano-Aguilera, J.J.; Tocino, A.; Fortes, S.; Martín, C.; Mercadé-Melé, P.; Moreno-Sáez, R.; Muñoz, A.; Palomo-Hierro, S.; Torres, A. Using Peer Review for Student Performance Enhancement: Experiences in a Multidisciplinary Higher Education Setting. Educ. Sci. 2021, 11, 71.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).