A Current Overview of the Use of Learning Analytics Dashboards

Abstract

1. Introduction

2. Methods

2.1. Research Questions

2.2. Identify the Relevant Studies

- That contained “learning analytics” and “systematic literature review” or “systematic review” in the title.

- That contained “dashboard*” in the text.

- That were published in English.

- That were published between 2019 and April 2023.

- That did not treat “learning analytics” as a single subject, or that treated its two words as separate subjects, that is, “learning” and “analytics”.
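The screening criteria listed above can be expressed as a simple filter over bibliographic records. The following Python sketch is illustrative only and does not reproduce the authors' actual database queries. The `Record` type, its fields, and the `meets_criteria` function are hypothetical. The sketch assumes that the publication window runs from 1 January 2019 to 30 April 2023 inclusive, that the “dashboard*” wildcard matches any word beginning with “dashboard”, and that the last criterion works as an exclusion rule, implemented here by requiring “learning analytics” as one contiguous phrase in the title.

```python
import re
from dataclasses import dataclass
from datetime import date


# Hypothetical bibliographic record; field names are illustrative only.
@dataclass
class Record:
    title: str
    full_text: str
    language: str
    published: date


# Assumed publication window: 1 January 2019 to 30 April 2023, inclusive.
WINDOW_START = date(2019, 1, 1)
WINDOW_END = date(2023, 4, 30)

REVIEW_TERMS = ("systematic literature review", "systematic review")
# "dashboard*" wildcard: any word that starts with "dashboard".
DASHBOARD = re.compile(r"\bdashboard\w*", re.IGNORECASE)


def meets_criteria(record: Record) -> bool:
    """Return True if a record satisfies all of the screening criteria."""
    title = record.title.lower()
    # "learning analytics" must appear as one contiguous phrase, so titles that
    # mention "learning" and "analytics" only separately are rejected.
    if "learning analytics" not in title:
        return False
    if not any(term in title for term in REVIEW_TERMS):
        return False
    if not DASHBOARD.search(record.full_text):
        return False
    if record.language.strip().lower() != "english":
        return False
    return WINDOW_START <= record.published <= WINDOW_END


# Invented example records, used only to show the filter in action.
candidates = [
    Record("Learning analytics dashboards: A systematic review",
           "We examine learner-facing dashboards.", "English", date(2021, 6, 1)),
    Record("Analytics for learning: A systematic review",
           "A dashboard is described.", "English", date(2021, 6, 1)),
]
selected = [r.title for r in candidates if meets_criteria(r)]
print(selected)  # ['Learning analytics dashboards: A systematic review']
```

The second invented record is rejected because its title never uses “learning analytics” as a single phrase, which mirrors the intent of the final criterion.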

2.3. Study Selection

2.4. Data Charting

3. Reporting the Results

3.1. Target Users

3.2. Visualization Elements of Dashboards

3.3. Theoretical Framework Used in the Design of Dashboards

3.4. Visualization Representations of Target Outcomes

3.5. Ethics of LA

3.6. Lacking Institutional Strategies

3.7. LAD Effectiveness

4. Trends Based on the Research Reviews

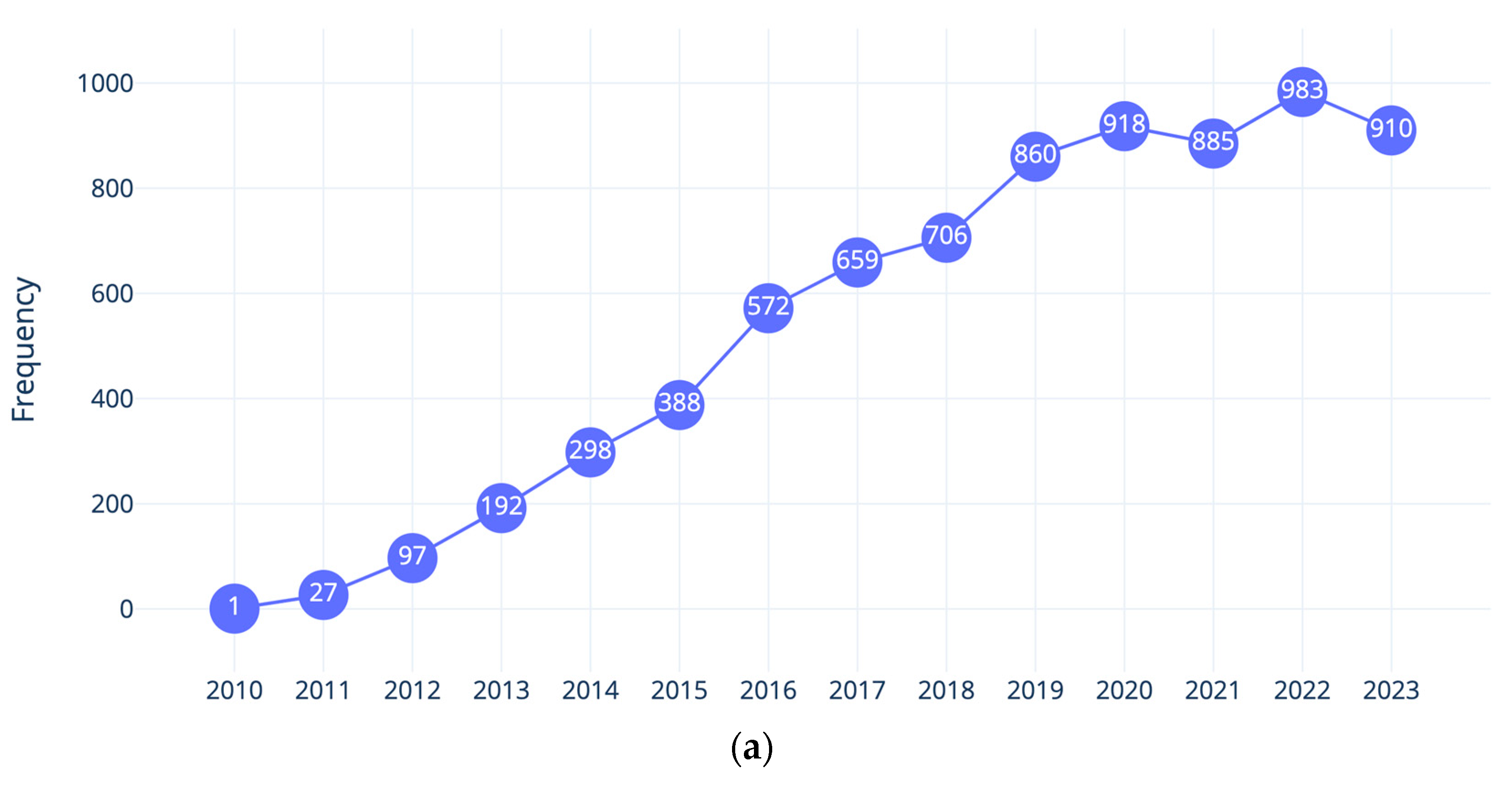

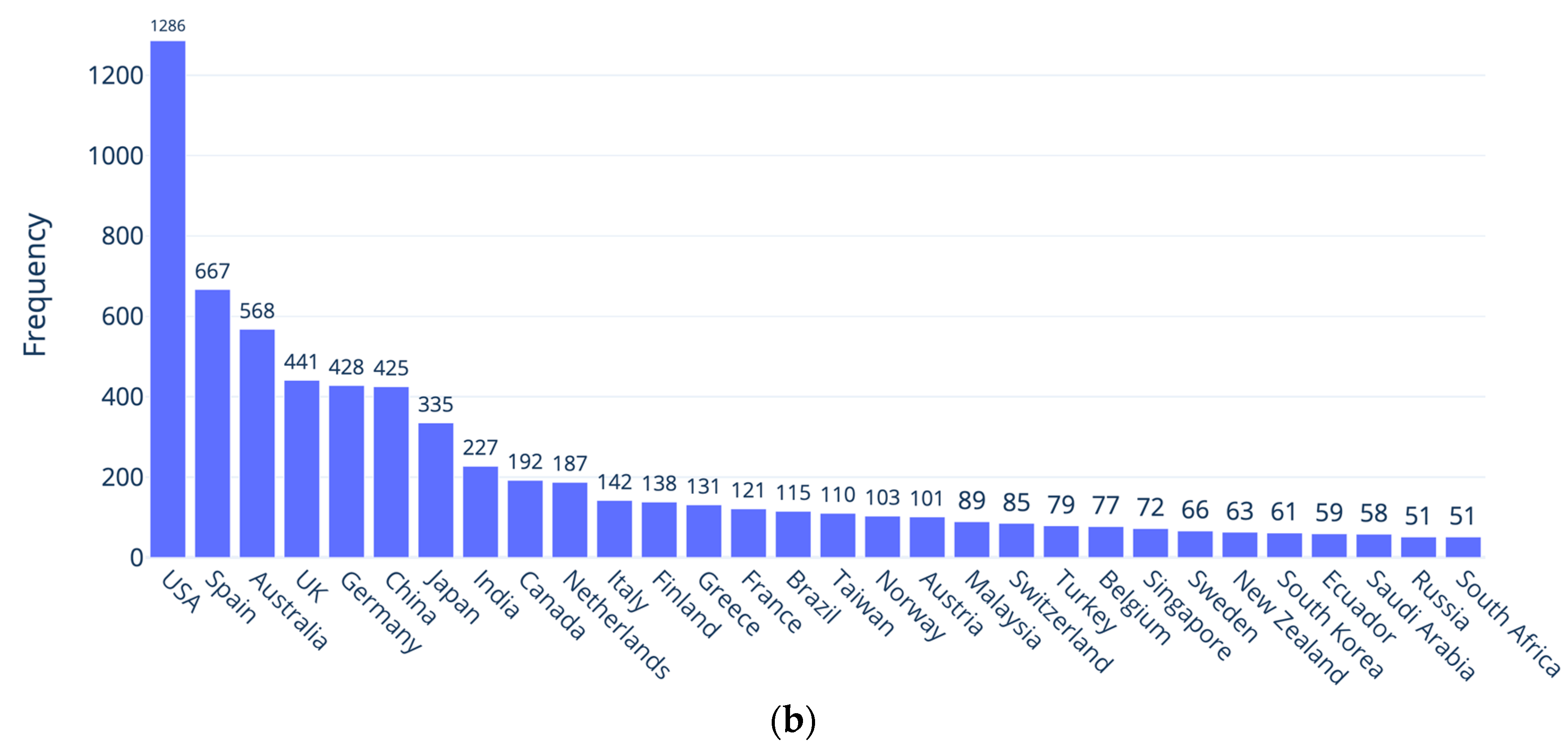

4.1. Trend 1. LADs Research Is Rapidly Evolving

4.2. Trend 2. Inequalities and Inclusiveness

4.3. Trend 3. Data Privacy, Ownership, and Legal Framework

4.4. Trend 4. Prediction and Effectiveness—Lost in Translation

4.5. Trend 5. Self-Regulated Learning and Game-Based Learning

5. Conclusions

- Establish an international research agenda to test and develop LA and LADs in a cross-cultural and cross-language manner to maximize possible benefits.

- Move the evaluation of LADs beyond functionality and usability aspects and assess the impact and usefulness of LADs to increase understanding not only of outcomes but also of learning processes.

- Examine the predictive analytics of LADs and the self-reflection they elicit in learners that leads to positive behavioral and cognitive adjustments; incorporate learning sciences into the design of LADs; and use systematic experimental research methods for learning at scale.

- Examine and address possible biases towards different user groups and demographics in the design of LA and LADs.

- Consider ethical aspects of educational and personal data protection, such as privacy, security, and transparency, and develop national and international legislation for data collection and analysis.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| LA | Learning Analytics |

| LAD | Learning Analytics Dashboards |

| SRL | Self-Regulated Learning |

Appendix A

| Study—[Reference Number] Authors, Year and Title | Summary |

|---|---|

| [2] Sahin, M., & Ifenthaler, D. (2021). Visualizations and Dashboards for Learning Analytics: A Systematic Literature Review. | Performed a descriptive systematic analysis of the 76 articles that fit their criteria (i.e., containing the search string ‘Learning Analytics’, and ‘Learning Analytics’ and ‘Dashboard’ or ‘Visualization’). Those articles were divided into eight summative and descriptive categories: keywords, stakeholders (target group) and year, study group (participants), visualization techniques, methods, data collection tools, variables, and theoretical background. The largest number of articles containing the search string appeared in the proceedings of the International Conference on Learning Analytics and Knowledge (six), followed by the Computers & Education journal (four), with a sharp increase in the number of publications from 2017 to 2021. |

| [11] Sønderlund, A.L., Hughes, E., & Smith, J. (2019). The efficacy of learning analytics interventions in higher education: A systematic review. | Synthesized the research on the effectiveness of LA interventions on higher education students’ underachievement, experience, and dropout. The authors reviewed 11 articles from the USA, Brazil, Taiwan, and South Korea and also compared past and current LA methods. |

| [12] Valle, N., Antonenko, P., Dawson, K., & Huggins-Manley, A. C. (2021). Staying on target: A systematic literature review on learner-facing learning analytics dashboards. | Studied the design of LA dashboards, the educational contexts in which the dashboards are implemented, and the types and features of the studies in which they are used. Twenty-eight articles were included: more than half were published in scientific journals, 36% in proceedings, and the remainder were dissertations, all based in Western countries. The authors discussed how users’ affect and motivation have been disregarded in research on the use of LA dashboards. |

| [13] Ahmad, A., Schneider, J., Griffiths, D., Biedermann, D., Schiffner, D., Greller, W., & Drachsler, H. (2022). Connecting the dots–A literature review on learning analytics indicators from a learning design perspective. | Investigated the alignment between learning design (LD) and LA. The review analyzed 161 LA articles to identify indicators based on learning design events and their associated metrics. The proposed reference framework in this review aimed to bridge the gap between these two fields. The study identified four distinct ways in which learning activities have been described in LA literature: procedural actions, LD activities, a combination of LD activities and procedural actions, and scenarios where no explicit activities were mentioned. Furthermore, 135 LA indicators were categorized into 19 clusters based on their similarities and goals, with “predictive analytics”, “performance”, and “self-regulation” being the most prevalent clusters. It also discussed the importance of aligning LA with pedagogical models to improve educational outcomes and the need for clear guidelines in this regard. |

| [14] Avila, A. G. N., Feraud, I. F. S., Solano-Quinde, L. D., Zuniga-Prieto, M., Echeverria, V., & De Laet, T. (2022, October). Learning Analytics to Support the Provision of Feedback in Higher Education: a Systematic Literature Review. | Investigated the use of LA feedback tools to enhance SRL skills in higher education. The review covered articles published over the past 10 years, resulting in the analysis of 31 papers. While LA feedback tools are considered a promising approach for improving SRL skills, the majority of the reviewed papers lack a strong theoretical basis in SRL. This review highlighted the need for further empirical research with a focus on both quantitative and qualitative approaches, encompassing a wider range of educational settings and outcome measures to better understand the impact of LA feedback tools on SRL skills, well-being, and academic performance. |

| [15] Alwahaby, H., Cukurova, M., Papamitsiou, Z., & Giannakos, M. (2022). The evidence of impact and ethical considerations of Multimodal Learning Analytics: A Systematic Literature Review. | Reviewed 100 articles published between 2010 and 2020, focusing on Multimodal Learning Analytics (MMLA) research. This review aimed to address research questions related to the real-world impact on learning outcomes and ethical considerations in MMLA research. The papers were coded based on data modalities and types of empirical evidence provided, including causal evidence, correlational evidence, descriptive evidence, anecdotal evidence, prototypes with no evidence, and machine learning. |

| [16] Tepgeç, M., & Ifenthaler, D. (2022). Learning Analytics Based Interventions: A Systematic Review of Experimental Studies. | Focused on the field of LA and its impact on educational interventions. It highlighted the growing interest in LA but noted the lack of empirical evidence on the effects of such interventions. The review included 52 papers. The findings indicated that student-facing dashboards are the most commonly employed LA-based intervention. Additionally, the review discussed the methodological aspects of these interventions, noting that data distillation for human judgment is the most prevalent approach. In terms of outcomes, the review looked at learning outcomes, user reflections, motivation, engagement, system usage behaviors, and teachers’ performance monitoring. |

| [17] Elmoazen, R., Saqr, M., Khalil, M., & Wasson, B. (2023). Learning analytics in virtual laboratories: A systematic literature review of empirical research. | Examined the current research on LA and collaboration in online laboratory environments. A total of 21 articles published between 2015 and 2021 were included. Half of the studies were set in higher education and the medical field, while others addressed lower levels of education. A broad variety of lab platforms was used, and LA was derived from students’ log files. LA was used to measure students’ performance, activities, perceptions, and behavior in virtual labs. The landscape was fragmented, and the research did not focus on learning and teaching. Standards and protocols are needed to address factors of collaboration, learning analytics, and online labs. |

| [18] Khalil, M., Slade, S., & Prinsloo, P. (2023). Learning analytics in support of inclusiveness and disabled students: a systematic review. | Examined the role of LA in promoting inclusiveness for students with disabilities. A total of 26 articles were analyzed; the results indicated that while LA began in 2011, discussions of inclusiveness in education started only in 2016. LA has the potential to foster inclusiveness by reducing discrimination and supporting marginalized groups; however, there are gaps in realizing this potential. |

| [19] Moon, J., Lee, D., Choi, G.W., Seo, J., Do, J., & Lim T. (2023). Learning analytics in seamless learning environments: a systematic review. | Reviewed the use of LA within seamless learning environments, analyzing 27 journal articles. Seamless learning, which focuses on inquiry-based and experiential learning, has grown in popularity, and the integration of LA to assess student progress in these environments has also increased. However, comprehensive reviews of this integration are lacking. |

| [20] Heikkinen, S., Saqr, M., Malmberg, J., & Tedre, M. (2023). Supporting self-regulated learning with learning analytics interventions—a systematic literature review. | Analyzed studies that employed LA interventions to boost SRL and identified 56 that met the criteria. While various LA interventions aimed to support SRL, only 46% showed a positive impact on learning, and just four studies covered all SRL phases. The findings suggest that interventions should consider all SRL phases, and the authors call for more comparative research to determine the most effective strategies. |

| [21] Ramaswami, G., Susnjak, T., Mathrani, A., & Umer, R. (2023). Use of predictive analytics within learning analytics dashboards: A review of case studies. | Analyzed 15 studies and found that current LADs lack advanced predictive analytics, mostly identifying at-risk students without providing interpretive or actionable advice. Many LADs are still prototypes, and evaluations focus on functionality rather than educational impact. The study recommends creating more advanced LADs using machine learning and stresses the need for evaluations based on educational effectiveness. |

| [22] Cerratto Pargman, T., & McGrath, C. (2021). Mapping the Ethics of Learning Analytics in Higher Education: A Systematic Literature Review of Empirical Research. | Presented the latest evidence on ethical aspects of the use of LA in higher education. The authors reviewed 21 publications (16 journal articles and five proceedings papers), with the research concentrated in a few countries: the USA, Australia, and the UK. These aspects mainly concerned transparency, privacy, and informed consent, which, according to the evidence, can hinder the development and implementation of LA if not properly addressed. |

| [23] Matcha, W., Uzir, N. A., Gašević, D., & Pardo, A. (2020). A Systematic Review of Empirical Studies on Learning Analytics Dashboards: A Self-Regulated Learning Perspective. | Reviewed studies that empirically assessed the impact of LADs on learning and teaching from the perspective of an SRL model. The review included 29 papers published between 2010 and 2017. Based on the review, the authors proposed a model of user-centered learning analytics systems (MULAS). According to the authors, MULAS should guide researchers and practitioners in understanding and developing learning environments, rather than serving to make design decisions about how data and analytics results are represented. |

| [24] Williamson, K., & Kizilcec, R. (2022). A Review of Learning Analytics Dashboard Research in Higher Education: Implications for Justice, Equity, Diversity, and Inclusion. | Discussed issues of justice, equity, diversity, and inclusion in LA dashboard research in higher education. The review included 45 relevant publications from journals and two conference proceedings, mostly from North America, Europe, and Australia. The authors identified four themes: participant identities and researcher positionality, surveillance concerns, implicit pedagogies, and software development resources. According to the authors, all four must be addressed to avoid the risk of reinforcing inequities in education. The review also considered how to address these issues in future LA dashboard research and development. |

| [25] Banihashem, S. K., Noroozi, O., van Ginkel, S., Macfadyen, L. P., & Biemans, H. J. (2022). A systematic review of the role of learning analytics in enhancing feedback practices in higher education. | Reviewed the status of LA-based feedback systems in higher education. The review included 46 papers published between 2011 and 2022 and classified LA along four dimensions: (1) what (what types of data the system captures and analyzes); (2) how (how the system performs analytics); (3) why (for what reasons the system gathers and analyzes data); and (4) who (who is served by the analytics). The 46 publications were mostly from Australia and European and Asian countries, while the USA, South America, Canada, and African countries accounted for one publication each. |

| [26] Daoudi, I. (2022). Learning analytics for enhancing the usability of serious games in formal education: A systematic literature review and research agenda. | Explored the use of Serious Educational Games (SEGs) and the application of Game Learning Analytics (GLA) in formal education settings. The review included 80 relevant studies, and the key findings were: (1) GLA emerged as a powerful tool to assess and improve the usability of educational games, with the potential to enhance education by improving learning outcomes, enabling early detection of at-risk students, increasing learner engagement, providing real-time feedback, and personalizing the learning experience; and (2) a multidimensional taxonomy to categorize and understand different aspects of SEGs in formal education. |

| [27] Ouhaichi, H., Spikol, D., & Vogel, B. (2023). Research trends in multimodal learning analytics: A systematic mapping study. | Identified research types, methodologies, and trends in the area of MMLA, revealing an increasing interest in these technologies. The authors reviewed 57 studies and reviews and highlighted 14 topics under four themes—learning context, learning processes, systems and modality, and technologies—that can contribute to the development of MMLA. |

| [28] Salas-Pilco, S. Z., Xiao, K., & Hu, X. (2022). Artificial intelligence and learning analytics in teacher education: A systematic review. | Discussed the integration of artificial intelligence (AI) and LA in teacher education, analyzing 30 studies. The key findings reveal a concentration on examining pre-service and in-service teachers’ behaviors, perceptions, and digital competencies regarding AI and LA in their teaching practices. The primary types of data used in these studies were behavioral, discourse, and statistical data. Furthermore, most of these studies used machine learning algorithms. However, only a few studies mentioned ethical considerations. |

| [29] Apiola, M. V., Lipponen, S., Seitamaa, A., Korhonen, T., & Hakkarainen, K. (2022, July). Learning Analytics for Knowledge Creation and Inventing in K-12: A Systematic Review. | Reviewed empirical LA studies conducted in K-12 education with a focus on pedagogically innovative approaches involving technology-mediated learning, such as knowledge building and maker-centered learning. The review identified 22 articles with an emphasis on constructivist pedagogies. The largest group of the included articles (eight) took place in secondary school (grades 7–9, ages 13–15). Four articles took place in primary school (grades 1–6) and four in high school. Another four articles involved a variety of age groups ranging from 10 to 18 years. Finally, two articles involved teachers or pre-service teachers as participants. |

| [30] Hirsto, L., Saqr, M., López-Pernas, S., & Valtonen, T. (2022). A systematic narrative review of learning analytics research in K-12 and schools. | Analyzed the impact of LA research in the context of elementary level teaching. The review focused on highly cited articles retrieved from the Scopus database, with a total of 33 papers meeting the criteria. The analysis revealed that LA research in elementary education is relatively limited, especially in terms of highly cited studies. The research within this field is fragmented, with varying pedagogical goals and approaches. It was noted that LA is used both as a means for analyzing research data and as a tool to support students’ learning. The review emphasized the importance of developing more robust theoretical foundations for LA in elementary school contexts. |

| [31] Kew, S. N., & Tasir, Z. (2022). Learning analytics in online learning environment: A systematic review on the focuses and the types of student-related analytics data. | Presented a comprehensive overview of LA in online learning environments, drawing from a review of 34 articles published between 2012 and 2020. The review highlighted the key themes in LA research, such as monitoring/analysis, prediction/intervention, and the types of student-related analytics data commonly used. Additionally, it addressed challenges in LA, including data management, dissemination of results, and ethical considerations. |

References

- Siemens, G.; Baker, R.S.J.D. Learning analytics and educational data mining: Towards communication and collaboration. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 252–254. [Google Scholar]

- Sahin, M.; Ifenthaler, D. Visualizations and Dashboards for Learning Analytics: A Systematic Literature Review. In Visualizations and Dashboards for Learning Analytics; Sahin, M., Ifenthaler, D., Eds.; Advances in Analytics for Learning and Teaching; Springer International Publishing: Cham, Switzerland, 2021; pp. 3–22. [Google Scholar]

- Mohseni, Z.; Martins, R.M.; Masiello, I. SAVis: Learning Analytics Dashboard with Interactive Visualization and Machine Learning. Nordic Learning Analytics (Summer) Institute 2021, Stockholm. 2021. (CEUR Workshop Proceedings). Available online: http://urn.kb.se/resolve?urn=urn:nbn:se:lnu:diva-107549 (accessed on 22 March 2022).

- Mohseni, Z.; Martins, R.M.; Masiello, I. SBGTool v2.0: An Empirical Study on a Similarity-Based Grouping Tool for Students’ Learning Outcomes. Data 2022, 7, 98. [Google Scholar] [CrossRef]

- Chen, L.; Lu, M.; Goda, Y.; Yamada, M. Design of Learning Analytics Dashboard Supporting Metacognition; International Association for the Development of the Information Society: Cagliari, Italy, 2019. [Google Scholar]

- Molenaar, I.; Horvers, A.; Dijkstra, S.H.E.; Baker, R.S. Designing Dashboards to Support Learners’ Self-Regulated Learning. Radboud University Repository. 2019. Available online: https://repository.ubn.ru.nl/handle/2066/201823 (accessed on 26 April 2022).

- Verbert, K.; Ochoa, X.; De Croon, R.; Dourado, R.A.; De Laet, T. Learning analytics dashboards: The past, the present and the future. In Proceedings of the Tenth International Conference on Learning Analytics & Knowledge (LAK ’20), online, 23–27 March 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 35–40. [Google Scholar] [CrossRef]

- Lang, C.; Wise, A.F.; Merceron, A.; Gašević, D.; Siemens, G. Chapter 1: What is Learning Analytics? In Handbook of Learning Analytics, 2nd ed.; Society for Learning Analytics Research (SoLAR): Online, 21–25 March 2022; pp. 8–18. [Google Scholar]

- Choi, G.J.; Kang, H. The umbrella review: A useful strategy in the rain of evidence. Korean J. Pain 2022, 35, 127–128. [Google Scholar] [CrossRef]

- Aromataris, E.; Munn, Z. (Eds.) Chapter 1: JBI Systematic Reviews. In JBI Manual for Evidence Synthesis; JBI: Adelaide, Australia, 2020. [Google Scholar] [CrossRef]

- Sønderlund, A.L.; Hughes, E.; Smith, J. The efficacy of learning analytics interventions in higher education: A systematic review. Br. J. Educ. Technol. 2019, 50, 2594–2618. [Google Scholar] [CrossRef]

- Valle, N.; Antonenko, P.; Dawson, K.; Huggins-Manley, A.C. Staying on target: A systematic literature review on learner-facing learning analytics dashboards. Br. J. Educ. Technol. 2021, 52, 1724–1748. [Google Scholar] [CrossRef]

- Ahmad, A.; Schneider, J.; Griffiths, D.; Biedermann, D.; Schiffner, D.; Greller, W.; Drachsler, H. Connecting the dots—A literature review on learning analytics indicators from a learning design perspective. J. Comput. Assist. Learn. 2022, 1, 1–39. [Google Scholar] [CrossRef]

- Avila, A.-G.N.; Feraud, I.F.S.; Solano-Quinde, L.D.; Zuniga-Prieto, M.; Echeverria, V.; De Laet, T. Learning analytics to support the provision of feedback in higher education: A systematic literature review. In Proceedings of the LACLO 2022, 17th Latin American Conference on Learning Technologies—LACLO, Armenia, Colombia, 17–21 October 2022; IEEE (Institute of Electrical and Electronics Engineers): New York, NY, USA, 2022; p. 163. Available online: https://research.monash.edu/en/publications/learning-analytics-to-support-the-provision-of-feedback-in-higher (accessed on 5 October 2023).

- Alwahaby, H.; Cukurova, M.; Papamitsiou, Z.; Giannakos, M. The Evidence of Impact and Ethical Considerations of Multimodal Learning Analytics: A Systematic Literature Review. In The Multimodal Learning Analytics Handbook; Giannakos, M., Spikol, D., Di Mitri, D., Sharma, K., Ochoa, X., Hammad, R., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 289–325. [Google Scholar]

- Tepgeç, M.; Ifenthaler, D. Learning Analytics Based Interventions: A Systematic Review of Experimental Studies; IADIS Press: Lisboa, Portugal, 2022; pp. 327–330. [Google Scholar]

- Elmoazen, R.; Saqr, M.; Khalil, M.; Wasson, B. Learning analytics in virtual laboratories: A systematic literature review of empirical research. Smart Learn. Environ. 2023, 10, 23. [Google Scholar] [CrossRef]

- Khalil, M.; Slade, S.; Prinsloo, P. Learning analytics in support of inclusiveness and disabled students: A systematic review. J. Comput. High. Educ. 2023, 1–18. [Google Scholar] [CrossRef]

- Moon, J.; Lee, D.; Choi, G.W.; Seo, J.; Do, J.; Lim, T. Learning analytics in seamless learning environments: A systematic review. Interact. Learn. Environ. 2023, 1–18. [Google Scholar] [CrossRef]

- Heikkinen, S.; Saqr, M.; Malmberg, J.; Tedre, M. Supporting self-regulated learning with learning analytics interventions—A systematic literature review. Educ. Inf. Technol. 2023, 28, 3059–3088. [Google Scholar] [CrossRef]

- Ramaswami, G.; Susnjak, T.; Mathrani, A.; Umer, R. Use of Predictive Analytics within Learning Analytics Dashboards: A Review of Case Studies. Tech. Know. Learn. 2023, 28, 959–980. [Google Scholar] [CrossRef]

- Cerratto Pargman, T.; McGrath, C. Mapping the Ethics of Learning Analytics in Higher Education: A Systematic Literature Review of Empirical Research. J. Learn. Anal. 2021, 8, 123–139. [Google Scholar] [CrossRef]

- Matcha, W.; Uzir, N.A.; Gašević, D.; Pardo, A. A Systematic Review of Empirical Studies on Learning Analytics Dashboards: A Self-Regulated Learning Perspective. IEEE Trans. Learn. Technol. 2020, 13, 226–245. [Google Scholar] [CrossRef]

- Williamson, K.; Kizilcec, R. A Review of Learning Analytics Dashboard Research in Higher Education: Implications for Justice, Equity, Diversity, and Inclusion. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, online, 21–25 March 2022; Association for Computing Machinery: New York, NY, USA, 2022; pp. 260–270. [Google Scholar] [CrossRef]

- Banihashem, S.K.; Noroozi, O.; van Ginkel, S.; Macfadyen, L.P.; Biemans, H.J.A. A systematic review of the role of learning analytics in enhancing feedback practices in higher education. Educ. Res. Rev. 2022, 37, 100489. [Google Scholar] [CrossRef]

- Daoudi, I. Learning analytics for enhancing the usability of serious games in formal education: A systematic literature review and research agenda. Educ. Inf. Technol. 2022, 27, 11237–11266. [Google Scholar] [CrossRef] [PubMed]

- Ouhaichi, H.; Spikol, D.; Vogel, B. Research trends in multimodal learning analytics: A systematic mapping study. Comput. Educ. Artif. Intell. 2023, 4, 100136. [Google Scholar] [CrossRef]

- Salas-Pilco, S.Z.; Xiao, K.; Hu, X. Artificial Intelligence and Learning Analytics in Teacher Education: A Systematic Review. Educ. Sci. 2022, 12, 569. [Google Scholar] [CrossRef]

- Apiola, M.-V.; Lipponen, S.; Seitamaa, A.; Korhonen, T.; Hakkarainen, K. Learning Analytics for Knowledge Creation and Inventing in K-12: A Systematic Review. In Intelligent Computing; Arai, K., Ed.; Springer International Publishing: Cham, Switzerland, 2022; pp. 238–257. [Google Scholar]

- Hirsto, L.; Saqr, M.; López-Pernas, S.; Valtonen, T. A systematic narrative review of learning analytics research in K-12 and schools. In FLAIEC 2022: Finnish Learning Analytics and Artificial Intelligence in Education Conference 2022, Proceedings of the 1st Finnish Learning Analytics and Artificial Intelligence in Education Conference (FLAIEC 2022); Hirsto, L., López-Pernas, S., Saqr, M., Sointu, E., Valtonen, T., Väisänen, S., Eds.; CEUR Workshop Proceedings; Aachen University: Aachen, Germany, 2023; Volume 3383, pp. 60–67. [Google Scholar]

- Kew, S.N.; Tasir, Z. Learning Analytics in Online Learning Environment: A Systematic Review on the Focuses and the Types of Student-Related Analytics Data. Tech. Know. Learn. 2022, 27, 405–427. [Google Scholar] [CrossRef]

- Aguerrebere, C.; He, H.; Kwet, M.; Laakso, M.-J.; Lang, C.; Marconi, C.; Price-Dennis, D.; Zhang, H. Global Perspectives on Learning Analytics in K12 Education. In The Handbook of Learning Analytics; Lang, C., Siemens, G., Wise, A.F., Gašević, D., Merceron, A., Eds.; SoLAR: Vancouver, BC, Canada, 2022; p. 231. [Google Scholar]

- Sedrakyan, G.; Mannens, E.; Verbert, K. Guiding the choice of learning dashboard visualizations: Linking dashboard design and data visualization concepts. J. Comput. Lang. 2019, 50, 19–38. [Google Scholar] [CrossRef]

- Viberg, O.; Hatakka, M.; Bälter, O.; Mavroudi, A. The current landscape of learning analytics in higher education. Comput. Hum. Behav. 2018, 89, 98–110. [Google Scholar] [CrossRef]

- Vieira, C.; Parsons, P.; Byrd, V. Visual learning analytics of educational data: A systematic literature review and research agenda. Comput. Educ. 2018, 122, 119–135. [Google Scholar] [CrossRef]

- Reich, J. Chapter 18: Learning Analytics and Learning at Scale. In Handbook of Learning Analytics, 2nd ed.; Society for Learning Analytics Research (SoLAR): Beaumont, AB, Canada, 2022; pp. 188–195. [Google Scholar]

- Guzmán-Valenzuela, C.; Gómez-González, C.; Tagle, A.R.-M.; Vyhmeister, A.L. Learning analytics in higher education: A preponderance of analytics but very little learning? Int. J. Educ. Technol. High. Educ. 2021, 18, 23. [Google Scholar] [CrossRef] [PubMed]

- Macfadyen, L.P. Chapter 17: Institutional Implementation of Learning Analytics—Current State, Challenges, and Guiding Frameworks. In Handbook of Learning Analytics, 2nd ed.; Society for Learning Analytics Research (SoLAR): Beaumont, AB, Canada, 2022; pp. 173–186. [Google Scholar]

- Uttamchandani, S.; Quick, J. Chapter 20: An Introduction to Fairness, Absence of Bias, and Equity in Learning Analytics. In Handbook of Learning Analytics, 2nd ed.; Society for Learning Analytics Research (SoLAR): Beaumont, AB, Canada, 2022; pp. 205–212. [Google Scholar]

- Hantoobi, S.; Wahdan, A.; Al-Emran, M.; Shaalan, K. A Review of Learning Analytics Studies. In Recent Advances in Technology Acceptance Models and Theories; Al-Emran, M., Shaalan, K., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 119–134. [Google Scholar] [CrossRef]

- Harari, Y.N. 21 Lessons for the 21st Century; Vintage: London, UK, 2019. [Google Scholar]

- Winne, P.H.; Perry, N.E. Measuring self-regulated learning. In Handbook of Self-Regulation; Academic Press: San Diego, CA, USA, 2000; pp. 531–566. [Google Scholar]

- Djaouti, D.; Alvarez, J.; Jessel, J.-P.; Rampnoux, O. Origins of Serious Games. In Serious Games and Edutainment Applications; Ma, M., Oikonomou, A., Jain, L.C., Eds.; Springer: London, UK, 2011; pp. 25–43. [Google Scholar] [CrossRef]

- Barrus, A. Does Self-Regulated Learning-Skills Training Improve High-School Students’ Self-Regulation, Math Achievement, and Motivation While Using an Intelligent Tutor? ProQuest LLC: Ann Arbor, MI, USA, 2013. [Google Scholar]

- Boekaerts, M. Subjective competence, appraisals and self-assessment. Learn. Instr. 1991, 1, 1–17. [Google Scholar] [CrossRef]

- Efklides, A. Interactions of Metacognition With Motivation and Affect in Self-Regulated Learning: The MASRL Model. Educ. Psychol. 2011, 46, 6–25. [Google Scholar] [CrossRef]

- Pintrich, P.R.; De Groot, E.V. Motivational and self-regulated learning components of classroom academic performance. J. Educ. Psychol. 1990, 82, 33–40. [Google Scholar] [CrossRef]

- Winne, P.H.; Hadwin, A.F. Studying as Self-Regulated Learning. In Metacognition in Educational Theory and Practice; Routledge: Abingdon, UK, 1998. [Google Scholar]

- Zimmerman, B.J. Becoming a Self-Regulated Learner: Which Are the Key Subprocesses? Contemp. Educ. Psychol. 1986, 11, 307–313. [Google Scholar] [CrossRef]

- Luckin, R.; Cukurova, M. Designing educational technologies in the age of AI: A learning sciences-driven approach. Br. J. Educ. Technol. 2019, 50, 2824–2838. [Google Scholar] [CrossRef]

- Teasley, S.D. Learning analytics: Where information science and the learning sciences meet. Inf. Learn. Sci. 2018, 120, 59–73. [Google Scholar] [CrossRef]

| Authors [Reference Number in this Paper] | Year | Country | # of Articles | Citations |

|---|---|---|---|---|

| Sahin & Ifenthaler [2] | 2021 | Germany | 76 | 19 |

| Sønderlund et al. [11] | 2019 | UK | 11 | 193 |

| Valle et al. [12] | 2021 | USA | 28 | 30 |

| Ahmad et al. [13] | 2022 | Germany | 161 | 8 |

| Avila et al. [14] | 2022 | Ecuador | 31 | 0 |

| Alwahaby et al. [15] | 2022 | UK | 100 | 28 |

| Tepgeç & Ifenthaler [16] | 2022 | Germany | 52 | 2 |

| Elmoazen et al. [17] | 2023 | Finland | 21 | 14 |

| Khalil et al. [18] | 2023 | Norway | 26 | 6 |

| Moon et al. [19] | 2023 | USA | 27 | 3 |

| Heikkinen et al. [20] | 2023 | Finland | 56 | 13 |

| Ramaswami et al. [21] | 2023 | New Zealand | 15 | 5 |

| Cerratto Pargman & McGrath [22] | 2021 | Sweden | 21 | 57 |

| Matcha et al. [23] | 2020 | UK | 29 | 290 |

| Williamson & Kizilcec [24] | 2022 | USA | 47 | 34 |

| Banihashem et al. [25] | 2022 | Netherlands | 46 | 36 |

| Daoudi [26] | 2022 | Tunisia | 80 | 14 |

| Ouhaichi et al. [27] | 2023 | Sweden | 57 | 5 |

| Salas-Pilco et al. [28] | 2022 | China | 30 | 33 |

| Apiola et al. [29] | 2022 | Finland | 22 | 2 |

| Hirsto et al. [30] | 2022 | Finland | 33 | 3 |

| Kew & Tasir [31] | 2022 | Malaysia | 34 | 42 |
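The year and article-count columns of the table above can be aggregated to give a rough picture of the size and timing of the reviewed literature. The following Python sketch transcribes those two columns; the `REVIEWS` list and the `summarize` function are hypothetical names. The total is a plain sum that is not deduplicated, so a primary study included in several reviews is counted once per review, and the 2023 tally covers only reviews published up to April 2023.

```python
from collections import Counter
from statistics import median

# (first author, year, number of articles reviewed), transcribed from the table.
REVIEWS = [
    ("Sahin", 2021, 76), ("Sønderlund", 2019, 11), ("Valle", 2021, 28),
    ("Ahmad", 2022, 161), ("Avila", 2022, 31), ("Alwahaby", 2022, 100),
    ("Tepgeç", 2022, 52), ("Elmoazen", 2023, 21), ("Khalil", 2023, 26),
    ("Moon", 2023, 27), ("Heikkinen", 2023, 56), ("Ramaswami", 2023, 15),
    ("Cerratto Pargman", 2021, 21), ("Matcha", 2020, 29),
    ("Williamson", 2022, 47), ("Banihashem", 2022, 46), ("Daoudi", 2022, 80),
    ("Ouhaichi", 2023, 57), ("Salas-Pilco", 2022, 30), ("Apiola", 2022, 22),
    ("Hirsto", 2022, 33), ("Kew", 2022, 34),
]


def summarize(reviews):
    """Count reviews, sum and take the median of article counts, tally by year."""
    counts = [n for _, _, n in reviews]
    return {
        "reviews": len(reviews),
        # Plain sum: overlapping primary studies are not deduplicated.
        "total_articles": sum(counts),
        "median_articles": median(counts),
        # Sorted by publication year, ascending.
        "reviews_per_year": dict(sorted(Counter(y for _, y, _ in reviews).items())),
    }


summary = summarize(REVIEWS)
print(summary["total_articles"])    # 1003
print(summary["median_articles"])   # 32.0
print(summary["reviews_per_year"])  # {2019: 1, 2020: 1, 2021: 3, 2022: 11, 2023: 6}
```

The per-year tally shows that 11 of the 22 reviews in the table were published in 2022.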

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Masiello, I.; Mohseni, Z.; Palma, F.; Nordmark, S.; Augustsson, H.; Rundquist, R. A Current Overview of the Use of Learning Analytics Dashboards. Educ. Sci. 2024, 14, 82. https://doi.org/10.3390/educsci14010082

Masiello I, Mohseni Z, Palma F, Nordmark S, Augustsson H, Rundquist R. A Current Overview of the Use of Learning Analytics Dashboards. Education Sciences. 2024; 14(1):82. https://doi.org/10.3390/educsci14010082

Chicago/Turabian Style: Masiello, Italo, Zeynab (Artemis) Mohseni, Francis Palma, Susanna Nordmark, Hanna Augustsson, and Rebecka Rundquist. 2024. "A Current Overview of the Use of Learning Analytics Dashboards" Education Sciences 14, no. 1: 82. https://doi.org/10.3390/educsci14010082

APA Style: Masiello, I., Mohseni, Z., Palma, F., Nordmark, S., Augustsson, H., & Rundquist, R. (2024). A Current Overview of the Use of Learning Analytics Dashboards. Education Sciences, 14(1), 82. https://doi.org/10.3390/educsci14010082