Co-Developing an Easy-to-Use Learning Analytics Dashboard for Teachers in Primary/Secondary Education: A Human-Centered Design Approach

Abstract

1. Introduction

2. Related Work

2.1. Learning Analytics Dashboards

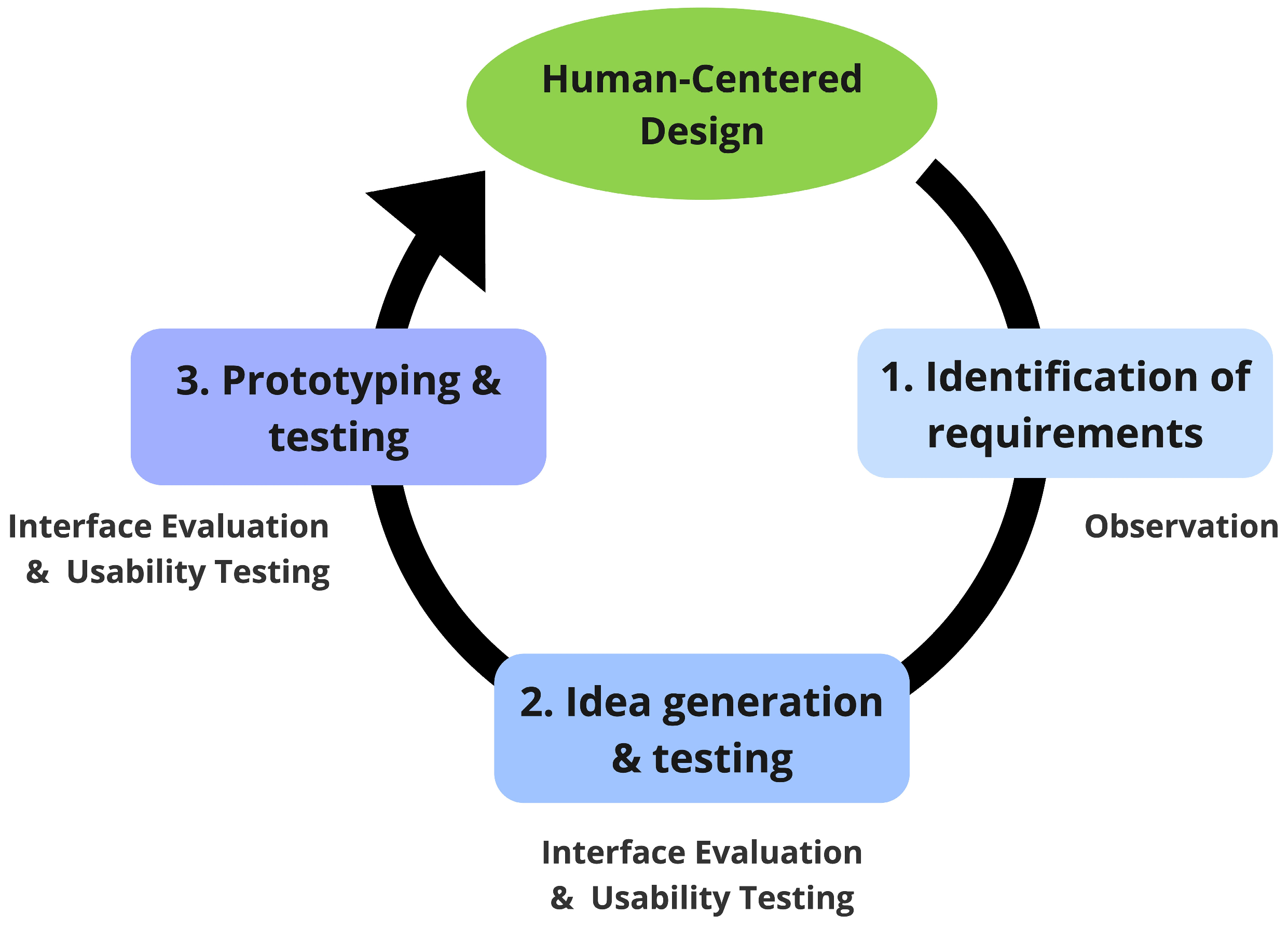

2.2. Human-Centered Design

3. Methodology

3.1. Participants

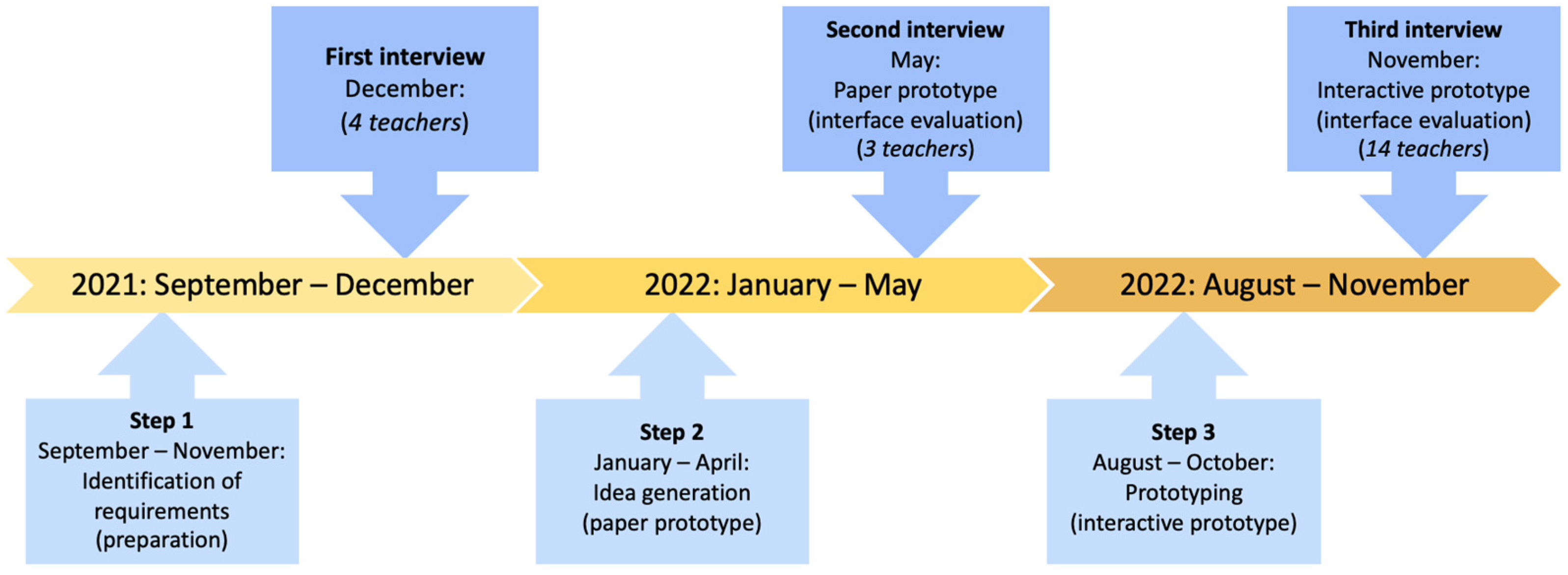

3.2. Our Three-Step HCD Approach

3.2.1. Identification of Requirements

- How do you usually evaluate your students’ data through the DLM or any other EdTech?

- How do you communicate with your students through the DLM or any other EdTech?

- Do you evaluate if your students use the DLM?

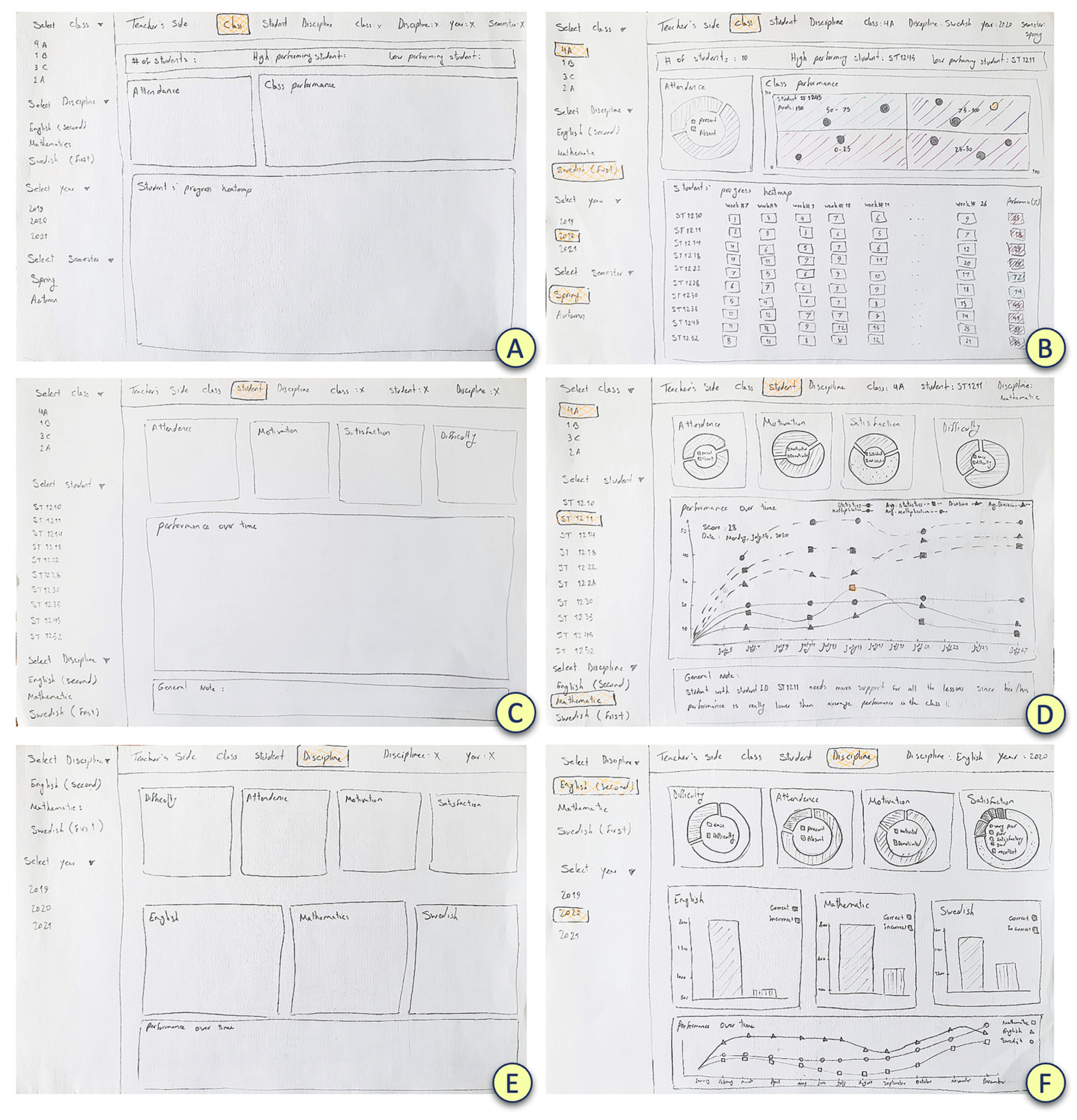

3.2.2. Idea Generation and Testing

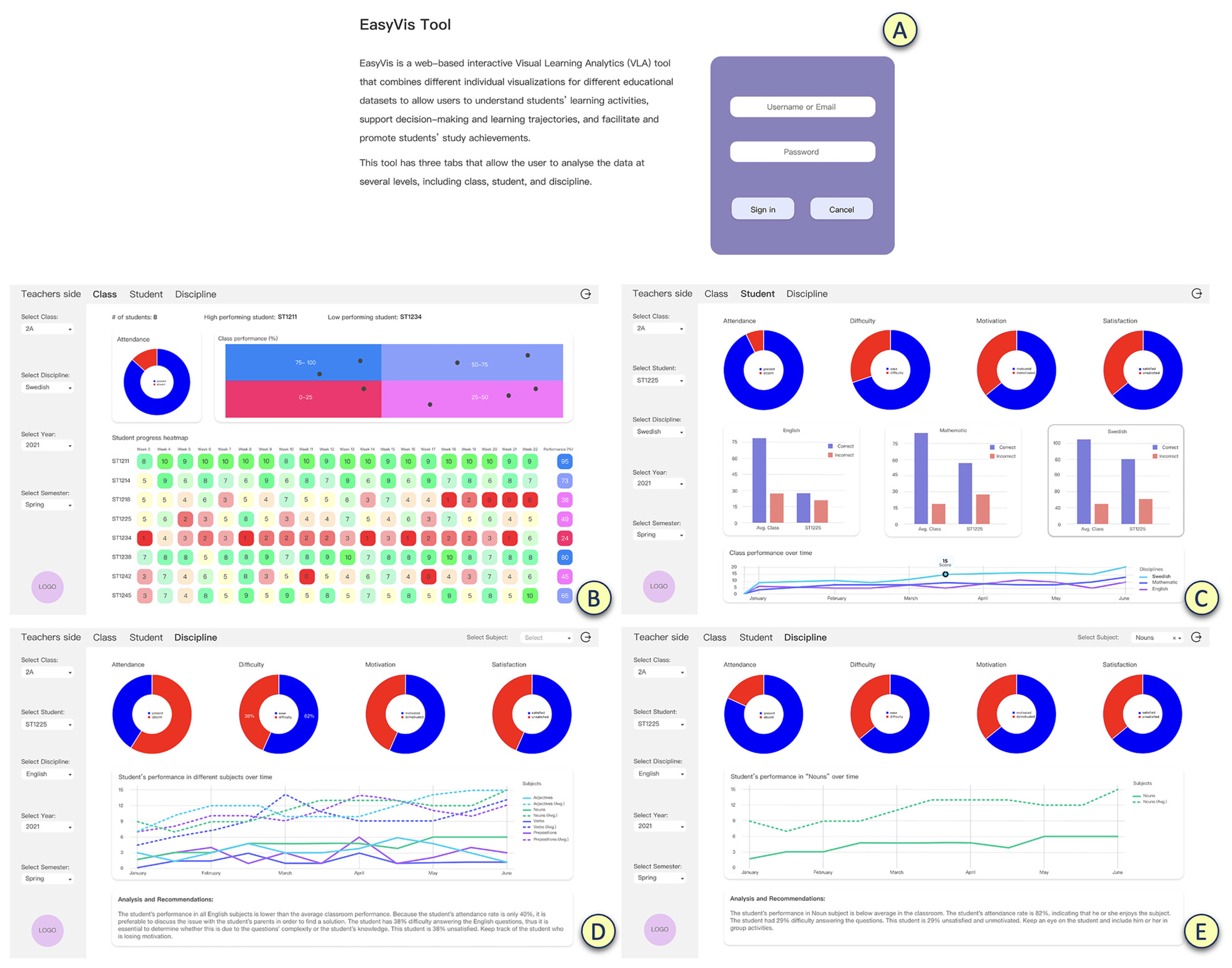

3.2.3. Prototyping and Testing

4. Outcomes

4.1. Outcomes of the First Step of the HCD: Identification of Requirements

Teacher 2 (school 1): “… we tried digital math books as complements to the stages where the students can find other tasks to work with, but the students were not comfortable with it, so we have given up on them...”.

Teacher 1 (school 1): “Well, in our school, teachers use it to check out if students watched the movies or to see if they did their home study during the pandemic…”.

Teacher 4 (school 2): “I think the understanding of the actual word that they need to learn. ... also, to see if the students misunderstand a word and where are they …”.

Teacher 3 (school 2): “… I think instead of having quiz which has a right or wrong answer, the DLM should add ‘what do you think about …?’ so students can describe their feelings…”.

Teacher 1 (school 1): “We do it in the classroom during the work by walking around the classroom and checking the progress, so the students ask a lot of questions and by talking a lot with the students”.

Teacher 4 (school 2): “We use different types of quick questions during the session to check and know how much they understand. We usually end up a session with a test”.

Teacher 1 (school 1): “… There are a lot of data to analyze, and it is so interesting. It is something that you can even discuss with the kids and show them”.

Teacher 2 (school 1): “… to see if they are getting better, so you can follow students individually and help them increase their confidence”.

Teacher 3 (school 2): “In some weeks we do not watch the DLM’s videos, but in some weeks, we assign lots of videos to students, so pie chart is more meaningful for me to have a conclusion”.

4.2. Outcomes of the Second Step of the HCD: Idea Generation and Testing

- The design is more appealing to teachers since they do not have to add information to the dashboard.

- The design is easy, useful, and clear for teachers to obtain results and see how a student’s performance changes over time.

- The sketch prototype has a good layout.

- Some of the teachers already have some information about the students from the DLM that they use in their school. When they assign a task, they can see the response frequency for individual students as well as the entire class, and this sketch prototype provides a more comprehensive view of that.

Teacher 3: “One thing that would be interesting is how many times students answered a question, whether they read a lot before or answered right away and got it wrong…”.

Teacher 2: “… Me and my colleague work a lot with the circle model, where the whole group practice together, then a small group or in pairs then they work individually. So how can the companies adjust their DLM so that it fits with such a teaching model, if they can, then you would automatically get good data over students’ progress...”.

- The dashboard can provide information regarding class and student variances.

- View the entire class before entering data for individual students.

- In the “Discipline” view, the teacher should be able to select both a class and a student.

- Be able to select a discipline, class, and student under the “Student” view and compare the student’s performance in English, Mathematics, and Swedish. In this way, the teacher can see if one student has problems in one or all the disciplines.

- Be able to select the class name in the “Discipline” view.

- Be able to compare student performance across subjects in a discipline and compare students in a class.
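The comparison the teachers ask for above (one student's results across disciplines, set against the class) can be sketched in a few lines of Python. This is only an illustrative sketch: the record layout, the class and student identifiers, the percentage scores, and the function names `class_average`, `student_profile`, and `below_class` are all hypothetical and are not taken from the study or from any DLM.

```python
# Hypothetical records: (class name, student id, discipline, score in percent).
# The values are made up purely to illustrate the comparison.
RECORDS = [
    ("6A", "S1", "English", 80),
    ("6A", "S1", "Mathematics", 40),
    ("6A", "S1", "Swedish", 70),
    ("6A", "S2", "English", 60),
    ("6A", "S2", "Mathematics", 60),
    ("6A", "S2", "Swedish", 90),
]


def class_average(records, class_name, discipline):
    """Mean score of one class in one discipline, or None if there is no data."""
    scores = [s for c, _, d, s in records if c == class_name and d == discipline]
    return sum(scores) / len(scores) if scores else None


def student_profile(records, class_name, student):
    """Per-discipline score of one student next to the class average."""
    profile = {}
    for c, st, d, s in records:
        if c == class_name and st == student:
            profile[d] = {
                "student": s,
                "class_avg": class_average(records, class_name, d),
            }
    return profile


def below_class(profile):
    """Disciplines in which the student scores below the class average."""
    return sorted(d for d, v in profile.items() if v["student"] < v["class_avg"])


if __name__ == "__main__":
    profile = student_profile(RECORDS, "6A", "S1")
    for discipline, values in profile.items():
        print(discipline, values)
    print("Below class average:", below_class(profile))
```

A view built on such a profile would let a teacher see whether a student struggles in one discipline or in all of them, which is the use case described for the “Student” view.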

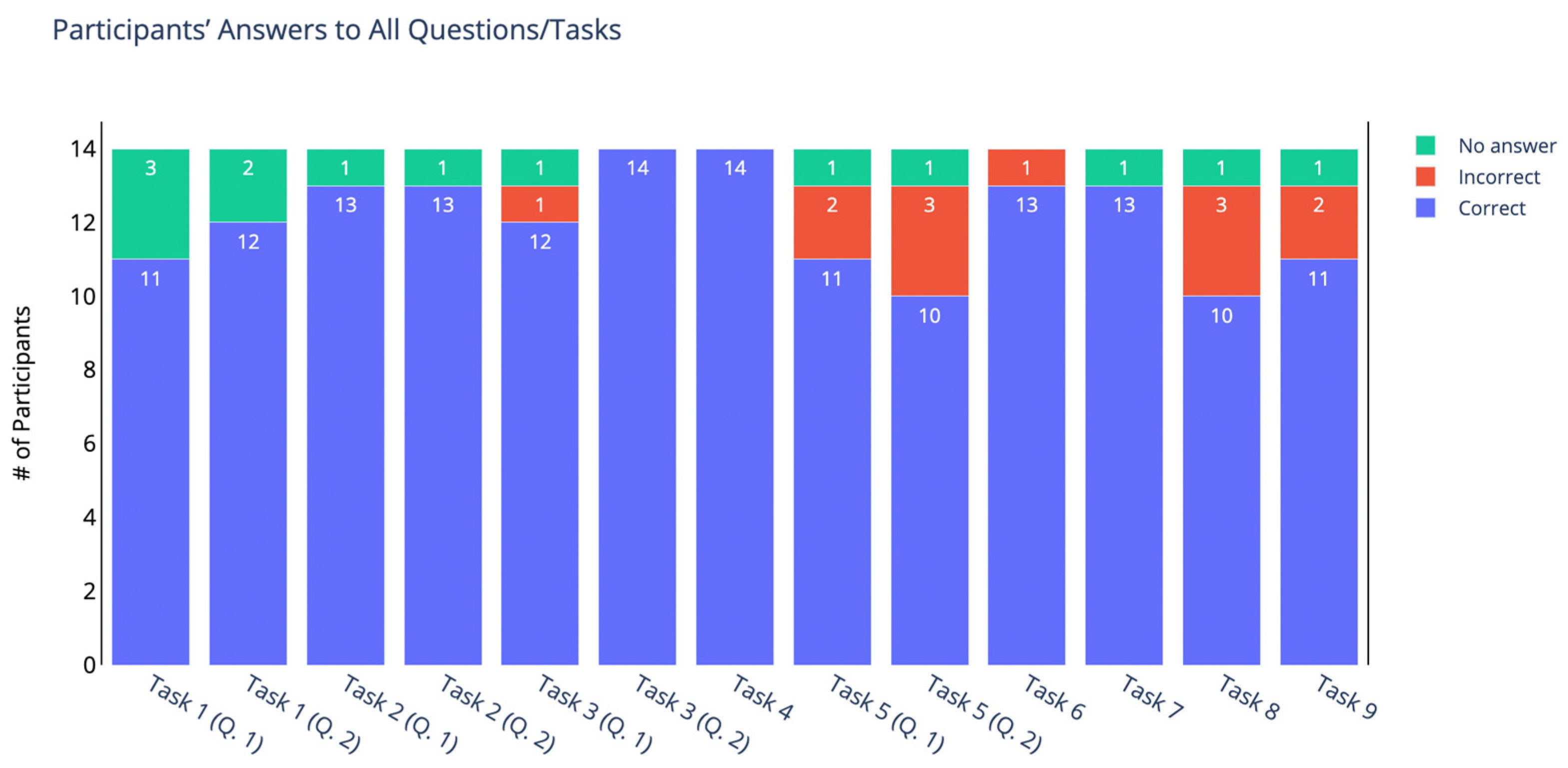

4.3. Outcomes of the Third Step of the HCD: Prototyping and Testing

5. Discussion

5.1. First Step

5.2. Second Step

5.3. Third Step

6. Limitations

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Answ. Q1 | Teacher 1 (school 1): We use different things such as google classroom; we do lot of google forms and Exit ticket to understand where the students are. We use the DLM for 2 years as tool beside the other things. Teacher 2 (school 1): Yes, also, we tried digital math books as complements to the stages where the students can find other tasks to work with, but the students were not comfortable with it, so we have given up on them. We do some amount of GeoGebra online and we try to find online digital materials to help students to balance up the pedagogy. We use whiteboard.fi in the pandemic so we can see the students’ self-writing. Teacher 3 (school 2): We use different google products but google classroom is the base, the DLM and digital books—they don’t work very well, because students and parents are conservative. Teacher 4 (school 2): O-hum [murmurs of confirmation]. |

| Answ. Q1.a | Teacher 1 (school 1): Well, in our school, teachers use it to check out if students watched the movies or to see if they did their home study during the pandemic, but when it comes to the quiz, the teacher can see somehow how the students understand the subject, but sometimes it is quite difficult, and the students just click on an answer while they don’t know the correct answer. The teachers can see which questions are the difficult ones and try to figure out why and which students did not understand it. Teacher 2 (school 1): The teachers also can see a picture of the class, so they can see if they need to do something more. Teacher 3 (school 2): Now the DLM is more like a complement to other stuff (watching movies and nothing more). Same problem of “doing quiz before watching the movie”, so we ask the students to watch the movie once or twice and later do the quiz, so we can see if we should stop if they don’t understand the subject and then we can use the data. If the students do not follow the steps, we can’t rely on the data. Teacher 4 (school 2): O-hum [murmurs of confirmation]. Quite often the students do the quiz before watching the movies which is not a good thing… |

| Answ. Q2 | Teacher 4 (school 2): I think the understanding of the actual word that they need to learn. Also, to see if the students misunderstand a word and where are they—the usual misconception that the students get when they learn a new word. Teacher 2 (school 1): O-hum [murmurs of confirmation]. |

| Answ. Q2.a | Teacher 2 (school 1): We should use different tools and we cannot be relying on just one. The tough part is that by using different tools, the knowledge is quite different and when we narrow it down to just a click, you lose the discussion part with students and we cannot replace that thing with a click, so they must be complemented. Teacher 1 (school 1): Yes, it is important to talk to students. Teacher 3 (school 2): Yes, I think instead of having quiz which has a right or wrong answer, the DLM should add “what do you think about …?” so students can describe their feelings (something like Kahoot to check in if the students know a subject), and teachers can have better view about all the students in the class and categorize them. |

| Answ. Q2.b | Teacher 1 (school 1): We do it in the classroom during the work by walking around the classroom and checking the progress, so the students ask a lot of questions and by talking a lot with the students. Teacher 2 (school 1): Yes. Teacher 3 (school 2): We mark the text immediately when students write in the digital way. Teacher 4 (school 2): O-hum [murmurs of confirmation]. |

| Answ. Q2.c | Teacher 4 (school 2): We use different types of quick questions during the session to check and know how much they understand. We usually end up a session with a test. Teacher 3 (school 2): Yes, exactly. Teacher 2 (school 1): The students should write different types of text, so I can see how much they learned from the movies that they apply to the text later. They can write in digital way or on the paper, but for reading, this is a process that I can see during the time by test and seminars. |

| Answ. Q3 | Teacher 1 (school 1): M-mm [murmurs of thought]. There are a lot of data to analyze, and it is so interesting. It is something that you can even discuss with the kids and show them. Teacher 2 (school 1): I find it useful too! Teacher 3 (school 2): It is interesting, and I can evaluate my students on a deeper level and follow them. Teacher 4 (school 2): That could be absolutely useful! |

| Answ. Q4 | Teacher 3 (school 2): The visualization that shows how much a student used a system is the one that I will use to check how they are interacting. Teacher 1 (school 1): I think having something to show how active students are when they watch a movie is useful because in distance, students play a movie but at the same time play game, so in this way, just one click doesn’t mean anything. Teacher 4 (school 2): I like to see the progress of students—means how many incorrect answers does the student make and what is the end result. Teacher 2 (school 1): Yes, exactly, to see if they are getting better, so you can follow students individually and help them increase their confidence. Teacher 4 (school 2): Definitely! |

| Answ. Q5 | Teacher 1 (school 1): Well, the amount of time that we have doesn’t allow us to really focus on students individually, so seeing group-level data activity is more important for me. Teacher 2 (school 1): I agree, group first and later students individually. Teacher 3 (school 2): In some weeks we do not watch the DLM’s videos, but in some weeks, we assign lots of videos to students, so pie chart is more meaningful for me to have a conclusion. Teacher 4 (school 2): I want to have a plot which shows the number of interactions per subject because maybe I can find the videos on a subject that students have problem with them |

| Answ. Q1 | Teacher 1: If teachers don’t have to add information, it’s more appealing to the teacher. Teacher 2: You can go in and see “wops! I thought this student was further along in their progress!” then I think the design of the dashboard is much easier and useful. it’s much clearer for the teachers then they get results and how a student’s performance is at different times. Teacher 3: I thought it was easy, good layout. I already have some information on the DLM’s platform. If I assign an assignment, I can see the response frequency for students and the whole class, and this is a more detailed view of that. |

| Answ. Q2 | Teacher 1: I think so, I use google in my classroom and then I can get diagrams. I can get an overview too. you must get used to the system. I think all info I need is there in the dashboard. There is nothing I see that should be added or removed. Teacher 2: I don’t know if the DLM provides data about the option “easy read” they have in their DLM. It would be interesting to see that on a student level. As a teacher you know if a student has a reading problem, it would be nice to see if this “easy read” option had been activated during an assignment. Because that is the first adjustment we make if a student display problem. Would be nice to see if students use text to speech in assignments depending on their needs. You want to know if the student understands the question in math. In a data point, is there a problem with the solution or a problem with understanding the text? is it a mathematical problem or a problem with language? Teacher 3: One thing that would be interesting is how many times students answered a question, whether they read a lot before or answered right away and got it wrong, there after reading the text quickly, maybe got it wrong again, then read it carefully and got it right. It would be interesting to see the student’s activity in such a way. |

| Answ. Q3 | Teacher 1: It depends on the assignment. If there is a heavy text which they should read and then answer a lot of questions or write something, then you might be able to see it using the sketches. Or if you watch a documentary and pick out important thing. The assignment varies each day, they do different things every day. I think it is like that in every subject, for example in Swedish. If they have a writing assignment, then it takes a long time, and they work creatively for a long period in their writing. Then there is the next task which is spelling or grammar and such. Teacher 2: The data come from the DLM. Then the teachers must feel comfortable with the material. Most teachers work according to some model. Me and my colleague work a lot with the circle model, where the whole group practice together, then a small group or in pairs then they work individually. So how can the companies adjust their DLM so that it fits with such a teaching model, if they can, then you would automatically get good data over students’ progress. Maybe we can contact the Edtech company and ask them and be a part of the technical solutions for the DLM. I think that there is a key in the intersection between the teachers and the DLM companies, if they can find the right way together, if the companies can give encouragement and tips on programs/assignments and if the teachers can try to see possibilities and can communicate. We would need more of these interviews, it is another process in the teaching. Teacher 3: Yes, absolutely! |

| Answ. Q4 | Teacher 1: It feels useful. The dashboard can show information about class and student differences. Teacher 2: It was just on DISCIPLINE that it would be good to be able to pick both class and student. Under STUDENT I would like to select subject, class, and student there because if I have a student who is failing math, I would like to see what it looks like in other subjects. DISCIPLINE must show which class is displayed. Teacher 3: Maybe also compare student performance in different subjects but also compare with the class. |


| Participants | Subject of Teaching | Teacher Gender | Teacher Age Group | Student Grade |
|---|---|---|---|---|
| 1 | Mathematics and natural science | Female | 40–45 | 6–9 |
| 2 | Mathematics and natural science | Male | 55–60 | 6–9 |
| 3 | Swedish and Swedish as a second language | Female | 40–45 | 6–9 |
| 4 | Mathematics and chemistry | Male | 40–45 | 6–9 |

| Participants | Subject of Teaching | Teacher Gender | Teacher Age Group | Student Grade |
|---|---|---|---|---|
| 1 | Mathematics | Male | 50–55 | 4–6 |
| 2 | History and Civics | Male | 40–45 | 4–6 |
| 3 | Mathematics | Female | 40–45 | 4–6 |

Presentation of the researchers and field of research: 5 min. Interview guide: 5 min. Show the visualization tool: 10 min. Ask the questions below: 40 min.

Questions:

Explain the prototype to the teachers: 5 min. Ask the questions below: 15 min.

Questions:

Explain the interactive prototype: 5 min. Carry out 9 tasks and write the answers on paper: 20 min. Transfer the answers to Mentimeter: 5 min. Reflection: 10 min.

Questions:

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mohseni, Z.; Masiello, I.; Martins, R.M. Co-Developing an Easy-to-Use Learning Analytics Dashboard for Teachers in Primary/Secondary Education: A Human-Centered Design Approach. Educ. Sci. 2023, 13, 1190. https://doi.org/10.3390/educsci13121190
