SMART: Selection Model for Assessment Resources and Techniques

Abstract

1. Introduction

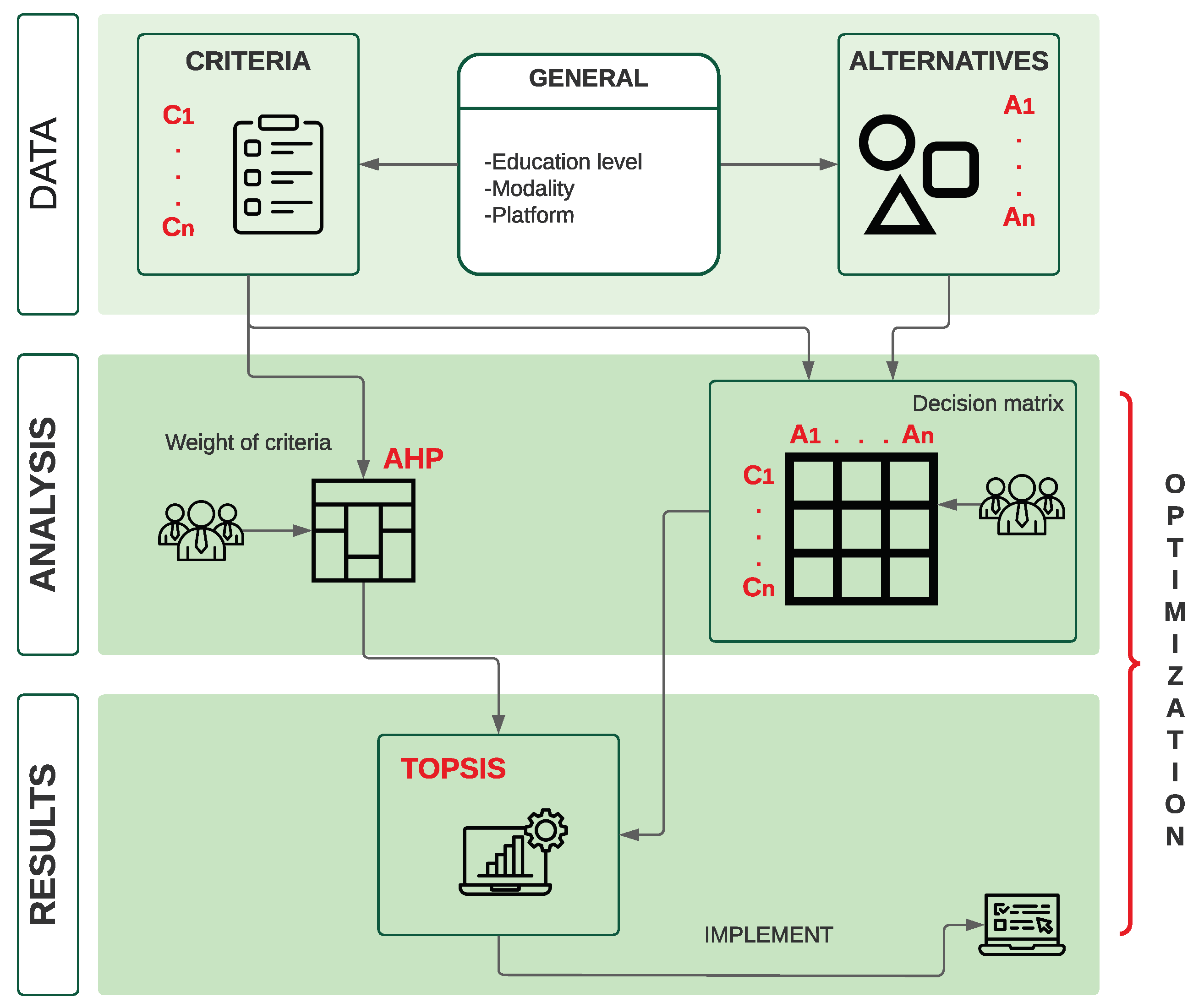

2. Methodology

2.1. Data Phase

- The criteria are the most important indicators considered when evaluating the alternatives. They can be quantitative or qualitative and can be organized into main criteria (categories), subcriteria, etc. For the problem analysed in this paper, for example, four categories are involved: students, teachers, the subject, and the activity itself. Each category comprises a set of indicators to be evaluated.

- The alternatives are the different options involved in decision making. In this model, the alternatives correspond to the different assessment activities being analysed.

2.2. Analysis Phase

2.2.1. AHP

- 1.

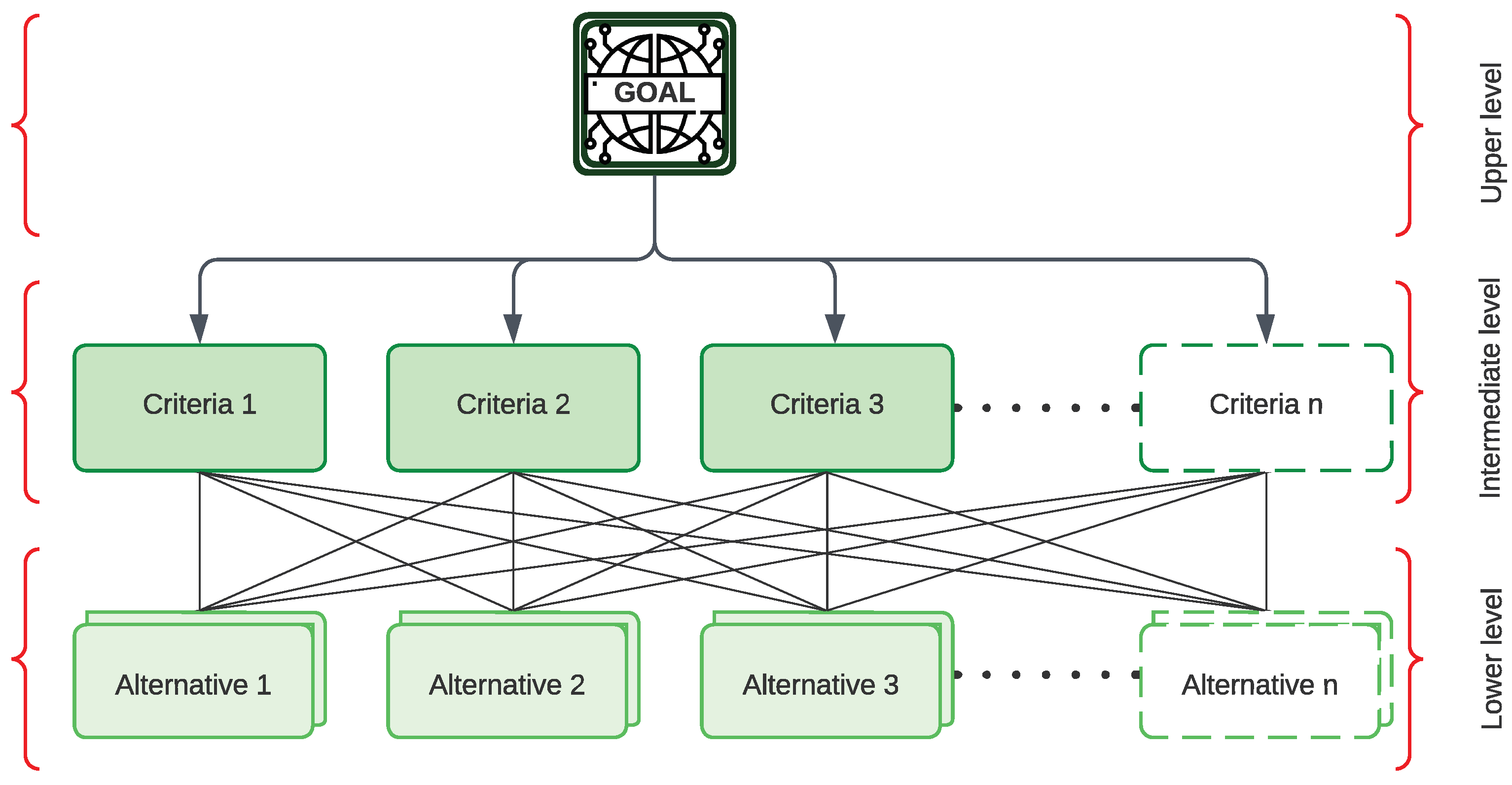

- Design the hierarchical model of the problem. In this step, the problem is modelled with a three-level hierarchical structure, encompassing the goal or objective, criteria, and alternatives; see Figure 2.

- 2.

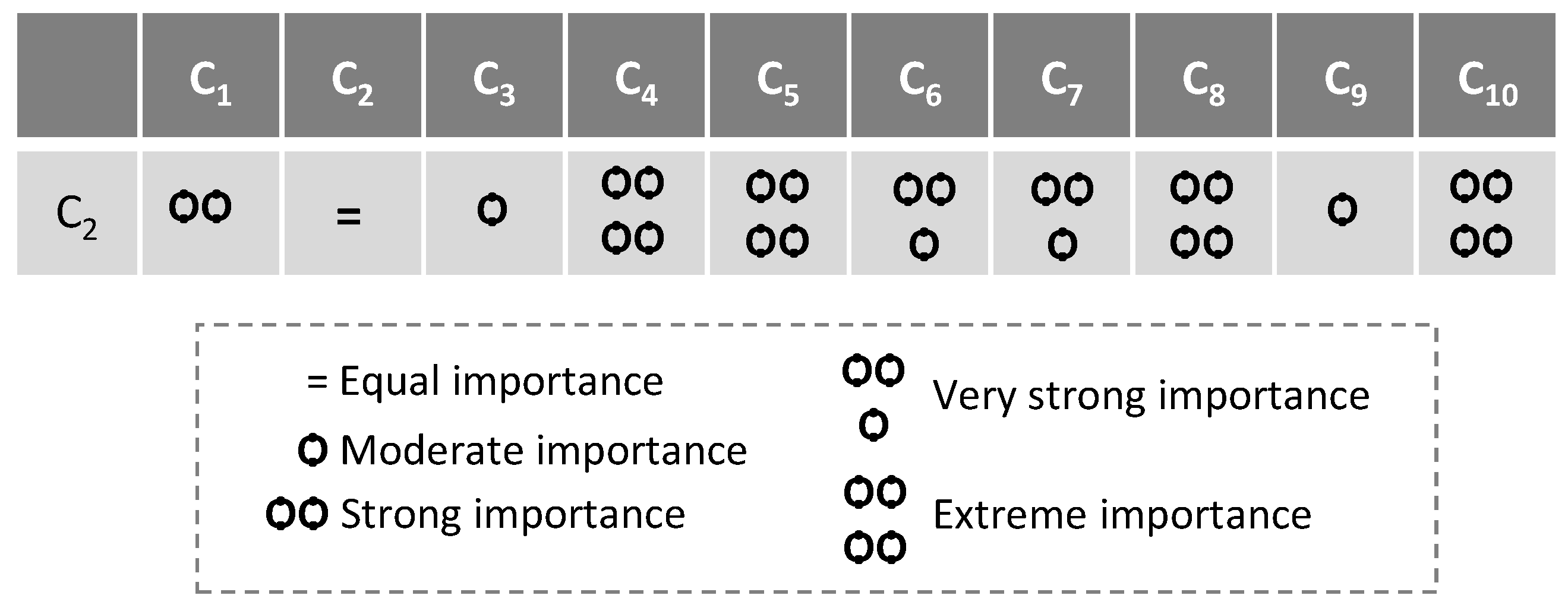

- Assignment and assessment of priorities. The objective of this step is to obtain the weight of the criteria based on the evaluation of the criteria: it can be performed with a scale directly or indirectly through the comparison between pairs of criteria where the different priorities are compared in a matrix R. Each piece of data is a positive numeric value that determines the relative priority between the row criterion compared to the column criterion; see Table 1.
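Step 2 can be sketched in Python as follows. This is a minimal sketch, assuming the row geometric mean method to derive the priority vector from R and Saaty's consistency ratio (CR = CI/RI, with the usual random-index values) to check the judgements. The section does not state which derivation or consistency measure SMART uses; the principal-eigenvector method and the geometric consistency index are common alternatives. The function names and the 3x3 example matrix are hypothetical.

```python
import math

# Saaty's random consistency index RI for matrices of order n = 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_priorities(r):
    """Priority vector of a pairwise comparison matrix R (row geometric mean method)."""
    n = len(r)
    if any(len(row) != n for row in r):
        raise ValueError("the pairwise comparison matrix R must be square")
    # Geometric mean of each row, then normalize so the weights sum to 1.
    geo = [math.prod(row) ** (1.0 / n) for row in r]
    total = sum(geo)
    return [g / total for g in geo]


def consistency_ratio(r, w):
    """Saaty's consistency ratio CR = CI / RI, estimating lambda_max from R w."""
    n = len(r)
    if n <= 2:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    rw = [sum(r[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(rw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# Hypothetical, perfectly consistent 3x3 matrix using values from the Table 1 scale.
R = [[1, 3, 9],
     [1 / 3, 1, 3],
     [1 / 9, 1 / 3, 1]]
w = ahp_priorities(R)       # approximately [0.692, 0.231, 0.077], i.e. 9/13, 3/13, 1/13
cr = consistency_ratio(R, w)  # approximately 0 for a perfectly consistent matrix
```

Saaty's usual rule of thumb is to accept the judgements when CR is below 0.10 and to revise the comparisons otherwise.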

2.3. Decision Matrix

- $A_i$: alternatives, $i = 1, \ldots, m$;

- $C_j$: criteria, $j = 1, \ldots, n$;

- $v_{ij}$: evaluation of alternative $A_i$ with reference to criterion $C_j$;

- $W = (w_1, \ldots, w_n)$: vector of weights associated with the criteria, obtained according to Section 2.2.1.
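The decision matrix and weight vector defined above can be held in a small container that checks their dimensions before any ranking method is applied. The sketch below is a hypothetical Python structure (the class and field names are not from the source); it also records, for each criterion, whether it is maximized or minimized, which a ranking method such as TOPSIS needs. The example values are the A1 and A2 ratings and weights for three of the ten criteria of the case study.

```python
from dataclasses import dataclass


@dataclass
class DecisionMatrix:
    """Ratings v_ij of alternatives A_i (rows) against criteria C_j (columns)."""

    alternatives: list[str]    # A_1, ..., A_m
    criteria: list[str]        # C_1, ..., C_n
    values: list[list[float]]  # values[i][j] = v_ij
    weights: list[float]       # W = (w_1, ..., w_n)
    maximize: list[bool]       # True if C_j is maximized, False if minimized

    def __post_init__(self) -> None:
        m, n = len(self.alternatives), len(self.criteria)
        if len(self.values) != m or any(len(row) != n for row in self.values):
            raise ValueError(f"values must be an {m} x {n} matrix")
        if len(self.weights) != n or len(self.maximize) != n:
            raise ValueError("exactly one weight and one objective per criterion")
        if any(w < 0 for w in self.weights):
            raise ValueError("weights must be non-negative")

    def rating(self, alternative: str, criterion: str) -> float:
        """Return v_ij for a named alternative A_i and criterion C_j."""
        i = self.alternatives.index(alternative)
        j = self.criteria.index(criterion)
        return self.values[i][j]


# Excerpt of the case-study data: two alternatives, three of the ten criteria.
example = DecisionMatrix(
    alternatives=["A1", "A2"],
    criteria=["Specific competencies", "Complexity in preparing the activity",
              "Integration with the platform"],
    values=[[70, 4, 5],
            [80, 5, 5]],
    weights=[0.3127, 0.0204, 0.0405],
    maximize=[True, False, True],
)
```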

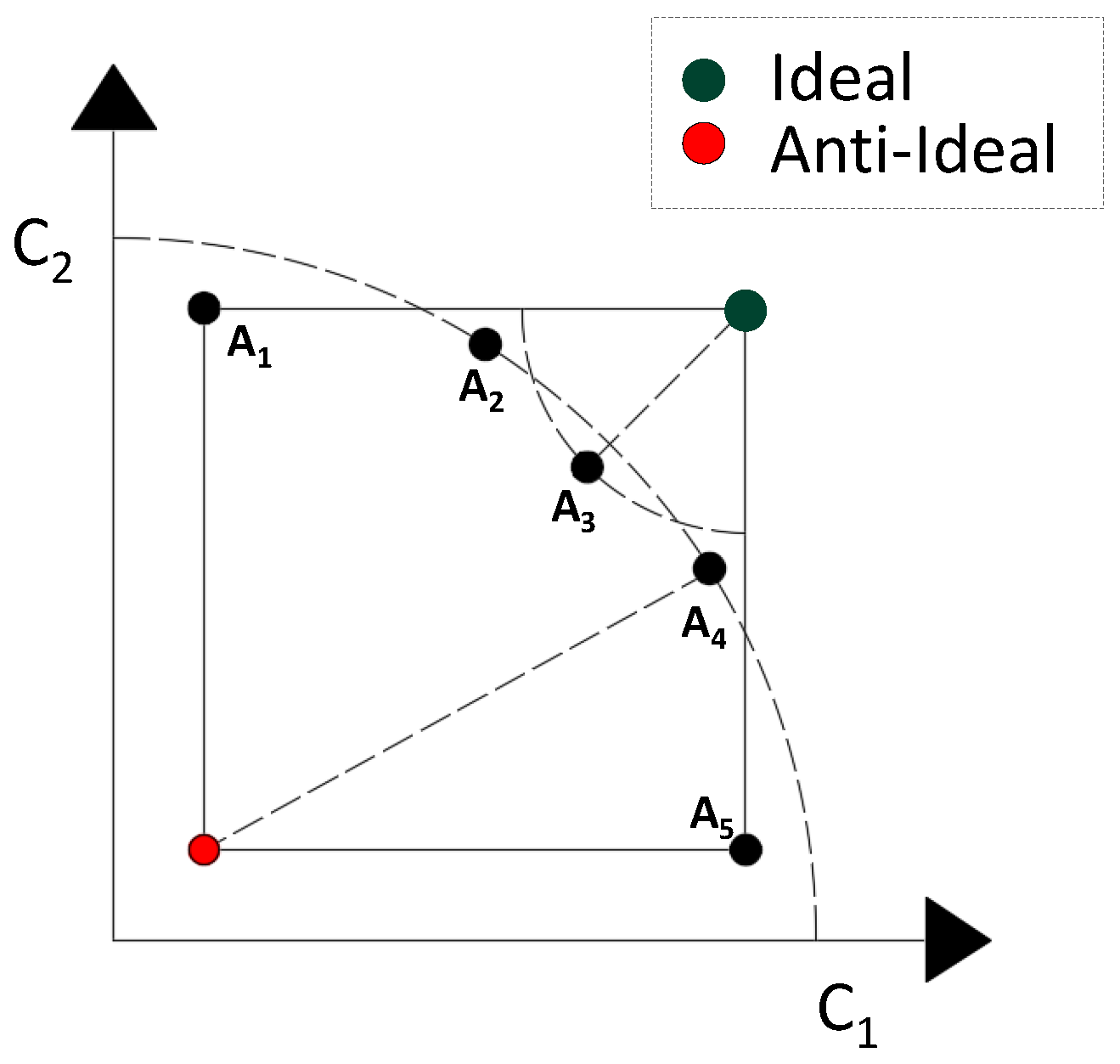

2.4. Results Phase: TOPSIS

- Construction of the decision matrix.

- Normalization of the decision matrix.

- Construction of the normalized weighted matrix.

- Determination of the positive and negative ideal solutions.

- Calculation of the distance from each alternative to the positive and negative ideal solutions.

- Calculation of the relative proximity of each alternative to the positive ideal solution.

- Ordering of the alternatives according to their relative proximity.
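The seven steps above can be sketched in Python as below. This is a minimal sketch, assuming vector normalization ($r_{ij} = v_{ij} / \sqrt{\sum_i v_{ij}^2}$) and Euclidean distances, as in the classic TOPSIS formulation; the list above does not spell out the normalization formula, so numbers produced by this sketch need not coincide with the tables. The function names and toy data are hypothetical; `maximize[j]` marks a benefit criterion (maximize) as opposed to a cost criterion (minimize).

```python
import math


def topsis(values, weights, maximize):
    """Return (d_plus, d_minus, closeness), one entry per alternative (row)."""
    m, n = len(values), len(values[0])
    # Step 2: vector normalization of each criterion column.
    norms = [math.sqrt(sum(values[i][j] ** 2 for i in range(m))) for j in range(n)]
    # Step 3: weighted normalized matrix t_ij = w_j * v_ij / norm_j.
    t = [[weights[j] * values[i][j] / norms[j] for j in range(n)] for i in range(m)]
    # Step 4: positive ideal takes the best value per column, negative ideal the worst.
    cols = list(zip(*t))
    ideal = [max(c) if maximize[j] else min(c) for j, c in enumerate(cols)]
    anti_ideal = [min(c) if maximize[j] else max(c) for j, c in enumerate(cols)]
    # Step 5: Euclidean distance of each alternative to both ideal solutions.
    d_plus = [math.dist(row, ideal) for row in t]
    d_minus = [math.dist(row, anti_ideal) for row in t]
    # Step 6: relative closeness to the positive ideal solution (1 = ideal).
    closeness = [dm / (dp + dm) for dp, dm in zip(d_plus, d_minus)]
    return d_plus, d_minus, closeness


def rank(names, closeness):
    """Step 7: order alternatives from highest to lowest relative closeness."""
    order = sorted(range(len(names)), key=lambda i: closeness[i], reverse=True)
    return [names[i] for i in order]


# Hypothetical toy problem: C1 is maximized, C2 (e.g. preparation effort) is minimized.
names = ["X", "Y", "Z"]
values = [[4, 2],
          [2, 1],
          [4, 1]]
d_plus, d_minus, closeness = topsis(values, weights=[0.5, 0.5], maximize=[True, False])
print(rank(names, closeness))  # ['Z', 'Y', 'X']
```

Multiplying every weight by the same positive constant scales both distances equally, so the relative closeness and the ranking do not change. The sketch assumes that no criterion column is all zeros and that no alternative coincides with both ideal solutions at once, since either case would cause a division by zero.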

3. Model Evaluation

3.1. Data

3.1.1. Criteria

3.1.2. Alternatives

- A1: Moodle assignment in which students, following the instructions given in the assignment itself (activity content, format, structure, etc.), submit a report they have prepared individually. The activity has set submission dates. Completing it requires advanced use of several programs: a word processor, a spreadsheet for statistical analysis and graphs, and the technical software prescribed for the subject. The activity is graded with a points rubric, with individual feedback for each section and general feedback on the activity as a whole.

- A2: Same type as A1, but carried out in groups. The lecturer forms the work groups beforehand, the activity content is much more extensive, and grading is done per group, again with a rubric and with partial and overall feedback.

- A3: Moodle questionnaire. It consists of blocks of questions of different types (multiple choice, true/false, single choice, matching, short calculations, fill-in-the-blank text, etc.). Once it is completed, students can see their grade with brief feedback, as well as their responses. The teaching staff builds the questionnaire by randomly selecting questions from a question bank.

- A4: Moodle lesson. A set of pages containing different types of information, with associated questions of different kinds (essays, multiple choice, true/false, single choice, etc.). Navigation between pages can follow different paths depending on the student's responses. At the end of the lesson, the student sees brief feedback and their grade.

- A5: Moodle workshop. As in the report assignments, students submit a report according to the lecturer's specifications. Grading is carried out by the students themselves, using a rubric designed by the teacher: self-assessment accounts for 20% of the grade and peer assessment for 80%.

- A6

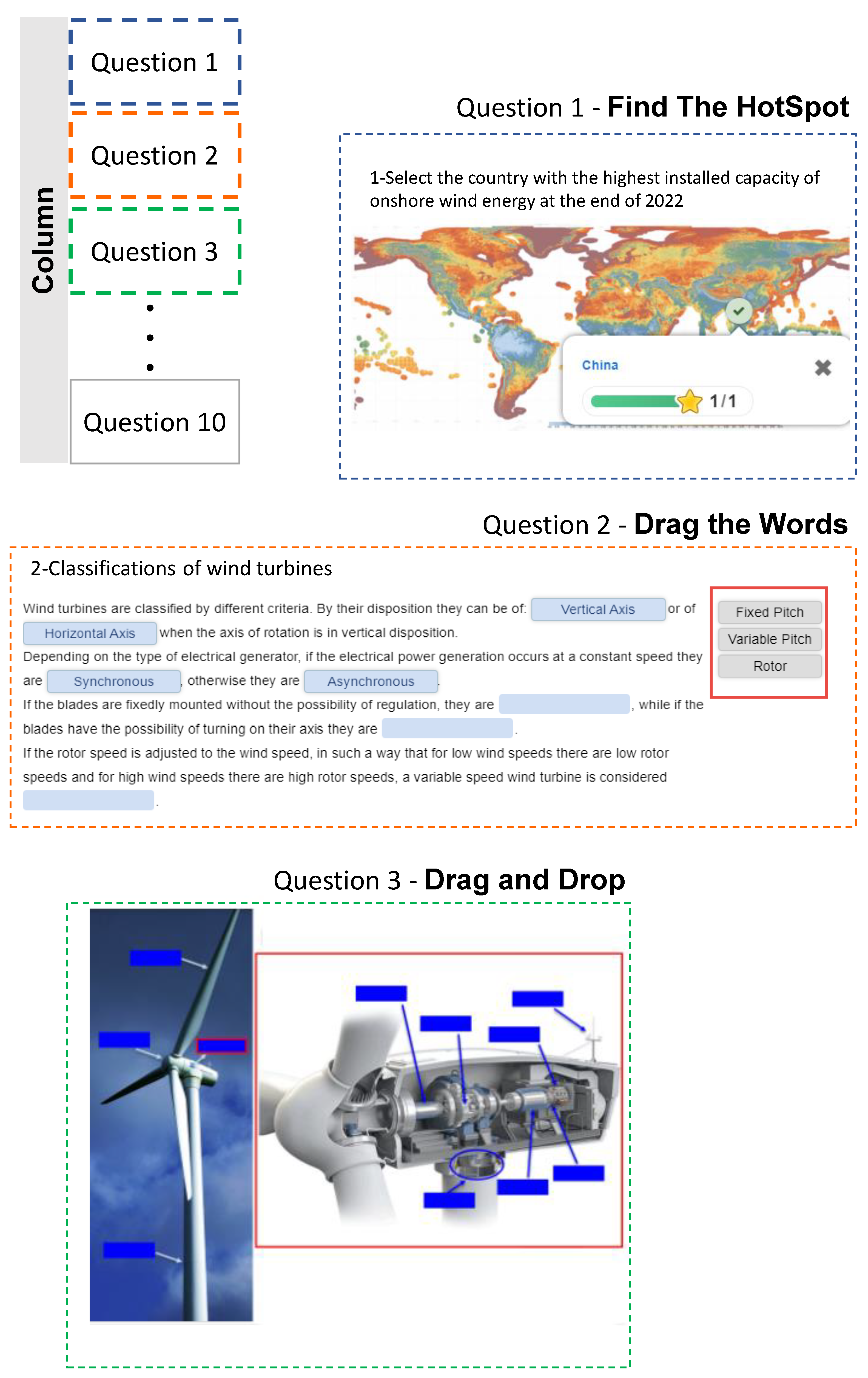

- H5P activity. The H5P plugin is installed on the Moodle platform; therefore, the activity and grading are contained in the classroom. Its composition is a multicolumn object made up of different types of activities: fill-in texts, drag images or texts, select in an image, etc.; see Figure 4. Students receive the grade immediately after finishing the activity; the questions include a review and editing before ending the activity. The design of the activity by the teacher has required prior learning of the different objects to be used.

- A7: Moodle forum. Discussion of a topic from the subject: students can start a thread, and the others reply in the different threads, whether in agreement or not. At the end of the activity, the teacher grades both types of contribution (starting threads and replying) using a rubric, and the grade is recorded in the grade book.

- A8: Moodle glossary. Students collaboratively compile a list of definitions on a topic specified by the teacher. The teacher records the grade in the grade book.

- A9: Moodle database. Students create a technical data sheet for a given technology, using the fields defined by the teacher. The teacher records the grade in the grade book.

- A10: Activity built with Genially. It is not integrated into the Moodle platform but runs on an external website. It is an interactive escape-room activity carried out in groups and made up of several challenges, each of which yields a number; see Figure 5. The escape room ends once a group has obtained the complete sequence of numbers. The teacher records each group's grade in the grade book according to the time the group took to solve the activity.
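For the workshop alternative (A5), the stated 20%/80% split between self-assessment and peer assessment can be illustrated with a small calculation. This is a hypothetical sketch: the text does not say how several peer assessments are combined, so the function assumes they are averaged before weighting, and it does not reproduce the grade calculation built into Moodle's workshop module. The function name and scores are illustrative only.

```python
def workshop_grade(self_score, peer_scores, self_weight=0.20, peer_weight=0.80):
    """Combine one self-assessment and several peer assessments into a final grade.

    Assumption: peer scores are averaged (arithmetic mean) before weighting.
    """
    if not peer_scores:
        raise ValueError("at least one peer assessment is required")
    peer_mean = sum(peer_scores) / len(peer_scores)
    return self_weight * self_score + peer_weight * peer_mean


# Hypothetical scores on a 0-10 scale: self 8, peers 6, 7 and 8 (mean 7).
grade = workshop_grade(8.0, [6.0, 7.0, 8.0])  # 0.2 * 8 + 0.8 * 7, about 7.2
```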

4. Analysis

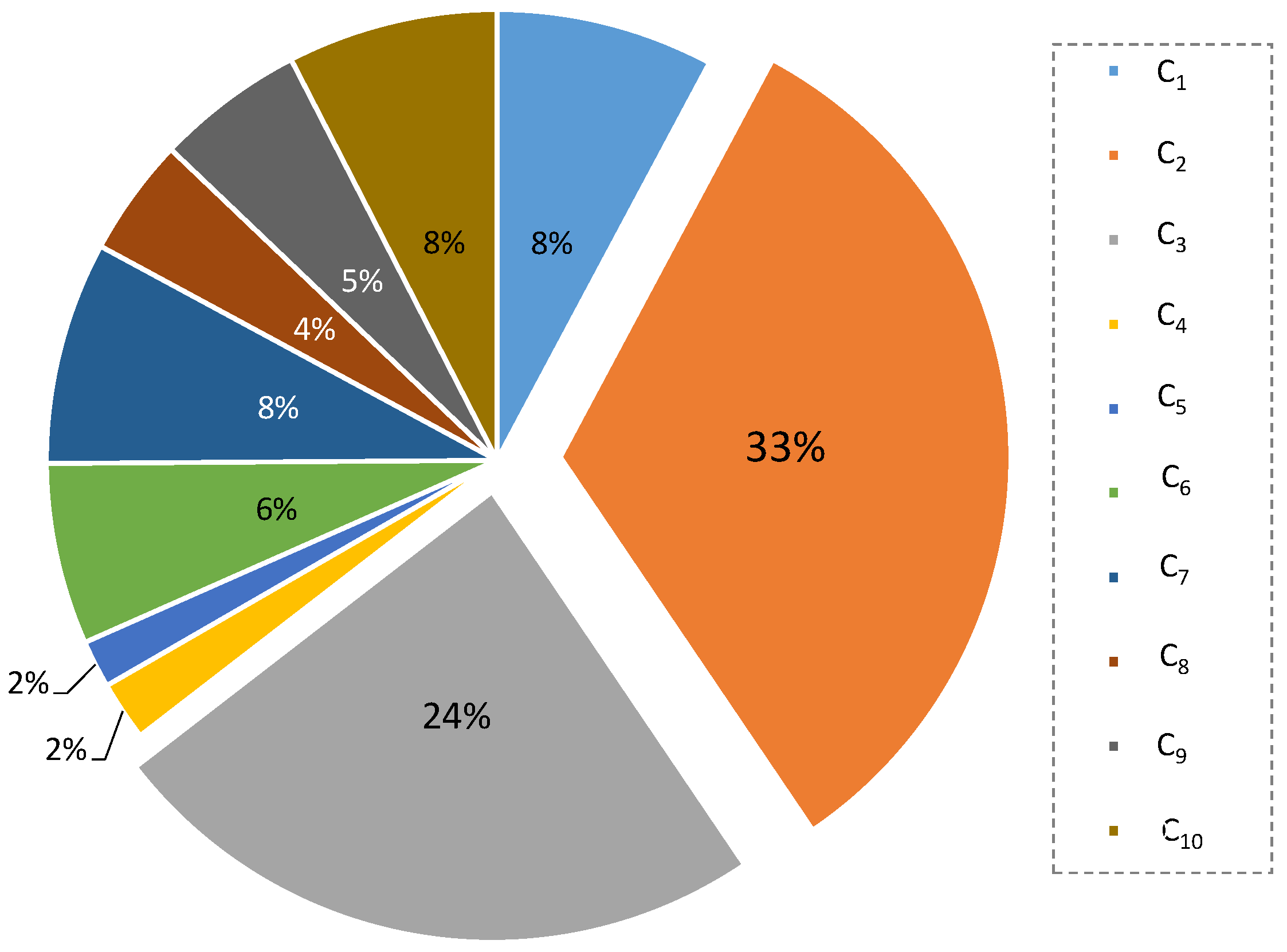

4.1. Criteria Weights

4.2. Decision Matrix

5. Results

5.1. Discussion

- Understanding which activities are best suited to assessment given the specific criteria and educational context. In this case study, the report assignments (group and individual), the workshop, the complex H5P activity, and the questionnaire ranked highest. However, with other criteria or in other educational contexts, traditional methods could also be suitable.

- Understanding tradeoffs between different activity features based on the criteria evaluations.

- Potentially saving preparation and grading time by replacing the lower-ranked activities identified by the model.

- Using ranked activity data to provide teachers/lecturers with standardized recommendations or resources for assessments.

- Allocating educational technology budgets based on the activity ranking given by the model (e.g., tools for creating simulations).

- Establishing faculty training priorities around highly ranked activities, if skill gaps exist.
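The ranking behind these points follows directly from the relative closeness values in the last column of the final results table: sorting them in descending order gives the five highest-ranked activities. A minimal Python sketch, with the values copied from that table:

```python
# Relative closeness to the positive ideal solution, copied from the last column
# of the final results table (alternatives A1..A10).
closeness = {
    "A1": 0.7248, "A2": 0.8628, "A3": 0.6086, "A4": 0.4545, "A5": 0.6282,
    "A6": 0.6127, "A7": 0.2491, "A8": 0.1090, "A9": 0.1067, "A10": 0.4096,
}

# Highest closeness first.
ranking = sorted(closeness, key=closeness.get, reverse=True)
print(ranking[:5])  # ['A2', 'A1', 'A5', 'A6', 'A3']
```

A2, A1, A5, A6 and A3 are, respectively, the group report, the individual report, the workshop, the complex H5P activity and the questionnaire.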

5.2. Limitations of the Study and Future Works

- Although all the activities analysed were carried out during the course described, it would be worth confirming whether students' grades improve when the five top-ranked alternatives are used.

- Only instructor and activity factors were evaluated. Students’ preferences and perspectives could be incorporated into the SMART methodology by including student-related criteria in the criteria set used for evaluation, or by conducting a student survey to identify highly rated or preferred activities and using these as the activities (alternatives) for the SMART method.

- Analysing the results using other combinations of MCDM techniques. For instance, the entropy method (an objective method), the analytic network process (ANP), or the best worst method (BWM) could be used to weight the criteria. Likewise, VIKOR (VIseKriterijumska Optimizacija I Kompromisno Resenje, i.e., multicriteria optimization and compromise solution), among other methods, could be used to rank the alternatives, and the results could then be compared with those of ELECTRE (ÉLimination Et Choix Traduisant la REalité, i.e., elimination and choice translating reality), which can be used to eliminate the least favourable options.
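As an illustration of the first option, the entropy method derives objective weights from how much each criterion column of the decision matrix varies across the alternatives: the more dispersed the ratings, the larger the weight. The sketch below is not part of SMART; it is a minimal illustration assuming the standard entropy formulas ($p_{ij} = v_{ij} / \sum_i v_{ij}$, $E_j = -\frac{1}{\ln m} \sum_i p_{ij} \ln p_{ij}$, $w_j \propto 1 - E_j$), at least two alternatives, strictly positive ratings, and at least one non-constant column. The function name is hypothetical, and no resulting weights are claimed here.

```python
import math


def entropy_weights(values):
    """Objective criteria weights by the entropy method (assumes every v_ij > 0)."""
    m, n = len(values), len(values[0])
    k = 1.0 / math.log(m)  # scales each entropy E_j into the range [0, 1]
    divergence = []
    for j in range(n):
        col_sum = sum(values[i][j] for i in range(m))
        p = [values[i][j] / col_sum for i in range(m)]
        e_j = -k * sum(p_ij * math.log(p_ij) for p_ij in p)
        divergence.append(1.0 - e_j)  # degree of diversification d_j = 1 - E_j
    total = sum(divergence)
    return [d / total for d in divergence]


# Decision matrix of the case study (rows A1..A10, columns C1..C10).
V = [
    [45, 70, 80, 4, 5, 2, 1, 5, 2, 5],
    [48, 80, 90, 5, 5, 2, 5, 5, 3, 5],
    [35, 50, 95, 5, 1, 3, 1, 5, 2, 4],
    [30, 45, 60, 5, 1, 4, 1, 5, 3, 4],
    [48, 55, 70, 5, 4, 3, 5, 5, 4, 5],
    [40, 60, 65, 5, 1, 5, 1, 5, 4, 4],
    [20, 25, 35, 2, 3, 2, 5, 5, 2, 3],
    [18, 20, 20, 2, 1, 2, 1, 5, 2, 2],
    [15, 20, 18, 2, 1, 2, 1, 5, 2, 2],
    [25, 45, 45, 5, 4, 5, 3, 1, 4, 4],
]
print([round(w, 4) for w in entropy_weights(V)])
```

Comparing such objective weights with the AHP weights could indicate how strongly the subjective judgements drive the final ranking.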

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AHP | Analytic Hierarchy Process |
| ANP | Analytic Network Process |
| BWM | Best Worst Method |
| EHEA | European Higher Education Area |
| ELECTRE | ÉLimination Et Choix Traduisant la REalité |
| ICTs | Information and Communication Technologies |
| MCDM | Multicriteria Decision Making |
| SMART | Selection Model for Assessment Resources and Techniques |
| TOPSIS | Technique for Order of Preference by Similarity to Ideal Solution |
| VIKOR | VIseKriterijumska Optimizacija I Kompromisno Resenje |


| Scale | Verbal Scale | Explanation |
|---|---|---|
| 1 | Equal importance | Two criteria contribute equally to the objective |
| 3 | Moderate importance | Experience and judgement favour one criterion over another |
| 5 | Strong importance | One criterion is strongly favoured |
| 7 | Very strong importance | One criterion is very dominant |
| 9 | Extreme importance | One criterion is favoured by at least one order of magnitude of difference |

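$$
\begin{array}{c|cccccc}
 & C_1 & C_2 & \cdots & C_j & \cdots & C_n \\
 & w_1 & w_2 & \cdots & w_j & \cdots & w_n \\
\hline
A_1 & v_{11} & v_{12} & \cdots & v_{1j} & \cdots & v_{1n} \\
A_2 & v_{21} & v_{22} & \cdots & v_{2j} & \cdots & v_{2n} \\
\vdots & \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\
A_i & v_{i1} & v_{i2} & \cdots & v_{ij} & \cdots & v_{in} \\
\vdots & \vdots & \vdots & \ddots & \vdots & \ddots & \vdots \\
A_m & v_{m1} & v_{m2} & \cdots & v_{mj} & \cdots & v_{mn}
\end{array}
$$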

| Category | Criterion | ID |
|---|---|---|
| Subject | General and transversal competencies | C1 |
| | Specific competencies | C2 |
| | Learning outcomes | C3 |
| Lecturer | Complexity in preparing the activity | C4 |
| | Grading of the activity | C5 |
| Activity | Use of new technologies | C6 |
| | Encourages collaborative learning | C7 |
| | Integration with the platform | C8 |
| Student | Degree of difficulty to perform the activity | C9 |
| | Feedback from the teaching team | C10 |

| Activities | ID |
|---|---|
| Assignment: Report (Individual) | A1 |
| Assignment: Report (Group) | A2 |
| Questionnaire | A3 |
| Lesson | A4 |
| Workshop | A5 |
| Complex H5P Activity | A6 |
| Forums | A7 |
| Glossary | A8 |
| Databases | A9 |
| Genially: Escape Room case | A10 |

| | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 1 | 1/5 | 1/3 | 3 | 3 | 3 | 1/3 | 3 | 3 | 1/5 |
| C2 | 5 | 1 | 3 | 9 | 9 | 7 | 7 | 9 | 9 | 3 |
| C3 | 3 | 1/3 | 1 | 9 | 9 | 5 | 5 | 5 | 7 | 3 |
| C4 | 1/3 | 1/9 | 1/9 | 1 | 3 | 1/5 | 1/3 | 1/3 | 1/3 | 1/7 |
| C5 | 1/3 | 1/9 | 1/9 | 1/3 | 1 | 1/3 | 1/5 | 1/5 | 1/5 | 1/7 |
| C6 | 1/3 | 1/7 | 1/5 | 5 | 3 | 1 | 1/3 | 3 | 3 | 1/3 |
| C7 | 3 | 1/7 | 1/5 | 3 | 5 | 3 | 1 | 3 | 3 | 1/3 |
| C8 | 1/3 | 1/9 | 1/5 | 3 | 5 | 1/3 | 1/3 | 1 | 3 | 1/3 |
| C9 | 1/3 | 1/9 | 1/7 | 3 | 5 | 1/3 | 1/3 | 1/3 | 1 | 1/5 |
| C10 | 5 | 1/3 | 1/3 | 7 | 7 | 3 | 3 | 3 | 5 | 1 |

| | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 1 | 1/7 | 1/7 | 3 | 3 | 1/3 | 1/3 | 3 | 1/3 | 1/3 |
| C2 | 7 | 1 | 3 | 9 | 9 | 5 | 5 | 7 | 3 | 3 |
| C3 | 7 | 1/3 | 1 | 9 | 9 | 5 | 5 | 7 | 3 | 3 |
| C4 | 1/3 | 1/9 | 1/9 | 1 | 3 | 1/5 | 1/5 | 1/3 | 1/5 | 1/5 |
| C5 | 1/3 | 1/9 | 1/9 | 1/3 | 1 | 1/5 | 1/3 | 1/3 | 1/5 | 1/3 |
| C6 | 3 | 1/5 | 1/5 | 5 | 5 | 1 | 1/3 | 3 | 1/3 | 1/5 |
| C7 | 3 | 1/5 | 1/5 | 5 | 3 | 3 | 1 | 3 | 1/3 | 3 |
| C8 | 1/3 | 1/7 | 1/7 | 3 | 3 | 1/3 | 1/3 | 1 | 1/3 | 1/3 |
| C9 | 3 | 1/3 | 1/3 | 5 | 5 | 3 | 3 | 3 | 1 | 3 |
| C10 | 3 | 1/3 | 1/3 | 5 | 3 | 5 | 1/3 | 3 | 1/3 | 1 |

| | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 1 | 1/3 | 1/3 | 9 | 9 | 5 | 3 | 3 | 5 | 5 |
| C2 | 3 | 1 | 3 | 9 | 9 | 9 | 7 | 7 | 9 | 3 |
| C3 | 3 | 1/3 | 1 | 9 | 9 | 7 | 5 | 5 | 7 | 5 |
| C4 | 1/9 | 1/9 | 1/9 | 1 | 3 | 1/5 | 1/3 | 1/3 | 1/3 | 1/3 |
| C5 | 1/9 | 1/9 | 1/9 | 1/3 | 1 | 1/5 | 1/3 | 1/3 | 1/3 | 1/3 |
| C6 | 1/5 | 1/9 | 1/7 | 5 | 5 | 1 | 3 | 3 | 5 | 3 |
| C7 | 1/3 | 1/7 | 1/5 | 3 | 3 | 1/3 | 1 | 3 | 3 | 3 |
| C8 | 1/3 | 1/7 | 1/5 | 3 | 3 | 1/3 | 1/3 | 1 | 3 | 3 |
| C9 | 1/5 | 1/9 | 1/7 | 3 | 3 | 1/5 | 1/3 | 1/3 | 1 | 3 |
| C10 | 1/5 | 1/5 | 1/5 | 3 | 3 | 1/3 | 1/3 | 1/3 | 1/3 | 1 |

| Criterion | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Weight | 0.0747 | 0.3127 | 0.2295 | 0.0204 | 0.0164 | 0.0622 | 0.0764 | 0.0405 | 0.0509 | 0.0722 |

| Criterion | Unit | Objective Function |
|---|---|---|
| General and transversal competencies | % | Maximize (↑) |
| Specific competencies | % | Maximize (↑) |
| Learning outcomes | % | Maximize (↑) |
| Complexity in preparing the activity | Likert | Minimize (↓) |
| Grading of the activity | Likert | Minimize (↓) |
| Use of new technologies | Likert | Maximize (↑) |
| Encourages collaborative learning | Likert | Maximize (↑) |
| Integration with the platform | Likert | Maximize (↑) |
| Degree of difficulty to perform the activity | Likert | Minimize (↓) |
| Feedback from the teaching team | Likert | Maximize (↑) |

| Value | Description |
|---|---|
| 1 | Very little |
| 2 | Little |
| 3 | Moderately sufficient |
| 4 | Sufficient |
| 5 | A lot |

| Alternative | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 45 | 70 | 80 | 4 | 5 | 2 | 1 | 5 | 2 | 5 |
| A2 | 48 | 80 | 90 | 5 | 5 | 2 | 5 | 5 | 3 | 5 |
| A3 | 35 | 50 | 95 | 5 | 1 | 3 | 1 | 5 | 2 | 4 |
| A4 | 30 | 45 | 60 | 5 | 1 | 4 | 1 | 5 | 3 | 4 |
| A5 | 48 | 55 | 70 | 5 | 4 | 3 | 5 | 5 | 4 | 5 |
| A6 | 40 | 60 | 65 | 5 | 1 | 5 | 1 | 5 | 4 | 4 |
| A7 | 20 | 25 | 35 | 2 | 3 | 2 | 5 | 5 | 2 | 3 |
| A8 | 18 | 20 | 20 | 2 | 1 | 2 | 1 | 5 | 2 | 2 |
| A9 | 15 | 20 | 18 | 2 | 1 | 2 | 1 | 5 | 2 | 2 |
| A10 | 25 | 45 | 45 | 5 | 4 | 5 | 3 | 1 | 4 | 4 |

| Alternative | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 |
|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.427 | 0.457 | 0.411 | 0.328 | 0.562 | 0.231 | 0.112 | 0.353 | 0.246 | 0.429 |
| A2 | 0.456 | 0.522 | 0.462 | 0.410 | 0.562 | 0.231 | 0.559 | 0.353 | 0.369 | 0.429 |
| A3 | 0.332 | 0.326 | 0.488 | 0.410 | 0.113 | 0.346 | 0.112 | 0.353 | 0.246 | 0.343 |
| A4 | 0.285 | 0.294 | 0.308 | 0.410 | 0.113 | 0.462 | 0.112 | 0.353 | 0.369 | 0.343 |
| A5 | 0.456 | 0.359 | 0.359 | 0.410 | 0.450 | 0.346 | 0.559 | 0.353 | 0.492 | 0.429 |
| A6 | 0.380 | 0.392 | 0.334 | 0.410 | 0.113 | 0.577 | 0.112 | 0.353 | 0.492 | 0.343 |
| A7 | 0.190 | 0.164 | 0.180 | 0.164 | 0.337 | 0.231 | 0.559 | 0.353 | 0.246 | 0.257 |
| A8 | 0.171 | 0.131 | 0.103 | 0.164 | 0.113 | 0.231 | 0.112 | 0.353 | 0.246 | 0.171 |
| A9 | 0.142 | 0.131 | 0.093 | 0.164 | 0.113 | 0.231 | 0.112 | 0.353 | 0.246 | 0.171 |
| A10 | 0.237 | 0.294 | 0.231 | 0.410 | 0.450 | 0.577 | 0.335 | 0.070 | 0.492 | 0.343 |

| Alternative | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | d+ | d- | Relative closeness |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1 | 0.032 | 0.143 | 0.094 | 0.007 | 0.009 | 0.014 | 0.009 | 0.014 | 0.013 | 0.031 | 0.0493 | 0.1298 | 0.7248 |
| A2 | 0.034 | 0.163 | 0.106 | 0.008 | 0.009 | 0.014 | 0.043 | 0.014 | 0.019 | 0.031 | 0.0249 | 0.1563 | 0.8628 |
| A3 | 0.025 | 0.102 | 0.112 | 0.008 | 0.002 | 0.022 | 0.008 | 0.014 | 0.013 | 0.025 | 0.0726 | 0.1129 | 0.6086 |
| A4 | 0.021 | 0.092 | 0.071 | 0.008 | 0.002 | 0.029 | 0.008 | 0.014 | 0.019 | 0.025 | 0.0911 | 0.0759 | 0.4545 |
| A5 | 0.034 | 0.112 | 0.083 | 0.008 | 0.007 | 0.022 | 0.043 | 0.014 | 0.025 | 0.031 | 0.0624 | 0.1054 | 0.6282 |
| A6 | 0.028 | 0.123 | 0.077 | 0.008 | 0.002 | 0.036 | 0.008 | 0.014 | 0.025 | 0.025 | 0.0659 | 0.1042 | 0.6127 |
| A7 | 0.014 | 0.051 | 0.041 | 0.003 | 0.006 | 0.014 | 0.043 | 0.014 | 0.013 | 0.019 | 0.1365 | 0.0453 | 0.2491 |
| A8 | 0.013 | 0.041 | 0.024 | 0.003 | 0.002 | 0.014 | 0.008 | 0.014 | 0.013 | 0.013 | 0.1589 | 0.0194 | 0.1090 |
| A9 | 0.011 | 0.041 | 0.021 | 0.003 | 0.002 | 0.014 | 0.008 | 0.014 | 0.013 | 0.013 | 0.1605 | 0.0192 | 0.1067 |
| A10 | 0.018 | 0.092 | 0.053 | 0.008 | 0.007 | 0.036 | 0.026 | 0.003 | 0.025 | 0.023 | 0.0976 | 0.0677 | 0.4096 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gil-García, I.C.; Fernández-Guillamón, A. SMART: Selection Model for Assessment Resources and Techniques. Educ. Sci. 2024, 14, 23. https://doi.org/10.3390/educsci14010023