A Framework for Analysis and Development of Augmented Reality Applications in Science and Engineering Teaching

Abstract

1. Introduction

2. Materials and Methods

3. Results

3.1. Selection of Relevant Parameters

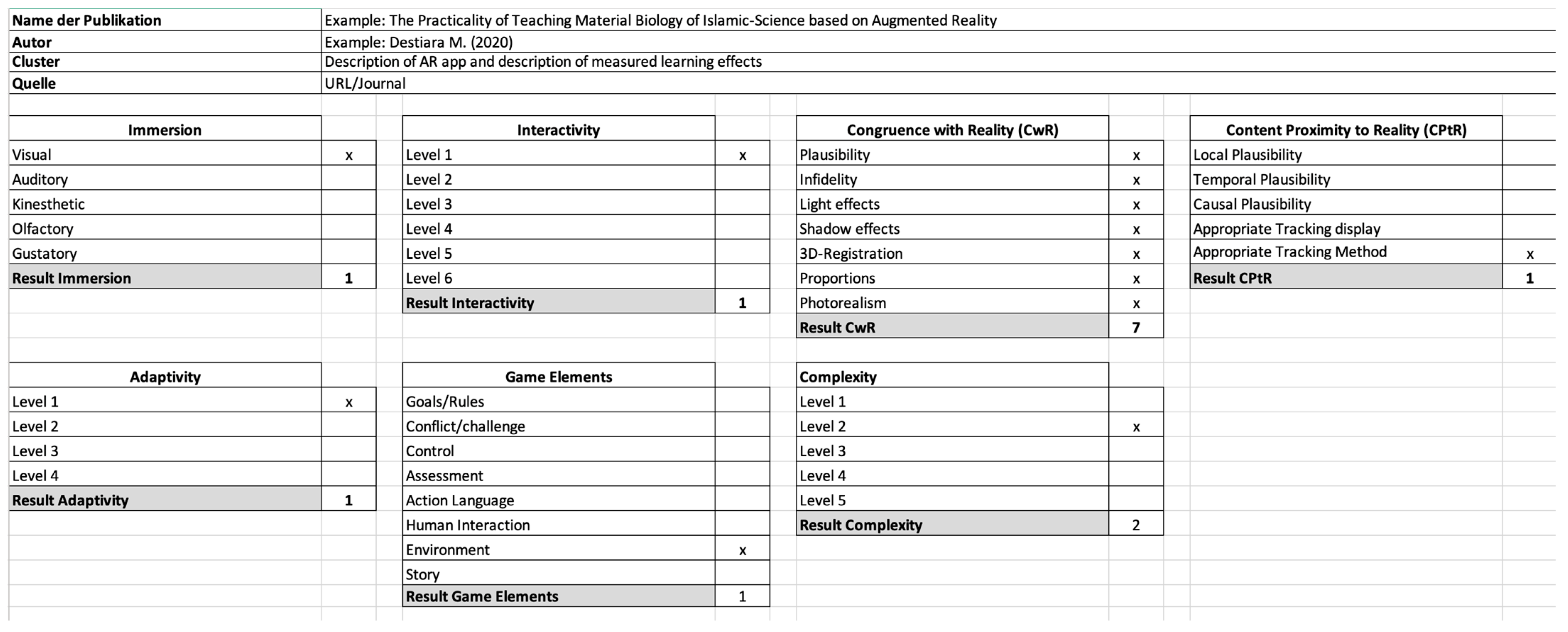

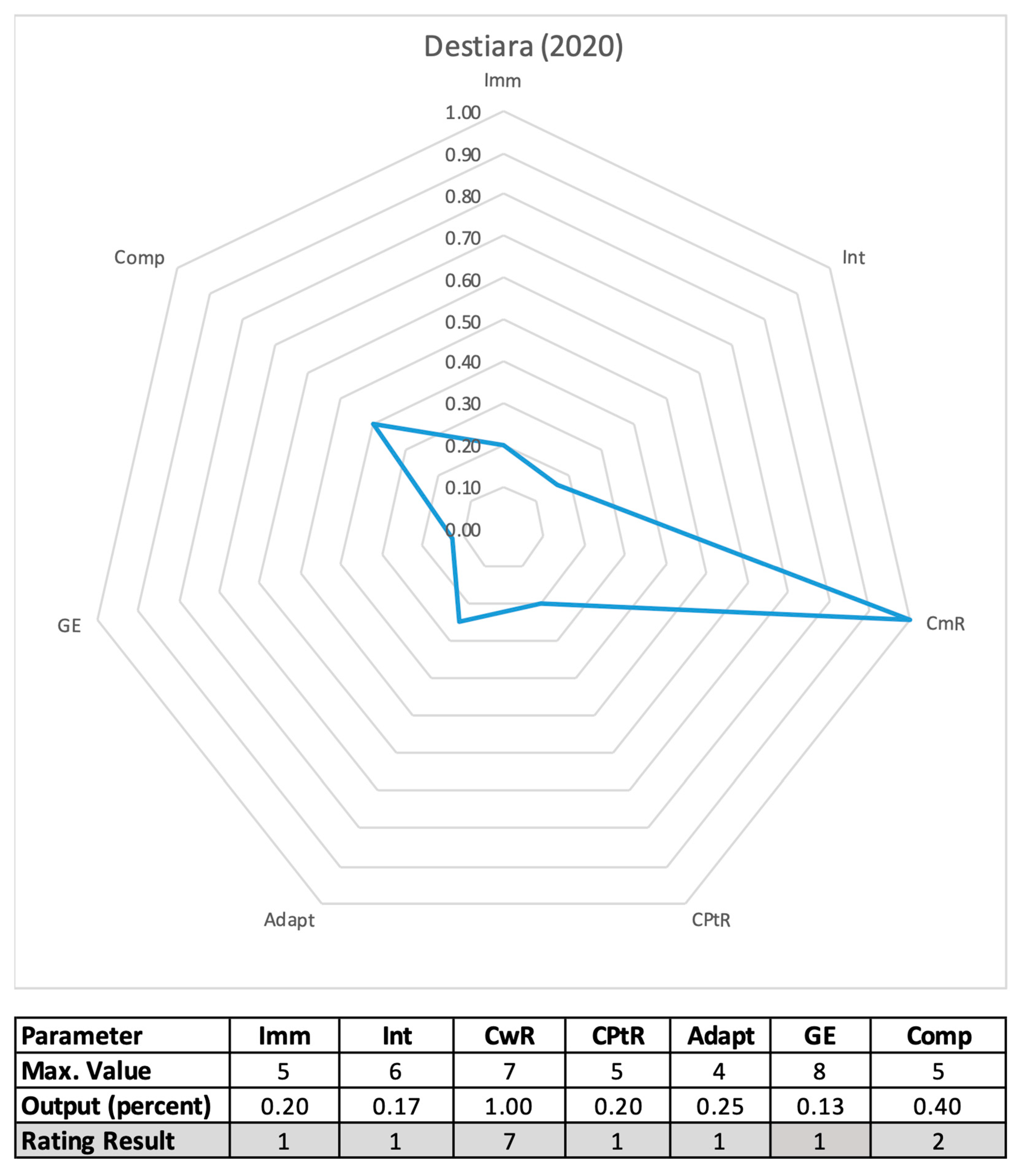

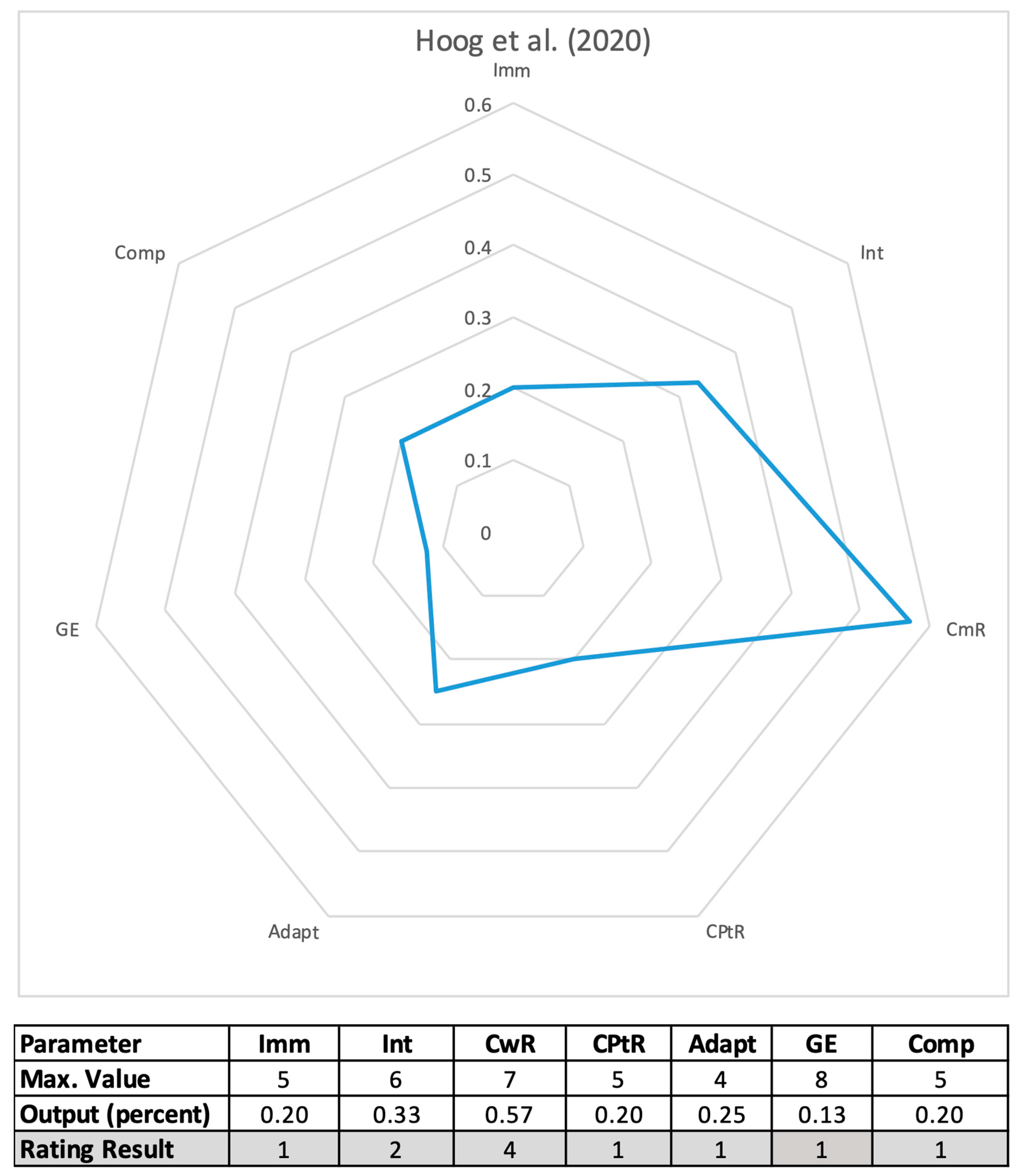

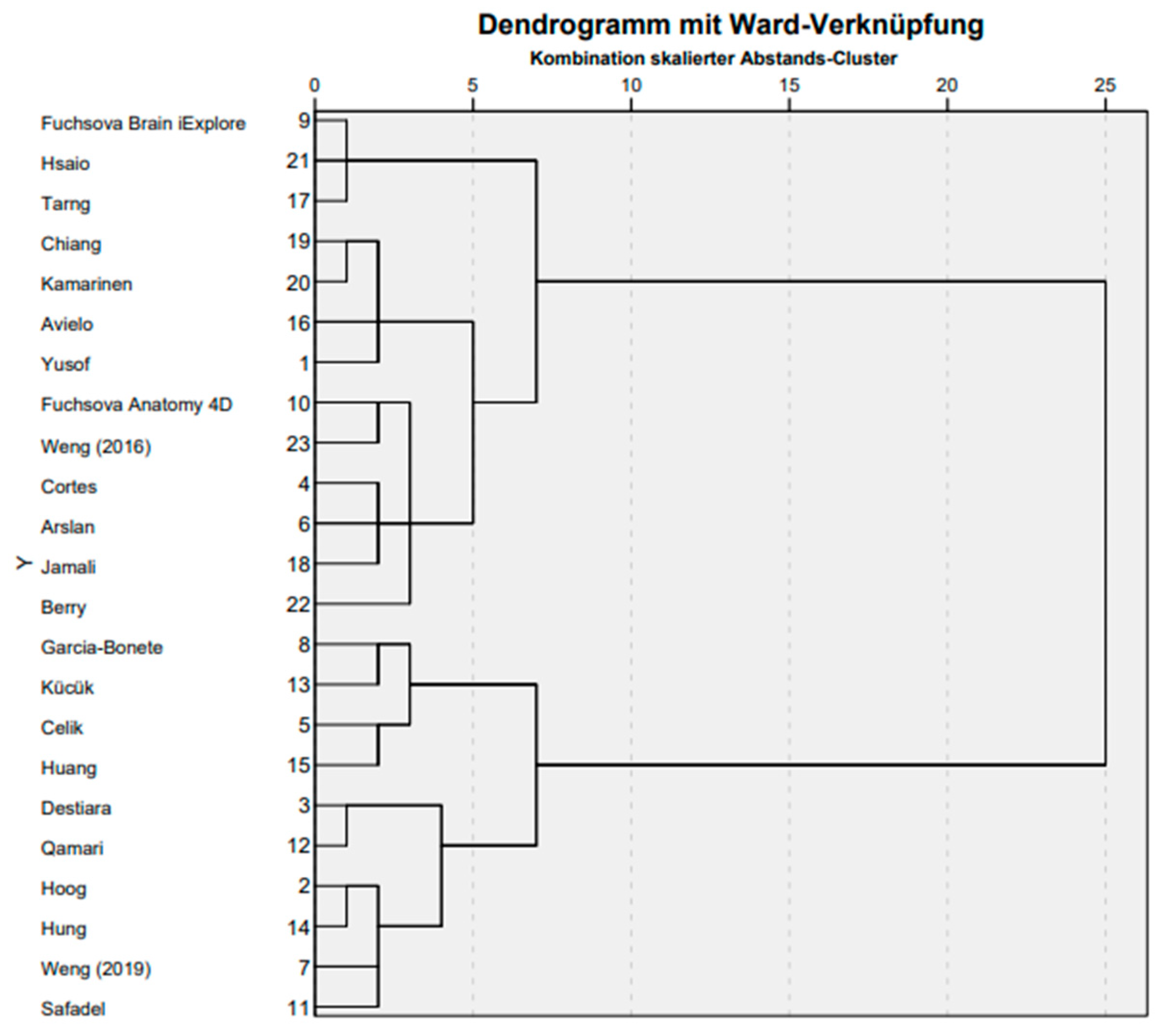

3.2. Evaluation and Comparison of Rated AR Applications

3.2.1. Measured Learning Effects

3.2.2. Graphical Overlay

3.2.3. Statistical Clustering and Relation between Setup and Learning Effect

4. Discussion

- Target audience (age, level of knowledge, prior experience);

- Learning objective (skills, knowledge, content/topic);

- AR technology (equipment, restrictions/possibilities);

- AR elements (use of advantages/benefits of this technology);

- Type of user action (learning strategy: interactive, game-based, collaborative, experiential).
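One way to make the five design considerations above operational is to record them as a structured checklist. The sketch below is a hypothetical illustration in Python, not part of the framework itself; the class names `ARDesignBrief` and `UserAction`, the field names, and the `missing` helper are assumptions introduced here.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import List, Optional


class UserAction(Enum):
    """Learning strategies named in the list above."""
    INTERACTIVE = "interactive"
    GAME_BASED = "game-based"
    COLLABORATIVE = "collaborative"
    EXPERIENTIAL = "experiential"


@dataclass
class ARDesignBrief:
    """Hypothetical record of the five design considerations."""
    target_audience: str = ""      # age, level of knowledge, prior experience
    learning_objective: str = ""   # skills, knowledge, content/topic
    ar_technology: str = ""        # equipment, restrictions/possibilities
    ar_elements: str = ""          # which AR benefits the design uses
    user_action: Optional[UserAction] = None

    def missing(self) -> List[str]:
        """Return the names of considerations that are still unspecified."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


brief = ARDesignBrief(
    target_audience="grade 9, no prior AR experience",
    user_action=UserAction.COLLABORATIVE,
)
print(brief.missing())
```

A brief with an empty `missing()` list has addressed every consideration at least nominally; judging whether each entry is adequate remains a didactic decision.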

Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. European Commission. Digital Education Action Plan 2021–2027 Resetting Education and Training for the Digital Age. 2020. Available online: https://education.ec.europa.eu/focus-topics/digital-education/action-plan (accessed on 9 March 2023).

2. KMK. Lehren und Lernen in der Digitalen Welt. Die Ergänzende Empfehlung zur Strategie Bildung in der Digitalen Welt. 2021. Available online: https://www.kmk.org/fileadmin/Dateien/veroeffentlichungen_beschluesse/2021/2021_12_09-Lehren-und-Lernen-Digi.pdf (accessed on 9 March 2023).

3. OECD. The Future of Education and Skills Education 2030. 2018. Available online: https://www.oecd.org/education/2030/E2030%20Position%20Paper%20(05.04.2018).pdf (accessed on 9 July 2023).

4. Van Laar, E.; Van Deursen, A.J.A.M.; Van Dijk, J.A.G.M.; De Haan, J. The relation between 21st-century skills and digital skills: A systematic literature review. Comput. Hum. Behav. 2017, 72, 577–588.

5. González-Pérez, L.I.; Ramírez-Montoya, M.S. Components of Education 4.0 in 21st Century Skills Frameworks: Systematic Review. Sustainability 2022, 14, 1493.

6. von Kotzebue, L.; Meier, M.; Finger, A.; Kremser, E.; Huwer, J.; Thoms, L.-J.; Becker, S.; Bruckermann, T.; Thyssen, C. The Framework DiKoLAN (Digital Competencies for Teaching in Science Education) as Basis for the Self-Assessment Tool DiKoLAN-Grid. Educ. Sci. 2021, 11, 775.

7. Krug, M.; Czok, V.; Huwer, J.; Weitzel, H.; Müller, W. Challenges for the design of augmented reality applications for science teacher education. INTED Proc. 2021, 6, 2484–2491.

8. Milgram, P.; Takemura, H.; Utsumi, A.; Kishino, F. Augmented reality: A class of displays on the reality-virtuality continuum. In SPIE Proceedings, Telemanipulator and Telepresence Technologies; Das, H., Ed.; SPIE: Boston, MA, USA, 1995; pp. 282–292.

9. Azuma, R.T. A Survey of Augmented Reality. Presence Teleoper. Virtual Environ. 1997, 6, 355–385.

10. Azuma, R.; Baillot, Y.; Behringer, R.; Feiner, S.; Julier, S.; MacIntyre, B. Recent advances in augmented reality. IEEE Comput. Graph. Appl. 2001, 21, 34–47.

11. Sepúlveda, A. The Digital Transformation of Education: Connecting Schools, Empowering Learners. 2020. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000374309 (accessed on 5 July 2023).

12. Nielsen, B.L.; Brandt, H.; Swensen, H. Augmented Reality in science education–affordances for student learning. Nord. Stud. Sci. Educ. 2016, 12, 157–174.

13. Pence, H.E. Smartphones, Smart Objects, and Augmented Reality. Ref. Libr. 2010, 52, 136–145.

14. Cheng, K.H.; Tsai, C.C. Affordances of Augmented Reality in Science Learning: Suggestions for Future Research. J. Sci. Educ. Technol. 2013, 22, 449–462.

15. Celik, C.; Guven, G.; Cakir, N.K. Integration of mobile augmented reality (MAR) applications into biology laboratory: Anatomic structure of the heart. Res. Learn. Technol. 2020, 28, 2355.

16. Tschiersch, A.; Krug, M.; Huwer, J.; Banerji, A. Augmented Reality in chemistry education—An overview. ChemKon 2021, 28, 241–244.

17. Probst, C.; Fetzer, D.; Lukas, S.; Huwer, J. Effects of using augmented reality (AR) in visualizing a dynamic particle model. ChemKon 2021, 29, 164–170.

18. Huwer, J.; Banerji, A.; Thyssen, C. Digitalisierung—Perspektiven für den Chemieunterricht. Nachrichten Chem. 2020, 68, 10–16.

19. Wu, H.; Lee, S.W.; Chang, H.; Liang, J. Current status, opportunities and challenges of augmented reality in education. Comput. Educ. 2013, 62, 41–49.

20. Hanid, M.F.A.; Said, M.N.H.M.; Yahaya, N. Learning Strategies Using Augmented Reality Technology in Education: Meta-Analysis. Univers. J. Educ. Res. 2020, 8, 51–56.

21. Karayel, C.E.; Krug, M.; Hoffmann, L.; Kanbur, C.; Barth, C.; Huwer, J. ZuKon 2030: An Innovative Learning Environment Focused on Sustainable Development Goals. J. Chem. Educ. 2022, 100, 102–111.

22. Syskowski, S.; Huwer, J. A Combination of Real-World Experiments and Augmented Reality When Learning about the States of Wax—An Eye-Tracking Study. Educ. Sci. 2023, 13, 177.

23. Krug, M.; Huwer, J. Safety in the Laboratory—An Exit Game Lab Rally in Chemistry Education. Computers 2023, 12, 67.

24. Harzing, A.-W. Publish or Perish User’s Manual. 2016. Available online: https://harzing.com/resources/publish-or-perish/manual/using/query-results/accuracy (accessed on 9 March 2023).

25. Gusenbauer, M. Google Scholar to overshadow them all? Comparing the sizes of 12 academic search engines and bibliographic databases. Scientometrics 2019, 118, 177–214.

26. Krug, M.; Czok, V.; Müller, S.; Weitzel, H.; Huwer, J.; Kruse, S.; Müller, W. Ein Bewertungsraster für Augmented-Reality-Lehr-Lernszenarien im Unterricht. ChemKon 2022, 29, 312–318.

27. Destiara, M. The Practicality of Teaching Material Biology of Islamic-Science based on Augmented Reality. BIO-INOVED J. Biol. Pendidik. 2020, 2, 117–122.

28. Chi, M.T.H.; Wylie, R. The ICAP Framework: Linking Cognitive Engagement to Active Learning Outcomes. Educ. Psychol. 2014, 49, 219–243.

29. Puentedura, R. Transformation, Technology, and Education. 2006. Available online: http://hippasus.com/resources/tte/puentedura_tte.pdf (accessed on 9 March 2023).

30. Schulmeister, R. Interaktivität in Multimedia-Anwendungen. 2005. Available online: https://www.e-teaching.org/didaktik/gestaltung/interaktiv/InteraktivitaetSchulmeister.pdf (accessed on 9 March 2023).

31. Heeter, C. Being There: The subjective experience of presence. Presence Teleoper. Virtual Environ. 1992, 1, 262–271.

32. Witmer, B.G.; Singer, M.J. Measuring Presence in Virtual Environments: A Presence Questionnaire. Presence Teleoper. Virtual Environ. 1998, 7, 225–240.

33. Slater, M.; Wilbur, S. A framework for immersive virtual environments (FIVE): Speculations on the role of presence in virtual environments. Presence Teleoper. Virtual Environ. 1997, 6, 603–616.

34. Standen, P. Realism and imagination in educational multimedia simulations. In The Learning Superhighway: New World? New Worries? Proceedings of the Third International Interactive Multimedia Symposium, Perth, Western Australia, 21–25 January 1996; McBeath, C., Atkinson, R., Eds.; Promaco Conventions Pty Limited: Bateman, WA, Australia, 1996; pp. 384–390. Available online: https://ascilite.org/archived-journals/aset/confs/iims/1996/ry/standen.html (accessed on 1 July 2023).

35. McMahan, A. Immersion, Engagement, and Presence: A Method for Analyzing 3-D Video Games. In The Video Game Theory Reader; Wolf, M.J.P., Perron, B., Eds.; Routledge: Oxfordshire, UK, 2003; pp. 67–86.

36. Söldner, G. Semantische Adaption von Komponenten. Ph.D. Thesis, Friedrich Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany, 2012. Available online: https://d-nb.info/1029374171/34 (accessed on 9 March 2023).

37. Paramythis, A.; Loidl-Reisinger, S. Adaptive Learning Environments and e-Learning Standards. Electron. J. E-Learn. 2004, 2, 181–194.

38. Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From Game Design Elements to Gamefulness: Defining “Gamification”. In Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, Proceedings from MindTrek 11, New York, NY, USA, 28–30 September 2011.

39. Nah, F.F.H.; Zeng, Q.; Telaprolu, V.R.; Ayyappa, A.P.; Eschenbrenner, B. Gamification of education: A review of literature. In Proceedings of the International Conference on HCI in Business, Heraklion, Greece, 22–27 June 2014; Available online: https://link.springer.com/content/pdf/10.1007/978-3-319-07293-739.pdf (accessed on 1 July 2023).

40. Parong, J.; Mayer, R.E. Learning science in immersive virtual reality. J. Educ. Psychol. 2018, 110, 785–797.

41. Hung, H.; Yeh, H. Augmented-reality-enhanced game-based learning in flipped English classrooms: Effects on students’ creative thinking and vocabulary acquisition. J. Comput. Assist. Learn. 2023, 1–23, Early View.

42. Bedwell, W.L.; Pavlas, D.; Heyne, K.; Lazzara, E.H.; Salas, E. Toward a Taxonomy Linking Game Attributes to Learning. Simul. Gaming 2012, 43, 729–760.

43. Kauertz, A.; Fischer, H.E.; Mayer, J.; Sumfleth, E.; Walpuski, M. Standardbezogene Kompetenzmodellierung in den Naturwissenschaften der Sekundarstufe. Z. Didakt. Nat. 2010, 16, 135–153.

44. Yusof, C.S.; Amri, N.L.S.; Ismail, A.W. Bio-WtiP: Biology lesson in handheld augmented reality application using tangible interaction. IOP Conf. Ser. Mater. Sci. Eng. 2020, 979, 12002.

45. Hoog, T.G.; Aufdembrink, L.M.; Gaut, N.J.; Sung, R.; Adamala, K.P.; Engelhart, A.E. Rapid deployment of smartphone-based augmented reality tools for field and online education in structural biology. Biochem. Mol. Biol. Educ. 2020, 48, 448–451.

46. Rodríguez, F.C.; Frattini, G.; Krapp, L.F.; Martinez-Hung, H.; Moreno, D.M.; Roldán, M.; Salomón, J.; Stemkoski, L.; Traeger, S.; Dal Peraro, M.; et al. MoleculARweb: A Web Site for Chemistry and Structural Biology Education through Interactive Augmented Reality out of the Box in Commodity Devices. J. Chem. Educ. 2021, 98, 2243–2255.

47. Kofoglu, M.; Dargut, C.; Arslan, R. Development of Augmented Reality Application for Biology Education. Tused 2020, 17, 62–72.

48. Weng, C.; Otanga, S.; Christianto, S.M.; Chu, R.J.-C. Enhancing Students’ Biology Learning by Using Augmented Reality as a Learning Supplement. J. Educ. Comput. Res. 2020, 58, 747–770.

49. Garcia-Bonete, M.-J.; Jensen, M.; Katona, G. A practical guide to developing virtual and augmented reality exercises for teaching structural biology. Biochem. Mol. Biol. Educ. 2019, 47, 16–24.

50. Korenova, L.; Fuchsova, M. Visualisation in Basic Science and Engineering Education of Future Primary School Teachers in Human Biology Education Using Augmented Reality. Eur. J. Contemp. Educ. 2019, 8, 92–102.

51. Safadel, P.; White, D. Facilitating Molecular Biology Teaching by Using Augmented Reality (AR) and Protein Data Bank (PDB). TechTrends 2019, 63, 188–193.

52. Qamari, C.N.; Ridwan, M.R. Implementation of Android-based augmented reality as learning and teaching media of dicotyledonous plants learning materials in biology subject. In Proceedings of the 3rd International Conference on Science in Information Technology (ICSITech), Bandung, Indonesia, 25–26 October 2017; pp. 441–446.

53. Küçük, S.; Kapakin, S.; Göktaş, Y. Learning anatomy via mobile augmented reality: Effects on achievement and cognitive load. Anat. Sci. Educ. 2016, 9, 411–421.

54. Hung, Y.-H.; Chen, C.-H.; Huang, S.-W. Applying augmented reality to enhance learning: A study of different teaching materials. J. Comput. Assist. Learn. 2017, 33, 252–266.

55. Huang, T.-C.; Chen, C.-C.; Chou, Y.-W. Animating eco-education: To see, feel, and discover in an augmented reality-based experiential learning environment. Comput. Educ. 2016, 96, 72–82.

56. Aivelo, T.; Uitto, A. Digital gaming for evolutionary biology learning: The case study of parasite race, an augmented reality location-based game. LUMAT 2016, 4, 1–26.

57. Tarng, W.; Yu, C.-S.; Liou, F.-L.; Liou, H.-H. Development of a virtual butterfly ecological system based on augmented reality and mobile learning technologies. Virtual Real. 2013, 19, 674–679.

58. Jamali, S.S.; Shiratuddin, M.F.; Wong, K.W.; Oskam, C.L. Utilising Mobile-Augmented Reality for Learning Human Anatomy. Procedia—Soc. Behav. Sci. 2015, 197, 659–668.

59. Chiang, T.; Yang, S.; Hwang, G.-J. An Augmented Reality-based Mobile Learning System to Improve Students’ Learning Achievements and Motivations in Natural Science Inquiry Activities. Educ. Technol. Soc. 2014, 17, 352–365.

60. Kamarainen, A.M.; Metcalf, S.; Grotzer, T.; Browne, A.; Mazzuca, D.; Tutwiler, M.S.; Dede, C. EcoMOBILE: Integrating augmented reality and probeware with environmental education field trips. Comput. Educ. 2013, 68, 545–556.

61. Hsiao, K.-F.; Chen, N.-S.; Huang, S.-Y. Learning while exercising for science education in augmented reality among adolescents. Interact. Learn. Environ. 2012, 20, 331–349.

62. Berry, C.; Board, J. A Protein in the palm of your hand through augmented reality. Biochem. Mol. Biol. Educ. 2014, 42, 446–449.

63. Cai, S.; Wang, X.; Chiang, F.-K. A case study of Augmented Reality simulation system application in a chemistry course. Comput. Hum. Behav. 2014, 37, 31–40.

64. Mahmoud Mohd Said Al Qassem, L.; Al Hawai, H.; AlShehhi, S.; Zemerly, M.J.; Ng, J.W. AIR-EDUTECH: Augmented immersive reality (AIR) technology for high school Chemistry education. In Proceedings of the 2016 IEEE Global Engineering Education Conference (EDUCON), Abu Dhabi, United Arab Emirates, 10–13 April 2016; pp. 842–847.

65. Abu-Dalbouh, H.M.; AlSulaim, S.M.; AlDera, S.A.; Alqaan, S.E.; Alharbi, L.M.; AlKeraida, M.A. An Application of Physics Experiments of High School by using Augmented Reality. IJSEA 2020, 11, 37–49.

66. Nishihama, D.; Takeuchi, T.; Inoue, T.; Okada, K.I. AR chemistry: Building up augmented reality for learning chemical experiment. Int. J. Inform. Soc. 2010, 2, 43–48.

67. Wan, A.T.; San, L.Y.; Omar, M.S. Augmented Reality Technology for Year 10 Chemistry Class. Int. J. Comput.-Assist. Lang. Learn. Teach. 2018, 8, 45–64.

68. Cai, S.; Liu, C.; Wang, T.; Liu, E.; Liang, J.-C. Effects of learning physics using Augmented Reality on students’ self-efficacy and conceptions of learning. Br. J. Educ. Technol. 2021, 52, 235–251.

69. Maier, P.; Klinker, G.J. Evaluation of an Augmented-Reality-based 3D User Interface to Enhance the 3D-Understanding of Molecular Chemistry. In Proceedings of the 5th International Conference on Computer Supported Education, Aachen, Germany, 6–8 May 2013; pp. 294–302.

70. Chun Lam, M.; Suwadi, A. Smartphone-based Face-to-Face Collaborative Augmented Reality Architecture for Assembly Training. In Proceedings of the 2nd National Symposium on Human-Computer Interaction 2020, Kuala Lumpur, Malaysia, 8 October 2020.

71. Kumta, I.; Srisawasdi, N. Investigating Correlation between Students’ Attitude toward Chemistry and Perception toward Augmented Reality, and Gender Effect. In Proceedings of the 23rd International Conference on Computers in Education (ICCE2015), Hangzhou, China, 30 November–4 December 2015.

72. Nandyansah, W.; Suprapto, N.; Mubarok, H. Picsar (Physics Augmented Reality) as a Learning Media to Practice Abstract Thinking Skills in Atomic Model. J. Phys. Conf. Ser. 2020, 1491, 12049.

73. Chen, S.-Y.; Liu, S.-Y. Using augmented reality to experiment with elements in a chemistry course. Comput. Hum. Behav. 2020, 111, 106418.

74. Astriawati, N.; Wibowo, W.; Widyanto, H. Designing Android-Based Augmented Reality Application on Three Dimension Space Geometry. J. Phys. Conf. Ser. 2020, 1477, 22006.

75. Bazarov, S.E.; Kholodilin, I.Y.; Nesterov, A.S.; Sokhina, A.V. Applying Augmented Reality in practical classes for engineering students. IOP Conf. Ser. Earth Environ. Sci. 2017, 87, 32004.

76. Gonzalez-Franco, M.; Pizarro, R.; Cermeron, J.; Li, K.; Thorn, J.; Hutabarat, W.; Tiwari, A.; Bermell-Garcia, P. Immersive Mixed Reality for Manufacturing Training. Front. Robot. AI 2017, 4, 3.

77. Guo, W. Improving Engineering Education Using Augmented Reality Environment. In Learning and Collaboration Technologies. Design, Development and Technological Innovation; Zaphiris, P., Ioannou, A., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; pp. 233–242.

78. Liarokapis, F.; Anderson, E.F. Using Augmented Reality as a Medium to Assist Teaching in Higher Education; Eurographics: Saarbrücken, Germany, 2010.

79. Luwes, N.J.; van Heerden, L. Augmented reality to aid retention in an African university of technology engineering program. In Proceedings of the 6th International Conference on Higher Education Advances (HEAd’20), Virtually, 3–5 June 2020.

80. Martín-Gutiérrez, J.; Contero, M. Improving Academic Performance and Motivation in Engineering Education with Augmented Reality. In Proceedings of the HCI International 2011–Posters’ Extended Abstracts: International Conference, HCI International 2011, Proceedings, Part II 14, Orlando, FL, USA, 9–14 July 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 509–513.

81. Gutiérrez, J.; Fernández, M.D.M. Applying augmented reality in engineering education to improve academic performance & student motivation. Int. J. Eng. Educ. 2014, 30, 625–635.

82. Sahin, C.; Nguyen, D.; Begashaw, S.; Katz, B.; Chacko, J.; Henderson, L.; Stanford, J.; Dandekar, K.R. Wireless communications engineering education via Augmented Reality. In Proceedings of the 2016 IEEE Frontiers in Education Conference (FIE), Erie, PA, USA, 12–15 October 2016; pp. 1–7.

83. Sanchez, A.; Redondo, E.; Fonseca, D.; Navarro, I. Academic performance assessment using Augmented Reality in engineering degree course. In Proceedings of the 2014 IEEE Frontiers in Education Conference (FIE), Madrid, Spain, 22–25 October 2014; pp. 1–7.

84. Shirazi, A.; Behzadan, A. Assessing the pedagogical value of Augmented Reality-based learning in construction engineering. In Proceedings of the 13th International Conference on Construction Applications of Virtual Reality, London, UK, 30–31 October 2013; pp. 416–426.

85. Singh, G.; Mantri, A.; Sharma, O.; Dutta, R.; Kaur, R. Evaluating the impact of the augmented reality learning environment on electronics laboratory skills of engineering students. Comput. Appl. Eng. Educ. 2019, 27, 1361–1375.

86. Theodorou, P.; Kydonakis, P.; Botzori, M.; Skanavis, C. Augmented Reality Proves to Be a Breakthrough in Environmental Education. Prot. Restor. Environ. 2018, 7, 219–228.

| Key Aspect | Description |
|---|---|
| Interactive | From observing to user interaction with the material. |
| Creator | From consuming to creating content. |
| Collaborative | From individual to collaborative work. |
| Situated learning | The degree to which a design incorporates augmented reality and its setting. |
| Inquiry-based science | The degree of facilitating an inquiry-based perspective as opposed to learning facts and concepts. |
| Real-world augmentation | From virtual reality to real-world augmentation. |
| 3D visualization | From 2D, such as illustrations, to applying 3D designs. |
| Juxtaposing | From viewing the design from one perspective to flexibility in altering content and perspective. |
| Data-driven | From static content to actively collecting and presenting data. |

| Domain | Author (Year) of Published Augmented Reality App | Motivation | Cognition | Self-Regulation | Self-Efficacy |

|---|---|---|---|---|---|

| Biology | Yusof, C. et al. (2020) [44] | X | |||

| Hoog, T. G. et al. (2020) [45] | X | ||||

| Destiara, M. (2020) [27] | X | ||||

| Rodriguez, F. et al. (2021) [46] | X | X | |||

| Celik, C. et al. (2020) [15] | X | X | |||

| Kofoglu, M. et al. (2020) [47] | X | X | |||

| Weng et al. (2019) [48] | X | X | |||

| Garcia-Bonete, M.-J. et al. (2019) [49] | X | ||||

| Korenova, L & Fuchsova, M. (2019) “Brain iExplore” [50] | X | X | |||

| Korenova, L & Fuchsova, M. (2019) “Anatomy 4D” [50] | X | X | |||

| Safadel, P. & White, D. (2018) [51] | X | ||||

| Qamari, C. & Ridwan, M. (2017) [52] | X | X | |||

| Küçük, S. et al. (2016) [53] | X | X | |||

| Hung, Y.-H. et al. (2017) [54] | X | ||||

| Huang, T.-C. et al. (2016) [55] | X | X | |||

| Aivelo, T. & Uitto, A. (2016) [56] | X | ||||

| Tarng, W. et al. (2015) [57] | X | X | |||

| Jamali, S. S. et al. (2015) [58] | X | X | |||

| Chiang, T.-H.-C. et al. (2014) [59] | X | X | |||

| Kamarainen, A. M. et al. (2013) [60] | X | X | |||

| Hsiao, K.-F. et al. (2012) [61] | X | X | |||

| Berry, C. & Board, J. (2014) [62] | X | ||||

| Result | Biology (number of augmented reality apps measuring…) | 19 | 11 | 3 | 3 |

| Chemistry | Cai, S., Wang, X., & Chiang, F.-K. (2014) [63] | X | |||

| Al Qassem, L. M. M. S. et al. (2016) [64] | X | X | |||

| Abu Dalbouh, H. et al. (2020) [65] | X | X | |||

| Nishihama, D. et al. (2010) [66] | X | ||||

| Wan, A.T. et al. (2018) [67] | X | X | |||

| Cai, S. et al. (2020) [68] | X | X | |||

| Maier, P., & Klinker, G. J. (2013) [69] | X | ||||

| Meng, C. L. et al. (2020) [70] | X | ||||

| Kumta, I., & Srisawasdi, N. (2015) [71] | X | ||||

| Nandyansah, W. et al. (2020) [72] | X | ||||

| Chen, S.-Y., & Liu, S.-Y. (2020) [73] | X | X | |||

| Result | Chemistry (number of augmented reality apps measuring…) | 5 | 10 | 0 | 1 |

| Engineering | Astriawati, N. et al. (2020) [74] | X | |||

| Bazarov, S. E. et al. (2017) [75] | X | ||||

| Gonzalez-Franco, M. et al. (2017) [76] | X | ||||

| Guo, W. (2018) [77] | X | ||||

| Liarokapis, F. & Anderson, E. F. (2010) [78] | X | ||||

| Luwes, N. & Van Heerden L. (2020) [79] | X | ||||

| Martín-Gutiérrez, J. & Contero, M. (2011) [80] | X | X | |||

| Martín-Gutiérrez, J. & Meneses Fernández, M. (2014) [81] | X | ||||

| Sahin, C. et al. (2016) [82] | X | ||||

| Sanchez, A. et al. (2013) [83] | X | ||||

| Shirazi, A. & Behzadan, A. (2013) [84] | X | ||||

| Singh, G. et al. (2019) [85] | X | ||||

| Theodorou, P. et al. (2018) [86] | X | ||||

| Result | Engineering (number of augmented reality apps measuring…) | 6 | 8 | 0 | 0 |
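As a quick consistency check on the table above, the per-domain "Result" rows can be summed per measured effect. The following is a minimal Python sketch; the counts are copied from the Result rows, while the names `MEASURES`, `RESULT_ROWS`, `totals_by_measure`, and `shares_by_domain` are illustrative and not part of the original study.

```python
# Column order of the four measured learning effects in the table.
MEASURES = ("Motivation", "Cognition", "Self-Regulation", "Self-Efficacy")

# Counts copied from the "Result" rows (number of AR apps measuring each effect).
RESULT_ROWS = {
    "Biology": (19, 11, 3, 3),
    "Chemistry": (5, 10, 0, 1),
    "Engineering": (6, 8, 0, 0),
}


def totals_by_measure(rows):
    """Sum each measured effect across all domains."""
    return {
        measure: sum(counts[i] for counts in rows.values())
        for i, measure in enumerate(MEASURES)
    }


def shares_by_domain(rows):
    """Within each domain, the fraction of all marks that fall on each effect.

    An app may be marked for more than one effect, so these are shares of
    marks, not shares of apps.
    """
    shares = {}
    for domain, counts in rows.items():
        total = sum(counts)
        shares[domain] = {m: c / total for m, c in zip(MEASURES, counts)}
    return shares


totals = totals_by_measure(RESULT_ROWS)
shares = shares_by_domain(RESULT_ROWS)
print(totals)
print(round(shares["Chemistry"]["Cognition"], 3))
```

Summed this way, motivation and cognition account for nearly all recorded effects, while self-regulation and self-efficacy are rarely measured in any of the three domains.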

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Czok, V.; Krug, M.; Müller, S.; Huwer, J.; Kruse, S.; Müller, W.; Weitzel, H. A Framework for Analysis and Development of Augmented Reality Applications in Science and Engineering Teaching. Educ. Sci. 2023, 13, 926. https://doi.org/10.3390/educsci13090926
