Educational Computational Chemistry for In-Service Chemistry Teachers: A Data Mining Approach to E-Learning Environment Redesign

Abstract

1. Introduction

1.1. Educational Computational Chemistry and E-Learning Environments

E-Learning Educational Computational Chemistry Course

- Module I: CC for Science Education is based on using research-grade computational chemistry software (RGCCS) to edit, create, and test 3D chemical structures linked to drug design.

- Module II: Using databases of chemical compounds, visualisers, and virtual screening to solve problem scenarios.

- Module III: Development of learning experiences using the Problem-Based Learning (PBL) methodology, problem scenarios, and pedagogical aspects.

| Module | Specific Topics/Contents | Activity Type | Evaluation |
|---|---|---|---|
| I. Introduction to computational chemistry for science education | | | Identification of potential drugs for the treatment of COVID-19 |
| II. Virtual screening, visualisers, and molecular editors in pedagogical contexts | | | |
| III. Fundamentals of PBL | | | Planning of learning activities framed in a PBL environment |

- (a) Description of the stages of the PBL methodology for future implementation.

- (b) Approach to a problem scenario, which should address a socio-scientific theme that could be solved through CC, and state its main background and research question.

- (c) Identification of the educational sector in which it will be applied, indicating subjects and learning objectives according to the local curricula, the number of students, technical requirements, application time, etc.

- (d) All the information an instructor needs to implement the activity, together with the evaluations they will use during the process.

- (e) All the reference material needed to complement the activity: videos, websites, computer programs, articles, book chapters, and other documents.

1.2. Educational Data Mining in E-Learning Environments

- Classification is a supervised procedure that assigns individual elements to predefined categories based on quantitative information about one or more of their characteristics, using a training set of previously labelled examples. These categories make it possible to predict, for example, student performance or retention/dropout in a particular course. Commonly used classification algorithms include K-Nearest Neighbours (KNN), Decision Tree (DT), Naïve Bayes, Support Vector Machine (SVM), and Random Forest (RF).

- Clustering is an unsupervised technique that groups students according to their learning and interaction patterns, without requiring predefined labels. It has recurrent applications in resource recommendation, understanding learning processes, and preventing academic failure, especially in university settings. Commonly used clustering algorithms include hierarchical clustering and K-means.

- Regression is a technique that predicts continuous numerical values from a given dataset. Regression analysis has been used in various applications, including predicting students’ academic performance and how accurately they will answer particular questions. Regression has also been applied to model user learning behaviour, making it a valuable tool for understanding the cognitive processes associated with knowledge acquisition.
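The three families of techniques above can be illustrated side by side. The following is a minimal sketch in standard-library Python, not the authors' pipeline (the study itself reports using R and Rattle): the toy data pairing event-log counts with final grades are invented for illustration, K-means is reduced to one dimension for brevity, and all function names are hypothetical.

```python
from statistics import mean

# Hypothetical toy data: (event-log count, final grade) per student.
# These values are invented for illustration only.
students = [(4, 4.2), (6, 4.8), (8, 5.1), (12, 6.0), (15, 6.4), (18, 6.8)]
labels = ["Low", "Low", "Low", "High", "High", "High"]
logs = [s for s, _ in students]
grades = [g for _, g in students]


def knn_predict(train_x, train_y, query, k=3):
    """Classification: majority label among the k nearest training points."""
    by_distance = sorted(zip(train_x, train_y), key=lambda p: abs(p[0] - query))
    nearest = [label for _, label in by_distance[:k]]
    return max(set(nearest), key=nearest.count)


def kmeans_1d(values, centroids, iterations=10):
    """Clustering: one-dimensional K-means (Lloyd's algorithm) from given initial centroids."""
    groups = []
    for _ in range(iterations):
        groups = [[] for _ in centroids]
        for v in values:
            # Assign each value to its closest centroid.
            idx = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            groups[idx].append(v)
        # Move each centroid to the mean of its group (keep it if the group is empty).
        centroids = [mean(g) if g else c for g, c in zip(groups, centroids)]
    return centroids, groups


def fit_line(xs, ys):
    """Regression: ordinary least-squares fit of y = a + b * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    return y_bar - b * x_bar, b


print(knn_predict(logs, labels, query=10))     # → Low
print(kmeans_1d(logs, centroids=[4, 18])[0])  # → [6, 15]
print(fit_line(logs, grades))                  # intercept and slope of the fitted line
```

Classification needs the labels, clustering ignores them, and regression predicts the numeric grade directly; that difference in what each method consumes is the main point of the comparison.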

2. Methods

2.1. Sample Characterisation

- Demographic information

- Gender, age, and education

- Technological knowledge, use, and access

2.2. In-Service Chemistry Teachers’ Perception (RQ1)

- The survey consists of 48 questions rated on a five-point Likert scale, from “Strongly Agree” to “Strongly Disagree”, with “Neither Agree nor Disagree” as the neutral midpoint, aiming to obtain balanced responses. Using the Likert scale allowed attitudes, opinions, and perceptions to be measured quantitatively [16].
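Turning raw Likert answers into the percentage distributions reported per knowledge type is a simple counting step. The sketch below assumes responses are stored as the five category labels; the sample responses and the function name are hypothetical.

```python
from collections import Counter

# The five points of the scale, in the order used for reporting.
SCALE = [
    "Strongly Agree",
    "Agree",
    "Neither Agree nor Disagree",
    "Disagree",
    "Strongly Disagree",
]


def likert_distribution(responses):
    """Return the percentage of responses in each category, rounded to 0.1%."""
    counts = Counter(responses)
    unknown = set(counts) - set(SCALE)
    if unknown:
        raise ValueError(f"Unexpected responses: {sorted(unknown)}")
    total = len(responses)
    return {level: round(100 * counts[level] / total, 1) for level in SCALE}


# Hypothetical answers from ten participants to items of one knowledge type.
sample = ["Strongly Agree"] * 3 + ["Agree"] * 5 + ["Neither Agree nor Disagree", "Disagree"]
print(likert_distribution(sample))
```

Because each share is rounded independently, a row can sum to 99.9% or 100.1% rather than exactly 100%.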


- The focus group was conducted to gather information about participants’ perceptions of the e-learning module on educational computational chemistry and its impact on learning and future teaching endeavours. The focus group allows for an in-depth and detailed exploration of the topic, as discussions and idea exchanges among participants can provide new perspectives, perceptions, and nuances. The focus group included open-ended and closed-ended questions related to the TPASK framework. For example, regarding knowledge in the sciences, a question used was, “Did you acquire or develop scientific skills during the completion of the module? If so, what were those skills?” [9].

2.3. Compilation of Records and Database

2.4. Educational Data Mining (RQ2)

3. Results and Discussion

3.1. Integration of CC in the Planning of Learning Activities (RQ1)

3.1.1. Examination of Teaching Plans

3.1.2. TPASK Survey

3.1.3. Focus Group

- TK and TPK

“(…) as difficulties, I think it is time to design a module (…) the fact that one has to do all these activities from scratch and plan them, it could be a bit exhausting (…) there is a lot of work behind the implementation of a PBL environment”. (Id_4)

- PSK

- TSK

“(…) all the software was open access (…) anyone could access the specific scientific information, totally free, they did not require registration or anything (…) it was downloaded from the official page and used immediately (…) even, the issue of databases could be done directly by accessing the internet (…) it can even be done with a mobile phone”. (Id_4)

- TPASK

3.1.4. Final Section Considerations

3.2. Model Generation Using Educational Data Mining (RQ2)

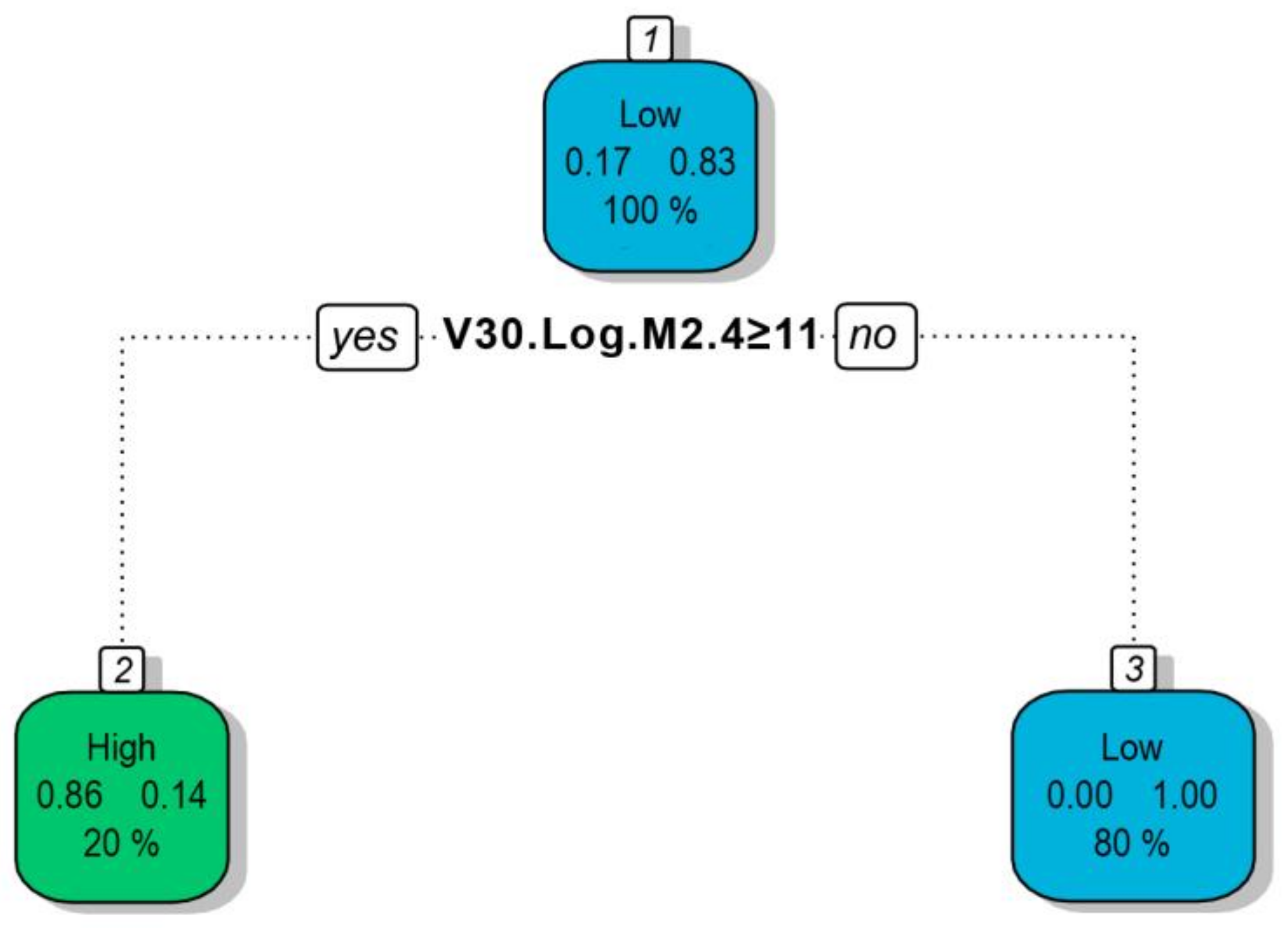

3.2.1. Classification and Regression Trees

- Rule number 3: IF V30.Log.M2.4 < 10.5 THEN V34.perf = Low (cover = 28 cases, 80%; prob = 1.00).

- Rule number 2: IF V30.Log.M2.4 ≥ 10.5 THEN V34.perf = High (cover = 7 cases, 20%; prob = 0.14).
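Both rules come from a single split on V30 (event log for M2.4). A CART-style tree chooses such a split by testing candidate thresholds and keeping the one with the lowest weighted Gini impurity. The following minimal sketch shows that search. It assumes candidate thresholds are midpoints between consecutive distinct values, which would explain why an integer count yields a cut point such as 10.5. The toy values are hypothetical, and `rule_predict` simply encodes the two reported rules.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_threshold(values, labels):
    """Return (threshold, weighted Gini) of the best binary split on one numeric variable.

    Candidate thresholds are midpoints between consecutive distinct sorted values.
    """
    distinct = sorted(set(values))
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x < t]
        right = [y for x, y in zip(values, labels) if x >= t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if best is None or score < best[1]:
            best = (t, score)
    return best


def rule_predict(v30):
    """Apply the two reported rules: V30 < 10.5 -> Low, otherwise High."""
    return "Low" if v30 < 10.5 else "High"


# Hypothetical event-log counts and performance labels (not the study's data).
v30_values = [2, 5, 7, 9, 10, 11, 13, 16]
performance = ["Low"] * 5 + ["High"] * 3
print(best_threshold(v30_values, performance))  # → (10.5, 0.0)
```

On this toy data the split between 10 and 11 separates the classes perfectly, so its weighted impurity is zero. In the real data, rule 2's node is not pure, which its probability of 0.14 reflects.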

3.2.2. Random Forest and Support Vector Machine

3.2.3. Model Evaluation and Guidelines for EECCC Redesign

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cannon, A.S.; Anderson, K.R.; Enright, M.C.; Kleinsasser, D.G.; Klotz, A.R.; O’Neil, N.J.; Tucker, L.J. Green Chemistry Teacher Professional Development in New York State High Schools: A Model for Advancing Green Chemistry. J. Chem. Educ. 2023, 100, 2224–2232.

- Hernández-Ramos, J.; Pernaa, J.; Cáceres-Jensen, L.; Rodríguez-Becerra, J. The Effects of Using Socio-Scientific Issues and Technology in Problem-Based Learning: A Systematic Review. Educ. Sci. 2021, 11, 640.

- Parrill, A.L.; Lipkowitz, K.B. Reviews in Computational Chemistry; Wiley Online Library: Hoboken, NJ, USA, 2022; Volume 32.

- Jimoyiannis, A. Designing and implementing an integrated technological pedagogical science knowledge framework for science teachers professional development. Comput. Educ. 2010, 55, 1259–1269.

- Tuvi-Arad, I.; Blonder, R. Technology in the Service of Pedagogy: Teaching with Chemistry Databases. Isr. J. Chem. 2019, 59, 572–582.

- Lehtola, S.; Karttunen, A.J. Free and open source software for computational chemistry education. Wiley Interdiscip. Rev. Comput. Mol. Sci. 2022, 12, 33.

- Rodríguez-Becerra, J.; Cáceres-Jensen, L.; Díaz, T.; Druker, S.; Bahamonde, V.; Pernaa, J.; Aksela, M. Developing technological pedagogical science knowledge through educational computational chemistry: A case study of pre-service chemistry teachers’ perceptions. Chem. Educ. Res. Pract. 2020, 21, 638–654.

- Adedoyin, O.B.; Soykan, E. COVID-19 pandemic and online learning: The challenges and opportunities. Interact. Learn. Environ. 2020, 31, 863–875.

- Hernández-Ramos, J.; Rodríguez-Becerra, J.; Cáceres-Jensen, L.; Aksela, M. Constructing a Novel E-Learning Course, Educational Computational Chemistry through Instructional Design Approach in the TPASK Framework. Educ. Sci. 2023, 13, 648.

- Hachicha, W.; Ghorbel, L.; Champagnat, R.; Zayani, C.A.; Amous, I. Using Process Mining for Learning Resource Recommendation: A Moodle Case Study. Procedia Comput. Sci. 2021, 192, 853–862.

- Davies, R.; Allen, G.; Albrecht, C.; Bakir, N.; Ball, N. Using Educational Data Mining to Identify and Analyse Student Learning Strategies in an Online Flipped Classroom. Educ. Sci. 2021, 11, 668.

- Gaftandzhieva, S.; Talukder, A.; Gohain, N.; Hussain, S.; Theodorou, P.; Salal, Y.K.; Doneva, R. Exploring Online Activities to Predict the Final Grade of Student. Mathematics 2022, 10, 3758.

- Williams, G. Data Mining with Rattle and R: The Art of Excavating Data for Knowledge Discovery; Springer Science & Business Media: Berlin, Germany, 2011.

- Al-Kindi, I.; Al-Khanjari, Z. A Comparative Study of Classification Algorithms of Moodle Course Logfile using Weka Tool. Int. J. Comput. Their Appl. 2022, 29, 202–211.

- Esfijani, A.; Zamani, B.E. Factors influencing teachers’ utilisation of ICT: The role of in-service training courses and access. Res. Learn. Technol. 2020, 28, 2313.

- Jebb, A.T.; Ng, V.; Tay, L. A Review of Key Likert Scale Development Advances: 1995–2019. Front. Psychol. 2021, 12, 637547.

- Habibi, A.; Yusop, F.D.; Razak, R.A. The dataset for validation of factors affecting pre-service teachers’ use of ICT during teaching practices: Indonesian context. Data Brief 2020, 28, 104875.

- Lin, T.; Tsai, C.; Chai, C.; Lee, M. Identifying science teachers’ perceptions of technological pedagogical and content knowledge (TPACK). J. Sci. Educ. Technol. 2013, 22, 325–336.

- Schmidt, D.A.; Baran, E.; Thompson, A.D.; Mishra, P.; Koehler, M.J.; Shin, T.S. Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for pre-service teachers. J. Res. Technol. Educ. 2009, 42, 123–149.

- Forman, J.; Damschroder, L. Qualitative content analysis. In Empirical Methods for Bioethics: A Primer; Emerald Group Publishing Limited: Bingley, UK, 2007; Volume 11, pp. 39–62.

- McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Medica 2012, 22, 276–282.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Williams, G. Rattle: A data mining GUI for R. R J. 2009, 1, 45–55.

- Lawrence, M.; Lang, D.T. RGtk2: A graphical user interface toolkit for R. J. Stat. Softw. 2010, 37, 1–52.

- Krepf, M.; Plöger, W.; Scholl, D.; Seifert, A. Pedagogical content knowledge of experts and novices—What knowledge do they activate when analysing science lessons? J. Res. Sci. Teach. 2018, 55, 44–67.

- Duc, T.; Hop, N.; Dung, T.; Ha, V. The Effectiveness of Chemistry e-Teaching and e-Learning during the COVID-19 Pandemic in Northern Viet Nam. Int. J. Inf. Educ. Technol. 2022, 12, 240–247.

- Cáceres-Jensen, L.; Rodríguez-Becerra, J.; Jorquera-Moreno, B.; Escudey, M.; Druker-Ibañez, S.; Hernández-Ramos, J.; Díaz-Arce, T.; Pernaa, J.; Aksela, M. Learning Reaction Kinetics through Sustainable Chemistry of Herbicides: A Case Study of Preservice Chemistry Teachers’ Perceptions of Problem-Based Technology Enhanced Learning. J. Chem. Educ. 2021, 98, 1571–1582.

- Bulut, O.; Yavuz, H.C. Educational data mining: A tutorial for the rattle package in R. Int. J. Assess. Tools Educ. 2019, 6, 20–36.

- Shea, P.; Bidjerano, T. Learning presence: Towards a theory of self-efficacy, self-regulation, and the development of a communities of inquiry in online and blended learning environments. Comput. Educ. 2010, 55, 1721–1731.

- Garrison, D.R.; Vaughan, N.D. Blended Learning in Higher Education: Framework, Principles, and Guidelines; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Kizilcec, R.F.; Piech, C.; Schneider, E. Deconstructing disengagement: Analysing learner subpopulations in massive open online courses. In Proceedings of the Third International Conference on Learning Analytics and Knowledge, Arlington, TX, USA, 13–17 March 2013; pp. 170–179.

- Rao, P.K. CCR5 inhibitors: Emerging promising HIV therapeutic strategy. Indian J. Sex. Transm. Dis. AIDS 2009, 30, 1–9.

- Tan, Q.; Zhu, Y.; Li, J.; Chen, Z.; Han, G.W.; Kufareva, I.; Li, T.; Ma, L.; Fenalti, G.; Li, J.; et al. Structure of the CCR5 chemokine receptor-HIV entry inhibitor maraviroc complex. Science 2013, 341, 1387–1390.

- Motivational Video. Available online: https://www.youtube.com/watch?v=v2PcQ-449p4 (accessed on 1 January 2023).

| Variable | Data Type | Description |
|---|---|---|
| V1 | Numeric | Final grade of the course |
| V2 | Numeric | Participation in course activities |
| V3 | Categorical | Gender: Female = 1, Male = 0 |
| V4 | Numeric | Age |
| V5 | Categorical | No further study: Yes = 1, No = 0 |
| V6 | Categorical | Diploma studies: Yes = 1, No = 0 |
| V7 | Categorical | Postgraduate studies: Yes = 1, No = 0 |
| V8 | Categorical | Master’s studies: Yes = 1, No = 0 |
| V9 | Categorical | Years in service (0 to 2): Yes = 1, No = 0 |
| V10 | Categorical | Years in service (3 to 5): Yes = 1, No = 0 |
| V11 | Categorical | Years in service (6 to 10): Yes = 1, No = 0 |
| V12 | Categorical | Years in service (>10): Yes = 1, No = 0 |
| V13 | Categorical | Access to a computer at home: Yes = 1, No = 0 |
| V14 | Categorical | Access to the internet at home: Yes = 1, No = 0 |
| V15 | Categorical | Access to computers at work: Yes = 1, No = 0 |
| V16 | Categorical | Access to the internet at work: Yes = 1, No = 0 |
| V17 | Categorical | Hardware knowledge: Yes = 1, No = 0 |
| V18 | Categorical | Technology courses (pre-service): Yes = 1, No = 0 |
| V19 | Categorical | Technology courses (in-service): Yes = 1, No = 0 |
| V20 | Numeric | Total courses taken |
| V21 | Numeric | Computer use |
| V22 | Numeric | Event log (total) |
| V23 | Numeric | Event log (M1) * |
| V24 | Numeric | Event log (M1.1) * |
| V25 | Numeric | Event log (M1.2) * |
| V26 | Numeric | Event log (M2) * |
| V27 | Numeric | Event log (M2.1) * |
| V28 | Numeric | Event log (M2.2) * |
| V29 | Numeric | Event log (M2.3) * |
| V30 | Numeric | Event log (M2.4) * |
| V31 | Numeric | Event log (M3) * |
| V32 | Numeric | Event log (M3.1) * |
| V33 | Numeric | Event log (M4) * |
| V34 | Categorical | Performance: High if score ≥ 5.50; Low otherwise |
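Several variables in the table are derived encodings rather than raw records. The following minimal sketch shows two of them: the V34 performance label and the V9 to V12 indicators for years in service. It assumes that years of service are whole numbers and that `score` is whichever course score the 5.50 cut-off applies to. The table does not name that score, so the parameter name and both function names are hypothetical.

```python
# Years-in-service bins as listed for V9-V11 (inclusive bounds, whole years assumed).
YEARS_BINS = [("V9", 0, 2), ("V10", 3, 5), ("V11", 6, 10)]


def performance_label(score):
    """V34: 'High' if the score is at least 5.50, otherwise 'Low'."""
    return "High" if score >= 5.50 else "Low"


def years_in_service_dummies(years):
    """Encode whole years of service as the 0/1 indicators V9-V12 (exactly one is 1)."""
    row = {name: int(lo <= years <= hi) for name, lo, hi in YEARS_BINS}
    row["V12"] = int(years > 10)
    return row


print(performance_label(5.50))       # → High
print(years_in_service_dummies(4))   # → {'V9': 0, 'V10': 1, 'V11': 0, 'V12': 0}
```

With one indicator per bin, exactly one of V9 to V12 equals 1 for any whole number of years, so tree- and forest-based models can split on each band separately.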

| Knowledge Type | Strongly Agree | Agree | Neither Agree nor Disagree | Disagree | Strongly Disagree |
|---|---|---|---|---|---|
| PK | 27.8% | 61.1% | 9.7% | 1.4% | 0.0% |
| TK | 42.2% | 42.2% | 11.1% | 4.4% | 0.0% |
| SK | 31.1% | 55.6% | 13.3% | 0.0% | 0.0% |
| PSK | 26.4% | 59.7% | 12.5% | 1.4% | 0.0% |
| TPK | 25.0% | 68.1% | 6.9% | 0.0% | 0.0% |
| TSK | 20.6% | 28.6% | 25.4% | 25.4% | 0.0% |
| TPASK | 30.2% | 58.7% | 11.1% | 0.0% | 0.0% |
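A quick way to read the table is to combine “Strongly Agree” and “Agree” into a single agreement figure per knowledge type. The sketch below does that arithmetic on the published percentages and also checks that each row sums to about 100%, allowing for rounding. The helper name is hypothetical.

```python
# Percentages from the table: (Strongly Agree, Agree, Neutral, Disagree, Strongly Disagree).
RESULTS = {
    "PK": (27.8, 61.1, 9.7, 1.4, 0.0),
    "TK": (42.2, 42.2, 11.1, 4.4, 0.0),
    "SK": (31.1, 55.6, 13.3, 0.0, 0.0),
    "PSK": (26.4, 59.7, 12.5, 1.4, 0.0),
    "TPK": (25.0, 68.1, 6.9, 0.0, 0.0),
    "TSK": (20.6, 28.6, 25.4, 25.4, 0.0),
    "TPASK": (30.2, 58.7, 11.1, 0.0, 0.0),
}


def agreement(row):
    """Share of 'Strongly Agree' plus 'Agree' responses, in percent."""
    return round(row[0] + row[1], 1)


# Each row should sum to roughly 100% (published values are rounded to 0.1%).
for knowledge_type, row in RESULTS.items():
    assert abs(sum(row) - 100) <= 0.15, knowledge_type

# Knowledge types ordered from highest to lowest agreement.
ranked = sorted(RESULTS, key=lambda kt: agreement(RESULTS[kt]), reverse=True)
for kt in ranked:
    print(kt, agreement(RESULTS[kt]))
```

Agreement ranges from 84.4% to 93.1% for every knowledge type except TSK, which reaches only 49.2%. TSK is also the only type with a sizeable disagreement share (25.4%).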

| Observed Performance | Predicted High | Predicted Low | Class Error |
|---|---|---|---|
| High | 5 | 1 | 0.1666667 |
| Low | 0 | 29 | 0.0000000 |
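The class errors in the confusion matrix follow directly from the counts. Each is the fraction of a row's observed cases that were predicted as the other class. The sketch below recomputes them from the table, along with the overall error. It assumes rows are observed classes and columns are predicted classes, and the function names are hypothetical. If, as is usual for `randomForest` output in R, the counts are out-of-bag predictions, the overall figure is the out-of-bag error estimate.

```python
# Counts from the table: rows = observed class, columns = predicted class.
CONFUSION = {
    "High": {"High": 5, "Low": 1},
    "Low": {"High": 0, "Low": 29},
}


def class_errors(cm):
    """Per-class error: 1 - (correct predictions / observed cases of that class)."""
    return {obs: 1 - row[obs] / sum(row.values()) for obs, row in cm.items()}


def overall_error(cm):
    """Overall misclassification rate across all observed cases."""
    total = sum(sum(row.values()) for row in cm.values())
    correct = sum(cm[c][c] for c in cm)
    return 1 - correct / total


print(class_errors(CONFUSION))   # High ≈ 0.1667, Low = 0.0
print(overall_error(CONFUSION))  # ≈ 0.0286, i.e. 1 error in 35 cases
```

The single error falls in the smaller High class (1 of 6 cases), so the per-class error for High is much larger than the overall rate of 1 in 35.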

| Variable | High (Mean Decrease Accuracy) | Low (Mean Decrease Accuracy) | Mean Decrease Accuracy | Mean Decrease Gini |
|---|---|---|---|---|
| V2 | 8.43 | 8.27 | 8.46 | 1.18 |
| V32 | 8.23 | 6.73 | 7.64 | 0.83 |
| V33 | 7.88 | 5.25 | 7.33 | 0.68 |
| V31 | 6.78 | 5.25 | 7.04 | 0.62 |
| V30 | 6.03 | 4.81 | 5.91 | 0.51 |
| V1 | 5.11 | 4.89 | 5.48 | 0.90 |
| V22 | 4.33 | 2.84 | 3.91 | 0.34 |
| V28 | 3.13 | 3.33 | 3.63 | 0.25 |
| V29 | 3.60 | 1.76 | 3.49 | 0.32 |
| V26 | 2.97 | 1.37 | 2.69 | 0.18 |
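“Mean Decrease Accuracy” rests on a permutation idea: shuffle one variable's values across cases and measure how much predictive accuracy drops. The sketch below is a simplified, unscaled version of that idea applied to a single fixed model. It is not the per-tree, out-of-bag procedure of a random forest, and it does not cover Mean Decrease Gini, which sums impurity reductions from splits. The threshold model, the toy rows, and all function names are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows whose prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Mean drop in accuracy when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            permuted = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / repeats)
    return importances


def threshold_model(row):
    """Hypothetical model that uses only feature 0 (for example, an event-log count)."""
    return "High" if row[0] >= 10.5 else "Low"


# Hypothetical rows: (feature 0, feature 1); labels taken from the model itself.
rows = [(2, 7), (5, 1), (7, 9), (9, 3), (10, 4), (11, 8), (13, 2), (16, 6)]
labels = [threshold_model(r) for r in rows]
print(permutation_importance(threshold_model, rows, labels, n_features=2))
```

Feature 1 scores exactly zero because the model never reads it. Shuffling feature 0 breaks the predictions, which is the sense in which variables with high Mean Decrease Accuracy, such as V2 in the table, matter most to the forest.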

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hernández-Ramos, J.; Cáceres-Jensen, L.; Rodríguez-Becerra, J. Educational Computational Chemistry for In-Service Chemistry Teachers: A Data Mining Approach to E-Learning Environment Redesign. Educ. Sci. 2023, 13, 796. https://doi.org/10.3390/educsci13080796
