An Examination of the Structural Validity of Instruments Assessing PE Teachers’ Beliefs, Intentions, and Self-Efficacy towards Teaching Physically Active Classes

Abstract

1. Introduction

Instruments Assessing Teacher Beliefs, Intentions and Their Self-Efficacy

2. Materials and Methods

2.1. Recruitment

2.2. Participants and Setting

2.3. Instruments

2.3.1. Curriculum Beliefs Instrument

2.3.2. Self-Efficacy Instrument

2.3.3. Instruments to Assess Behavioural Intention, Behavioural Control, Attitude and Subjective Norm

2.4. Data Analysis

2.5. Rasch Analysis

2.5.1. Unidimensionality

2.5.2. Model Data Fit

2.5.3. Item/Person Reliability and Separation

2.5.4. Item Invariance/Differential Item Functioning (DIF)

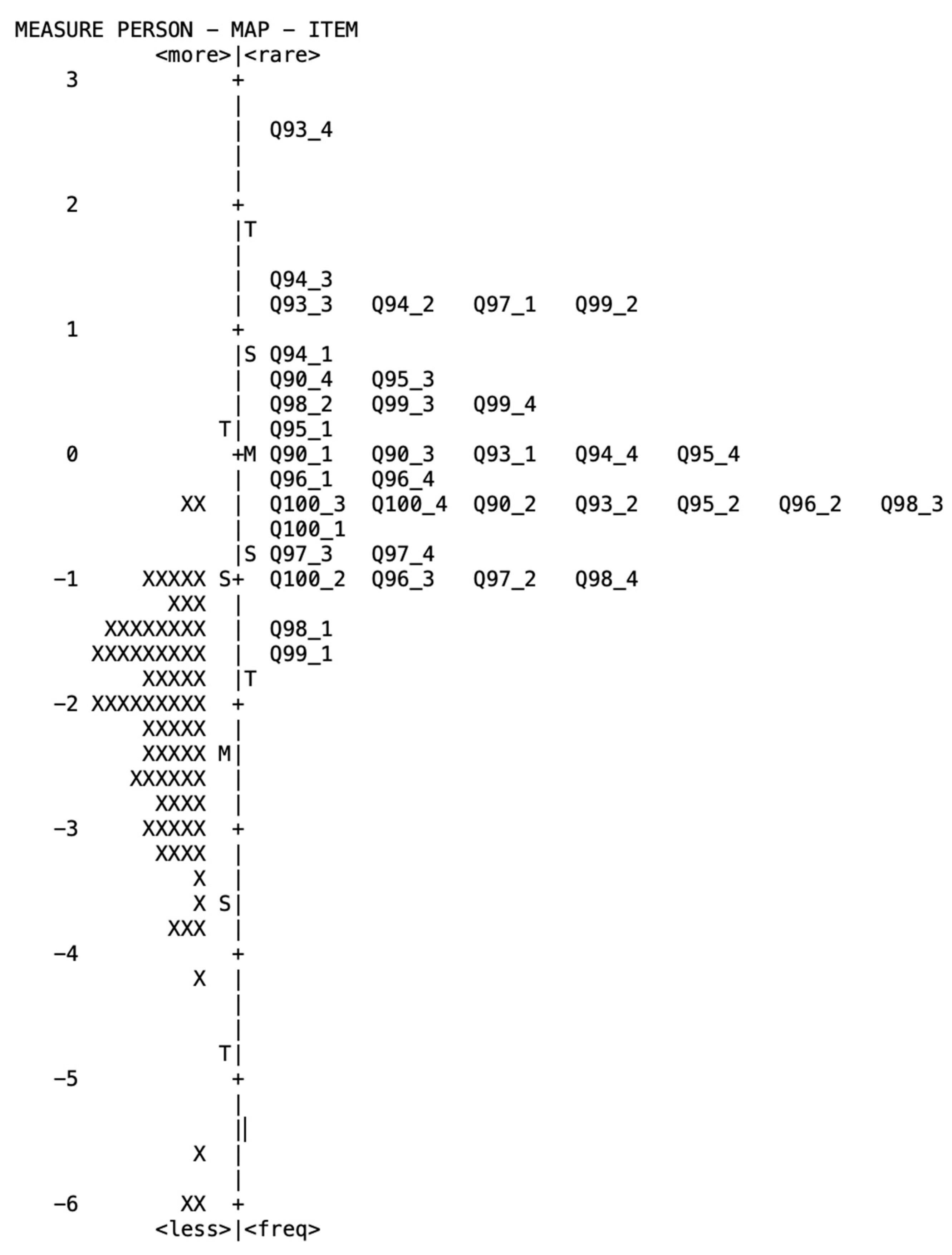

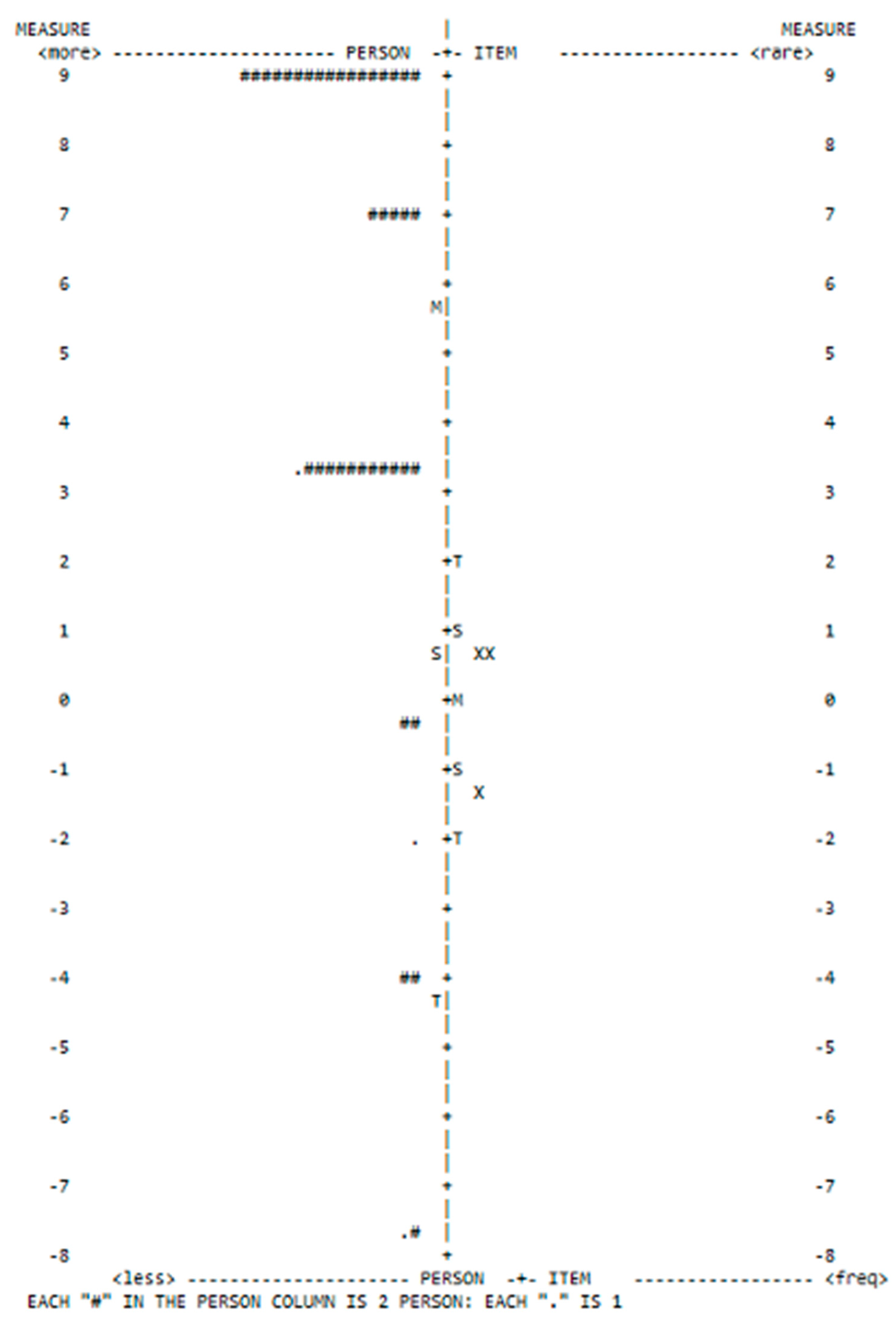

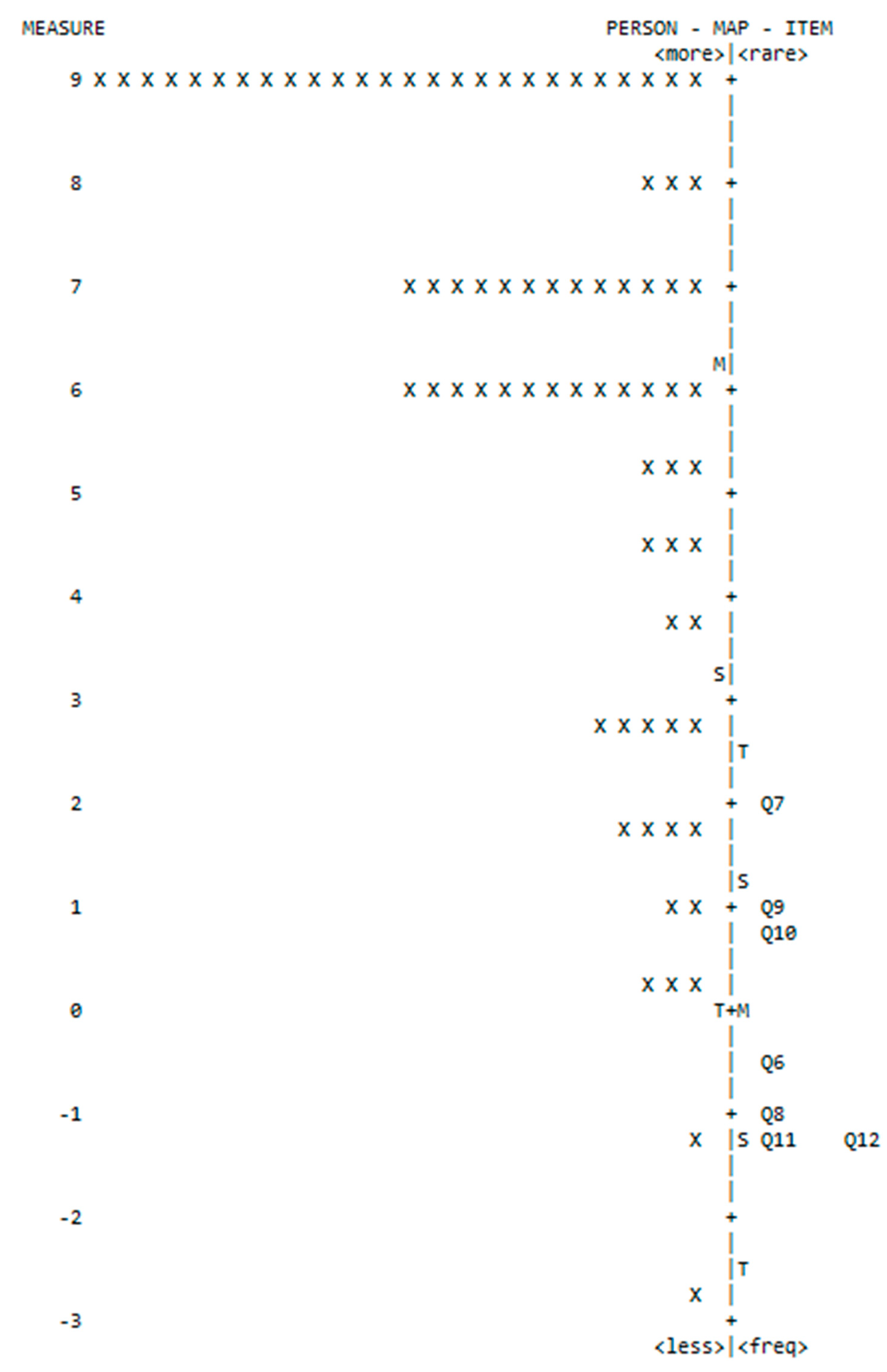

2.5.5. Item-Person Variable (Wright) Map

3. Results

3.1. Curriculum Beliefs Instrument

3.2. Self-Efficacy Instrument

3.3. Behavioural Intentions/Control, Attitude and Subjective Norm Instruments

4. Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameter | RMM Requirements | Curriculum Beliefs (36 Items) | Self-Efficacy (22 Items) | Behavioural Intention (5 Items) | Attitude (7 Items) | Behavioural Control (3 Items) | Subjective Norm (8 Items) |
|---|---|---|---|---|---|---|---|
| Model fit: summary of items | | | | | | | |
| Item mean (SD) logits | 0.00 | 0.00 (0.19) | 0.00 (0.18) | 0.00 (0.45) | 0.00 (0.35) | 0.00 (0.44) | 0.00 (0.16) |
| Item reliability | >0.8 | 0.95 | 0.85 | 0.79 | 0.92 | 0.82 | 0.73 |
| Item separation index | >3.0 | 4.37 | 2.33 | 1.97 | 3.31 | 2.15 | 1.65 |
| Item strata | >3.0 | 6.16 | 3.44 | 2.96 | 4.74 | 3.2 | 2.53 |
| Item spread | Defined as the difference between maximum item logit score and minimum item logit score | 4.22 | 0.78 | 0.43 | 2.58 | 2.28 | 0.85 |
| Item model fit: Infit MnSq range extremes | 0.60 to 1.40 | 0.65 to 1.46 (items 93_2, 97_2) | 0.62 to 1.31 | 0.50 to 1.14 | 0.73 to 1.24 | 0.73 to 1.13 | 0.74 to 1.28 |
| Item model fit: Infit ZSTD range extremes | −2.0 to 2.0 | −2.36 to 2.50 | −2.79 to 1.86 | −1.86 to 0.54 | −0.08 to 0.49 | −1.10 to 0.58 | −1.67 to 1.54 |
| Item model fit: Outfit MnSq range extremes | 0.60 to 1.40 | 0.67 to 1.61 (items 97_2, 93_2) | 0.61 to 1.31 | 0.24 to 1.25 | 0.75 to 1.07 | 0.42 to 0.74 | 0.77 to 1.23 |
| Item model fit: Outfit ZSTD range extremes | −2.0 to 2.0 | −2.21 to 3.22 (items 97_2, 96_4) | −2.58 to 2.21 | −2.27 to 0.73 | −0.72 to 0.34 | −1.04 to −0.31 | −1.45 to 1.30 |
| Model fit: summary of persons | | | | | | | |
| Person mean logits (SD) | | −2.33 (0.33) | 0.36 (0.38) | −3.50 (1.75) | 6.33 (1.32) | 5.75 (2.22) | 1.25 (0.62) |
| Person spread | Defined as the difference between maximum person logit score and minimum person logit score | 5.06 | 9.24 | 3.34 | 10.91 | 16.47 | 4.54 |
| Measurement quality: reliability and targeting | | | | | | | |
| Person reliability | >0.80 for individual measurement | 0.89 | 0.91 | 0.55 [poor] | 0.87 | 0.68 | 0.79 |
| Person separation index | >1.5 | 2.82 | 3.26 | 1.11 | 2.58 | 1.47 | 1.96 |
| Number of separate person strata | >3.0 strata for individual measurement | 4.09 | 4.68 | 1.81 | 3.77 | 2.29 | 2.94 |
| Person raw score reliability | >0.80 for individual measurement | 0.91 | 0.96 | 0.93 | 0.91 | 0.92 | 0.82 |
| Unidimensionality | | | | | | | |
| Variance accounted for by 1st factor | >50% | 34.7% | 36.6% | 54.9% | 60.7% | 77.6% | 40.9% |
| PCA (eigenvalue for 1st contrast) | <2.0 | 6.80 | 4.07 | 1.6 | 1.94 | 1.62 | 2.50 |
| Differential item functioning | | | | | | | |
| DIF by gender | >0.5 logits; p < 0.05 | 1 item possessed DIF (item 96_2) | 0 items possessed DIF | 0 items possessed DIF | 0 items possessed DIF | 0 items possessed DIF | 0 items possessed DIF |
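
The separation, strata, reliability and spread rows in the table are linked by simple arithmetic. The sketch below illustrates those links using the conventional Rasch relations: strata H = (4G + 1)/3 and reliability R = G²/(1 + G²), where G is the separation index. The table does not state how its strata values were computed, so applying these formulas here is an assumption, and the function names (`strata`, `reliability`, `spread`) are illustrative only. The reported strata values agree with H = (4G + 1)/3 to within rounding of the reported separation indices.

```python
def strata(separation: float) -> float:
    """Statistically distinct strata, H = (4G + 1) / 3 (assumed formula)."""
    return (4.0 * separation + 1.0) / 3.0


def reliability(separation: float) -> float:
    """Reliability implied by a separation index, R = G^2 / (1 + G^2)."""
    g_squared = separation ** 2
    return g_squared / (1.0 + g_squared)


def spread(logits) -> float:
    """Spread as defined in the table: maximum logit minus minimum logit."""
    return max(logits) - min(logits)


# Item separation indices copied from the table above.
item_separation = {
    "Curriculum Beliefs": 4.37,
    "Self-Efficacy": 2.33,
    "Behavioural Intention": 1.97,
    "Attitude": 3.31,
    "Behavioural Control": 2.15,
    "Subjective Norm": 1.65,
}

for instrument, g in item_separation.items():
    # Reliability recomputed from a rounded G can differ from the reported
    # value in the second decimal place.
    print(f"{instrument}: strata = {strata(g):.2f}, "
          f"implied reliability = {reliability(g):.2f}")
```

Because the inputs are already rounded to two decimals, small discrepancies against the reported reliabilities are expected; for example, a separation of 2.33 implies a reliability of about 0.84, against the reported 0.85.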


© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Brown, T.D. An Examination of the Structural Validity of Instruments Assessing PE Teachers’ Beliefs, Intentions, and Self-Efficacy towards Teaching Physically Active Classes. Educ. Sci. 2023, 13, 768. https://doi.org/10.3390/educsci13080768
