Characterizing Child–Computer–Parent Interactions during a Computer-Based Coding Game for 5- to 7-Year-Olds

Abstract

1. Introduction

Purpose of the Study

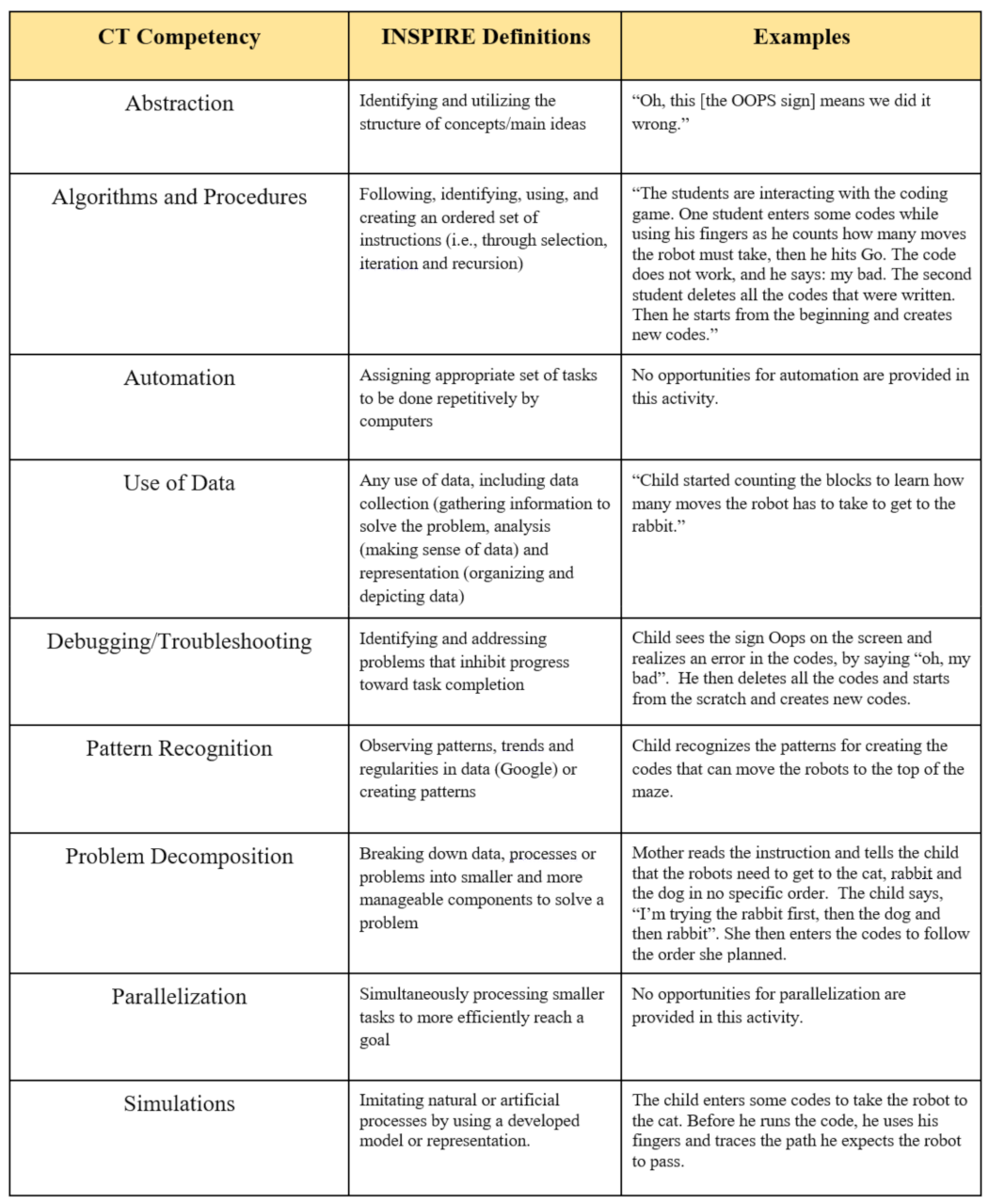

2. Computational Thinking

3. Theoretical and Conceptual Background

3.1. Child–Computer (Coding Game) Interaction

3.2. Child–Parent Interaction

4. Methods

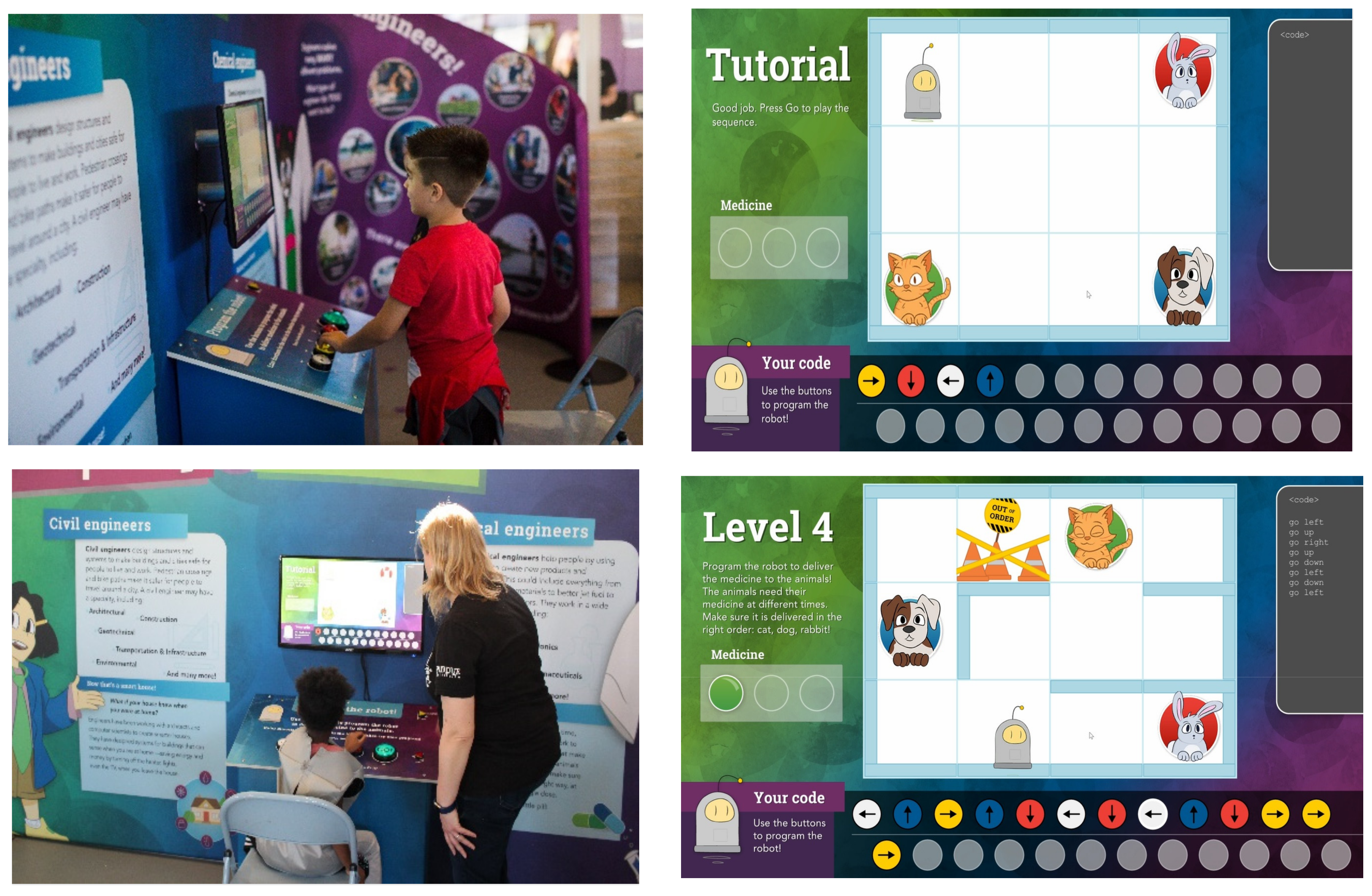

4.1. Context

4.2. Participants

4.3. Procedure

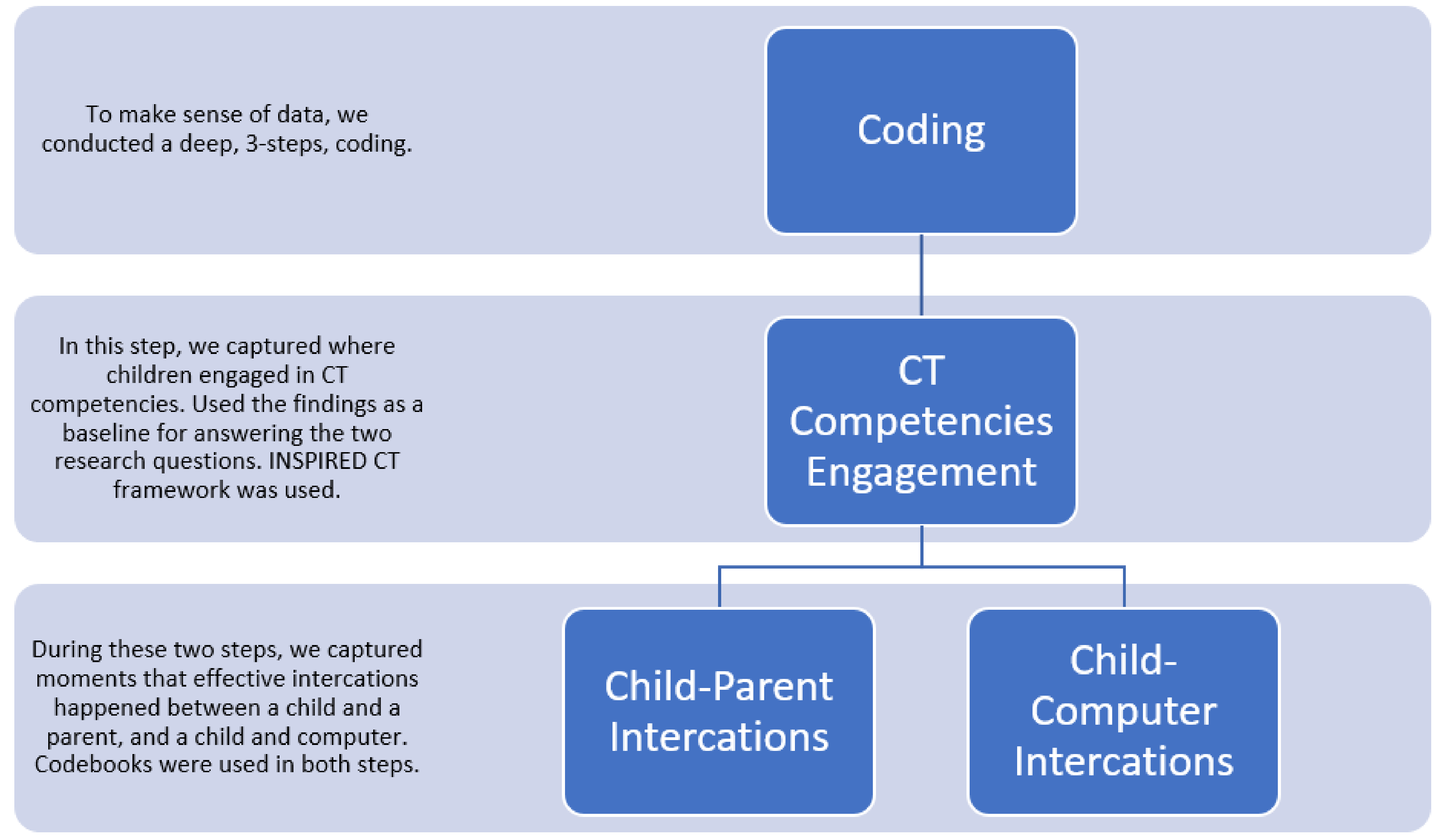

4.4. Data Analysis

5. Findings

5.1. Child–Computer Interactions

5.1.1. Using Buttons

5.1.2. Reading Written Instructions on the Keyboard

5.1.3. Reading Written Instructions on the Display

5.1.4. Interacting with the Map

5.1.5. Reading Code Log

5.1.6. Reading Robot Text-Based Code Log

5.1.7. Reading Medicine Bar

5.1.8. Receiving Feedback from Running Codes

5.1.9. Watching the Robot Move

5.1.10. Combinations of Interactions

5.2. Parent–Child Interaction

5.2.1. Parent Supervising/Directing

5.2.2. Parent Facilitation

5.2.3. Child–Parent Co-Learning

5.2.4. Parent Becoming a Student of the Child

5.2.5. Parent Disengagement

5.2.6. Parent Encouragement

6. Discussion

6.1. Implications for Designers

6.2. Implications for Educators and Facilitators

7. Conclusions

Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Role | Freedom | Definition | General Examples |
|---|---|---|---|
| Supervising/Directing [2,3,4] | Most adult-led | Parent directly instructs child to act in a specific way. | “You guys do the same path in there. So you’ve gotta go to your right” |
| Facilitation [3,4] | Adult-led | Parent makes suggestions and prompts the child to think in a specific way. | “Do you not think that would have been quicker if you went to red first?” |
| Co-learning [4] | No leader | Parent and child work on a task together; neither is the leader and no prompting occurs. Parent and child share information with each other. | Parent shows the current location of the robot in the game while the child enters instructions: “Oh, these are walls!” |
| Student of the Child [3,4] | Child-led | Parent prompts the child to take the lead in the activity. | “So we know that you got the cat. Now what?” |
| Disengaged | Most child-led | The parent completely disengages from the activity, leaving the child to continue on their own. | “The mom says ‘Do what you want.’ and she steps back” |
| Encouragement [4] | Ancillary | Parent reassures or encourages the child while they are working on a task or after they complete a task. | “Awesome!” “You found the best answer” |
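
The parent roles in the table above form a small coding scheme, and one way to picture applying it to coded excerpts is as a lookup plus a tally. The following is only a minimal sketch under stated assumptions: the names `ParentRole`, `CodedSegment`, and `tally_roles` are hypothetical and do not come from the study, the excerpts are the general examples quoted in the table, and the resulting counts illustrate the data structure rather than any finding.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ParentRole(Enum):
    """Parent roles from the table, each paired with its 'Freedom' label."""
    SUPERVISING_DIRECTING = "Most adult-led"
    FACILITATION = "Adult-led"
    CO_LEARNING = "No leader"
    STUDENT_OF_THE_CHILD = "Child-led"
    DISENGAGED = "Most child-led"
    ENCOURAGEMENT = "Ancillary"


@dataclass(frozen=True)
class CodedSegment:
    """One coded excerpt; the field names are hypothetical, not the study's schema."""
    excerpt: str
    role: ParentRole


def tally_roles(segments):
    """Count how many coded segments fall under each parent role."""
    return Counter(segment.role for segment in segments)


# Excerpts reused from the table's "General Examples" column, for illustration only.
segments = [
    CodedSegment("So you've gotta go to your right", ParentRole.SUPERVISING_DIRECTING),
    CodedSegment("So we know that you got the cat. Now what?", ParentRole.STUDENT_OF_THE_CHILD),
    CodedSegment("Awesome!", ParentRole.ENCOURAGEMENT),
    CodedSegment("You found the best answer", ParentRole.ENCOURAGEMENT),
]

counts = tally_roles(segments)
for role in ParentRole:
    # Roles with no coded segment report a count of zero.
    print(f"{role.name:<22} {role.value:<15} {counts[role]}")
```

Iterating over the enumeration rather than over the tally keeps the rows in the table's order, from most adult-led to most child-led, with the ancillary Encouragement role last.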

References

- Papert, S. Mindstorms: Children, Computers, and Powerful Ideas; Basic Books, Inc.: New York, NY, USA, 1980. [Google Scholar]

- Resnick, M. Computer as paint brush: Technology, play, and the creative society. In Play = Learning: How Play Motivates and Enhances Children’s Cognitive and Social-Emotional Growth; Oxford University Press: New York, NY, USA, 2006; pp. 192–208. [Google Scholar]

- Resnick, M. All I really need to know (about creative thinking) I learned (by studying how children learn) in kindergarten. In Proceedings of the 6th ACM SIGCHI Conference on Creativity & Cognition, Washington, DC, USA, 13 June 2007; pp. 1–6. [Google Scholar]

- Wing, J.M. Computational thinking. Commun. ACM 2006, 49, 33–35. [Google Scholar] [CrossRef]

- Lu, J.J.; Fletcher, G.H. Thinking about computational thinking. ACM SIGCSE Bull. 2009, 41, 260–264. [Google Scholar] [CrossRef]

- Grover, S.; Pea, R. Computational thinking in K–12: A review of the state of the field. Educ. Res. 2013, 42, 38–43. [Google Scholar] [CrossRef]

- Escherle, N.A.; Ramirez-Ramirez, S.I.; Basawapatna, A.R.; Assaf, D.; Repenning, A.; Maiello, C.; Nolazco-Flores, J.A. Piloting computer science education week in Mexico. In Proceedings of the 47th ACM Technical Symposium on Computing Science Education, Memphis, TN, USA, 2–5 March 2016; pp. 431–436. [Google Scholar]

- Yadav, A.; Hong, H.; Stephenson, C. Computational thinking for all: Pedagogical approaches to embedding 21st century problem solving in K-12 classrooms. TechTrends 2016, 60, 565–568. [Google Scholar] [CrossRef]

- Buckingham, D. Do we really need media education 2.0? Teaching media in the age of participatory culture. In New Media and Learning in the 21st Century; Lin, T., Chen, D., Chai, V., Eds.; Springer: Singapore, 2015; pp. 9–21. [Google Scholar]

- Hynes, M.M.; Moore, T.J.; Cardella, M.E.; Tank, K.M.; Purzer, S.; Menekse, M.; Brophy, S.; Yeter, I.; Ehsan, H. Inspiring Young Children to Engage in Computational Thinking In and Out of School (Research to Practice). In Proceedings of the 2019 American Society for Engineering Education Annual Conference & Exposition, Tampa, FL, USA, 16–19 June 2019. [Google Scholar]

- Martin, C. Coding as Literacy. In The International Encyclopedia of Media Literacy; Wiley-Blackwell: Hoboken, NJ, USA, 2019; pp. 1–8. [Google Scholar]

- Ehsan, H.; Cardella, M. Capturing the Computational Thinking of Families with Young Children in Out-of-School Environments. In Proceedings of the 2017 American Society for Engineering Education Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017. [Google Scholar]

- Huang, W.; Batura, A.; Seah, T.L. The design and implementation of “unplugged” game-based learning in computing education. SocArXiv 2020. [Google Scholar] [CrossRef]

- Chou, P.N. Using ScratchJr to Foster Young Children’s Computational Thinking Competence: A Case Study in a Third-Grade Computer Class. J. Educ. Comput. Res. 2020, 58, 570–595. [Google Scholar] [CrossRef]

- Southgate, E.; Smith, S.P.; Cividino, C.; Saxby, S.; Kilham, J.; Eather, G.; Bergin, C. Embedding immersive virtual reality in classrooms: Ethical, organisational and educational lessons in bridging research and practice. Int. J. Child-Comput. Interact. 2019, 19, 19–29. [Google Scholar] [CrossRef]

- Lye, S.Y.; Koh, J.H.L. Review on teaching and learning of computational thinking through programming: What is next for K-12? Comput. Hum. Behav. 2014, 41, 51–61. [Google Scholar] [CrossRef]

- Stevens, R.; Bransford, J.; Stevens, A. The LIFE Center’s Lifelong and Life wide Diagram. 2005. Available online: http://life-slc.org (accessed on 23 January 2023).

- Dorie, B.L.; Jones, T.R.; Pollock, M.C.; Cardella, M. Parents as Critical Influence: Insights from five different studies. In Proceedings of the 2014 American Society for Engineering Education Annual Conference & Exposition, Indianapolis, IN, USA, 15 June 2014. [Google Scholar]

- Dorie, B.L.; Cardella, M. Parental Strategies for Introducing Engineering: Connections from the Home. In Proceedings of the 2nd P-12 Engineering and Design Education Research Summit, Washington, DC, USA, 26–28 April 2012. [Google Scholar]

- Ehsan, H.; Rehmat, A.; Osman, H.; Yeter, I.; Ohland, C.; Cardella, M. Examining the Role of Parents in Promoting Computational Thinking in Children: A Case Study on one Homeschool Family (Fundamental). In Proceedings of the 2019 American Society for Engineering Education Annual Conference & Exposition, Tampa, FL, USA, 16–19 June 2019. [Google Scholar]

- Ehsan, H.; Beebe, C.; Cardella, M. Promoting Computational Thinking in Children Using Apps. In Proceedings of the 2017 American Society for Engineering Education Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017. [Google Scholar]

- Gomes, T.C.S.; Falcão, T.P.; Tedesco, P.C.D.A.R. Exploring an approach based on digital games for teaching programming concepts to young children. Int. J. Child-Comput. Interact. 2018, 16, 77–84. [Google Scholar] [CrossRef]

- Yu, J.; Roque, R. A review of computational toys and kits for young children. Int. J. Child-Comput. Interact. 2019, 21, 17–36. [Google Scholar] [CrossRef]

- Ehsan, H.; Rehmat, A.P.; Cardella, M.E. Computational thinking embedded in engineering design: Capturing computational thinking of children in an informal engineering design activity. Int. J. Technol. Des. Educ. 2021, 31, 441–464. [Google Scholar] [CrossRef]

- Armoni, B.M. Designing a K-12 computing curriculum. ACM Inroads 2013, 4, 34–35. [Google Scholar] [CrossRef]

- Duncan, C.; Bell, T. A pilot computer science and programming course for primary school students. In Proceedings of the Workshop in Primary and Secondary Computing Education, London, UK, 9–11 November 2015; pp. 1–10. [Google Scholar]

- Google. Computational Thinking Concepts Guide. 2020. Available online: https://docs.google.com/document/d/1Hyb2WKJrjT7TeZ2ATq6gsBhkQjSZwTH-xfpVMFEn2F8/edit (accessed on 25 March 2021).

- Grover, S.; Cooper, S.; Pea, R. Assessing computational learning in K-12. In Proceedings of the 2014 Conference on Innovation & Technology in Computer Science Education, Uppsala, Sweden, 23–25 June 2014; pp. 57–62. [Google Scholar]

- Barr, V.; Stephenson, C. Bringing computational thinking to K-12: What is Involved and what is the role of the computer science education community? ACM Inroads 2011, 2, 48–54. [Google Scholar] [CrossRef]

- Shute, V.J.; Sun, C.; Asbell-Clarke, J. Demystifying computational thinking. Educ. Res. Rev. 2017, 22, 142–158. [Google Scholar] [CrossRef]

- BBC. Bitesize. Available online: https://www.bbc.com/education/topics/z7tp34j (accessed on 25 March 2021).

- Brennan, K.; Resnick, M. New frameworks for studying and assessing the development of computational thinking. In Proceedings of the 2012 Annual Meeting of the American Educational Research Association, Vancouver, BC, Canada, 13–17 April 2012; Volume 1, p. 25. [Google Scholar]

- Weintrop, D.; Beheshti, E.; Horn, M.; Orton, K.; Jona, K.; Trouille, L.; Wilensky, U. Defining computational thinking for mathematics and science classrooms. J. Sci. Educ. Technol. 2016, 25, 127–147. [Google Scholar] [CrossRef]

- Dasgupta, A.; Purzer, S. No patterns in pattern recognition: A systematic literature review. In Proceedings of the 2016 IEEE Frontiers in Education Conference (FIE), Erie, PA, USA, 12–15 October 2016; pp. 1–3. [Google Scholar]

- Dasgupta, A.; Rynearson, A.M.; Purzer, S.; Ehsan, H.; Cardella, M.E. Computational thinking in K-2 classrooms: Evidence from student artifacts (fundamental). In Proceedings of the 2017 American Society for Engineering Education Annual Conference & Exposition, Columbus, OH, USA, 25–28 June 2017. [Google Scholar]

- Ehsan, H.; Ohland, C.; Dandridge, T.; Cardella, M. Computing for the Critters: Exploring Computational Thinking of Children in an Informal Learning Setting. In Proceedings of the 2018 IEEE Frontiers in Education Conference, San José, CA, USA, 3–6 October 2018. [Google Scholar]

- Ehsan, H.; Dandridge, T.; Yeter, I.; Cardella, M. K-2 Students’ Computational Thinking Engagement in Formal and Informal Learning Settings: A Case Study (Fundamental). In Proceedings of the 2018 American Society for Engineering Education Annual Conference & Exposition, Salt Lake City, UT, USA, 24–27 June 2018. [Google Scholar]

- Fagundes, B.; Ehsan, H.; Moore, T.J.; Tank, K.M.; Cardella, M.E. WIP: First-graders’ Computational Thinking in Informal Learning Settings. In Proceedings of the 2020 American Society for Engineering Education Annual Conference & Exposition, Online, 22–26 June 2020. [Google Scholar]

- Wing, J.M. Computational Thinking: What and Why? Unpublished manuscript. 2010. Available online: http://www.cs.cmu.edu/~CompThink/papers/TheLinkWing.pdf (accessed on 15 April 2021).

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1978. [Google Scholar]

- Piaget, J. Development and learning. Readings on the development of children. Piaget Rediscovered 1972, 25–33. [Google Scholar]

- Duncan, C.; Bell, T.; Tanimoto, S. Should your 8-year-old learn coding? In Proceedings of the 9th Workshop in Primary and Secondary Computing Education, Berlin, Germany, 5–7 November 2014; pp. 60–69. [Google Scholar]

- Weintrop, D.; Wilensky, U. How block-based, text-based, and hybrid block/text modalities shape novice programming practices. Int. J. Child-Comput. Interact. 2018, 17, 83–92. [Google Scholar] [CrossRef]

- Long, D.; McKlin, T.; Weisling, A.; Martin, W.; Blough, S.; Voravong, K.; Magerko, B. Out of tune: Discord and learning in a music programming museum exhibit. In Proceedings of the Interaction Design and Children Conference, London, UK, 21–24 June 2020; pp. 75–86. [Google Scholar]

- Sullivan, A.A.; Bers, M.U.; Mihm, C. Imagining, playing, and coding with KIBO: Using robotics to foster computational thinking in young children. In Proceedings of the International Conference on Computational Thinking Education, Hong Kong, 13–15 July 2017; p. 110. [Google Scholar]

- Code Spark Academy. Learn. Code. Play. codeSpark Academy with The Foos. Available online: https://codespark.com (accessed on 23 January 2023).

- Hsi, S.; Eisenberg, M. Math on a sphere: Using public displays to support children’s creativity and computational thinking on 3D surfaces. In Proceedings of the 11th International Conference on Interaction Design and Children, Bremen, Germany, 12–15 June 2012; pp. 248–251. [Google Scholar]

- Dornbusch, S.M.; Ritter, P.L.; Leiderman, P.H.; Roberts, D.F.; Fraleigh, M.J. The relation of parenting style to adolescent school performance. Child Dev. 1987, 58, 1244–1257. [Google Scholar] [CrossRef]

- Fan, X.; Chen, M. Parental involvement and students’ academic achievement: A meta-analysis. Educ. Psychol. Rev. 2001, 13, 1–22. [Google Scholar] [CrossRef]

- Ninio, A.; Bruner, J. The achievement and antecedents of labelling. J. Child Lang. 1978, 5, 1–15. [Google Scholar] [CrossRef]

- Pattison, S.; Cardella, M.; Ehsan, H.; Ramos-Montanez, S.; Svarovsky, G.; Portsmore, M.; Milto, E.; McCormick, M.; Antonio-Tunis, C.; Sanger, S.T. Early Childhood Engineering: Supporting Engineering Design Practices with Young Children and Their Families. NARST. (conference cancelled). 2020. Available online: https://www.informalscience.org/early-childhood-engineering-supporting-engineering-design-practices-young-children-and-their (accessed on 15 December 2021).

- Yun, J.; Cardella, M.E.; Purzer, S. Parents’ Roles in K-12 Education: Perspectives from Science and Engineering Education Research. In Proceedings of the American Educational Research Association Annual Conference, Denver, CO, USA, 4 May 2010. [Google Scholar]

- Crowley, K.; Callanan, M.A.; Jipson, J.L.; Galco, J.; Topping, K.; Shrager, J. Shared scientific thinking in everyday parent-child activity. Sci. Educ. 2001, 85, 712–732. [Google Scholar] [CrossRef]

- Palmquist, S.; Crowley, K. From teachers to testers: How parents talk to novice and expert children in a natural history museum. Sci. Educ. 2007, 91, 783–804. [Google Scholar] [CrossRef]

- Ehsan, H.; Cardella, M.E. Capturing Children with Autism’s Engagement in Engineering Practices: A Focus on Problem Scoping. J. Pre-Coll. Eng. Educ. Res. 2020, 10, 2. [Google Scholar] [CrossRef]

- Svarovsky, G.N.; Wagner, C.; Cardella, M.E. Exploring moments of agency for girls during an engineering activity. Int. J. Educ. Math. Sci. Technol. 2018, 6, 302–319. [Google Scholar] [CrossRef]

- Rehmat, A.P.; Ehsan, H.; Cardella, M.E. Instructional strategies to promote computational thinking for young learners. J. Digit. Learn. Teach. Educ. 2020, 36, 46–62. [Google Scholar] [CrossRef]

- Yu, J.; Bai, C.; Roque, R. Considering Parents in Coding Kit Design: Understanding Parents’ Perspectives and Roles. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20). Association for Computing Machinery, New York, NY, USA, 25–30 April 2020; pp. 1–14. [Google Scholar] [CrossRef]

- Powell, A.B.; Francisco, J.M.; Maher, C.A. An analytical model for studying the development of learners’ mathematical ideas and reasoning using videotape data. J. Math. Behav. 2003, 22, 405–435. [Google Scholar] [CrossRef]

- Ohland, C.; Ehsan, H.; Cardella, M. Parental Influence on Children’s Computational Thinking in an Informal Setting. In Proceedings of the 2019 American Society for Engineering Education Annual Conference & Exposition, Tampa, FL, USA, 16–19 June 2019. [Google Scholar]

- Ehsan, H.; Leeker, J.; Cardella, M. Examining Children’s Engineering Practices During an Engineering Activity in a Designed Learning Setting: A Focus on Troubleshooting (Fundamental). In Proceedings of the 2018 American Society for Engineering Education Annual Conference & Exposition, Salt Lake City, UT, USA, 24–27 June 2018. [Google Scholar]

- Griffith, S.F.; Arnold, D.H. Home learning in the new mobile age: Parent–child interactions during joint play with educational apps in the US. J. Child. Media 2019, 13, 1–19. [Google Scholar] [CrossRef]

- Papavlasopoulou, S.; Giannakos, M.N.; Jaccheri, L. Exploring children’s learning experience in constructionism-based coding activities through design-based research. Comput. Hum. Behav. 2019, 99, 415–427. [Google Scholar] [CrossRef]

- Wang, L.C.; Chen, M.P. The effects of game strategy and preference-matching on flow experience and programming performance in game-based learning. Innov. Educ. Teach. Int. 2010, 47, 39–52. [Google Scholar] [CrossRef]

- Jiau, H.C.; Chen, J.C.; Ssu, K.F. Enhancing self-motivation in learning programming using game-based simulation and metrics. IEEE Trans. Educ. 2009, 52, 555–562. [Google Scholar] [CrossRef]

- Lee, T.Y.; Mauriello, M.L.; Ahn, J.; Bederson, B.B. CTArcade: Computational thinking with games in school age children. Int. J. Child-Comput. Interact. 2014, 2, 26–33. [Google Scholar] [CrossRef]

- Droit-Volet, S.; Zélanti, P.S. Development of time sensitivity and information processing speed. PLoS ONE 2013, 8, e71424. [Google Scholar] [CrossRef] [PubMed]

- Moore, T.J.; Brophy, S.P.; Tank, K.M.; Lopez, R.D.; Johnston, A.C.; Hynes, M.M.; Gajdzik, E. Multiple representations in computational thinking tasks: A clinical study of second-grade students. J. Sci. Educ. Technol. 2020, 29, 19–34. [Google Scholar] [CrossRef]

- Sapounidis, T.; Demetriadis, S.; Papadopoulos, P.M.; Stamovlasis, D. Tangible and graphical programming with experienced children: A mixed methods analysis. Int. J. Child-Comput. Interact. 2019, 19, 67–78. [Google Scholar] [CrossRef]

- Allsop, Y. Assessing computational thinking process using a multiple evaluation approach. Int. J. Child-Comput. Interact. 2019, 19, 30–55. [Google Scholar] [CrossRef]

- Sadka, O.; Zuckerman, O. From Parents to Mentors: Parent-Child Interaction in Co-Making Activities. In Proceedings of the 2017 Conference on Interaction Design and Children, Stanford, CA, USA, 27–30 June 2017; pp. 609–615. [Google Scholar] [CrossRef]

- Hiniker, A.; Lee, B.; Kientz, J.A.; Radesky, J.S. Let’s Play! Digital and Analog Play Patterns between Preschoolers and Parents. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Montreal, QC, Canada, 21–26 April 2018; pp. 1–13. [Google Scholar] [CrossRef]

- Barron, B.; Martin, C.K.; Takeuchi, L.; Fithian, R. Parents as learning partners in the development of technological fluency. Int. J. Learn. Media 2009, 1, 55–77. [Google Scholar] [CrossRef]

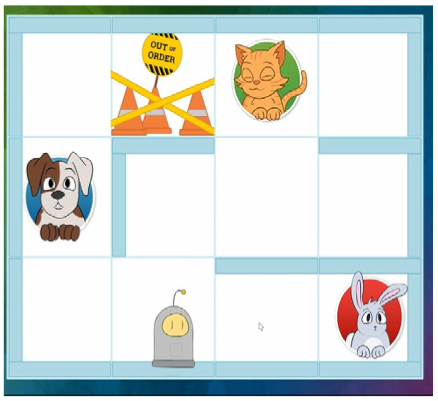

| Interactions | Image from the Exhibit | Description | Examples |

|---|---|---|---|

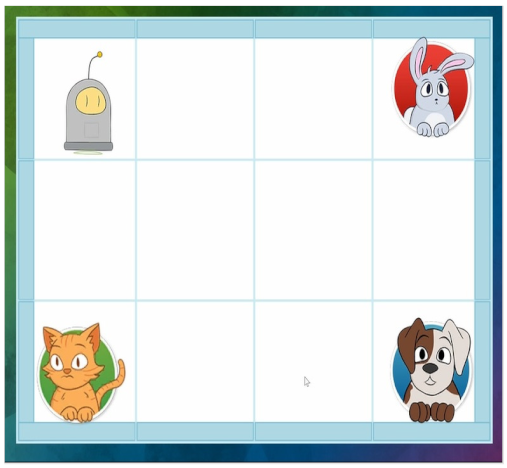

| Using Buttons |  | The child uses the buttons to enter moves for the robot to follow. | The child begins entering instructions for the robot using the buttons. |

| Reading Written Instructions on the Keyboard |  | The child reads the text on the keyboard or the parent reads the text aloud to the child. | The mother reads the instructions on the keyboard aloud. |

| Reading Written Instructions on the Display |  | The child or a parent reads the instructions on the display (i.e., on the computer screen). | The code fails because the medicine must be delivered in a specific order. The mother prompts the child to read the on-screen instructions. |

| Interacting with the Map |  | The child observes or interacts with the on-screen map. The child may trace different paths, tap out different moves, or simply look at and follow a path in their head. | The child keeps track of the robot’s position by placing his finger on the display and following the path through the map. |

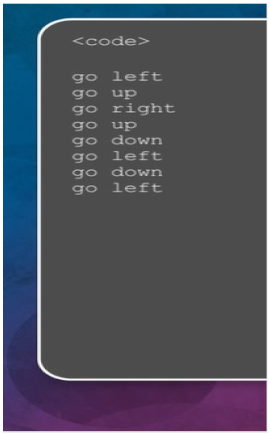

| Reading the Code Log |  | The child looks through recorded moves in the code log, to find a mistake or to identify the robot’s location at some point in the code. | The child seems to get lost, and the mother tells them to use the code path to see where the robot is at the current point in their path. They follow the moves in the log, but skips a move entered in error earlier. |

| Reading Robot Text-based Code log |  | The child reads back and uses information in the robot’s text-based code to identify an error or understand its movement. | N/A |

| Reading Medicine Bar |  | The child uses the medicine bar to understand which of the three animals the robot has already visited. | N/A |

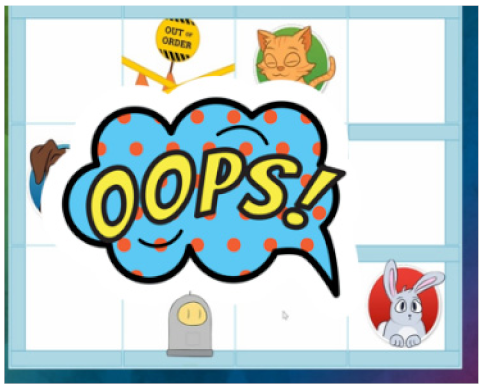

| Receiving Feedback after Running Code |  | The child observes the “OOPS” bubble in a failed path or reads the text displayed at the end of the level. | The robot crashes and the “OOPS” bubble is displayed. The child begins searching for the error in his code. |

| Watching the Robot Move |  | The child closely observes the motion of the robot during a test of the directions they have entered. | N/A |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ehsan, H.; Ohland, C.; Cardella, M.E. Characterizing Child–Computer–Parent Interactions during a Computer-Based Coding Game for 5- to 7-Year-Olds. Educ. Sci. 2023, 13, 164. https://doi.org/10.3390/educsci13020164
