Abstract

Student engagement is recognised as a critical factor linked to student success and learning outcomes. The same holds true for online learning in higher education, where demand for this mode of learning has grown worldwide over several decades and has escalated further as a result of COVID-19. At the same time, teachers in higher education are increasingly able to access and use tools that identify and analyse student online behaviours, such as tracking evidence of engagement and non-engagement. However, even with significant headway in fields such as learning analytics, how to make sense of these data, and how to use them to inform interventions and refine teaching approaches, remain areas that would benefit from further insight and exploration. This paper reports on a project that investigated whether low levels of student online engagement at a regional university could be raised through a course-specific intervention strategy designed to address student engagement with online materials. The intervention used course learning analytics data (CLAD) in combination with the behavioral science concept of nudging as a strategy for increasing student engagement with online content. The study gathered qualitative and quantitative data to explore the impact of nudging on the engagement of 187 students across two disciplines, Education and Regional/Town Planning. The results revealed not only that the nudge intervention was successful in increasing levels of engagement in online courses, but also that certain prerequisites for nudging needed to be in place to increase success rates. The paper points to the value of broader awareness, uptake, and use of learning analytics, as well as nudging, at the course, program, and institutional level to support student online engagement.

1. Introduction

Student engagement, defined as the purposeful focus on formal and informal learning, is recognised as a significant factor linked to learning outcomes, degree completion, persistence and student success [1,2]. At the same time, online learning in higher education has increased exponentially across the globe, with the access and equity it offers opening doors for a diverse group of learners [3]. Given this, the combination of online learning and the significance of engagement has led to a surge in momentum and focus by teachers, researchers, and institutions alike, with an interest in exploring this phenomenon further, including the use of ‘nudging’ and the potential of learning analytics to track and make sense of online student behavior [4].

As universities increase the proportion of courses offered partly or fully through online learning platforms, student disengagement with online content is becoming a critical issue, yet strategies to increase online engagement remain, to a large extent, unexplored [5]. Many higher education institutions, including the context for this study, are therefore grappling with the question of how to address and improve student engagement in online learning contexts, particularly the engagement of low- or non-engaged learners. Indeed, students who study online face several unique challenges that educators must consider when designing and implementing an online course.

One such challenge, also evident within the context of the present study, is the isolated nature of online learning environments [6,7]. Another is that online learning environments are frequently typified by fewer of the prompts and sources of reinforcement (arising, for example, from interactions with instructors and peers) that keep learners on task with the learning objectives [8]. With fewer outside prompts from lecturers and peers, students need to be self-motivated, able to self-direct their learning, and comfortable working and learning in an online environment [9], and they need to be adept at time management [10].

Students who have difficulties with these self-regulation skills may struggle to engage with online learning materials, which directly affects learning outcomes and learner success. Such students may, for example, find their commitment to online learning easily displaced by other priorities [11]. This need for self-responsibility in online learning, combined with limited face-to-face contact, can further lead to a sense of isolation or disconnectedness [12,13]. There is also the potential for students to feel overwhelmed by the online environment [10].

This paper reports on a study that used a nudge intervention to address low levels of online engagement by students, particularly in the early weeks of semester and prior to assessment due dates, given concerns about the impact of this low engagement on student success and the quality of the student experience. Details are provided on the intervention, which used course learning analytics data (CLAD) in combination with the behavioral science concept of nudging as a strategy for increasing student engagement with online content. The paper then presents the findings of the study, followed by a discussion of implications and the potential for future research. This paper addresses a gap in the existing body of knowledge about aligning educational nudges with learning analytics click data reports.

2. Literature Review

2.1. Monitoring Student Engagement with Online Course Materials Using Learning Analytics Data

Student engagement has been defined as an investment or commitment [14], a level of student participation [15], an effortful involvement in learning [16], a set of behaviours in learning, and a prerequisite for learning [17]. It is a desired outcome for the higher education sector, as it has been shown to influence graduation rates, classroom motivation, and course achievement [15,18]. It is also an indicator of how well students are progressing towards desirable academic outcomes [19]. By monitoring student engagement and non-engagement, course instructors can identify students who may need additional support and motivation.

In identifying and remedying students’ non-engagement with online learning materials, learning analytics data (LAD) are increasing in popularity and prominence within the higher education sector [20]. Increasingly sophisticated applications of LAD enable universities to identify student behaviors and analyze student success data, and hence to inform, improve, tailor and refine pedagogical practices, resources and approaches to online learning so that they better meet students’ learning requirements [21]. While LAD platforms continue to be refined, they can already show individual student activity at various levels of detail. This includes which resources and activities have been accessed (but not necessarily read), when and how often students have logged into the system, when students have accessed assignments, and when students have attempted to submit their completed assignments [22]. LAD are also useful for identifying trends across classes, such as a particular group of students not engaging in online discussions or not handing in pieces of work [18].
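As a rough illustration of how the access details listed above can be derived, the following Python sketch aggregates a raw activity log into per-student, per-resource summaries (number of clicks, first and last access). The log format, a list of `(student_id, resource_id, timestamp)` tuples, and the function name `summarise_access` are hypothetical; real learning management system exports use their own schemas, and a logged access still says nothing about whether a resource was read.

```python
from datetime import datetime

# Hypothetical log record: (student_id, resource_id, timestamp).
Event = tuple[str, str, datetime]


def summarise_access(events: list[Event]) -> dict[tuple[str, str], dict]:
    """Aggregate raw click events into one summary per (student, resource) pair."""
    summary: dict[tuple[str, str], dict] = {}
    for student, resource, ts in events:
        entry = summary.setdefault(
            (student, resource), {"clicks": 0, "first": ts, "last": ts}
        )
        entry["clicks"] += 1                      # every logged click counts once
        entry["first"] = min(entry["first"], ts)  # earliest access seen so far
        entry["last"] = max(entry["last"], ts)    # most recent access seen so far
    return summary


# Illustrative events only, not data from the study (deliberately out of order).
log = [
    ("s1", "assignment_guidelines", datetime(2018, 3, 2, 14, 30)),
    ("s1", "assignment_guidelines", datetime(2018, 3, 1, 9, 0)),
    ("s2", "module_1", datetime(2018, 3, 1, 11, 15)),
]
summary = summarise_access(log)
print(summary[("s1", "assignment_guidelines")]["clicks"])  # 2
```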

While the development and implementation of LAD programs are important for helping to identify students requiring intervention, there is arguably little point in implementing such programs unless they are accompanied by interventions and pedagogical strategies aimed at, for example, changing student behavior, refining online student support, or refining online teaching approaches. Yet despite the important role interventions play in motivating changes in student engagement behavior, the design of innovative LAD-based interventions and related reporting of any evidence of impact have been rare [23]. Presently, the most commonly discussed type of course-specific intervention is the provision of personalized advice or support from course instructors [24]. Although this type of intervention has been shown to be generally effective [24], significant workload barriers often impede the capacity of instructors to provide such personalized support, especially to large or highly diverse student cohorts [25]. Given the potential for LAD-based interventions to motivate behavioral change, there is indeed a need to focus on the development and testing of such interventions.

2.2. Nudging, a CLAD-Based Intervention

Thaler and Sunstein [26] define a nudge as “any aspect of choice architecture that alters behavior in a predictable way without forbidding any options or significantly changing their economic incentives” (p. 6). The concept of nudging emerged from behavioral science and offers a novel approach that could potentially be used to motivate online learning behavior. Nudge theory recognizes that people do not always act in ways that serve their own best interests and, as such, suggests that individual decision-making can be improved by using simple nudge strategies to change people’s behavior in a predictable way, without preventing people from choosing to avoid the nudged behavior [26]. By appealing to individual psychology, effective nudges increase the likelihood of people making choices that reflect their underlying best interests, while still respecting their freedom to choose [27].

In higher education, the use of nudging is nascent but growing [28]. Nudges trialed in a pedagogical context include, for example, social-comparison messages (worse/better than [X]% of the class) [29,30] and prompts (e.g., “We can all use the discussion board to collectively learn more”) [31]. Nudges have also been used to provide exemplars of the work of high-performing students, such as high-graded assignments from a previous iteration of the course/subject; this, however, can have negative effects on course completion and grades [32]. In addition, nudging strategies have combined goal setting with a commitment device, such as software that reminds students of their previously stated study goal and automatically blocks distracting websites once students exceed a certain limit [33]. Others have used nudging strategies to provide students with personalized information about their abilities or effort level [34], or as social-belonging interventions [35].

While not specifically framed as nudging, some of the CLAD-based interventions that universities are experimenting with draw on nudge theory: they are designed to influence student learning and engagement behaviors and to guide students towards better choices without making the behavior change mandatory. Examples include student-facing dashboards with tools that compare students’ progress against their own goals or against the progress of the rest of the cohort [36]. Such tools enable students to compare their course activity with that of their peers in real time (peer group benchmarking), with the aim of motivating them to increase their engagement and/or performance. While these types of interventions have been found to be generally effective, some researchers suggest that nudges are often more effective in motivating more confident and successful students, while for struggling students the effects are either non-existent or sometimes even negative [25,36].
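Peer group benchmarking of the kind described above usually reduces to placing one student's activity within the distribution of the cohort's activity. The sketch below uses one common percentile-rank definition (the share of cohort values strictly below the student's value) together with a hypothetical message template; the dashboards cited in [36] may compute and word their comparisons differently, and the function names here are illustrative only.

```python
def percentile_rank(value: int, cohort: list[int]) -> float:
    """Percentage of cohort values strictly below `value` (one common definition)."""
    if not cohort:
        raise ValueError("cohort must not be empty")
    below = sum(1 for v in cohort if v < value)
    return 100.0 * below / len(cohort)


def benchmark_message(student_clicks: int, cohort_clicks: list[int]) -> str:
    """Hypothetical peer-comparison wording built from the percentile rank."""
    rank = percentile_rank(student_clicks, cohort_clicks)
    return f"Your course activity so far is higher than {rank:.0f}% of the class."


cohort = [10, 20, 30, 40, 50]  # illustrative click counts, not study data
print(benchmark_message(35, cohort))
# Your course activity so far is higher than 60% of the class.
```

Given the caution above that such comparisons may not help struggling students, a team using this kind of message might reasonably reserve it for some students rather than send it to everyone.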

While there have been efforts to use CLAD to identify low- or non-engaged students and then communicate with them to offer support and motivation, to date there is limited evidence of efforts to combine CLAD with the strategic use of nudges to promote student engagement. This paper reports on research that extends the use of CLAD to proactively address low levels of online engagement by nudging, or encouraging, students to use key course materials and activities.

3. Materials and Methods

This was a mixed methods study [37] that combined qualitative data (student comments in a post-intervention survey) and quantitative data (closed survey responses and CLAD) to explore the impact of nudging on student engagement across two disciplines. The purposeful integration of the two approaches enabled the phenomena to be viewed and interpreted through multiple lenses, giving a more complete picture and understanding [38]. The intervention was carried out in two courses: a second-year course in education and a first-year course in urban and regional planning. In total, 187 students were enrolled in the two courses, 86 in the Education course and 101 in the Planning course. As the authors aimed to assess the impact of the nudge intervention on student engagement with online learning materials, data were collected and analyzed in two ways, namely through CLAD and through a post-intervention survey.

Details on the Nudge Intervention

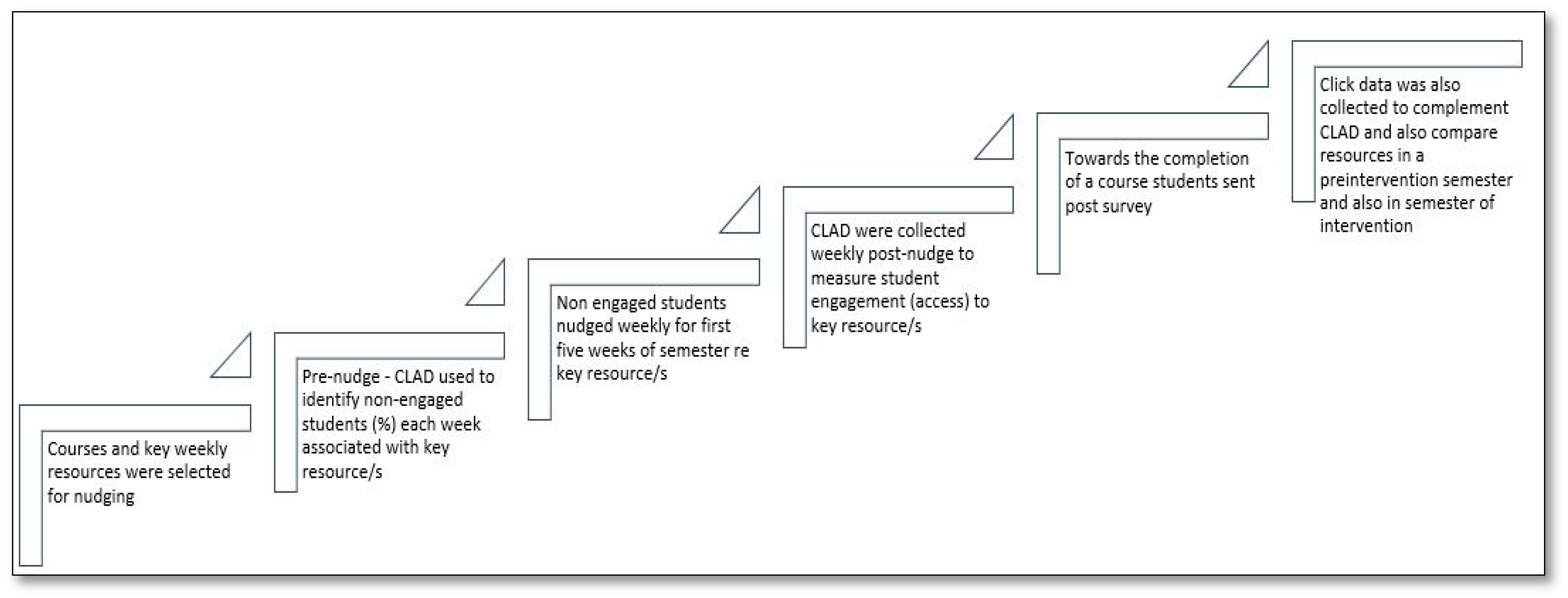

A nudge intervention that used CLAD, combined with a structured communication strategy, was designed to elicit increased online engagement from low- and non-engaged students at a regional university where more than 70% of students study online. Students identified through CLAD as low- or non-engaged were given early encouragement through strategic nudges (focused on the first five weeks of the semester) to engage with key course learning materials (see Figure 1, Process of nudge intervention and mixed method research). The nudges were designed to make course expectations and requirements explicit to students in a just-in-time, just-for-you manner. Their aim was to motivate students to access key online course materials and to help them feel that they were receiving personalized guidance and feedback. Note that access to resources was used in this study as a proxy measure of student engagement. The nudges were also designed to give more structure to the online learning space for students struggling with the transactional distance (Moore, 1993) inherent in online learning environments.

Figure 1.

Process of nudge intervention and mixed method research.

Since research has highlighted the crucial role of early engagement in improving the likelihood of student success and retention [39], the project team identified five key resources that were deemed critical for student success during the first five weeks of semester. Using CLAD, the researchers identified, on a weekly basis, those students who had not accessed one or more of the key resources. These students were then sent a nudge that encouraged their use of the resource and highlighted the key role the resource played in achieving course success. Each key resource was nudged multiple times; over the first five weeks, these nudges changed in tone, became more individually focused, and were communicated in a range of ways (such as personal email, message or phone). Here is an example of a nudge sent to students identified as low- or non-engaged in this intervention:

The [teaching team] team notice that at the end of Week 2 you have as yet not accessed the Assignment guidelines and support materials. Please familiarize yourself with the assessment expectations for this course as soon as possible. There is also now a recorded presentation to complement Assignment 1 that can be found via the Useful Links Tab at the top of the study desk. Know that the team are here to support you and wish you all the best for your learning journey.
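The weekly identification step described above can be sketched as a set difference between the key resources due so far and the resources each student has accessed according to CLAD. In this Python sketch the resource names, the week by which each should have been accessed, and the function `students_to_nudge` are all hypothetical; they are not the study's actual five key resources or its tooling.

```python
# Hypothetical key resources mapped to the week by which each should be accessed.
KEY_RESOURCES = {
    "course_introduction": 1,
    "module_1": 2,
    "assignment_guidelines": 2,
    "module_2": 4,
}


def students_to_nudge(
    enrolled: set[str],
    accessed: dict[str, set[str]],
    key_resources: dict[str, int],
    current_week: int,
) -> dict[str, list[str]]:
    """Map each student to the key resources due by `current_week` that they have not accessed."""
    due = {r for r, week in key_resources.items() if week <= current_week}
    to_nudge = {}
    for student in sorted(enrolled):
        missing = sorted(due - accessed.get(student, set()))
        if missing:  # students who have accessed everything due are not nudged
            to_nudge[student] = missing
    return to_nudge


# Illustrative access records, not data from the study.
accessed = {
    "s1": {"course_introduction", "module_1", "assignment_guidelines"},
    "s2": {"course_introduction"},
}
print(students_to_nudge({"s1", "s2", "s3"}, accessed, KEY_RESOURCES, current_week=2))
# {'s2': ['assignment_guidelines', 'module_1'], 's3': ['assignment_guidelines', 'course_introduction', 'module_1']}
```

Each student/resource pair returned could then be filled into a message template like the example nudge above; keeping a count of how often each pair has already been nudged would support the escalation in tone and channel described in the text.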

In addition to the targeted nudges, broader, contextual nudges were sent regularly in the first five weeks of the semester to all students enrolled in the course via a general announcement email. The aim of these nudges was to reinforce the course expectations and the benefits of online engagement, to highlight the importance of the critical resources and course activities, and to remind students of the value of the resources for real-world application and for successful learning outcomes in assessment. Here is an example of a nudge sent to all students in a course in this intervention:

It is important to allocate time this week to listen to the course introduction and virtual study desk tour (presentation). 70% of students have now completed this task and have confirmed the value of this presentation.
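The percentage quoted in a cohort-wide nudge such as the one above can be computed from the same access data. The sketch below, with hypothetical function names and wording, counts only currently enrolled students (so that withdrawn students who had completed the task do not inflate the figure) and rounds down so the message does not overstate completion.

```python
def completion_share(enrolled: set[str], completed: set[str]) -> int:
    """Whole-number percentage of enrolled students who have completed a task (rounded down)."""
    if not enrolled:
        return 0
    return 100 * len(enrolled & completed) // len(enrolled)


def cohort_nudge(task: str, enrolled: set[str], completed: set[str]) -> str:
    """Hypothetical wording for a cohort-wide announcement nudge."""
    share = completion_share(enrolled, completed)
    return (f"It is important to allocate time this week to {task}. "
            f"{share}% of students have now completed this task.")


# Illustrative data: 10 enrolled students, 7 of whom have completed the task.
enrolled = {f"s{i}" for i in range(10)}
completed = {"s0", "s1", "s2", "s3", "s4", "s5", "s6", "withdrawn_student"}
print(cohort_nudge("listen to the course introduction", enrolled, completed))
# It is important to allocate time this week to listen to the course introduction. 70% of students have now completed this task.
```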

Finally, the nudge intervention was also used to remind students of course drop dates and the ability to opt out if required. It was important that the tone adopted for these types of nudges was of an informal nature, which conveyed to students that they mattered, similar to a communication from a concerned friend.

First, CLAD, collated via students’ use of StudyDesk, the university’s online learning management system, was analyzed to identify engagement patterns and assess the impact of the intervention on student online engagement. This was achieved by analyzing student click counts (the number of times students click on a resource on StudyDesk) to explore the immediate effects on student engagement by observing any increase in click counts following a nudge. Data from the current iteration of the two courses in which the nudge intervention was used were also compared with the previous year’s iterations of the two courses, when the nudge strategy had not been used. Two-sample independent t-tests were used to evaluate whether any observed average differences between the two years were significant.
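One simple way to operationalise "an increase in click counts following a nudge" is to compare each nudged student's clicks in a fixed window after the nudge with the same-length window before it. The window length, the data layout, and the function names below are assumptions for illustration, since the window used in the study is not stated; a before/after difference of this kind also does not control for other influences, such as approaching assessment due dates.

```python
from datetime import datetime, timedelta
from statistics import mean


def click_change(clicks: list[datetime], nudge_at: datetime, window: timedelta) -> int:
    """Clicks in [nudge_at, nudge_at + window) minus clicks in [nudge_at - window, nudge_at)."""
    before = sum(1 for t in clicks if nudge_at - window <= t < nudge_at)
    after = sum(1 for t in clicks if nudge_at <= t < nudge_at + window)
    return after - before


def mean_click_change(
    clicks_by_student: dict[str, list[datetime]], nudge_at: datetime, window: timedelta
) -> float:
    """Average before/after change across all nudged students."""
    return mean(click_change(c, nudge_at, window) for c in clicks_by_student.values())


nudge_at = datetime(2018, 3, 12, 9, 0)  # illustrative nudge time
clicks = {
    "s1": [datetime(2018, 3, 10), datetime(2018, 3, 12, 10), datetime(2018, 3, 13), datetime(2018, 3, 14)],
    "s2": [datetime(2018, 3, 13, 8)],
}
print(mean_click_change(clicks, nudge_at, timedelta(days=3)))  # 1.5
```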

Second, students were invited to participate in a post-intervention online survey to gain insights into how they perceived the intervention, so that future iterations of the intervention could be refined. The survey was emailed to all students at the end of the semester and contained questions on demographics and enrolment details, as well as a series of closed questions related to the nudge intervention and the perceived level and value of support from the teaching staff, with the option for students to add comments elaborating on their responses. Closed questions included: How helpful was the nudge intervention for your learning in this course? How helpful were the early prompts or communication from teaching staff to access key resources? How helpful was the following support in this course: prompts at the beginning of semester; reminders about key weekly tasks and activities? (very helpful; helpful; moderately helpful; slightly helpful; not helpful). Thirty-three responses were received.

4. Results and Discussion

4.1. Impact of the Nudge Intervention

The effectiveness of the nudges was assessed through the increase in clicks following the provision of a nudge. Data from the Planning course showed that after students were sent a nudge encouraging them to watch the orientation recordings, there was a 21% increase in non-engaged students accessing the resource. In the same course, a nudge emailed to 40 students who had not accessed the Module 1 learning resource resulted in a 50% improvement in access the next day. Similar positive results were apparent in the Education course. For example, after the key resource The Winning Formula was nudged, the proportion of students who had accessed it increased from 37% to 74% (an increase of 37 percentage points). Similarly, after students received a nudge encouraging them to engage with the first course module (Module 1), the proportion who had accessed it increased from 45% to 70% (an increase of 25 percentage points).
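Because the Education course figures above are changes in the proportion of students who had accessed a resource, the natural measure is a difference in percentage points; the relative change is a different quantity and can be much larger. With $p$ denoting the proportion of students who had accessed a resource before and after the nudge, the two measures work out as follows:

```latex
\begin{align*}
\Delta_{\text{pp}} &= p_{\text{after}} - p_{\text{before}}, &
\Delta_{\text{rel}} &= \frac{p_{\text{after}} - p_{\text{before}}}{p_{\text{before}}} \\[4pt]
\text{The Winning Formula:}\quad \Delta_{\text{pp}} &= 74\% - 37\% = 37 \text{ points}, &
\Delta_{\text{rel}} &= \frac{37}{37} = 100\% \\[4pt]
\text{Module 1:}\quad \Delta_{\text{pp}} &= 70\% - 45\% = 25 \text{ points}, &
\Delta_{\text{rel}} &= \frac{25}{45} \approx 55.6\%
\end{align*}
```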

As students may have received multiple nudges for each resource with which they had not engaged, it was important to observe how subsequent nudges were received. Click data for the Planning course showed that after the first nudge, activity increased by an average of nine clicks; by the third nudge, however, the increase was only two clicks. Surprisingly, there was a consistent decrease in the number of clicks after the second nudge for all resources and activities in the Planning course. A similar trend was identified in the Education course: when students were first nudged to engage with key course resources, clicks on each resource increased, but subsequent nudges did not lead to a further increase in clicks.
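The diminishing response to repeated nudges can be summarised by grouping per-student click increases by nudge number (first, second, third, and so on) and flagging the first nudge whose average increase is no longer positive, one possible signal that further nudges are becoming nags rather than prompts. The record format, the zero threshold, the function names, and the toy numbers below are illustrative assumptions, not the study's data or analysis method.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional


def mean_increase_by_ordinal(records: list[tuple[int, float]]) -> dict[int, float]:
    """Average click increase for each nudge number, given (nudge_number, increase) pairs."""
    groups: dict[int, list[float]] = defaultdict(list)
    for ordinal, increase in records:
        groups[ordinal].append(increase)
    return {ordinal: mean(values) for ordinal, values in sorted(groups.items())}


def first_non_positive(means: dict[int, float], threshold: float = 0.0) -> Optional[int]:
    """First nudge number whose average increase is at or below `threshold`, if any."""
    for ordinal in sorted(means):
        if means[ordinal] <= threshold:
            return ordinal
    return None


# Toy records: (nudge number, clicks after minus clicks before) for individual students.
records = [(1, 6), (1, 4), (2, 1), (2, -3), (3, 0), (3, 1)]
means = mean_increase_by_ordinal(records)
print(first_non_positive(means))  # 2
```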

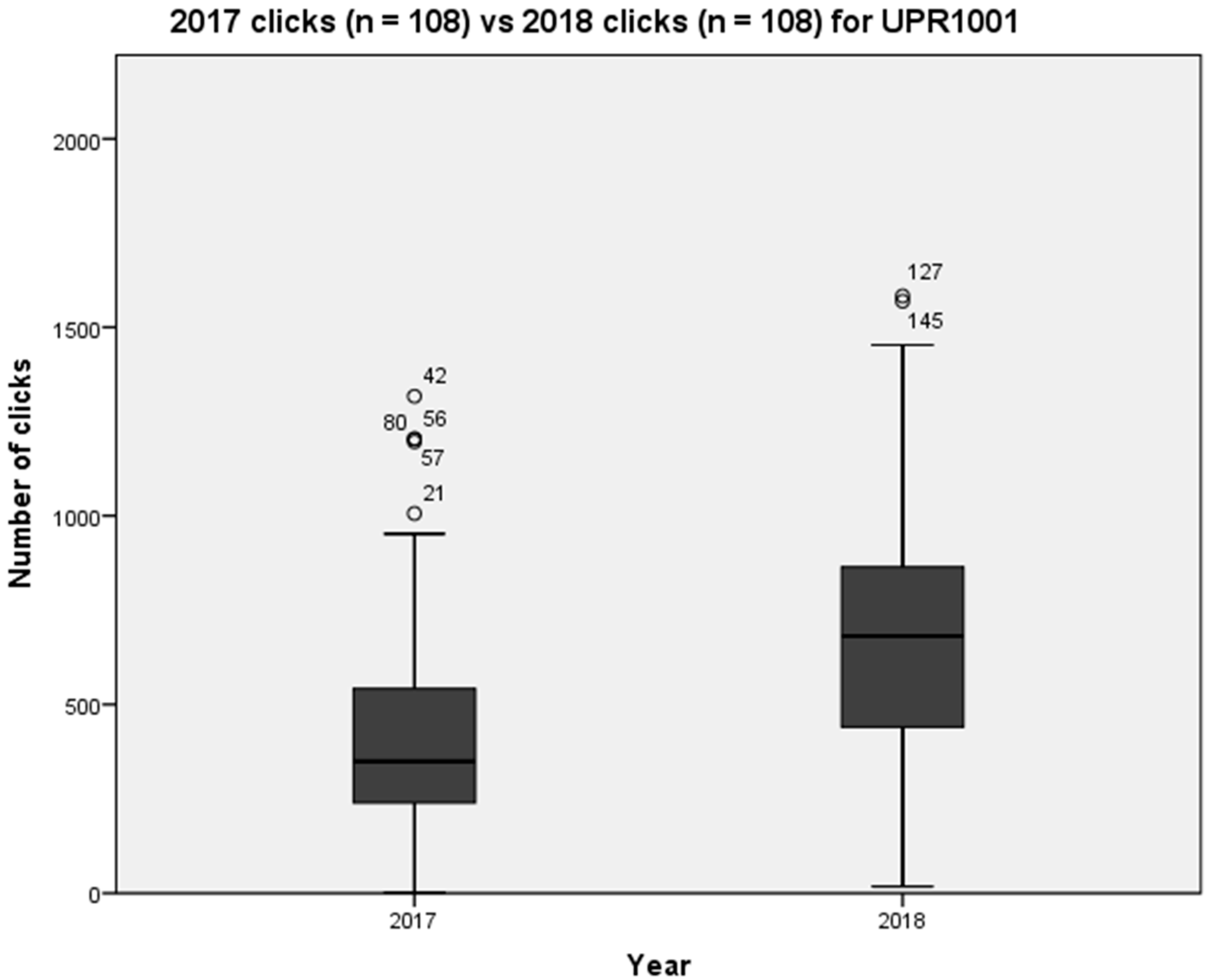

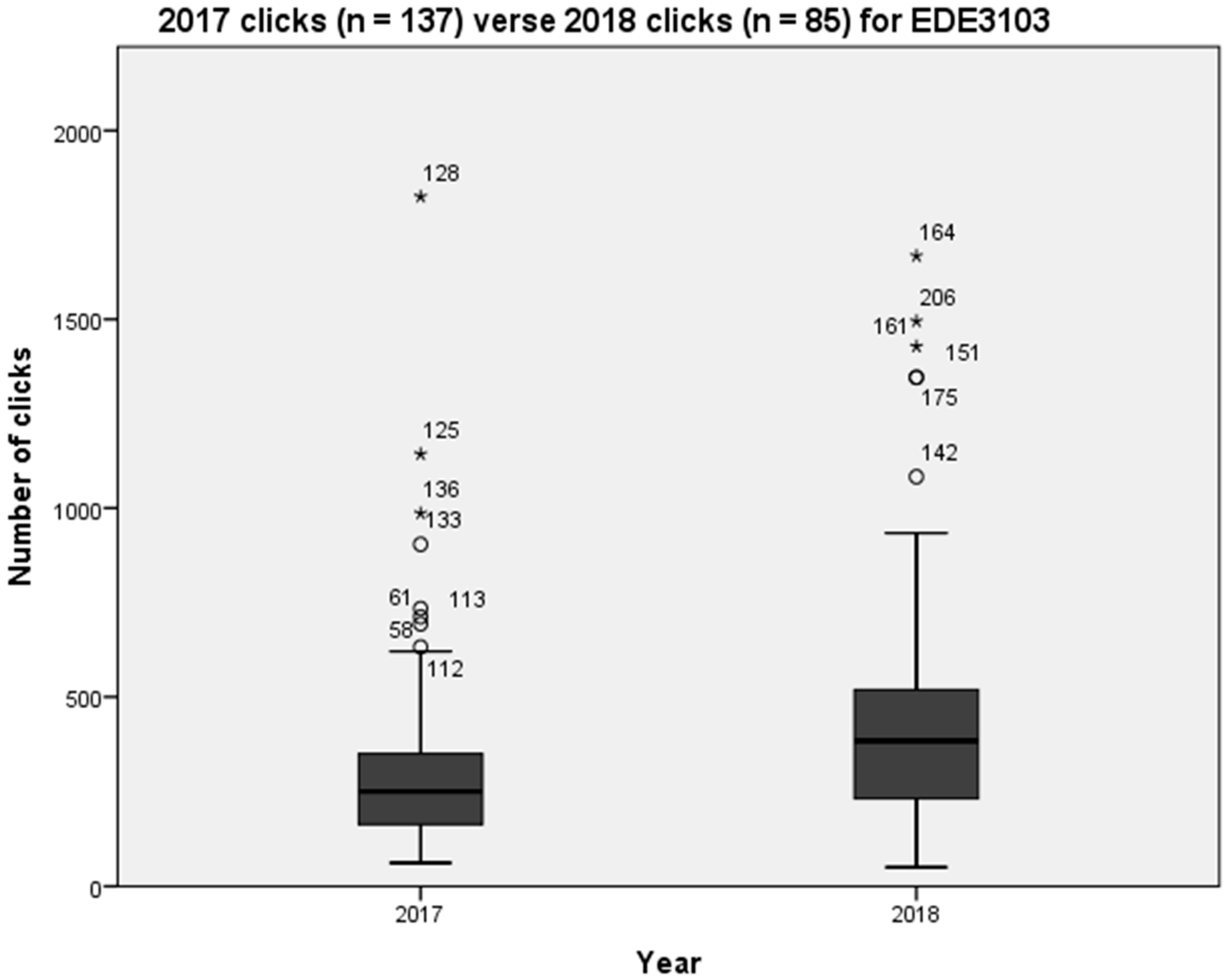

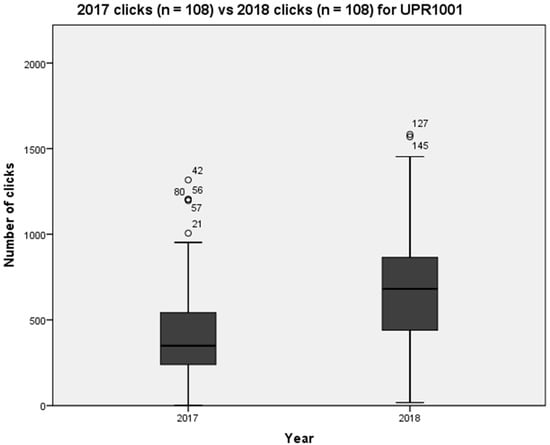

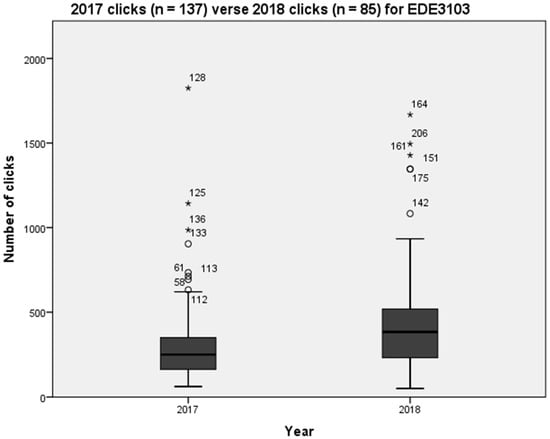

Analyzing the increase in the number of clicks following nudges also enabled the identification of the saturation point, as well as the point at which nudges may have become nags. In this paper, a nag is understood to occur when a piece of communication or a communication strategy is overused to the point of annoying students and/or having the opposite of the desired effect. For example, in the Planning course, some students received five nudges to engage with the Module 1 audiobook. While the decrease in clicks after the second nudge was surprising, the decrease in clicks after the fourth and fifth nudges potentially indicates a saturation point, or a point at which a nudge was perceived as a nag. The ability of the nudge intervention to increase student engagement with online course resources becomes further apparent when comparing the 2017 and 2018 iterations of each course. The nudge intervention was used in 2018 but not in 2017. To illustrate its impact, student click counts on StudyDesk are compared for each of the two courses.

Figure 2 and Figure 3 compare the 2017 and 2018 student click counts for the Planning course and the Education course, respectively. Each diagram shows the top 25% of students by engagement, the least-engaged students (the bottom 25%), and the interquartile range, that is, the middle 50% of students. Visually, the diagrams highlight the increases in student engagement, as measured by click counts, in both courses. For the Planning course, the average number of student clicks increased from 415 (sd = 257.79) in 2017 to 778 (sd = 457.05) in 2018, with the median also increasing from 349 to 697. For the Education course, the average number of student clicks increased from 304 (sd = 230.35) to 446 (sd = 328.68), with the median also increasing from 250 to 384. Two-sample independent t-tests, excluding outliers, were performed to test the hypothesis that mean click counts increased from 2017 to 2018 (Planning: t = −6.99, df = 155.49, p < 0.001, n = 108 for 2017 and n = 95 for 2018; Education: t = −3.23, df = 148.03, p = 0.001, n = 135 for 2017 and n = 79 for 2018).

Figure 2.

Planning course student click counts.

Figure 3.

Education course student click counts.
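The non-integer degrees of freedom reported for the year-on-year comparison (e.g., df = 155.49) are characteristic of Welch's unequal-variance form of the two-sample t-test, although the paper does not name the variant used. The sketch below computes Welch's t statistic and Welch-Satterthwaite degrees of freedom from two samples of per-student click counts using only the Python standard library; the function name is illustrative. It will not reproduce the reported values from the summary statistics, because those tests excluded outliers by a rule not described here. For p-values, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test, and its `alternative="less"` option (SciPy 1.6 or later) gives a one-sided test of an increase when `a` holds the earlier year's counts.

```python
from math import sqrt
from statistics import mean, variance


def welch_t_test(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic for mean(a) - mean(b) and its Welch-Satterthwaite df."""
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each sample needs at least two observations")
    se2_a = variance(a) / len(a)  # squared standard error of mean(a), sample variance (n - 1)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Toy click counts (not study data): a negative t means the second sample has the higher mean.
clicks_2017 = [1, 2, 3, 4, 5]
clicks_2018 = [2, 4, 6, 8, 10]
t, df = welch_t_test(clicks_2017, clicks_2018)
print(round(t, 3), round(df, 3))  # -1.897 5.882
```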

CLAD indicated that the intervention was effective in increasing student access to resources, specifically to nudged resources. This reinforces the general proposition that interventions such as the nudge behavioral intervention can help increase students’ access to key resources. This finding supports Blumenstein et al.’s [40] suggestion that learning analytics tools can be useful in capturing meaningful data about students’ engagement.

However, some caution is warranted about what increased access, as measured by clicks, actually indicates. Clicks say little about their own quality: students may simply have clicked on and skimmed course materials, rather than engaging in what could be understood as quality engagement, such as taking time to carefully review resources. As stated earlier in this paper, CLAD in this study reflected a broad understanding of engagement, rather than identifying the specific type of engagement students had with a particular resource [41]. A change in behavior (an increased click rate) indicated increased access to a resource; however, this change in behavior still has the potential to afford deeper engagement.

The nudge intervention was also useful for encouraging students who had not engaged in a course to drop it if they did not think they could commit to their study throughout the semester. The monitoring of engagement in this project confirmed that this was a sound approach for identifying students in need of additional support. For example, in the Planning course, 14 students regularly required prompting. Nine of them chose to drop the course early, which meant that they avoided the associated academic and financial penalties. This may also have allowed these students to commit more time to their remaining courses, increasing their potential for success.

Communicating with the students via nudges increased student engagement, as demonstrated by the average click counts per student and by viewing the ‘access to resources’ that were nudged. These results align with the findings of Thaler and Sunstein [26], who revealed that, within a health study, decision making by individuals can be improved by providing information through nudging.

4.2. Student Perceptions of the Nudge Intervention

To gauge students’ perceptions of the nudge intervention, students were asked to participate in a post-semester survey that explored their views on the usefulness of the nudges in relation to their online engagement. The first survey question asked students to rate, on a five-point Likert scale, how helpful the nudge intervention was for their learning in the course in which they were enrolled (where 5 was very helpful and 1 was not helpful at all). They were also given the opportunity to expand on this rating with written comments. Overall, students found the nudge intervention helpful, with an average rating of 3.79. Students confirmed that they do not always act in ways that serve their own best interests; the behaviors they reported included being slack, being unfocused, and not managing time and other commitments effectively.
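The average rating reported above is the mean of the coded responses. The sketch below codes the survey's five response labels from 5 (very helpful) to 1 (not helpful) and averages them from response counts; the counts in the example are invented for illustration, since the distribution behind the reported 3.79 is not given, and the names are hypothetical. Treating ordinal Likert codes as numbers in this way is a common simplification, so reporting the distribution or median alongside the mean can also be informative.

```python
# Coding follows the scale described in the text: 5 = very helpful ... 1 = not helpful.
LIKERT_CODES = {
    "very helpful": 5,
    "helpful": 4,
    "moderately helpful": 3,
    "slightly helpful": 2,
    "not helpful": 1,
}


def likert_mean(counts: dict[str, int]) -> float:
    """Mean coded rating from a mapping of response label -> number of respondents."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    return sum(LIKERT_CODES[label] * n for label, n in counts.items()) / total


# Invented counts for illustration only.
print(likert_mean({"very helpful": 2, "helpful": 1, "not helpful": 1}))  # 3.75
```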

In addition, the written comments indicated that students had a range of opinions about the nudges, both positive and negative. The more positive comments showed that students felt the nudges prompted them to keep up to date, helped them manage expectations and engendered a feeling of being supported:

Made you feel like the support was there for online students. [Education course]

It did prompt me when I knew I had been a little slack as well as shows that someone does care and just not a number. [Education course]

[U]seful for reminding students who are busy on what they need to catch up on. [Planning course]

Such comments show that the nudge intervention successfully addressed some of the challenges associated with the online learning environment. Limited face-to-face contact can create a sense of isolation or disconnectedness, and students may feel overwhelmed by the online environment. Student feedback on the nudge intervention, however, indicated that students felt supported, that the communications conveyed care and concern for them, and that the nudges gave them direction, helping them know where to start with their studies and thereby lessening any feelings of being overwhelmed.

The qualitative data collected via a student survey were important for examining student perceptions about the nudge intervention. Such qualitative feedback is also valuable when designing nudges that consider what students find important as well as what they find intrusive/invasive. For example, a small number of students reported an increase in anxiety when nudges were perceived as non-encouraging, were overly persuasive, were sent too frequently or when there were too many different nudges being sent across a range of platforms at the same time. Students reported that this increased their anxiety about attaining success in the course, rather than decreasing their fear of failure. Indeed, results from interventions reported on in the literature have also found that multiple nudge communications across a range of media demotivate or confuse some students [36,42].

As well as timing and frequency, the qualitative comments emphasized the importance of the tone of the nudge. When nudges were interpreted as being supportive and caring, students reported feeling more supported as an online student and motivated by the feeling that they were not just another number. The negative comments that emerged from the post-survey related to the tone, length, or frequency of some of the nudges. For example:

I found there was too much information and a lot of emails, I didn’t need all the information. [Education course]

To be honest, some of the tone was very negative and quite big brotherish. Other lecturers would at least start with a positive message. I would read the message and get stressed out. [Education course]

Students were also asked to comment on the use of nudges that shared the progress of other students, for example: “It’s great to see that quite a number of you, around 60% of students, have already had a chance to access the introductory presentation for this course”. Student responses ranged from welcoming feedback on the progress of other students, through indifference, to negative feelings about being given this information. Examples of student responses to this question included:

Studying online via distance education can sometimes make it difficult to stay up to date, the sharing of % of students’ engagement was a great motivation to catch up to where I needed to be. [Education course]

Can help to know if you are or aren’t the only one struggling with workload. [Planning course]

I understand what is required of me to successfully complete a subject. What others have or haven’t done has no bearing on my study regime. [Planning course]

I became quite stressed after this communication. I felt I did not have enough time to engage in everything offered in this course. [Education course]

5. Limitations, Implications, and Future Research

CLAD has the potential to be harnessed in multiple forms, not only to inform teaching and learning in higher education, but specifically to explore and respond to student engagement, particularly low or non-engagement, within the learning environment. There are further opportunities both to refine and to harness the combination of learning analytics and nudging as a way of motivating or changing student online engagement behaviour. These include investing in research on what defines or determines ‘engagement’ in LAD beyond clicks that simply reflect ‘access’ to nudged or key resources, so that this type of data becomes more meaningful, as well as readily accessible to teaching and research teams.

While the pedagogical use of nudging is still in its infancy, there is great potential for its application and refinement. One important consideration is for teaching teams to adopt a strategic approach to nudging, or a nudge protocol [4], that considers who to nudge, when to nudge (the frequency and timing of nudges), the language of a nudge, what to nudge, and the implications of over-nudging (a nag). Teaching teams may also consider a more coordinated approach that targets a specific course to nudge in each semester or year level. This would ensure that multiple courses are not nudging key resources at the same time, which has the potential to increase the risk not only of nagging but also of other negative implications for students’ learning and wellbeing.
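A nudge protocol of the kind suggested above could be written down as explicit, checkable rules. The Python sketch below is hypothetical throughout: the class, its field names, and its default limits are illustrative choices rather than values from the study, although the one-week limit echoes this paper's conclusion that nudges should occur no later than one week after items were supposed to have been viewed, and the per-resource cap reflects the fading response to repeated nudges reported in the results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NudgeProtocol:
    """Hypothetical protocol limits; the defaults are illustrative, not findings."""
    max_nudges_per_resource: int = 3       # cap on repeat nudges for one resource
    max_days_after_due: int = 7            # stop nudging a resource a week after it was due
    max_nudges_per_student_week: int = 2   # counted across all coordinated courses


def may_nudge(
    protocol: NudgeProtocol,
    prior_nudges_for_resource: int,
    days_since_due: int,
    nudges_this_week_all_courses: int,
) -> bool:
    """Return True if one more nudge for this student and resource stays within the protocol."""
    return (
        prior_nudges_for_resource < protocol.max_nudges_per_resource
        # negative values of days_since_due mean the item is not yet due
        and days_since_due <= protocol.max_days_after_due
        and nudges_this_week_all_courses < protocol.max_nudges_per_student_week
    )


protocol = NudgeProtocol()
print(may_nudge(protocol, prior_nudges_for_resource=1, days_since_due=3, nudges_this_week_all_courses=0))  # True
print(may_nudge(protocol, prior_nudges_for_resource=3, days_since_due=3, nudges_this_week_all_courses=0))  # False
```

Keeping the limits in one frozen object makes it easy for coordinating teaching teams to agree on, and share, a single set of values.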

A limitation of this work is that the data were collected from a single regional university. While the thoughts and experiences of the participants are not representative of all student populations, the authors have described the research environment and methods. The data were collected from two different disciplines, which may enable universities in a similar context to adopt the recommendations.

Future research could consider including more courses from other disciplines in the same university and comparing data from other universities where nudging occurs. Additionally, future research could include in-depth interviews with students and teaching staff to explore their perceptions and gain a deeper understanding of both the positive and negative impacts of nudging.

It would also be useful in future research to follow up on at-risk students, as identified by the university system, and to track their success after nudges. In 2018, there were two at-risk students in the Planning course; one passed with a B and the other with an A. It may be that at-risk students receive adequate support through other channels and that moderately at-risk students are actually at greater risk.

6. Conclusions

In assessing the impact of the nudging intervention, this paper shows an increase in engagement between 2017, when no nudges were used in the two courses included in this study, and 2018, when nudges were used. Evidence of this increase was drawn from CLAD. In 2018, when students received the nudge intervention, the proportion of students accessing resources was higher than in 2017. In addition, the timing of access shifted from being mostly focused on assignment completion in 2017 to being more responsive to the nudges in 2018. Researcher tracking data revealed that some nudges were sent too late, as the spike in student activity had already occurred before them. This led to the conclusion that nudges should be sent no later than one week after items were supposed to have been viewed.
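The one-week timing rule can be expressed as a simple date check. The sketch below assumes that each key resource has a date by which it was supposed to have been viewed and that CLAD can supply each student’s first access date; the function names and the dictionary-based access log are hypothetical and are not part of the study’s tooling.

```python
from datetime import date, timedelta
from typing import Optional

# Rule from the conclusions: nudge no later than one week after the date
# an item was supposed to have been viewed.
MAX_NUDGE_LAG = timedelta(weeks=1)


def latest_nudge_date(expected_view: date) -> date:
    """Last day on which a nudge for this item still counts as timely."""
    return expected_view + MAX_NUDGE_LAG


def nudge_is_timely(expected_view: date, nudge_date: date) -> bool:
    """True if the planned nudge falls within the one-week window."""
    return nudge_date <= latest_nudge_date(expected_view)


def students_to_nudge(access_log: dict[str, Optional[date]], nudge_date: date) -> list[str]:
    """Students with no recorded access on or before the nudge date.

    access_log (hypothetical) maps a student ID to that student's first
    access date for the resource, or None if they never accessed it.
    """
    return sorted(
        student
        for student, first_access in access_log.items()
        if first_access is None or first_access > nudge_date
    )
```

For example, with an illustrative expected viewing date of 5 March 2018, `latest_nudge_date` returns 12 March 2018; a student with no recorded access, or whose first access falls after the nudge date, would be included in that nudge.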

Although universities are providing ever more options for studying online, many courses fail to engage students with learning objects and activities, with the result that students partially or completely fail to attain the desired learning outcomes. Student retention in online courses also remains lower than in face-to-face courses. This study found that a communicative nudging intervention aimed at the first five weeks of the semester, a period that is crucial to success, resulted in a greater number of students engaging with the required learning objects and activities.

Author Contributions

Conceptualization, A.B., M.B., P.R. and J.L.; methodology, A.B., M.B., P.R., J.L. and M.A.; software, M.A.; formal analysis, A.B., M.B., P.R., J.L. and M.A.; investigation, A.B., M.B., P.R. and J.L.; data curation, M.A. and M.B.; writing—original draft preparation, A.B., M.B., P.R., J.L. and M.A.; writing—review and editing, A.B., M.B., P.R., J.L. and M.A.; visualization, A.B., M.B., P.R. and J.L.; project administration, A.B., and J.L.; A.B., M.B., P.R. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Ethics Committee of USQ Human Research (protocol code H18REA019 and date of approval).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

This project was funded by the University of Southern Queensland through the Office of the Advancement of Learning and Teaching.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kahu, E.R.; Picton, C.; Nelson, K. Pathways to engagement: A longitudinal study of the first-year student experience in the educational interface. High. Educ. 2020, 79, 657–673.

2. Tight, M. Student retention and engagement in higher education. J. Furth. High. Educ. 2020, 44, 689–704.

3. Farrell, O.; Brunton, J. A balancing act: A window into online student engagement experiences. Int. J. Educ. Technol. High. Educ. 2020, 17, 1–19.

4. Brown, A.; Lawrence, J.; Basson, M.; Axelsen, M.; Redmond, P.; Turner, J.; Maloney, S.; Galligan, L. The creation of a nudging protocol to support online student engagement in higher education. Active Learn. High. Educ. 2022, 14697874211039077.

5. Bergdahl, N. Engagement and disengagement in online learning. Comput. Educ. 2022, 188, 104561.

6. Brown, M.; Hughes, H.; Keppell, M.; Hard, N.; Smith, L. Stories from students in their first semester of distance learning. Int. Rev. Res. Open Distance Learn. 2015, 16, 1–17.

7. O’Shea, S.; Stone, C.; Delahunty, J. “I ‘feel’ like I am at university even though I am online.” Exploring how students narrate their engagement with higher education institutions in an online learning environment. Distance Educ. 2015, 36, 41–58.

8. Herrington, J.; Reeves, T.C.; Oliver, R. Authentic learning environments. In Handbook of Research on Educational Communications and Technology; Spector, J., Merrill, M., Elen, J., Bishop, M., Eds.; Springer: New York, NY, USA, 2014; pp. 401–412.

9. Stone, C.; Springer, M. Interactivity, connectedness and ‘teacher-presence’: Engaging and retaining students online. Aust. J. Adult Learn. 2019, 59, 146–169.

10. Burton, L.J.; Summers, J.; Lawrence, J.; Noble, K.; Gibbings, P. Digital literacy in higher education: The rhetoric and the reality. In Myths in Education, Learning and Teaching: Policies, Practices and Principles; Harmes, M.K., Huijser, H., Danaher, P.A., Eds.; Palgrave Macmillan: Hampshire, UK; New York, NY, USA, 2015; pp. 151–172.

11. Moore, K.; Bartkovich, J.; Fetzner, M.; Ison, S. Success in cyberspace: Student retention in online courses. J. Appl. Res. Community Coll. 2003, 10, 107–118.

12. Wanner, T. Parallel universes: Student and teacher expectations and interactions in online vs face-to-face teaching and learning environments. Ergo 2014, 3, 37–45.

13. Willging, P.A.; Johnson, S.D. Factors that influence students’ decision to dropout of online courses. J. Asynchronous Learn. Netw. 2009, 13, 115–127.

14. Tinto, V. Dropout from higher education: A theoretical synthesis of recent research. Rev. Educ. Res. 1975, 45, 89–125.

15. Kuh, G.D. What we’re learning about student engagement from NSSE: Benchmarks for effective educational practices. Change Mag. High. Educ. 2003, 35, 24–32.

16. Brown, A.; Redmond, P.; Basson, M.; Lawrence, J. To ‘nudge’ or to ‘nag’: A communication approach for ‘nudging’ online engagement in higher education. In Proceedings of the 8th Annual International Conference on Education and e-Learning, Singapore, 24–25 September 2018.

17. Guo, P.J.; Kim, J.; Rubin, R. How video production affects student engagement: An empirical study of MOOC videos. In Proceedings of the 1st ACM Learning@Scale Conference, Atlanta, GA, USA, 4–5 March 2014; ACM: Atlanta, GA, USA, 2014; pp. 41–50.

18. Higher Education Standards Panel. Improving Retention, Completion and Success in Higher Education: Higher Education Standards Panel Discussion Paper, June 2017; Australian Government, Department of Education and Training: Canberra, Australia, 2017. Available online: https://docs.education.gov.au/system/files/doc/other/final_discussion_paper.pdf (accessed on 20 January 2023).

19. Henrie, C.R.; Halverson, L.R.; Graham, C.R. Measuring student engagement in technology-mediated learning: A review. Comput. Educ. 2015, 90, 36–53.

20. Hooda, M.; Rana, C. Learning analytics lens: Improving quality of higher education. Int. J. Emerg. Trends Eng. Res. 2020, 8, 1626–1646.

21. Colvin, C.; Rodgers, T.; Wade, A.; Dawson, S.; Gasevic, D.; Buckingham Shum, S.; Nelson, K.J.; Alexander, S.; Lockyer, L.; Kennedy, G.; et al. Student Retention and Learning Analytics: A Snapshot of Australian Practices and a Framework for Advancement; Australian Government Office for Learning and Teaching: Canberra, Australia, 2015.

22. Newlands, D.A.; Coldwell, J.M. Managing student expectations online. In Advances in Web-Based Learning – ICWL 2005, 4th International Conference, Hong Kong, August 2005; Lau, R., Li, Q., Cheung, R., Liu, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 355–363.

23. Fritz, J.; Whitmer, J. Moving the heart and head: Implications for learning analytics research. EDUCAUSE Rev. [Online]. 2017. Available online: https://er.educause.edu/articles/2017/7/moving-the-heart-and-head-implications-for-learning-analytics-research (accessed on 20 January 2023).

24. Wong, B.T.M. Learning analytics in higher education: An analysis of case studies. Asian Assoc. Open Univ. J. 2017, 12, 21–40.

25. Pardo, A.; Jovanovic, J.; Dawson, S.; Gasevic, D.; Mirriahi, N. Using learning analytics to scale the provision of personalised feedback. Br. J. Educ. Technol. 2017, 50, 128–138.

26. Thaler, R.H.; Sunstein, C.R. Nudge: Improving Decisions about Health, Wealth, and Happiness; Penguin: New York, NY, USA, 2008.

27. Selinger, E.; Whyte, K. Is there a right way to nudge? The practice and ethics of choice architecture. Sociol. Compass 2011, 5, 923–935.

28. Damgaard, M.T.; Nielsen, H.S. Nudging in Education (IZA Discussion Paper No. 11454); IZA Institute of Labor Economics: Bonn, Germany, 2018.

29. Azmat, G.; Bagues, M.; Cabrales, A.; Iriberri, N. What you don’t know... Can’t hurt you? A field experiment on relative performance feedback in higher education. In IZA Discussion Paper Series; IZA: Bonn, Germany, 2016.

30. Davis, D.; Chen, G.; Hauff, C.; Houben, G.; Jivert, I.; Kizilcec, R. Follow the successful crowd: Raising MOOC completion rates through social comparison at scale. In Proceedings of the Seventh International Learning Analytics & Knowledge Conference, Vancouver, BC, Canada, 13–17 March 2017; pp. 454–463.

31. Kizilcec, R.F.; Schneider, E.; Cohen, G.L.; McFarland, D.A. Encouraging forum participation in online courses with collectivist, individualist and neutral motivational framings. eLearning Pap. 2014, 37, 12–22.

32. Rogers, T.; Feller, A. Discouraged by peer excellence: Exposure to exemplary peer performance causes quitting. Psychol. Sci. 2016, 27, 365–374.

33. Patterson, R.W. Can behavioral tools improve online student outcomes? Experimental evidence from a massive open online course. J. Econ. Behav. Organ. 2018, 153, 293–321.

34. Pistolesi, N. Advising students on their field of study: Evidence from a French University reform. Labour Econ. 2017, 44, 106–121.

35. Broda, M.; Yun, J.; Schneider, B.; Yeager, D.S.; Walton, G.M.; Diemer, M. Reducing Inequality in academic success for incoming college students: A randomized trial of growth mindset and belonging interventions. J. Res. Educ. Eff. 2018, 11, 317–338.

36. Sclater, N.; Peasgood, A.; Mullan, J. Learning Analytics in Higher Education: A Review of UK and International Practice; Jisc: Bristol, UK, 2016.

37. Tashakkori, A.; Teddlie, C. Quality of inferences in mixed methods research: Calling for an integrative framework. In Advances in Mixed Methods Research; Bergman, M., Ed.; SAGE: Thousand Oaks, CA, USA, 2008; Volume 53, pp. 101–119.

38. Bullock, S.; Christou, T. Exploring the radical middle between theory and practice: A collaborative self-study of beginning teacher educators. J. Teach. Educ. 2009, 5, 75–88.

39. Van der Meer, J.; Scott, S.; Pratt, K. First semester academic performance: The importance of early indicators of non-engagement. Stud. Success 2018, 9, 1–13.

40. Blumenstein, M.; Liu, D.Y.T.; Richards, D.; Leichtweis, S.; Stephens, J.M. Data-informed nudges for student engagement and success. In Learning Analytics in the Classroom: Translating Learning Analytics Research for Teachers; Lodge, J.M., Horvath, J.C., Corrin, L., Eds.; Routledge: Oxon, UK; New York, NY, USA, 2019; pp. 185–207.

41. Redmond, P.; Heffernan, A.; Abawi, L.; Brown, A.; Henderson, R. An online engagement framework for higher education. Online Learn. 2018, 22, 183–204.

42. Pistilli, M.D.; Arnold, K.E. In practice: Purdue signals: Mining real-time academic data to enhance student success. About Campus 2010, 15, 1–32.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).