Predicting At-Risk Students in an Online Flipped Anatomy Course Using Learning Analytics

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Flipped Anatomy Course

2.3. Variables

2.4. Data Analysis

2.4.1. Data Selection

2.4.2. Data Preprocessing

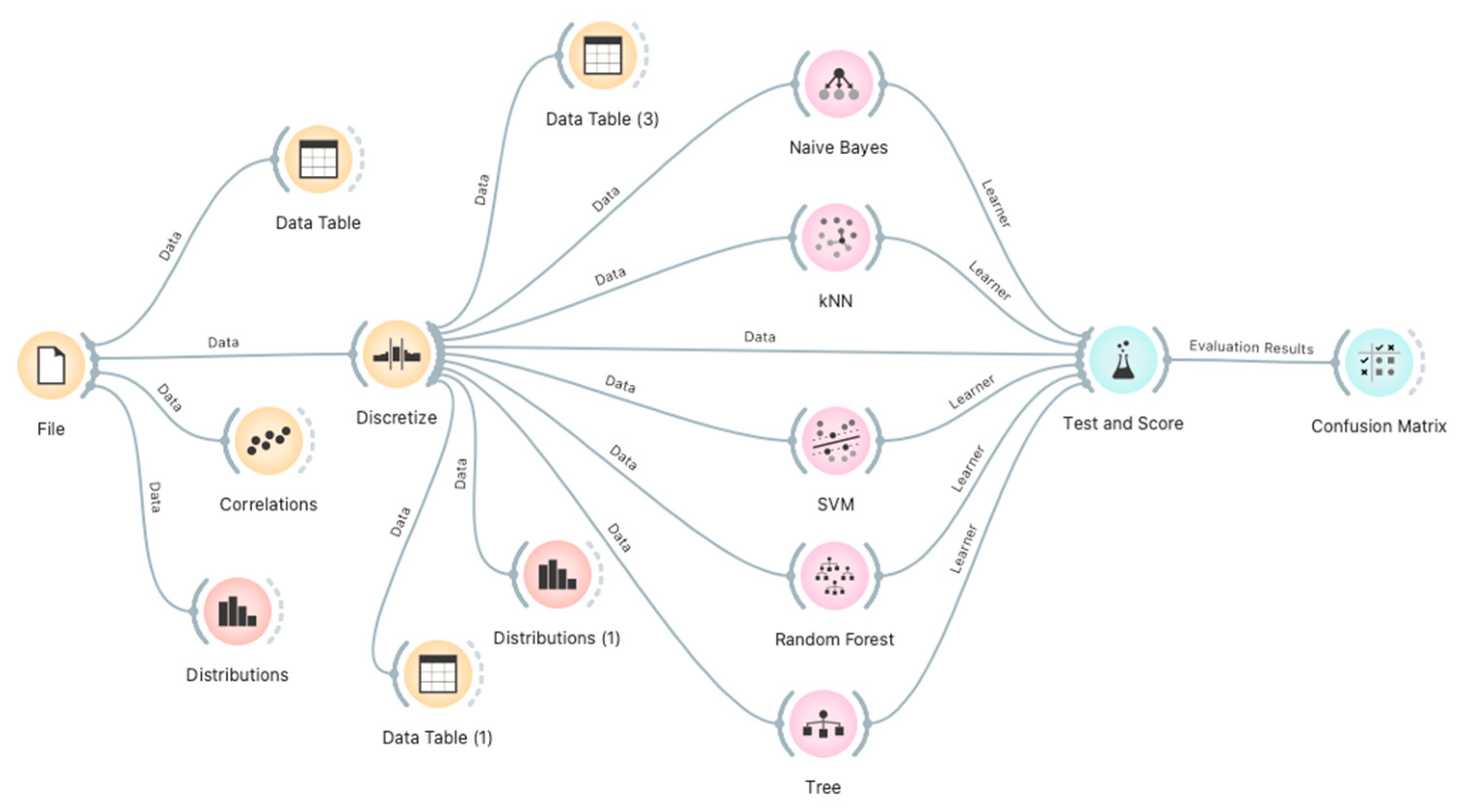

2.4.3. Data Mining

2.4.4. Performance Evaluation

3. Results

3.1. Research Question 1: Which Supervised Learning Technique Can Predict At-Risk Students in an Online Flipped Anatomy Course?

3.2. Research Question 2: What Is the Classification Accuracy of the Best Algorithm for Predicting At-Risk Students in an Online Flipped Anatomy Course?

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Weeks | Course Topics | Student Preparation | In-Class Exam | In-Class Activities | Variable Names |
|---|---|---|---|---|---|
| - | Prior Knowledge Exam: 28 November 2020 | | | | Prior |
| 1 | Urinary System (US) | December 1–7 | Quiz US | Case Studies and Discussions | w1_eng & exam1 |
| 2 | Reproductive System (RS) | December 8–14 | Quiz RS | Case Studies and Discussions | w2_eng & exam2 |
| 3 | Nervous System (NS) | December 15–22 | Quiz NS | Case Studies and Discussions | w3_eng & exam3 |
| 4 | Spinal Cord and Spinal Plexuses (SCSP) | December 23–29 | Quiz SCSP | Case Studies and Discussions | w4_eng & exam4 |
| 5 | Cranial Nerves and Autonomic Nervous System (CNAN) | December 30–January 9 | Quiz CNAN | Case Studies and Discussions | w5_eng & exam5 |
| 6 | Final Exam: 10 January 2021 | | | | final (target variable) |

| No | Interaction Variable | Description |
|---|---|---|
| 1 | n_session | The number of sessions by the student |
| 2 | n_ShortSession | The number of short sessions by the student |
| 3 | d_Time | The total time the student has spent on the Moodle LMS |
| 4 | n_UniqueDay | The number of unique days logged in by the student |
| 5 | n_TotalAction | The number of total activities |
| 6 | n_CourseView | The number of course (Anatomy) views |
| 7 | n_ResourceView | The number of course resource views |

| | Predicted: At-Risk | Predicted: Safe |
|---|---|---|
| Actual: At-Risk | TP | FN |
| Actual: Safe | FP | TN |
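For reference, the scores reported for each model can be written in terms of the four cells of such a confusion matrix. The definitions below are the standard ones, with At-Risk taken as the positive class; treating them as the formulas behind the reported scores is an assumption, since this section does not spell them out:

$$
\begin{aligned}
\mathrm{CA} &= \frac{TP + TN}{TP + FP + FN + TN}, \\
\mathrm{Precision} &= \frac{TP}{TP + FP}, \\
\mathrm{Recall} &= \frac{TP}{TP + FN}, \\
F &= \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.
\end{aligned}
$$

Here FN counts at-risk students predicted as safe, and FP counts safe students predicted as at-risk.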

| Model | AUC | CA | F | Precision | Recall |
|---|---|---|---|---|---|
| RF | 0.795 | 0.696 | 0.533 | 0.571 | 0.500 |
| DT | 0.794 | 0.696 | 0.588 | 0.556 | 0.625 |
| NB | 0.703 | 0.681 | 0.607 | 0.531 | 0.708 |
| SVM | 0.690 | 0.681 | 0.476 | 0.556 | 0.417 |
| kNN | 0.689 | 0.667 | 0.489 | 0.524 | 0.458 |

| | Predicted: At-Risk | Predicted: Safe | Total |
|---|---|---|---|
| Actual: At-Risk | 17 | 7 | 24 |
| Actual: Safe | 15 | 30 | 45 |
| Total | 32 | 37 | 69 |
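As a quick consistency check, the counts in this confusion matrix can be turned back into scores. The sketch below is illustrative only: the `ConfusionMatrix` class and its method names are hypothetical helpers, not part of the authors' tooling, and At-Risk is assumed to be the positive class.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Hypothetical helper; At-Risk is assumed to be the positive class."""
    tp: int  # actual at-risk, predicted at-risk
    fn: int  # actual at-risk, predicted safe
    fp: int  # actual safe, predicted at-risk
    tn: int  # actual safe, predicted safe

    def accuracy(self) -> float:
        # Share of all students whose class was predicted correctly.
        return (self.tp + self.tn) / (self.tp + self.fn + self.fp + self.tn)

    def precision(self) -> float:
        # Share of predicted at-risk students who were actually at risk.
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        # Share of actual at-risk students who were flagged.
        return self.tp / (self.tp + self.fn)

    def f_score(self) -> float:
        # Harmonic mean of precision and recall.
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r)


# Counts copied from the confusion matrix above.
cm = ConfusionMatrix(tp=17, fn=7, fp=15, tn=30)

for name, value in [
    ("CA", cm.accuracy()),
    ("Precision", cm.precision()),
    ("Recall", cm.recall()),
    ("F", cm.f_score()),
]:
    print(f"{name}: {value:.3f}")
```

Rounded to three decimals, these values (CA 0.681, precision 0.531, recall 0.708, F 0.607) match the NB row of the model comparison table, which suggests the matrix belongs to the Naïve Bayes model.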

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bayazit, A.; Apaydin, N.; Gonullu, I. Predicting At-Risk Students in an Online Flipped Anatomy Course Using Learning Analytics. Educ. Sci. 2022, 12, 581. https://doi.org/10.3390/educsci12090581