Artificial Intelligence and Learning Analytics in Teacher Education: A Systematic Review

Abstract

1. Introduction

1.1. Teacher Education

1.2. Artificial Intelligence in Education

1.3. Learning Analytics

- RQ1: What are the main goals and objectives of the reviewed studies regarding the use of AI and LA in teacher education?

- RQ2: What kinds of data sources are employed by the studies on AI and LA in teacher education?

- RQ3: What kinds of AI and LA techniques and tools are used to support teacher education?

- RQ4: Who are the participants included in the studies on AI and LA in teacher education?

- RQ5: How are ethical procedures being fulfilled by studies on AI and LA in teacher education?

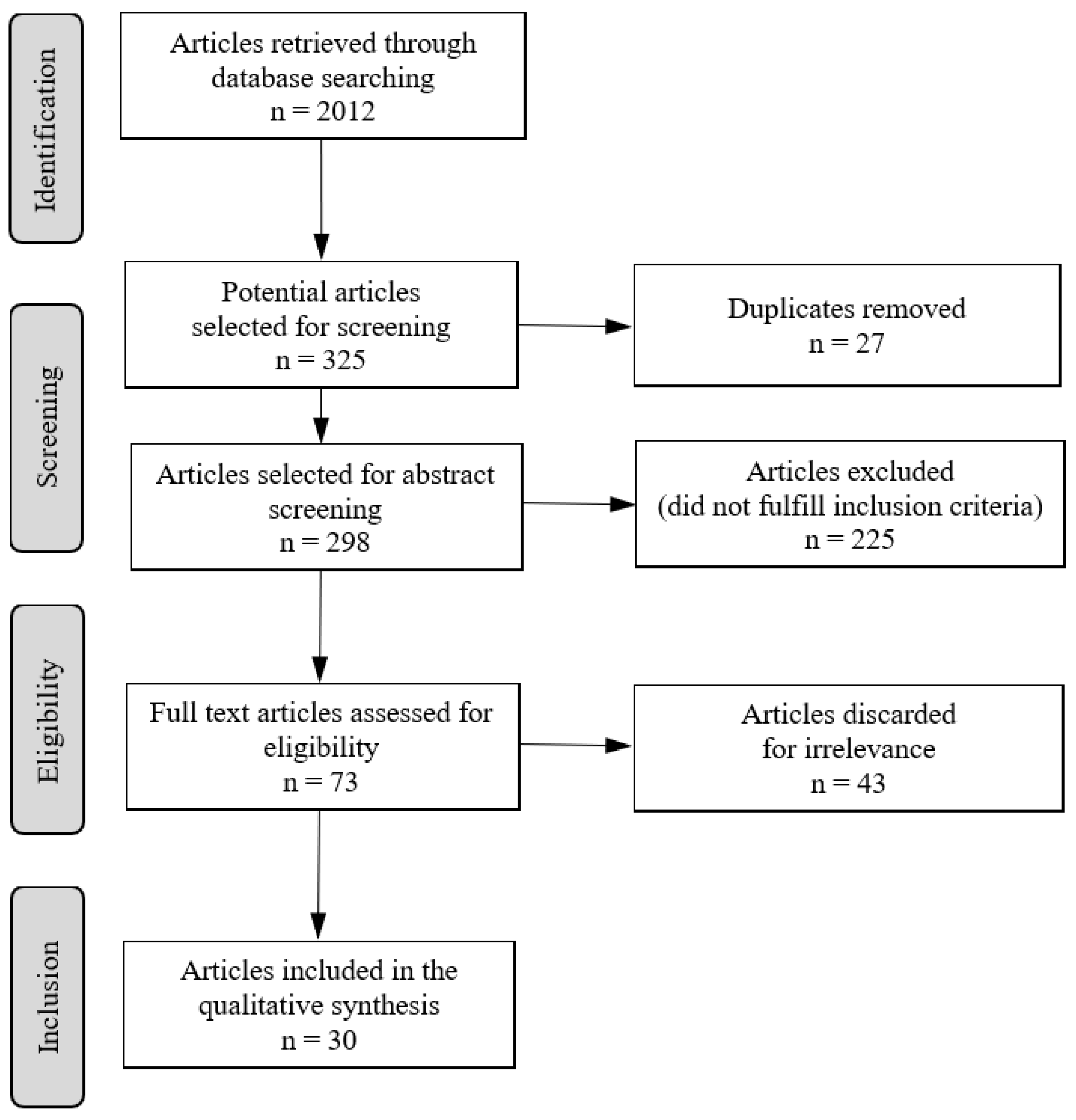

2. Methodology

3. Results

3.1. Goals and Objectives

3.2. Data Sources

3.3. Techniques and Tools

3.4. Participants in the Studies

3.5. Ethical Procedures

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ahuja, A.S. The Impact of Artificial Intelligence in Medicine on the Future Role of the Physician. PeerJ 2019, 7, e7702. [Google Scholar] [CrossRef] [PubMed]

- Veloso, M.; Balch, T.; Borrajo, D.; Reddy, P.; Shah, S. Artificial Intelligence Research in Finance: Discussion and Examples. Oxf. Rev. Econ. Policy 2021, 37, 564–584. [Google Scholar] [CrossRef]

- Salas-Pilco, S.Z.; Yang, Y. Learning Analytics Initiatives in Latin America: Implications for Educational Researchers, Practitioners and Decision Makers. Br. J. Educ. Technol. 2020, 51, 875–891. [Google Scholar] [CrossRef]

- Napier, A.; Huttner-Loan, E.; Reich, J. Evaluating Learning Transfer from MOOCs to Workplaces: A Case Study from Teacher Education and Launching Innovation in Schools. Revista Iberoamericana de Educación a Distancia 2020, 23, 45–64. [Google Scholar] [CrossRef]

- Datta, D.; Phillips, M.; Chiu, J.; Watson, G.S.; Bywater, J.P.; Barnes, L.; Brown, D. Improving Classification through Weak Supervision in Context-Specific Conversational Agent Development for Teacher Education. arXiv 2020, arXiv:2010.12710. [Google Scholar]

- Oyekan, S.O. Foundations of Teacher Education. In Education for Nigeria Certificate in Education; Osisa, W., Ed.; Adeyemi College of Education Textbook Development Board: Ondo, Nigeria, 2000; pp. 1–58. [Google Scholar]

- Dunkin, M.J. The International Encyclopedia of Teaching and Teacher Education; Pergamon Press: Oxford, UK, 1987. [Google Scholar]

- Farjon, D.; Smits, A.; Voogt, J. Technology Integration of Pre-Service Teachers Explained by Attitudes and Beliefs, Competency, Access, and Experience. Comput. Educ. 2019, 130, 81–93. [Google Scholar] [CrossRef]

- Menabò, L.; Sansavini, A.; Brighi, A.; Skrzypiec, G.; Guarini, A. Promoting the Integration of Technology in Teaching: An Analysis of the Factors That Increase the Intention to Use Technologies among Italian Teachers. J. Comput. Assist. Learn. 2021, 37, 1566–1577. [Google Scholar] [CrossRef]

- Butler, D.; Leahy, M.; Twining, P.; Akoh, B.; Chtouki, Y.; Farshadnia, S.; Moore, K.; Nikolov, R.; Pascual, C.; Sherman, B.; et al. Education Systems in the Digital Age: The Need for Alignment. Technol. Knowl. Learn. 2018, 23, 473–494. [Google Scholar] [CrossRef]

- U.S. Department of Education. Advancing Educational Technology in Teacher Preparation: Policy Brief. 2016. Available online: https://tech.ed.gov/files/2016/12/Ed-Tech-in-Teacher-Preparation-Brief.pdf (accessed on 5 April 2022).

- Popenici, S.A.D.; Kerr, S. Exploring the Impact of Artificial Intelligence on Teaching and Learning in Higher Education. Res. Pract. Technol. Enhanc. Learn. 2017, 12, 22. [Google Scholar] [CrossRef]

- Lu, X. Natural Language Processing and Intelligent Computer-Assisted Language Learning (ICALL). In The TESOL Encyclopedia of English Language Teaching; Liontas, J.I., Ed.; Wiley Blackwell: Hoboken, NJ, USA, 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Salas-Pilco, S.Z.; Yang, Y. Artificial Intelligence Applications in Latin American Higher Education: A Systematic Review. Int. J. Educ. Technol. High. Educ. 2022, 19, 21. [Google Scholar] [CrossRef]

- Chen, L.; Chen, P.; Lin, Z. Artificial Intelligence in Education: A Review. IEEE Access 2020, 8, 75264–75278. [Google Scholar] [CrossRef]

- Siemens, G.; Gasevic, D.; Haythornthwaite, C.; Dawson, S.; Buckingham, S.; Ferguson, R.; Duval, E.; Verbert, K.; Baker, R. Open Learning Analytics: An Integrated & Modularized Platform Proposal to Design, Implement and Evaluate an Open Platform to Integrate Heterogeneous Learning Analytics Techniques. 2011. Available online: https://solaresearch.org/wp-content/uploads/2011/12/OpenLearningAnalytics.pdf (accessed on 3 May 2022).

- Krikun, I. Applying Learning Analytics Methods to Enhance Learning Quality and Effectiveness in Virtual Learning Environments. In Proceedings of the 2017 5th IEEE Workshop on Advances in Information, Electronic and Electrical Engineering (AIEEE), Riga, Latvia, 24–25 November 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Rets, I.; Herodotou, C.; Bayer, V.; Hlosta, M.; Rienties, B. Exploring Critical Factors of the Perceived Usefulness of a Learning Analytics Dashboard for Distance University Students. Int. J. Educ. Technol. High. Educ. 2021, 18, 46. [Google Scholar] [CrossRef]

- Lee, D.; Huh, Y.; Lin, C.-Y.; Reigeluth, C.M. Technology Functions for Personalized Learning in Learner-Centered Schools. Educ. Technol. Res. Dev. 2018, 66, 1269–1302. [Google Scholar] [CrossRef]

- Starkey, L. A Review of Research Exploring Teacher Preparation for the Digital Age. Cambridge J. Educ. 2019, 50, 37–56. [Google Scholar] [CrossRef]

- Pongsakdi, N.; Kortelainen, A.; Veermans, M. The Impact of Digital Pedagogy Training on In-Service Teachers’ Attitudes towards Digital Technologies. Educ. Inf. Technol. 2021, 26, 5041–5054. [Google Scholar] [CrossRef]

- Tondeur, J.; Scherer, R.; Baran, E.; Siddiq, F.; Valtonen, T.; Sointu, E. Teacher Educators as Gatekeepers: Preparing the next Generation of Teachers for Technology Integration in Education. Br. J. Educ. Technol. 2019, 50, 1189–1209. [Google Scholar] [CrossRef]

- Salas-Pilco, S.Z. Comparison of National Artificial Intelligence (AI): Strategic Policies and Priorities. In Towards an International Political Economy of Artificial Intelligence; Keskin, T., Kiggins, R.D., Eds.; Palgrave Macmillan: Cham, Switzerland, 2021; pp. 195–217. [Google Scholar]

- Leaton Gray, S. Artificial Intelligence in Schools: Towards a Democratic Future. London Rev. Educ. 2020, 18, 163–177. [Google Scholar] [CrossRef]

- Mayer, D.; Oancea, A. Teacher Education Research, Policy and Practice: Finding Future Research Directions. Oxf. Rev. Educ. 2021, 47, 1–7. [Google Scholar] [CrossRef]

- Garbett, D.; Ovens, A. Being Self-Study Researchers in a Digital World: Future Oriented Research and Pedagogy in Teacher Education; Springer: Cham, Switzerland, 2016. [Google Scholar]

- Zawacki-Richter, O.; Kerres, M.; Bedenlier, S.; Bond, M.; Buntins, K. (Eds.) Systematic Reviews in Educational Research; Springer: Wiesbaden, Germany, 2020. [Google Scholar] [CrossRef]

- Sleeter, C. Toward Teacher Education Research That Informs Policy. Educ. Res. 2014, 43, 146–153. [Google Scholar] [CrossRef]

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A. Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) 2015 Statement. Syst. Rev. 2015, 4, 1. [Google Scholar] [CrossRef]

- Creswell, J.W.; Poth, C.N. Qualitative Inquiry and Research Design: Choosing among Five Approaches; Sage Publications: Los Angeles, CA, USA, 2016. [Google Scholar]

- Bao, H.; Li, Y.; Su, Y.; Xing, S.; Chen, N.-S.; Rosé, C.P. The Effects of a Learning Analytics Dashboard on Teachers’ Diagnosis and Intervention in Computer-Supported Collaborative Learning. Technol. Pedagogy Educ. 2021, 30, 287–303. [Google Scholar] [CrossRef]

- Benaoui, A.; Kassimi, M.A. Using Machine Learning to Examine Preservice Teachers’ Perceptions of Their Digital Competence. E3S Web of Conf. 2021, 297, 01067. [Google Scholar] [CrossRef]

- Chen, G. A Visual Learning Analytics (VLA) Approach to Video-Based Teacher Professional Development: Impact on Teachers’ Beliefs, Self-Efficacy, and Classroom Talk Practice. Comput. Educ. 2020, 144, 103670. [Google Scholar] [CrossRef]

- Cutumisu, M.; Guo, Q. Using Topic Modeling to Extract Pre-Service Teachers’ Understandings of Computational Thinking from Their Coding Reflections. IEEE Trans. Educ. 2019, 62, 325–332. [Google Scholar] [CrossRef]

- Fan, Y.; Matcha, W.; Uzir, N.A.A.; Wang, Q.; Gašević, D. Learning Analytics to Reveal Links Between Learning Design and Self-Regulated Learning. Int. J. Artif. Intell. Educ. 2021, 31, 980–1021. [Google Scholar] [CrossRef]

- Hayward, D.V.; Mousavi, A.; Carbonaro, M.; Montgomery, A.P.; Dunn, W. Exploring Preservice Teachers Engagement with Live Models of Universal Design for Learning and Blended Learning Course Delivery. J. Spec. Educ. Technol. 2020, 37, 112–123. [Google Scholar] [CrossRef]

- Hsiao, S.-W.; Sun, H.-C.; Hsieh, M.-C.; Tsai, M.-H.; Tsao, Y.; Lee, C.-C. Toward Automating Oral Presentation Scoring During Principal Certification Program Using Audio-Video Low-Level Behavior Profiles. IEEE Trans. Affect. Comput. 2019, 10, 552–567. [Google Scholar] [CrossRef]

- Ishizuka, H.; Pellerin, M. Providing Quantitative Data with AI Mobile COLT to Support the Reflection Process in Language Teaching and Pre-Service Teacher Training: A Discussion. In CALL for Widening Participation: Short Papers from EUROCALL 2020; Frederiksen, K.-M., Larsen, S., Bradley, L., Thouësny, S., Eds.; Research-Publishing.net: Voillans, France, 2020; pp. 125–131. [Google Scholar] [CrossRef]

- Jensen, E.; Dale, M.; Donnelly, P.J.; Stone, C.; Kelly, S.; Godley, A.; D’Mello, S.K. Toward Automated Feedback on Teacher Discourse to Enhance Teacher Learning. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13. [Google Scholar] [CrossRef]

- Karunaratne, T.; Byungura, J.C. Using Log Data of Virtual Learning Environments to Examine the Effectiveness of Online Learning for Teacher Education in Rwanda. In Proceedings of the 2017 IST-Africa Week Conference (IST-Africa), Windhoek, Namibia, 30 May–2 June 2017; pp. 1–12. [Google Scholar] [CrossRef]

- Kasepalu, R.; Chejara, P.; Prieto, L.P.; Ley, T. Do Teachers Find Dashboards Trustworthy, Actionable and Useful? A Vignette Study Using a Logs and Audio Dashboard. Technol. Knowl. Learn. 2021, 27, 971–989. [Google Scholar] [CrossRef]

- Kelleci, Ö.; Aksoy, N.C. Using Game-Based Virtual Classroom Simulation in Teacher Training: User Experience Research. Simul. Gaming 2021, 52, 204–225. [Google Scholar] [CrossRef]

- Kilian, P.; Loose, F.; Kelava, A. Predicting Math Student Success in the Initial Phase of College with Sparse Information Using Approaches from Statistical Learning. Front. Educ. 2020, 5, 502698. [Google Scholar] [CrossRef]

- Kosko, K.W.; Yang, Y.; Austin, C.; Guan, Q.; Gandolfi, E.; Gu, Z. Examining Preservice Teachers’ Professional Noticing of Students’ Mathematics through 360 Video and Machine Learning. In Proceedings of the 43rd Annual Meeting of the North American Chapter of the International Group for the Psychology of Mathematics Education, Philadelphia, PA, USA, 14–17 October 2021. [Google Scholar]

- Lucas, M.; Bem-Haja, P.; Siddiq, F.; Moreira, A.; Redecker, C. The Relation between In-Service Teachers’ Digital Competence and Personal and Contextual Factors: What Matters Most? Comput. Educ. 2021, 160, 104052. [Google Scholar] [CrossRef]

- Michos, K.; Hernández-Leo, D. Supporting Awareness in Communities of Learning Design Practice. Comput. Hum. Behav. 2018, 85, 255–270. [Google Scholar] [CrossRef]

- Montgomery, A.P.; Mousavi, A.; Carbonaro, M.; Hayward, D.V.; Dunn, W. Using Learning Analytics to Explore Self-Regulated Learning in Flipped Blended Learning Music Teacher Education. Br. J. Educ. Technol. 2019, 50, 114–127. [Google Scholar] [CrossRef]

- Neumann, A.T.; Arndt, T.; Köbis, L.; Meissner, R.; Martin, A.; de Lange, P.; Pengel, N.; Klamma, R.; Wollersheim, H.-W. Chatbots as a Tool to Scale Mentoring Processes: Individually Supporting Self-Study in Higher Education. Front. Artif. Intell. 2021, 4, 668220. [Google Scholar] [CrossRef]

- Post, K. Illuminating Student Online Work and Research: Learning Analytics to Support the Development of Pre-Service Teacher Digital Pedagogy. In Proceedings of the Society for Information Technology & Teacher Education International Conference 2019, Las Vegas, NV, USA, 18–22 March 2019; pp. 937–941. Available online: https://www.learntechlib.org/primary/p/207759/ (accessed on 12 April 2022).

- Pu, S.; Ahmad, N.A.; Khambari, M.N.M.; Yap, N.K.; Ahrari, S. Improvement of Pre-Service Teachers’ Practical Knowledge and Motivation about Artificial Intelligence Through a Service-Learning-Based Module in Guizhou, China: A Quasi-Experimental Study. Asian J. Univ. Educ. 2021, 17, 203–219. [Google Scholar] [CrossRef]

- Sasmoko; Moniaga, J.; Indrianti, Y.; Udjaja, Y.; Natasha, C. Designing Determining Teacher Engagement Based on the Indonesian Teacher Engagement Index Using Artificial Neural Network. In Proceedings of the 2019 International Conference on Information and Communications Technology (ICOIACT) 2019, Yogyakarta, Indonesia, 24–25 July 2019; pp. 377–382. [Google Scholar] [CrossRef]

- Sun, F.-R.; Hu, H.-Z.; Wan, R.-G.; Fu, X.; Wu, S.-J. A Learning Analytics Approach to Investigating Pre-Service Teachers’ Change of Concept of Engagement in the Flipped Classroom. Interact. Learn. Environ. 2022, 30, 376–392. [Google Scholar] [CrossRef]

- Vazhayil, A.; Shetty, R.; Bhavani, R.R.; Akshay, N. Focusing on Teacher Education to Introduce AI in Schools: Perspectives and Illustrative Findings. In Proceedings of the 2019 IEEE Tenth International Conference on Technology for Education (T4E) 2019, Goa, India, 9–11 December 2019; pp. 71–77. [Google Scholar] [CrossRef]

- Wulff, P.; Buschhüter, D.; Westphal, A.; Nowak, A.; Becker, L.; Robalino, H.; Stede, M.; Borowski, A. Computer-Based Classification of Preservice Physics Teachers’ Written Reflections. J. Sci. Educ. Technol. 2021, 30, 1–15. [Google Scholar] [CrossRef]

- Yang, Y.; Du, Y.; van Aalst, J.; Sun, D.; Ouyang, F. Self-Directed Reflective Assessment for Collective Empowerment among Pre-Service Teachers. Br. J. Educ. Technol. 2020, 51, 1961–1981. [Google Scholar] [CrossRef]

- Yilmaz, F.G.K.; Yilmaz, R. Student Opinions about Personalized Recommendation and Feedback Based on Learning Analytics. Technol. Knowl. Learn. 2020, 25, 753–768. [Google Scholar] [CrossRef]

- Yoo, J.E.; Rho, M. Exploration of Predictors for Korean Teacher Job Satisfaction Via a Machine Learning Technique, Group Mnet. Front. Psychol. 2020, 11, 441. [Google Scholar] [CrossRef]

- Zhang, J.; Shi, J.; Liu, X.; Zhou, Y. An Intelligent Assessment System of Teaching Competency for Pre-service Teachers Based on AHP-BP Method. Int. J. Emerg. Technol. Learn. 2021, 16, 52–64. [Google Scholar] [CrossRef]

- Zhang, S.; Gao, Q.; Wen, Y.; Li, M.; Wang, Q. Automatically Detecting Cognitive Engagement Beyond Behavioral Indicators: A Case of Online Professional Learning Community. Educ. Technol. Soc. 2021, 24, 58–72. [Google Scholar]

- Zhao, G.; Liu, S.; Zhu, W.-J.; Qi, Y.-H. A Lightweight Mobile Outdoor Augmented Reality Method using Deep Learning and Knowledge Modeling for Scene Perception to Improve Learning Experience. Int. J. Hum.-Comput. Interact. 2021, 37, 884–901. [Google Scholar] [CrossRef]

- Tezci, E. Factors that Influence Pre-Service Teachers’ ICT Usage in Education. Eur. J. Teach. Educ. 2011, 34, 483–499. [Google Scholar] [CrossRef]

- U.S. Department of Education. Study of the Teacher Education Assistance for College and Higher Education (TEACH) Grant Program. 2018. Available online: https://www2.ed.gov/rschstat/eval/highered/teach-grant/final-report.pdf (accessed on 5 April 2022).

- Ferrari, A. Digital Competence in Practice: An Analysis of Frameworks; JRC Technical Reports; Institute for Prospective Technological Studies—European Union: Seville, Spain, 2012; Available online: https://www.ectel07.org/EURdoc/JRC68116.pdf (accessed on 21 April 2022).

- Tican, C.; Deniz, S. Pre-Service Teachers’ Opinions about the Use of 21st Century Learner and 21st Century Teacher Skills. Eur. J. Educ. Res. 2019, 8, 181–197. [Google Scholar] [CrossRef]

- Wilson, M.L.; Ritzhaupt, A.D.; Cheng, L. The Impact of Teacher Education Courses for Technology Integration on Pre-Service Teacher Knowledge: A Meta-Analysis Study. Comput. Educ. 2020, 156, 103941. [Google Scholar] [CrossRef]

- Shively, K.; Palilonis, J. Curriculum Development: Preservice Teachers’ Perceptions of Design Thinking for Understanding Digital Literacy as a Curricular Framework. J. Educ. 2018, 198, 202–214. [Google Scholar] [CrossRef]

- Cooper, G.; Park, H.; Nasr, Z.; Thong, L.P.; Johnson, R. Using Virtual Reality in the Classroom: Preservice Teachers’ Perceptions of its Use as a Teaching and Learning Tool. Educ. Media Int. 2019, 5, 1–13. [Google Scholar] [CrossRef]

- Verma, A.; Kumar, Y.; Kohli, R. Study of AI Techniques in Quality Educations: Challenges and Recent Progress. SN Comp. Sci. 2021, 2, 238. [Google Scholar] [CrossRef]

- Namoun, A.; Alshanqiti, A. Predicting Student Performance Using Data Mining and Learning Analytics Techniques: A Systematic Literature Review. Appl. Sci. 2020, 11, 237. [Google Scholar] [CrossRef]

- Seufert, S.; Guggemos, J.; Sailer, M. Technology-Related Knowledge, Skills, and Attitudes of Pre- and In-Service Teachers: The Current Situation and Emerging Trends. Comput. Hum. Behav. 2021, 115, 106552. [Google Scholar] [CrossRef]

- Krutka, D.G.; Heath, M.K.; Willet, K.B.S. Foregrounding Technoethics: Toward Critical Perspectives in Technology and Teacher Education. J. Technol. Teach. Educ. 2019, 27, 555–574. [Google Scholar]

- Stahl, B.C.; Akintoye, S.; Bitsch, L.; Bringedal, B.; Eke, D.; Farisco, M.; Grasenick, K.; Guerrero, M.; Knight, W.; Leach, T.; et al. From Responsible Research and Innovation to Responsibility by Design. J. Responsible Innov. 2021, 8, 175–198. [Google Scholar] [CrossRef]

- Blumenstyk, G. Can Artificial Intelligence Make Teaching More Personal? The Chronicle of Higher Education. 2018. Available online: https://www.chronicle.com/article/can-artificial-intelligence-make-teaching-more-personal/ (accessed on 1 August 2022).

- Herodotou, C.; Maguire, C.; McDowell, N.; Hlosta, M.; Boroowa, A. The Engagement of University Teachers with Predictive Learning Analytics. Comput. Educ. 2021, 173, 104285. [Google Scholar] [CrossRef]

- Chen, X. AI + Education: Self-adaptive Learning Promotes Individualized Educational Revolutionary. In Proceedings of the 6th International Conference on Education and Training Technologies (ICETT), Macau, China, 18–20 May 2020; pp. 44–47. [Google Scholar] [CrossRef]

- Smutny, P.; Schreiberova, P. Chatbots for Learning: A Review of Educational Chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862. [Google Scholar] [CrossRef]

- Regan, P.M.; Jesse, J. Ethical Challenges of Edtech, Big Data and Personalized Learning: Twenty-First Century Student Sorting and Tracking. Ethics Inf. Technol. 2018, 21, 167–179. [Google Scholar] [CrossRef]

- Asterhan, C.S.C.; Rosenberg, H. The Promise, Reality and Dilemmas of Secondary School Teacher–Student Interactions in Facebook: The Teacher Perspective. Comput. Educ. 2015, 85, 134–148. [Google Scholar] [CrossRef]

- Johnson, M. A Scalable Approach to Reducing Gender Bias in Google Translate. Google AI Blog. 2020. Available online: https://ai.googleblog.com/2020/04/a-scalable-approach-to-reducing-gender.html (accessed on 1 August 2022).

- Caldwell, H. Mobile Technologies as a Catalyst for Pedagogic Innovation within Teacher Education. Int. J. Mob. Blended Learn. 2018, 10, 50–65. [Google Scholar] [CrossRef]

- Carrier, M.; Nye, A. Empowering Teachers for the Digital Future: What do 21st-Century Teachers Need? In Digital Language Learning and Teaching; Carrier, M., Damerow, R.M., Bailey, K.M., Eds.; Routledge: London, UK, 2017; pp. 208–221. [Google Scholar]

- Nazaretsky, T.; Bar, C.; Walter, M.; Alexandron, G. Empowering Teachers with AI: Co-Designing a Learning Analytics Tool for Personalized Instruction in the Science Classroom. In Proceedings of the 12th International Learning Analytics and Knowledge (LAK) Conference, Online, USA, 20–25 March 2022; pp. 1–12. [Google Scholar] [CrossRef]

- Goksel, N.; Bozkurt, A. Artificial Intelligence in Education: Current Insights and Future Perspectives. In Handbook of Research on Learning in the Age of Transhumanism; Sisman-Ugur, S., Kurubacak, G., Eds.; IGI Global: Hershey, PA, USA, 2019; pp. 224–236. [Google Scholar]

- Van Leeuwen, A.; Teasley, S.; Wise, A. Teacher and Student Facing Learning Analytics. In Handbook of Learning Analytics, 2nd ed.; Lang, C., Siemens, G., Wise, A., Gasevic, D., Merceron, A., Eds.; SOLAR: Vancouver, BC, Canada, 2022; pp. 130–140. Available online: https://solaresearch.org/wp-content/uploads/hla22/HLA22.pdf (accessed on 5 April 2022).

- Luckin, R.; Cukurova, M.; Kent, C.; du Boulay, B. Empowering Educators to Be AI-Ready. Comput. Educ. Artif. Intell. 2022, 3, 100076. [Google Scholar] [CrossRef]

- Pusey, P.; Sadera, W.A. Cyberethics, Cybersafety, and Cybersecurity. J. Digit. Learn. Teach. Educ. 2011, 28, 82–85. [Google Scholar] [CrossRef]

- Reidenberg, J.R.; Schaub, F. Achieving Big Data Privacy in Education. Theory Res. Educ. 2018, 16, 263–279. [Google Scholar] [CrossRef]

- Schelenz, L.; Segal, A.; Gal, K. Best Practices for Transparency in Machine Generated Personalization. In Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization (UMAP), Genoa, Italy, 14–17 July 2020; pp. 23–28. [Google Scholar] [CrossRef]

- Porayska-Pomsta, K.; Rajendran, G. Accountability in Human and Artificial Intelligence Decision-Making as the Basis for Diversity and Educational Inclusion. In Artificial Intelligence and Inclusive Education; Knox, J., Wang, Y., Gallagher, M., Eds.; Springer: Singapore, 2019; pp. 39–59. [Google Scholar]

- Sacharidis, D.; Mukamakuza, C.P.; Werthner, H. Fairness and Diversity in Social-Based Recommender Systems. In Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization (UMAP), Genoa, Italy, 14–17 July 2020; pp. 83–88. [Google Scholar] [CrossRef]

- Holmes, W.; Porayska-Pomsta, K.; Holstein, K.; Sutherland, E.; Baker, T.; Shum, S.B.; Santos, O.C.; Rodrigo, M.T.; Cukurova, M.; Bittencourt, I.I.; et al. Ethics of AI in Education: Towards a Community-Wide Framework. Int. J. Artif. Intell. Educ. 2021. [Google Scholar] [CrossRef]

- Siemens, G. Learning Analytics and Open, Flexible, and Distance Learning. Distance Educ. 2019, 40, 414–418. [Google Scholar] [CrossRef]

| Inclusion Criteria | Exclusion Criteria |
|---|---|
|  |  |

| Author(s) and Year | Country/Region | Goals and Objectives | Participants | Data Sources | Techniques | Tools | Ethical Procedures | Results |

|---|---|---|---|---|---|---|---|---|

| Bao et al. (2021) [31] | China | To visualize students’ behaviors and interactions. | 35 PSTs |

| LA dashboard |

| n.d. | The KBSD tool has the potential to assist teachers in detecting learning problems. The most common strategy was cross-group; the interventions involved cognitive guidance, scaffold instruction, and positive evaluation. |

| Benaoui and Kassimi (2021) [32] | Morocco | Perceptions of PSTs’ digital competence. | 291 PSTs |

| AI machine learning |

| n.d. | PSTs felt competent when using digital technologies daily, but they did not feel competent in digital content creation and problem solving. This might be due to the predominance of theoretical knowledge at the expense of real practice in teaching training. |

| Chen (2020) [33] | China | To investigate whether visual learning analytics (VLA) has a significant influence on teachers’ beliefs and self-efficacy when guiding classroom discussions. | 46 ISTs |

| LA visual learning analytics (VLA) |

| n.d. | The VLA approach to video-based teacher professional development had significant effects on teachers’ beliefs and self-efficacy, and influenced their actual classroom teaching behavior. |

| Cutumisu and Guo (2019) [34] | Canada | To determine PSTs’ knowledge of and attitudes toward computational thinking through the automatic scoring of short essays. | 139 PSTs |

| AI machine learning |

| PSTs provided informed consent | Topics that emerged from PSTs’ reflection included assignment (66.7%), skill (11.6%), activity 10.1%), and course (6.5%). |

| Fan et al. (2021) [35] | China | To reveal links between learning design and self-regulated learning. | 7030 PSTs, 1758 ISTs |

| LA |

| n.d. | Four meaningful learning tactics were detected with the EM algorithm: search (lectures), content and assessment (case-based or problem-based), content (project-based), and assessment. |

| Hayward et al. (2020) [36] | Canada | To explore PSTs’ engagement with models of universal design for learning and blended learning concepts. | 197 PSTs |

| LA |

| n.d. | The feature regularity of access had a moderate relationship with student engagement. High achievers tended to have a set of strategies. |

| Hsiao et al. (2019) [37] | China Taiwan | To assess the qualities of pre-service principals’ video-based oral presentations through automatic scoring. | 200 pre-service principals |

| AI machine learning |

| n.d. | The SVM classifier had the best accuracy (55%). It was found that human experts can potentially suffer undesirable variabilities over time, while automatic scoring remains robust and reliable over time. |

| Ishizuka and Pellerin (2020) [38] | Japan | To assess real-time activities in second language classrooms. | 4 PSTs |

| AI |

| n.d. | The integration of AI mobile COLT analysis has strong potential to follow-up PSTs’ progress throughout their practicum. |

| Jensen et al. (2020) [39] | USA | To provide automated feedback on teacher discourse to enhance teacher learning. | 16 ISTs |

| AI machine learning |

| n.d. | The RF classifier had 89% accuracy, generating automatic measurement and feedback of teacher discourse using self-recorded audio data from classrooms. |

| Karunaratne and Byungura (2017) [40] | Rwanda | To track in-service teachers’ behavior in an online course of professional development. | 61 ISTs |

| LA visual learning analytics |

| n.d. | Half of the registered teachers never accessed the course. Most of the teachers were actively engaging in the virtual learning environment’s activities. |

| Kasepalu et al. (2021) [41] | Estonia | Teachers’ perceptions of collaborative analytics using a dashboard based on audio and digital trace data. | 21 ISTs |

| LA dashboard |

| Consent forms were filled out by ISTs and their students | New information enhances teachers’ awareness, but it seems that the dashboard decreases teachers’ actionability. Therefore, a guiding dashboard could possibly help less experienced teachers with data-informed assessment. |

| Kelleci and Aksoy (2020) [42] | Turkey | To examine PSTs’ and ISTs’ experiences using an AI-based-simulated virtual classroom. | 16 PSTs, 2 ISTs |

| AI simulation |

| Ethical approval from the institution | The SimInClass simulation was effective in providing clear directions and giving feedback. PSTs suggested that the simulation should give clues as to correct solutions. |

| Kilian et al. (2020) [43] | Germany | To predict PSTs’ dropout for a mathematics course and identify risk groups. | 163 PSTs |

| AI machine learning |

| PSTs provided written informed consent | Risk level 1: score ≤ 12 (highest risk), GPA > 2.1; risk level 2: score ≤ 12 (high risk), GPA ≤ 2.1; risk level 3: score > 12 (moderate), 1.6 < GPA ≤ 2. |

| Kosko et al. (2021) [44] | USA | To examine PSTs’ professional noticing of students through video and ML. | 6 PSTs, subsample of 70 PSTs |

| AI machinelearning |

| n.d. | PSTs’ actions relevant to pedagogical content-specific noticing could be detected by AI algorithms. PSTs’ behavior may have been due to professional knowledge rather than experience. |

| Lucas et al. (2021) [45] | Portugal | To measure teachers’ digital competence and its relation to personal and contextual factors. | 1071 ISTs |

| AI machine learning |

| (Voluntary and anonymous teachers) | For personal factors, FFTrees had an accuracy of 81%, while for contextual factors it was 66%. For digital competence, the important personal factors were the number of digital tools used, ease of use, confidence, and openness to new technology. The contextual factors included students’ access to technology, the curriculum, and classroom equipment. |

| Michos and Hernández-Leo (2018) [46] | Spain | To support community awareness to facilitate teachers’ learning design process using a dashboard with data visualizations. | 23 PSTs, 209 ISTs |

| LA dashboard |

| n.d. | The ILDE dashboard can provide an understanding of the social presence in the community of teachers. Visualization was the most commonly used feature. There were time constraints. |

| Montgomery et al. (2019) [47] | Canada | To examine the relationships between self-regulated learning behaviors and academic achievements. | 157 PSTs |

| LA |

| n.d. | 84.5% of PTSs’ access to the platform took place off-campus. The strongest predictors for student success were the access day of the week and access frequency. |

| Newmann et al. (2021) [48] | Germany | To support PSTs’ self-study using chatbots as a tool to scale mentoring processes. | 19 PSTs |

| AI NLP |

| n.d. | Promising results that bear the potential for digital mentoring to support students. |

| Post (2019) [49] | USA | To challenge PSTs to analyze and interpret data on students’ online behavior and learning. | n.d. PSTs |

| LA |

| n.d. | PSTs lacked media literacy skills. Online assignments promoted student-centered learning and critical thinking. The prevalence of multitasking was highlighted. |

| Pu et al. (2021) [50] | Malaysia | To design a service-learning-based module training AI subjects (SLBM-TAIS). | 60 PSTs |

| AI |

| n.d. | The SLBM-TAIS was effective in training PSTs to teach AI subjects to primary school students. The SLBM-TAIS module influences situational knowledge, teaching strategies, and both intrinsic and extrinsic motivation. |

| Sasmoko et al. (2019) [51] | Indonesia | To determine teacher engagement using artificial neural networks. | 10,642 ISTs |

| AI machine learning (ANN) |

| Not applicable | The ANN classification accuracy was 97.65%, proving the reliability of the instruments and websites; however, this still requires further testing in terms of both ease of use and trials with diverse data. |

| Sun et al. (2019) [52] | China | To investigate changes in PSTs’ concept of engagement, analyzing data recorded during PSTs’ discussions via an MOOC platform. | 53 PSTs |

| LA |

| n.d. | The most frequent discussion behaviors were evaluated (31.52%) and analyzed (27.77%). PSTs with an analytical style implemented multiple strategies for learning. |

| Vazhayil et al. (2019) [53] | India | To introduce AI literacy and AI thinking to in-service secondary school teachers. | 34 ISTs |

| AI |

| 15 ISTs consented to recorded video testimonials | 77% appreciated peer teaching, 41% preferred the game-based approach, and 24% were concerned about internet access. The best strategy was embracing creative freedom and peer teaching to boost learners’ confidence. |

| Wulff et al. (2020) [54] | Germany | To employ AI algorithms for classifying written reflections according to a reflection-supporting model. | 17 PSTs |

| AI natural language processing |

| PSTs provided informed consent | The multinomial logistic regression was the most suitable classifier (0.63). Imprecise writing was a barrier to accurate computer-based classification. |

| Yang et al. (2020) [55] | China | To enhance self-directed reflective assessment (SDRA) using LA. | 47 PSTs |

| LA |

| Ethical approval was obtained from the hosting institution | SDRA fostered PSTs’ collective empowerment, as reflected by their collective decision making, synthesis of ideas, and “rising above” ideas. |

| Yilmaz and Yilmaz (2020) [56] | Turkey | To examine PSTs’ perceptions of personalized recommendations and feedback based on LA. | 40 PSTs |

| LA |

| (Voluntary participation) | LA helped to identify learning deficiencies, provided self-assessment and personalized learning, improved academic performance, and instilled a positive attitude toward the course. |

| Yoo and Rho (2020) [57] | Korea | To determine ISTs’ training and professional development using ML. | 2933 ISTs, 177 principals |

| AI machine learning |

| Not applicable | Identified 18 predictors of ISTs’ professional development. Found 11 new predictors related to ISTs’ pedagogical preparedness, feedback, and participation. |

| Zhang J. et al. (2021) [58] | China | To build an intelligent assessment system of PSTs’ teaching competency. | 240 PSTs |

| AI machine learning |

| n.d. | The trained model can be used to evaluate PSTs’ competency on a large scale; its relative error was small, between 0 and 0.2. |

| Zhang S. et al. (2021) [59] | China | To automatically detect the discourse characteristics of in-service teachers from online textual data. | 1834 ISTs |

| AI natural language processing |

| Ethical approval from the institution | New and relevant information was posted at the beginning of the online discourse. Cluster analysis showed three types of posts: a relevant topic with new information, a relevant topic with little new information, and a less relevant topic with little new information. |

| Zhao et al. (2021) [60] | China | To improve the outdoor learning experience and build a learning resource based on ontology information retrieval. | 38 PSTs |

| AI vision-based mobile augmented reality (VMAR) |

| n.d. | PSTs perceived the usability as good; it was preferred by younger users, and had a positive impact on learning. The average precision of retrieval based on keywords (97.46%) and ontology (90.85%) signified good performance. |

| Goals and Objectives | Reference Number | Number of Studies |
|---|---|---|
| Behavior when using AI and LA | [31,35,39,40,42,44,52] | 7 |
| Digital competence | [34,38,45,50,53,58] | 6 |
| Perception of AI and LA | [32,41,48,56,60] | 5 |
| Self-regulation and reflection | [33,35,47,54,55] | 5 |
| Engagement | [36,51,57,59] | 4 |
| Analysis of educational data | [37,43,49] | 3 |

| Data Sources | Types of Datasets | Reference Number |
|---|---|---|
| Behavioral data | Access data | [32,35,36,38,40,46,47,48,49,56,60] |
| Behavioral data | Social interaction data | [31,44,56] |
| Discourse data | Text discourse data | [34,53,54,55,59] |
| Discourse data | Audio video discourse data | [32,37,39,44] |
| Discourse data | Discussion data | [31,42,52] |
| Statistical data | Sociodemographic data | [35,43,45,50,51,57,58] |

| Techniques | Reference Number | Number of Studies | Total |
|---|---|---|---|
| Artificial Intelligence | | | |
| | [32,34,37,39,44,45,51,57,58] | 9 | 17 |
| | [48,55,59] | 3 | |
| | [38,60] | 2 | |
| | [42,50,53] | 3 | |
| Learning Analytics | | | |
| | [31,40,46] | 3 | 13 |
| | [33,40] | 2 | |
| | [35,36,47,49,50,52,54,56] | 8 | |

| Themes | Tools | Reference Number |
|---|---|---|
| AI tools | Algorithms: SVM, LR, NLP, etc. | [32,35,37,39,43,44,45,48,55,57,58,59,60] |
| AI tools | AI systems: IBM Watson AI | [38,39,42,53] |
| Online platforms | Moodle | [31,34,36,38,40,47,56] |
| Online platforms | MOOC | [35,52] |
| Data analysis tools | Programming languages: R, Python | [34,35,40,51,57] |
| Data analysis tools | Statistical software: SPSS, STATA | [45,46,58] |
| Monitoring tools | Dashboards: WISE, ILDE, KBSD | [31,41,46] |
| Monitoring tools | Modules: CDA, SLBM-TAIS | [33,49,50,54] |

| Participants | Reference Number | Number of Studies |
|---|---|---|
| Pre-service teachers (PSTs) | [31,32,34,36,37,38,43,44,47,48,49,50,52,54,55,56,58,60] | 18 |
| In-service teachers (ISTs) | [33,39,40,41,45,51,53,57,59] | 9 |
| Both pre- and in-service teachers | [35,42,46] | 3 |

| Ethical Consent Granted by | Reference Number | Number of Studies |
|---|---|---|
| Pre- and in-service teachers | [34,41,43,53,54] | 5 |
| The higher education institution | [42,55,59] | 3 |
| Short reference | [45,56] | 2 |
| Not applicable | [51,57] | 2 |
| Not mentioned | [31,32,33,35,36,37,38,39,40,44,46,47,48,49,50,52,58,60] | 18 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Salas-Pilco, S.Z.; Xiao, K.; Hu, X. Artificial Intelligence and Learning Analytics in Teacher Education: A Systematic Review. Educ. Sci. 2022, 12, 569. https://doi.org/10.3390/educsci12080569
