Barriers to Change: Social Network Interactions Not Sufficient for Diffusion of High-Impact Practices in STEM Teaching

Abstract

1. Introduction

2. Background

2.1. High-Impact Teaching Practices

2.2. Faculty Teaching Networks

2.3. Study Context and Objectives

3. Methods and Results

3.1. Data Collection

- We have not interacted regarding teaching.

- We have discussed teaching this course or a related general education course.

- We have actively collaborated on this course or a related course, such as developing shared lessons or aligning curriculum.

- We have worked together on the scholarship of teaching this course or a related course, such as a presentation, publication, or grant proposal.
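As an illustration only, the four response options above could be coded as ordered interaction levels and then filtered into an all-interaction network and a collaboration-level network. The numeric codes, the threshold for "collaboration" (option three and above), and the sample responses below are all assumptions made for this sketch; they are not taken from the study's data or procedure.

```python
from collections import Counter

# Hypothetical numeric codes for the four response options, lowest to highest.
NO_INTERACTION, DISCUSSED, COLLABORATED, SCHOLARSHIP = 0, 1, 2, 3

# Made-up survey rows: (respondent, named colleague, selected option).
responses = [
    ("A", "B", DISCUSSED),
    ("A", "C", COLLABORATED),
    ("B", "C", SCHOLARSHIP),
    ("C", "A", NO_INTERACTION),
    ("D", "A", DISCUSSED),
]


def build_edges(rows, min_level):
    """Keep directed ties whose reported level is at least min_level."""
    return [(src, dst) for src, dst, level in rows if level >= min_level]


def degree_counts(edges):
    """Return (in_degree, out_degree) counters for a directed edge list."""
    in_deg = Counter(dst for _, dst in edges)
    out_deg = Counter(src for src, _ in edges)
    return in_deg, out_deg


# Any reported interaction counts as a tie in the broad network.
all_edges = build_edges(responses, DISCUSSED)
# Assumed threshold: active collaboration or joint scholarship only.
collab_edges = build_edges(responses, COLLABORATED)

all_in, all_out = degree_counts(all_edges)
collab_in, collab_out = degree_counts(collab_edges)
```

Treating the options as an ordered scale is a design choice: it lets one threshold define each network, at the cost of assuming that joint scholarship always implies at least the lower levels of interaction.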

3.2. Results and Analysis

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Factor | Practices That Support Learning |
|---|---|
| Knowledge Organization | Provides a list of topics to be covered. Provides a list of topic-specific competencies. Provides a list of crosscutting competencies (problem-solving, etc.). Provides out-of-class multimedia content. Provides lecture notes or other class materials. Spends course time on the process. |
| Reducing Cognitive Load | Provides worked examples. Pre-class materials are provided. Students read/view and are formatively quizzed before class time. |
| Motivation | The course attempts to change student attitudes and perceptions. Articles from the scientific literature are used in the course. Students discuss why the material is useful. Students are explicitly encouraged to meet with the instructor. Students are provided with opportunities to have some control over their learning. |
| Practice | Practice exams are provided. Small-group discussions or problem-solving. Demonstrations require students to first make predictions. Student presentations are assigned. A significant fraction of class time is spent not lecturing. Peer-response systems are used, such as “clickers.” A paper or project is assigned involving some degree of student control. A significant fraction of an exam grade requires reasoning explanation. |
| Feedback | Student wikis or discussion boards are used with instructor feedback. Solutions to homework assignments are provided. Solutions to exams are provided. Instructor pauses to ask for questions. Feedback is provided on assignments with opportunities for students to redo work. |
| Metacognition | Class ends with a reflective activity. There are opportunities for self-evaluation. |
| Group Learning | Students are encouraged to work collaboratively on assignments. There are explicit group assignments. |

| Factor | Practices That Support Teacher Effectiveness |
|---|---|
| Prior Knowledge and Beliefs | Assessment of student knowledge and/or beliefs is done at the beginning of the course. A pre-post survey of student interests and/or perceptions is assigned. |
| Feedback on Effectiveness | Students complete a midterm course evaluation. The instructor repeatedly gains feedback from students. An instructor-independent pre/post-test is used to measure learning. New teaching methods are evaluated using measurements of the impact on learning. |
| Gain Knowledge and Skills | The instructor uses “departmental” course materials. The instructor discusses how to teach the course with colleagues. The instructor reads the literature about teaching and learning relevant to the course. The instructor sits in on a colleague’s class. |

| Group | N | Mean | S.D. |
|---|---|---|---|
| All Respondents | 90 | 33.2 | 8.2 |
| SNA Respondents | 52 | 35.4 | 7.7 |

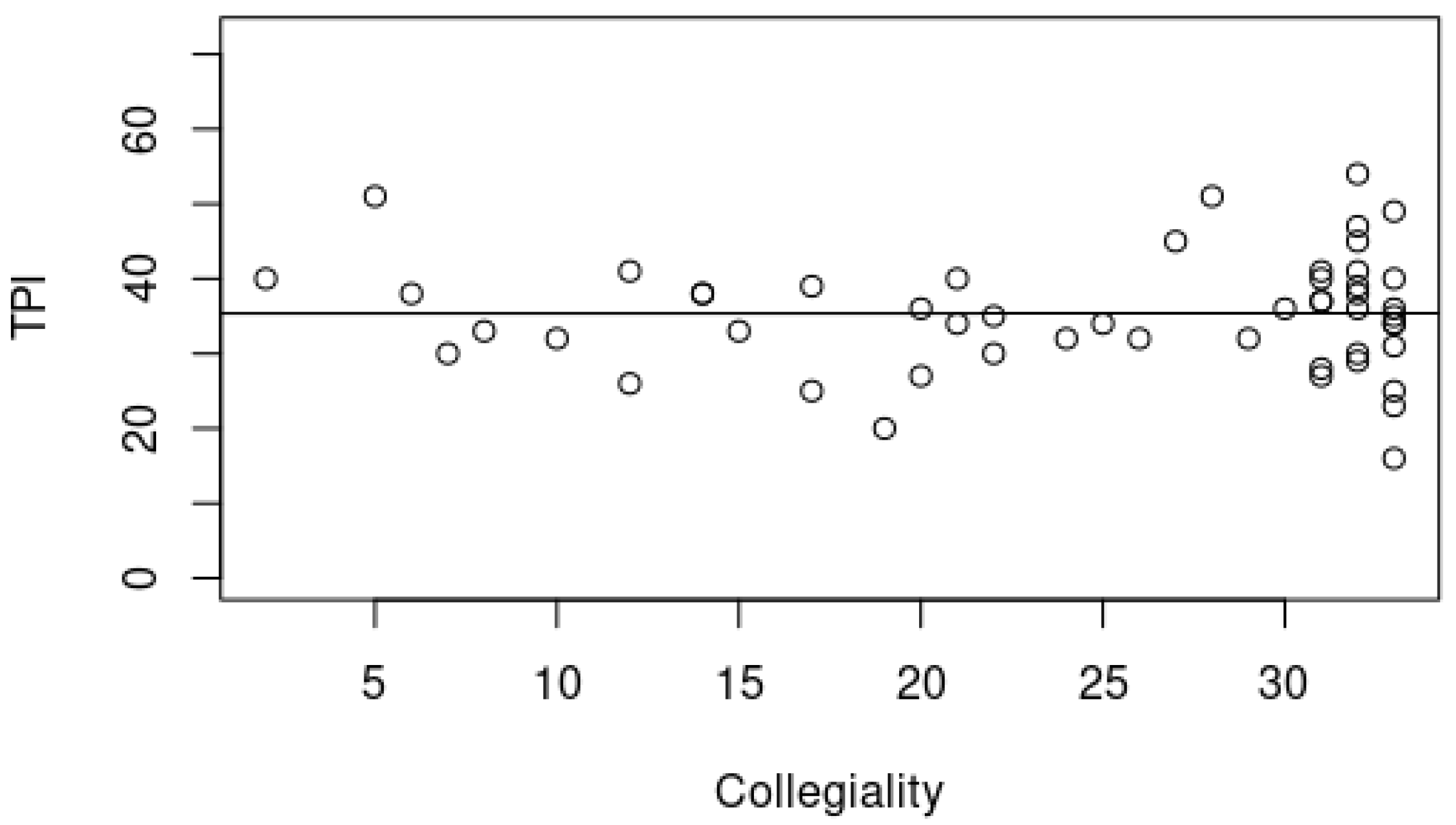

| Ego-Level Metric | All Interaction Network | Collaboration Interaction Network |
|---|---|---|
| In-Degree | 0.25 | 0.056 |
| Out-Degree | −0.081 | −0.120 |
| Betweenness Centrality | −0.012 | −0.064 |
| Closeness Centrality | 0.159 | 0.274 |
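For readers unfamiliar with the ego-level metrics named in the table, the sketch below computes two of them, closeness and betweenness centrality, on a small made-up directed network (in-degree and out-degree are simply counts of incoming and outgoing ties). The graph, the function names, and the particular definitions used (outward closeness as the reciprocal of mean distance to reachable nodes, unnormalized Brandes betweenness) are assumptions for illustration; network analysis tools may define or normalize these metrics differently, and this is not the study's own computation.

```python
from collections import deque


def bfs_distances(adj, source):
    """Shortest hop counts from source to every node reachable from it."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nxt in adj.get(node, ()):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def closeness(adj, nodes):
    """Outward closeness: reachable-node count divided by total distance."""
    result = {}
    for v in nodes:
        dist = bfs_distances(adj, v)
        total = sum(dist.values())
        result[v] = (len(dist) - 1) / total if total else 0.0
    return result


def betweenness(adj, nodes):
    """Unnormalized betweenness for a directed, unweighted graph (Brandes)."""
    score = {v: 0.0 for v in nodes}
    for s in nodes:
        order = []                       # nodes in order of discovery
        preds = {v: [] for v in nodes}   # predecessors on shortest paths
        sigma = {v: 0 for v in nodes}    # number of shortest paths from s
        sigma[s] = 1
        dist = {s: 0}
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj.get(v, ()):
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate dependencies from the farthest nodes back toward s.
        delta = {v: 0.0 for v in nodes}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    return score


# Made-up directed ties: D names A, A names B, B names C.
adj = {"A": ["B"], "B": ["C"], "D": ["A"]}
nodes = ["A", "B", "C", "D"]

close = closeness(adj, nodes)
between = betweenness(adj, nodes)
```

In this toy chain, A and B each sit on two shortest paths between other nodes, so they share the highest betweenness, while C names no one and therefore has an outward closeness of zero.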

| Quartile | H Indicator | Explanation |
|---|---|---|
| 1 | −0.01 | No bias |
| 2 | 0.03 | No bias |
| 3 | −0.07 | No bias |
| 4 | 0.25 | Slight heterophilic pair bias |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Reding, T.; Moore, C.; Pelton, J.A.; Edwards, S. Barriers to Change: Social Network Interactions Not Sufficient for Diffusion of High-Impact Practices in STEM Teaching. Educ. Sci. 2022, 12, 512. https://doi.org/10.3390/educsci12080512