Fostering Pre-Service Physics Teachers’ Pedagogical Content Knowledge Regarding Digital Media

Abstract

1. Introduction

1.1. Modelling and Measuring Pedagogical Content Knowledge of Pre-Service Science Teachers

1.2. Pedagogical Content Knowledge Regarding Digital Media

1.3. Learning Opportunities to Foster Pre-Service Teachers’ Digital-Media PCK

- Aligning theory and practice

- Using teacher educators as role models

- Reflecting on attitudes about the role of technology in education

- Learning technology by design

- Collaborating with peers

- Scaffolding authentic technology experiences

- Moving from traditional assessment to continuous feedback.

1.4. Aims and Research Questions

- To what extent can the developed test instrument be used to validly measure digital-media PCK?

- Does the participants’ measured digital-media PCK increase across the seminars at the three participating universities?

2. Materials and Methods

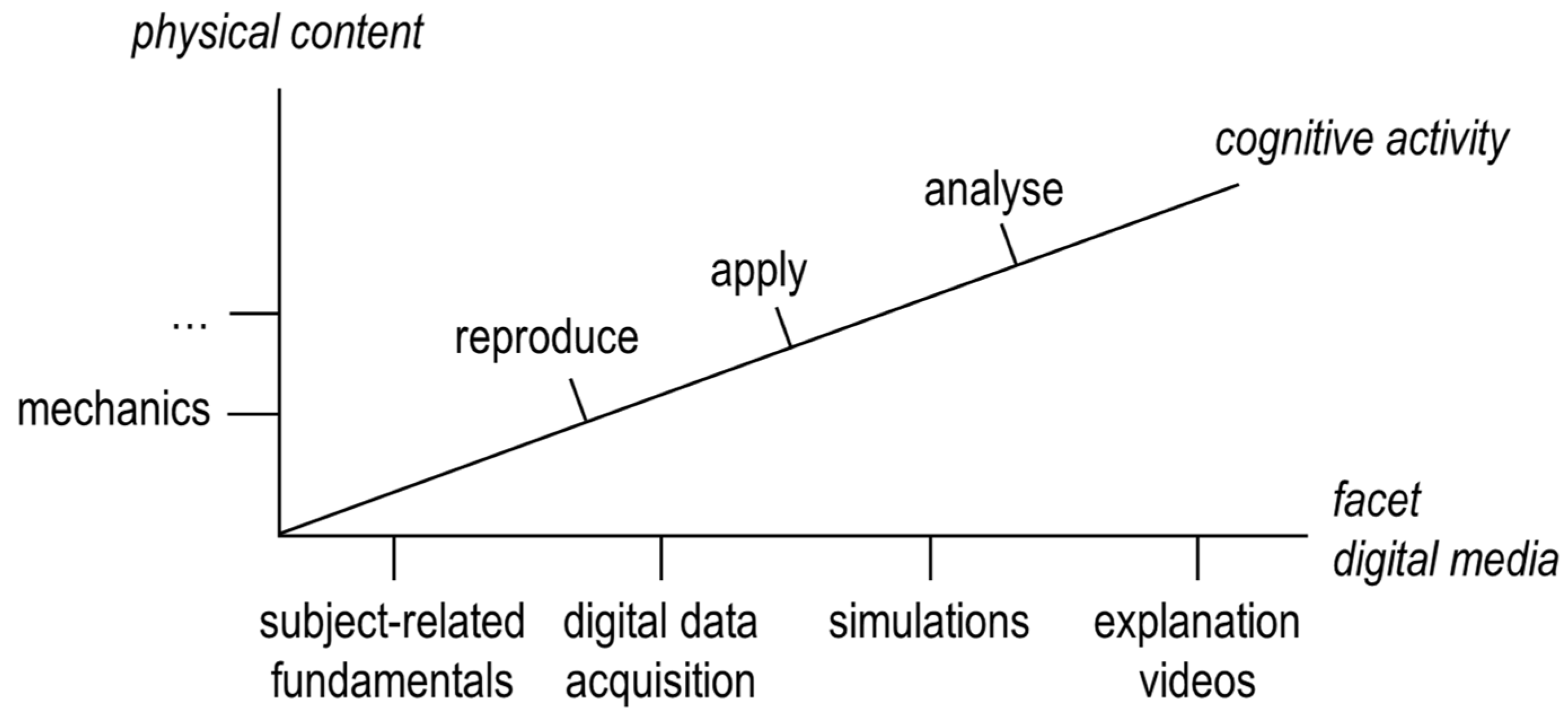

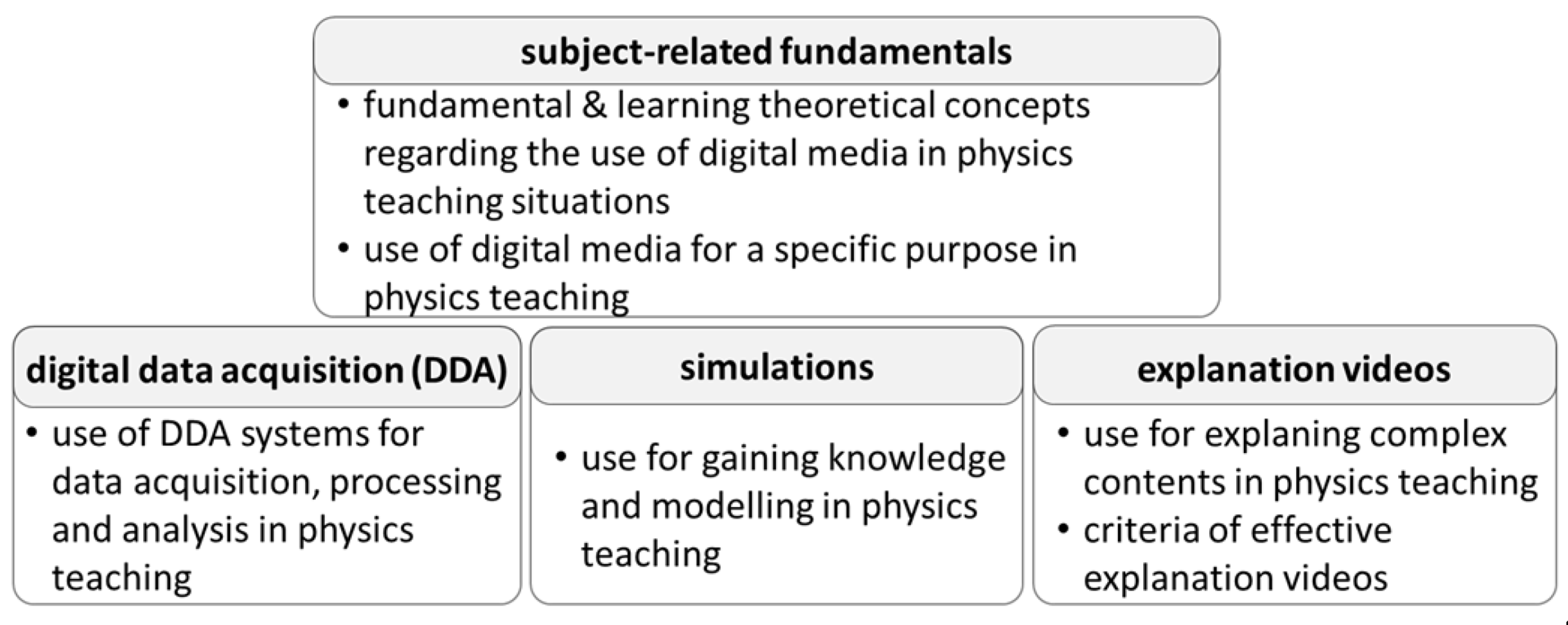

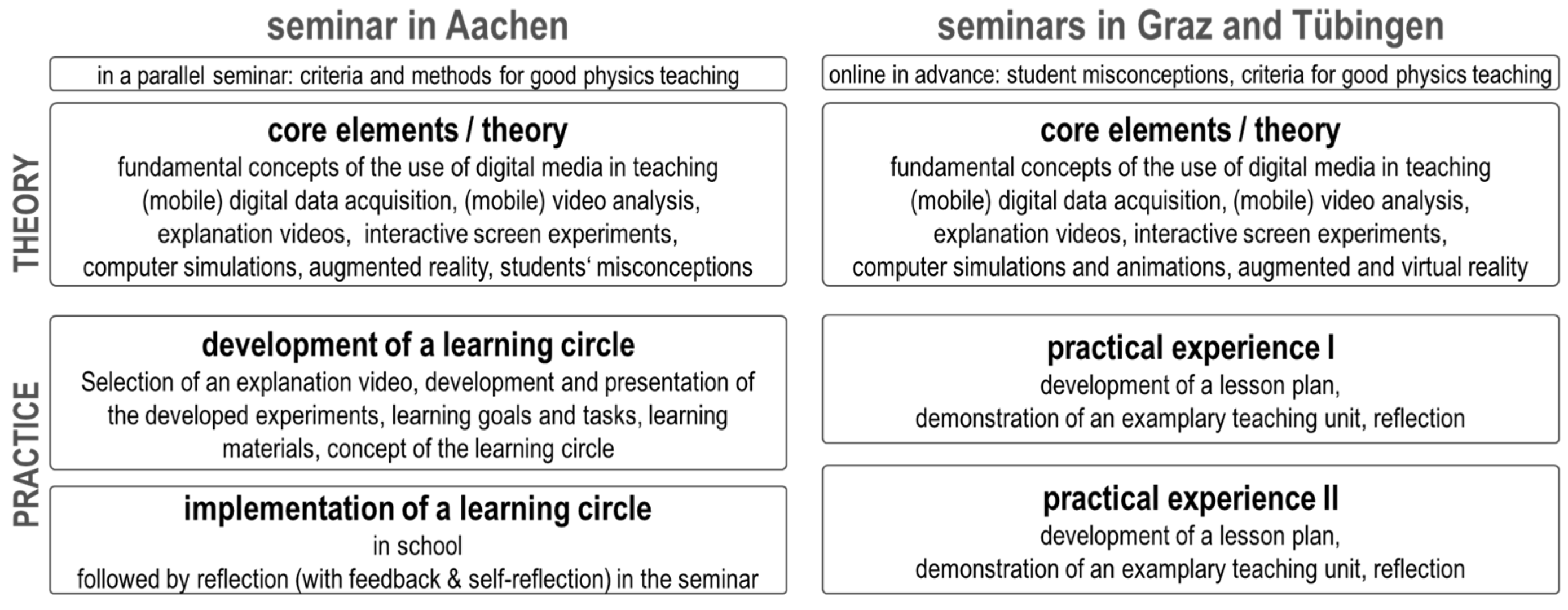

2.1. Development of the Teaching Concept

2.2. Development and Validation of the Knowledge Test Measuring Digital-Media PCK

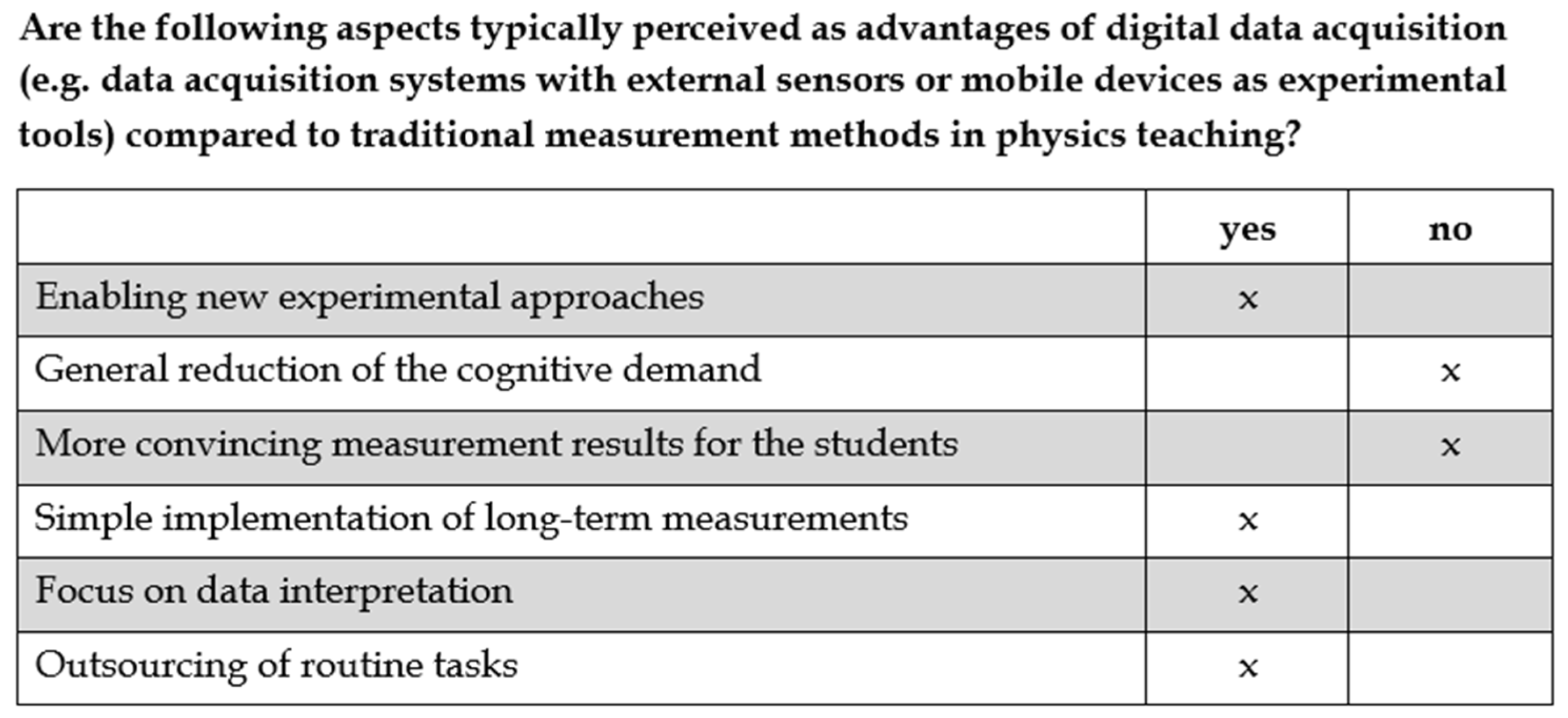

2.2.1. Test Development

2.2.2. Test Validation

2.2.3. Further Test Quality Criteria

2.3. Study Design of the Empirical Evaluation

3. Results

3.1. Teaching Concept

3.2. Test Validation

3.2.1. Delimitation from General Pedagogical Knowledge by Correlation Analysis

3.2.2. Discriminative Validity Aspect by Surveying Pre-Service Teachers of Other Subjects

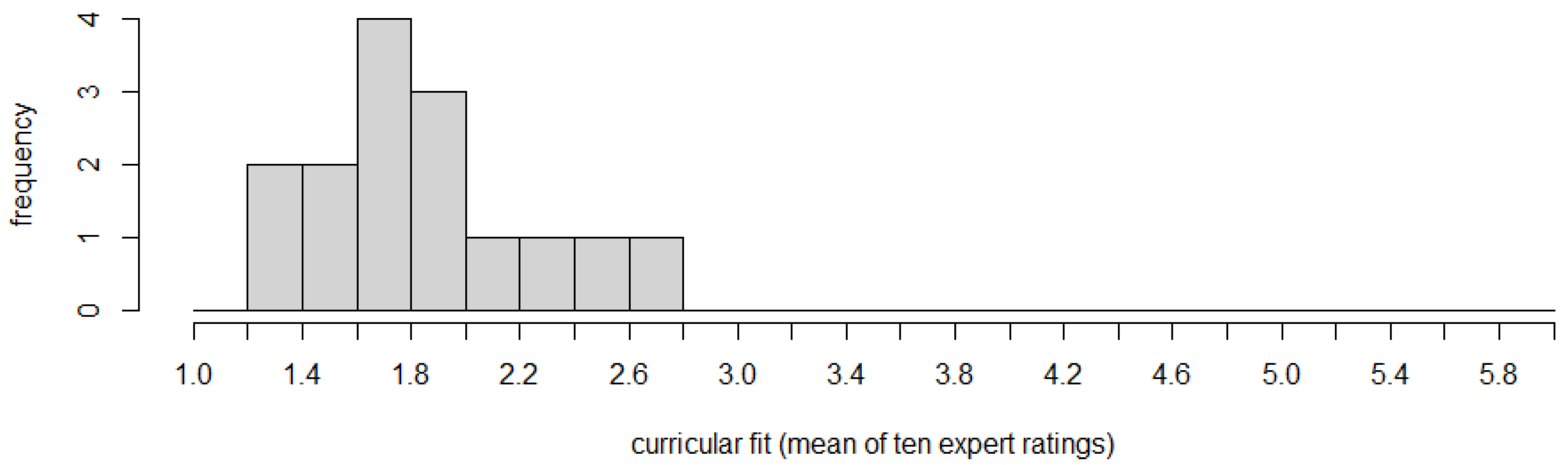

3.2.3. Curricular Validity Aspect by an Expert Survey

3.3. Further Test Quality Criteria

3.4. Empirical Evaluation of the Teaching Concept

4. Discussion

4.1. Limitations

4.2. Further Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Digital-Media PCK | General PCK (Excl. Digital Media) | PK |
|---|---|---|---|
| Digital-media PCK (α = 0.69) | − | | |
| General PCK (excl. digital media) (α = 0.67) | 0.46 ** (N = 43) | − | |
| PK (α = 0.74) | 0.32 ** (N = 105) | 0.34 * (N = 41) | − |
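
The correlations in the table above are computed between scales with moderate reliability (α between 0.67 and 0.74), so the observed values are likely attenuated by measurement error. As a rough illustration only, the sketch below applies Spearman's classical correction for attenuation to the tabulated values. The function name `disattenuated_correlation` is hypothetical, and this correction is an assumption of the sketch rather than an analysis reported by the authors.

```python
import math


def disattenuated_correlation(r_xy: float, alpha_x: float, alpha_y: float) -> float:
    """Spearman's correction for attenuation: r_xy / sqrt(alpha_x * alpha_y).

    r_xy    -- observed correlation between the two scales
    alpha_x -- reliability (here: Cronbach's alpha) of the first scale
    alpha_y -- reliability (here: Cronbach's alpha) of the second scale
    """
    if not (0 < alpha_x <= 1 and 0 < alpha_y <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(alpha_x * alpha_y)


# Values taken from the correlation table (illustrative use only).
pck_corrected = disattenuated_correlation(0.46, 0.69, 0.67)  # digital-media PCK vs. general PCK
pk_corrected = disattenuated_correlation(0.32, 0.69, 0.74)   # digital-media PCK vs. PK

print(f"digital-media PCK vs. general PCK: {pck_corrected:.2f}")
print(f"digital-media PCK vs. PK:          {pk_corrected:.2f}")
```

Even after this correction both values stay well below 1, which is consistent with treating digital-media PCK as related to, but distinguishable from, general PCK and PK.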

| Pre-Service Teachers | M | SD | Minimum | Maximum |
|---|---|---|---|---|
| Physics (N = 92) | 14.02 | 4.31 | 4 | 22 |
| Other subjects (N = 24) | 10.75 | 3.26 | 6 | 17 |
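
The size of the group difference in the table above can be estimated from the summary statistics alone. The following is a minimal sketch, assuming independent groups, that computes Cohen's d with a pooled standard deviation and a Welch t statistic with its approximate degrees of freedom. The function names are hypothetical, and the source does not state that the authors used these particular statistics.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1**2 / n1  # squared standard error of the first group mean
    v2 = sd2**2 / n2  # squared standard error of the second group mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# Summary statistics from the table: physics vs. other subjects.
physics = (14.02, 4.31, 92)
other = (10.75, 3.26, 24)

d = cohens_d(*physics, *other)
t, df = welch_t(*physics, *other)
print(f"Cohen's d = {d:.2f}, Welch t = {t:.2f}, df = {df:.1f}")
```

Welch's variant is chosen here because the two groups differ considerably in size and spread; with raw data, a library routine such as `scipy.stats.ttest_ind(..., equal_var=False)` would be the more usual route.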

| Digital-Media PCK | M | SD | Minimum | Maximum |
|---|---|---|---|---|
| Pre-test | 14.00 | 3.92 | 3 | 20 |
| Post-test | 15.57 | 4.46 | 6 | 22 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Große-Heilmann, R.; Riese, J.; Burde, J.-P.; Schubatzky, T.; Weiler, D. Fostering Pre-Service Physics Teachers’ Pedagogical Content Knowledge Regarding Digital Media. Educ. Sci. 2022, 12, 440. https://doi.org/10.3390/educsci12070440
