Assessing Algorithmic Thinking Skills in Relation to Age in Early Childhood STEM Education

Abstract

1. Introduction

2. Theoretical Framework

2.1. The Environmental Study Course

2.2. Computational Thinking

2.2.1. Computational Thinking and Programming

2.2.2. Thinking Computationally in Environmental Science

2.2.3. Algorithmic Thinking

2.2.4. Assessing Algorithmic Thinking

2.3. Game-Based Learning

Jigsaw Puzzles

3. Materials and Methods

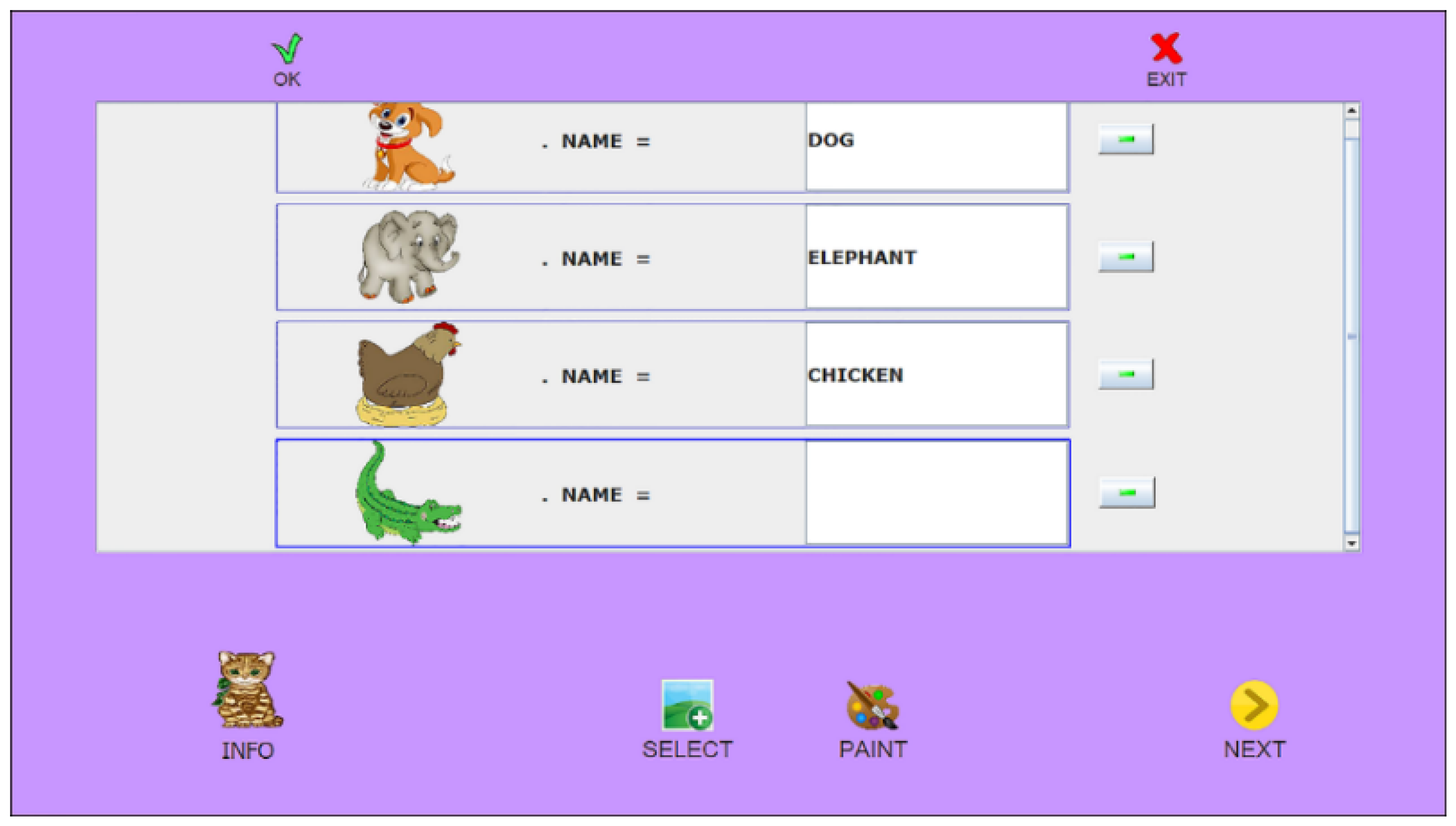

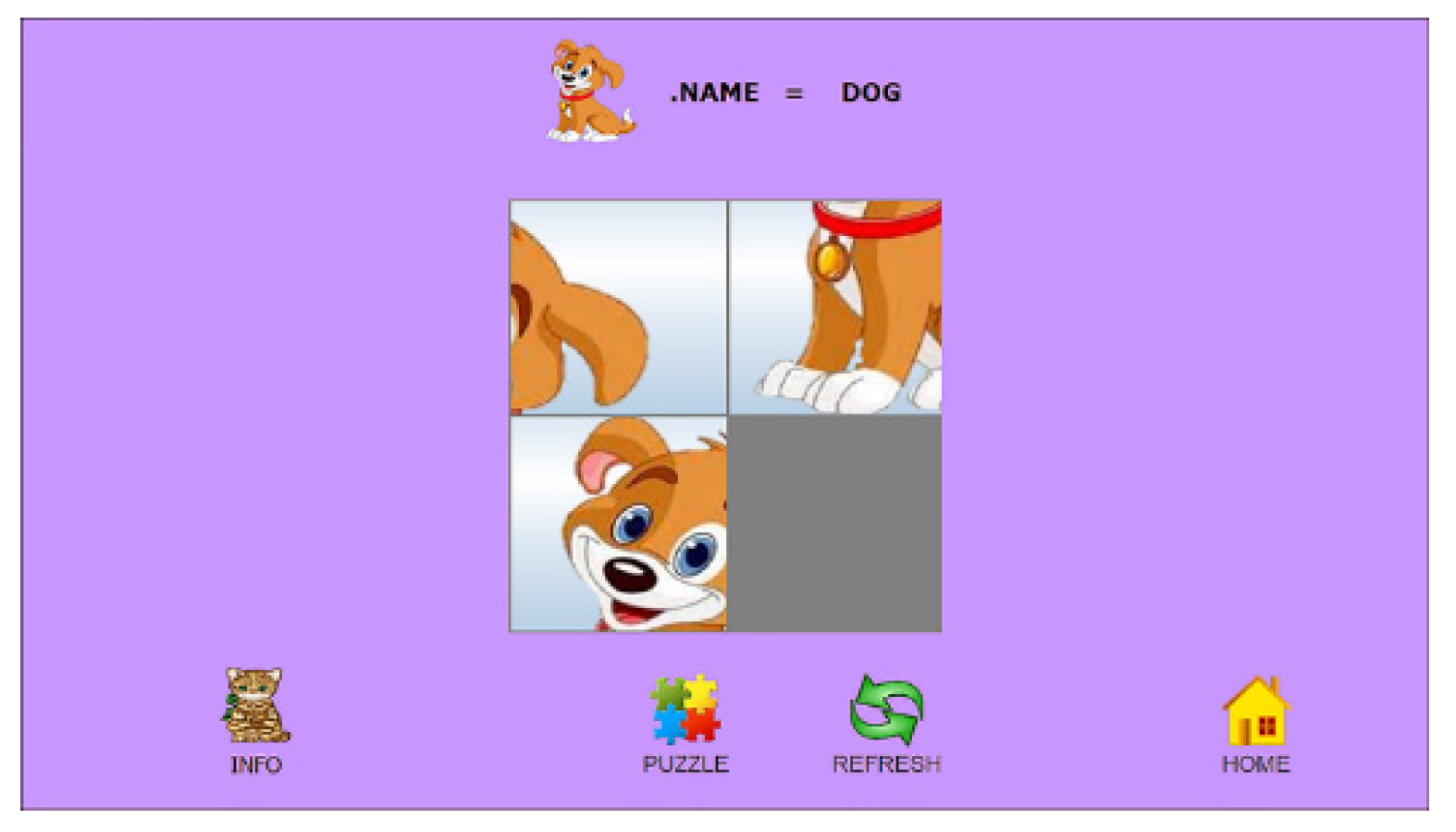

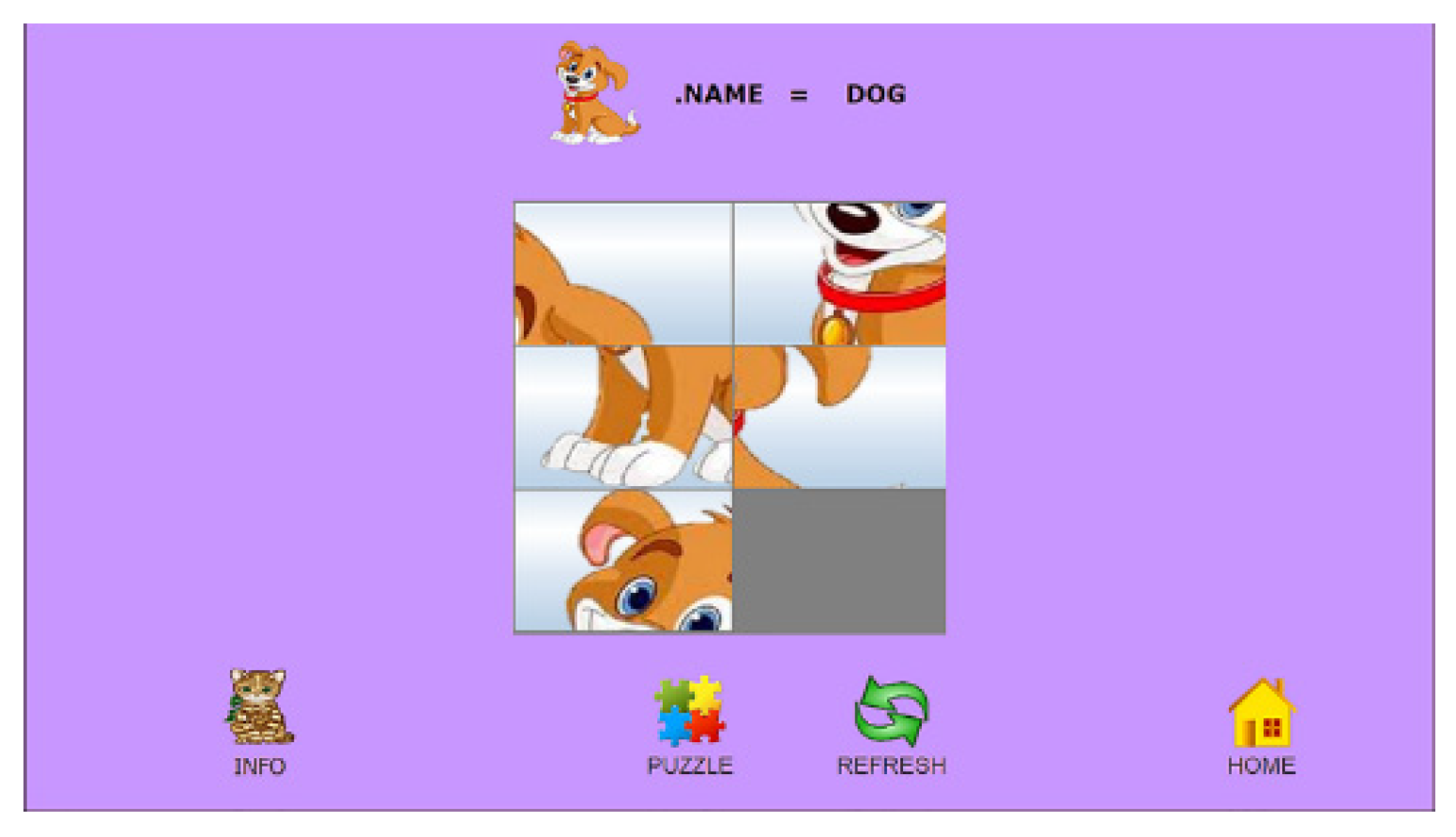

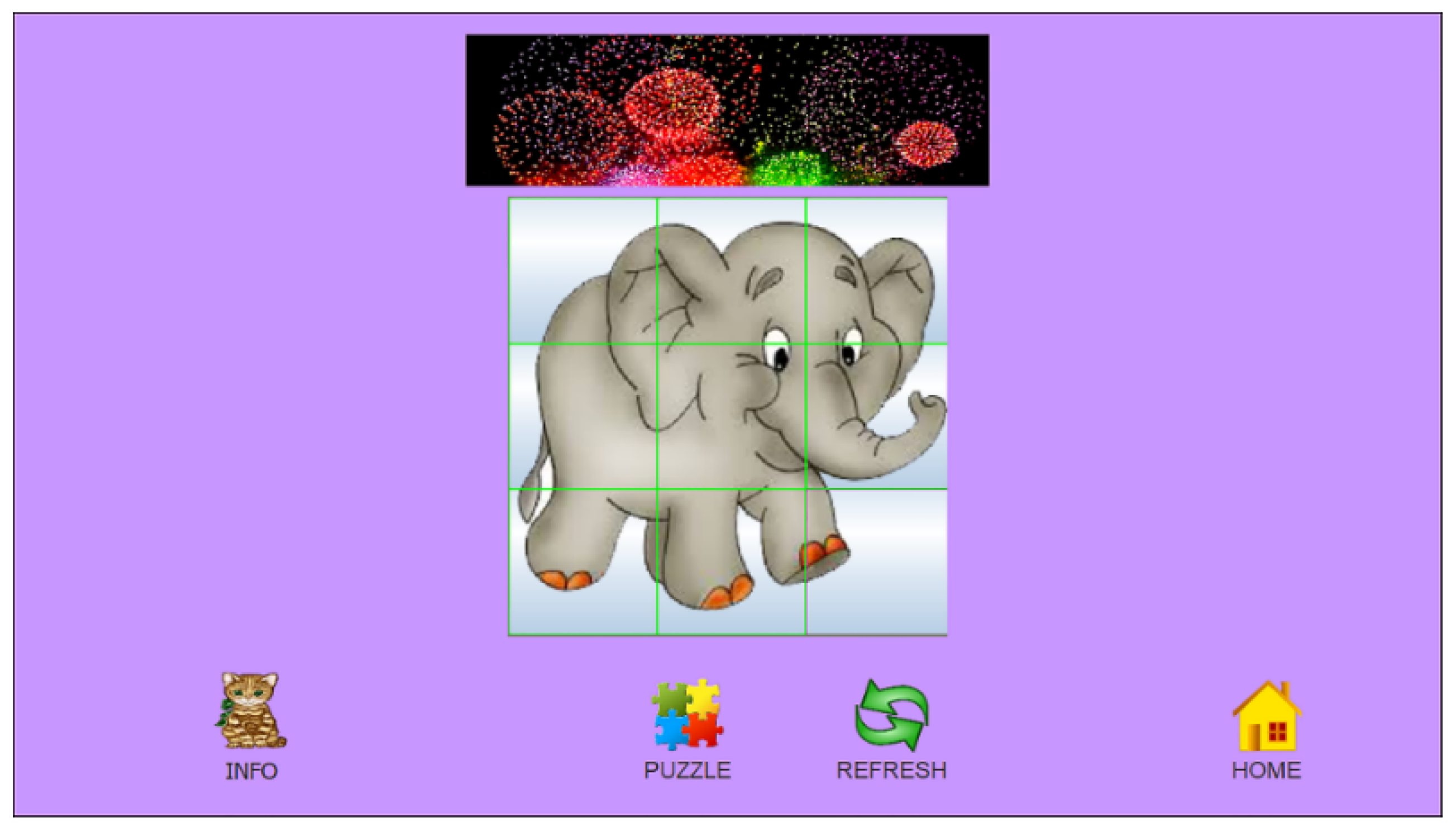

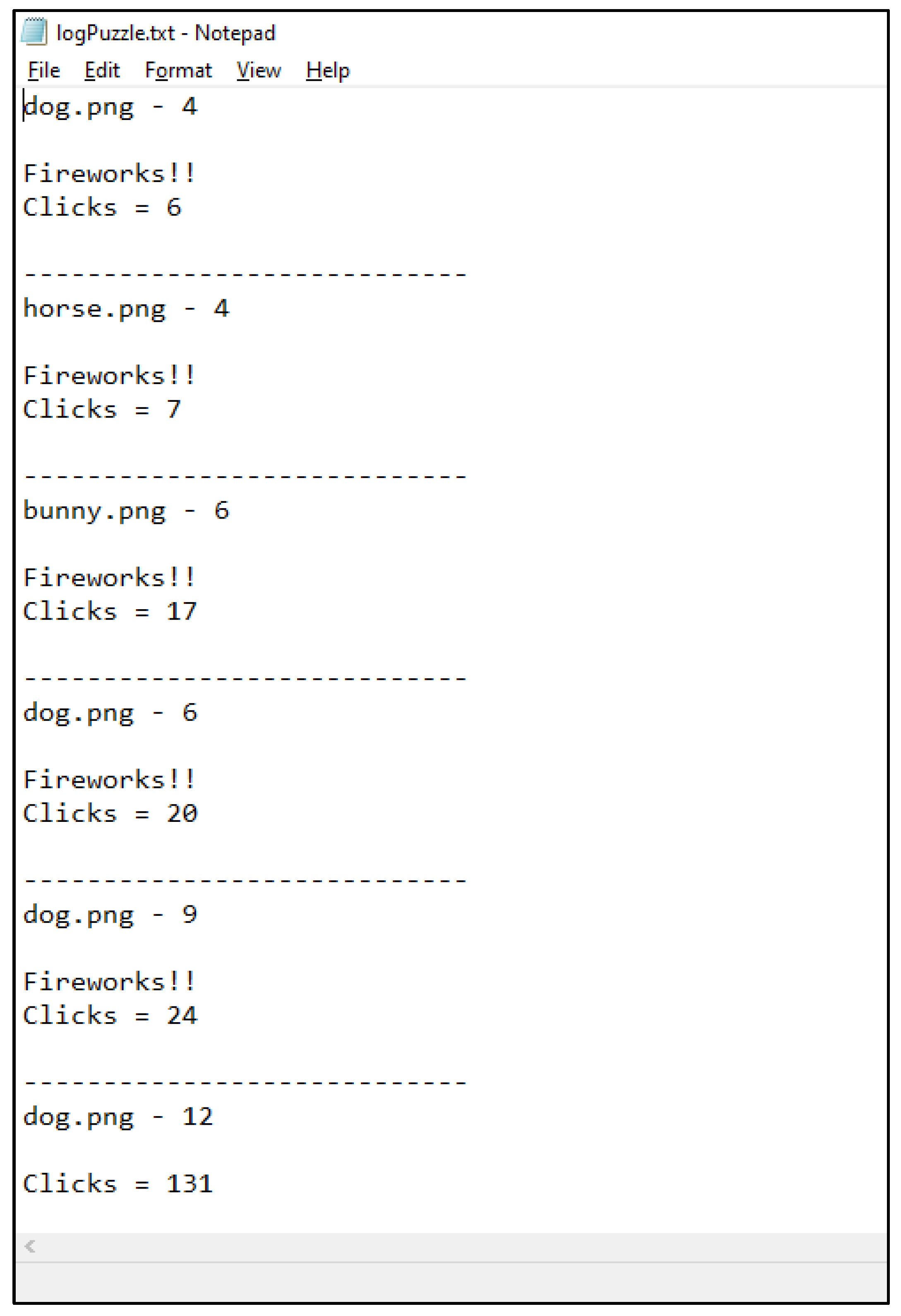

3.1. PhysGramming

3.2. Research Sample

3.3. Validation

4. Results

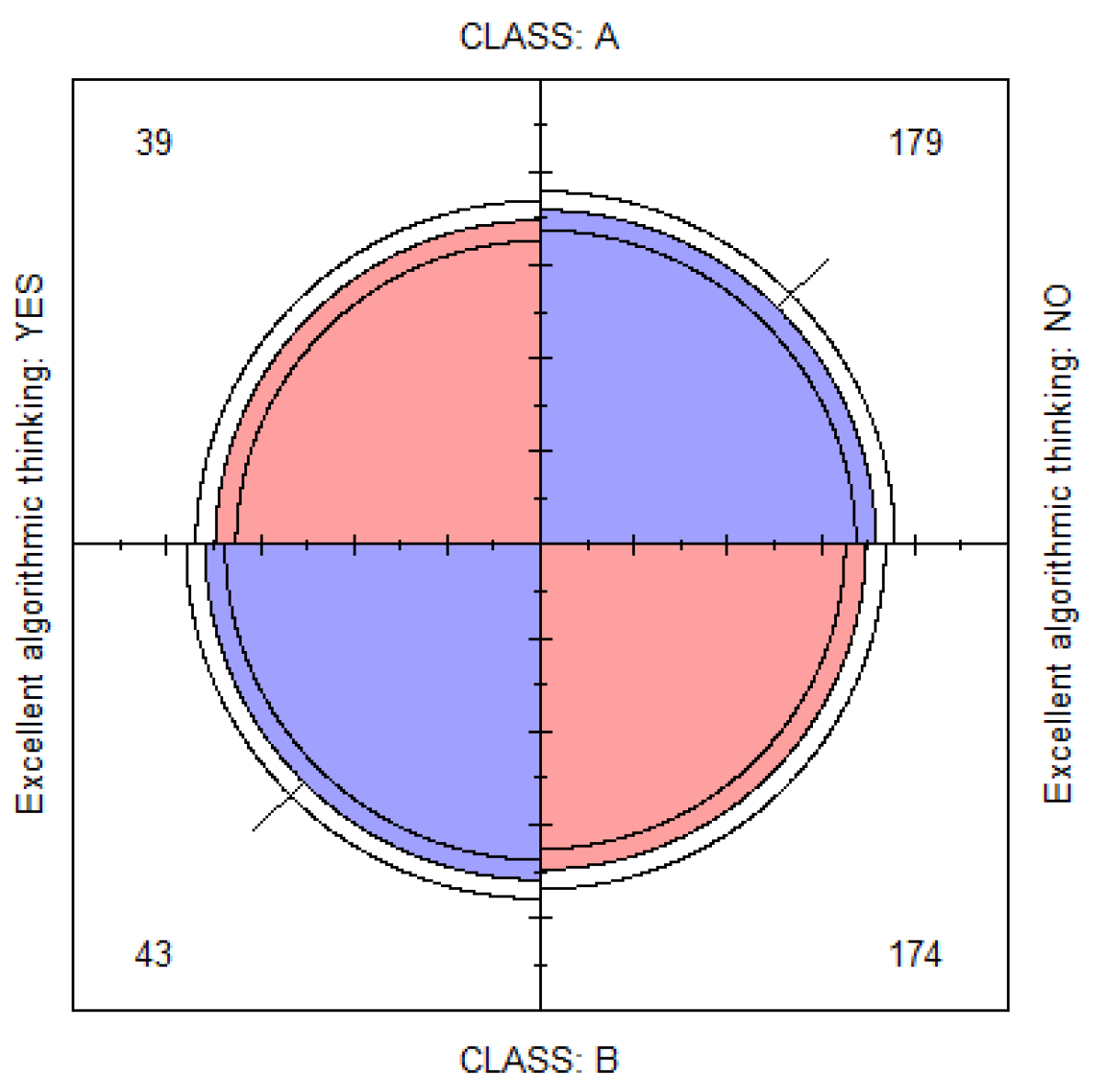

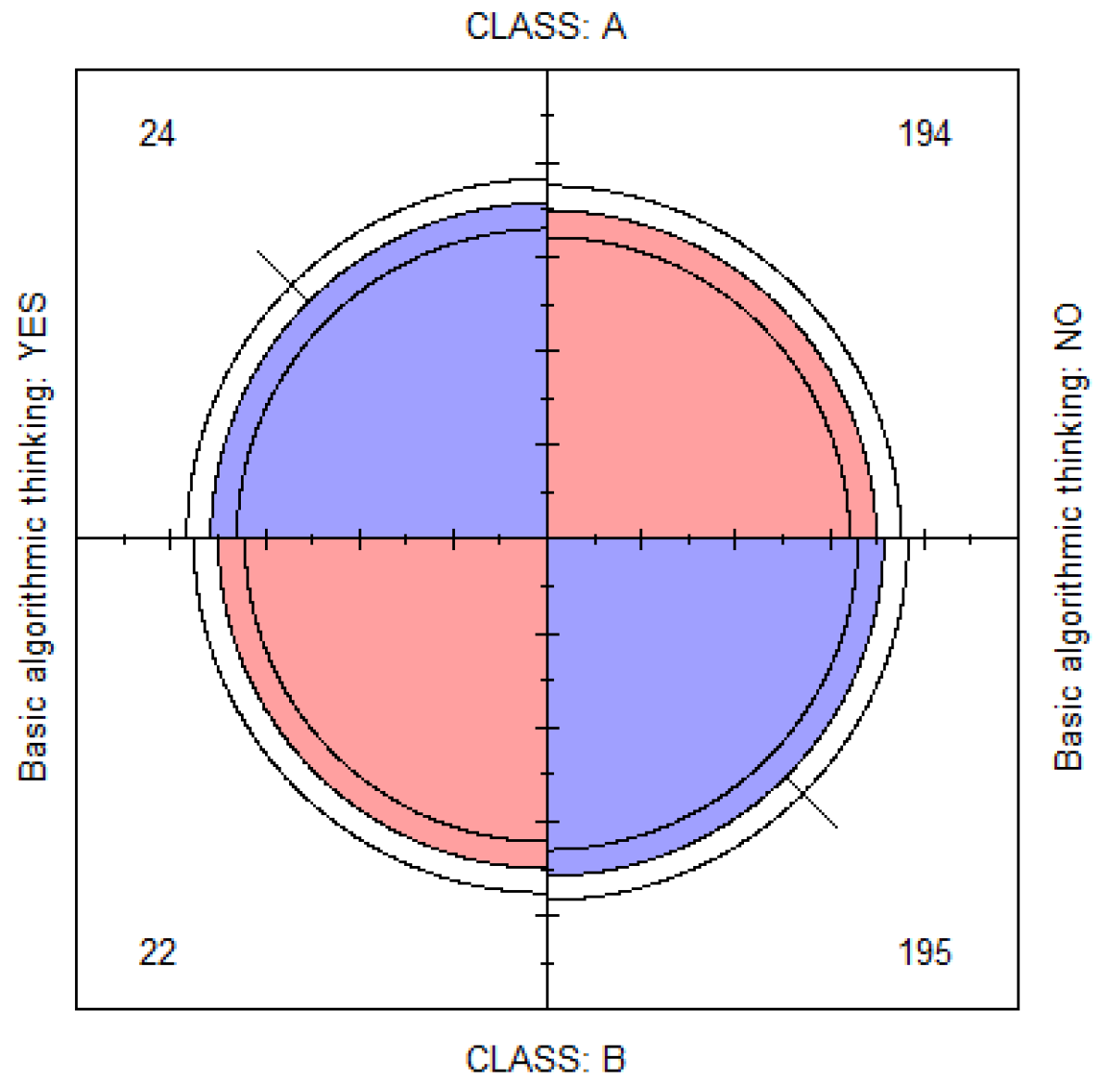

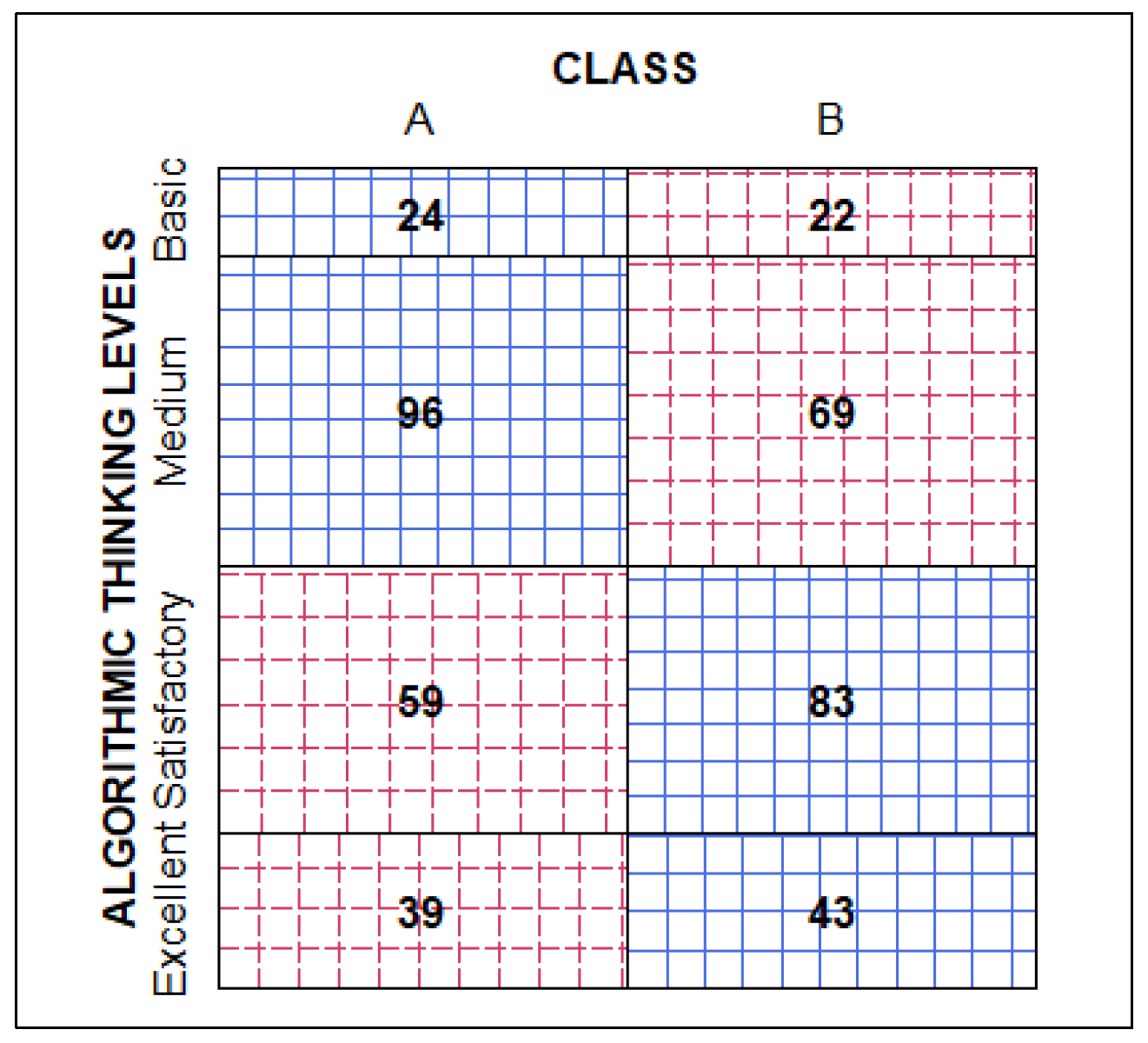

4.1. Examining the Hypothesis Set

4.2. Odds Ratio

4.3. Data Visualization

4.4. Ordinal Logistic Regression Analysis

5. Discussion

5.1. The Rationale of the Study

5.2. Limitations and Perspectives

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Algorithmic Thinking Level | Most Difficult Puzzle Solved |
|---|---|
| Basic | Four-piece puzzle |
| Medium | Six-piece puzzle |
| Satisfactory | Nine-piece puzzle |
| Excellent | Twelve-piece puzzle |

| Algorithmic Thinking Level | First Grade | Second Grade | Sum |
|---|---|---|---|
| Excellent | 39 | 43 | 82 |
| Satisfactory | 59 | 83 | 142 |
| Medium | 96 | 69 | 165 |
| Basic | 24 | 22 | 46 |
| Sum | 218 | 217 | 435 |
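
The counts in the table above are enough to compute an odds ratio by hand. The sketch below is only an illustration: it assumes a split of the four levels into "satisfactory or excellent" versus "basic or medium", which is not necessarily the comparison reported in Section 4.2, and the variable names are hypothetical.

```python
# Counts per algorithmic thinking level as (first grade, second grade),
# copied from the contingency table above.
counts = {
    "excellent": (39, 43),
    "satisfactory": (59, 83),
    "medium": (96, 69),
    "basic": (24, 22),
}

first_total = sum(first for first, _ in counts.values())
second_total = sum(second for _, second in counts.values())

# Assumption for illustration: the two upper levels form the "high" group.
high_levels = ("excellent", "satisfactory")
first_high = sum(counts[level][0] for level in high_levels)
second_high = sum(counts[level][1] for level in high_levels)

# Odds of being in the "high" group within each grade.
odds_first = first_high / (first_total - first_high)
odds_second = second_high / (second_total - second_high)

# Odds ratio of second grade relative to first grade.
odds_ratio = odds_second / odds_first

print(f"totals: first={first_total}, second={second_total}")
print(f"odds ratio (second vs. first grade): {odds_ratio:.3f}")
```

A different grouping of the levels would give a different ratio, so the value depends on the assumed split.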

| | Value | Std. Error | t-value |
|---|---|---|---|
| Coefficients: | | | |
| Grade Level | −0.2981 | 0.1756 | −1.698 |
| Intercepts: | | | |
| basic\|excellent | −2.2954 | 0.1836 | −12.5052 |
| excellent\|satisfactory | −1.0354 | 0.1425 | −7.2649 |
| satisfactory\|medium | 0.3402 | 0.1335 | 2.5480 |
| Residual Deviance: 1115.307 | | | |
| AIC: 1123.307 | | | |

| Grade Level | Excellent | Satisfactory | Medium | Basic |
|---|---|---|---|---|
| First Grade | 0.171 | 0.322 | 0.416 | 0.092 |
| Second Grade | 0.204 | 0.331 | 0.346 | 0.119 |
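
The predicted probabilities above can be recovered from the regression output. The sketch below assumes a proportional-odds parameterization of the form logit P(Y ≤ j) = ζ_j − β·x (the convention used, for example, by R's `MASS::polr`), with first grade coded as x = 0 and second grade as x = 1, and with the levels ordered as the cut-points are printed (basic, excellent, satisfactory, medium). These coding choices are assumptions, as are the function and variable names; under them, the results round to the tabulated values.

```python
import math


def logistic(z):
    """Inverse logit: maps a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


# Values copied from the regression table.
BETA_GRADE = -0.2981                   # coefficient for Grade Level
CUTPOINTS = [-2.2954, -1.0354, 0.3402]  # basic|excellent, excellent|satisfactory, satisfactory|medium
LEVELS = ["basic", "excellent", "satisfactory", "medium"]  # order as printed in the intercepts


def predicted_probabilities(x):
    """Per-level probabilities for grade indicator x (assumed 0 = first, 1 = second)."""
    # Cumulative probabilities P(Y <= j); the last level always has cumulative 1.
    cumulative = [logistic(zeta - BETA_GRADE * x) for zeta in CUTPOINTS] + [1.0]
    probabilities = []
    previous = 0.0
    for value in cumulative:
        probabilities.append(value - previous)  # difference of adjacent cumulatives
        previous = value
    return dict(zip(LEVELS, probabilities))


first_grade = predicted_probabilities(0)
second_grade = predicted_probabilities(1)

for name, probs in (("First Grade", first_grade), ("Second Grade", second_grade)):
    print(name, {level: round(p, 3) for level, p in probs.items()})
```

Under the same assumptions, `math.exp(BETA_GRADE)` gives the multiplicative change in the cumulative odds associated with moving from first to second grade.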

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kanaki, K.; Kalogiannakis, M. Assessing Algorithmic Thinking Skills in Relation to Age in Early Childhood STEM Education. Educ. Sci. 2022, 12, 380. https://doi.org/10.3390/educsci12060380