Exploring (Collaborative) Generation and Exploitation of Multiple Choice Questions: Likes as Quality Proxy Metric

Abstract

1. Introduction

2. Materials and Methods

2.1. Generating MCQs: The Reading Game

2.2. Answering MCQs: QuizUp

2.3. The Experimental Setting

3. Results

3.1. Stage 1: MCQ Generation Using the Reading Game (RG)

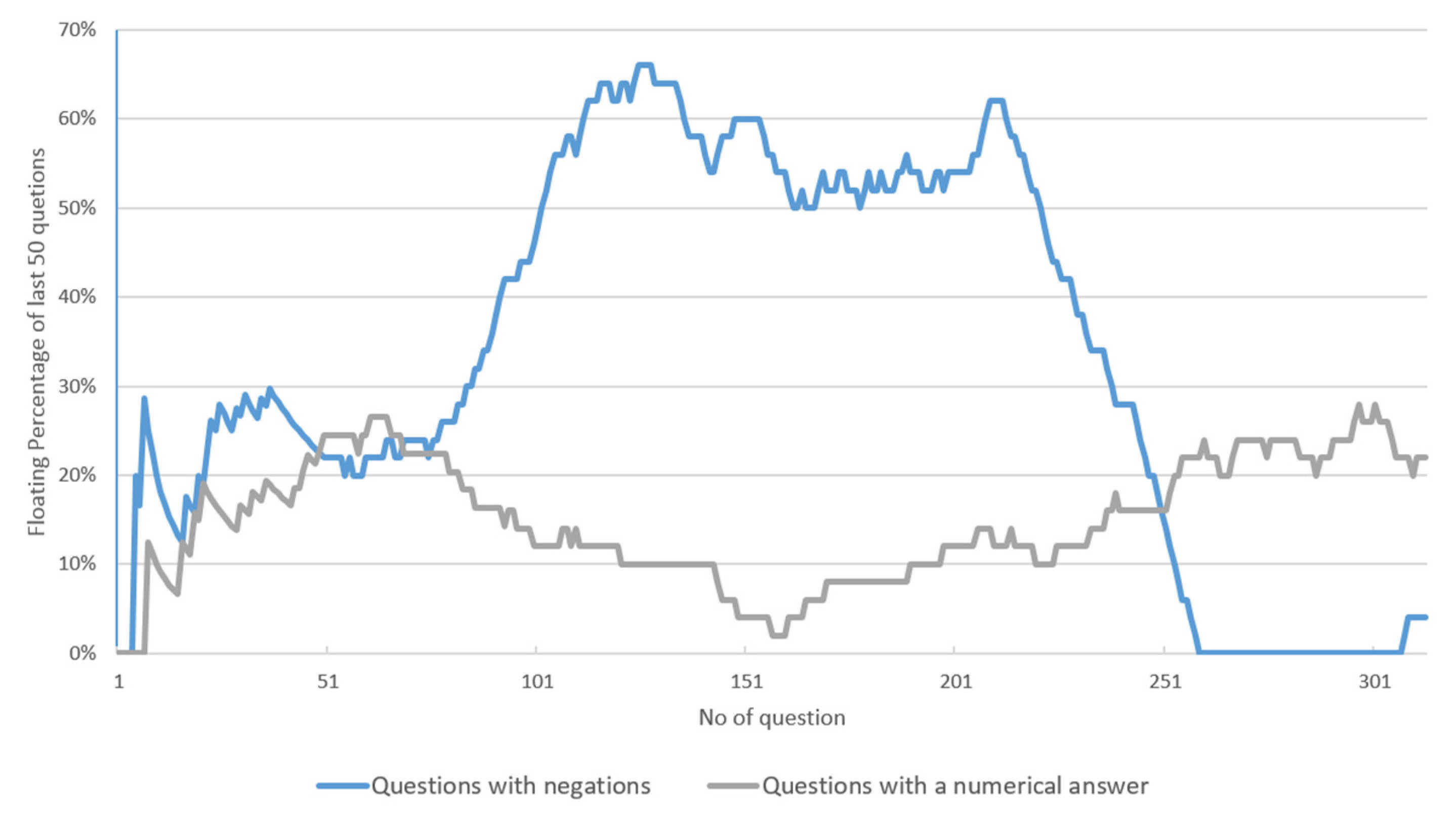

3.1.1. Usage Data

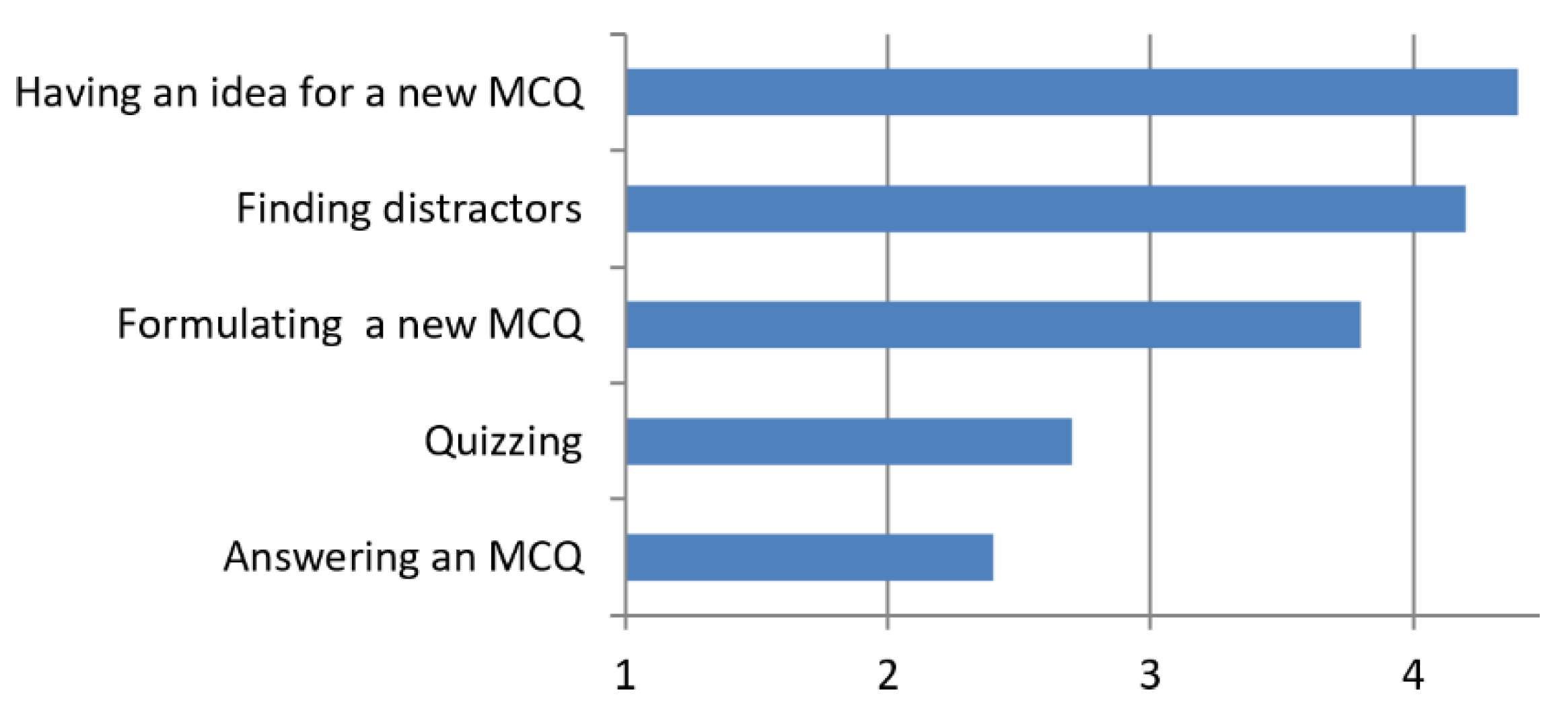

3.1.2. Students’ Perceptions

3.1.3. Analysis

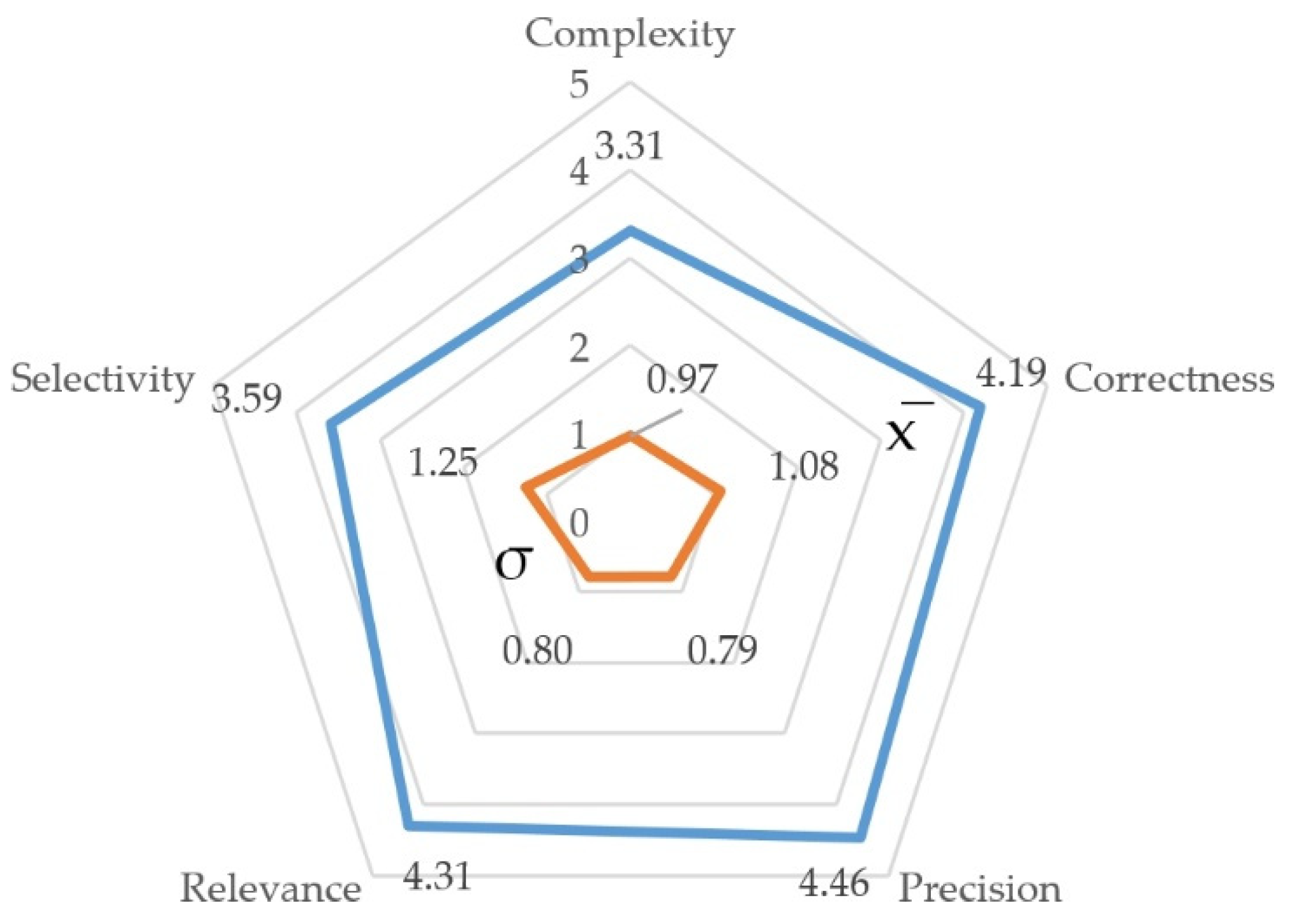

3.1.4. Analysis of the MCQs Generated

3.2. Stage 2: The Reading Game and QuizUp in a Capital Budgeting Course

3.2.1. Motivation and Method

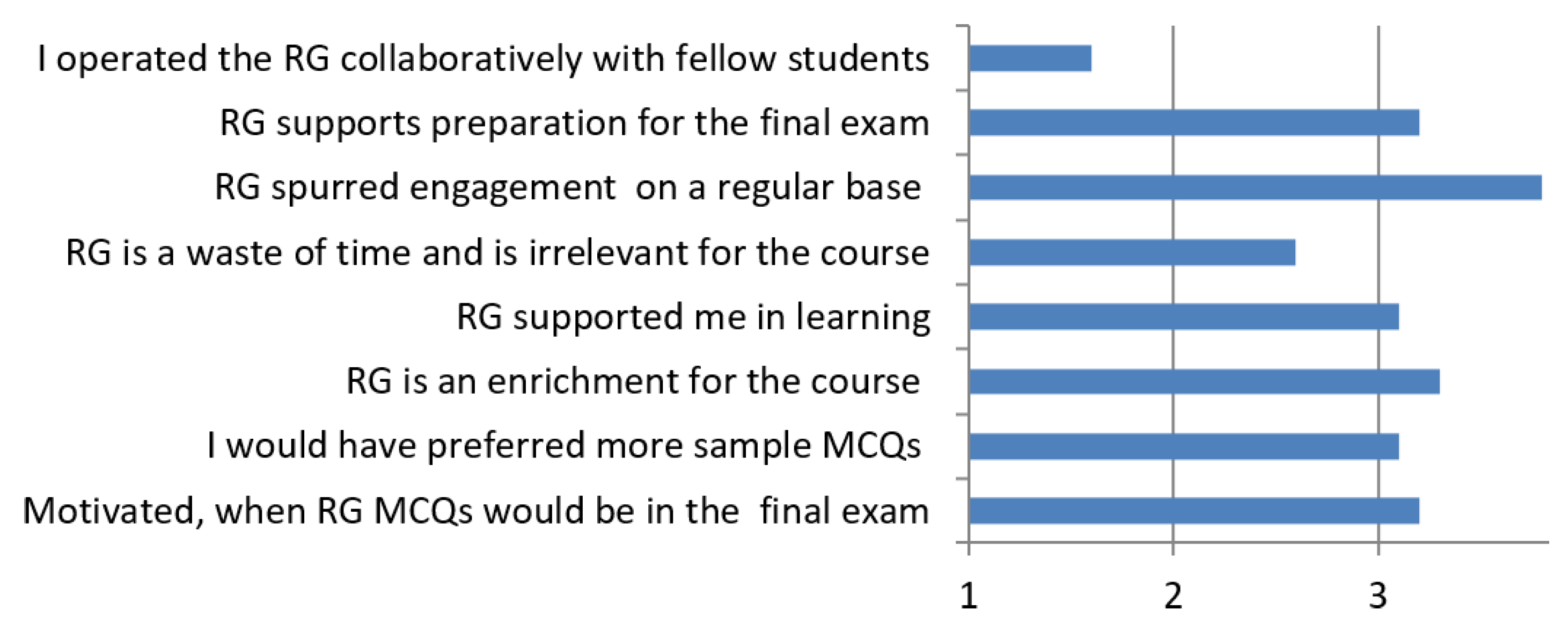

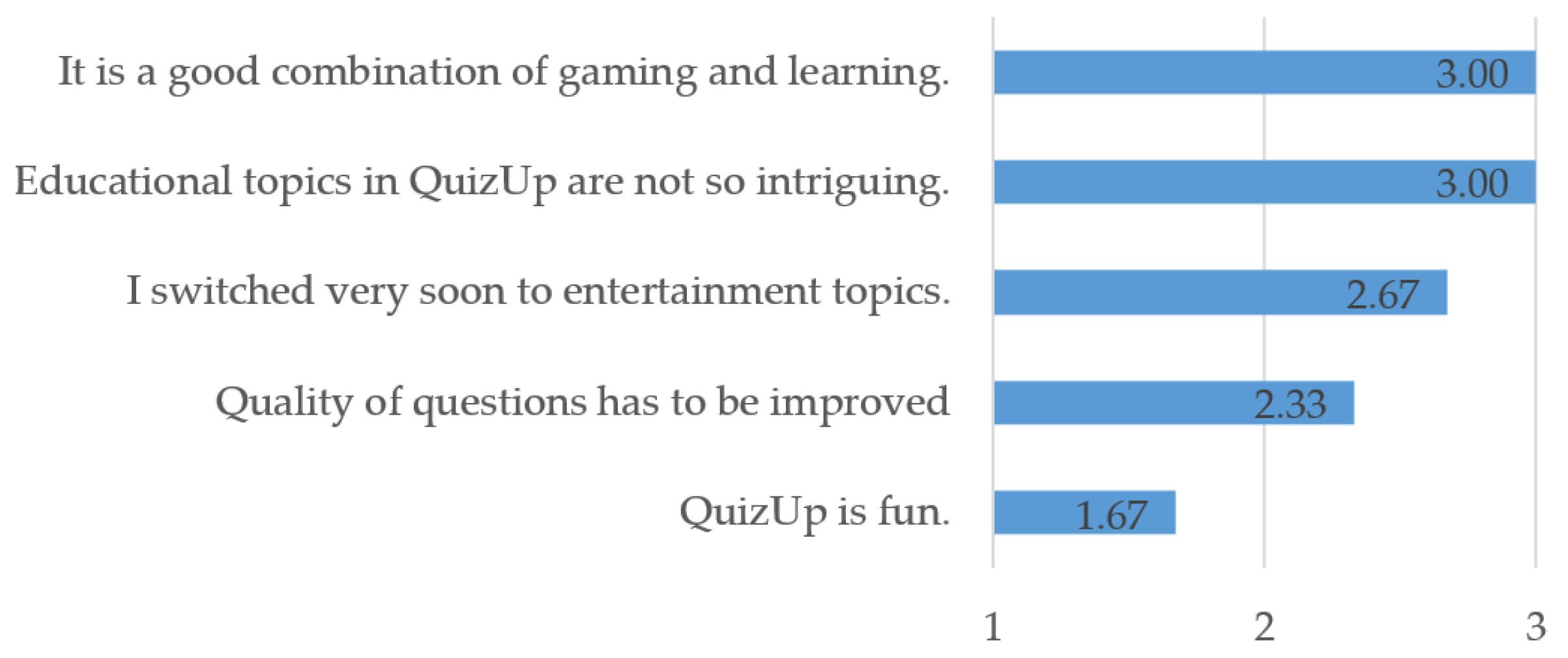

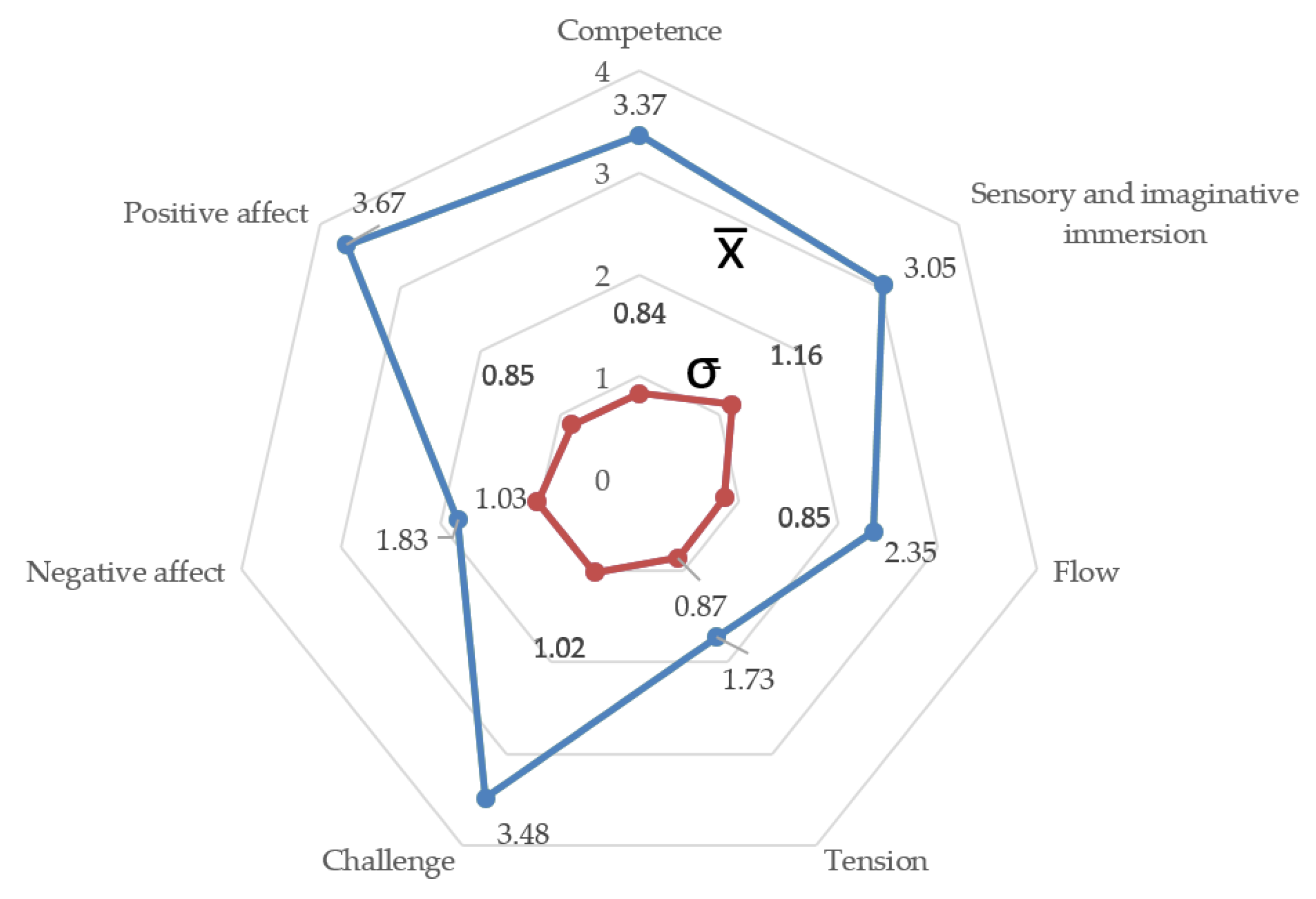

3.2.2. Questionnaire

4. Discussion

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Required | Min | Max | Mean |
|---|---|---|---|---|
| MCQs answered | 50 | 77 | 502 | 239.9 |
| MCQs generated | 10 | 11 | 17 | 14.3 |

| Data (Variable) | Description |
|---|---|
| MCQs answered (A) | Number of MCQs answered in RG |
| MCQs generated (B) | Number of MCQs generated in RG |
| Points (C) | Points in RG; this variable is derived from variables A and B. |
| Performance in online pretests (D) | Percentage of correctly answered MCQs |
| No. of mock tests completed (E) | Between the last lecture of the course and the final exam, students could practice the MCQs of the MCQ pool in mock tests, each consisting of 5 randomly selected MCQs. |
| Final exam results (MCQs) (F) | In the final exam, students had to answer MCQs that were set in line with those of the MCQ pool. |
| Final exam result (Calculations) (G) | In the second part of the final exam, students had to solve calculation tasks. |

| Variable 1 | Variable 2 | Correlation Coefficient |
|---|---|---|
| F (Final exam results (MCQs)) | E (No. of mock tests completed) | 0.68 |
| F (Final exam results (MCQs)) | A (MCQs answered) | 0.48 |
| F (Final exam results (MCQs)) | C (Points) | 0.53 |
| F (Final exam results (MCQs)) | A (MCQs answered) + E (No. of mock tests completed) | 0.79 |
| A (MCQs answered) | B (MCQs generated) | 0.58 |
| G (Final exam result (Calculations)) | B (MCQs generated) | −0.60 |
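
The coefficients above relate pairs of per-student variables. As a minimal sketch, assuming they are Pearson correlation coefficients (the table does not name the coefficient type), the computation for one pair could look as follows. The function name `pearson_r` and all data values are hypothetical and invented for illustration; they are not the study's data. The combined row (A + E) is not reproduced here because the table does not state how the two variables were combined.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sums of squared deviations
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Hypothetical per-student values (assumption: one entry per student)
mcqs_answered = [77, 120, 240, 310, 502]   # variable A
mock_tests = [1, 3, 2, 6, 8]               # variable E
exam_mcq_score = [40, 55, 60, 75, 90]      # variable F

r_f_a = pearson_r(exam_mcq_score, mcqs_answered)
r_f_e = pearson_r(exam_mcq_score, mock_tests)
print(f"r(F, A) = {r_f_a:.2f}")
print(f"r(F, E) = {r_f_e:.2f}")
```

With small course cohorts, a coefficient computed this way is sensitive to individual students, so it is best read as descriptive rather than as evidence of a causal effect.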

| Dimension | Description Including Guiding Questions for the Assessment |
|---|---|
| Precision | The MCQ is precise and comprehensible. Questions: |
| Correctness | Question stem, correct answers and distractors are technically correct. Questions: |
| Relevance | The MCQ’s content is relevant for the technical domain. Question: |
| Complexity | The knowledge given by the MCQ is complex. Questions: |
| Selectivity | The distractors are well selected. Questions: |

| Dimension | r (Dimension, Karmascore) |
|---|---|
| Complexity | 0.34 |
| Correctness | 0.25 |
| Precision | 0.17 |
| Relevance | 0.51 |
| Selectivity | −0.02 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Söbke, H. Exploring (Collaborative) Generation and Exploitation of Multiple Choice Questions: Likes as Quality Proxy Metric. Educ. Sci. 2022, 12, 297. https://doi.org/10.3390/educsci12050297