Abstract

The Cleveland Clinic Lerner College of Medicine of Case Western Reserve University (CCLCM) was created in 2004 as a 5-year undergraduate medical education program with a mission to produce future physician-investigators. CCLCM’s assessment system aligns with the principles of programmatic assessment. The curriculum is organized around nine competencies, where each competency has milestones that students use to self-assess their progress and performance. Throughout the program, students receive low-stakes feedback from a myriad of assessors across courses and contexts. With support of advisors, students construct portfolios to document their progress and performance. A separate promotion committee makes high-stakes promotion decisions after reviewing students’ portfolios. This case study describes a systematic approach to provide both student and faculty professional development essential for programmatic assessment. Facilitators, barriers, lessons learned, and future directions are discussed.

1. Introduction

The need for multiple sources of assessment evidence, and for infrastructure to support programmatic assessment, is by now well established [1,2,3]. Professional development of faculty and learners has received far less attention in the literature. In this case study, we describe our approach to programmatic assessment at the Cleveland Clinic Lerner College of Medicine (CCLCM) and then focus specifically on professional development activities to promote and sustain programmatic assessment as an educational innovation.

2. Context of Case

The Cleveland Clinic Foundation in Cleveland, Ohio, collaborated with Case Western Reserve University (CWRU) School of Medicine in 2002 to create a new 5-year undergraduate medical education program within CWRU to address growing concerns about the national shortage of physician investigators in the United States [4,5,6]. Additionally, CCLCM faculty desired an assessment system that would promote reflective practice and self-directed learning [7]. The CCLCM curriculum is organized around nine competencies that map to the Accreditation Council for Graduate Medical Education (ACGME) competencies as well as CCLCM’s own mission-specific competencies (research and scholarship, personal and professional development, and reflective practice). Students do not receive grades for courses, clerkships, or electives. Instead, they use portfolios to document their progress and performance. The first class of students matriculated in 2004. Each class has 32 students, for an overall student body of 160.

3. Motives Underlying Implementation of Programmatic Assessment

Though the CCLCM program was created within a degree-granting institution (CWRU), the new medical school program was designed de novo, without having to adhere to assessment practices common within our parent institution. This flexibility provided time to contemplate different curricular and assessment models, plan faculty retreats, and consult with experts from other institutions. At one retreat, faculty described their negative experiences during medical school where competitive learning environments and high-stakes exams did not instill self-directed learning skills essential for success during residency and clinical practice. In subsequent retreats, faculty created guiding principles for the assessment system [7], where the primary focus was on assessment for learning [8,9]. Although we did not recognize it at the time, CCLCM’s assessment principles and subsequent assessment system mapped to the core tenets of programmatic assessment [1,2,3,10]. For instance, we adopted a competency-based framework for all assessments, created different types of assessments to provide learners with meaningful feedback, implemented both low- and high-stakes decision points to document learner progress, assigned coaches to help learners process feedback, and established a separate faculty committee to make student promotion decisions.

4. Description of the Assessment Program

CCLCM’s educational program features several components of programmatic assessment intentionally designed to encourage students to take personal ownership for their learning. The curriculum is organized around nine competencies, where each competency has milestones students use to document their progress and performance. All milestones map directly to instructional activities and authentic learning experiences that increase in difficulty throughout the program.

Throughout the five-year program, students receive low-stakes feedback from a myriad of assessors (e.g., research advisors, problem-based learning (PBL) facilitators, longitudinal clinic preceptors, communication skills preceptors, and peers) across courses and contexts (e.g., classroom, clinical settings, research laboratories, and objective structured clinical exams). All of these assessments are formative and narrative. Weekly assessments for medical knowledge in years 1–2 are also formative and low-stakes [11]. Students are not required to take National Board of Medical Examiners (NBME) subject exams during core clerkships. However, students must pass national medical licensure exams (USMLE Step 1 and Step 2 CK) as a graduation requirement. Assessors complete online assessments which are automatically uploaded into the evidence dashboard of CCLCM’s electronic portfolio system for students to access immediately. This rich, multi-source feedback provides the foundation for CCLCM’s assessment system. We now describe the main components of CCLCM’s programmatic assessment system.

Physician advisors. Upon entry into the program, each student is assigned a physician advisor (PA) who helps the student navigate the assessment system. PAs meet with students regularly (typically monthly in years 1–2 and less frequently in years 3–5) to aid them in processing feedback, prioritizing learning needs, developing learning plans, and constructing portfolios. PAs also play a pivotal role in assisting students with developing strategies to negotiate a unique learning environment without high-stakes examinations or grades [12]. PAs meet weekly as a committee to discuss their students’ progress, receive updates on the curriculum, and discuss available resources.

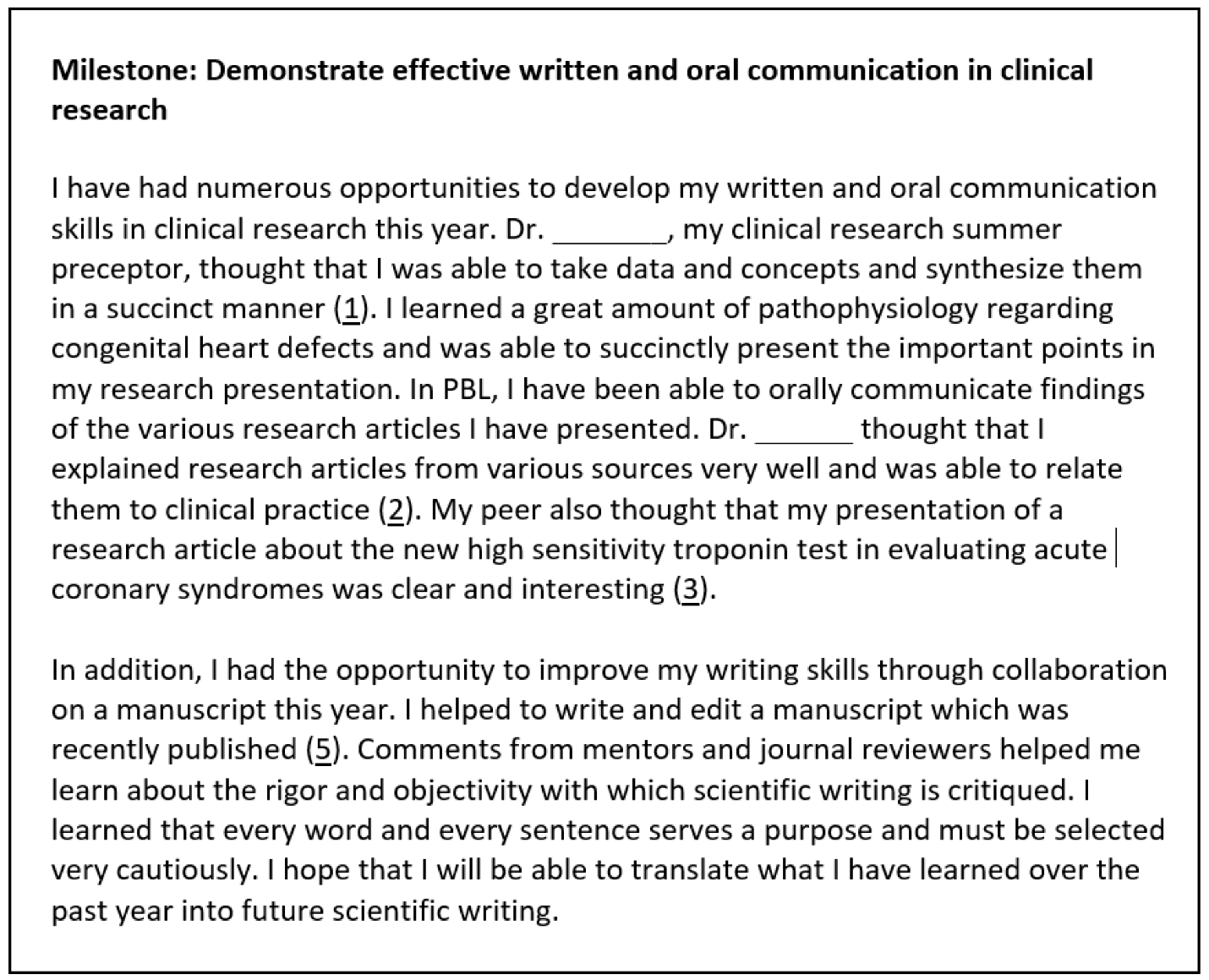

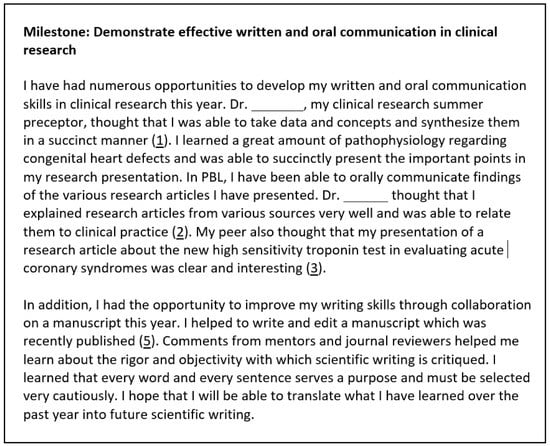

Portfolio assessment. Students submit two types of portfolios during medical school: formative portfolios and summative portfolios. With support from their PAs, students create three formative portfolios in year 1 and two formative portfolios in year 2. Formative portfolios require students to review their assessment evidence in the electronic dashboard and reflect on their performance. Students then write essays for milestones nested within the competencies, being sure to cite assessment evidence to document their progress and achievement (see Figure 1, excerpt from a formative portfolio essay). The number of essays students write in formative portfolios varies. For instance, in year 1, students write essays for five competencies in their first formative portfolio. Additional competencies are added to subsequent formative portfolios as students continue through the curriculum. When writing portfolios, students must purposefully weigh and select assessment evidence from different sources and contexts to provide a defensible picture of performance. For example, peers provide specific, actionable feedback for professionalism milestones (e.g., timeliness, preparation for class, and receptiveness to feedback) while faculty provide expert assessment evidence for medical knowledge milestones. PAs read their students’ formative portfolios and offer advice on students’ argumentative writing, selection of evidence, and reflection. Additionally, PAs encourage their students to take personal ownership for learning when coaching students on how to create learning plans that mirror the reflective practice cycle (i.e., generate SMART goals, select specific strategies, monitor progress, and self-assess performance).

Students construct summative portfolios at the end of years 1, 2, and 4. Summative portfolios have the same structure as formative portfolios in that students write an essay and cite assessment evidence for each competency milestone. Students must purposefully choose and cite assessment evidence in their summative portfolio to document growth and performance over the academic year. PAs provide official written attestation to a promotion committee that their students’ summative portfolios are balanced and representative of their performance during the assessment period. Summative portfolios provide the basis for high-stakes promotion decisions.

Figure 1. Example of a student essay in a formative portfolio documenting performance on a year 2 milestone for the interpersonal and communication skills competency.

Promotion and review committee. The Medical Student Promotion and Review Committee (MSPRC) consists of approximately 30–35 senior-level CCLCM researchers and physicians charged with making all student promotion decisions based on reviews of students’ summative portfolios. The MSPRC annually conducts summative portfolio reviews for all year 1, year 2, and year 4 students. Each summative portfolio review occurs over a 4-day period at staged intervals toward the end of the academic year. The process begins with all members independently reading and rating four randomly selected portfolios. Then, the committee votes, as a whole, on each of the four selected portfolios, where they make competency-specific decisions (pass, pass with concerns, or formal performance improvement required) and overall promotion decisions (pass, pass with concerns, repeat year, or dismissal). Having the committee review the same four portfolios serves as a standard-setting process for members to achieve consensus on the interpretation of milestones and gauge the quality/quantity of available assessment evidence. After standard setting is finished, the committee forms dyads where each pair reads and rates 3–4 summative portfolios. The MSPRC reconvenes and votes on each portfolio after pairs present their promotion recommendations. The MSPRC sends each student a personalized letter announcing the overall promotion decision and describing the student’s strengths, areas for improvement, portfolio quality, and reflective abilities. The committee concludes each review with a discussion among MSPRC members to summarize the educational program’s strengths and weaknesses based on their review of students’ summative portfolios.

5. Implementation Strategies

5.1. Planning and Timeline

Following the announcement of the CCLCM program in 2002, the Cleveland Clinic appointed an Executive Dean to oversee the new educational program and recruit faculty to serve in key roles (i.e., curriculum design, assessment, and program evaluation). The newly hired director of assessment took the lead in designing CCLCM’s assessment system, with a catalyst grant from the Cleveland Foundation to support planning (i.e., assessment consultants, travel to other medical schools, faculty retreats, etc.) and fund essential personnel (i.e., computer programmer, program evaluator, etc.). During 2003–2006, CCLCM faculty participated in several assessment retreats, often co-facilitated by outside experts. Concurrent with these retreats, the director of assessment convened an assessment task force to develop assessment principles, policies, and methods. This task force later became the College Assessment and Outcomes Committee (COAC), which continues to have centralized oversight of all assessment policies and practices within the CCLCM program. As this was a new medical school program, CCLCM’s educational leadership could not pilot its programmatic assessment approach. Our early commitment to continuous quality improvement, however, helped us identify and resolve unanticipated implementation issues.

5.2. Professional Development for Students

We recognized early on that matriculating students would require support, as most came from colleges whose assessment practices differ from those of CCLCM, where no grades or high-stakes examinations are used. We observed that students often experienced an adjustment period during the first 3–6 months in the program, as they learned how to use frequent, low-stakes narrative assessments to gauge their progress rather than rely upon episodic, high-stakes assessments. Students also lacked experience with providing feedback to peers, which occurs in multiple settings (e.g., journal club, research presentations, and PBL) within CCLCM’s educational program, and with using the mastery-based learning strategies essential for success [12]. We conducted several workshops with first-year students to orient them to our assessment system, the reflective practice cycle, peer feedback strategies, learning plan guidelines, and portfolio requirements. Students evaluated each workshop, which helped us gauge their understanding and address their questions. Furthermore, PAs met with their first- and second-year students regularly to coach them on how to decode and process narrative assessments, reflect on performance, prioritize learning needs, and construct portfolios. We used questionnaires and focus groups to solicit student feedback about portfolios and PA advising. Mid-year student feedback, as exemplified in Table 1 for three student cohorts, supported the effectiveness of student development activities, though PAs still required a subset of students to rewrite formative portfolios. In most of these cases, PAs wanted students to select better evidence from the electronic database to document progress or be more reflective about their performance.

Table 1. First-year medical students’ mid-year perceptions of formative portfolios by class cohort.

5.3. Professional Development for Faculty

As de Jong and colleagues have noted, it is vital that “assessors receive sufficient training to provide consistent, credible high-stakes decisions about student performance” ([13], p. 144). Professional development, also known as faculty development (FD), is thus deemed integral to high-quality programmatic assessment.

CCLCM’s assessment system benefits from a systematic approach to the professional development of educators, which includes brief, role-specific FD for CCLCM faculty, faculty development retreats sponsored by its parent medical school (i.e., Case Western Reserve University School of Medicine), short courses, and ‘intensive longitudinal programs’ [14] for faculty who are also Cleveland Clinic Foundation (CCF) employees (see Table A1 and Table A2 for examples). All FD offerings are based upon principles of adult learning [15], best practices in faculty development [16], and findings from learning science research [17,18,19,20]. Core FD sessions emphasize the establishment of safe learning environments [21,22], the centrality of feedback to learning [23], educational strategies which promote active engagement [17,18,19], and the development of assessment skills focused on performance improvement within competency-based assessment (CBA) frameworks [24]. In addition, transfer of skills to authentic health professions education (HPE) settings is a common goal across all faculty development programs.

Role-specific development. Professional development for faculty focuses on skills and knowledge needed to perform a variety of roles within CCLCM. Offerings range from 60-min ad hoc workshops to formal faculty development programs led by the directors of CCLCM courses. Examples include:

FD sessions designed for those advising students and/or reviewing portfolios. New PA training consists of informal sessions focusing on advising strategies (e.g., setting boundaries, interpreting assessment evidence, identifying “at risk” learners, coaching to foster learner reflection, and referring students for counseling) and portfolio mechanics (e.g., accessing students’ assessment evidence, reviewing formative portfolios, and approving summative portfolios). Importantly, PAs meet weekly to provide updates on their learners and share best practices pertinent to advisors, thereby forming a community of practice for advisors [25]. Guest speakers are periodically invited to these weekly meetings to give curriculum updates or conduct workshops on topics the PAs request. New promotion committee members participate in a 2-hour workshop explaining the curriculum, portfolio review process, standard setting, and promotion letter template. New members are purposefully paired with experienced members, who offer guidance during summative portfolio reviews. Additionally, the entire promotion committee participates in some form of faculty development (e.g., curriculum updates or levels of reflective writing) before summative portfolio reviews and during monthly meetings.

FD sessions designed for those who teach students directly. These formal FD sessions are held annually for both new and returning preceptors and facilitators (e.g., PBL facilitators, longitudinal clinic preceptors, communications course facilitators, acute care medicine preceptors, etc.). These sessions help to ensure that faculty are oriented to CCLCM’s mission and expectations related to their specific roles, understand alignment between their own roles and the portfolio assessment system, and have opportunities to practice core skills related to competency-based assessment (e.g., formative assessment and verbal feedback). In 2020–21, new FD offerings specifically designed for clerkship faculty were developed by CCLCM leadership. These sessions cover topics such as observation and verbal feedback, ambulatory teaching and the clinical learning environment, addressing student mistreatment and more (see Table A1).

System-wide development. CCLCM faculty, as CCF employees, also have access to a wide range of professional development opportunities for educators via the Office of Educator & Scholar Development (OESD). The OESD, in addition to supporting role-specific FD, offers a number of formal faculty development programs and courses, including the following:

Essentials Program for Health Professions Educators (Essentials). This synchronous, interprofessional FD program focuses on development of core educator skills [24], including, but not limited to, skills integral to teaching adult learners (e.g., observation and feedback), designing educational experiences within competency-based educational frameworks, planning for learner assessment within CBA frameworks, and developing skills as an educational scholar (see Table A2 for Essentials topics). As post-graduate medical education (PGME) trainees provide a large percentage of the medical student teaching at academic health science centers in the U.S., Essentials is also open to trainees. Participants are required to prepare ahead of time via pre-readings. Active engagement by participants is expected in each session. These live/virtual offerings can now be accessed by interprofessional educators at the majority of Cleveland Clinic locations. All CCLCM faculty have access to this program.

Essentials on Demand (EOD). This asynchronous, online course is open to all interprofessional educators in the CCF system. EOD offers lessons which can be accessed at any time, thus extending the reach of professional development to CCLCM faculty in clinical and research roles who may have difficulty attending live/virtual sessions. Formerly face-to-face, these lessons teach faculty to: (1) write specific, measurable learning objectives aligned with teaching and assessment strategies and (2) design interactive, engaging educational sessions using Gagne’s model of instructional design [26]. Both lessons feature authentic work products and written feedback from EOD instructors. New lessons on adult learning principles and social cognitive learning theory are in development, with projected release dates in 2021–22.

Distinguished Educator Level I Certificate Programs. For CCLCM faculty who are interested in enhancing their educational practices, the OESD offers several formal 6-month certificate programs (i.e., DE I: Feedback, DE I: Teaching, DE I: Instructional Design, DE I: Assessment) utilizing a reflective practitioner model. All programs feature a cycle of self-reflection, written feedback, and post-feedback reflection. For example, in the DE I: Teaching program, participants have the opportunity to be observed as they teach in authentic settings. They then receive specific written feedback on areas they had identified via their self-reflections.

Medical Education Fellowship. A 1-year, project-based Medical Education Fellowship certificate program is open to Cleveland Clinic MD and PhD staff with roles in education. Many, if not most, of these faculty have some role in the education of CCLCM medical students.

Cleveland State University/Cleveland Clinic Master of Education in Health Professions Education (MEHPE) program. This 2-year, cohort-based program offers educators—many of whom are CCLCM faculty—an opportunity to earn an advanced educational degree as they hone skills in curriculum development, teaching strategies, learner assessment, program evaluation, educational technology and educational scholarship. Lessons learned within CCLCM’s learning environment are shared with MEHPE students.

Consult service. Individual consultations and requested sessions are available via an educational consultation service. Typical consults for CCLCM faculty include providing advice on curriculum development, scholarly projects, and professional growth opportunities.

5.4. Main Facilitators

Numerous internal facilitators enabled CCLCM to create and sustain a programmatic assessment system. Top leadership at CCLCM and the Cleveland Clinic have provided ongoing support for the creation and maintenance of the medical school program. A shared vision for CCLCM’s educational and assessment practices was cultivated amongst stakeholders. This started with the development of the school’s mission and principles. Key roles (i.e., director of assessment, director of faculty development, etc.) were filled at the program’s onset, which contributed to the purposeful design of the assessment system. As the assessment system was developed, it was purposefully aligned with CCLCM’s core principles (e.g., assessment for learning, no grades/class ranks, etc.). Faculty who were committed to this novel medical school’s principles and assessment philosophy were recruited, and salary support for faculty in key roles (i.e., physician advisors, PBL facilitators, etc.) was provided. Ongoing professional development is offered to faculty to enhance and maintain teaching and assessment skills. Students are trained to ask for feedback and supported in navigating CCLCM’s unique assessment system. In addition to using multiple assessment methods, CCLCM uses a range of assessment evidence to provide a rich sampling of student performance across courses and contexts. Lastly, CCLCM adopted a continuous quality improvement approach early on to monitor assessment system implementation and quickly address components which required enhancement.

External facilitators also contributed to the successful implementation of CCLCM’s programmatic assessment system. For instance, CCLCM obtained a catalyst grant from a local foundation, which provided credibility, resources, and accountability during the design phase of the assessment system. Experts at other medical schools and competency-based educational programs provided feedback and insights during program design. CCLCM’s competencies were closely aligned with post-graduate medical education competencies in the U.S. (i.e., Accreditation Council for Graduate Medical Education), which prepared CCLCM graduates for competency-based education requirements once they entered residency. Lastly, CCLCM received positive feedback on assessment-related accreditation standards from external reviewers representing the Liaison Committee on Medical Education (LCME), the accrediting body for medical schools in North America.

5.5. Main Barriers

In addition to numerous facilitators which aided in the development and maintenance of CCLCM’s programmatic assessment system, we have identified challenges to the maintenance of a high-quality assessment system. These challenges include competing demands on faculty time, inconsistent feedback quality, differing quantities of assessment evidence, pressures due to differing perceptions of the value of competency-based assessment, and technological challenges.

Like medical school programs elsewhere, CCLCM has seen increases in clinical demands on faculty time during the COVID-19 pandemic, which leaves less time for educational roles and associated tasks (e.g., providing formative assessment to learners). In addition to clinical faculty being deployed during the pandemic, the average clinical preceptor in Year 3 does not receive release time to teach or assess learners. The quantity of assessment evidence related to certain competencies (e.g., systems-based practice) continues to be a challenge as faculty may not have the opportunity to observe learner behaviors aligned with systems thinking, advocacy, or knowledge of clinical microsystems when learners are with them for limited periods of time. During years 3–5, PGME trainees have been recruited as instructors in some courses. Despite faculty development opportunities, they may have limited understanding of the school’s core principles. Another barrier identified during quality improvement efforts is the overall assessment culture. Not all faculty and students embrace core CCLCM assessment principles (e.g., emphasis on criterion-referenced rather than norm-referenced assessment) for a variety of reasons. Competency-based assessment, with its emphasis on frequent formative assessment (i.e., observation and feedback) [27], may be new to some PGME trainees and faculty. The transition from norm-referenced frameworks in undergraduate education to a competency-based framework in medical school may be difficult for some learners [28].

Last, technological challenges have affected how some faculty and students interact with the assessment system. Some faculty have had difficulty completing electronic assessment forms despite strategies (e.g., use cellular phone for dictation, complete only parts of assessment form, etc.) discussed during faculty development sessions. Additionally, a subset of students have complained about the “user friendliness” of the e-portfolio and expressed a desire for technological short cuts to ease the burden associated with portfolio tasks (i.e., selecting, tagging, and uploading assessment evidence). Given concerns that these short cuts would undermine students’ reflection upon and purposeful selection of assessment evidence when constructing portfolios, these short cuts have not been implemented.

6. Evaluation

The College Assessment and Outcomes Committee developed a systematic, internal program evaluation plan that maps directly to key components of CCLCM’s assessment system. This plan is used to focus data collection activities on areas of fairness, reliability, validity, learning processes, and program outcomes, as well as the assessment culture itself [13].

Stakeholder engagement is critical to foster continuous quality improvement initiatives. Consequently, we regularly obtain feedback from students about the quality/quantity of assessment evidence and portfolio processes using multiple methods (i.e., questionnaires, focus groups, informal feedback meetings, and exit interviews). Physician advisors discuss any issues with their students’ assessment evidence (e.g., missing assessments, superficial feedback, etc.) or the educational program at weekly meetings, which the director of assessment and evaluation also attends, thereby providing ample opportunities to intervene quickly. Promotion committee members provide written (questionnaire) and verbal (debriefing meeting) feedback after each summative portfolio review (e.g., clarity of milestones, alignment of assessment evidence with milestones, curricular gaps, etc.) and their deliberations (e.g., appropriateness of training, fairness, etc.). Course directors receive student and faculty feedback about the learning environment and students’ aggregate performance on assessments. All FD activities and programs are monitored closely for engagement, quality, and effectiveness. Additionally, residency program directors provide feedback about the performance of CCLCM graduates after the first year of residency training, and CCLCM alumni provide feedback about the program’s impact at different time points (1 year and 10 years post-graduation). Every eight years, the LCME reviews and makes accreditation decisions about the CCLCM program. We monitor assessment and educational program accreditation standards in collaboration with CWRU, our parent institution, and have received full accreditation since the implementation of the CCLCM program in 2004. 
We also have an active research agenda to examine how CCLCM’s programmatic assessment functions in practice and have published or sponsored scholarship about student and faculty perceptions, portfolio processes/contents, fairness, and remediation outcomes.

Transparent reporting makes quality improvement activities evident to stakeholders. Every year, the director of assessment and evaluation generates a comprehensive report summarizing the assessment system’s strengths and weaknesses and makes recommendations for the next academic year to the COAC, which has broad faculty and student membership, and other key committees (physician advisor committee, promotion committee, and curriculum oversight committee). This internal reporting process provides opportunities to discuss the assessment system as a whole and obtain stakeholder buy-in for educational quality improvement initiatives essential to maintain the program’s assessment for learning culture.

7. Discussion

Descriptions of programmatic assessment consistently emphasize the need for faculty and student development [24,29,30]; yet, few published implementations address this important topic. Those that do note the challenges of moving from theory to practice, especially if an institution underestimates the importance of ongoing professional development for key stakeholders [31,32]. A single workshop will not adequately prepare faculty for the learner-centered instructional and feedback approaches that programmatic assessment demands. Students have also struggled when adapting to programmatic assessment, given their tendency to view all assessments as high-stakes [33,34] rather than a continuum of stakes as intended [35]. Programmatic assessment requires a different, complex way of thinking at both the individual and institutional level, where a growth mindset [36], frequent formative assessments [37], and cycles of informed self-assessment and reflection [38] are critical components of the educational program and its culture. This case study contributes to the literature by elucidating how a purposeful and system-wide approach to student and faculty development can foster an assessment for learning culture within a medical school’s long-standing programmatic assessment system. We discuss lessons learned and conclude with recommendations.

Acculturation to CCLCM’s assessment system. One lesson we learned early on is that students required multiple training sessions and ongoing support in order to navigate CCLCM’s assessment system. Even though we described the assessment system in detail to applicants, our matriculating students struggled with not having high-stakes examinations or receiving grades to gauge their performance. Most of our students were unaccustomed to receiving narrative assessments, especially in the areas of professionalism and interpersonal and communication skills. They also had to adapt their learning strategies given the program’s emphasis upon mastery, which created initial anxiety [12]. Though we interspersed several workshops throughout year 1, these workshops provided insufficient preparation until students actually sorted through their assessment evidence and reflected upon written feedback, with guidance from and frequent meetings with their PAs. Our experience affirms the importance of student development and longitudinal advising as critical components of a programmatic assessment system [39]. On the other hand, we observed that students required less guidance from their PAs in years 3–5, which we attributed to students’ previous, coached experiences with developing critical reflection, goal setting, and informed self-assessment skills in years 1 and 2.

Development of feedback culture. We also identified early on that faculty and student understanding of, and buy-in for, bidirectional feedback [40], which is necessary for effective feedback cultures [22,41], is critical to the success of CCLCM’s programmatic assessment system. As most faculty do not come to CCLCM with these skills, this meant formally teaching both observation/verbal feedback and written feedback skills across multiple professional development venues (e.g., within longitudinal preceptor training, acute care preceptor training, and Essentials courses). (See Appendix A, Table A1 and Table A2.) The need to maintain a continuing focus on formative feedback within faculty development has been highlighted by numerous authors [24,27,32,42]. As Hall and colleagues (2020) noted, competency-based medical education (CBME) does not guarantee the provision of specific, actionable feedback.

Systems-level commitment. Stressing the importance of faculty development to instructors is not enough for successful implementation. An ongoing and systems-level commitment to the development of educators is critical. Additionally, for faculty who are not directly employed by a medical school (e.g., CCLCM faculty are not employed by CWRU), a commitment to professional development within the hospital system (and alignment with continuous improvement principles) is essential for success. Intensive longitudinal FD programs held at ‘home’ institutions are only possible via institutional buy-in, as participants typically require protected time to fully participate [14]. These operational costs (e.g., the cost of time away from clinical practice) may need to be negotiated with leadership at multiple levels, and faculty self-advocacy skills are often needed.

Sustaining quality. When it comes to systems (e.g., clinical microsystems, educational microsystems, or assessment systems), a continuous focus on basic quality improvement principles [43] can ensure that outcomes will continue to be met via rapid cycles of change, despite changing contexts. Unlike CCLCM, which utilized programmatic assessment from its inception, programmatic assessment will be an innovation for most medical school programs. Hall et al. (2020), in their rapid evaluation study, noted the importance of continuous improvement efforts to sustain innovations such as CBME and programmatic assessment. In our 18-year experience with programmatic assessment, we have learned that the maintenance of our assessment system requires continuous monitoring and evaluation to ensure that CBME principles are still being met, especially as new stakeholders are integrated into the system. For instance, when faculty begin teaching within CCLCM, faculty development is critical to ensuring there is a shared mental model about CBME and programmatic assessment concepts [44]. For more seasoned faculty, a refocusing on program principles is equally important. We have also found continuous quality improvement to be particularly helpful as we quickly adapted faculty development sessions for virtual delivery during the COVID-19 pandemic. Where little to no live/virtual or asynchronous professional development was offered in the past, we now offer a number of asynchronous lessons to reach our faculty wherever they are and at times that are convenient to them.

Future research. More work is needed to understand how best to support faculty and students as they transition from a teacher-directed to a student-directed assessment culture that values bidirectional feedback and supportive learning environments. Future research should examine effective faculty development models (e.g., longitudinal, synchronous faculty development vs. just-in-time, asynchronous delivery) to support programmatic assessment across varied institutional contexts and cultures, as context is critical when evaluating programmatic assessment processes and outcomes [45,46].

Author Contributions

Conceptualization, C.Y.C. and S.B.B.; formal analysis, S.B.B.; data curation, C.Y.C. and S.B.B.; writing—original draft preparation, C.Y.C. and S.B.B.; writing—review and editing, C.Y.C. and S.B.B.; visualization, C.Y.C. and S.B.B.; project administration, C.Y.C. and S.B.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Cleveland Clinic Foundation (protocol code #6600, approved on 30 April 2021).

Informed Consent Statement

Data are reported for medical students who signed a consent form to release routinely collected program evaluation data for educational research purposes.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Professional development activities for specific faculty roles.

| Program | Audience | Duration | Topics for Live/Virtual Sessions |
|---|---|---|---|
| Physician Advisor | CCLCM faculty who join the committee | 2 h sessions with ongoing support at weekly meetings | |
| Promotion Committee Member | CCLCM faculty with senior-level positions who join the committee | 2 h training session in addition to monthly meetings | |
| Cleveland Clinic Longitudinal Clerkship Faculty | CCLCM faculty who teach 3rd-year medical students during their clerkship year | 50 min virtual sessions | |
| Communication Skills Preceptors | CCLCM faculty who teach 1st- and 2nd-year medical students | 2–5 h per faculty development day | |
| Problem-Based Learning Facilitator | New and recurring facilitators | 30 min–2 h per session | |
| Acute Preceptors | CCLCM faculty who act as preceptors to medical students during the Acute Care course (inpatient experience) | 45–60 min sessions | |
| Physical Diagnosis Preceptor | New and recurring facilitators | 1 h sessions | |

Table A2.

Topics presented in 90-min sessions for health professions educators and essentials on demand audience.

| Topic | Session Title | Live, Virtual? (Synchronous) | Online Lesson? (Asynchronous) |
|---|---|---|---|
| Teaching and learning | Self-Regulation in Learning: Assisting our students to manage their learning | ✓ | |
| Teaching and learning | Teaching with Adult Learners in Mind | ✓ | |
| Teaching and learning | Interactive Teaching Techniques for Classroom and Virtual Environments | ✓ | |
| Teaching and learning | Advising, Mentoring and Role Modeling | ✓ | |
| Feedback | Observation and Feedback for Health Professions Educators | ✓ | |
| Feedback | Difficult Conversations in Health Professions Education | ✓ | |
| Feedback | Making Comments Count: Narrative Assessment Methods | ✓ | |
| Safe learning environment | Creating Safe, Inclusive Learning Environments | ✓ | |
| Safe learning environment | Implicit Bias Workshop | ✓ | |
| Competency based education and assessment | Competency Based Education as a Framework for Teaching and Learning | ✓ | |
| Competency based education and assessment | Assessment within Competency Frameworks—Are we All on the Same Page? | ✓ | |
| Competency based education and assessment | Alignment of Assessment Evidence | ✓ | |
| Competency based education and assessment | Quality and Quantity of Assessment Evidence in CBE Frameworks | ✓ | |
| Curriculum Development | Writing Effective Learning Objectives | ✓ | |
| Curriculum Development | Make Your Teaching Interactive! A Focus on Gagne’s Events of Instruction | ✓ | |
| Program Evaluation | Evaluating Educational Activity Effectiveness | ✓ | |

References

1. van der Vleuten, C.P.; Schuwirth, L.W.T.; Driessen, E.W.; Dijkstra, J.; Tigelaar, D.; Baartman, L.K.J.; van Tartwijk, J. A model for programmatic assessment fit for purpose. Med. Teach. 2012, 34, 205–214.

2. Schuwirth, L.W.; van der Vleuten, C.P. Programmatic assessment: From assessment of learning to assessment for learning. Med. Teach. 2011, 33, 478–485.

3. van der Vleuten, C.P.; Schuwirth, L.W. Assessing professional competence: From methods to programmes. Med. Educ. 2005, 39, 309–317.

4. Rosenberg, L.E. The physician-scientist: An essential—And fragile—Link in the medical research chain. J. Clin. Invest. 1999, 103, 1621–1626.

5. Wyngaarden, J.B. The clinical investigator as an endangered species. N. Engl. J. Med. 1979, 301, 1254–1259.

6. Zemlo, T.R.; Garrison, H.H.; Partridge, N.C.; Ley, T.J. The physician-scientist: Career issues and challenges at the year 2000. FASEB J. 2000, 14, 221–230.

7. Dannefer, E.F.; Henson, L.C. The portfolio approach to competency-based assessment at the Cleveland Clinic Lerner College of Medicine. Acad. Med. 2007, 82, 493–502.

8. Wiliam, D. What is assessment for learning? Stud. Educ. Eval. 2011, 37, 3–14.

9. Schellekens, L.H.; Bok, H.G.; de Jong, L.H.; van der Schaaf, M.F.; Kremer, W.D.; van der Vleuten, C.P. A scoping review on the notions of assessment as learning (AaL), assessment for learning (AfL), and assessment of learning (AoL). Stud. Educ. Eval. 2021, 71, 101094.

10. Heeneman, S.; de Jong, L.H.; Dawson, L.J.; Wilkinson, T.J.; Ryan, A.; Tait, G.R.; Rice, N.; Torre, D.; Freeman, A.; van der Vleuten, C.P.M. Ottawa 2020 consensus statement for programmatic assessment—1. Agreement on the principles. Med. Teach. 2021, 43, 1139–1148.

11. Bierer, S.B.; Dannefer, E.F.; Taylor, C.; Hall, P.; Hull, A.L. Methods to assess students’ acquisition, application and integration of basic science knowledge in an innovative competency-based educational program. Med. Teach. 2008, 30, e171–e177.

12. Bierer, S.B.; Dannefer, E.F. The learning environment counts: Longitudinal qualitative analysis of study strategies adopted by first-year medical students in a competency-based educational program. Acad. Med. 2016, 91, S44–S52.

13. De Jong, L.; Bok, H.; Bierer, S.B.; van der Vleuten, C. Quality assurance in programmatic assessment. In Understanding Assessment in Medical Education through Quality Assurance; van der Vleuten, C., Hays, R., Malau-Aduli, B., Eds.; McGraw Hill: New York, NY, USA, 2021; pp. 137–170.

14. Gruppen, L. Intensive longitudinal faculty development programs. In Faculty Development in the Health Professions. A Focus on Research and Practice; Steinert, Y., Ed.; Springer: Dordrecht, The Netherlands, 2014; pp. 197–216.

15. Knowles, M.S.; Holton, E.F.; Swanson, R.A. The Adult Learner: The Definitive Classic in Adult Education and Human Resource Development, 6th ed.; Elsevier: Burlington, MA, USA, 2005.

16. Steinert, Y. (Ed.) Faculty Development in the Health Professions. A Focus on Research and Practice; Springer: Dordrecht, The Netherlands, 2014.

17. Deslauriers, L.; Schelew, E.; Wieman, C. Improved learning in a large-enrollment physics class. Science 2011, 332, 862–864.

18. Freeman, S.; Eddy, S.L.; McDonough, M.; Smith, M.K.; Okoroafor, N.; Jordt, H.; Wenderoth, M.P. Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. USA 2014, 111, 8410–8415.

19. Friedlander, M.J.; Andrews, L.; Armstrong, E.G.; Aschenbrenner, C.; Kass, J.S.; Ogden, P.; Schwartzstein, R.; Viggiano, T.R. What can medical education learn from the neurobiology of learning? Acad. Med. 2011, 86, 415–420.

20. Kulasegaram, K.M.; Chaudhary, Z.; Woods, N.; Dore, K.; Neville, A.; Norman, G. Contexts, concepts and cognition: Principles for the transfer of basic science knowledge. Med. Educ. 2017, 51, 184–195.

21. Carmeli, A.; Brueller, D.; Dutton, J.E. Learning behaviors in the workplace: The role of high-quality interpersonal relationships and psychological safety. Syst. Res. Behav. Sci. 2009, 26, 81–98.

22. Ramani, S.; Könings, K.D.; Ginsburg, S.; van der Vleuten, C.P.M. Twelve tips to promote a feedback culture with a growth mindset: Swinging the feedback pendulum from recipes to relationships. Med. Teach. 2019, 41, 625–631.

23. Hattie, J.; Timperley, H. The power of feedback. Rev. Educ. Res. 2007, 77, 81–112.

24. Holmboe, E.S.; Ward, D.S.; Reznick, R.K.; Katsufrakis, P.J.; Leslie, K.M.; Patel, V.M.; Ray, D.D.; Nelson, E.A. Faculty development in assessment: The missing link in competency-based medical education. Acad. Med. 2011, 86, 460–467.

25. Sheu, L.; Hauer, K.E.; Schreiner, K.; van Schaik, S.M.; Chang, A.; O’Brien, B.C. “A friendly place to grow as an educator”: A qualitative study of community and relationships among medical student coaches. Acad. Med. 2020, 95, 293–300.

26. Driscoll, M.P. Gagne’s theory of instruction. In Psychology of Learning for Instruction, 3rd ed.; Allyn and Bacon: Boston, MA, USA, 2005; pp. 341–372.

27. Frank, J.R.; Snell, L.S.; Cate, O.T.; Holmboe, E.S.; Carraccio, C.; Swing, S.R.; Harris, P.; Glasgow, N.J.; Campbell, C.; Dath, D.; et al. Competency-based medical education: Theory to practice. Med. Teach. 2010, 32, 638–645.

28. Altahawi, F.; Sisk, B.; Poloskey, S.; Hicks, C.; Dannefer, E.F. Student perspectives on assessment: Experience in a competency-based portfolio system. Med. Teach. 2012, 34, 221–225.

29. Pearce, J.; Tavares, W. A philosophical history of programmatic assessment: Tracing shifting configurations. Adv. Health Sci. Educ. Theory Pract. 2021, 26, 1291–1310.

30. Dauphinee, W.D.; Boulet, J.R.; Norcini, J.J. Considerations that will determine if competency-based assessment is a sustainable innovation. Adv. Health Sci. Educ. Theory Pract. 2019, 24, 413–421.

31. Bok, H.G.; Teunissen, P.W.; Favier, R.P.; Rietbroek, N.J.; Theyse, L.F.; Brommer, H.; Haarhuis, J.C.; van Beukelen, P.; van der Vleuten, C.P.; Jaarsma, D.A. Programmatic assessment of competency-based workplace learning: When theory meets practice. BMC Med. Educ. 2013, 13, 123.

32. Hall, A.K.; Rich, J.; Dagnone, J.D.; Weersink, K.; Caudle, J.; Sherbino, J.; Frank, J.R.; Bandiera, G.; Van Melle, E. It’s a marathon, not a sprint: Rapid evaluation of competency-based medical education program implementation. Acad. Med. 2020, 95, 786–793.

33. Schut, S.; Driessen, E.; van Tartwijk, J.; van der Vleuten, C.; Heeneman, S. Stakes in the eye of the beholder: An international study of learners’ perceptions within programmatic assessment. Med. Educ. 2018, 52, 654–663.

34. Heeneman, S.; Oudkerk Pool, A.; Schuwirth, L.W.; van der Vleuten, C.P.; Driessen, E.W. The impact of programmatic assessment on student learning: Theory versus practice. Med. Educ. 2015, 49, 487–498.

35. Torre, D.; Rice, N.E.; Ryan, A.; Bok, H.; Dawson, L.J.; Bierer, B.; Wilkinson, T.J.; Tait, G.R.; Laughlin, T.; Veerapen, K.; et al. Ottawa 2020 consensus statements for programmatic assessment—2. Implementation and practice. Med. Teach. 2021, 43, 1149–1160.

36. Richardson, D.; Kinnear, B.; Hauer, K.E.; Turner, T.L.; Warm, E.J.; Hall, A.K.; Ross, S.; Thoma, B.; Van Melle, E.; ICBME Collaborators. Growth mindset in competency-based medical education. Med. Teach. 2021, 43, 751–757.

37. Konopasek, L.; Norcini, J.; Krupat, E. Focusing on the formative: Building an assessment system aimed at student growth and development. Acad. Med. 2016, 91, 1492–1497.

38. Sandars, J.; Cleary, T.J. Self-regulation theory: Applications to medical education: AMEE Guide No. 58. Med. Teach. 2011, 33, 875–886.

39. Schut, S.; Maggio, L.A.; Heeneman, S.; van Tartwijk, J.; van der Vleuten, C.; Driessen, E. Where the rubber meets the road—An integrative review of programmatic assessment in health care professions education. Perspect. Med. Educ. 2021, 10, 6–13.

40. Ramani, S.; Könings, K.D.; Ginsburg, S.; van der Vleuten, C.P. Feedback redefined: Principles and practice. J. Gen. Intern. Med. 2019, 34, 744–749.

41. London, M.; Smither, J.W. Feedback orientation, feedback culture, and the longitudinal performance management process. Hum. Res. Manag. Rev. 2002, 12, 81–100.

42. French, J.C.; Colbert, C.Y.; Pien, L.C.; Dannefer, E.F.; Taylor, C.A. Targeted feedback in the milestones era: Utilization of the ask-tell-ask feedback model to promote reflection and self-assessment. J. Surg. Educ. 2015, 72, e274–e279.

43. Best, M.; Neuhauser, D. Walter A Shewhart, 1924, and the Hawthorne factory. Qual. Saf. Health Care 2006, 15, 142–143.

44. Ross, S.; Hauer, K.E.; Wycliffe-Jones, K.; Hall, A.K.; Molgaard, L.; Richardson, D.; Oswald, A.; Bhanji, F.; ICBME Collaborators. Key considerations in planning and designing programmatic assessment in competency-based medical education. Med. Teach. 2021, 43, 758–764.

45. Torre, D.M.; Schuwirth, L.W.T.; van der Vleuten, C.P.M. Theoretical considerations on programmatic assessment. Med. Teach. 2020, 42, 213–220.

46. Roberts, C.; Khanna, P.; Lane, A.S.; Reiman, P.; Schuwirth, L. Exploring complexities in the reform of assessment practice: A critical realist perspective. Adv. Health Sci. Educ. Theory Pract. 2021, 26, 1641–1657.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).