Unique Problems Require Unique Solutions—Models and Problems of Linking School Effectiveness and School Improvement

Abstract

1. Introduction

2. Linking School Effectiveness and School Improvement: A Problem Description

3. Models for Linking School Effectiveness Research and School Improvement: CF-ESI and DMEE/DASI

3.1. Comprehensive Framework of Effective School Improvement (CF-ESI)

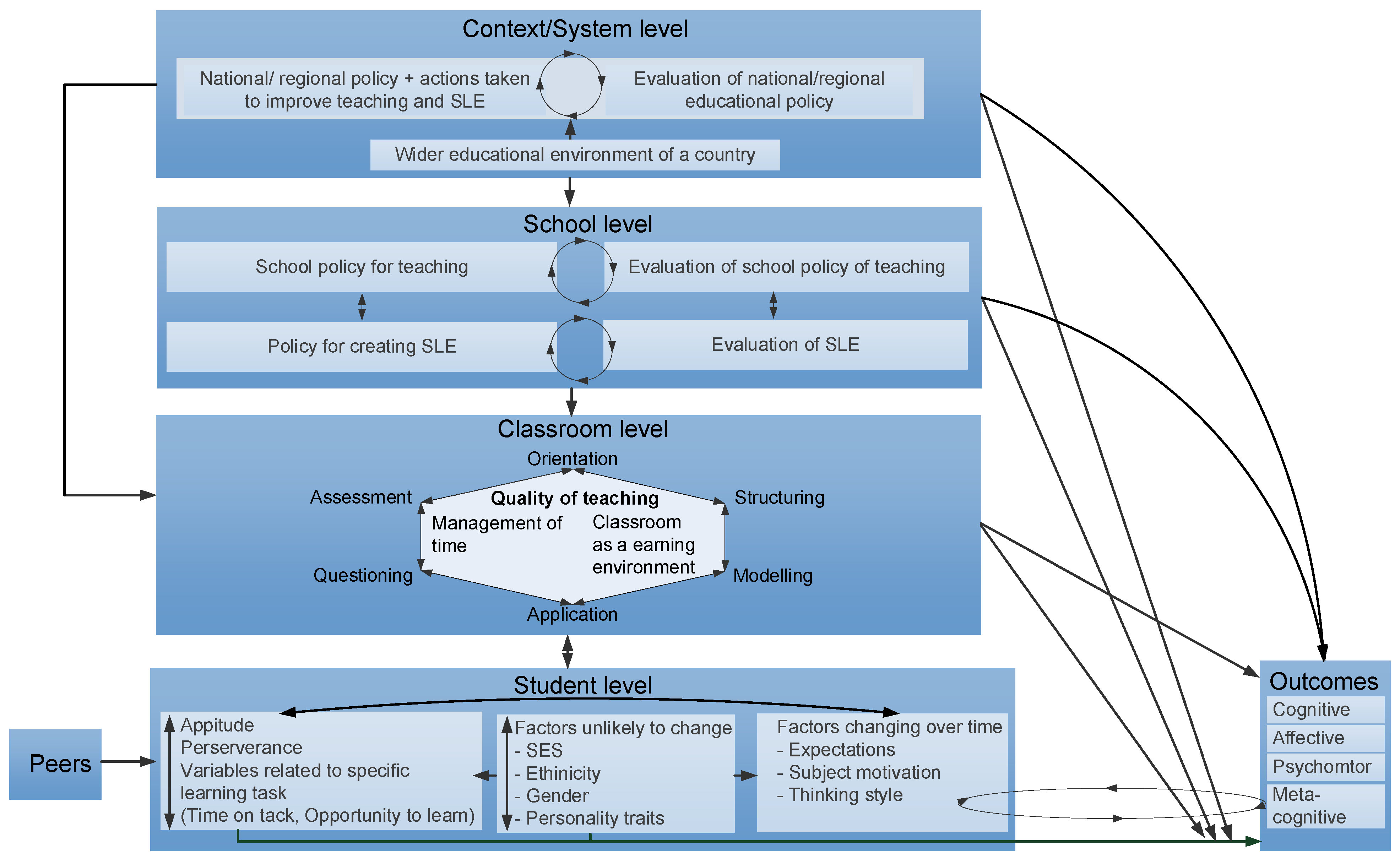

3.2. Dynamic Model of Educational Effectiveness (DMEE) and Dynamic Approach to School Improvement (DASI)

4. Critical Queries

- (1) In what ways do the two models construct the link between school effectiveness and school improvement?

- (2) To what extent do the models take into account any aspects of a ‘technology deficit’ or tend to reflect potential causality problems?

5. Conclusions, Outlook and Further Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


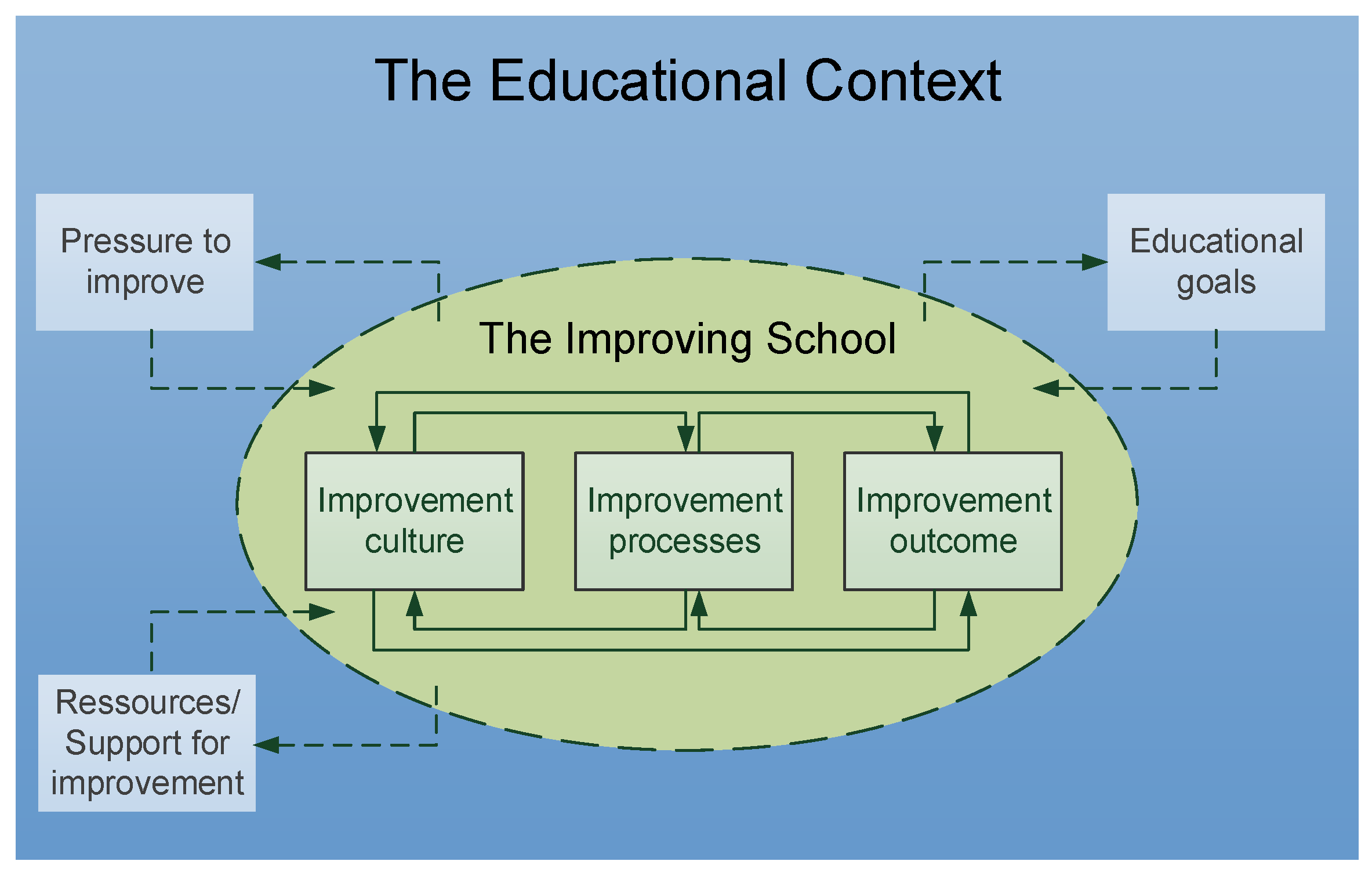

| Pressure to Improve | Resources/Support for Improvement | Educational Goals |
|---|---|---|

| Improvement Culture | Improvement Processes | Improvement Outcomes |
|---|---|---|

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feldhoff, T.; Emmerich, M.; Radisch, F.; Wurster, S.; Bischof, L.M. Unique Problems Require Unique Solutions—Models and Problems of Linking School Effectiveness and School Improvement. Educ. Sci. 2022, 12, 158. https://doi.org/10.3390/educsci12030158
