Abstract

The article aims to outline the proposals for linking school effectiveness research and school improvement that are currently relevant in international discussion and to ask how they deal with the ‘technology deficit’ at the level of model improvement. We will first show the difference between school improvement and school effectiveness. Then, we outline areas showing basic problems associated with linking school effectiveness and school improvement. After that, we present a summary of two relevant models for linking school effectiveness research and school improvement—Comprehensive Framework of Effective School Improvement and Dynamic Model of Educational Effectiveness—and subject them to critical analysis. The result of the critical analysis is that the question arises whether a link between the two traditions leads to a complementation of their respective problems of observation rather than to a compensation of them. This, in particular, concerns the problem of causality, which gives rise to theoretical and methodological considerations in both fields of research.

1. Introduction

The relationship between school effectiveness research and school improvement has been the subject of international discussions among researchers for quite some time [,,]. The discussions are essentially concerned with the possibilities and limits of a methodologically valid evidence base for school improvement programmes and school improvement practices [,,]. However, there is a consensus in theoretical terms that the organisational and interactional structure of multi-level education systems, as well as the varying social contextual conditions of schools, must be taken into account as relevant influencing factors. The recent linking models consider the multi-level structure of organised teaching and learning. However, our further considerations problematise that those linking models are obviously not able to take into account the complexity of organisational and interactional processes in a methodologically appropriate way. Following a relevant educational research tradition, teaching can be conceptualised as a complex interaction process constantly generating unforeseeable and unpredictable events that are potentially able to effectively change the directions and aims of classroom communication. In particular Dreeben’s [] initial research on how classroom teaching has to get ‘working’ based on weak ‘technologies’ highlighted the complex ‘nature’ of concrete educational interaction. E.g., a didactical or instructional ‘technique’ processed by the same teacher may work in one class at one time while failing in another class and/or at another time. Thus, the application of such teaching or instructional techniques should not be conceptualised as an independent variable since the same causes that would explain why it works would also explain why it does not. 
One common methodological way out of these processual causality issues is to construct a person-related causal chain linked by social background or classroom composition variables embedded in a control-group design: if students react differently to the same teaching technology applied by the same teacher, then the discriminatory effect can be assumed to be caused by the students. However, statistical likelihood based on person variables overlooks the complexity of the process. This may be one reason for the low effect sizes commonly measured within school improvement and school effectiveness research.

Following Dreeben’s [] approach, Luhmann and Schorr [] theorised classroom education itself as a non-linear, acausal and context-dependent interaction process characterised by a structural and, from this perspective, insurmountable ‘technology deficit’. This deficit makes the realisation of expected or desired learning effects through classroom interaction quite unlikely. Luhmann and Schorr [] further argue that the education system compensates for the ‘technology deficit’ by building up organisation-based replacement technologies such as ability grouping, rating, tracking and sorting students. It is the organisational dimension that provides output-related expectability, as well as accountability, by using its ability to make decisions.

Rethinking the ‘technology deficit’ in the light of Weick’s [,] work on schools as loosely coupled systems means rejecting the idea that education is de facto re-technologised through organisation. According to Weick [,], the decision-based process of organising is primarily grounded in a loose coupling of actions, interpretations and persons rather than in any decision-making technology. Weick’s considerations imply that schools endemically produce uncertainties about whether or not they did, do and will do the ‘right’ things, whether or not the decisions that have been made were goal-attaining and, if they were not, how and what should be decided next. Modern organisation theory claims a more ‘realistic’ and epistemologically reflected view on organisations as complex social systems. School improvement concepts and strategies apparently depend on a realistic or at least multi-perspective picture of the system they intend to change. Weick’s approach may help to avoid unrealistic ‘technological’ notions of what a school organisation is and how it works.

Bringing the interaction level back in, school system processes appear to be determined by a twofold ‘technology deficit’: at the interaction level and at the organisation level and, as a ‘causal’ consequence, at the level of ‘linking’ both as well. How could a loosely coupled organisation system provide causality for a loosely coupled interaction system operating in the classroom? This is the question we think is crucial for any school improvement/school effectiveness linking approach. Regarding methodological implications, we need to consider both process dimensions as dependent variables varying contingently over time and space.

With regard to school effectiveness research, the research and measurement of methodological competences are discussed in particular and linked with the claim to be able to validly identify those school process variables that noticeably influence mostly cognitive improvements at student level—measured by the achieved ‘outcome’ of student performance. Based on this, school improvement is ultimately understood as an indicator-supported process optimisation programme for improving the ‘outcome’ of teaching-learning processes in schools. The demand for a link between school effectiveness and school improvement seems to be primarily linked to the question of how such optimisation programmes can be designed and implemented at school level. In contrast, school improvement research is primarily concerned with the problems of both planned change at the organisational level of schools and the ability to influence instructional interaction processes through organisational factors. In this research context, ‘outcomes’ are a relevant evaluation criterion, but the focus of research interest is not on the targeted changeability of cognitive structures, but on social structures and thus action processes.

However, effectiveness research and improvement research do not only differ in terms of their constitutive objects of observation (on the one hand, the learning-effective influenceability of intrapsychic learning processes and, on the other hand, the influenceability of collective action or interaction processes). Rather, in the case of school effectiveness research, the object of reference is predominantly pedagogical-psychological, while school improvement approaches are based on sociological foundations such as organisation and/or action theory. Due to this, a link of both approaches has to overcome fundamental epistemological and research methodological differences.

With regard to scientific and practical conditions that point to a possible link between school effectiveness and school improvement, considerable difficulties emerge from this initial situation. The empirical treatment of the problems of effectiveness and the capacity for improvement of schools or school systems is confronted with the fundamental problems of educational science. As discussed above, these problems become significant as a result of the twofold ‘technology deficit’ affecting both classroom action and school organisational decision-making [,]. On the one hand, it must be taken into account that neither the complex interaction processes of teaching and learning nor the action processes at the level of school organisation take place under ‘controllable’ conditions of causality. On the other hand, limits within the possibilities of observation are marked where measurable impact models must assume real chains of effects that cannot be verified by the model or by the empirical data it generates.

Nevertheless, the following article aims to outline the proposals for linking school effectiveness research and school improvement that are currently relevant in international discussion and to ask if and how they theoretically and methodologically deal with the ‘technology deficit’ at the level of model improvement. We first attempt to outline areas showing basic problems associated with linking school effectiveness and school improvement (Section 2). We then present a summary of relevant models of linking school effectiveness research and school improvement research (Section 3) and subject them to critical analysis (Section 4). The paper concludes with a summary and conclusions for further theory building and the improvement of possible research designs.

2. Linking School Effectiveness and School Improvement: A Problem Description

The beginnings of the debate on linking the perspectives of school effectiveness and school improvement date back to the 1980s []. Since then, the call for a link has been based on the assumption that the learning effectiveness of schools, as well as their purposeful improvement, can be described in a theoretically and empirically more differentiated way by integrating both approaches [,,,,,,,]. The gain in knowledge thus promised is to be used simultaneously for the improvement of school practice and the change of school reality [,,,,]. However, critical contributions have repeatedly been made to the discussion, emphasising in particular the obstacles that result not least from genuine differences in the object reference of school effectiveness and school improvement [,,,,,].

Nevertheless, in the context of the ongoing debate, different strategies for linking the two perspectives have been discussed. The basic difference is whether these strategies (a) start at the scientific level of theory building and research itself [] or whether they (b) try to integrate research findings and thus research methods into school improvement practice within the framework of theory and data-driven school improvement [,,,,]:

(a) Despite the recurring discussion about the possibilities of a scientific link, a largely independent development of school effectiveness research and school improvement research or school improvement practice has emerged, which can be attributed not least to fundamental differences in the theoretically modelled and empirically realised object reference.

School effectiveness research bases its central object of observation along the question of why schools, despite the same institutional framework and comparable contextual conditions (e.g., school and class composition), generate a statistically significant variance at the level of average student performance [] or, in more recent studies, the improvement of achievement. The research objective is therefore to identify those stable and generalisable indicators at the level of the individual school and the level of teaching that generate this variance and are thus responsible for the effectiveness of a school. From a methodological point of view, the explanation of the variance and change in learning performance and thus mostly cognitive improvement at the individual level of the individual students forms the central ‘causal’ reference point of the effectiveness approach.
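The variance-based logic described above can be illustrated with a two-level random-intercept model, a common specification in multilevel analysis. This is a minimal sketch: the notation is introduced here for illustration only, the process indicator $z_j$ is hypothetical, and the cited studies do not necessarily use this exact specification.

```latex
% Null model: achievement y_{ij} of student i in school j
y_{ij} = \gamma_{00} + u_{0j} + e_{ij}, \qquad
u_{0j} \sim N(0, \tau_0^2), \quad e_{ij} \sim N(0, \sigma^2)

% Share of the total variance located between schools (intraclass correlation)
\rho = \frac{\tau_0^2}{\tau_0^2 + \sigma^2}

% Model with a hypothetical school-level process indicator z_j
y_{ij} = \gamma_{00} + \gamma_{01} z_j + u_{0j} + e_{ij}, \qquad
u_{0j} \sim N(0, \tau_1^2), \quad e_{ij} \sim N(0, \sigma^2)

% Proportion of between-school variance statistically accounted for by z_j
R^2_{\text{school}} = \frac{\tau_0^2 - \tau_1^2}{\tau_0^2}
```

In this sketch, a reduction of the between-school variance from $\tau_0^2$ to $\tau_1^2$ only indicates a statistical association between the indicator and average achievement. It does not by itself verify the chain of effects inside the school that the indicator is assumed to represent.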

The reference problem of school improvement research, in contrast, manifests itself in the variance of collective action structures that characterise the individual school as a ‘pedagogical action unit’ [,]. The guiding research question here is how a sustainable improvement in pedagogical interaction practice can be achieved through changes in action structures at the organisational level of the (individual) school. Accordingly, the primary research goal is the conception of school improvement programmes and individual improvement measures or the methodologically valid evaluation of programmes and measures, as well as the processes involved, which are largely intended as instruments of control policy. As a rule, socio-technological impact expectations and implementation strategies oriented to them are at the centre of research, so that the focus is primarily on the corresponding implementation processes, as well as changes at the level of school action structures [,,,,,,,]. Questions about a possible causal attribution of action structures and cognitive improvement at student level, however, remain largely ignored here. In school effectiveness research, in contrast, school action processes and the question of how schools must change their action structures in order to move from a less effective to a more effective state play a subordinate role [,,,,,]. For the most part, school-based action processes are only given significance if they make a substantial contribution to elucidating the variance in student performance across several studies (and also did so in the past).

A link of both perspectives on a scientific level seems to be limited by the diverging object reference and the associated different theoretical and methodological focus on the conditions within schools that are relevant with regard to the improvement of school educational processes [,,,,,]. On the one hand, “blind spots” can be exposed in the comparison of both perspectives. The lack of orientation towards an improvement of student performance and thus also a possible improvement of educational opportunities on the part of school improvement research makes the success of indicator-based improvement strategies unlikely. Additionally, the lack of consideration of the complex and ‘loosely coupled’ system of action of the schools and its processes reduces the chances of success. On the other hand, the question of whether a scientific link in the sense of an equally basic theoretical and research methodological integration is possible remains largely open in view of the hitherto unsolved theoretical and methodological problems. In recent years, both nationally and internationally, research methodological convergences between school effectiveness and quantitative school improvement research have been observed. This can be seen, for example, in the increased use of longitudinal data in both discourses and in the use of effectiveness research methods in school improvement research. There is also evidence in the consideration of the multi-level structure and of process and contextual factors in school effectiveness research, as well as in the consideration of student learning performance and improvement in school improvement research [,,,].

(b) The second strategy, which attempts to link science and practice, seems to be confronted with lower-threshold problem levels against this background. The strategy is oriented towards application and does not superficially depend on solving insistent questions of causality that primarily arise at the level of scientific observation.

The models for linking the two approaches are recognised within the discussion accordingly within the framework of these two strategies. In the following, we will present (Section 3) and discuss (Section 4) two relevant linking models. However, it can be made clear on the basis of these two models that both the linking of school effectiveness research and school improvement research and the linking of school effectiveness research and school improvement practice are confronted with causality problems, which, however, remain largely unaddressed in this context. This article therefore concludes (Section 5) by arguing that an application-oriented construction of the ‘linking’ between school effectiveness and school improvement also presupposes the reflection of basic theoretical and methodological problems in the respective research areas.

3. Models for Linking School Effectiveness Research and School Improvement: CF-ESI and DMEE/DASI

The Comprehensive Framework of Effective School Improvement (CF-ESI) [] and the Dynamic Model of Educational Effectiveness (DMEE) [,] are two influential models with slightly different foci. While CF-ESI is designed to focus more on linking school effectiveness and school improvement research, DMEE is designed to establish a link between effectiveness research and improvement practice. The “Dynamic Approach to School Improvement” model (DASI) [,] was developed following DMEE and, in principle, represents an implementation concept on the basis of which the adaptation of DMEE at a school level (policy, administration and individual school) is to be made possible. In the following, the design principles and the objectives of both models will be summarised so that they can be analysed in a final step regarding their strategies for dealing with the causality problem.

3.1. Comprehensive Framework of Effective School Improvement (CF-ESI)

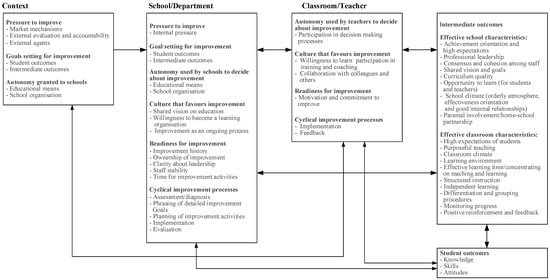

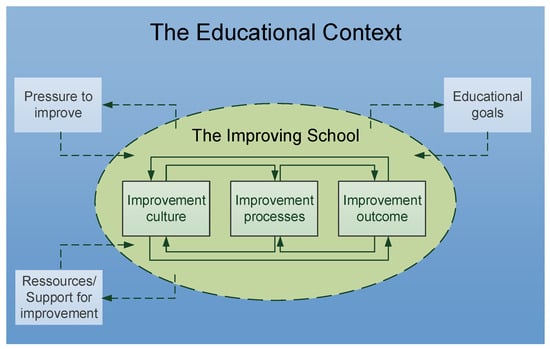

The CF-ESI model was developed as part of the international effective school improvement project. This project aimed to test whether effective school improvement can be understood and modelled in the same way across participating countries. In this way, school effectiveness and school improvement should be brought closer together []. In its original form, CF-ESI was planned and formulated as a cross-national model (“ESI model”) (cf. Figure 1 and Figure 2) [] (see e.g., Reezigt, 2001). The framework model addresses research, as well as school practice and politics. These should use the framework model for the implementation and analysis of school improvement, as it generates information about relevant factors for school improvement at the levels that are significant [].

Figure 1.

A model for effective school improvement [] (Reezigt, 2001, p. 33).

Figure 2.

Comprehensive Framework of Effective School Improvement [] (CF-ESI; Stoll et al., 2006; p. 100).

In this case, the model improvement is based on the analyses of theories which, according to the authors, are relevant for effective school improvement [,,]: theories of school improvement, curriculum theories, behavioural theories, organisational theories, theories of organisational learning and learning organisations, decision-making theories, theories of rational planning, economic market models, cybernetics, autopoiesis, approaches of the school effectiveness paradigm and theories of human resource management. The improvement of the model is also based on case studies of existing programmes of effective school improvement in eight different countries.

The model was tested by applying it to programmes of effective school improvement that were not used for the improvement of the model. During conferences in the individual countries, participants were able to discuss the strengths and weaknesses of the model and make suggestions for revision (e.g., in the form of a questionnaire on the significance of the factors considered and the characteristics of the model). As a result of these discussions, major differences in the significance and influence of individual factors between the countries became apparent and the idea of a model was discarded in favour of a more open framework (“Comprehensive Framework of Effective School Improvement”). CF-ESI is a framework model that provides a more general description of school improvement and the factors relevant to it and takes greater account of the dynamic aspect of school improvement [,]. As a framework model, it offers a necessary abstract formulation of the relevant factors to ensure greater flexibility in interpreting their influences and thus usability of the framework model for different national education systems [,].

The framework model distinguishes between the school and context levels in terms of a multi-level structure and assumes that reciprocal relationships exist within and between the levels (see Figure 1). The authors assume that improvements, even if initiated at other levels (e.g., teaching), must ultimately take place at school level in order to lead to effective school improvement [].

According to the authors, the importance of the context level for school improvement becomes particularly clear from an international perspective, as there are strong differences in contexts between countries [,]. Important influencing factors, which can be differentiated for different countries, are the pressure to improve, external educational goals and the resources/support for improvement (cf. Table 1). Reezigt [] describes the pressure to improve as an important initial condition for school improvement. School improvement goals should also be in line with general educational goals. School improvement is also influenced by the resources and support available to the schools in question. Table 1 shows key factors for these three overarching areas.

Table 1.

Central factors at context level [] (Stoll et al., 2006, p. 101).

The improving school is also characterised by three key concepts: improvement culture, improvement processes and improvement outcomes. These overarching concepts can be assigned factors, which are reflected in Table 2.

Table 2.

Central factors at school level [] (Stoll et al., 2006, p. 102).

The link between school effectiveness and school improvement is made in the model by considering different theories and programmes and in the choice of target criteria (for evaluation). According to the authors, for the evaluation of effective school improvement, both outcomes at student level (as the main goal of school and effectiveness criterion) and changes in factors at school and classroom level (as intermediate outcomes and improvement criterion) need to be considered. By considering both criteria, CF-ESI relates the primary objects of observation of school effectiveness research and school improvement research to each other.

3.2. Dynamic Model of Educational Effectiveness (DMEE) and Dynamic Approach to School Improvement (DASI)

Creemers and Kyriakides’ DMEE is a further improvement of Creemers’ Comprehensive Model of Educational Effectiveness [,], in which additional validation studies of this initial model [,,,,,] were considered in the modelling. The first version of DMEE was proposed in 2008 []. In 2020, a slightly revised version was published to consider the research knowledge base of the previous decade []. Unlike CF-ESI, which had an explicit school improvement orientation, DMEE is rooted in school effectiveness research. Strictly speaking, Creemers and Kyriakides do not speak of “school effectiveness”, but of “educational effectiveness”, since according to their understanding, school effectiveness only focuses on the research at school level, while “educational effectiveness” means the joint investigation of factors at different levels. Since, in our understanding, “educational effectiveness” can refer to more than a school (e.g., effectiveness of university education), we use the term “school effectiveness” here and understand it to mean the (joint) investigation of factors at different levels in relation to the effectiveness of schools. The embedding of the individual schools in the multi-level structure of school systems, as well as the influence of contextual factors, have long been taken into account in school effectiveness research. The observation of intended, as well as unintended, changes at the level of effectiveness and the indicators influencing this effectiveness over time as a ‘dynamic’ moment of effectiveness (e.g., shifts in the social composition of students) represent an innovation. The consideration of the ‘dynamic’ moment requires the continuous adaptation of the measurement instruments, as well as the continuous evaluative examination of effectiveness factors at school level [,]. In terms of research methodology, longitudinal designs in particular are indicated as an adequate observation procedure.

In addition to more precise research on school effectiveness, linking school effectiveness research and school improvement practice is one of the main goals of DMEE [,,,,,]. The model tries to capture school reality more precisely and thus provides practitioners (policy makers, administrators and schools) with a more ‘realistic’ picture of school reality for the purpose of their targeted improvement. For Creemers and Kyriakides, the link between school effectiveness and school improvement ultimately lies in the applicability of the dynamic observation model in school improvement practice [,,,,]. This is to be done within the framework of DMEE by establishing both theory-guided and data-based school improvement. According to Creemers and Kyriakides, a theoretical model, such as DMEE, is a necessary condition for the usability of school effectiveness research findings in school (improvement) practice. This thus has an expanded knowledge base available as an orientation framework for effectiveness-oriented planning and implementation of improvement measures [].

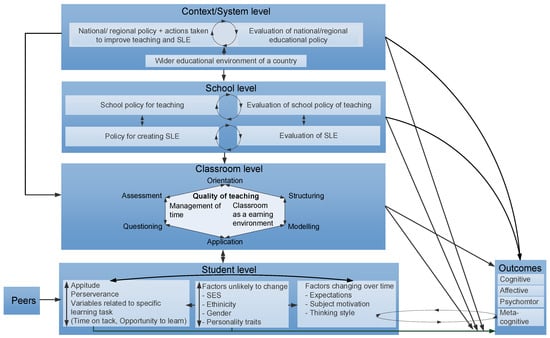

The construction of the model is based on a differentiation of context, school, class and student levels. At each of these levels, the authors define relevant factors that have a direct and indirect impact on school effectiveness (see Figure 3). The description of the different levels of DMEE focuses on teaching and learning as central processes of school and on teachers and students as the most important actors [,]. In the 2020 version of DMEE, a few revisions were made []. The interrelations of the factors described at the different levels were made more explicit in the model. For example, student characteristics (e.g., student background) may have an impact on the teaching and learning situation and vice versa. Another revision was that indirect effects of factors at system and school levels on student achievement were made more explicit, so that not only direct effects are now assumed. In addition, in the 2020 version, it is assumed that factors at teacher level, school level and system level have an impact on the relationship between student-level factors and student achievement. In the course of this, the authors [] argue that factors at teacher, school and system levels also promote equity besides quality. In the first version, a non-linear relationship between certain effectiveness factors and student achievement was assumed. Against the background of empirical studies, this assumption was revised because no evidence of curvilinear relationships was found. Furthermore, the central idea of describing effectiveness as a dynamic concept was further developed by making the situational character of system and school level factors more explicit. This means that only changes in these factors are assumed to have direct or indirect impact on the improvement of the school effectiveness status. In the following section, the different levels of DMEE will be presented.

Figure 3.

Dynamic Model of Educational Effectiveness [,] (DMEE; illustration by the authors based on Creemers & Kyriakides, 2008b and Kyriakides et al., 2020).

At student level, Creemers and Kyriakides distinguish between ‘time-varying’ and ‘time-invariant’ factors: ‘Time-varying’ factors are assumed to be undirected and ‘time-invariant’ factors are assumed to be directly related to student-level outcomes. The learning goals at student level are used as indicators of effectiveness in this model. They are derived from the intended curriculum []. The authors criticise the often limited choice of effectiveness criteria, which is restricted to individual cognitive components, and emphasise that the entire curriculum should be taken into account when determining the criteria of effectiveness []. The model includes cognitive, affective, meta-cognitive and psychomotor student outcomes equally. These characteristics can be used to examine the quality of a school and its handling of inequality. In the 2020 version of DMEE, the impact of peers on student-level factors was added [].

At classroom-level, only those factors are included in the model that relate directly to characteristics of teaching quality. In the first version of DMEE, the authors distinguish between eight factors. Teacher characteristics are not included in the model. The factors at class level interact with each other, as well as with the factors at student level. They all have an influence on the outcomes at student level and are influenced by the higher levels. In contrast to student-level factors, factors at class and higher levels are measured using five dimensions []. In addition to the most used measurement of factor frequency, the authors describe the equal measurement of quality, phase, differentiation and focus of the factor in order to do justice to the complexity of the characteristics and factors. In this way, the information relevant for school improvement should be taken into account. The updated 2020 version of DMEE now contains five stages of effective teaching, which are based on the factors and dimensions mentioned above []. Effective teaching is assumed to be one construct with different levels of difficulty of teaching skills. Furthermore, the updated version distinguishes between teaching and assessment skills of the teachers. The assessment skills are also differentiated in stages.

At school level, only factors that are directly related to learning and teaching are considered. Creemers and Kyriakides distinguish four factors at school level: “school policy for teaching and actions taken for improving teaching practice” (quantity, quality and learning opportunities), as well as “policy for creating the school learning environment and actions taken for improving the school learning environment (SLE)” (school culture and climate) form the first two factors. These are complemented by the corresponding two factors of evaluation of the respective policy. Actors, such as school leaders, are not part of the model at this level. For Creemers and Kyriakides, it is not decisive by whom or how activities are initiated or carried out, but only that they are carried out. School policies are to be measured by documents, such as concept papers, minutes of meetings and the like. However, much more crucial, according to Creemers and Kyriakides, are the measures taken to make school policies understandable, to make clear the resulting expectations of teachers and to support school staff in implementing school policies []. The aspect of evaluating these policies is particularly emphasised by Creemers and Kyriakides in line with their idea of data-based school improvement. The evaluation of these policies is of great importance as it is necessary for school improvement and the improvement of outcomes at student level. In the 2020 version of the model, relationships between the various effectiveness factors at school level are no longer assumed because of a lack of empirical support [].

Context level/system level factors also result from the focus of DMEE on learning and teaching. The (national/regional) policy for improving teaching and the school learning environment, as well as the evaluation of these policies, are seen as key institutional contextual factors. The third factor refers to the influence of the socio-economic context of the school on its effectiveness. This factor is differentiated into the possibilities of learning support, on the one hand, and the expectations and pressures that the school is confronted with by its environment, on the other. In the 2020 version of DMEE, this level is also referred to as system level to take differences in the functioning of schools in different school systems into account []. However, the name of this level is not used consistently throughout the new version.

However, DMEE does not answer the question of how the conceptual implementation of such theory-guided and data-based school improvement is to be ensured at the level of the action and decision-making practice of the individual school. This is not least due to the fact that this model can register changes in the effectiveness of individual indicators by observing performance outcomes, but continues to treat the complex structures of action within schools like a ‘black box’. Only the area of teaching is described in a more differentiated way. Against this background, the solution to this implementation problem proposed by Creemers and Kyriakides [,,] in the form of the “Dynamic Approach to School Improvement” (DASI) becomes visible as an intervention approach. The understanding of improvement does not start from an analysis of the established complex structures of action in a concrete individual school system, but follows a rather ‘socio-technological’ strategy based on continuous indicator-supported self-evaluation on the basis of DMEE. Thus, DASI (ideally) describes different phases of school improvement, whereby the process begins with the definition of school improvement goals. These are to be focused on or linked to the improvement of student performance. Subsequently, factors that can be improved are selected on the basis of DMEE to achieve the goal. In this context, an evaluation of the current state and the prioritisation of improvement areas take place. Afterwards, school improvement strategies are developed, implemented and checked for their success in a “final” evaluation. According to Creemers and Kyriakides, schools should be supported in this improvement process (if necessary) by an Advisory and Research Team (A&R Team), whose central function is to provide the school with research methodological knowledge and explanations, e.g., by explaining evaluation results or advising on evaluation tools and possible improvement strategies []. The team should ensure that the school moves through the phases of DASI in a conceptually sound manner and that the goal of improving student achievement can be achieved []. However, the main responsibility for the improvement process remains with the school.

4. Critical Queries

The summary description of both models already makes it clear that fundamental problems regarding the divergences in the constitution of the object, which become visible through the intended linking of school effectiveness research and school improvement practice, are not themselves the object of theoretical and methodological reflection. This applies in particular to the fact that both research areas claim to be ‘evidence-based’, which is to be achieved by using uniform methodological measurement procedures. However, theory-based reflection on the construction of the respective setting observed and the appropriateness of the methodological instruments used is largely dispensed with [,]. In our view, this results in two overarching critical questions that should be asked of both models:

- (1) In what ways do the two models construct the link between school effectiveness and school improvement?

- (2) To what extent do the models take into account any aspects of a ‘technology deficit’ or tend to reflect potential causality problems?

(a) CF-ESI

CF-ESI was developed by considering key aspects and concepts from various theories of school improvement and school effectiveness, in addition to theories from other fields (e.g., curriculum improvement, organisational theories), and identifying features through the analysis of existing (and deemed effective) school improvement programmes in the participating countries. Subsequently, the significance of the aspects was verified with the help of data from other school improvement programmes (which were not part of the model improvement), as well as feedback from experts in the different countries. To put it bluntly, CF-ESI tries to achieve the link in an eclectic way and by finding consensus. The desired link takes the shape of a synoptic and primarily additive combination of indicators and criteria from both research areas. Thus, within the framework of the model, output criteria for the evaluation of school improvement (improvement criterion), on the one hand, and performance-based output criteria for measuring effectiveness (effectiveness criterion), on the other hand, are taken into account. However, both criteria and thus the processes that generate the observed output continue to stand next to each other, but in an unconnected way. Due to this, the gain of the intended ‘linking model’, whose task, according to its own claim, would be to make the influence of the social action structures of the school on individual learning processes observable and explainable, does not become clear. How a theoretically and empirically justifiable expansion of observation possibilities can ultimately succeed on the basis of an integration of effectiveness and improvement research is not answered within the framework of CF-ESI.

(b) DMEE/DASI

DMEE takes a different approach than CF-ESI does. The first apparent difference is that DMEE does not intend to find a link at the level of research, but rather a link between school effectiveness research and school improvement practice. As Creemers and Kyriakides themselves note, the approach of the link is mainly to integrate findings from effectiveness research more closely into school improvement practice [,]. According to Creemers and Kyriakides, the findings from effectiveness research were underutilised by practitioners in the past because many models consist primarily of a compilation of lists of variables, whose validity (in terms of correlations with student performance) was also only tested in cross-sectional studies []. These lists of variables are often differentiated by context, input, process, and output levels, with the assumed cause-and-effect relationships between the levels being visualised by vector models [,,,,]. A theory-based model that, like DMEE, is oriented towards contingency-theoretical considerations on the context-related adaptivity of school improvement processes, however, aims not only at showing the complex interaction of individual variables on the different levels of the multi-level system. It must also find a research methodological way of visualising the change in social and institutional contextual variables and their influence on the variance in student performance, the reference point of adaptive school improvement strategies. Only by orienting itself to the ‘dynamic’ moment of effectiveness does DMEE supply added value for practice.

This is also the context of the evidence-based approach of the model. The factors on which DMEE is based should be able to give practitioners clues as to what they need to change in order to improve student learning performance []. The principle of linking (in the context of DMEE) is ultimately to embed the observational logic of school effectiveness research into the schools themselves. However, according to the model, this can only be achieved if DMEE and its indicator structure are used by schools as an evaluation instrument in order to arrive at methodologically valid findings about their own improvement-related status. In other words, DMEE specifically intervenes in established self-monitoring strategies of the schools.

The factor of dynamics is captured by the longitudinal nature of the data collection, supplemented by the analysis of mediated and non-linear effects across the individual levels and by a more differentiated recording of the factors. Similar methodological designs are sometimes used in quantitative school improvement research [,,,,,,] to also investigate improvements and their effects. Looking at these studies and their underlying models, it becomes clear that DMEE shows a similarity to approaches of quantitatively oriented school improvement research on a research methodological level. However, beyond the methodological references, there are no conceptual convergences, as DMEE remains an effectiveness model due to its logic of observation. In particular, factors that have an influence on the sustainable improvement capacity of the school as an organisation remain unconsidered. This also means that the role of school actors and thus action-theoretical aspects of school improvement are left out, so that questions about which actors can initiate or achieve change and which organisational framework favours this are not part of DMEE.

With Scheerens and Demeuse [], a central problem of DMEE can be exposed. They note that indicator-based knowledge about what is and what is not effective does not yet provide the necessary action knowledge to move (as efficiently as possible) from a less effective to a more effective state [,,,,,,,,,,]. When practitioners use the model, they are informed about which variables have an impact on student learning outcomes. However, they are not yet informed about which action strategies and measures can influence these variables. The claim of evidence-based school improvement processes ultimately fails due to the structural technology deficit of the school. It cannot ensure the certainty of success of school improvement measures and, accordingly, offers only little decision-making certainty in the choice of school improvement measures. Moreover, the contingency-theoretical justification of DMEE, which emphasises the influence of situational factors, contradicts an indicator-based generalisation of individual school improvement goals. Accordingly, when applying DMEE, it remains unclear which factors should be changed first by which actors [,].

This ‘blind spot’ of the model can also be found in DASI. The phases of the improvement cycle described in DASI are kept relatively general and, in principle, they are not directly linked to DMEE. Upon closer examination, DASI consists of a rather normative and programmatic description of a classic school improvement cycle that cannot be derived from DMEE. Rather, the phases of the “Institutional School Improvement Process” (ISP) by Dalin, Rolff and Buchen [] can be found without difficulty in the flow chart of DASI. In this respect, DASI is first and foremost the ‘driving force’ that is to anchor the indicator-based observation technology of DMEE in the schools.

5. Conclusions, Outlook and Further Implications

Efforts to establish a link between school effectiveness and school improvement are driven by the expectation that an evidence-based approach to school improvement can lead to increases in effectiveness in the education system. In view of the preceding analysis of relevant linking models, however, this expectation has, frankly speaking, more the character of a “hope for effectiveness”, which ultimately leads to the assumption that the effectiveness of schools can not only be validly described and predicted, but also purposefully controlled, fostered and generated. This may be a significant reason why this discussion has been ongoing for about 40 years and continues to receive new impulses.

Hence, the question remains whether the linking models are based on a ‘realistic’ picture of what occurs in everyday schooling. One apparent problem is that these models are confronted with at least two different process dimensions characterised by incommensurable processual ‘natures’: classroom interaction and organisational decision making. In reality, the two dimensions do not ‘link’ operationally in a linear or ‘technical’ manner, nor does either dimension feature processual components that provide causal linkages between process and product. One suggestion our discussion directly implies is the necessity, as a first step, to establish a more advanced and reflexive theory base and to redesign the processual complexity of education methodologically.

In view of the reported state of discussion, it seems necessary, above all, to first clarify the limits of the respective theory formation achieved and the empirical possibilities in both school effectiveness research and school improvement research before an integration of the object references and the research strategies of both approaches is possible. So far, the question is rather whether a link between the two leads to a compensation or to a complementation of their respective problems of observation. In particular, this concerns the problem of causality, which gives rise to theoretical and methodological reflection in both fields of research. In other words, the preceding discussion of the two linking models tends to indicate that what may not fit together cannot easily be made to ‘fit’ together.

In a further step, this raises questions about the possibilities and limits of research designs and research methods. If causalities within the complex structures of action of the school system do not follow a linear pattern, questions will have to be asked about the methodological possibilities for obtaining a correspondingly ‘realistic’ picture of school structures and processes. If linear chains of effects between the “loosely coupled” elements of teaching and learning [,] cannot be assumed, and processes of action in organisations cannot be understood as rational choices of action, two implications can be derived. Not only does this raise doubts about the socio-technological aspiration for an evidence-based controllability of school improvement practice, it also poses methodological problems, which, in turn, point to epistemological reflection requirements. Relevant alternative theories exist, which, for example, allow for a description of the complexity of the school as a system of action [,,], but they do not seem to be readily compatible with effectiveness research, which has so far focused on linear impact analyses.

With Scheerens and Demeuse [] in mind, the first question that needs to be asked is how the theories used in effectiveness research describe and explain why one school is more effective than another under comparable and stable contextual conditions and how the theories used in improvement research describe and explain how to move from a less effective state to a more effective one. In other words, the first step is to analyse the theoretical basis of current research practice and to look for alternatives [,,]. The consequences of this, in terms of research methodological problems and alternative research designs, need to be discussed further.

Author Contributions

Conceptualisation, T.F., M.E., F.R. and L.M.B.; writing—original draft preparation, T.F., M.E., F.R., L.M.B. and S.W.; writing—review and editing, T.F., M.E. and S.W.; visualisation, T.F.; project administration, T.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Center for School, Education, and Higher Education Research of Johannes Gutenberg-University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Reynolds, D.; Sammons, P.; Fraine, B.; van Damme, J.; Townsend, T.; Teddlie, C.; Stringfield, S. Educational effectiveness research (EER): A state-of-the-art review. Sch. Eff. Sch. Improv. 2014, 25, 197–230.

- Bischof, L.M. Schulentwicklung und Schuleffektivität: Ihre theoretische und empirische Verknüpfung; Springer VS Imprint: Wiesbaden, Germany, 2017.

- Scheerens, J. Recapitulation and Application to School Improvement. In Educational Effectiveness and Ineffectiveness; Scheerens, J., Ed.; Springer: Dordrecht, The Netherlands, 2016; pp. 291–332.

- Scherer, R.; Nilsen, T. Closing the gaps?: Differential effectiveness and accountability as a road to school improvement. Sch. Eff. Sch. Improv. Int. J. Res. Policy Pract. 2019, 30, 255–260.

- Reynolds, D.; Neeleman, A. School Improvement Capacity. In Concept and Design Developments in School Improvement Research; Oude Groote Beverborg, A., Feldhoff, T., Maag Merki, K., Radisch, F., Eds.; Springer International Publishing: Cham, Switzerland, 2021.

- Dreeben, R. The Nature of Teaching: Schools and the Work of Teachers; Scott, Foresman and Company: Glenview, IL, USA, 1970.

- Luhmann, N.; Schorr, K.-E. Reflexionsprobleme im Erziehungssystem, 2nd ed.; Suhrkamp: Frankfurt, Germany, 1988.

- Weick, K.E. Educational organizations as loosely coupled systems. Adm. Sci. Q. 1976, 21, 1–19.

- Weick, K.E. Administering education in loosely coupled schools. Educ. Dig. 1982, 48, 28–36.

- Clark, D.L.; Lotto, L.S.; Astuto, T.A. Effective schools and school improvement: A comparative analysis of two lines of inquiry. Educ. Adm. Q. 1984, 20, 41–68.

- Bonsen, M.; Büchter, A.; van Ophuysen, S. Im Fokus: Leistung. In Jahrbuch der Schulentwicklung: Daten, Beispiele und Perspektiven; Holtappels, H.-G., Klemm, K., Pfeiffer, H., Rolff, H.-G., Schulz-Zander, R., Eds.; Juventa: Weinheim/München, Germany, 2004; pp. 187–223.

- Brown, S.; Riddell, S.; Duffield, J. Possibilities and problems of small-scale studies to unpack the findings of large-scale studies of school effectiveness. In Merging Traditions. The Future of Research on School Effectiveness and School Improvement; Gray, J., Reynolds, D., Fitz-Gibbon, C.T., Jesson, D., Eds.; Cassell: London, UK, 1996; pp. 93–120.

- Gray, J.; Reynolds, D.; Fitz-Gibbon, C.T.; Jesson, D. (Eds.) Merging Traditions. The Future of Research on School Effectiveness and School Improvement; Cassell: London, UK, 1996.

- Harris, A. Contemporary perspectives on school effectiveness and school improvement. In School Effectiveness and School Improvement: Alternative Perspectives; Harris, A., Bennett, N., Eds.; Continuum: London, UK, 2001; pp. 7–25.

- Reynolds, D.; Stoll, L. Merging school effectiveness and school improvement. In Making Good Schools: Linking School Effectiveness and School Improvement; Reynolds, D., Bollen, R., Creemers, B.P.M., Hopkins, D., Stoll, L., Lagerweij, N., Eds.; Routledge: London, UK, 1996; pp. 94–112.

- Teddlie, C.; Stringfield, S. A history of school effectiveness and improvement research in the USA focusing on the past quarter century. In International Handbook of School Effectiveness and Improvement; Townsend, T., Ed.; Springer: Dordrecht, The Netherlands, 2007; pp. 131–166.

- Creemers, B.P.M. From school effectiveness and school improvement to effective school improvement: Background, theoretical analysis, and outline of the empirical study. Educ. Res. Eval. 2002, 8, 343–362.

- Creemers, B.P.M. Educational effectiveness and improvement: The development of the field in Mainland Europe. In International Handbook of School Effectiveness and Improvement; Townsend, T., Ed.; Springer: Dordrecht, The Netherlands, 2007; pp. 223–242.

- Reezigt, G.J.; Creemers, B.P.M. A comprehensive framework for effective school improvement. Sch. Eff. Sch. Improv. 2005, 16, 407–424.

- Reynolds, D.; Teddlie, C.; Hopkins, D.; Stringfield, S. Linking School Effectiveness and School Improvement. In The International Handbook of School Effectiveness Research; Teddlie, C., Reynolds, D., Eds.; Falmer Press: London, UK, 2000; pp. 206–231.

- Creemers, B.P.M.; Reezigt, G.J. School effectiveness and school improvement: Sustaining links. Sch. Eff. Sch. Improv. 1997, 8, 396–429.

- Reynolds, D.; Hopkins, D.; Stoll, L. Linking school effectiveness knowledge and school improvement practice: Towards a synergy. Sch. Eff. Sch. Improv. 1993, 4, 37–58.

- Stoll, L. Linking school effectiveness and school improvement: Issues and possibilities. In Merging Traditions. The Future of Research on School Effectiveness and School Improvement; Gray, J., Reynolds, D., Fitz-Gibbon, C.T., Jesson, D., Eds.; Cassell: London, UK, 1996; pp. 51–73.

- Stoll, L.; Creemers, B.P.M.; Reezigt, G.J. Effective school improvement. In Improving Schools and Educational Systems: International Perspectives, 1st ed.; Harris, A., Chrispeels, J.H., Eds.; Routledge: London, UK, 2006; pp. 90–104.

- Creemers, B.P.M.; Kyriakides, L. The Dynamics of Educational Effectiveness: A Contribution to Policy, Practice and Theory in Contemporary Schools; Routledge: London, UK, 2008.

- Creemers, B.; Kyriakides, L. Developing, testing, and using theoretical models for promoting quality in education. Sch. Eff. Sch. Improv. 2015, 26, 102–119.

- Kyriakides, L.; Creemers, B.P.; Panayiotou, A.; Charalambous, E. Quality and Equity in Education; Routledge: London, UK, 2020.

- Scheerens, J. Improving School Effectiveness; Unesco, International Institute for Educational Planning: Paris, France, 2000.

- Fend, H. “Gute Schulen—Schlechte Schulen”. Die einzelne Schule als pädagogische Handlungseinheit. Die Dtsch. Sch. 1986, 78, 275–293.

- Fend, H. Schule als pädagogische Handlungseinheit im Kontext. In Schulgestaltung: Aktuelle Befunde und Perspektiven der Schulqualitäts-und Schulentwicklungsforschung. Grundlagen der Qualität von Schule 2, 1st ed.; Steffens, U., Maag Merki, K., Fend, H., Eds.; Waxmann: Münster, Germany, 2017; pp. 85–102.

- Bonsen, M.; Bos, W.; Rolff, H.-G. Zur Fusion von Schuleffektivitäts- und Schulentwicklungsforschung. In Jahrbuch der Schulentwicklung: Daten, Beispiele und Perspektiven; Bos, W., Holtappels, H.-G., Pfeiffer, H., Rolff, H.-G., Schulz-Zander, R., Eds.; Juventa: Weinheim/München, Germany, 2008; pp. 11–39.

- Gray, J.; Hopkins, D.; Reynolds, D.; Wilcox, B.; Farrell, S.; Jesson, D. Improving Schools: Performance and Potential; Open University Press: Buckingham, UK; Philadelphia, PA, USA, 1999.

- Hopkins, D. School Improvement for Real; RoutledgeFalmer: London, UK; New York, NY, USA, 2001.

- Feldhoff, T.; Radisch, F. Why must everything be so complicated? Demands and challenges on methods for analyzing school improvement processes. In Concept and Design Developments in School Improvement Research; Oude Groote Beverborg, A., Feldhoff, T., Maag Merki, K., Radisch, F., Eds.; Springer International Publishing: Cham, Switzerland, 2021.

- Bonsen, M.; Bos, W.; Rolff, H.-G. On the fusion of school effectiveness and school improvement research. In Yearbook of school improvement, 15th ed.; Bos, W., Holtappels, H.G., Pfeiffer, H., Rolff, H.-G., Schulz-Zander, R., Eds.; Juventa: Weinheim, Germany, 2008; pp. 11–39.

- Bennett, N.; Harris, A. Hearing truth from power? Organisation theory, school effectiveness and school improvement. Sch. Eff. Sch. Improv. 1999, 10, 533–550.

- Berkemeyer, N.; Bos, W.; Gröhlich, C. Schulentwicklungsprozesse in Längsschnittstudien. In Handbuch Schulentwicklung: Theorie—Forschungsbefunde—Entwicklungsprozesse—Methodenrepertoire, 1st ed.; Bohl, T., Helsper, W., Holtappels, H.-G., Schelle, C., Eds.; UTB: Stuttgart, Germany, 2010; pp. 147–150.

- Bischof, L.; Feldhoff, T.; Hochweber, J.; Klieme, E. Untersuchung von Schulentwicklung anhand von Schuleffektivitätsdaten—“Yes we can?!”. In Aktuelle Befunde und Perspektiven der Schulqualitäts-und Schulentwicklungsforschung. Grundlagen der Qualität von Schule 2; Steffens, U., Maag Merki, K., Fend, H., Eds.; Waxmann: Münster, Germany, 2017; pp. 287–308.

- Reezigt, G.J. A Framework for Effective School Improvement; GION, Institute for Educational Research, University of Groningen: Groningen, The Netherlands, 2001.

- Stoll, L.; Wikeley, F. Issues on linking school effectiveness and school improvement. In Effective School Improvement: State of the Art: Contribution to a Discussion; Hoeben, W.T.J.G., Ed.; GION, Institute for Educational Research, University of Groningen: Groningen, The Netherlands, 1998; pp. 27–53.

- Creemers, B.P.M.; Kyriakides, L. Improving Quality in Education: Dynamic Approaches to School Improvement; Routledge: Abingdon, UK; New York, NY, USA, 2012.

- Hoeben, W.T.J.G. Linking different theoretical traditions: Towards a comprehensive framework for effective school improvement. In Effective School Improvement: State of the Art: Contribution to a Discussion; Hoeben, W.T.J.G., Ed.; GION, Institute for Educational Research, University of Groningen: Groningen, The Netherlands, 1998; pp. 135–218.

- Scheerens, J.; Demeuse, M. The theoretical basis of the effective school improvement model (ESI). Sch. Eff. Sch. Improv. 2005, 16, 373–385.

- Creemers, B.P.M. The Effective Classroom; Cassell: London, UK, 1994.

- Creemers, B.P.M. The goals of school effectiveness and school improvement. In Making Good Schools: Linking School Effectiveness and School Improvement; Reynolds, D., Bollen, R., Creemers, B.P.M., Hopkins, D., Stoll, L., Lagerweij, N., Eds.; Routledge: London, UK, 1996; pp. 21–35.

- de Jong, R.; Westerhof, K.; Kruiter, J. Empirical evidence of a comprehensive model of school effectiveness: A multilevel study in mathematics in the 1st year of junior general education in the Netherlands. Sch. Eff. Sch. Improv. 2004, 15, 3–31.

- Kyriakides, L. Extending the comprehensive model of educational effectiveness by an empirical investigation. Sch. Eff. Sch. Improv. 2005, 16, 103–152.

- Kyriakides, L.; Campbell, R.J.; Gagatsis, A. The significance of the classroom effect in primary schools: An application of Creemers’ comprehensive model of educational effectiveness. Sch. Eff. Sch. Improv. 2000, 11, 501–529.

- Reezigt, G.J.; Guldemond, H.; Creemers, B.P.M. Empirical validity for a comprehensive model on educational effectiveness. Sch. Eff. Sch. Improv. 1999, 10, 193–216.

- Creemers, B.P.M.; Kyriakides, L. A theoretical based approach to educational improvement: Establishing links between educational effectiveness research and school improvement. Jahrb. Schulentwickl. 2008, 15, 41–61.

- Creemers, B.P.M.; Kyriakides, L. Using the Dynamic Model to develop an evidence-based and theory-driven approach to school improvement. Ir. Educ. Stud. 2010, 29, 5–23.

- Scheerens, J. The use of theory in school effectiveness research revisited. Sch. Eff. Sch. Improv. 2013, 24, 1–38.

- Scheerens, J. Theories on educational effectiveness and ineffectiveness. Sch. Eff. Sch. Improv. 2015, 26, 10–31.

- Ditton, H. Qualitätskontrolle und Qualitätssicherung in Schule und Unterricht: Ein Überblick zum Stand der empirischen Forschung. In Qualität und Qualitätssicherung im Bildungsbereich: Schule, Sozialpädagogik, Hochschule; Helmke, A., Hornstein, W., Terhart, E., Eds.; Beltz: Weinheim, Germany, 2000; pp. 73–92.

- Fend, H. Qualität im Bildungswesen: Schulforschung zu Systembedingungen, Schulprofilen und Lehrerleistung; Juventa: Weinheim, Germany, 1998.

- Scheerens, J.; Bosker, R.J. The Foundations of Educational Effectiveness; Pergamon: Oxford, UK, 1997.

- Stufflebeam, D.L. Evaluation als Entscheidungshilfe. In Evaluation—Beschreibung und Bewertung von Unterricht, Curricula und Schulversuchen; Wulf, C., Ed.; Piper: München, Germany, 1972.

- Teddlie, C.; Reynolds, D. (Eds.) The International Handbook of School Effectiveness Research; Falmer Press: London, UK, 2000.

- Hallinger, P.; Heck, R.H. Conceptual and methodological issues in studying school leadership effects as a reciprocal process. Sch. Eff. Sch. Improv. 2011, 22, 149–173.

- Heck, R.H.; Hallinger, P. Collaborative Leadership Effects on School Improvement: Integrating Unidirectional- and Reciprocal-Effects Models. Elem. Sch. J. 2010, 111, 226–252.

- Heck, R.H.; Chang, J. Examining the Timing of Educational Changes Among Elementary Schools After the Implementation of NCLB. Educ. Adm. Q. 2017, 54, 0013161X1771148.

- Heck, R.H.; Reid, T. School leadership and school organization: Investigating their effects on school improvement in reading and math. Z Erzieh. 2020, 23, 925–954.

- Oude Groote Beverborg, A.; Feldhoff, T.; Maag Merki, K.; Radisch, F. (Eds.) Concept and Design Developments in School Improvement Research; Springer International Publishing: Cham, Switzerland, 2021.

- Sleegers, P.J.; Thoonen, E.E.; Oort, F.J.; Peetsma, T.T. Changing classroom practices: The role of school-wide capacity for sustainable improvement. J. Educ. Admin 2014, 52, 617–652.

- Thoonen, E.E.; Sleegers, P.J.; Oort, F.J.; Peetsma, T.T. Building school-wide capacity for improvement: The role of leadership, school organizational conditions, and teacher factors. Sch. Eff. Sch. Improv. 2012, 23, 441–460.

- Cuban, L. Effective schools: A friendly but cautionary note. Phi Delta Kappan 1983, 64, 695–696.

- Ditton, H. Elemente eines Systems der Qualitätssicherung im schulischen Bereich. In Qualitätssicherung im Bildungswesen: Reihe: Erfurter Studien zur Entwicklung des Bildungswesens; Weishaupt, H., Ed.; Pädagogische Hochschule: Erfurt, Germany, 2000; pp. 13–35.

- Fullan, M.G.; Stiegelbauer, S.M. The New Meaning of Educational Change, 2nd ed.; Cassell: London, UK, 1991.

- Hargreaves, A. Changing Teachers, Changing Times: Teachers’ Work and Culture in the Postmodern Age; Cassell: London, UK, 1994.

- Lezotte, L.W. School improvement based on the effective schools research. Int. J. Educ. Res. 1989, 13, 815–825.

- Newmann, F.M. Student engagement in academic work: Expanding the perspective on secondary school effectiveness. In Rethinking Effective Schools: Research and Practice; Bliss, J.R., Firestone, W.A., Richards, C.E., Eds.; Prentice-Hall: Hoboken, NJ, USA, 1991; pp. 58–75.

- Reynolds, D. School effectiveness: Past, present and future directions. In Schulentwicklung und Schulwirksamkeit. Systemsteuerung, Bildungschancen und Entwicklung der Schule; Holtappels, H.-G., Höhmann, K., Eds.; Juventa: München, Germany, 2005; pp. 11–25.

- Stoll, L.; Fink, D. Effecting school change: The Halton approach. Sch. Eff. Sch. Improv. 1992, 3, 19–41.

- Fullan, M.G. Change processes and strategies at the local level. Elem. Sch. J. 1985, 85, 391–421.

- Dalin, P.; Rolff, H.-G.; Buchen, H. Institutioneller Schulentwicklungs-Prozeß: Ein Handbuch, 4th ed.; Verlag für Schule und Weiterbildung: Bönen/Westf, Germany, 1998.

- Fend, H. Neue Theorie der Schule: Einführung in das Verstehen von Bildungssystemen, 2nd ed.; Springer VS Verlag: Wiesbaden, Germany, 2008.

- Fend, H. Schule Gestalten: Systemsteuerung, Schulentwicklung und Unterrichtsqualität, 1st ed.; Springer VS Verlag: Wiesbaden, Germany, 2008.

- Luhmann, N.; Schorr, K.-E. Das Technologiedefizit der Erziehung und die Pädagogik. Z. Pädagogik 1979, 25, 345–365.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).