An Interval AHP Technique for Classroom Teaching Quality Evaluation

Abstract

1. Introduction

2. Preliminaries

2.1. Interval Number and Interval Judgment Matrix

2.2. Analytic Hierarchy Process

2.3. Hierarchical Evaluation Structure: Reformed Teaching Observation Protocol

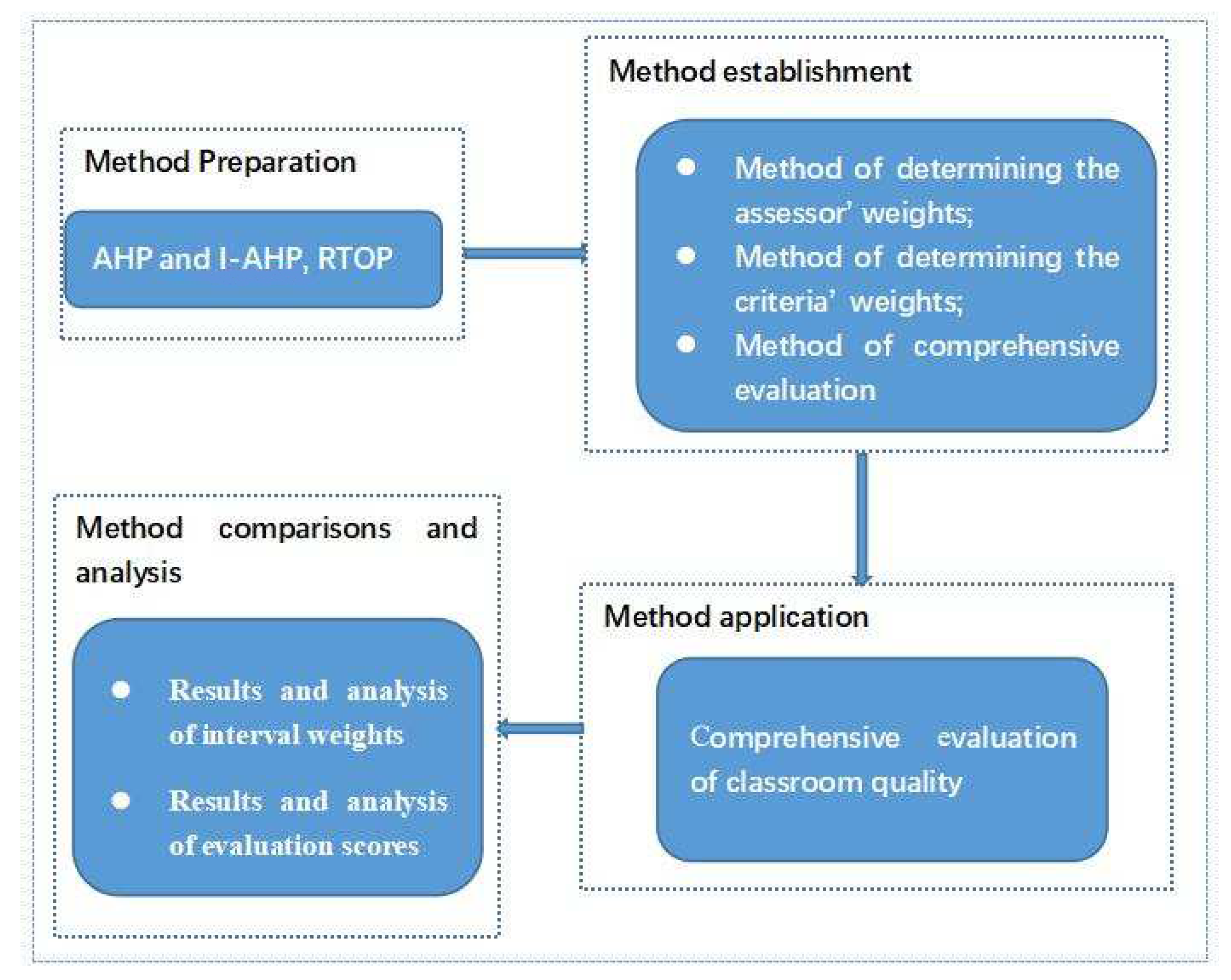

3. Methodology

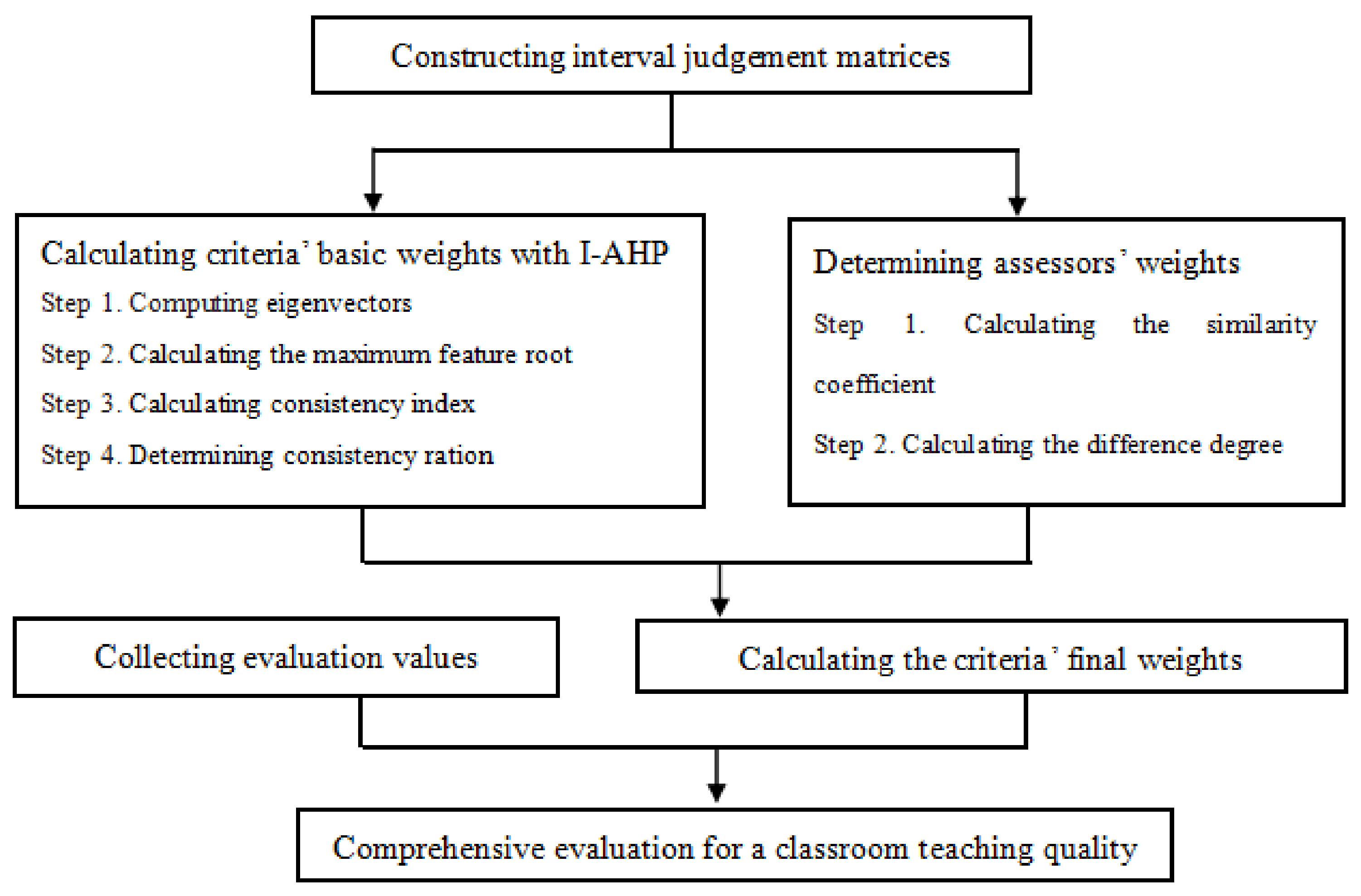

3.1. Determining the Weights of the Evaluation Criteria with I-AHP

3.1.1. Constructing Interval Judgment Matrices

3.1.2. Calculating the Basic Weights of the Criteria

3.2. Determining the Assessors’ Weights

3.2.1. Calculating the Similarity Coefficient of Assessors’ Evaluation

3.2.2. Calculating the Difference of Assessors’ Evaluation

3.2.3. Calculating the Weights of the Assessors

3.2.4. Calculating the Final Weights of the Criteria

3.3. Comprehensive Evaluation Model

4. Case Study

4.1. Evaluation Object and Subject

4.2. Constructing Interval Judgment Matrices

4.3. Calculating the Weights of the Factors

4.3.1. Calculating the Basic Weights of the Factors

4.3.2. Calculating the Weights of the Factors

4.4. Assessors’ Weights

4.4.1. Calculating the Similarity Coefficient of Assessors’ Evaluation

4.4.2. Calculating the Degree of Difference of Assessors’ Evaluations and the Weights of the Assessors

4.5. Final Weights of the Factors

5. Comprehensive Evaluation with Interval Numbers

5.1. Evaluation Standard and Data Collection

5.2. Comprehensive Evaluation

6. Results and Analysis

6.1. Results and Analysis of Interval Weights

6.1.1. Ranking of Interval Weights

6.1.2. Analysis of the Ranking Results

6.2. Results and Analysis of Aggregated Scores

6.2.1. Ranking of Aggregated Scores

6.2.2. Analysis of Total Aggregated Scores

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Interval Reciprocal Judgment Matrices for Items


| Value of Importance | Comparative Judgment |
|---|---|
| 1 | One element is as important as the other |
| 3 | One element is slightly more important than the other |
| 5 | One element is strongly more important than the other |
| 7 | One element is very strongly more important than the other |
| 9 | One element is extremely more important than the other |
| 2, 4, 6, 8 | Intermediate values between the two adjacent judgments above |
| Reciprocal | If the importance ratio of one element to another is $a$, the ratio of the latter to the former is $1/a$ |
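
In interval AHP the reciprocal rule in the last row is usually applied to interval judgments: if element i is judged [l, u] times as important as element j, the mirrored entry is [1/u, 1/l]. The sketch below fills an interval judgment matrix from upper-triangle judgments under that assumption. The function name `interval_reciprocal_matrix` and the sample judgments are hypothetical; they are not taken from the paper.

```python
from fractions import Fraction

# An interval judgment [lo, hi] stored as a pair of exact fractions.
Interval = tuple[Fraction, Fraction]


def interval_reciprocal_matrix(
    n: int, upper: dict[tuple[int, int], tuple[int, int]]
) -> list[list[Interval]]:
    """Fill an n x n interval judgment matrix from upper-triangle judgments.

    Diagonal entries are [1, 1]; for i < j the judgment a_ij = [lo, hi]
    is stored as given and a_ji is set to its reciprocal [1/hi, 1/lo].
    Pairs missing from `upper` stay at [1, 1] (equal importance).
    """
    one: Interval = (Fraction(1), Fraction(1))
    m = [[one] * n for _ in range(n)]
    for (i, j), (lo, hi) in upper.items():
        lo, hi = Fraction(lo), Fraction(hi)
        if not (0 <= i < j < n) or not (0 < lo <= hi):
            raise ValueError(f"bad judgment at ({i}, {j}): [{lo}, {hi}]")
        m[i][j] = (lo, hi)
        m[j][i] = (1 / hi, 1 / lo)
    return m


# Hypothetical interval judgments for three elements (not from the paper).
judgments = {(0, 1): (2, 4), (0, 2): (5, 7), (1, 2): (1, 3)}
A = interval_reciprocal_matrix(3, judgments)
for row in A:
    print(["[" + ", ".join(str(x) for x in cell) + "]" for cell in row])
```

Exact fractions are used so that reciprocals such as 1/7 are stored without rounding before any weights are derived.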

| Target Level | Factor Level (F) | Item Level (I) |
|---|---|---|
| Teaching quality evaluation | Lesson Design and Implementation | Respect students’ preconceptions and knowledge of mathematics |
| | | Form mathematics learning groups |
| | | Explore before formal presentation |
| | | Seek alternative approaches different from those in textbooks |
| | | Adopt students’ ideas in teaching |
| | Content: Propositional Knowledge | Involve fundamental concepts of mathematics |
| | | Promote coherent understanding of mathematical concepts |
| | | The teacher has a solid grasp of the content (especially for unrelated questions) |
| | | Encourage abstraction (mathematical models or formulas) |
| | | Emphasize the connection between mathematics and other disciplines or social life |
| | Content: Procedural Knowledge | Students use models, formulas, and graphics to express their understanding |
| | | Students make predictions, assumptions, or estimates |
| | | Make critical inferences or estimates of results |
| | | Students reflect on their learning in mathematics class |
| | | Students infer or question corresponding conclusions, concepts, and formulas |
| | Classroom Culture: Communicative Interactions | Students communicate their understanding and ideas in various ways |
| | | Teachers’ questions lead students to think differently about mathematics |
| | | Students actively discuss mathematics problems |
| | | The direction of the class is determined by students’ discussion |
| | | Students actively express their views without being ridiculed |
| | Classroom Culture: Student/Teacher Relationships | Encourage students to actively participate in discussion |
| | | Encourage students to solve mathematical problems in many ways |
| | | The teacher is patient when students think about problems or complete assignments |
| | | When students investigate or study, the teacher acts as a resource |
| | | The teacher listens carefully when students discuss and express their views |

| n | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RI | 0.00 | 0.00 | 0.58 | 0.90 | 1.12 | 1.24 | 1.32 | 1.41 | 1.45 | 1.49 | 1.51 | 1.48 |
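
The RI values feed the standard AHP consistency check, CI = (λmax − n)/(n − 1) and CR = CI/RI. The sketch below estimates λmax and the normalized principal eigenvector by power iteration (an assumed numerical method; the paper's own routine is not shown in this section) and then computes CR. With λmax = 5.2669 and n = 5 it gives 0.0596, the fourth-column value in Assessor No.1's first row of the assessor table below. The names `principal_eigen` and `consistency_ratio` are hypothetical.

```python
# Random index values copied from the RI table above (n = matrix order).
RI = {1: 0.00, 2: 0.00, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24,
      7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49, 11: 1.51, 12: 1.48}


def principal_eigen(a: list[list[float]], iters: int = 1000, tol: float = 1e-12):
    """Approximate lambda_max and the principal eigenvector (summing to 1) by power iteration."""
    n = len(a)
    w = [1.0 / n] * n
    for _ in range(iters):
        aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        new_w = [x / total for x in aw]
        converged = max(abs(x - y) for x, y in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    # With w summing to 1, A w = lambda w implies sum(A w) = lambda.
    lam = sum(sum(a[i][j] * w[j] for j in range(n)) for i in range(n))
    return lam, w


def consistency_ratio(lam_max: float, n: int) -> float:
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); orders 1 and 2 return 0."""
    if RI[n] == 0:
        return 0.0
    return (lam_max - n) / (n - 1) / RI[n]


# A perfectly consistent matrix built from weights (0.5, 0.3, 0.2): a_ij = w_i / w_j.
target = [0.5, 0.3, 0.2]
A = [[wi / wj for wj in target] for wi in target]
lam, w = principal_eigen(A)
print(round(lam, 6), [round(x, 6) for x in w])

# The assessor table's CR values follow from lambda_max with n = 5 and RI = 1.12.
print(round(consistency_ratio(5.2669, 5), 4))  # 0.0596
```

A consistent matrix returns λmax = n and CR ≈ 0; the usual acceptance rule in AHP is CR < 0.1, which every row of the assessor table satisfies.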

| Assessor | λmax | | CR | Weight Vector |
|---|---|---|---|---|
| No.1 | 5.2669 | 0.9779 | 0.0596 | (0.3033, 0.1160, 0.0706, 0.4004, 0.1056) |
| | 5.0958 | 0.9958 | 0.0214 | (0.4482, 0.1484, 0.0757, 0.2365, 0.0690) |
| No.2 | 5.2148 | 0.9896 | 0.0479 | (0.1424, 0.3161, 0.2915, 0.1143, 0.1170) |
| | 5.3465 | 0.9813 | 0.0773 | (0.2607, 0.3275, 0.2450, 0.0890, 0.0674) |
| No.3 | 5.3339 | 0.9733 | 0.0745 | (0.1674, 0.0814, 0.0993, 0.3766, 0.2527) |
| | 5.2508 | 0.9775 | 0.0560 | (0.2856, 0.1094, 0.1224, 0.3050, 0.1509) |
| No.4 | 5.2592 | 0.9804 | 0.0579 | (0.0655, 0.1221, 0.3655, 0.2712, 0.1370) |
| | 5.4217 | 0.9615 | 0.0941 | (0.1151, 0.1457, 0.3793, 0.2202, 0.1201) |
| No.5 | 5.3621 | 0.9582 | 0.0808 | (0.2589, 0.2254, 0.0958, 0.1962, 0.1767) |
| | 5.4353 | 0.9532 | 0.0972 | (0.3487, 0.2395, 0.0895, 0.1629, 0.1177) |
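
Sections 3.2.1 and 4.4.1 compute a similarity coefficient between assessors, but its formula is not reproduced in this section. Cosine similarity is one common choice and is assumed in the sketch below, applied to the first weight vector listed for each assessor in the table above. The function name is hypothetical, and the printed values are an illustration, not the paper's coefficients.

```python
import math

# First weight vector listed for each assessor in the table above.
vectors = {
    "No.1": (0.3033, 0.1160, 0.0706, 0.4004, 0.1056),
    "No.2": (0.1424, 0.3161, 0.2915, 0.1143, 0.1170),
    "No.3": (0.1674, 0.0814, 0.0993, 0.3766, 0.2527),
    "No.4": (0.0655, 0.1221, 0.3655, 0.2712, 0.1370),
    "No.5": (0.2589, 0.2254, 0.0958, 0.1962, 0.1767),
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length weight vectors (1 = same direction)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(x * x for x in v))
    return dot / (norm_u * norm_v)


# Print the similarity of every unordered pair of assessors.
names = list(vectors)
for i, a in enumerate(names):
    for b in names[i + 1:]:
        print(a, b, round(cosine_similarity(vectors[a], vectors[b]), 4))
```

Cosine similarity ignores overall scale, so two assessors who rank the factors in the same proportions score 1 even if their vectors are normalized differently.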

| Factor | Lower Weight | Upper Weight |
|---|---|---|
| | 0.1936 | 0.3030 |
| | 0.1747 | 0.1940 |
| | 0.1777 | 0.1679 |
| | 0.2695 | 0.2043 |
| | 0.1577 | 0.1057 |

| Factor | Lower Weight | Upper Weight |
|---|---|---|
| | 0.1990 | 0.3108 |
| | 0.1795 | 0.1990 |
| | 0.1826 | 0.1722 |
| | 0.2769 | 0.2095 |
| | 0.1620 | 0.1085 |
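
Comparing the two Factor / Lower Weight / Upper Weight tables above, each column of the second matches, to within about 1e-4, the corresponding column of the first divided by its own sum (0.9732 for the lower weights, 0.9749 for the upper weights). The sketch below performs that check. Reading it as the paper's normalization step is an inference from the numbers, not a procedure stated in this section; `normalize` is a hypothetical helper.

```python
# Lower and upper basic weights from the first factor table (rows in table order).
basic_lower = [0.1936, 0.1747, 0.1777, 0.2695, 0.1577]
basic_upper = [0.3030, 0.1940, 0.1679, 0.2043, 0.1057]

# Weights reported in the second factor table.
reported_lower = [0.1990, 0.1795, 0.1826, 0.2769, 0.1620]
reported_upper = [0.3108, 0.1990, 0.1722, 0.2095, 0.1085]


def normalize(values):
    """Scale a list of non-negative numbers so that it sums to 1."""
    total = sum(values)
    return [v / total for v in values]


# Lower and upper bounds are normalized independently of each other.
lower = normalize(basic_lower)
upper = normalize(basic_upper)
for lo, hi, rlo, rhi in zip(lower, upper, reported_lower, reported_upper):
    print(f"{lo:.4f} vs {rlo:.4f}   {hi:.4f} vs {rhi:.4f}")
```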

| Factor | Lower Weight | Upper Weight | Item | Lower Weight | Upper Weight |
|---|---|---|---|---|---|
| | 0.1990 | 0.3108 | | 0.1243 | 0.1717 |
| | | | | 0.2183 | 0.2497 |
| | | | | 0.2604 | 0.2516 |
| | | | | 0.2487 | 0.2128 |
| | | | | 0.1482 | 0.1142 |
| | 0.1795 | 0.1990 | | 0.1282 | 0.1914 |
| | | | | 0.1439 | 0.1768 |
| | | | | 0.3149 | 0.3012 |
| | | | | 0.2101 | 0.1684 |
| | | | | 0.2029 | 0.1623 |
| | 0.1826 | 0.1722 | | 0.0878 | 0.1460 |
| | | | | 0.2063 | 0.2454 |
| | | | | 0.2499 | 0.2462 |
| | | | | 0.3182 | 0.2549 |
| | | | | 0.1378 | 0.1075 |
| | 0.2769 | 0.2095 | | 0.2008 | 0.2646 |
| | | | | 0.1628 | 0.1938 |
| | | | | 0.1574 | 0.1475 |
| | | | | 0.2054 | 0.1758 |
| | | | | 0.2737 | 0.2183 |
| | 0.1620 | 0.1085 | | 0.2002 | 0.2694 |
| | | | | 0.2300 | 0.2444 |
| | | | | 0.1802 | 0.1816 |
| | | | | 0.2209 | 0.1892 |
| | | | | 0.1687 | 0.1155 |
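
In standard AHP, an item's global weight is its local weight multiplied by the weight of its parent factor. Whether the paper forms global item weights is not shown here; the sketch below assumes the same endpoint-wise product (lower × lower, upper × upper) that reproduces the Aggregated Score column of the comprehensive evaluation table, and uses the first factor group of the table above. `compose` is a hypothetical helper.

```python
# Factor weight and local item weights for the first factor group of the table above.
factor = (0.1990, 0.3108)
items = [(0.1243, 0.1717), (0.2183, 0.2497), (0.2604, 0.2516),
         (0.2487, 0.2128), (0.1482, 0.1142)]


def compose(factor_w, item_ws):
    """Endpoint-wise product of a factor interval weight with each item interval weight."""
    f_lo, f_hi = factor_w
    return [(f_lo * lo, f_hi * hi) for lo, hi in item_ws]


# Sanity check: the local item weights of one factor should each sum to about 1.
print(round(sum(lo for lo, _ in items), 4), round(sum(hi for _, hi in items), 4))
global_weights = compose(factor, items)
for lo, hi in global_weights:
    print(f"[{lo:.4f}, {hi:.4f}]")
```

The same sum check holds, to within 1e-4, for the lower and upper item weights of every factor group in the table.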

| Factor | Item | Assessor-1 | Assessor-2 | Assessor-3 | Assessor-4 | Assessor-5 |
|---|---|---|---|---|---|---|
| | | [0.6,0.7] | [0.65,0.75] | [0.6,0.8] | [0.7,0.8] | [0.8,0.9] |
| | | [0.7,0.8] | [0.8,0.85] | [0.75,0.85] | [0.7,0.8] | [0.85,0.9] |
| | | [0.65,0.75] | [0.7,0.8] | [0.55,0.6] | [0.5,0.6] | [0.6,0.8] |
| | | [0.65,0.75] | [0.7,0.8] | [0.55,0.6] | [0.5,0.6] | [0.6,0.8] |
| | | [0.4,0.5] | [0.5,0.55] | [0.5,0.6] | [0.6,0.7] | [0.6,0.65] |
| | | [0.8,0.9] | [0.75,0.8] | [0.8,0.85] | [0.75,0.85] | [0.8,0.9] |
| | | [0.6,0.8] | [0.7,0.8] | [0.8,0.85] | [0.8,0.9] | [0.75,0.8] |
| | | [0.8,0.9] | [0.8,0.85] | [0.8,0.9] | [0.9,0.9] | [0.75,0.85] |
| | | [0.5,0.6] | [0.75,0.8] | [0.6,0.7] | [0.65,0.7] | [0.785,0.85] |
| | | [0.8,0.9] | [0.8,0.85] | [0.8,0.9] | [0.75,0.8] | [0.75,0.85] |
| | | [0.6,0.6] | [0.55,0.7] | [0.6,0.65] | [0.5,0.6] | [0.7,0.75] |
| | | [0.65,0.7] | [0.65,0.75] | [0.7,0.75] | [0.8,0.9] | [0.75,0.8] |
| | | [0.75,0.8] | [0.7,0.75] | [0.7,0.8] | [0.6,0.8] | [0.75,0.85] |
| | | [0.5,0.6] | [0.4,0.5] | [0.6,0.65] | [0.5,0.55] | [0.6,0.7] |
| | | [0.7,0.8] | [0.6,0.65] | [0.6,0.7] | [0.7,0.8] | [0.75,0.85] |
| | | [0.75,0.9] | [0.7,0.8] | [0.7,0.8] | [0.6,0.8] | [0.7,0.75] |
| | | [0.75,0.8] | [0.7,0.8] | [0.75,0.85] | [0.65,0.8] | [0.8,0.85] |
| | | [0.5,0.6] | [0.55,0.6] | [0.45,0.5] | [0.6,0.7] | [0.6,0.7] |
| | | [0.35,0.4] | [0.5,0.6] | [0.55,0.6] | [0.5,0.6] | [0.5,0.6] |
| | | [0.75,0.8] | [0.7,0.8] | [0.65,0.7] | [0.6,0.7] | [0.75,0.8] |
| | | [0.8,0.9] | [0.75,0.8] | [0.75,0.85] | [0.8,0.85] | [0.75,0.85] |
| | | [0.6,0.7] | [0.7,0.75] | [0.6,0.65] | [0.7,0.8] | [0.7,0.8] |
| | | [0.8,0.9] | [0.85,0.9] | [0.75,0.8] | [0.8,0.9] | [0.7,0.8] |
| | | [0.6,0.8] | [0.6,0.7] | [0.65,0.7] | [0.75,0.8] | [0.7,0.8] |
| | | [0.6,0.7] | [0.5,0.6] | [0.45,0.5] | [0.5,0.6] | [0.6,0.7] |
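
One common way to pool several assessors' interval ratings of an item is a weighted mean taken endpoint by endpoint. The assessor weights derived in Section 4.4 are not reproduced in this section, so the sketch below uses equal weights as a stand-in; its output is therefore illustrative and is not expected to match the paper's averaged values. `weighted_interval_mean` is a hypothetical helper.

```python
# Interval evaluations of the first item by Assessors 1-5 (first row of the table above).
evaluations = [(0.6, 0.7), (0.65, 0.75), (0.6, 0.8), (0.7, 0.8), (0.8, 0.9)]

# Placeholder assessor weights: equal weights are an assumption for illustration only.
assessor_weights = [0.2, 0.2, 0.2, 0.2, 0.2]


def weighted_interval_mean(intervals, weights):
    """Weighted average of interval numbers, computed separately for each endpoint."""
    if len(intervals) != len(weights):
        raise ValueError("one weight per interval is required")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    lo = sum(w * a for w, (a, _) in zip(weights, intervals))
    hi = sum(w * b for w, (_, b) in zip(weights, intervals))
    return lo, hi


pooled = weighted_interval_mean(evaluations, assessor_weights)
print(f"[{pooled[0]:.4f}, {pooled[1]:.4f}]")
```

Replacing `assessor_weights` with the weights from Section 4.4 is all that is needed to switch from equal to differentiated assessors.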

| Factor | Weight | Average Evaluation Value | Aggregated Score |
|---|---|---|---|
| | [0.1990,0.3108] | [0.6135,0.721] | [0.122,0.2241] |
| | [0.1795,0.1990] | [0.7549,0.8372] | [0.1355,0.1666] |
| | [0.1826,0.1722] | [0.6310,0.7193] | [0.1152,0.1239] |
| | [0.2769,0.2095] | [0.6257,0.7238] | [0.1733,0.1517] |
| | [0.1620,0.1085] | [0.6817,0.7816] | [0.1104,0.0847] |
| Total aggregated score (y) | | | [0.6565,0.7510] |
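
The Aggregated Score column is reproduced, to four decimals, by multiplying each factor's weight and average evaluation value endpoint by endpoint (lower × lower, upper × upper), and the total aggregated score is the endpoint-wise sum. The sketch below recomputes both from the tabulated values; it checks the arithmetic rather than restating the authors' formula, which this section does not show. `aggregated_scores` is a hypothetical helper.

```python
# Rows of the comprehensive evaluation table: (weight interval, average evaluation interval).
rows = [
    ((0.1990, 0.3108), (0.6135, 0.7210)),
    ((0.1795, 0.1990), (0.7549, 0.8372)),
    ((0.1826, 0.1722), (0.6310, 0.7193)),
    ((0.2769, 0.2095), (0.6257, 0.7238)),
    ((0.1620, 0.1085), (0.6817, 0.7816)),
]


def aggregated_scores(table):
    """Endpoint-wise products w_lower * e_lower and w_upper * e_upper for each factor."""
    return [(w[0] * e[0], w[1] * e[1]) for w, e in table]


scores = aggregated_scores(rows)
total = (sum(lo for lo, _ in scores), sum(hi for _, hi in scores))
for lo, hi in scores:
    print(f"[{lo:.4f}, {hi:.4f}]")
print(f"total: [{total[0]:.4f}, {total[1]:.4f}]")  # total: [0.6565, 0.7510]
```

The only four-decimal mismatch is the last factor's upper score, which comes out as 0.0848 against the tabulated 0.0847, a rounding difference.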

| Interval Scale | Evaluation Level | Description |
|---|---|---|
| (0,0.2] | Very poor | The behavior never occurred; the performance is very poor |
| (0.2,0.4] | Poor | The behavior occurred at least once; the performance describes the lesson poorly |
| (0.4,0.6] | Medium | The behavior occurred more than once; the performance describes the lesson very loosely |
| (0.6,0.8] | Good | The behavior occurred more than twice; the performance is fairly descriptive of the lesson |
| (0.8,1] | Very good | The performance is extremely descriptive of the lesson |
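
The standard maps a score in (0, 1] to one of five half-open bands. How the paper converts an interval score into a single level is not stated in this section; the sketch below assumes that both endpoints must fall in the same band and otherwise reports the span of bands. Applied to the total aggregated score [0.6565, 0.7510] from the comprehensive evaluation table, both endpoints lie in (0.6, 0.8]. `level_of` and `level_of_interval` are hypothetical helpers.

```python
# Upper bounds of the rating bands in the evaluation standard (each band is (a, b]).
BANDS = [(0.2, "Very poor"), (0.4, "Poor"), (0.6, "Medium"),
         (0.8, "Good"), (1.0, "Very good")]


def level_of(x: float) -> str:
    """Return the rating band containing x, for 0 < x <= 1."""
    if not 0 < x <= 1:
        raise ValueError(f"score {x} is outside (0, 1]")
    for upper, name in BANDS:
        if x <= upper:
            return name
    raise AssertionError("unreachable: the last band ends at 1.0")


def level_of_interval(lo: float, hi: float) -> str:
    """Rate an interval score; report a range when its endpoints fall in different bands."""
    a, b = sorted((lo, hi))
    first, last = level_of(a), level_of(b)
    return first if first == last else f"{first} to {last}"


print(level_of_interval(0.6565, 0.7510))  # Good
```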

| Factor | Weight | Ranking | Items of Minimum/Maximum Weight |
|---|---|---|---|
| | [0.1990,0.3108] | 1 | |
| | [0.1795,0.1990] | 3 | |
| | [0.1826,0.1722] | 4 | |
| | [0.2769,0.2095] | 2 | |
| | [0.1620,0.1085] | 5 | |
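
Ranking the factor weight intervals by their midpoints (about 0.255, 0.189, 0.177, 0.243 and 0.135 in table order) reproduces the Ranking column exactly. The ranking method of Section 6.1.1 is not shown here, so the sketch below is one simple rule that agrees with the table, not necessarily the authors' method; interval numbers are also often ranked with possibility degrees. `midpoint_ranks` is a hypothetical helper.

```python
# Factor weight intervals in table order (factor labels are not recoverable here).
weights = [(0.1990, 0.3108), (0.1795, 0.1990), (0.1826, 0.1722),
           (0.2769, 0.2095), (0.1620, 0.1085)]


def midpoint_ranks(intervals):
    """Rank intervals by midpoint, 1 = largest; ties keep table order (sorted is stable)."""
    mids = [(lo + hi) / 2 for lo, hi in intervals]
    order = sorted(range(len(mids)), key=lambda i: mids[i], reverse=True)
    ranks = [0] * len(mids)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return ranks


print(midpoint_ranks(weights))  # [1, 3, 4, 2, 5]
```

Midpoints are unaffected by the order in which an interval's endpoints are listed, so the rule also works for the rows whose lower weight exceeds the upper weight.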

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qin, Y.; Hashim, S.R.M.; Sulaiman, J. An Interval AHP Technique for Classroom Teaching Quality Evaluation. Educ. Sci. 2022, 12, 736. https://doi.org/10.3390/educsci12110736
