Abstract

The global coronavirus disease (COVID-19) outbreak forced a shift from face-to-face education to online learning in higher education settings around the world. From the outset, COVID-19 online learning (CoOL) has differed from conventional online learning due to the limited time that students, instructors, and institutions had to adapt to the online learning platform. Such a rapid transition of learning modes may have affected learning effectiveness, which is yet to be investigated. Thus, identifying the predictive factors of learning effectiveness is crucial for the improvement of CoOL. In this study, we assess the significance of university support, student–student dialogue, instructor–student dialogue, and course design for learning effectiveness, measured by perceived learning outcomes, student initiative, and satisfaction. A total of 409 university students completed our survey. Our findings indicated that student–student dialogue and course design were predictive factors of perceived learning outcomes, whereas instructor–student dialogue was a determinant of student initiative. University support had no significant relationship with either perceived learning outcomes or student initiative. In terms of learning effectiveness, both perceived learning outcomes and student initiative determined student satisfaction. These results suggest that student–student dialogue, course design, and instructor–student dialogue are the key predictive factors of CoOL learning effectiveness and may determine the ultimate success of CoOL.

1. Introduction

The global coronavirus disease (COVID-19) pandemic has had a massive impact on higher education around the world [1]. In the absence of targeted medication, social distancing has been identified as an essential non-pharmaceutical intervention to curb the spread of the disease [2]. In the aftermath of the World Health Organization’s declaration of a COVID-19 pandemic on 11 March 2020 [3], university campuses across the globe were closed to comply with social distancing measures. Moving learning activities online became the only option to continue university education during the pandemic. Such a rapid, global shift from face-to-face (FTF) to online learning presented an unprecedented challenge to higher education [4].

Online learning is not a novel educational approach; it has been incorporated into higher education for years [5]. In 1989, the University of Phoenix became the first institution to launch fully online Bachelor’s and Master’s degree programs [6]. Subsequently, the delivery of online education increased steadily across the world [7]. In 2017, 19.5% of undergraduate students in the United States took at least one online course during their studies [8]. Through systematic design processes, online courses were developed to make maximal use of technologies, including websites, learning portals, video conferencing, and mobile apps, and instructors and students were equipped to carry out remote teaching and learning activities. The effectiveness of online learning has also been reported in various studies [9,10,11].

The emergency COVID-19 online learning (CoOL), however, differs from the conventional online learning of pre-pandemic times. CoOL describes the quick transition from FTF to online learning: courses that were originally planned to be delivered FTF were forced online within a very short period of time. Instructors and students, some of whom may not have had any experience with online teaching or learning and may not have been ready for the move, needed to cope with the changes quickly. This rapid transition of learning modes may influence learning effectiveness, which is yet to be investigated. As the COVID-19 pandemic continues to dictate our lives amid a slow global vaccine rollout, CoOL is likely to remain a feature of university education for the foreseeable future, and rapid switches between learning modes may be needed again as circumstances change. To improve online teaching strategies for such rapid transitions, it is important to investigate the learning effectiveness of CoOL [12,13].

1.1. The Importance of Learning Effectiveness

As the use of computer technology in teaching and learning increases, the focus of higher education is gradually shifting from the ‘provider’ to the ‘learner’ when it comes to enhancing the learning of individual students. However, the traditional quality measures associated with accreditation do not match this new climate of teaching and learning [14]. For example, ‘seat-time’, ‘physical attendance’, and ‘library holdings’ do not translate to an online environment. Outcome-based measures may be more useful in online learning environments [15]. Learning effectiveness, which refers to the degree to which the goals of learning have been achieved, can better reflect the quality of Internet-based teaching and learning [16]. Information obtained from analyses of learning effectiveness allows institutions to improve online course development, and research examining the effectiveness of online learning has increased considerably in recent years. According to an integrative review of 761 publications, learning outcomes, learning attitude, and satisfaction are the most common parameters for assessing learning effectiveness in higher education [17].

Learning outcomes reflect aspects of educational success, such as students’ perceived achievement of learning objectives, the occurrence of learning, improvement in performance, and attainment of results [16]. Student perceived learning outcomes have been reported to be highly correlated with actual learning performance [18]. A study of blended learning environments found that high-achieving students were more satisfied with their courses than low achievers, suggesting that high achievers adapt better to varied learning environments than their lower-achieving peers [18]. However, some researchers have suggested that perceptions of learning may not correlate with actual gains in knowledge [19]. Given these mixed results, more empirical evidence is needed on whether perceived learning outcomes are essential for understanding students’ learning achievement and their capability to cope with unconventional learning settings.

Student initiative describes an attitude to learning that is characterized by proactive, self-starting, and persisting behaviors that are deployed to accomplish learning goals [20]. Student initiative has been shown to be closely linked to learning achievement. Ashforth et al. [21] indicated that proactive behaviors positively influence learning outcomes, and Wolff et al. [22] revealed that active learning leads to better knowledge acquisition and deeper understanding of learning materials. Fostering students’ learning initiative is especially important during CoOL because of the extra effort students need to adapt to the change in learning mode and to resist distractions. Research into student initiative is, therefore, as important as the study of learning outcomes in evaluating the learning effectiveness of CoOL.

Student satisfaction reflects how positively students perceive their learning experiences. It is associated with the overall success of online courses [23]. Higher student satisfaction can lead to lower drop-out rates, higher persistence, and greater commitment to the courses, which are the keys to success for university programs [23,24]. Moreover, student satisfaction enables institutions to target areas for improvement and facilitates the development of learning strategies specifically for online learners [25]. Therefore, the study of satisfaction is necessary in investigating the learning effectiveness of CoOL. Furthermore, student satisfaction is an important indicator of program- and student-related learning outcomes [26,27]. It has also been suggested that student initiative, involving the self-management of learning, is positively linked to online learning satisfaction [28]. Considering the importance of perceived learning outcomes and student initiative in CoOL, how these factors relate to satisfaction is worth investigating.

1.2. Aim of Study

The primary goal of this study was to identify the factors that predict the learning effectiveness of CoOL. This study advanced previous research into online learning by considering the potential predictive factors that are relevant to CoOL. It also examined the relationships among the three parameters of learning effectiveness, namely perceived learning outcomes, student initiative, and satisfaction.

2. Background Theories

Previous studies have identified the determinants of the success of conventional online learning [29,30,31]. These factors have also been found to be closely related to online learning effectiveness. Therefore, to investigate the learning effectiveness of CoOL, the specific predictive factors of CoOL success should also be identified, drawing on the theories of learning.

Learning theories emerged in the 20th century, with three major theoretical frameworks shaping the study of learning: the behaviorist, cognitivist, and constructivist learning theories [32]. Unlike the behaviorist and cognitivist approaches, which emphasize instruction for knowledge transfer [33,34], the constructivist learning theory focuses on knowledge-building processes [35]. Having emerged during a period of educational reform in the United States, the constructivist theory defined one of the fundamental features of online learning, namely, that knowledge is constructed by students, rather than transferred from instructors to students [30]. The course structure and learning environment should facilitate faculty–student interaction as well as student–student interaction in ways that allow students to construct knowledge and formulate newly learned materials [36]. Several other learning theories are extensions of the constructivist theory. These include the collaborative learning theory, the cognitive information processing theory, and the facilitated learning theory [37,38,39]. These theories address different ways of knowledge construction.

Collaborative learning theory, previously known as online collaborative learning, assumes that knowledge is socially and collaboratively constructed through sharing [38]. Learners rely on one another to accomplish tasks that they otherwise would not be able to complete individually. Within this sharing framework, student–student dialogue and instructor–student dialogue are viewed as critical to the success of online learning.

Cognitive information processing theory assumes that knowledge is constructed through our cognitive processes, including attention, perception, encoding, storage, and retrieval of knowledge [37]. Online courses should be designed to facilitate these cognitive processes, for example, through the provision of organized instruction, linking new material with prior knowledge, and creating practice opportunities. Course design is, therefore, a potential channel through which online learning is promoted.

The facilitated learning model assumes that learning occurs through an educator who establishes an atmosphere in which learners feel comfortable considering new ideas and are not threatened by external factors [39]. At the institutional level, support that provides clear guidelines, updates on online class arrangements, and technical assistance to ensure the smooth running of classes can help create such an atmosphere and is therefore considered a crucial factor in enhancing learning effectiveness.

3. Conceptual Framework and Hypotheses

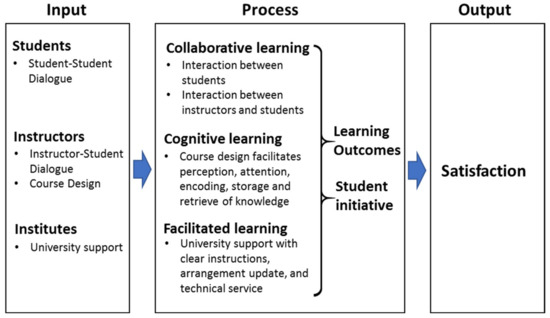

The constructivist learning theories discussed above formed the theoretical foundation of the research model in this study. Constructing the research model itself, by defining the relationships between the predictor variables (potential factors) and the outcome variable (satisfaction), was a necessary step in developing appropriate measures and obtaining valid results. The framework of our research model was derived from the study of Eom and Ashill [30], which originated from the web-based Virtual Learning Environment (VLE) effectiveness model [40] and the Technology-Mediated Learning (TML) research framework [41]. Our conceptual framework views CoOL as a system with three input entities: students, instructors, and institutions (Figure 1). These three entities contribute to four predictive variables: student–student dialogue, instructor–student dialogue, course design, and university support. The four predictive variables regulate the learning processes and predict perceived learning outcomes and student initiative. These learning processes in turn determine satisfaction, which is taken to be the ultimate indicator of learning effectiveness. The development of our hypotheses is discussed in the following sections.

Figure 1.

Conceptual framework of the CoOL system.

3.1. Potential Factors of CoOL Learning Effectiveness

3.1.1. University Support

Institutional support for learning is a key element in optimizing students’ academic experiences. The assistance offered to students by their universities may involve instructional, peer, and technical support. Lee et al. [42] reported that students’ perceptions of support were significantly related to their overall satisfaction with online courses. University support is likely to be especially important during CoOL, as students must cope with the change in learning mode. In particular, instructional support that provides clear guidelines and updates on online class arrangements can help students feel comfortable continuing to learn in the CoOL environment, and technical support helps ensure the smooth running of classes so that learning is maximized. We therefore proposed the following hypotheses:

Hypothesis 1a.

A higher level of university support results in a higher level of perceived learning outcomes in CoOL.

Hypothesis 1b.

A higher level of university support results in a higher level of student initiative in CoOL.

3.1.2. Student–Student Dialogue

Interaction between students is an important part of any course experience. In the context of CoOL, it may be especially important for a successful outcome, as social support is a crucial coping mechanism for students. Interaction between students allows the cohort to build a virtual community that compensates for the sudden loss of FTF communication. It also enables students to exchange information and ideas, promoting learning through the new platform.

Student–student interaction facilitates dialogue and inquiry, and promotes supportive relationships between learners. This type of interaction can take the form of group projects or group discussions, for example. Student–student interaction is vital to building community in an online environment, which supports productive learning by enhancing the development of problem-solving and critical thinking skills [43]. In one study, students who displayed high levels of interaction with other students reported high levels of learning outcomes and satisfaction [44]. We therefore proposed the following hypotheses:

Hypothesis 2a.

A higher level of student–student dialogue results in a higher level of perceived learning outcomes in CoOL.

Hypothesis 2b.

A higher level of student–student dialogue results in a higher level of student initiative in CoOL.

3.1.3. Instructor–Student Dialogue

Instructor–student dialogue refers to the bi-directional interaction between instructors and students, which can be observed when, for example, an instructor delivers information, encourages their students, listens to students’ concerns, or provides feedback. Students interact with their instructors by asking questions or communicating with them about course activities. Instructor–student interaction was found to be a significant contributor to student learning and satisfaction [45]. It is critical for the success of CoOL, as good communication and information sharing are essential for both instructors and students to cope with the change. Interaction between instructors and students may enhance students’ understanding of course materials and stimulate students’ learning interest. We therefore proposed the following hypotheses:

Hypothesis 3a.

A higher level of instructor–student dialogue results in a higher level of perceived learning outcomes in CoOL.

Hypothesis 3b.

A higher level of instructor–student dialogue results in a higher level of student initiative in CoOL.

3.1.4. Course Design

Course design is the process and methodology of creating quality learning environments and experiences for students [46]. It is part of the formal role of instructors [47]. With a deliberate design, online courses offer students structured exposure to course materials, learning activities, and interaction. Students are able to access information, acquire skills, and practice higher levels of thinking with the effective use of appropriate resources and technologies. Swan et al. [48] showed that course design can influence the learning process and learning outcomes of students. A recent survey reported that courses containing specific online course components can promote student satisfaction. These components include breaking up class activities into shorter segments than in an in-person setting, meeting in ‘breakout groups’ during a live class, and live sessions in which students can ask questions and participate in discussions. The ability for instructors to arrange and adjust course design to accommodate CoOL is pivotal to enhancing learning effectiveness. We, therefore, proposed the following hypotheses:

Hypothesis 4a.

A higher level of deliberate course design results in a higher level of perceived learning outcomes.

Hypothesis 4b.

A higher level of deliberate course design results in a higher level of student initiative.

3.2. Relationships between Learning Effectiveness Parameters

According to the literature, the three parameters of learning effectiveness are inter-related. Student initiative is closely linked to learning achievement [21,22], and student satisfaction is an important indicator of learning outcomes [26,27] and student initiative [28]. The relationships between the three parameters must be investigated to understand the learning effectiveness in CoOL. We therefore proposed the following hypotheses:

Hypothesis 5.

A higher level of perceived learning outcomes results in a higher level of student initiative.

Hypothesis 6.

A higher level of perceived learning outcomes results in a higher level of satisfaction.

Hypothesis 7.

A higher level of student initiative results in a higher level of satisfaction.

4. Method

4.1. Sample and Procedure

The study sample included 409 full-time undergraduate students from 11 universities (eight public and three private) in Hong Kong. All participants had experienced CoOL, which included both asynchronous and synchronous instruction, in the spring semester of 2020, and FTF learning in the preceding autumn semester and earlier; for these students, the CoOL period was their first experience of formal online education. We identified our target respondents through personal networks and referrals and then sent them an e-mail invitation, an information sheet, and a hyperlink to our online survey, which was created with Qualtrics, an online survey system. Data collection took place immediately after the end of the spring semester of 2020. The demographic characteristics of the participants are shown in Table 1. The participants represented different academic levels, academic disciplines, and universities. Academic levels were categorized as junior or senior: 45.0% of the participants were taking junior-level courses and 55.0% were taking senior-level courses. The largest disciplinary groups were business (26.4%), medicine/healthcare (21.3%), science (13.2%), social sciences (13.0%), and arts (10.0%). About half of the participants came from private universities (52.8%) and half from public universities (47.2%) in Hong Kong. The sample was representative of the undergraduate population in terms of academic level, academic discipline, and type of university attended, and this diversity of backgrounds reduces potential biases arising from differences in academic experience. There were more female than male participants, consistent with previous studies of online learning environments [23,49] in which female students showed a higher tendency to participate in online surveys.

Table 1.

Demographic characteristics of the participants (n = 409).

4.2. Measures

All of the measurement items, with their means and standard deviations, are given in Appendix A. For the four predictor variables, the student–student dialogue, instructor–student dialogue, and course design constructs were designed with reference to the study of Eom and Ashill [30]. The university support construct was developed with items investigating students’ perceived support through clear guidelines, updates on online class arrangements, and technical support; such instructional and technical support has been found to predict course satisfaction [42]. For the three outcome variables, the perceived learning outcomes construct was based on the study of Eom and Ashill [30], whereas the student satisfaction construct was adapted from an instrument used in past research into student satisfaction in online learning settings [11]. The student initiative construct was developed with items capturing students’ learning behaviors, such as asking more questions, spending more time studying, and spending more time reflecting on what they have learned; these behaviors are considered indicators of students’ learning initiative [50].

Each measurement item was rated on a seven-point Likert scale where 1 = strongly disagree and 7 = strongly agree.

4.3. Data Analysis

The data were analyzed through partial least squares structural equation modeling (PLS-SEM). The statistical software SmartPLS version 3 [51] was used to perform the PLS-SEM analysis.

PLS-SEM is a non-parametric modeling technique developed by Wold [52] that can examine a complex research model in exploratory studies with fewer requirements regarding sample size and data distribution [53]. Owing to its flexibility, PLS-SEM has been increasingly applied in higher education research [54].

Because the objective of PLS-SEM is to maximize the explained variance of the endogenous latent variables [53], this approach is particularly suited to the aim of this study, which is to assess the predictive capabilities of the potential factor constructs (university support, student–student dialogue, instructor–student dialogue, and course design) with respect to the learning effectiveness of CoOL.

The reflective measurement model was evaluated by assessing internal consistency reliability, convergent validity, and discriminant validity [53]. The structural model was evaluated by examining the coefficients of determination (R²) of the three endogenous constructs (i.e., perceived learning outcomes, student initiative, and satisfaction). The values and significance of the path coefficients were assessed using the bootstrapping resampling method (n = 500 subsamples) in the PLS-SEM algorithm [55].
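The bootstrapping step can be illustrated with a minimal Python sketch. It is a simplification rather than the SmartPLS procedure: SmartPLS re-estimates the whole PLS path model in every subsample, whereas this sketch bootstraps a single standardized path between two composite scores, for which the standardized coefficient equals Pearson's r. The helper names (`pearson_r`, `bootstrap_path`), the simulated data, and the normal approximation used for the p-value are assumptions for illustration only.

```python
import math
import random
from statistics import NormalDist


def pearson_r(x, y):
    """Correlation of two score vectors; with a single standardized predictor
    this equals the standardized path coefficient (beta)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bootstrap_path(x, y, n_boot=500, seed=1):
    """Resample respondents with replacement n_boot times, re-estimate the
    path in each subsample, and use the spread of the estimates as the SE."""
    rng = random.Random(seed)
    n = len(x)
    estimate = pearson_r(x, y)
    boot = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # one bootstrap subsample
        boot.append(pearson_r([x[i] for i in idx], [y[i] for i in idx]))
    mean_b = sum(boot) / n_boot
    se = math.sqrt(sum((b - mean_b) ** 2 for b in boot) / (n_boot - 1))
    t = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(t)))  # two-sided, normal approximation
    return estimate, se, t, p


# Hypothetical composite scores for 409 respondents (not the study's data).
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(409)]
y = [0.4 * a + rng.gauss(0, 1) for a in x]
est, se, t, p = bootstrap_path(x, y)
print(f"beta={est:.3f}, SE={se:.3f}, t={t:.2f}, p={p:.4f}")
```

Fixing the seed makes the resampling reproducible; with 500 subsamples, the bootstrap standard error is stable enough for a significance decision at the 0.05 level.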

In addition, we included gender, academic level, academic discipline, and type of university as control variables in the model.

5. Results

5.1. Descriptive Statistics

The second and third columns of Appendix A display the means and standard deviations of the measurement items (1 = strongly disagree; 7 = strongly agree). Item means ranged from 2.87 (SD = 1.514) to 4.39 (SD = 1.702), indicating some variation in respondents’ agreement across items.

5.2. Validation of Measurement Model

Table 2 shows the results of the measurement model validation, including the item factor loadings, composite reliabilities, Cronbach’s alpha, and average variance extracted (AVE) of the seven constructs.

Table 2.

Model validation results (construct reliability and validity).

Regarding the construct reliability of the measurement model, both the composite reliabilities and Cronbach’s alphas of the constructs were larger than 0.7, indicating acceptable internal consistency [56,57].
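The two reliability statistics follow standard formulas, sketched below in Python: Cronbach's alpha is computed from raw item scores, and composite reliability (rho_c) from standardized outer loadings. The item responses and loadings are hypothetical rather than the values in Table 2, and the function names are illustrative.

```python
from statistics import variance


def cronbach_alpha(items):
    """items: k lists, one per item, each holding every respondent's score.
    alpha = k/(k-1) * (1 - sum of item variances / variance of the scale total)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale total
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def composite_reliability(loadings):
    """rho_c from standardized loadings: (sum l)^2 / ((sum l)^2 + sum(1 - l^2))."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # indicator error variances
    return s ** 2 / (s ** 2 + error)


# Hypothetical seven-point Likert answers: 3 items x 6 respondents.
items = [
    [5, 6, 4, 7, 3, 5],
    [5, 7, 4, 6, 3, 4],
    [4, 6, 5, 7, 2, 5],
]
alpha = cronbach_alpha(items)
cr = composite_reliability([0.82, 0.85, 0.78])  # hypothetical loadings
print(f"alpha={alpha:.3f}, CR={cr:.3f}, both above 0.7: {alpha > 0.7 and cr > 0.7}")
```

Alpha weights all items equally, whereas composite reliability uses each item's loading, which is why PLS-SEM studies usually report both.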

Convergent validity measures the extent to which the items within the same construct relate to each other. As shown in Table 2, the factor loadings of the individual items were all above 0.7, except for ISD4 (0.67). The AVE values for all of the constructs were higher than 0.5. These results suggest adequate convergent validity [56,57].
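A similar sketch covers the convergent validity checks. AVE is the mean of the squared standardized loadings of a construct's items, and the 0.7 loading and 0.5 AVE cut-offs mirror those applied above. Apart from the 0.67 loading of the fourth item, all loadings are hypothetical; the labels `ISD` (assumed here to denote the instructor–student dialogue items) and `CD` are illustrative, as are the function names.

```python
def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings of a construct's items."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def convergent_validity(constructs, loading_cutoff=0.7, ave_cutoff=0.5):
    """Flag items loading below the cutoff and report whether each AVE passes."""
    report = {}
    for name, loadings in constructs.items():
        ave = average_variance_extracted(loadings)
        weak_items = [f"{name}{i}" for i, l in enumerate(loadings, start=1)
                      if l < loading_cutoff]
        report[name] = {"ave": ave, "ave_ok": ave > ave_cutoff,
                        "weak_items": weak_items}
    return report


# Hypothetical loadings; only the 0.67 of the fourth ISD item echoes the text.
constructs = {
    "ISD": [0.82, 0.79, 0.85, 0.67],
    "CD": [0.88, 0.84, 0.80],
}
rep = convergent_validity(constructs)
for name, r in rep.items():
    print(name, f"AVE={r['ave']:.3f}", "AVE ok" if r["ave_ok"] else "AVE low",
          r["weak_items"])
```

As the example shows, a single item slightly below 0.7 need not pull the construct's AVE below 0.5, which is why such an item can be retained.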

Discriminant validity, which refers to the degree to which each construct in the resulting model is distinct from the others, was assessed using the Fornell–Larcker criterion [57]. As shown in Table 3, the square root of the AVE of each construct was greater than its largest correlation with any other construct, demonstrating satisfactory discriminant validity.
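The Fornell–Larcker comparison reduces to a short check, sketched here in Python. The AVE values, latent correlations, and construct abbreviations (`PLO`, `SI`, `SAT`) are hypothetical and do not reproduce Table 3; the function name is illustrative.

```python
import math


def fornell_larcker(ave, corr):
    """ave: {construct: AVE}; corr: {(a, b): latent variable correlation}.
    A construct passes if sqrt(AVE) exceeds its largest |correlation|
    with any other construct."""
    results = {}
    for c, v in ave.items():
        others = [abs(r) for (a, b), r in corr.items() if c in (a, b)]
        root = math.sqrt(v)
        largest = max(others, default=0.0)
        results[c] = (root, largest, root > largest)
    return results


# Hypothetical values for three constructs (not the study's Table 3).
ave = {"PLO": 0.70, "SI": 0.65, "SAT": 0.75}
corr = {("PLO", "SI"): 0.60, ("PLO", "SAT"): 0.78, ("SI", "SAT"): 0.55}
res = fornell_larcker(ave, corr)
for c, (root, largest, ok) in res.items():
    print(f"{c}: sqrt(AVE)={root:.3f} vs max r={largest:.2f} -> {'pass' if ok else 'fail'}")
```

Intuitively, each construct should share more variance with its own items than with any other construct.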

Table 3.

Latent variable correlations (discriminant validity).

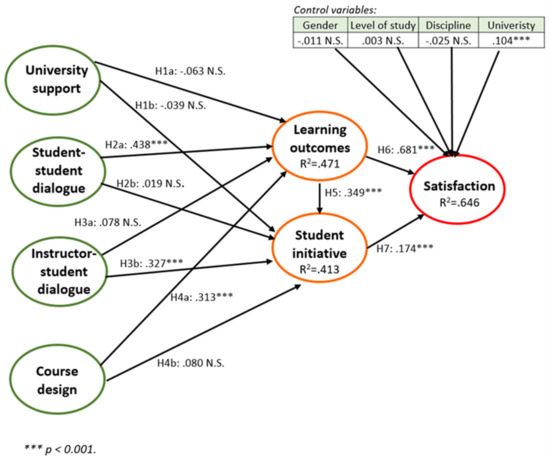

5.3. Evaluation of Structural Models

The structural models were evaluated by the size and significance of the path coefficients and by R². As illustrated in Figure 2, the R² values represent the amount of explained variance of the endogenous constructs in the structural model, which determines the structural model’s predictive power [53]. The R² values of perceived learning outcomes (0.471) and student initiative (0.413) were satisfactory, and the R² value of satisfaction (0.646) also showed adequate predictive power in the resulting structural model [58], indicating that approximately 64.6% of the variance in satisfaction was explained by the model. The size and significance of the path coefficients are summarized in Table 4.
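For reference, R² for an endogenous construct takes the usual regression form, where y_i is respondent i's score on the construct, ŷ_i is the value predicted from its antecedents in the structural model, and ȳ is the mean score. Applied to satisfaction, the reported value implies that about 35.4% of its variance remains unexplained.

```latex
R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2},
\qquad
R^2_{\text{satisfaction}} = 0.646 \;\Rightarrow\; \frac{SS_{\text{res}}}{SS_{\text{tot}}} = 1 - 0.646 = 0.354
```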

Figure 2.

Structural model results.

Table 4.

Results of hypothesis testing.

5.4. Causal Relation Analysis

University support demonstrated no significant relationship with either perceived learning outcomes or student initiative; thus, Hypotheses 1a and 1b are rejected. Student–student dialogue had a significant positive relationship with perceived learning outcomes (β = 0.438, p < 0.001) but no significant relationship with student initiative, supporting Hypothesis 2a and rejecting Hypothesis 2b. Instructor–student dialogue had no significant relationship with perceived learning outcomes but had a significant positive relationship with student initiative (β = 0.327, p < 0.001); Hypothesis 3a is therefore rejected, whereas Hypothesis 3b is supported. Course design had a significant positive relationship with perceived learning outcomes (β = 0.313, p < 0.001) but no significant relationship with student initiative. These results support Hypothesis 4a and reject Hypothesis 4b.

Perceived learning outcomes had a significant positive relationship with student initiative (β = 0.349, p < 0.001), supporting Hypothesis 5. Both perceived learning outcomes and student initiative had significant relationships with satisfaction, supporting Hypotheses 6 and 7. Of these two factors, perceived learning outcomes (β = 0.681, p < 0.001) had a stronger relationship with satisfaction than student initiative (β = 0.174, p < 0.001).

Regarding the control variables, gender, academic level, and academic discipline had no effect on student satisfaction with CoOL. Interestingly, the type of university attended influenced student satisfaction: students at private universities tended to be more satisfied with online classes than those at public universities, holding the other variables constant.

6. Discussion

This study identified the predictive factors of the learning effectiveness of CoOL. The results indicated that student–student dialogue and course design were significant predictors of perceived learning outcomes, whereas instructor–student dialogue was not. In this regard, the determinants of perceived learning outcomes in CoOL differed from those in conventional online learning, where all three factors have been found to be important predictors in distance learning environments [30]. The specific modifications to course structure and instructional methods in CoOL may explain this difference. Conventional online courses may be better balanced between asynchronous and synchronous sections, whereas pre-recorded, asynchronous lectures became one of the major course components in CoOL because much of the online learning material had to be prepared in a short period of time. Instructors also provided clearly written course outlines to ensure that students understood the course requirements and learning objectives. Because most of the course materials were available online, interaction between instructors and students regarding course content was greatly reduced compared with FTF learning. As the relevance and impact of instructor–student interaction on learning outcomes likely depend on the intensity and frequency of such interaction [59], instructor–student dialogue may not significantly affect learning outcomes in CoOL. Self-study guided by the course design, together with interaction between students through group discussions, group projects, or idea sharing, may already have supported the achievement of learning outcomes. As a result, appropriate course design that encourages student–student interaction is critical for students to achieve learning outcomes in CoOL.

Although instructor–student dialogue had no significant effect on perceived learning outcomes, it was the only one of the four predictive variables that significantly affected student initiative. Instructor–student interaction, therefore, remains an essential component of CoOL. Interaction during synchronous lectures, breakout group discussions, or arranged online meetings allows students to raise questions about the course content with their instructors. Instructors can clarify students’ concerns and inspire their learning. Interaction with instructors may thus promote critical thinking and learning interest among students in ways that the other factors examined here do not.

The findings indicate the important role of instructors in CoOL. The role of instructors in online learning settings has generally been a neglected area of research [60]. Arbaugh [60] investigated two different roles of instructors, a formal role (teaching presence), and an informal role (immediacy behaviors), and found that both instructor roles were positive and significant predictors of perceived learning outcomes and satisfaction in online MBA courses. The results of our study are consistent with previous findings that the formal role of instructors, including their involvement in course design and promotion of student–student dialogue, positively impacted perceived learning outcomes. In addition, the informal role, which refers to communication behaviors that inspire learning and reduce social and psychological distance between instructors and students, demonstrated a significant impact on student initiative. Both instructor roles, therefore, directly or indirectly affect perceived learning outcomes and student initiative, which eventually determine satisfaction and the ultimate success of CoOL.

University support had no significant relationship with either perceived learning outcomes or student initiative. In this study, the investigation of university support focused on the provision of clear guidelines, updates on online class arrangements, and technical support. Students may expect these kinds of assistance to be provided whether a course is delivered FTF or online, and may therefore not regard them as capable of improving their learning outcomes and initiative during CoOL. In terms of technical support, most of the students in this study already possessed sufficient information technology skills and had the necessary devices to learn online. Therefore, technical support was not viewed as an essential component that determined learning outcomes and student initiative in CoOL. This is consistent with previous findings that Internet self-efficacy was not a significant predictor of student satisfaction [23,49,61]. However, a recent survey has indicated that other kinds of university support, such as academic advising, tutoring, and administrative support on financial aid, may promote satisfaction; these could be examined in future studies [62].

The three demographic characteristics (gender, academic level, and academic discipline) did not significantly affect student satisfaction with CoOL; however, the type of university attended did. In Hong Kong, public universities are better known than private institutions and can recruit students with higher attainment in the university entrance examinations. Because the two types of institution differ in the academic attainment of their intakes, the survey results suggest that students’ academic performance may influence their preferred mode of learning. More research is needed to determine whether this finding can be generalized.

In addition, to the best of our knowledge, this study is the first to identify the relationships among the three most common measures of learning effectiveness. Perceived learning outcomes significantly affected student initiative, likely because high learning achievement acts as positive reinforcement that promotes positive learning behaviors and arouses learning interest. Furthermore, both perceived learning outcomes and student initiative determine student satisfaction, an indicator of the overall success of online courses. Perceived learning outcomes have a greater effect on satisfaction than student initiative, probably because students view their performance as the primary indicator that a course is useful and successful. However, student initiative also has a significant relationship with satisfaction, which should not be neglected. Student initiative implies that students have the drive to learn without being told to do so. Cultivating students’ ability and willingness to learn actively on their own reflects the ultimate success of higher education.

This study has limitations. It relies on students’ perceptions of their learning, and the survey was conducted at the end of the spring semester, asking students to recall the fall semester; the passage of time and the limits of memory may therefore have affected their answers. In addition, not all possible predictive factors of learning effectiveness could be investigated. Nevertheless, this study identified student–student dialogue, course design, and instructor–student dialogue as determinants of learning effectiveness in CoOL. Instructors play a major role in incorporating these factors appropriately into courses to promote satisfaction and ensure the ultimate success of CoOL. Further research on additional potential predictive factors, and more detailed study of the known ones (e.g., different forms of student–student and instructor–student interaction, and different types of course design), may provide richer information to guide the improvement of CoOL.

7. Conclusions

This study identified student–student dialogue and course design as predictive factors of perceived learning outcomes, and instructor–student dialogue as a predictive factor of student initiative in CoOL. Both perceived learning outcomes and student initiative determine student satisfaction. For the ultimate success of CoOL, instructors must deliberately incorporate these factors into courses to enhance the learning effectiveness of CoOL.

Author Contributions

Conceptualization, A.M.Y.C. and M.K.P.S.; methodology, J.T.Y.T. and A.M.Y.C.; formal analysis, M.K.P.S., A.C.Y.C. and B.S.Y.L.; writing—original draft preparation, J.T.Y.T. and A.M.Y.C.; writing—review and editing, M.K.P.S., A.C.Y.C. and B.S.Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

The work described in this paper was partially supported by The Hong Kong University of Science and Technology research grant “Big Data Analytics on Social Research” (grant number CEF20BM04); and the Internal Research Grant (RG 53/2020-2021R) and Dean’s Research Fund of the Faculty of Liberal Arts and Social Sciences (FLASS/DRF 04633), The Education University of Hong Kong, Hong Kong Special Administrative Region, China.

Institutional Review Board Statement

The study was conducted in accordance with the guidelines on research ethics and approved by the Human Research Ethics Committee of The Education University of Hong Kong (reference number 2019-2020-0104).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A

Table A1.

Measurement Items with Their Means and Standard Deviations.

| Items (1 = Strongly Disagree; 7 = Strongly Agree) | Mean | Standard Deviation |
|---|---|---|
| University Support (US) | | |
| US1: My university provided me clear guidelines for the online classes due to COVID-19. | 4.13 | 1.528 |
| US2: My university kept me well informed of the arrangement of the online classes due to COVID-19. | 4.37 | 1.498 |
| US3: I could receive instant technological support from my university when I needed. | 3.81 | 1.419 |
| US4: My university paid every effort to ensure the online classes run smoothly due to COVID-19. | 4.00 | 1.432 |
| Student–Student Dialogue (SSD) | | |
| SSD1: In general, I had positive and constructive interactions with other students frequently in the online classes due to COVID-19. | 3.18 | 1.486 |
| SSD2: In the online classes during COVID-19, the level of positive and constructive interactions between students was generally high. | 3.12 | 1.399 |
| SSD3: In the online classes during COVID-19, I, generally, learned more from my fellow students than in face-to-face classes at the university. | 2.87 | 1.514 |
| SSD4: The positive and constructive interactions between students in the online classes due to COVID-19 helped me improve the quality of the learning outcomes in general. | 3.17 | 1.415 |
| Instructor–Student Dialogue (ISD) | | |
| ISD1: In general, I had positive and constructive interactions with the instructors frequently in this online classes due to COVID-19. | 3.61 | 1.465 |
| ISD2: In general, the level of positive and constructive interactions between the instructors and students was high in the online classes due to COVID-19. | 3.44 | 1.491 |
| ISD3: The positive and constructive interactions between the instructors and students in the online classes helped me improve the quality of learning outcomes in general. | 3.52 | 1.528 |
| ISD4: Positive and constructive interactions between students and the instructors was an important learning component in the online classes due to COVID-19. | 4.39 | 1.702 |
| Course Design (CD) | | |
| CD1: The course objectives and procedures of the online classes were generally clearly communicated. | 4.21 | 1.321 |
| CD2: The structure of the modules of the online classes was generally well organized into logical and understandable components. | 4.23 | 1.283 |
| CD3: The course materials of the online classes were generally interesting and stimulated my desire to learn. | 3.76 | 1.376 |
| CD4: In general, the course materials of the online classes due to COVID-19 supplied me with an effective range of challenges. | 4.00 | 1.346 |
| CD5: Student grading components such as assignments, projects, and exams were related to learning objectives of the online classes due to COVID-19 in general. | 4.30 | 1.339 |
| Perceived Learning Outcomes (PLO) | | |
| PLO1: The academic quality of the online classes due to COVID-19 is on par with face-to-face classes I have taken. | 3.51 | 1.574 |
| PLO2: I have learned as much from the online classes due to COVID-19 as I might have from a face-to-face version of the courses. | 3.51 | 1.633 |
| PLO3: I learn more in online classes due to COVID-19 than in face-to-face classes. | 3.20 | 1.693 |
| PLO4: The quality of the learning experience in online classes due to COVID-19 is better than in face-to-face classes. | 3.21 | 1.713 |
| Student Initiative (SI) | | |
| SI1: I asked my instructors more questions during the online classes period than in face-to-face classes period. | 3.70 | 1.789 |
| SI2: I spent more time to learn and study during the online classes period than in face-to-face classes period. | 3.92 | 1.691 |
| SI3: I spent more time to reflect what I have studied during the online classes period than in face-to-face classes period. | 3.74 | 1.617 |
| Satisfaction (SAT) | | |
| SAT1: As a whole, I was very satisfied with the online classes due to COVID-19. | 3.69 | 1.599 |
| SAT2: As a whole, the online classes due to COVID-19 were successful. | 3.83 | 1.530 |
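Table A1 reports item-level means only. As a rough illustration of how these can be read at the construct level, the sketch below averages each construct's item means with equal weights and flags constructs lying above the neutral midpoint (4) of the 7-point scale. This is an assumption-laden summary, not the authors' analysis: equal weighting is assumed, and only with complete responses does it equal the sample mean of each respondent's equal-weight composite. If the constructs were estimated with PLS-SEM, as the references to SmartPLS [51] and Hair et al. [53] suggest, the model's construct scores use estimated outer weights and will differ. The names `ITEM_MEANS`, `construct_means`, and `above_midpoint` are hypothetical.

```python
from statistics import mean

# Item means transcribed from Table A1 (1 = Strongly Disagree, 7 = Strongly Agree).
ITEM_MEANS = {
    "US": [4.13, 4.37, 3.81, 4.00],
    "SSD": [3.18, 3.12, 2.87, 3.17],
    "ISD": [3.61, 3.44, 3.52, 4.39],
    "CD": [4.21, 4.23, 3.76, 4.00, 4.30],
    "PLO": [3.51, 3.51, 3.20, 3.21],
    "SI": [3.70, 3.92, 3.74],
    "SAT": [3.69, 3.83],
}

SCALE_MIDPOINT = 4.0  # neutral point of a 1-7 Likert scale


def construct_means(item_means):
    """Equal-weight average of the item means for each construct.

    Assumption: every item is weighted equally. With complete data this equals
    the sample mean of each respondent's equal-weight composite score; it is
    NOT a PLS-SEM construct score, which uses estimated outer weights.
    """
    return {name: mean(values) for name, values in item_means.items()}


def above_midpoint(means, midpoint=SCALE_MIDPOINT):
    """Return the (sorted) names of constructs whose mean exceeds the midpoint."""
    return sorted(name for name, m in means.items() if m > midpoint)


if __name__ == "__main__":
    means = construct_means(ITEM_MEANS)
    for name, m in means.items():
        print(f"{name}: {m:.2f}")
    print("Above midpoint:", above_midpoint(means))
```

On this crude equal-weight summary, only University Support (about 4.08) and Course Design (4.10) lie above the scale midpoint; the other constructs, including Satisfaction (3.76), fall below it. Standard deviations are deliberately not combined, because a construct-level SD cannot be recovered from item SDs without the item covariances.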

References

- Vijayan, R. Teaching and learning during the COVID-19 pandemic: A topic modeling study. Educ. Sci. 2021, 11, 347.

- WHO. Tracking Public Health and Social Measures: A Global Dataset. 2020. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/phsm (accessed on 1 March 2021).

- WHO. WHO Director-General’s opening remarks at the media briefing on COVID-19. 2020. Available online: https://www.who.int/director-general/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020 (accessed on 22 February 2021).

- Limniou, M.; Varga-Atkins, T.; Hands, C.; Elshamaa, M. Learning, Student Digital Capabilities and Academic Performance over the COVID-19 Pandemic. Educ. Sci. 2021, 11, 361.

- Kentnor, H. Distance Education and the Evolution of Online Learning in the United States. Curric. Teach. Dialogue 2015, 17, 21–34.

- OnlineSchools. The History of Online Schooling. 2020. Available online: https://www.onlineschools.org/visual-academy/the-history-of-online-schooling/ (accessed on 5 March 2021).

- Bidwell, A. Gallup: Online Education Could Be at a ‘Tipping Point’. 2014. Available online: https://www.usnews.com/news/blogs/data-mine/2014/04/08/americans-trust-in-online-education-grows-for-third-consecutive-year (accessed on 15 March 2021).

- EducationData. Online Education Statistics. 2020. Available online: https://educationdata.org/online-education-statistics (accessed on 26 February 2021).

- Shukor, N.A.; Tasir, Z.; Van der Meijden, H. An Examination of Online Learning Effectiveness Using Data Mining. Procedia Soc. Behav. Sci. 2015, 172, 555–562.

- Swan, K. Learning effectiveness online: What the research tells us. In Elements of Quality Online Education: Practice and Direction; Sloan Center for Online Education: Needham, MA, USA, 2003; Volume 4, pp. 13–47.

- Wang, Y.-S. Assessment of learner satisfaction with asynchronous electronic learning systems. Inf. Manag. 2003, 41, 75–86.

- Bahasoan, A.N.; Ayuandiani, W.; Mukhram, M.; Rahmat, A. Effectiveness of Online Learning in Pandemic Covid-19. Int. J. Sci. Technol. Manag. 2020, 1, 100–106.

- Naddeo, A.; Califano, R.; Fiorillo, I. Identifying factors that influenced wellbeing and learning effectiveness during the sudden transition into eLearning due to the COVID-19 lockdown. Work 2021, 68, 45–67.

- Parker, N.K. The quality dilemma in online education. In Theory and Practice of Online Learning; Anderson, T., Elloumi, F., Eds.; Athabasca University: Athabasca, AB, Canada, 2004; pp. 385–409.

- Pond, W. Distributed education in the 21st century: Implications for quality assurance. Online J. Distance Learn. Adm. 2002, 5, 1–8.

- Blicker, L. Evaluating quality in the online classroom. In Encyclopedia of Distance Learning; Rogers, P.L., Ed.; IGI Global: Hershey, PA, USA, 2009.

- Noesgaard, S.S.; Ørngreen, R. The effectiveness of e-learning: An explorative and integrative review of the definitions, methodologies and factors that promote e-Learning effectiveness. Electron. J. E-Learn. 2015, 13, 278–290.

- Owston, R.; York, D.; Murtha, S. Student perceptions and achievement in a university blended learning strategic initiative. Internet High. Educ. 2013, 18, 38–46.

- Persky, A.M.; Lee, E.; Schlesselman, L.S. Perception of Learning Versus Performance as Outcome Measures of Educational Research. Am. J. Pharm. Educ. 2020, 84, ajpe7782.

- Huang, R.-T.; Yu, C.L. Exploring the impact of self-management of learning and personal learning initiative on mobile language learning: A moderated mediation model. Australas. J. Educ. Technol. 2019, 35.

- Ashforth, B.E.; Sluss, D.M.; Saks, A.M. Socialization tactics, proactive behavior, and newcomer learning: Integrating socialization models. J. Vocat. Behav. 2007, 70, 447–462.

- Wolff, M.; Wagner, M.J.; Poznanski, S.; Schiller, J.; Santen, S. Not Another Boring Lecture: Engaging Learners with Active Learning Techniques. J. Emerg. Med. 2015, 48, 85–93.

- Kuo, Y.-C.; Walker, A.E.; Schroder, K.E.; Belland, B.R. Interaction, Internet self-efficacy, and self-regulated learning as predictors of student satisfaction in online education courses. Internet High. Educ. 2014, 20, 35–50.

- Herbert, M. Staying the course: A study in online student satisfaction and retention. Online J. Distance Learn. Adm. 2006, 9, 300–317.

- Levitz, N. National Online Learners Priorities Report. 2016. Available online: https://files.eric.ed.gov/fulltext/ED602841.pdf (accessed on 5 January 2021).

- Biner, P.M.; Welsh, K.D.; Barone, N.M.; Summers, M.; Dean, R.S. The impact of remote-site group size on student satisfaction and relative performance in interactive telecourses. Am. J. Distance Educ. 1997, 11, 23–33.

- Liao, P.-W.; Hsieh, J.Y. What Influences Internet-Based Learning? Soc. Behav. Pers. Int. J. 2011, 39, 887–896.

- Broadbent, J. Comparing online and blended learner’s self-regulated learning strategies and academic performance. Internet High. Educ. 2017, 33, 24–32.

- Alshare, K.A.; Freeze, R.D.; Lane, P.L.; Wen, H.J. The Impacts of System and Human Factors on Online Learning Systems Use and Learner Satisfaction. Decis. Sci. J. Innov. Educ. 2011, 9, 437–461.

- Eom, S.B.; Ashill, N. The Determinants of Students’ Perceived Learning Outcomes and Satisfaction in University Online Education: An Update. Decis. Sci. J. Innov. Educ. 2016, 14, 185–215.

- Martín-Rodríguez, Ó.; Fernández-Molina, J.C.; Montero-Alonso, M.Á.; González-Gómez, F. The main components of satisfaction with e-learning. Technol. Pedagog. Educ. 2015, 24, 267–277.

- Ertmer, P.A.; Newby, T.J. Behaviorism, Cognitivism, Constructivism: Comparing Critical Features from an Instructional Design Perspective. Perform. Improv. Q. 2013, 26, 43–71.

- Easley, J.A.; Piaget, J.; Rosin, A. The Development of Thought: Equilibration of Cognitive Structures. Educ. Res. 1978, 7, 18.

- Vygotsky, L.S. Mind in Society: The Development of Higher Psychological Processes; Harvard University Press: Cambridge, MA, USA, 1978.

- Jonassen, D.; Davidson, M.; Collins, M.; Campbell, J.; Haag, B.B. Constructivism and computer-mediated communication in distance education. Am. J. Distance Educ. 1995, 9, 7–26.

- Schell, G.P.; Janicki, T.J. Online course pedagogy and the constructivist learning model. J. South. Assoc. Inf. Syst. 2013, 1, 26–36.

- Bovy, R.C. Successful instructional methods: A cognitive information processing approach. ECTJ 1981, 29, 203–217.

- Harasim, L. Learning Theory and Online Technologies; Routledge: London, UK, 2017.

- Laird, D. Approaches to Training and Development; Addison-Wesley: Reading, MA, USA, 1985.

- Piccoli, G.; Ahmad, R.; Ives, B. Web-Based Virtual Learning Environments: A Research Framework and a Preliminary Assessment of Effectiveness in Basic IT Skills Training. MIS Q. 2001, 25, 401–426.

- Alavi, M.; Leidner, D.E. Research Commentary: Technology-Mediated Learning—A Call for Greater Depth and Breadth of Research. Inf. Syst. Res. 2001, 12, 1–10.

- Lee, S.J.; Srinivasan, S.; Trail, T.; Lewis, D.; Lopez, S. Examining the relationship among student perception of support, course satisfaction, and learning outcomes in online learning. Internet High. Educ. 2011, 14, 158–163.

- Kolloff, M. Strategies for Effective Student/Student Interaction in Online Courses. In Proceedings of the 17th Annual Conference on Distance Teaching and Learning, Madison, WI, USA, 6 March 2011.

- Swan, K. Building Learning Communities in Online Courses: The importance of interaction. Educ. Commun. Inf. 2002, 2, 23–49.

- Sher, A. Assessing the Relationship of Student-Instructor and Student-Student Interaction to Student Learning and Satisfaction in Web-Based Online Learning Environment. J. Interact. Online Learn. 2009, 8, 102–120.

- Fink, L.D. Designing our courses for greater student engagement and better student learning. Perspect. Issues High. Educ. 2010, 13, 3–12.

- Moore, M.G. Theory of Transactional Distance. In Theoretical Principles of Distance Education; Keegan, D., Ed.; Routledge: New York, NY, USA, 1997; pp. 22–38.

- Swan, K.; Matthews, D.; Bogle, L.; Boles, E.; Day, S. Linking online course design and implementation to learning outcomes: A design experiment. Internet High. Educ. 2012, 15, 81–88.

- Rodriguez-Robles, F.M. Learner characteristic, interaction and support service variables as predictors of satisfaction in web-based distance education. Diss. Abstr. Int. 2006, 67. UMI No. 3224964.

- Fay, D.; Frese, M. The Concept of Personal Initiative: An Overview of Validity Studies. Hum. Perform. 2001, 14, 97–124.

- Ringle, C.M.; Wende, S.; Becker, J.M. SmartPLS 3; SmartPLS: Bönningstedt, Germany, 2015. Available online: http://www.smartpls.com (accessed on 5 January 2021).

- Wold, H.O.A. Soft modeling: The basic design and some extensions. In Systems under Indirect Observation: Part II; Jöreskog, K.G., Wold, H.O.A., Eds.; North-Holland: Amsterdam, The Netherlands, 1982; pp. 1–54.

- Hair, J.F.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage: Thousand Oaks, CA, USA, 2017.

- Ghasemy, M.; Teeroovengadum, V.; Becker, J.-M.; Ringle, C.M. This fast car can move faster: A review of PLS-SEM application in higher education research. High. Educ. 2020, 80, 1121–1152.

- Chu, A.M.Y.; Chau, P.Y.K.; So, M.K.P. Explaining the Misuse of Information Systems Resources in the Workplace: A Dual-Process Approach. J. Bus. Ethics 2014, 131, 209–225.

- Doll, W.J.; Raghunathan, T.S.; Lim, J.-S.; Gupta, Y.P. Research Report—A Confirmatory Factor Analysis of the User Information Satisfaction Instrument. Inf. Syst. Res. 1995, 6, 177–188.

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39–50.

- Falk, R.F.; Miller, N.B. A Primer for Soft Modeling; The University of Akron Press: Akron, OH, USA, 1992.

- Burnett, K.; Bonnici, L.J.; Miksa, S.D.; Kim, J. Frequency, Intensity and Topicality in Online Learning: An Exploration of the Interaction Dimensions that Contribute to Student Satisfaction in Online Learning. J. Educ. Libr. Inf. Sci. 2007, 48, 21–35.

- Arbaugh, J.B. Sage, guide, both, or even more? An examination of instructor activity in online MBA courses. Comput. Educ. 2010, 55, 1234–1244.

- Puzziferro, M. Online Technologies Self-Efficacy and Self-Regulated Learning as Predictors of Final Grade and Satisfaction in College-Level Online Courses. Am. J. Distance Educ. 2008, 22, 72–89.

- EY-Parthenon. Higher Education and COVID-19 National Student Survey—Remote Learning Experiences. 2020. Available online: https://www.ey.com/Publication/vwLUAssets/ey-parthenon-student-survey-on-remote-learning-sent-to-chronicle/$File/ey-parthenon-student-survey-on-remote-learning-sent-to-chronicle.pdf (accessed on 1 April 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).