Abstract

Information and Communication Technologies (ICT) provide different opportunities to students with intellectual disabilities and to the professionals who work with them. However, few studies address the use of collaborative learning platforms and handheld devices to enhance the integration of people with intellectual disabilities into the labour market. We present a learning experience in which active methodologies, such as collaborative work, are combined with the use of iPads and a learning management system following a video self-modelling methodology. The goal of this study was to determine whether combining traditional and new methodologies is appropriate for students with intellectual disabilities, and how these students behave when they have to rate their partners’ work. The results show that the combination of active learning methodologies, video self-modelling, learning platforms and tablets is promising for teaching job-related skills to students with intellectual disabilities, as participants experienced increased motivation to complete the tasks and improved their skills in the process.

1. Introduction

Independence and autonomy are key goals for people with intellectual disabilities, and job placement is fundamental to achieving them [1]. To find and keep a job, people with intellectual disabilities are trained in labour centres, where caregivers and teachers adapt the training to their students’ needs. The traditional way of training and teaching these skills is task sequencing [2], which involves splitting the whole task into a sequence of simpler instructions. Although this training is prepared thoroughly, it presents some challenges: people with intellectual disabilities often have difficulties with reading, with finding their place again in a text when they get lost, with looking for specific information about a certain instruction, and even with comprehension [3]. In recent years, this methodology has been transferred to mobile devices, which seem appropriate for helping users become more independent and which allow developers to design software that helps both students and caregivers to fully implement the task-sequencing methodology without much effort [4].

Several handheld multipurpose electronic devices, such as tablets and smartphones, are now part of daily life. Recent reports show that these devices offer advantages over other technological systems, mainly social acceptance, affordability, portability, and availability [5]. However, this type of technology also has downsides, such as professionals not being trained enough to provide appropriate services and a frequent shift of focus from communication goals to other purposes, such as entertainment [6]. Kagohara et al. [7] evaluated the benefits of using handheld devices in learning settings with people with autism and other developmental disabilities in a systematic review of 15 studies. The review focused on the teaching of several skills, including academic, communication, employment, leisure and transition skills. Seven studies used iPod Touches and one study used iPads. The results indicated that iOS-based devices are viable technological aids for individuals with developmental disabilities, and the authors concluded that people with intellectual disabilities can be taught to use handheld devices for purposes such as those mentioned above.

Developers and researchers [4,8,9,10] seem to be directing their efforts towards applications for handheld devices that satisfy particular needs in particular scenarios. However, implementing specific apps and providing them with content is a daunting task: analyzing, planning, executing, testing and maintaining an application designed to satisfy a single concrete requirement is a costly and time-consuming process. For this reason, some researchers are studying ways of teaching job-related skills to people with intellectual disabilities through learning management systems (LMS) that can be customized for different user profiles [11] and that provide different learning resources according to the user’s disabilities, learning goals and preferences [12]. Nevertheless, people with any sort of disability are usually excluded from e-learning communities, since platforms are not usually designed according to the Web Accessibility Initiative Guidelines [13].

Therefore, we developed ClipIt (http://clipit.es/landing/, accessed on 21 July 2021), an online LMS focused on reflective learning through videos created by students. ClipIt is meant to be used by students with and without disabilities. It offers a flexible methodology and is neither classroom based nor led by a teacher. Instead, ClipIt promotes social and collaborative interaction in an online learning environment, where users complete the tasks following the guidance provided by the teacher. The platform has been tested successfully with university students [14], but it has not yet been validated with students who have intellectual disabilities to determine whether it meets usability guidelines and improves the students’ learning. In this paper, we present an exploratory study in which students with intellectual disabilities used iPads and ClipIt to learn job-related skills, with the aim of answering the following questions.

RQ1: Can handheld devices be used effectively in learning environments by students with intellectual disabilities?

RQ2: Is the creation of videos a proper methodology to teach job-related skills to students with intellectual disabilities?

RQ3: Can the students be reliable peer reviewers?

2. Theoretical Framework

Educational theories [15] suggest that video can be used more effectively for learning than other elements such as text or images. Some authors argue that it is not the type of medium but the way in which it is used that affects learning [16], whereas others state that the particular features of each type of medium make it more suitable for certain tasks [17]. When used appropriately, video can be a powerful learning medium. Among its benefits, video allows students to watch how something works. Moreover, videos may attract students’ attention and increase their motivation, which may lead to greater engagement with the task at hand. Videos can also represent real-life scenarios, easing the transition from a learning scenario to a real-world one. In addition, videos can be designed to stimulate discussion and to address different learning styles.

Although the use of educational videos for learning is not recent [18], little empirical research has been done on video self-modelling (VSM), in which students are the protagonists while the teacher coordinates the discussion, manages activities and plans the sessions [19]. VSM is a methodology in which individuals create a video of themselves performing a specific task. Bellini and Akullian [20] reviewed 23 studies in which participants with autism spectrum disorder used video modelling or VSM for different tasks. They found that both methodologies were suitable for developing skills in participants and that the effects were sustained over time, showing that these methodologies are feasible for training different skills in people with autism spectrum disorder [20].

Empirical research shows that VSM is an effective methodology for acquiring different skills [21]. There is evidence that VSM has been used effectively to teach daily living skills [22], task analyses [20], social skills [23], and vocational skills to young adults with autism spectrum disorder [24]. To learn these skills, the learner usually needs to practise the corresponding behaviour in a natural environment. One of the main concerns in this scenario is how the learner can train there with limited guidance. It is also important that the skills learned are sustained over time. Previous research supports VSM as a methodology that can achieve this goal, since, with portable devices such as tablet computers, it can serve as an effective guide within a natural environment [25]. However, even if this methodology can be implemented successfully, there is a lack of platforms to support it. In this scenario, the ClipIt platform provides a secure environment in which students can upload their videos and review each other’s videos.

3. ClipIt

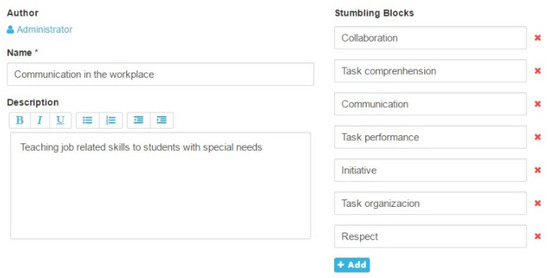

ClipIt is a web platform that supports student learning through the creation of educational videos. First, the teacher identifies a threshold concept [26] that will be the focus of the video the students will create, as well as the associated sub-concepts (an example is shown in Figure 1). ClipIt supports three main phases for completing the learning tasks: (i) a production phase, in which the students work together to upload materials to be used in their videos; (ii) a discussion phase, in which the students discuss the uploaded materials and create the video; and (iii) a peer-review phase, in which the groups evaluate each other’s work.

Figure 1.

Example of the definition of a threshold concept and stumbling blocks in ClipIt.

ClipIt provides a set of tools that help the students through these phases, such as forums, a space to upload their materials, and access to the teacher’s materials. Users in the same group can comment on unfinished videos until they decide to submit the final version. This corresponds to the first and second phases of the learning process, in which each group works internally to produce its final video. Teachers have access to the content generated in the group workspace and can provide guidance to the students, for example through the discussions.

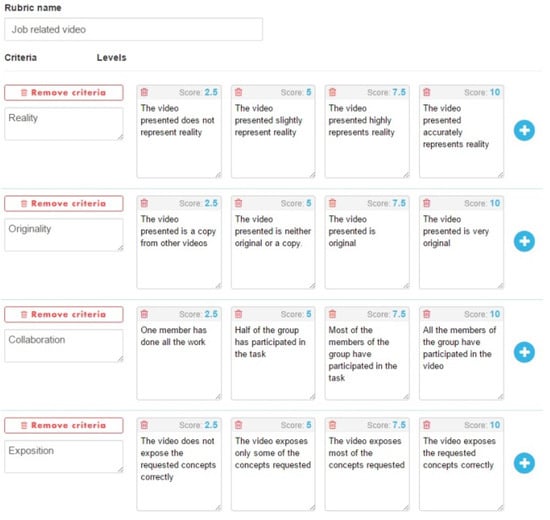

Once the students submit the final result of their work, they can make the video public for the other groups. At this point, the members of the other groups can provide constructive feedback on the submitted videos. In addition, the teacher may set up a rubric that the students use to score the videos (see the example in Figure 2). Both ways of evaluating (comments and rubric) are based on the threshold concept and its associated stumbling blocks, and ClipIt guides students during the commenting process through the rubrics provided by the instructor. All the reviews of a video are available to its creators, so they can improve it using the feedback obtained from their classmates.

Figure 2.

Definition of rubrics to evaluate the uploaded videos.

4. Case Study

We carried out a case study in which students with intellectual disabilities followed a VSM methodology with ClipIt and iPads. The main goal of this study was to explore whether this combination has a positive influence on the students and how VSM and iPads can help in the learning process of people with intellectual disabilities. Secondary goals were to obtain results about the learning process, platform usability and accessibility, and how the students interacted with the iPads. The participants were enrolled in a job training program. The activity proposed to the students was to create a video representing a real job situation and how they would address the problems that could occur in it. The study consisted of several sessions in which the participants had to follow the eight-step cycle [27] to design their own videos, share their creations with their peers, and complete a summative test to help us determine whether there was a significant knowledge gain from this learning experience.

4.1. Participants

There were 15 participants in the study: 8 male and 7 female, aged 18 to 23 years. All of them had intellectual disabilities, and some also had visual impairments that prevented them from reading properly. The participants were students in the first year of the labour inclusion program of Fundación Prodis at Universidad Autónoma de Madrid. The main goal of this program was to teach job skills to its students so they could join the labour market. The study was carried out as part of the subject “Bases for Learning I”, in which students learn to communicate and collaborate with others. The pedagogical team had used several methodologies to teach these skills but had never used the Juxtalearn cycle [27] in their classes. In addition, according to prior talks with the teachers, they had limited knowledge about how iPads can be used effectively to enhance and assess student learning, since they had only used them for leisure time.

Before the study started, the participants were divided into groups of 3 or 4 people. The groups were organized by the pedagogical team of the institution. To create the groups, the participants completed a pre-test that assessed their prior knowledge of the subject. This allowed the pedagogical team to create heterogeneous groups with the same overall knowledge level. In addition, we considered the participants’ specific needs, which helped us to form groups in which the students could help each other. Intellectual disability covers a wide range of characteristics, so each participant approached the tasks in a different way. We therefore present a brief description of each participant. The descriptions were written by the psychological team of the institution, who had been working with the participants for two years. To protect their identities, we refer to them as P1 through P15. P1, P4, P5, P10, P13, and P15 are participants with Down syndrome and moderate to severe intellectual disability. They also showed different features that led to variations in the way they approached this learning study. For instance, P1 experiences anxiety when facing new challenges and quickly tries to disengage from the activity. Although this participant struggled during the first two sessions, the members of his group were able to calm him down and help him understand the task at hand. Once his anxiety was reduced, he worked without needing constant supervision.

In the cases of P4, P5, P13, and P15, we found that their main problem was keeping their concentration on the same task for long periods of time. These participants needed constant supervision to proceed with the task, since they tended to stop working after half an hour. Participant P10 was eager to participate in the learning experiment, despite not understanding what he had to do at first; he only needed help from his peers to proceed with the task at hand. Participant P6 has mild intellectual disability and severe visual disability. As a result, he had problems reading the task on paper and had to zoom in on the text when using ClipIt. To explain the task properly, we used graphs and pictures, which helped him to understand it. In addition, P6 has difficulty understanding other points of view, which makes discussions hard for him. Participant P8, who also has mild intellectual disability, shares this characteristic. The pedagogical team of the centre decided to place them in the same group to see whether they could get along and properly discuss the task to perform.

Participant P9 has mild intellectual disability. Although she usually works hard and quickly understood the task at hand, she required constant approval from the pedagogical team: at each step her group took, she asked the pedagogical team whether they were doing things correctly, as she doubted her own work. P12 and P14 had similar characteristics. Both have mild intellectual disability and were enthusiastic about participating in the learning study. These participants were organized and had no problems following each of the steps to create a video. Although they were not among the students with the highest knowledge levels, their liveliness led them to help their peers both in their own group and in other groups.

Among all the students, the most prominent were P2, P3, P7, and P11. These participants have mild intellectual disability and showed higher knowledge levels than their peers. For this reason, the pedagogical team distributed them across the groups to help the students with lower skills. Table 1 presents the composition of each group.

Table 1.

Group assignments.

4.2. Research Design and Procedures

The research design used for this study combines inquiry and test methods [28]. We observed the participants, noting what they said and did during the sessions; we conducted a focus group to learn about their experience; and we performed a statistical analysis of the data gathered throughout the study, such as the tests and the video evaluations. The qualitative data can reveal new design ideas for adjusting the initial design of the study, whereas the statistical analysis allows us to draw general conclusions.

The study lasted 9 sessions over 2 months (one session per week), each lasting approximately 2 hours. The sessions progressed through the phases described in Section 3, although one session did not necessarily correspond to a complete phase. After grouping the participants and before they started working, we presented the study, telling the participants that they had to create a video representing a real job situation. Each group was assigned a different task: (a) taking phone notes while alone at work; (b) receiving mail and distributing it to departments; (c) taking orders from a department, retrieving materials from a store, and distributing them to departments; and (d) checking that computer equipment is working correctly and providing replacements if needed. The participants then completed the following sessions:

Session 1: In this session, the study was introduced, telling the participants what was going to be done, what their assigned task was and how to record videos with the iPads. Afterwards, each group presented the task assigned to them.

Session 2: Each task came with several questions that served the purpose of guiding the participants and helping them to create the video script.

Sessions 3 and 4: In these two sessions, the participants finished their scripts and they began to rehearse the situation they had to reenact.

Sessions 5 and 6: In these sessions, all the groups recorded the final version of their videos. Due to the available space, one group recorded at a time. Two groups (1 and 2) recorded their videos in session 5 and the other two groups (3 and 4) recorded their videos in session 6.

Sessions 7 and 8: In these sessions, the participants accessed ClipIt with their iPads to start the evaluation phase. First, the participants uploaded their videos to the platform; then, each participant evaluated the videos presented by the other groups, providing suggestions for improvement. Finally, each participant completed an individual test to assess whether there was any knowledge gain during the study.

Session 9: In this session, we carried out a focus group where participants expressed their feelings about the study and commented about different situations that arose during the study.

4.3. Measurement Instruments

One of the key points of the study is to teach students collaborative competencies. Since instructors are preparing them for the labour market, where they will work with peers, learning how to work in groups is essential, yet it is not something people with intellectual disabilities usually do in this training program. We therefore evaluated the growth of each group member by measuring how much they learned between the pre-test and the post-test. Since the students worked with people they already knew, we expected that those with higher skills would help those who struggled with the proposed task, leading to better results overall.

The first evaluation the groups had to pass was their peers’ evaluation of the videos according to the concepts they had to work on: task management, task comprehension, collaboration, respect, initiative and communication, which the pedagogical team considers key aspects of labour inclusion. In addition, the participants had to rate the videos in terms of originality, accuracy and presentation skills, which would help the pedagogical team to evaluate how the participants behave when peer-reviewing. With this evaluation, we may discover external factors that affect participants when scoring the videos of the other groups. To assess their learning growth, the participants had to complete a final test on the formal concepts mentioned above. These tests were taken individually. Comparing this test with the pre-test allows us to evaluate the students’ understanding of the concepts they had worked with throughout the sessions and whether there was any significant learning. The pedagogical team prepared this test as an easy-to-read instrument. Both tests consisted of seven questions, each with four possible answers.
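The pre-test/post-test comparison can be illustrated with a short Python sketch. It is a minimal example under stated assumptions, not the pedagogical team's instrument: each answer sheet is assumed to be a string of seven options (A to D), each test has its own answer key, and the names `score_answers` and `learning_gains` are hypothetical. Participants who did not take both tests are left out of the comparison.

```python
# Hypothetical sketch: score the seven-question, four-option tests and
# compute each participant's gain from pre-test to post-test.

def score_answers(answers, key):
    """Count correct answers; answers and key are sequences of options such as 'A'-'D'."""
    if len(answers) != len(key):
        raise ValueError("answer sheet and key must have the same number of questions")
    return sum(given == correct for given, correct in zip(answers, key))


def learning_gains(pre, post, pre_key, post_key):
    """Return {participant: post score - pre score} for participants who took both tests."""
    both = sorted(pre.keys() & post.keys())
    return {p: score_answers(post[p], post_key) - score_answers(pre[p], pre_key) for p in both}


# Made-up answer sheets; P02 has no post-test, so it is excluded.
KEY = "ABCDABC"
pre = {"P01": "ABCDDDD", "P02": "AAAAAAA"}
post = {"P01": "ABCDABC"}
print(learning_gains(pre, post, KEY, KEY))  # {'P01': 3}
```

Whether the resulting gains are significant would still require a statistical test, which the text does not specify and the sketch does not attempt.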

As mentioned in Section 4.2, we observed and annotated the attitudes and interactions of the participants throughout the study, as well as any information that could help us understand the participants’ outcomes. We used this technique to gather data that normal tests cannot capture, such as interactions with ClipIt and the iPads. In each session, at least three observers were in the classroom helping the participants and annotating their actions, and the observers were the same people in all sessions. The annotations were written in the observers’ own words. To coordinate the annotation method, we instructed the observers to take notes on the following aspects: how students talked to each other (e.g., “When designing the story script, P7 tried to encourage his/her partners to participate in the discussion”); whether there were any interaction issues with the iPad (e.g., “Participants of G2 know how to interact with the device and with ClipIt without training”); and whether they needed any help from teachers to perform the requested tasks (e.g., “I had to help G3 when uploading the video to ClipIt”).

Once the study ended, we analyzed the data gathered through direct observation to detect regularities among the groups. For example, different observers noted that all the students knew how to interact with the iPad and navigated through ClipIt without any problems. Finally, we held a focus group to let the students express their views on the use of ClipIt and iPads. We searched for comments about the interaction with the platform or any accessibility problems that may have arisen. The information obtained from this focus group and the data gathered by direct observation helped us to understand how the students felt about the combined use of iPads and the video-based collaborative platform. Since the participants had to rate the videos of the other groups, we used those scores to evaluate how participants behaved as individuals and as members of a group. Although we expected participants to rate the videos according to their quality, we suspected that many other factors could influence their ratings.

5. Evaluation

After the learning study finished, we analyzed the ratings and the data obtained from the tests, the peer-review process, direct observation, and the final focus group. One of the students was ill in session 7, so we could only analyze the test results of the other 14 participants. No problems arose in any of the groups during the study. Each participant in a group contributed to the design of the video script, and if anyone had difficulties, the rest of the group helped them to move on. Combining people with different skills helped the groups to function well, so we did not need to stop the experience at any moment.

5.1. Participant Peer-Review

5.1.1. Global Analysis

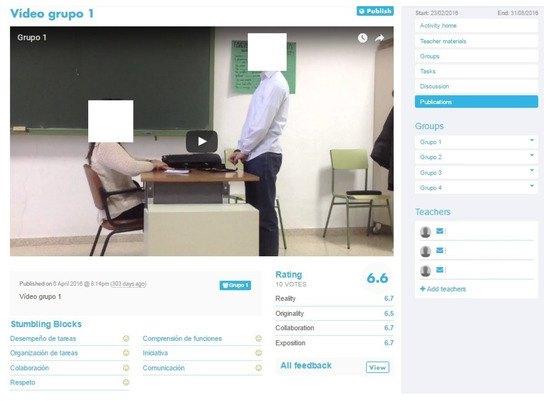

The public videos were accessible to the rest of the classmates involved in the activity. Students reviewed, discussed and evaluated the videos created by other students and gave opinions on how the videos could be improved. We asked the participants whether the videos designed by their partners appropriately represented the job-related situation they were given. In addition, they had to score the following concepts, which were part of the evaluation rubric: (i) reality: the video accurately represents reality; (ii) originality: the video is original; (iii) collaboration: all members of the group participated in the video; and (iv) exposition: the video presents the requested concepts correctly. Each concept was scored from 1 to 10. Once a video is rated, its creators can review the scores, as shown in Figure 3. They can only see the mean score of the video, not the individual scores given by each student.

Figure 3.

Clipit’s peer-review summary.

Table 2 shows the results of the peer-review evaluation of the videos. Each column represents the mean score of a concept, calculated over the scores given by the participants in the other groups. The reality concept had the lowest score. This occurred because the videos were recorded in a classroom with no doors to emulate offices, so the students struggled when representing someone knocking on a door, for example. The participants also had to recreate objects such as phones, and even though all the groups had to do so, they rated the other groups’ representation of a phone negatively.
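The per-concept means in Table 2 can be sketched as follows. This is an illustration under assumptions: votes are assumed to be stored as (voter group, video group, scores) tuples, the helper `concept_means` is hypothetical, and any vote a group casts on its own video is discarded so that only scores from the other groups count, as described above. The sample votes are made up.

```python
from statistics import mean

# The four rubric concepts, each scored from 1 to 10.
CONCEPTS = ("reality", "originality", "collaboration", "exposition")


def concept_means(votes, video_group):
    """Mean score per concept for one group's video.

    votes: iterable of (voter_group, video_group, scores) tuples, where scores
    maps each concept to a 1-10 value. Votes cast by the video's own group are ignored.
    """
    relevant = [scores for voter_group, target, scores in votes
                if target == video_group and voter_group != video_group]
    if not relevant:
        raise ValueError(f"no peer votes for {video_group}")
    return {c: mean(s[c] for s in relevant) for c in CONCEPTS}


votes = [
    ("G1", "G2", {"reality": 6, "originality": 7, "collaboration": 8, "exposition": 7}),
    ("G3", "G2", {"reality": 4, "originality": 9, "collaboration": 10, "exposition": 8}),
    # A self-vote like this one would be excluded from G2's means.
    ("G2", "G2", {"reality": 10, "originality": 10, "collaboration": 10, "exposition": 10}),
]
print(concept_means(votes, "G2"))
```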

Table 2.

Mean peer-review scores per concept for each video.

First, we calculated Cronbach’s alpha as a measure of internal consistency, to check that the voting was coherent for each student; the resulting alpha was 0.94. Next, we checked whether a global indicator of video quality could be obtained from the four dimensions the students voted on. To do so, we examined the correlations among the scores in each dimension (Table 3).
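For reference, Cronbach's alpha for k items is α = k/(k − 1) · (1 − Σ σᵢ² / σ_T²), where σᵢ² is the variance of item i and σ_T² is the variance of the summed score. The Python sketch below assumes that the items are the four rubric dimensions and that each row is one participant's vote on one video; the text does not state exactly how the 0.94 was obtained, so this illustrates the coefficient rather than reproducing the result. The function name and sample votes are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of observations, each a sequence of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances are used throughout; the ratio is the same with population variances.
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two observations")
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Made-up votes: (reality, originality, collaboration, exposition) per row.
votes = [
    (7, 6, 8, 7),
    (9, 8, 9, 9),
    (4, 5, 5, 4),
    (8, 7, 9, 8),
]
print(round(cronbach_alpha(votes), 2))
```

Values close to 1 indicate that participants who scored a video high on one dimension tended to score it high on the others.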

Table 3.

Correlation in each dimension.

The results show high correlations among the four dimensions (above 0.8 for most pairs), which suggests that they are components of a more general indicator of video quality. The only pair without a high correlation is Reality and Originality, at 0.71. Since the students had to represent a real job-related scenario, the originality of the videos was limited, which explains this result. To derive the global indicator, we then carried out a principal component analysis (PCA). The results are shown in Table 4.

Table 4.

Importance of components.

According to Table 4, the first component alone explains 90% of the variance. Therefore, we can reduce the four scores to this component as a measure of the global score of the video. As shown in Table 5, the four dimensions contribute equally to the first component (coefficients near 0.5).
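PCA on standardized scores is equivalent to an eigen-decomposition of the correlation matrix: the share of variance explained by the first component is its eigenvalue divided by the number of dimensions (the trace of a correlation matrix), and four equal loadings of 1/√4 = 0.5 correspond to the "coefficients near 0.5" in Table 5. The Python sketch below finds that first component by power iteration, used here only to stay within the standard library; a statistics package would normally be used instead. It assumes all correlations are positive, so the leading eigenvector has positive entries and the equal-weight starting vector is not orthogonal to it. The helper names and the example matrix are hypothetical, not the values of Table 3.

```python
import math


def mat_vec(R, v):
    """Multiply square matrix R (list of rows) by vector v."""
    return [sum(r_ij * v_j for r_ij, v_j in zip(row, v)) for row in R]


def first_component(R, iterations=1000, tol=1e-12):
    """Leading eigenvalue and unit eigenvector of a symmetric correlation matrix R,
    found by power iteration."""
    k = len(R)
    v = [1.0 / math.sqrt(k)] * k  # start from equal weights
    for _ in range(iterations):
        w = mat_vec(R, v)
        norm = math.sqrt(sum(x * x for x in w))
        w = [x / norm for x in w]
        converged = max(abs(a - b) for a, b in zip(w, v)) < tol
        v = w
        if converged:
            break
    eigenvalue = sum(a * b for a, b in zip(v, mat_vec(R, v)))  # Rayleigh quotient
    return eigenvalue, v


def explained_share(R):
    """Share of total variance carried by the first component (trace of R equals k)."""
    eigenvalue, _ = first_component(R)
    return eigenvalue / len(R)


# Illustrative matrix: four dimensions, every pair correlated at 0.9.
R = [[1.0 if i == j else 0.9 for j in range(4)] for i in range(4)]
lam, loadings = first_component(R)
print(round(lam, 3), [round(x, 3) for x in loadings])
print(round(explained_share(R), 3))
```

For this matrix the first eigenvalue is 1 + 3 · 0.9 = 3.7, so the first component explains 3.7 / 4 = 92.5% of the variance, with all four loadings equal to 0.5.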

Table 5.

Impact of each dimension in each component.

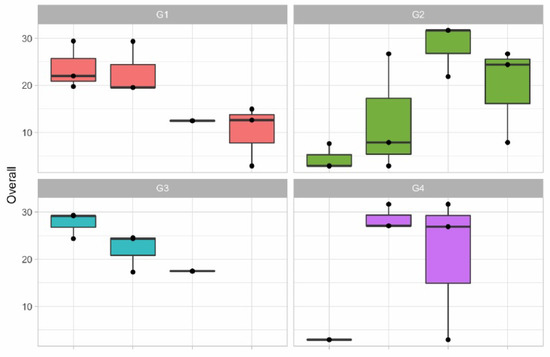

We then combined all the dimensions into a single indicator, called Overall, which we used to calculate the score of each video. The results are shown in Table 6: G1 and G4 obtained similar scores, G3 obtained the best score, and G2 obtained the worst.
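One plausible way to compute such an Overall indicator is to standardize each dimension across all votes, project every vote onto the first-component loadings, and average the projections per video. The text does not spell out this step, so the standardization choice, the helper name `overall_scores` and the sample votes below are assumptions made for illustration.

```python
from statistics import mean, stdev


def overall_scores(votes, loadings):
    """Mean first-component score per video.

    votes: list of (video, scores) pairs, scores being one value per dimension.
    loadings: first-component coefficients, one per dimension (same order).
    Each dimension is standardized across all votes before projecting.
    """
    k = len(loadings)
    columns = [[scores[d] for _, scores in votes] for d in range(k)]
    means = [mean(col) for col in columns]
    sds = [stdev(col) for col in columns]
    per_video = {}
    for video, scores in votes:
        component = sum(w * (x - m) / s for w, x, m, s in zip(loadings, scores, means, sds))
        per_video.setdefault(video, []).append(component)
    return {video: mean(values) for video, values in per_video.items()}


# Made-up votes: (reality, originality, collaboration, exposition).
votes = [
    ("G1", (7, 6, 8, 7)), ("G1", (8, 7, 8, 8)),
    ("G2", (5, 6, 5, 4)), ("G2", (6, 5, 6, 5)),
    ("G3", (9, 8, 9, 9)), ("G3", (8, 9, 9, 8)),
]
print(overall_scores(votes, [0.5, 0.5, 0.5, 0.5]))
```

In this invented data every G3 score lies above its dimension mean and every G2 score below it, so G3 gets a positive Overall value and G2 a negative one; with equal loadings the indicator is simply half the sum of the standardized scores.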

Table 6.

Video scores.

5.1.2. Participant Analysis

A key question is how the voting process was carried out. We would expect the students to vote on the videos according to their quality, but we suspected that other factors influenced the participants’ votes, such as competitiveness among the participants (“I give lower scores to my competitors”), reciprocity (“if you give me high scores, I will give you high scores”) or sympathy (“I give you high scores because we are friends”). We calculated the reliability of each participant by correlating the votes cast by that participant with the final score of each video. As shown in Table 7, the reliability is close to zero for most participants, which indicates no consistency in the voting criteria among the participants of each group.

Table 7.

Reliability scores.

The most reliable students are P02, P06 and P14, and P08 also has high reliability. The rest of the participants have a correlation that is either close to zero or negative, which means that they gave high scores to the worst videos or low scores to the best videos. A correlation of NA means that the participant gave the same score to all the videos, so no correlation can be computed.

Figure 4 shows the distribution of the participants’ votes, coloured by the group to which each participant belongs. It shows that participants tended to over-rate the videos, although some participants gave very low scores. P12, P03 and P09 gave the same scores to all the videos. Most of the participants show low variability in their scores, except for P06, P09 and P14. The figure also shows that there is no consensus among the votes within each group. Figure 5 shows the scores aggregated by group to make this clearer.

Figure 4.

Overall score per student.

Figure 5.

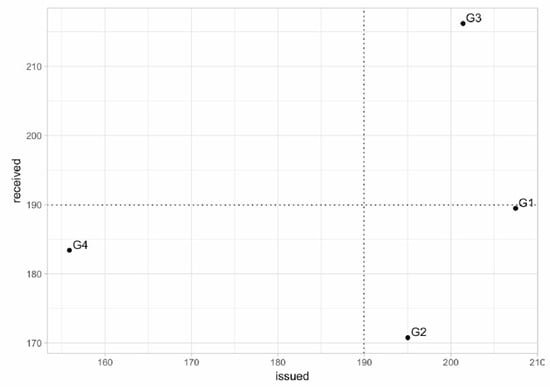

Score given/received by each group.

5.1.3. Group Analysis

Once the individual behaviour has been analysed, we focus our attention on how individual decisions affect the group. Figure 5 shows the relationship between the score received and the score given. This graph shows four different behaviours (score given/score received):

Low/Low: they give and receive lower scores than the average (G4).

Low/High: they give lower scores than the average but receive higher scores than the average (none).

High/Low: they give higher scores than the average but receive lower scores than the average (G1 and G2).

High/High: they give and receive higher scores than the average (G3).

As mentioned before, G3 has the video with the highest scores, while G2 has the video with the lowest scores, yet both groups contributed similarly with their votes. Regarding G4 and G1, G4 gave lower scores than the rest, whereas G1 gave higher scores.
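The four behaviours listed above amount to comparing each group's mean given and received scores with the respective averages over all groups. A minimal Python sketch, assuming the per-group means are already computed; ties with the average are counted as Low here, a choice the text does not specify. The helper names and sample numbers are hypothetical, chosen to reproduce the pattern reported above rather than the actual Figure 5 values.

```python
from statistics import mean


def level(value, average):
    """'High' if value is above the average, otherwise 'Low' (ties count as Low)."""
    return "High" if value > average else "Low"


def classify_groups(given, received):
    """Label each group as <given level>/<received level> relative to the averages."""
    avg_given = mean(given.values())
    avg_received = mean(received.values())
    return {g: f"{level(given[g], avg_given)}/{level(received[g], avg_received)}" for g in given}


# Invented mean scores given and received by each group.
given = {"G1": 8.0, "G2": 8.0, "G3": 7.5, "G4": 5.0}
received = {"G1": 6.0, "G2": 5.0, "G3": 9.0, "G4": 6.5}
print(classify_groups(given, received))
```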

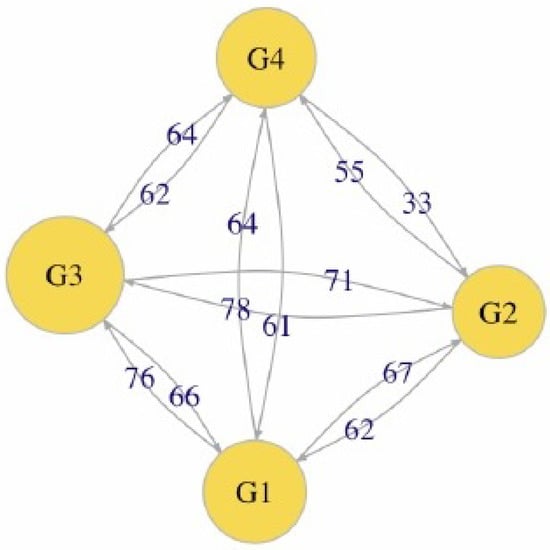

If we focus on the scores exchanged between each pair of groups (Figure 6), the scores are similar, except between G2 and G4. Apart from that pair, reciprocity is high in all cases, which suggests that there was no strategic voting: no group voted low to hinder the rest or to penalize a group its members disliked.
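Reciprocity between pairs of groups can be checked by comparing the mean score that group A gave to group B with the score that B gave to A. The Python sketch below assumes a dictionary keyed by (giver, receiver) with an entry for both directions of every pair; `pair_asymmetry` is a hypothetical helper and the values are invented. A large gap flags a pair, such as G2 and G4 in Figure 6, for closer inspection.

```python
from itertools import combinations


def pair_asymmetry(matrix):
    """Absolute gap between the scores two groups gave each other.

    matrix[(a, b)] is the mean score group a gave to group b's video.
    Returns {(a, b): |a->b - b->a|} for every unordered pair, with a < b.
    """
    groups = sorted({a for a, _ in matrix} | {b for _, b in matrix})
    return {(a, b): abs(matrix[(a, b)] - matrix[(b, a)]) for a, b in combinations(groups, 2)}


scores = {
    ("G1", "G2"): 7.0, ("G2", "G1"): 7.5,
    ("G1", "G3"): 8.0, ("G3", "G1"): 8.0,
    ("G2", "G3"): 6.0, ("G3", "G2"): 9.0,
}
gaps = pair_asymmetry(scores)
print(max(gaps, key=gaps.get))  # the least reciprocal pair
```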

Figure 6.

Score given/received by each group grouped by pairs.

5.2. Tablet Interaction

As mentioned, the participants used the iPads to access ClipIt in sessions 7 and 8. During these sessions, they had to log in to ClipIt without any prior instructions, upload their videos, comment on their peers’ videos, and complete the final test. Each group used two iPads at the same time to perform these tasks, so while two participants interacted with the platform, the remaining members of the group helped them. We did this to avoid confusion arising from several students of the same group uploading the same video to the group archives.

To measure how the participants interacted with the iPads and the application, we first used direct observation. The observer had worked with the participants previously, which helped them to work without distractions. Overall, the students knew how to interact with iPads, since iPads are included in their curricula and they use them regularly. Touch interaction seemed suitable for them, and they discovered the functionality of ClipIt as the sessions progressed. Since ClipIt has a responsive layout, the user interface adapted automatically to the iPads, and the participants were able to see each element of the platform without effort. In addition, the rubric-based peer-review process is well suited to touch devices, since the participants only had to touch the score they wanted to give to each concept and then touch a submit button.

On the other hand, we found several issues regarding the interaction with both the iPads and the platform. Expressing written opinions on the iPads was difficult for the participants: without a physical keyboard, they struggled with written tasks and spent more time than expected writing on the group’s forum or giving feedback on the other groups’ videos. In addition, some of the participants with visual disabilities reported difficulties reading texts on the platform due to the low contrast between the text and the background.

Finally, in the last session, the students completed a satisfaction questionnaire that included some questions from QUIS (Questionnaire for User Interaction Satisfaction). Because the questionnaire was addressed to people with intellectual disabilities, we reduced the number of questions and kept them easy to read. These questions focused on the user interface rather than on the learning capabilities of ClipIt, so that we could work on improving the accessibility of the platform in the future. Table 8 shows the results of the five questions about ClipIt’s accessibility. Using a Likert scale, the participants rated each characteristic of ClipIt in the table from strongly disagree (1) to strongly agree (5). Each cell gives the number of participants who gave that score to that question.

Table 8.

Summary of user interaction questions and answers.
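Each cell of Table 8 is a count of participants per question and Likert score. A minimal Python sketch of how such a table can be tallied from raw answers follows. The response layout (one list of ratings per participant, one rating per question) and the function name `tally` are assumptions for illustration, and the sample answers are hypothetical.

```python
from collections import Counter

LIKERT_SCORES = range(1, 6)  # 1 = strongly disagree ... 5 = strongly agree


def tally(responses: list[list[int]]) -> list[list[int]]:
    """Build a question-by-score count table.

    responses[p][q] is participant p's rating of question q (assumed layout).
    Returns table[q][s - 1] = number of participants who gave score s to question q.
    """
    n_questions = len(responses[0])
    table = []
    for q in range(n_questions):
        counts = Counter(answer[q] for answer in responses)
        if any(s not in LIKERT_SCORES for s in counts):
            raise ValueError(f"question {q + 1} has a rating outside 1-5")
        table.append([counts[s] for s in LIKERT_SCORES])
    return table


# Hypothetical answers from three participants to two questions.
print(tally([[1, 5], [2, 4], [1, 5]]))  # [[2, 1, 0, 0, 0], [0, 0, 0, 1, 2]]
```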

Overall, the results in Table 8 again reflect the problems mentioned above. Students with visual disabilities sometimes struggled to read the text content provided (four participants strongly disagreed and three disagreed on the first item, and five participants strongly disagreed and one disagreed on the fifth item). They pointed out that they had problems with the colours used in the platform. To solve this issue, we adopted a set of colours with better contrast to avoid these visual difficulties. The participants highlighted that the content of the platform was well structured and that it was easy to navigate through ClipIt to perform the requested tasks (six participants agreed and four strongly agreed on the second item, two agreed and ten strongly agreed on the third item, and six agreed and three strongly agreed on the fourth item). In addition, if one participant became lost in the platform, the other members of the same group were easily able to explain what to do, although this happened only in the first session, when they started to use ClipIt.

During the focus group, the participants highlighted that they enjoyed performing the tasks with tablets as a change from their usual way of learning. They were motivated to perform the task and wanted to do it as well as possible, even though it did not count towards their course grade. However, two groups had problems with one of their members, who did not participate much in the creation of the educational videos. The resulting discomfort led these members to sabotage the videos of other groups in the peer-review evaluation.

5.3. Test Results

Table 9 shows the results of the pre-test and the post-test. One of the students could not attend the session in which the post-test was administered, so we compared only the results of the participants who completed both tests. Both tests were performed individually.

Table 9.

Test results.

In the post-test, the number of correct answers is higher than in the pre-test: the median is five in the pre-test and six in the post-test. We checked the normality of both distributions using the Kolmogorov–Smirnov test, which showed no significant deviation from normality in either case (p > 0.05). We therefore performed a Student’s t-test to compare the pre-test and post-test scores, which yielded p < 0.05. This indicates a significant learning gain in the job-related concepts that the participants worked on during the experience.
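Because the same participants took both tests, a paired-samples t-test is one natural reading of this analysis; the text does not state which variant of Student’s t-test was used, so this is an assumption. The minimal sketch below keeps only participants present in both tests, reports the medians, and computes the paired t statistic with Python’s standard library. It does not compute the p-value or the Kolmogorov–Smirnov check; in SciPy, for example, `scipy.stats.ttest_rel` performs the full paired test and `scipy.stats.kstest` the normality check, but the source does not say which tool was used. The function names and all scores are hypothetical.

```python
from math import sqrt
from statistics import mean, median, stdev


def common_ids(pre: dict[str, int], post: dict[str, int]) -> list[str]:
    """Participants who took both tests (an absent student is dropped)."""
    return sorted(pre.keys() & post.keys())


def paired_t(pre: dict[str, int], post: dict[str, int]) -> tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom on post - pre."""
    ids = common_ids(pre, post)
    diffs = [post[i] - pre[i] for i in ids]
    sd = stdev(diffs)  # sample standard deviation; needs at least two pairs
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical correct-answer counts, NOT the study's data; P5 missed the post-test.
pre = {"P1": 4, "P2": 5, "P3": 5, "P4": 6, "P5": 3}
post = {"P1": 6, "P2": 6, "P3": 5, "P4": 7}
ids = common_ids(pre, post)
print(median(pre[i] for i in ids), median(post[i] for i in ids))  # 5.0 6.0
t, df = paired_t(pre, post)
print(round(t, 3), df)  # 2.449 3
```

The t statistic would then be compared against the t distribution with `df` degrees of freedom to obtain the p-value.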

6. Discussion

During the first part of the study, the participants worked in groups to design a script to use when recording a video about an assigned job scenario. This way of working promoted the active exchange of ideas within the group, which not only increased the participants’ interest but also promoted their critical thinking. These results align with those obtained by Moore et al. [29]. Collaborative learning seems a suitable methodology for students with intellectual disabilities, since it gives them opportunities to discuss the ideas proposed for the video cooperatively. Wegerif, Mercer and Dawes [30] postulate that the experience of social reasoning can improve individual reasoning, indicating that students can enhance these skills by practising together with a tutor. When students with intellectual disabilities get the opportunity to take part in group goal setting, as happened when they worked in groups to create the video script, this encourages learner autonomy and autonomous motivation [31].

Regarding RQ1, the use of tablet computers in learning scenarios can increase student engagement and motivation [32]. With a single iPad, the students could record, upload, comment on, and rate videos, which gave them a degree of freedom that they would not have had with a laptop. The pedagogical team remarked that the students were more engaged and motivated than with traditional methods, such as the teacher asking questions and the students answering them, or the students learning the sequence to follow in certain tasks. In addition, the team valued touch interaction positively, since some students had limited motor skills and would struggle to use a computer mouse. As found in the literature, the directness of touch interaction and the portability of tablets helped lower the barrier to interacting with computers [33]. Our results also support previous research on combining handheld devices and VSM to enhance the learning processes of students with intellectual disabilities [34]. On the other hand, we also recognized that tablets were unsuitable for entering large quantities of text [32].

Although many studies have been conducted using video modelling, few studies appear to have taught role playing to children with intellectual disabilities via VSM [25]. Our study helps shed some light on this matter (RQ2). The results suggest that VSM was an effective way of teaching role playing and that participants acquired more knowledge about how to behave and act in a workplace, skills that are difficult to train by other means [6]. The effectiveness observed in this learning experiment parallels the results of Akmanoglu and Tekin-Iftar [35], who reported that, with VSM, the motivation to watch oneself on video was enhanced by the portrayal of predominantly positive and successful behaviours, which also increased participants’ attention and enhanced their self-efficacy. Hence, providing VSM with metered guidance (in our case, questions that helped participants design the video script) can be effective for teaching various skills.

Research on content-based digital video production by people with intellectual disabilities still has room to grow, as researchers usually focus on the production process and group interactions rather than on the final learning outcomes [36]. The results of our study showed that video production can provide several benefits in a learning environment, such as (i) students internalizing and using content and being engaged in tasks such as sharing and negotiating about the script for their clips; and (ii) learners developing digital skills (producing the videos) and sociolinguistic skills in an integrative manner, which are similar to the benefits reported by Goulah [18].

Participants developed digital competencies and social skills while designing and producing the videos. Moreover, our study showed how video creation can enhance the transfer of knowledge to the real world, since videos allow real-life situations to be recreated in a safe environment suitable for people with intellectual disabilities. However, it should be noted that the benefits of this case study do not come only from the creation of the video. As Karppinen [37] states, the learning outcomes do not depend on the process of producing or watching a video, but on the way this process is integrated into the overall learning environment.

Participants also learned to act as peer reviewers. As noted in the literature, peer review has some validity problems that have not yet been resolved [38], and some of them arose in our work. For instance, some students underrated their peers’ videos to hurt their competitors, and some overrated videos because they were friends with their authors. Further study should examine how to counter the emotional factors in the peer-review process that make students unreliable reviewers. Regarding RQ3, the data gathered show that the participants were not prepared to act as reliable reviewers, but during the peer-review process they showed critical thinking and interest in analysing the mistakes of other groups in order to learn from them.

7. Conclusions

Technology and VSM have unique features and are believed to enhance student learning. However, little is known about how their use affects students with intellectual disabilities. The adoption of technology is hindered when teachers struggle to find appropriate content for their students. Moreover, teachers lack appropriate tools to assess how much their students have actually learned or to track their progress. This study has shown how iPads, an LMS, and VSM can be combined to allow teachers to carry out collaborative activities using touch devices. The assessment methods also allow teachers to check student progress and assess their learning accurately.

Using technology in education has proven beneficial for students with intellectual disabilities. Moreover, owing to the adaptability it offers, much effort has been made to incorporate learning technology into their education. By combining innovative software, appropriate content, and handheld devices, we can increase student motivation while improving the learning process. The combination of all these features eases the transition from traditional teaching to more innovative methodologies, helping students to acquire academic and non-academic skills.

Using VSM with iPads and ClipIt to share and evaluate videos improved students’ comprehension of the tasks they had to perform and led to a knowledge gain. Given the small number of participants in our study, however, the results are not sufficient to confirm whether VSM is suitable for teaching job skills to students with intellectual disabilities. More research should therefore be conducted in this area to find appropriate methodologies for this goal.

Author Contributions

Conceptualization, D.R.-Á. and E.M.; methodology, D.R.-Á. and E.M.; validation, D.R.-Á., E.M. and P.A.H.; formal analysis, D.R.-Á. and P.A.H.; writing—original draft preparation, D.R.-Á.; writing—review and editing, E.M. and P.A.H.; supervision, E.M.; project administration, E.M.; funding acquisition, E.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been funded by the Ministry of Science and Innovation, project Indigo! with reference number PID2019-105951RB-I00/AEI/10.13039/501100011033 and by the Madrid Regional Government through the project eMadrid-CM (S2018/TCS-4307). The latter is also co-financed by the Structural Funds (FSE and FEDER). ClipIt has been funded by the European Commission (grant agreement n° 317964 JUXTALEARN).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Acknowledgments

Thanks to the participants who were involved in the experience presented in this paper and to the pedagogical team.

Conflicts of Interest

The authors declare no conflict of interest in writing this manuscript. Datasets have been anonymized to ensure that participants cannot be identified. Students uploaded their own videos to YouTube, and the videos were set to private access. All participants are of legal age. They knew that they were participants in an educational study and that research based on it could be published in several venues. The names of the students do not appear anywhere. Their legal guardians provided informed consent for the videos to be recorded and uploaded to the YouTube platform. The videos were deleted at the end of the study.

References

- Taylor, J.; Hodapp, R.M. Doing nothing: Adults with disabilities with no daily activities and their siblings. Am. J. Intellect. Dev. Disabil. 2012, 117, 67–79.

- Robinson, W.; Syed, A.; Akhlaghi, A.; Deng, T. Pattern discovery of user interface sequencing by rehabilitation clients with cognitive impairments. In Proceedings of the IEEE 2012 45th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2012; pp. 3001–3010.

- Lazar, J.; Kumin, L.; Feng, J. Understanding the computer skills of adult expert users with Down syndrome: An exploratory study. In Proceedings of the 13th international ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’11), Scotland, UK, 24–26 October 2011; pp. 51–58.

- Gómez, J.; Alamán, X.; Montoro, G.; Torrado, J.C.; Plaza, A. AmICog–mobile technologies to assist people with cognitive disabilities in the work place. ADCAIJ Adv. Distrib. Comput. Artif. Intell. J. 2014, 2, 9–17.

- Giannakos, M.N.; Vlamos, P. Using webcasts in education: Evaluation of its effectiveness. Br. J. Educ. Technol. 2013, 44, 432–441.

- MacDonald, R.; Sacromone, S.; Mansfield, R.; Wiltz, K.; Ahearn, W. Using video modelling to teach reciprocal pretend play to children with autism. J. Appl. Behav. Anal. 2009, 42, 43–55.

- Kagohara, D.M.; van der Meer, L.; Ramdoss, S.; O’Reilly, M.F.; Lancioni, G.E.; Davis, T.N.; Sigafoos, J. Using iPods® and iPads® in teaching programs for individuals with developmental disabilities: A systematic review. Res. Dev. Disabil. 2013, 34, 147–156.

- Chang, Y.; Kang, Y.; Huang, P. An augmented reality (AR)-based vocational task prompting system for people with cognitive impairments. Res. Dev. Disabil. 2013, 34, 3049–3056.

- Chang, Y.; Chou, L.; Wang, Y.; Chen, S. A kinect-based vocational task prompting system for individuals with cognitive impairments. Pers. Ubiquitous Comput. 2013, 17, 351–358.

- Kasanen, M. Software Development for People with Intellectual or Developmental Disabilities in 2010–2019: A Systematic Mapping Study; University of Jyväskylä: Jyväskylä, Finland, 2020.

- Blanco, T.; Marco, A.; Casas, R. Online social networks as a tool to support people with special needs. Comput. Commun. 2016, 73, 315–331.

- Nganji, J.T. Designing disability-aware e-learning systems: Disabled students’ recommendations. Int. J. Adv. Sci. Technol. 2012, 48, 1–70.

- Arachchi, T.K.; Sitbon, L.; Zhang, J. Enhancing Access to eLearning for People with Intellectual Disability: Integrating Usability with Learning. In IFIP Conference on Human-Computer Interaction; Springer: Cham, Switzerland, 2017; pp. 13–32.

- Urquiza-Fuentes, J.; Hernán-Losada, I.; Martín, E. Engaging students in creative learning tasks with social networks and video-based learning. In Proceedings of the 2014 IEEE Frontiers in Education Conference (FIE), Madrid, Spain, 22–25 October 2014; pp. 1–8.

- Cruse, E. Using Educational Video in the Classroom: Theory, Research and Practice; Library Video Company: West Conshohocken, PA, USA, 2006.

- Clark, J.R. Media Will Never Influence Learning. Educ. Technol. Res. Dev. 1994, 42, 21–29.

- Kozma, R. Will media influence learning? Reframing the debate. Educ. Technol. Res. Dev. 1994, 42, 7–19.

- Goulah, J. Village voices, global visions: Digital video as a transformative foreign language learning tool. Foreign Lang. Ann. 2007, 40, 62–78.

- Palmgren-Neuvonen, L.; Korkeamäki, R.L. Teacher as an orchestrator of collaborative planning in learner-generated video production. Learn. Cult. Soc. Interact. 2015, 7, 1–11.

- Bellini, S.; Akullian, J. A meta-analysis of video modelling and video self-modelling interventions for children and adolescents with autism spectrum disorders. Except. Child. 2007, 73, 264–287.

- Allen, K.D.; Wallace, D.P.; Renes, D.; Bowen, S.L.; Burke, R.V. Use of video modelling to teach vocational skills to adolescents and young adults with autism spectrum disorders. Educ. Treat. Child. 2010, 33, 339–349.

- Shipley-Benamou, R.S.; Lutzker, J.R.; Taubman, M. Teaching daily living skills to children with autism through instructional video modelling. J. Posit. Behav. Interv. 2002, 4, 165–175.

- Apple, A.L.; Billingsley, F.; Schwartz, I.S. Effects of video modelling alone and with self-management on compliment-giving behaviours of children with high-functioning ASD. J. Posit. Behav. Interv. 2005, 7, 33–46.

- Kellems, R.O.; Morningstar, M.E. Using video modelling delivered through iPods to teach vocational tasks to young adults with autism spectrum disorders. Career Dev. Transit. Except. Individ. 2012, 35, 155–167.

- Boudreau, E.; D’Entremont, B. Improving the pretend play skills of preschoolers with autism spectrum disorders: The effects of video modelling. J. Dev. Phys. Disabil. 2010, 22, 415–431.

- Meyer, J.; Land, R. Overcoming Barriers to Student Understanding: Threshold Concepts and Troublesome Knowledge; Routledge: London, UK, 2006.

- Llinás, P.; Haya, P.; Gutierrez, M.A.; Martín, E.; Castellanos, J.; Hernán, I.; Urquiza, J. ClipIt: Supporting social reflective learning through student-made educational videos. In European Conference on Technology Enhanced Learning; Springer International Publishing: New York, NY, USA, 2014; pp. 502–505.

- Vidal, J.L.; Gonzalez, J.A.; Ordenador, A.I.P. La Interacción Persona-Ordenador; AIPO: Lérida, Spain, 2001.

- Moore, D.; McGrath, P.; Thorpe, J. Computer-aided learning for people with autism—A framework for research and development. Innov. Educ. Teach. Int. 2000, 37, 218–228.

- Wegerif, R.; Mercer, N.; Dawes, L. From social interaction to individual reasoning: An empirical investigation of a possible socio-cultural model of cognitive development. Learn. Instr. 1999, 9, 493–516.

- Garrels, V.; Arvidsson, P. Promoting self-determination for students with intellectual disability: A Vygotskian perspective. Learn. Cult. Soc. Interact. 2019, 22, 100241.

- Holzinger, A. Finger instead of mouse: Touch screens as a means of enhancing universal access. In Universal Access Theoretical Perspectives, Practice, and Experience; Springer: Berlin, Germany, 2002; pp. 387–397.

- Abinali, F.; Goodwin, M.S.; Intile, S. Recognizing stereotypical motor movements in the laboratory and classroom: A case study with children on the autism spectrum. In Proceedings of the 11th International Conference on Ubiquitous Computing, Orlando, FL, USA, 30 September–3 October 2009; ACM: New York, NY, USA, 2009; pp. 71–80.

- Cihak, D.; Bowlin, T. Using video modelling via handheld computers to improve geometry skills for high school students with learning disabilities. J. Spec. Educ. Technol. 2009, 24, 17–29.

- Tekin-Iftar, E.; Kırcaali-Iftar, G. Ozel Egitimde Yanlissiz Ogretim Yontemleri, 2nd ed.; Errorless Teaching Procedures in Special Education; Nobel Yayinevi: Ankara, Turkey, 2006.

- Masats, D.; Dooly, M.; Costa, X. Exploring the potential of language learning through video making. In Proceedings of the EDULEARN09 Conference, Barcelona, Spain, 6–8 July 2009.

- Karppinen, P. Meaningful Learning with Digital and Online Videos: Theoretical Perspectives; AACE: Norfolk, VA, USA, 2005.

- Liu, E.Z.F.; Lin, S.S.; Chiu, C.H.; Yuan, S.M. Web-based peer review: The learner as both adapter and reviewer. IEEE Trans. Educ. 2001, 44, 246–251.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).