Abstract

The design of the formative and summative assessment processes is of paramount importance in helping students avoid procrastination and in guiding them towards the learning objectives described in the course syllabus. If the assessment processes are poorly designed, the outcome can be disappointing and can include high grades but poor learning. In this paper, we describe the unexpected and undesirable effects that two design decisions had on the behavior of students and on both grading and learning: an on-demand formative assessment, and a summative assessment timetable that left the most cognitively demanding part, problem-solving, to the end of the course. As the formative assessment was voluntary, students procrastinated until the last minute. However, the real problem was that, due to the design of the summative assessment, they focused their efforts mainly on its easiest parts, passing the course with ease but achieving a low level of learning, as evidenced by the low scores in the problem-solving part of the summative assessment.

1. Introduction

The syllabus of a course includes, among other things, the learning objectives, a description of the assessment procedures and the means, including the formative assessment, that the students are supposed to use to reach the established objectives. This guide is at the disposal of the students from the beginning of the course and, if properly followed, it is supposed to be a priceless tool for the students on their learning path. However, to reach the learning objectives, the assessment processes and the means at the disposal of students must be carefully chosen and used throughout the course.

Therefore, one of the most important actions teachers can take is to design the formative and summative assessment processes carefully [1,2,3]. The former provides the students with feedback and useful information about their degree of understanding of the subject matter [4,5] so that they have a predictor of the outcome [6,7] and know where to direct their efforts, leading to self-regulated learning [8,9]. The latter provides a formal certification of the acquired knowledge and skills.

To design the assessment procedures, it must be taken into account that a good grade is not always synonymous with a good understanding of the subject matter. Usually, the main aim of students is to pass the exam while optimizing the use of the resources at their disposal [10,11]. Time is the most important of these resources, and the one students most want to save, in order to free hours for the extensive social life associated with their age. The main aim of the teacher is to help students in their learning process, which usually means getting them to spend, in a continuous and well-organized way, as many resources as necessary on their education, as that will guarantee a good outcome. Combining both views is not an easy task [12,13] and calls for a good knowledge of the behavior of students and of how and when they will use the resources at their disposal. Lack of experience or unexpected mistakes can easily spoil all the work and, worse still, the consequences can even go unnoticed.

Part of the problem can be caused by the use of automated online tools, mainly based on quizzes, which, on the other hand, also have many important advantages [14]. In fact, online quizzes are displacing teachers in the task of marking written homework and giving students the necessary feedback. Furthermore, this change has been accelerated worldwide by the restrictions imposed during the COVID-19 pandemic.

Although this change seems desirable and unstoppable, it has some disadvantages. Two of them are:

- Teachers can feel their involvement in the learning process of students is less and less necessary. The possibilities online quizzes offer can lead to the design of on-demand and automated formative assessment systems based on online quizzes where the participation of the teacher is very limited. This will not be a problem for the more motivated and skilled students, but can be a problem for the rest of them as no one with experience is helping them during the course.

- Quizzes and other online tools are not very well suited for time-consuming and cognitively demanding activities like solving numerical problems. This does not mean a quiz cannot be used to test high-level cognitive skills [15], but if a quiz is defined as a set of short questions that must be answered in 1 or 2 min, essays and time-consuming questions that require a long calculation process are inevitably ruled out.

The first disadvantage leads immediately to procrastination or avoidance if completing the formative assessment is not mandatory, but voluntary.

Procrastination, which can be defined as an irrational tendency to postpone performing a task, is recognized as one of the main threats to student performance [16,17], leading not only to a decrease in the quality and quantity of learning, but also to an increase in stress [18] that affects even private life. The causes of procrastination in an academic context have been studied during recent decades and include variables such as internet addiction [19], self-esteem, perfectionism, planning skills, extroversion, emotional intelligence and self-efficacy [18], although self-efficacy and procrastination are, by definition, antonyms and mutually exclusive. Nevertheless, the main causes of procrastination at a young age could be related to the inevitable preference of students for socializing and enjoying life and to the absence of a pressing need to earn a living. The immediate reward associated with socializing and fun activities is hardly comparable to the distant payoff of working today on something that can be done tomorrow [20].

Another problem is that, although students can receive the correct answer and even an explanation from the automated system, there is no guarantee that they will understand it. As the interaction with the teacher is reduced (though not on purpose), they could be more reluctant to ask for support, something that is in any case a widespread problem [21], even though actively encouraging them ameliorates it [22].

The second disadvantage does not mean online quizzes are not pertinent. As a matter of fact, online quizzes are in high demand among students and seem to improve their summative assessment results [23,24,25,26], although they offer no advantage over written quizzes [27], and some authors doubt whether doing online quizzes improves the final marks or whether, simply, the more skilled and willing students do more quizzes [28,29]. Some even doubt whether in some cases the relation exists or whether it is positive [30,31], possibly due to the detrimental effect of overconfidence [6]. That said, if complex and time-consuming questions are ruled out, it will be difficult for the students to reach a deep understanding of the subject matter.

This paper presents a study of the causes of the undesirable and unexpected consequences that changes in the formative and summative assessment procedures had on the learning of the students of a materials science course, and of why those consequences almost went undetected.

The changes, some of them requested by the students in previous years, were twofold. For the formative assessment, a weekly summative assessment that also served as formative assessment was replaced by an online, automated and on-demand system based mainly on quizzes. For the summative assessment, two online exams based on quizzes were used, together with one final written exam aimed at evaluating the numerical problem-solving skills of students.

The new assessment procedures led to an increase in the scores obtained in the quiz part of the summative assessment but also to a significant drop in the scores obtained in the written exam. Most of the students passed the course, but with a low grade due to the low scores in the written exam, which required deeper knowledge, calculations and slower, more thoughtful work.

The problem was that the formative and summative assessments were poorly designed to force the activation of self-regulated learning or, rather, that self-regulation consisted of a shortcut that avoided the effort of deep learning. The identified mistakes serve as a warning against assessment procedures that do not prevent procrastination, that allow students to pass the course by completing only part of the assignments, or that evaluate only part of the skills students should have acquired.

2. Materials and Methods

2.1. Changes in Assessment Procedures

“Materials” is one of the required courses students must take during their second year of studies towards a bachelor’s degree in Industrial Design Engineering and Product Development at the Valencia Polytechnic University in Spain. This course, with nearly 150 students enrolled every year, is divided into 14 chapters, and the minimum score to pass the course is 5 out of 10. The course extends over 15 weeks.

The summative assessment procedure was changed for the 2019/2020 course to satisfy the demands of some of the students, who stated that a continuous summative assessment was too demanding and stressful and left less time than desirable for other courses. In fact, this feeling has also been observed by other authors [32,33] and affects teachers as well [34]. The old grading was:

- One online quiz with 10 questions for each chapter except the first one: 15%.

- One online problem for each chapter except the first one (by problem we mean time-consuming questions that require a numerical calculation process). The solution was sent scanned or photographed: 15%.

- Two written exams with 10 problems, one mid-semester and another one at the end of the course: 55%.

- Lab reports and post-lab questions and three online quizzes about the lab sessions: 15%.

- There is no minimum passing grade for the different parts of the assessment.

The last rule is part of the assessment code established by the Higher Technical School of Design Engineering where the course is taught.

Regarding the formative assessment, the online quizzes and problems were supposed to give enough feedback to the students, as one was done almost every week.

The new grading was:

- Two online quizzes, one mid-semester and another one at the end of the course: 35%.

- One on-site written exam with 10 numerical problems at the end of the course: 40%.

- Lab reports and post-lab questions: 25%.

- There is no minimum passing grade for the different parts of the assessment.

In this case, the students had at their disposal, from the beginning of the course, a series of online quizzes (10 questions each) and some online problems involving mathematical calculations. The questions for the quizzes were randomly taken from a database that contains between 30 and 110 questions for each chapter. There was no time limit, and the students could take as many quizzes as they wished. Once a quiz was finished, the students received their score immediately along with the correct answers. The online formative problems were scored automatically by comparing the numerical result entered by the students with the correct one, allowing some margin of error, but no description of the correct calculation process was given. In this way, feedback was provided by the system without the intervention of the teacher unless students asked for a tutoring meeting. The questions for the summative quizzes were not taken from the same database as the questions for the formative quizzes.
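The automatic scoring of the formative problems described above can be illustrated with a minimal sketch. The function name and the 2% relative tolerance are assumptions made for illustration only; the text states merely that some margin of error was allowed, and this is not the code used by the learning management system.

```python
import math


def score_numerical_answer(submitted: float, correct: float, rel_tol: float = 0.02) -> int:
    """Return 10 if the submitted result is close enough to the correct one, else 0."""
    # rel_tol is an assumed value: the paper only mentions "some margin of error".
    return 10 if math.isclose(submitted, correct, rel_tol=rel_tol) else 0


# Hypothetical answers to a problem whose correct result is 200.0.
print(score_numerical_answer(203.0, 200.0))  # inside the assumed 2% margin
print(score_numerical_answer(150.0, 200.0))  # outside the margin
```

Because only the final value is compared, a student who receives 0 learns nothing about which step of the calculation went wrong.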

The change was supposed to provide students with a means of obtaining direct and continuous formative assessment during the course. However, unlike in previous years, when the quizzes were mandatory and part of the summative assessment, their use was voluntary and depended only on the behavior and will of the students.

The consequences of the changes showed that there was a fault in the design of the assessment procedures that needed to be investigated. This fault was presumed to be related to how the students adapted their behavior to the new formative and summative assessment.

2.2. Participants

During the year of study, 135 students enrolled in the course, 48% male and 52% female. Apart from gender, the students formed a homogeneous group of mainly Caucasian Spanish students with a medium-high income and cultural level. Only two students were natives of South America and another two were from North Africa. These numbers are not high enough to support an investigation of student behavior based on ethnicity.

Except for one North African student, all of them had studied in Spain since childhood, and even this student had been living in Spain for 8 years. Therefore, in general, no language difficulties were expected. Additionally, no disabilities were reported by any student.

2.3. Methodology

The effects of the changes in the assessment procedures were evaluated by comparing the mean summative scores with those obtained by the students in previous years, mainly the year before the change, which is a good representative of all of them. As some consequences of the changes were undesirable, the changes are not going to be maintained. Therefore, there is no possibility of obtaining new data from another year with the same assessment procedures, which could have confirmed the findings presented in this paper. Nevertheless, the differences in the problem-solving part of the summative assessment were considered large enough to be credible and to be a consequence of the changes. Additionally, once the behavior of students and the assessment timetable were studied, the consequences seemed logical.

To study the behavior of students and uncover the relationship between that behavior and learning, we studied the data collected by the learning management system of the university, which is based on Sakai. The available data are divided into two databases. The first one, automatically collected, includes the identification of the student, the start and finish time of each formative quiz or problem, and the scores. These data were available for all chapters except chapter 8, due to computer problems that led to the loss of the data during the onset of the COVID-19 pandemic. The second one includes the identification of the students and the scores of the summative assessment, which were entered manually by the teachers. After retrieval, the data were analyzed using macros programmed in MS Excel. The student ID served as the link between both databases.

As the changes consisted mainly of an on-demand formative assessment and of changes in the summative assessment, the relation between them and the summative scores was analyzed. The first step was to study the behavior of students with regard to the voluntary formative assessment. The number of formative quizzes and numerical problems taken per course week and per student was counted. This simple analysis provided a rough view of when and how much the students used the formative assessment during the course.
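The counting step can be sketched as follows. The study itself used macros programmed in MS Excel, so this Python version is only an equivalent illustration; the attempt log, the student identifiers and the course start date are hypothetical and do not reproduce the real export.

```python
from collections import Counter
from datetime import date

# Hypothetical attempt log: (student_id, kind, start_date).
ATTEMPTS = [
    ("s01", "quiz", date(2020, 1, 27)),
    ("s01", "quiz", date(2020, 2, 3)),
    ("s01", "problem", date(2020, 2, 3)),
    ("s02", "quiz", date(2020, 2, 4)),
    ("s02", "quiz", date(2020, 2, 10)),
]
COURSE_START = date(2020, 1, 27)  # assumed first day of course week 1


def course_week(day: date, course_start: date = COURSE_START) -> int:
    """Map a calendar date to a 1-based course week."""
    return (day - course_start).days // 7 + 1


def count_by_week(attempts):
    """Count attempts per (course week, kind of activity)."""
    counts = Counter()
    for _student, kind, day in attempts:
        counts[(course_week(day), kind)] += 1
    return counts


def count_by_student(attempts):
    """Count attempts per (student, kind of activity)."""
    counts = Counter()
    for student, kind, _day in attempts:
        counts[(student, kind)] += 1
    return counts


print(count_by_week(ATTEMPTS))
print(count_by_student(ATTEMPTS))
```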

The consequences of the design of the formative assessment were studied by relating the amount and type (quizzes or problems) of formative work done by the students throughout the course with the scores of the different parts of the summative assessment. The temporal distribution of the work done showed a direct relationship with the summative assessment, which provided the main clue for finding the most critical mistakes of the new assessment procedures.

3. Results and Discussion

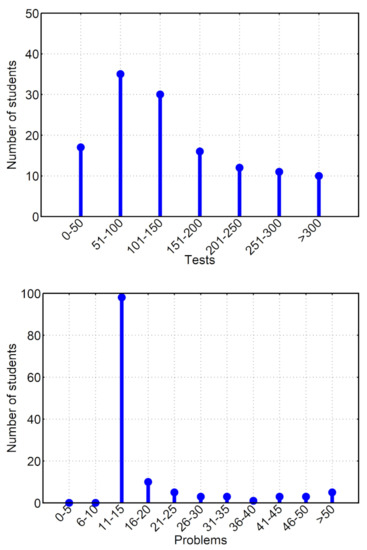

A total of 132 students took at least one quiz or solved at least one numerical problem. In total, 20,358 quizzes and 1205 problems were done (excluding those concerning chapter 8 of the course), which implies that each student did a mean of 154 quizzes and 9.1 problems, that is, not even one problem per chapter. Figure 1 gives a better perspective on how many formative quizzes and problems each student did. Almost 60% of them did fewer quizzes than the mean (154 quizzes per student), while 7.5% of the students did more than 300 quizzes in total.

Figure 1.

Number of students that have done a certain number of tests and problems.
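The per-student means quoted above follow directly from the reported totals. The short check below uses only the figures given in the text; the variable names are chosen here for illustration.

```python
STUDENTS_ACTIVE = 132    # students who did at least one quiz or problem
TOTAL_QUIZZES = 20_358   # formative quizzes done, chapter 8 excluded
TOTAL_PROBLEMS = 1_205   # formative problems done, chapter 8 excluded

mean_quizzes = TOTAL_QUIZZES / STUDENTS_ACTIVE
mean_problems = TOTAL_PROBLEMS / STUDENTS_ACTIVE

print(f"{mean_quizzes:.0f} quizzes and {mean_problems:.1f} problems per student")
```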

There was a slight difference in the number of tests done by male and female students: female students did a mean of 165.6 tests during the course, whereas male students did a mean of 141.3. On the other hand, male students did a mean of 9.9 numerical problems, whereas female students did a mean of 8.3. The difference is not large, but it seems to indicate that female students are more conscious of which parts of the summative assessment are more important for passing the course and, so, prepare for them with a bit more intensity.

The study of the time students took to complete the quizzes shows that 65% of the quizzes were finished in 5 min or less, and 30% in 2 min or less. The commonly accepted value is 1 min per question [35], and it has been verified that longer times could lead to a decrease in performance in terms of scores [36]. This means the 10-question quizzes should be answered in around 10 min.

One potential explanation is that, as the number of quiz questions in the database is 3 to 11 times the number of questions in a quiz, the students repeated the quizzes time and again in an attempt to view as many questions as possible while preparing for the summative assessment. After the first few quizzes, many questions repeat, which means the answer is already known. If that is the case, the students can answer very quickly (they can even mark the correct answer without reading it if they recognize it visually). In any case, the time needed to complete the test is reduced considerably.
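How quickly questions start to repeat can be estimated with a simple probabilistic sketch. It assumes that every quiz draws its 10 questions uniformly at random, without repetition inside a quiz and independently of earlier quizzes; the text only states that questions were randomly taken from the database, so the exact sampling scheme is an assumption. Under it, the expected fraction of the bank already seen after a number of quizzes equals the expected fraction of already-known questions in the next quiz.

```python
def expected_coverage(bank_size: int, quizzes_taken: int, questions_per_quiz: int = 10) -> float:
    """Expected fraction of the question bank seen at least once."""
    # Probability that one given question is absent from a single quiz.
    miss_once = 1 - questions_per_quiz / bank_size
    # Independent quizzes: the question is still unseen only if it was missed every time.
    return 1 - miss_once ** quizzes_taken


# Smallest and largest chapter banks mentioned in the text (30 and 110 questions).
for bank in (30, 110):
    for taken in (1, 3, 11):
        print(bank, taken, round(expected_coverage(bank, taken), 2))
```

Under this assumption, a student working on a 30-question chapter would already recognize most of the questions after a handful of attempts, which is consistent with the very short completion times observed.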

The number of numerical problems done by the students is much lower than that of quizzes. Not only were fewer of them available, but they also required more time and were harder to solve and, above all, the capacity to solve such problems was not evaluated until the end of the course. The limited use of the available online numerical problems as a tool for formative assessment is unfortunate because problem-solving requires a different set of cognitive skills than common quizzes [37], where the work with data or formulas is very limited, and that work is important for reaching all the goals listed in the syllabus.

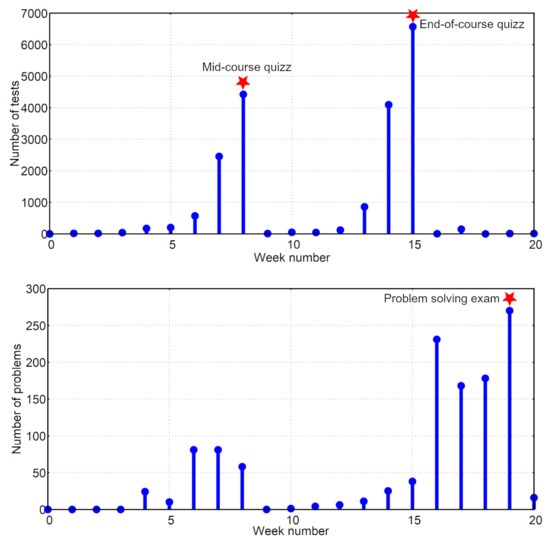

It is also important to know whether the effort of the students was distributed evenly throughout the course, something that would show a high level of self-regulation, which is accompanied by a higher level of academic success [9]. Figure 2 shows that this was not the case and that, despite the great number of quizzes done, this kind of formative assessment was concentrated in the two weeks before the summative assessments.

Figure 2.

Distribution of the number of formative quizzes and problems done by week.

Although unfortunate, this is the typical behavior of students if effective measures to avoid academic procrastination are not implemented in the class [38]. The level of procrastination varies from 50 to 90% according to different authors [39,40], or is even higher [41]. In this case, if the level of procrastination is calculated as the number of quizzes done during the 2 weeks before each exam divided by the total number of quizzes, procrastination reaches nearly 90%. This is not how the procrastination percentage is usually calculated (self-reports about executive functioning are normally used [42]), but it gives a good picture of the problem because 4 weeks (two weeks before each quiz exam) account for only around 23.5% of the course duration, including non-teaching weeks.
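The ratio described above can be written as a short sketch. The weekly counts below are invented for illustration and are not the data of this study. The 17-week total is inferred from the statement that 4 weeks are about 23.5% of the course, and the exam weeks (8 and 17) and the decision to count the exam week inside the 2-week window are assumptions.

```python
def procrastination_index(quizzes_per_week, exam_weeks, window=2):
    """Share of all quizzes done in the `window` weeks ending with each exam week."""
    # Assumption: the exam week itself is counted as part of the window.
    late_weeks = set()
    for exam_week in exam_weeks:
        late_weeks.update(range(exam_week - window + 1, exam_week + 1))
    total = sum(quizzes_per_week.values())
    if total == 0:
        return 0.0
    late = sum(n for week, n in quizzes_per_week.items() if week in late_weeks)
    return late / total


# Invented weekly counts for a 17-week course (NOT the data of this study).
weekly = {week: 10 for week in range(1, 18)}
weekly.update({7: 200, 8: 250, 16: 170, 17: 250})

index = procrastination_index(weekly, exam_weeks=[8, 17])
share_of_course = 4 / len(weekly)
print(f"{index:.0%} of the quizzes were done in {share_of_course:.1%} of the weeks")
```

Comparing the index with the share of the course covered by the windows makes the concentration of work visible: an evenly distributed effort would give two similar percentages.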

The effect of procrastination is usually stress [43] and a lower learning level [44]. With these facts in mind, it is advisable to implement multiple deadlines throughout the course so that the curve of learning and time dedicated to study changes significantly, as shown in Figure 3.

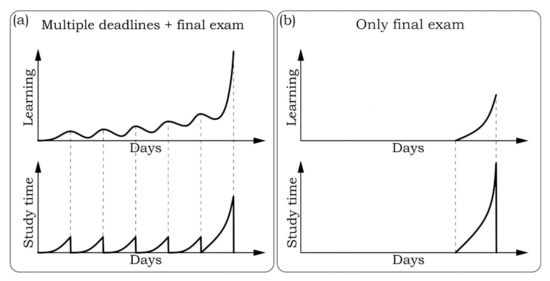

Figure 3.

Evolution of learning (a) with and (b) without multiple deadlines along the course.

According to the model proposed in Figure 3, if students have several deadlines distributed throughout the course, even if they delay the work until the last minute, they will be forced to study before each deadline. Evidently, they are not studying for the final exam, so the learning level may not be as high as desirable and some concepts will be partially forgotten as the days go by. However, not all of them will be forgotten, and the scaffold that is created will help students gain a deeper, greater and faster understanding of the subject matter. Studying at a steady pace will also reduce the stress level and give them more confidence before the final exam.

Unfortunately, it seems self-imposed deadlines do not work very well [45], as they are easily delayed or cancelled. To be effective, those deadlines should somehow be imposed by the teacher. This was one of the main problems of the design of the formative assessment. Students were not forced to take the quizzes after each lesson and, as a consequence, they did not take them until the days before the summative assessment.

Nonetheless, online formative quizzes were useful, even despite procrastination, as a means to prepare the students for the summative assessment. In fact, the mean score for the summative quizzes after the change was 7.33, while in previous years it ranged from 6.5 to 7.0 (in the preceding course it was 6.89).

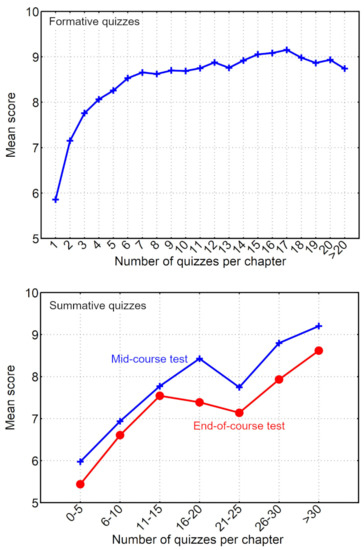

The usefulness of formative quizzes is directly related to the number of quizzes done by the students. Figure 4 shows how the mean number of right answers in the formative assessment evolves with the number of quizzes done per chapter. The figure clearly shows that the score obtained by students in the formative quizzes increases until a certain number of quizzes is reached, in this case 11. After that threshold, the benefit of doing more quizzes is small. The mean score of the summative quizzes shows a more continuous growth, without a saturation threshold. This is somewhat expected because, after some formative quizzes, the answers to the questions are known. The summative quizzes do not show this effect; instead, they reflect the outcome of the effort and study time of each student.

Figure 4.

Evolution of the mean score in the formative (top) and summative quizzes (bottom) with the number of formative quizzes done.

The evolution of the mean score with the number of formative quizzes done is very similar for the two summative quizzes, with a continuous increase that boosts the mean score from 5.97 to 9.20 (out of 10) for the first summative assessment and from 5.44 to 8.62 for the second summative assessment. This amounts to increases of 54% and 58%, respectively. Nevertheless, a slight decrease in the score seems to take place for students who did more than 200 tests (about 18 quizzes per chapter). There is no simple explanation, but it could be that these students do not know when to stop and dedicate time to other learning activities once they have done enough quizzes.
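The two percentages can be reproduced from the mean scores given above; the helper name is chosen here for illustration.

```python
def percent_increase(low: float, high: float) -> float:
    """Relative increase from low to high, expressed as a percentage."""
    return (high - low) / low * 100


first = percent_increase(5.97, 9.20)   # first summative quiz
second = percent_increase(5.44, 8.62)  # second summative quiz
print(f"{first:.1f}% and {second:.1f}%")
```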

The mean score for the formative assessment based on problem-solving was 2.57 out of 10, although it must be taken into account that the score for each problem is either 10 or 0, as only the final result is evaluated by comparing it with the correct value. Most of the students were unable to solve the problems correctly, but they did not ask for advice or a tutoring session despite being encouraged to do so many times during the course, and despite having little chance of finding out where they had failed without the detailed solutions to the problems. This fact indicates a serious flaw in the behavior of the students.

The mean score for the summative numerical problems was 1.69. This score is usually the lowest of the different parts of the summative assessment (in the preceding course it was 4.30), but 1.69 is extremely low. In fact, only two students passed that exam, while in the previous year 35.5% passed that part of the assessment (not counting the second-chance exam).

More concerning still is that, if the problem-solving exam had not been held at the end of the course, the final grades would have shown an improvement compared to previous years and would have led to the conclusion that the changes implemented in the course had been highly successful in improving learning. This would have been an unfortunate mistake, as learning levels had actually decreased because part of the educational goals was far from being attained.

Regarding the influence of gender, no significant differences were found, as Table 1 shows. The differences are very small and could be attributed to the quantity of formative quizzes and problems done by each gender.

Table 1.

Gender differences in the summative mean scores obtained by female and male students.

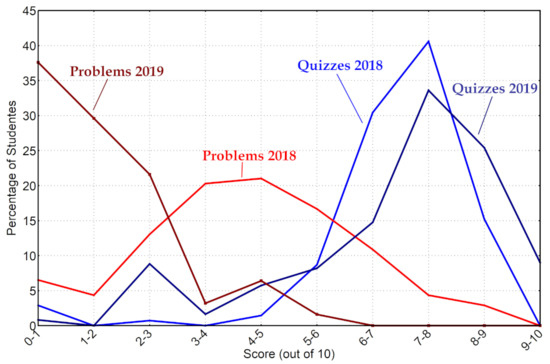

The change in the scores associated with the new assessment is not due to a subset of the students, but to a general change in student self-regulation that had to be studied and that was not due to procrastination. This was the first year the students had online quizzes and problems at their disposal to use as formative assessment, so an increase in scores was expected (as usual, written questions in the textbook were also available). This happened in the quizzes, but not in the numerical problems. Figure 5 shows this effect in a comparison with the scores of the prior course.

Figure 5.

Percentage of students that obtained a certain score in the summative quizzes and the numerical problems exam in the year of study and the previous one.

The teachers consider that the problems were neither different from nor more difficult than those of previous years.

The cause of the low scores in problems lies in two facts:

- The low interest the students showed in doing this kind of numerical problem during the course. That is an issue, but one that did not affect the scores in the summative quizzes.

- The formative goals related to the practical application of theory in problem-solving were deemed as secondary and not worthy of attention by the students.

This second fact is the most important one. The source of the students’ preference can be found in the timetable for the summative assessment and in the percentage of the final grade associated with each assessment activity. The problem-solving exam was held after the two summative quizzes had been done and graded. This means the students already knew their scores for 60% of the final grade (quizzes were automatically scored as soon as they were finished, and the scores for the lab reports and post-lab questions, which are usually high, were also known). As the scores for the two already graded parts were high enough to assure a final grade over 5 and there was no minimum passing score for the different parts of the assessment, most of the students knew they had passed the course before the problem-solving exam. Knowing this, and with other exams to think about, they decided to devote very little time to preparing for the last exam of the summative assessment. There was an obvious increase in online activity before this last exam, but it was proportionally much lower than the activity associated with quizzes and, it must be assumed, was undertaken with far less interest.
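The calculation a student could make before the last exam can be sketched with the weights of the new grading (35% quizzes, 25% lab work, 40% problem-solving exam) and the pass mark of 5. The function names are hypothetical, and the lab scores in the example are invented, since the text only says that they are usually high; 7.33 is the mean summative quiz score reported above.

```python
WEIGHTS = {"quizzes": 0.35, "labs": 0.25, "problems": 0.40}
PASS_MARK = 5.0


def points_secured(quiz_score: float, lab_score: float) -> float:
    """Final-grade points already earned before the problem-solving exam."""
    return WEIGHTS["quizzes"] * quiz_score + WEIGHTS["labs"] * lab_score


def exam_score_needed(quiz_score: float, lab_score: float) -> float:
    """Minimum problem-solving exam score (out of 10) still needed to pass."""
    missing = PASS_MARK - points_secured(quiz_score, lab_score)
    return max(0.0, missing / WEIGHTS["problems"])


# Hypothetical students; the lab scores are assumptions, not data from the study.
print(exam_score_needed(9.0, 9.5))   # already above the pass mark
print(exam_score_needed(7.33, 9.0))  # mean quiz score with an assumed lab score
```

With no minimum score for the individual parts, even a student who has not yet secured the pass mark may need only a very small score in the exam that carries 40% of the grade.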

Therefore, the mistakes in the design of the formative and summative assessment can be summarized as follows:

- No mechanism was implemented to avoid procrastination and assure the correct and frequent use of the formative assessment.

- The description of the correct calculation procedure for the problems was not given to the students.

- Problem-solving abilities were evaluated only one time at the end of the course, after the formative quiz tests.

- The students knew if they had passed the course before the problem-solving exam.

- There was no minimal passing grade for the problem-solving exam.

The study has one main limitation, namely that the data analyzed correspond to only one year. Nevertheless, the differences in the scores obtained with respect to previous years are too large to be attributed to pure chance.

According to the scores, procrastination was not the problem it could have been; nonetheless, it is a behavior that should be avoided. The same cannot be said about the design of the summative assessment, which certainly should be changed. Some proposals that should work are:

- The formative online quizzes and problems should remain available to the students, but their availability could be limited to 2 weeks after the corresponding theory has been studied in class. Students will have to do them in those 2 weeks or lose the opportunity. This should considerably reduce procrastination. Alternatively, the quizzes and problems could simply be made mandatory.

- Explanations about the correct answer should be given to the students for both quizzes and problems.

- The summative assessment could be changed to two written exams during the course (one mid-course and another one at the end of the course), both including quizzes and problems. This should also reduce procrastination and will ensure that no goals are forgotten, while reducing the continuous stress associated with the weekly summative assessment.

- All parts of the summative assessment should have a minimum score for the students to pass the course, although right now that is not possible.
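The first proposal in the list above, a 2-week availability window for each formative quiz, can be expressed as a simple rule. The sketch below is only an illustration of that rule: the function name and the dates are hypothetical, and no particular learning management system is assumed.

```python
from datetime import date, timedelta

AVAILABILITY_WINDOW = timedelta(weeks=2)  # window length taken from the proposal


def is_quiz_open(lesson_date: date, today: date, window: timedelta = AVAILABILITY_WINDOW) -> bool:
    """True while the quiz of a chapter can still be taken."""
    # The quiz opens on the day the theory is taught and closes when the window ends.
    return lesson_date <= today <= lesson_date + window


lesson = date(2021, 3, 1)  # hypothetical date on which the chapter was taught
print(is_quiz_open(lesson, date(2021, 3, 10)))  # inside the window
print(is_quiz_open(lesson, date(2021, 3, 20)))  # window already closed
```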

Evidently, many other assessment procedures could be used in the course, provided all the detected mistakes are corrected. These mistakes serve as a warning to teachers who are going to change their assessments.

Author Contributions

Conceptualization, Á.V.E.; software, F.S.V.; validation, Á.V.E. and F.S.L.; formal analysis, F.S.V.; investigation, Á.V.E. and F.S.V.; resources, M.Á.P.P.; data curation, F.S.V.; writing—original draft preparation; writing—review and editing, M.Á.P.P.; visualization, M.Á.P.P.; supervision, M.Á.P.P. and F.S.L.; project administration, Á.V.E.; funding acquisition, none All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the study can be obtained from the authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dick, W. Summative evaluation. In Instructional Design: Principles and Applications; Universidad de Salamanca: Salamanca, Spain, 1977; pp. 337–348. [Google Scholar]

- Migueláñez, S.O. Evaluación formativa y sumativa de estudiantes universitarios: Aplicación de las tecnologías a la evaluación educativa. Teoría De La Educación. Educ. Y Cult. En La Soc. De La Inf. 2009, 10, 305–307. [Google Scholar]

- Martínez Rizo, F. Dificultades para implementar la evaluación formativa: Revisión de literatura. Perfiles Educ. 2013, 35, 128–150. [Google Scholar] [CrossRef]

- Rolfe, I.; McPherson, J. Formative assessment: How am I doing? Lancet 1995, 345, 837–839. [Google Scholar] [CrossRef]

- Iahad, N.; Dafoulas, G.; Kalaitzakis, E.; Macaulay, L. Evaluation of online assessment: The role of feedback in learner-centered e-learning. In Proceedings of the 37th Annual Hawaii International Conference on System Sciences, Big Island, HI, USA, 5–8 January 2004. [Google Scholar] [CrossRef]

- Bell, P.; Volckmann, D. Knowledge Surveys in General Chemistry: Confidence, Overconfidence, and Performance. J. Chem. Educ. 2011, 88, 1469–1476. [Google Scholar] [CrossRef]

- Dobson, J.L. The use of formative online quizzes to enhance class preparation and scores on summative exams. Adv. Physiol. Educ. 2008, 32, 297–302. [Google Scholar] [CrossRef]

- Paris, S.G.; Paris, A.H. Classroom Applications of Research on Self-Regulated Learning. Educ. Psychol. 2001, 36, 89–101. [Google Scholar] [CrossRef]

- Zimmerman, B.J. Self-Regulated Learning and Academic Achievement: An Overview. Educ. Psychol. 1990, 25, 3–17. [Google Scholar] [CrossRef]

- Meece, J.L.; Blumenfeld, P.C.; Hoyle, R.H. Students’ goal orientations and cognitive engagement in classroom activities. J. Educ. Psychol. 1988, 80, 514. [Google Scholar] [CrossRef]

- McCoach, D.B.; Siegle, D. Factors That Differentiate Underachieving Gifted Students From High-Achieving Gifted Students. Gift. Child Q. 2003, 47, 144–154.

- Bardach, L.; Yanagida, T.; Schober, B.; Lüftenegger, M. Students’ and teachers’ perceptions of goal structures: Will they ever converge? Exploring changes in student-teacher agreement and reciprocal relations to self-concept and achievement. Contemp. Educ. Psychol. 2019, 59, 101799.

- Rettinger, D.A.; Kramer, Y. Situational and Personal Causes of Student Cheating. Res. High. Educ. 2008, 50, 293–313.

- Marcell, M. Effectiveness of Regular Online Quizzing in Increasing Class Participation and Preparation. Int. J. Scholarsh. Teach. Learn. 2008, 2.

- Cox, K.; Clark, D. The use of formative quizzes for deep learning. Comput. Educ. 1998, 30, 157–168.

- van Eerde, W. A meta-analytically derived nomological network of procrastination. Personal. Individ. Differ. 2003, 35, 1401–1418.

- Hayat, A.A.; Jahanian, M.; Bazrafcan, L.; Shokrpour, N. Prevalence of Academic Procrastination Among Medical Students and Its Relationship with Their Academic Achievement. Shiraz E-Med. J. 2020, 21.

- Hen, M.; Goroshit, M. Academic self-efficacy, emotional intelligence, GPA and academic procrastination in higher education. Eurasian J. Soc. Sci. 2014, 2, 1–10.

- Aznar-Díaz, I.; Romero-Rodríguez, J.M.; García-González, A.; Ramírez-Montoya, M.S. Mexican and Spanish university students’ Internet addiction and academic procrastination: Correlation and potential factors. PLoS ONE 2020, 15, e0233655.

- Dewitte, S.; Schouwenburg, H.C. Procrastination, temptations, and incentives: The struggle between the present and the future in procrastinators and the punctual. Eur. J. Personal. 2002, 16, 469–489.

- Ryan, A.M.; Pintrich, P.R.; Midgley, C. Avoiding Seeking Help in the Classroom: Who and Why? Educ. Psychol. Rev. 2001, 13, 93–114.

- Pellegrino, C. Does telling them to ask for help work?: Investigating library help-seeking behaviors in college undergraduates. Ref. User Serv. Q. 2012, 51, 272–277.

- Pennebaker, J.W.; Gosling, S.D.; Ferrell, J.D. Daily Online Testing in Large Classes: Boosting College Performance while Reducing Achievement Gaps. PLoS ONE 2013, 8, e79774.

- Cantor, A.D.; Eslick, A.N.; Marsh, E.J.; Bjork, R.A.; Bjork, E.L. Multiple-choice tests stabilize access to marginal knowledge. Mem. Cogn. 2014, 43, 193–205.

- Roediger, H.L.; Agarwal, P.K.; McDaniel, M.A.; McDermott, K.B. Test-enhanced learning in the classroom: Long-term improvements from quizzing. J. Exp. Psychol. Appl. 2011, 17, 382–395.

- McDermott, K.B.; Agarwal, P.K.; D’Antonio, L.; Roediger, H.L.; McDaniel, M.A. Both multiple-choice and short-answer quizzes enhance later exam performance in middle and high school classes. J. Exp. Psychol. Appl. 2014, 20, 3–21.

- Anakwe, B. Comparison of Student Performance in Paper–Based Versus Computer–Based Testing. J. Educ. Bus. 2008, 84, 13–17.

- Johnson, G. Optional online quizzes: College student use and relationship to achievement. Can. J. Learn. Technol. 2006, 32.

- Peat, M.; Franklin, S. Has student learning been improved by the use of online and offline formative assessment opportunities? Australas. J. Educ. Technol. 2003, 19.

- Merello-Giménez, P.; Zorio-Grima, A. Impact of students’ performance in the continuous assessment methodology through Moodle on the final exam. In EDUCADE: Revista de Educación en Contabilidad, Finanzas y Administración de Empresas; 2017; pp. 57–68.

- Yin, Y.; Shavelson, R.J.; Ayala, C.C.; Ruiz-Primo, M.A.; Brandon, P.R.; Furtak, E.M.; Tomita, M.K.; Young, D.B. On the Impact of Formative Assessment on Student Motivation, Achievement, and Conceptual Change. Appl. Meas. Educ. 2008, 21, 335–359.

- Soe, M.K.; Baharudin, M.S.F. Association of academic stress & performance in continuous assessment among pharmacy students in body system course. IIUM Med. J. Malays. 2016, 15.

- Ghiatău, R.; Diac, G.; Curelaru, V. Interaction between summative and formative in higher education assessment: Students’ perception. Procedia Soc. Behav. Sci. 2011, 11, 220–224.

- Trotter, E. Student perceptions of continuous summative assessment. Assess. Eval. High. Educ. 2006, 31, 505–521.

- Brothen, T. Time Limits on Tests. Teach. Psychol. 2012, 39, 288–292.

- Portolese, L.; Krause, J.; Bonner, J. Timed Online Tests: Do Students Perform Better With More Time? Am. J. Distance Educ. 2016, 30, 264–271.

- Litzinger, T.A.; Meter, P.V.; Firetto, C.M.; Passmore, L.J.; Masters, C.B.; Turns, S.R.; Gray, G.L.; Costanzo, F.; Zappe, S.E. A Cognitive Study of Problem Solving in Statics. J. Eng. Educ. 2010, 99, 337–353.

- Zacks, S.; Hen, M. Academic interventions for academic procrastination: A review of the literature. J. Prev. Interv. Community 2018, 46, 117–130.

- Özer, B.U.; Demir, A.; Ferrari, J.R. Exploring Academic Procrastination Among Turkish Students: Possible Gender Differences in Prevalence and Reasons. J. Soc. Psychol. 2009, 149, 241–257.

- Steel, P. The nature of procrastination: A meta-analytic and theoretical review of quintessential self-regulatory failure. Psychol. Bull. 2007, 133, 65–94.

- Ellis, A.; Knaus, W. Overcoming Procrastination; Institute for Rational Living: New York, NY, USA, 1977.

- Klingsieck, K.B. Procrastination. Eur. Psychol. 2013, 18, 24–34.

- Sirois, F.M. Procrastination and Stress: Exploring the Role of Self-compassion. Self Identity 2013, 13, 128–145.

- Goda, Y.; Yamada, M.; Kato, H.; Matsuda, T.; Saito, Y.; Miyagawa, H. Procrastination and other learning behavioral types in e-learning and their relationship with learning outcomes. Learn. Individ. Differ. 2015, 37, 72–80.

- Bisin, A.; Hyndman, K. Present-bias, procrastination and deadlines in a field experiment. Games Econ. Behav. 2020, 119, 339–357.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).