Abstract

Conventional methods of teaching structural engineering topics focus on face-to-face delivery of course materials. This study shows that using video-based e-learning to deliver an undergraduate Structural Steel Design course satisfactorily achieved most of the course learning outcomes. Video-based e-learning with animations and simulations gives students a deeper understanding of the course’s intricate design material. To gauge the effectiveness of video-based e-learning in the course, an online evaluation was completed by sixty-eight undergraduate students at the United Arab Emirates University using Blackboard. The evaluation consisted of an online survey accessible to students who took the Structural Steel Design course in the academic year 2019 using instructional videos provided to them as Quick Response (QR) codes. The course has six course learning outcomes (CLOs), and the performance of students in the six CLOs was compared with that of students who took the same course in the academic year 2018 through traditional face-to-face lecturing. The survey data were statistically analyzed, and the results revealed that students’ performance improved and that most of the CLOs were attained. Video-based e-learning with animations resulted in better learning outcomes than face-to-face lecturing. The ability to access the course instruction videos anytime and anywhere is one of the notable benefits for students studying through the e-learning approach.

1. Introduction

Teaching methods and practices are currently being transformed by technological advancements. The Internet plays a pivotal role in giving users access to information that was previously out of reach. As a result, emerging technologies and innovations are having a major influence on traditional in-class course delivery. The challenge, however, is to optimize the application of technology so that education becomes more efficient. Structural Engineering is one discipline within Civil Engineering education, and its fundamental core consists of courses such as Structural Steel Design, which is compulsory for Civil Engineering students. The Structural Steel Design course is a core course offered in all undergraduate civil engineering programs, and it may also be offered in most architectural engineering programs. The course draws on structural analysis, mechanics of materials, materials science, and mathematics to calculate member internal forces, deformations, stresses, and strains under external loads and, in turn, to design the members accordingly. Most students have difficulty visualizing the deformed shape of even simple structures, an essential skill for understanding the behavior of structural steel members beyond theoretical formulae and procedures. This weakness can be largely attributed to traditional instructional practices that place much effort on the analysis of separate members and less emphasis on grasping the behavior of the entire 3D structure.

2. Literature Review

Previous research efforts have concluded that face-to-face lecturing does not appear to be the best instruction method because it does not inspire students nor motivate them to build on present knowledge [1,2]. When students join civil engineering programs, they would want to acquire skills and knowledge to design buildings, towers, bridges, roadways, plants, etc. [3]. In general, to enhance students’ knowledge and learning in structural engineering, including structural steel design, numerous methodologies have been suggested to cover the combination of physical instruction laboratories and virtual instruction gadgets [4,5,6,7,8]. Davalos et al. [5] also established hands-on laboratory examples to have the students better understand the essential structural performance concepts. A web-based application was, moreover, generated by Yuan and Teng [8] for structural behavior theories using a computer-aided learning technique. A 2D computer graphics application was developed by Pena and Chen [9] to be utilized as an instruction lab component and stand-alone instructional module.

The benefits of e-learning include communication, interaction between educators and students and among students, unified and updated information, management of the learning environment, and flexibility, as reported by Homan and Macpherson [10] and Mohammadi et al. [11]. Figures and charts are frequently used as instructional aids to clarify structural engineering concepts and behavior. Students lose interest in the lecture hall when many static figures and pictures are presented repetitively to demonstrate structural steel design concepts. Many steel design concepts are hard to clarify using figure-aided lectures alone [12]. Integrating e-learning into the pedagogical process has a positive impact on learning at all levels [13], for both undergraduate and postgraduate studies [14,15]. In addition, introducing instructional videos into the pedagogical process enhances the educational platform and contributes to the quality of learning [16,17].

The video-based e-learning materials include instructional videos and the course subjects to be learned. With videos, students are able to pause, repeat, and skip content. Video-based education is not a new learning method, but uploading educational videos to the Internet is considered a significant step in this new practice of teaching. Generally, though, the available online instructional videos do not include any interactive features. They are typically one-way educational videos, and where interactivity is available, it is primarily limited to in-video quizzes. Interactive instructional videos should be developed online and augmented with activities that challenge students’ knowledge. Any interactive video-based teaching practice that is established should be evaluated to confirm its educational effectiveness. Researchers have acknowledged video-based e-learning as an influential educational resource in online instruction. It can improve and partly replace conventional classroom-based, face-to-face teaching methods [18]. Videos leave a strong impression on the brain that supports students’ self-study. Watching a video could even be better than reading the same theory from a book [18], since it displays material and facts that are difficult to explain through text or static images [19].

Videos can interest students and improve their understanding of course topics, leading to better learning outcomes [20,21]. In addition, video-based e-learning can accommodate different learning styles [22]. Tuong et al. [23] reviewed 28 video-based learning studies to evaluate the usefulness of instructional videos in modifying health behaviors. Greenberg and Zanetis [24] highlighted the positive effect of video recording and streaming in teaching. As a conclusion of their study, the authors recommended that instructors and educators use collaborative video materials in classes. The growing popularity of tablets, smartphones, and game-based learning has caused a swift shift toward mobile-based learning platforms. The mobility and wireless interfaces that smart devices offer will expedite the transformation to blended and context-sensitive mobile learning activities [25]. Smartphones have had significant implications for the design of socio-technical supports for mobile learning outdoors [26]. In addition, mobile and social media applications have been increasingly used in education due to their educational affordances [27].

A study by Ketsman et al. [28] examined the effect on students’ performance of inserting quizzes at the end of problem-solving videos in an undergraduate physics course. The study included problem-solving videos with and without quizzes. It also explored students’ preferences regarding questions placed in instructional videos used for self-learning. Students watched problem-solving videos, took quizzes related to the watched videos, and completed pre- and post-surveys. The comparison of students’ performance with and without in-video quizzes did not show a statistically significant difference.

The qualitative analysis confirmed a strong student preference for problem-solving videos in lecture rooms, more in-class engagement, and an essential need for direct comments from the instructor. Reyna et al. [29] suggested a framework to cultivate digital media literacy and prepare students for digital media creation. The framework highlighted three interdependent domains: theoretical, practical, and audio-visual. Al-Alwani [30] proposed a survey tool as a simple instrument for evaluating and categorizing digital content in e-content systems. The evaluation and categorization were based on seven main criteria, each with several element queries, to measure the conduct of subjects in an e-learning environment. The assessment framework was established using reviews from an expert panel of 42 specialists in higher education. Although the application of social media in teaching is an active research topic, there seems to be no consensus on how social media can best benefit students in the classroom. A critical literature review on the application of social media in teaching was carried out by Stewart [31]. Qualitative works that explore the use of social media as a tool in education were addressed, findings of different studies on integrating social media in teaching were highlighted, and recommendations for future research were provided.

3. The Objective of Using Video-Based E-Learning

The literature survey shows that minimal research has addressed enhancing the pedagogical process of teaching structural design courses. In the present educational analysis, sixty-eight undergraduate students at the United Arab Emirates University were surveyed to investigate the usefulness and advantages of employing e-learning techniques in teaching the civil engineering subjects of an undergraduate course.

This study aims to assess the effectiveness of using video-based e-learning in teaching and, consequently, in attaining the envisioned course objectives and learning outcomes of undergraduate Structural Steel Design courses in engineering curricula. Video-based e-learning with animations and simulations gives students a deeper understanding of the intricate design material of the course. To gauge the effectiveness of video-based e-learning in the Structural Steel Design course, an online evaluation was completed by sixty-eight undergraduate students at the United Arab Emirates University using the Blackboard web-based learning platform.

To learn about the students’ opinions of using instructional videos in the Structural Steel Design course, a questionnaire was developed and distributed to the students, who responded to questions related to the use of videos as an e-learning tool. The targeted students were those who took the course in 2019 using the instructional videos posted on Blackboard. The questions were distributed to all students two weeks prior to the final exam to make sure that all students participated in the survey and that they would feel at ease when answering it. The feedback received from students was analyzed, and the results are presented. The performance of the sixty-eight 2019 students in the six course learning outcomes was compared with that of the sixty-four 2018 students who took the same course a year earlier through conventional face-to-face lecturing.

4. Digitization of Teaching and Learning

The digital era has reshaped the nature of resources and information, in addition to transforming several essential social and economic enterprises [32]. Online education has been on the rise in academia, and registration rates in online courses have increased significantly in recent years. However, one of the determining factors of online course quality is student engagement in the course activities during the semester. Therefore, students’ participation needs to be assessed efficiently in order to evaluate their overall achievement and attainment of the course learning outcomes. There is considerable potential for using digital resources in different ways for instruction and learning, as more technologies, such as videos, websites, interactive tools, and online activities, have become available and easily accessible to instructors for developing and improving the pedagogical process.

One practical tool that has recently been used to facilitate the deployment of e-learning and to promote the use of internet resources is the QR (Quick Response) code, which is widely used for marketing and commercial purposes. QR codes encode information, data, and hyperlinks to specific material that can be scanned, as shown in Figure 1. The widespread acceptance of e-learning innovation depends on a shared vision, clear guidance, an encouraging culture, and high-quality support. A combination of these pillars offers a setting in which educators are able to innovate. E-learning innovations need to be woven into the organizational structure and centrally coordinated. Accordingly, institutional and senior management support is recognized as a critical component of successful engagement. Successful application of e-learning depends on an institutional strategy that proposes a shared vision and addresses the needs and concerns of the faculty responsible for implementation. Equally important are student expectations, as they are critical to the implementation of e-learning. In addition, student awareness of the effectiveness and value of e-learning and online courses should be increased through seminars and workshops tailored to improve students’ perceptions of the usefulness of online education.

Figure 1.

QR code representing the course code CIVL417.

Using QR codes related to different topics of the course was an innovative means in teaching [33]. Son [34] studied the importance of integrating the QR code in the classroom to examine its effect on the students’ understanding and engagement. Moreover, it has been used and implemented in teaching engineering subjects to improve the learning process [35]. The teaching experience using QR was reported by Dorado [36], whereas Tulemissova et al. [37] explained that the QR code could offer exceptional advantages. Maia-Lima et al. [38] combined smartphones and QR codes in problem-solving courses. Teachers should try to figure out practical solutions to leverage mobile tools in the classroom [39], where mobile education allows using free internet resources.

5. Course Objectives and Learning Outcomes

Clear course learning outcomes and course objectives facilitate teaching by steering the instructor towards the course-related materials to be delivered and by providing students with an outline of the learning activities, pertinent concepts, and skills they should attain. Crowe et al. [40] investigated the effect of in-class developmental peer assessment on students’ performance using an experimental design in a technical research methods course. The experimental design is often referred to as a quasi-experimental design. One hundred and seventy students in four sections of the course were included in the assessment. Two sections implemented in-class developmental peer assessment while the other two sections did not. It was found that in-class developmental peer assessment neither expanded the students’ knowledge nor improved their comprehension. Moreover, in-class developmental peer assessment took time away from in-class course delivery. It was concluded that developmental peer assessment is better suited to an out-of-class activity so that it does not interfere with in-class course delivery.

Information assimilation is a vital feature of learning. In medical teaching, there is a focus on the incorporation of fundamental medical science with clinical training to deliver a developed command of knowledge for upcoming doctors. Of equal significance in medical learning is the upgrade and growth of necessary collaboration and social skills. Slieman and Camarata [41] proposed a strategy of incorporating two-hour instructional active learning per week for first-year preclinical students to nurture learning assimilation and to stimulate practiced progress. The approach was a case-based program containing three phases focusing on knowledge activities: theory mapping, evaluation of student’s work, and group assessment. Rubrics covered group’s produced theory maps, defining tangible student’s work, and proper assessment of group productivity. Study of rubric evaluations and students’ work assessment established that there was substantial statistical attainment in student’s critical thinking and collaboration among different teams and groups.

An examination of the theory-mapping marks showed substantial improvement, indicating that the students were increasingly assimilating fundamental science and medical theories. Premalatha [39] offered plans for developing and identifying course and program outcomes and mapping them to one another for learning outcome-oriented curricula. The plans highlighted several considerations in such curricula that led to setting multiple attainment levels for program outcomes based on the identified course outcomes.

Following the formulation of overall educational objectives and outcomes for an engineering program, a set of course objectives and outcomes is identified for each of the program’s courses. The purpose of identifying course objectives and outcomes is to achieve effective teaching, which facilitates students’ attainment of the course objectives and learning outcomes. Thus, the assessment of effective teaching centers mainly on the students’ knowledge in relation to the predefined course learning outcomes and goals. The course objectives and outcomes are intended to meet the general program objectives and outcomes. Course objectives are broad statements that specify the overall expectations of a program. They are statements of non-observable attributes, such as skills, knowledge, understanding, and learning, that students who complete a course are expected to attain. For example, understanding is not directly observable; the student must do something measurable to demonstrate it. Course outcomes, in contrast, should be measurable so that they can be evaluated and improved. A course outcome is a statement of an observable or definite student achievement that serves as evidence of the skills and knowledge attained in a course [39,42]. The statement must contain an observable action verb (as in Bloom’s taxonomy of action verbs), e.g., explain, compute, formulate, or design, to qualify as a learning objective. Each expected course outcome should match one or more program outcomes. The Structural Steel Design course objectives and outcomes can be summarized as:

Course Objectives:

- To present different design methods for steel components.

- To comprehend the design procedure using AISC/LRFD specifications.

- To analyze and design direct shear connections.

- To identify the different types of connections.

- To learn the design method for steel connections of axially-loaded steel members.

Course Outcomes:

- Calculate the loads on typical steel trusses and floor systems.

- Analyze tension members for different modes of failure.

- Determine the strength of steel compressive members.

- Compute the strength of steel flexural members.

- Design steel connections with tensile members.

- Use computer programs for the analysis of steel members.

6. The Students’ Questionnaire

The primary instructor of the course investigated the significant factors that would motivate students to study online resources as a learning aid for the ‘Structural Steel Design’ course, in order to determine how the nominated videos used in the study could help the students understand the course subjects more effectively. The ubiquity of mobile devices, together with their potential to connect classroom learning to the real world, has added a new angle to contextualizing academic learning [43,44]. Survey questions were designed to address these factors for the intended analysis. Students were informed that their responses to the survey questions were vital to improving the quality of teaching. The surveys were filled in by students, and the analyzed results were helpful in investigating how useful the provided videos were to them. Students’ feedback was analyzed to learn their opinions regarding online learning and the associated instructional videos and how their academic performance in the course was affected. The participating students were asked to rate each question on a Likert scale from 1 to 5, where ‘1’ indicates ‘very low’ agreement with the argument and ‘5’ represents ‘very high’ agreement, as depicted in Table 1. Background on the Likert scale rating system and how it was employed in the questionnaire survey of this study is summarized in the following paragraph.

Table 1.

Questionnaire deployed to the students.

The questionnaire was designed to assess students’ opinions regarding the effectiveness of using videos as a learning tool for one of the undergraduate courses and to ensure that the questions in the survey represent the domain of opinions relevant to the objective of this study [45]. The items of the questionnaire were then reviewed for clarity, readability, and completeness in order to reach an agreement as to which questions should be included in the survey and which should be excluded. In the survey, students were asked to indicate whether a question is ‘favorable’ (by giving a score of 1 or more) or ‘unfavorable’ (by assigning a score of 0). Different rating systems have been developed over the years, among which the Likert scale has been the most widely used [46]. The Likert scale rating system was developed by the social scientist Rensis Likert in 1932 to measure the opinions or perceptions of respondents towards a given number of questions or items. An opinion varies on a scale from ‘negative’ to ‘positive’ [47]. Conventionally, a five-point Likert scale is used, where the response categories are mutually exclusive and cover the full range of opinions. Some surveys add a ‘don’t know’ option to distinguish respondents who are not informed enough to give an opinion from those who are neutral. A wider rating scale could offer more options to respondents; however, it is not recommended since respondents normally tend not to select extreme responses. Conversely, scales with just a few categories may not afford sufficient discrimination. A rating scale with an even number of points (e.g., a four-point or six-point scale) forces respondents to come down broadly ‘for’ or ‘against’ an argument [48]. In this study, a relative importance index was then calculated for each question in the survey to indicate the importance of that question (argument) relative to the other questions (arguments) in the survey.

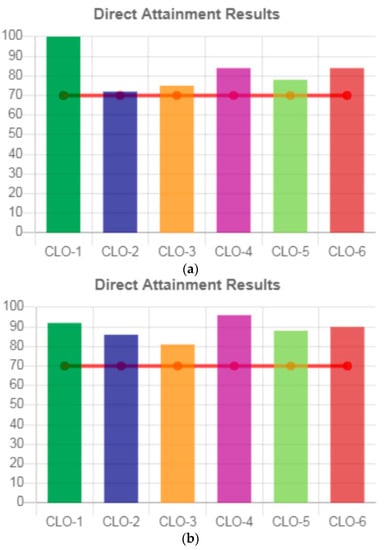

To evaluate the course learning outcomes (CLOs), the survey included twenty questions related to the students’ attainment of the course objectives. In addition, appropriate tools such as assignments, quizzes, exams, and projects were used to assess the CLO attainment level, for which the target was set to 70%. Figure 2a shows that the attainment level of three out of six CLOs (CLO-1, CLO-4, and CLO-6) of the Structural Steel Design course was above 80% for the 2018 traditional face-to-face teaching, whereas for the 2019 adoption of videos in blended e-learning the attainment level of all six CLOs was above 80%. The figure indicates that adopting videos in blended e-learning for the Structural Steel Design course produced a moderate improvement in the students’ performance in the course.

Figure 2.

CLO attainment levels: (a) for 2018; (b) for 2019.
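The comparison of attainment levels against the 70% target can be sketched in a few lines of Python. The text does not state how the attainment level of a CLO is computed from the assignments, quizzes, exams, and projects, so this sketch assumes it is the mean percentage score over the assessment items mapped to that CLO. The function names and the sample scores are hypothetical and are not data from the study.

```python
from statistics import mean

TARGET = 70.0  # attainment target in percent, as stated in the text


def clo_attainment(scores_by_clo):
    """Return the attainment level of each CLO.

    Assumption: the level is the mean percentage score over all
    assessment items mapped to that CLO.
    """
    return {clo: mean(scores) for clo, scores in scores_by_clo.items()}


def attained(levels, target=TARGET):
    """Flag each CLO whose attainment level meets or exceeds the target."""
    return {clo: level >= target for clo, level in levels.items()}


# Made-up percentage scores for illustration only (not study data).
example_scores = {
    "CLO-1": [85.0, 90.0, 80.0],
    "CLO-2": [60.0, 70.0, 65.0],
}

levels = clo_attainment(example_scores)
flags = attained(levels)

for clo in levels:
    status = "attained" if flags[clo] else "not attained"
    print(f"{clo}: {levels[clo]:.1f}% ({status})")
```

Running the same two functions on the scores of each cohort would give the per-CLO levels that a chart such as Figure 2 compares.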

The questionnaire survey was administered two weeks before the beginning of the final exams. The questionnaire was completed by all of the students enrolled in the course. The student responses were aggregated at the course level, and the average student scores were interpreted as the collective perception of attainment in accordance with the course learning outcomes. The student’s questionnaire is regarded as a practical method of assessing the effectiveness of teaching a specific course.

7. The Evaluation Process

A direct outcome of combining education and technology is e-learning. E-learning has become a major platform for education, relying mainly on Internet technologies. The importance of e-learning in education has led to considerable growth in the number of e-learning courses offered online. As a result, the evaluation of online e-learning courses has become essential to ensure their effectiveness. To evaluate the quality of student learning in this study, direct and indirect tools were employed. The direct tools comprised homework assignments, quizzes, and exams, whereas the indirect tools included a qualitative online survey that provided students’ feedback on the course. To make the evaluation of the effect of video-based learning on the students’ performance a continuous improvement process, students used the survey to assess the course contents and materials in terms of how they felt the video-based learning approach had improved the abilities, comprehension, and skills that the course is expected to provide. Responses from the students’ survey were intended to make the instructor of the course aware of students’ recommendations and feedback on the delivery approach and of how to improve it to enhance the course learning outcomes and students’ performance. Therefore, the primary objective of considering the students’ comments and feedback is to identify any recommended improvement or modification to the teaching and delivery style. To maximize the prospect of attaining the course outcomes, each item in the online students’ survey was formulated to correspond to one or more course outcomes.

The Structural Steel Design course is a core course in the Civil and Environmental Engineering Department at the UAEU. All students in the course participated in the online survey to comment on the course learning outcomes and its video-based learning approach. The survey was prepared by the course coordinator and was made available online for the students to fill out in compliance with the university’s ethical research Policies and Procedures. An anonymous scheme was established to protect the confidentiality of the students’ identities using the course management software, Blackboard. The students were informed of the survey early in the semester. Several topics related to the covered course materials were carefully chosen. At the same time, a thorough search was carried out to find the online videos most relevant to the course materials. The chosen topics are itemized in Table 2, where each item is related to specific online videos. In general, students used smartphones, laptops, or iPads to access a variety of course topics and materials. In addition, allocating supplementary QR codes to the different course materials was an effective means of making more online materials available.

Table 2.

CIVL 417 course different subjects and relevant YouTube videos.

It was decided to embed the video hyperlinks in QR codes to give students easy access to the suggested learning topics using cell phones with a camera and a QR code reader to read the code information (i.e., the hyperlink embedded in the code). Free online resources were used to generate the QR code images, as illustrated in Figure 3 and Figure 4.

Figure 3.

(a): QR code to access the eBook; (b): Cover page of the flipped eBook that includes the YouTube QR codes.

Figure 4.

Sample of pages included in the QR eBook that was distributed to the students.

The educational material was deployed and posted two times, before the midterm exam and before the final exam, through the university’s official e-Learning platform (i.e., Blackboard) and emails as well. The designed questionnaire was distributed to the students during the standard classes and was explained to them before they started responding to the survey.

As is typical, the purpose of the online survey was to collect and review the opinions, recommendations, and feedback of the students on the nominated videos that were relevant to the course material. The survey was also used to assess whether or not the assigned videos were helpful to the learning delivery method and to students’ comprehension. Furthermore, the survey was designed to examine the students’ preference for recorded lectures with or without the instructor appearing in the videos, as well as their inclination towards nontraditional teaching techniques. The nominated videos of this course were carefully chosen to match the relevant Structural Steel Design course learning outcomes.

8. Results and Discussion

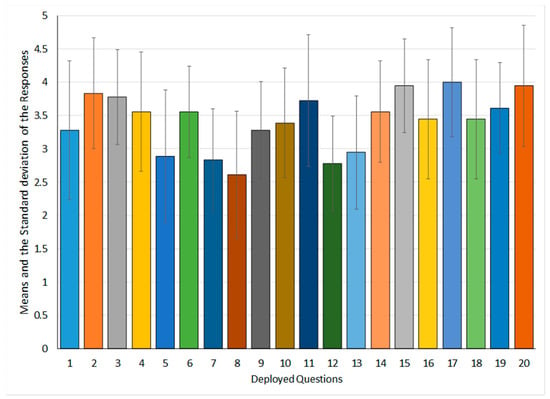

In this section, the students’ feedback collected through the deployed questionnaire was analyzed to highlight the impact of using course-relevant video-based e-learning on the students’ comprehension and assess the learning process. In the first stage, the results were plotted in Figure 5, in terms of the means and the standard deviation of the students’ responses to the different questions in the survey.

Figure 5.

Means and the standard deviation of the deployed questionnaire.
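The per-question summary plotted in Figure 5 can be reproduced with a short Python sketch. The text does not say whether the sample or the population standard deviation was used; the sketch assumes the sample form. The function name and the responses shown are hypothetical and are not the survey data.

```python
from statistics import mean, stdev

VALID_SCORES = {1, 2, 3, 4, 5}  # five-point Likert scale used in the survey


def summarize(responses):
    """Return {question: (mean, sample standard deviation)}.

    `responses` maps a question number to the list of Likert scores
    given by the students for that question.
    """
    summary = {}
    for question, scores in responses.items():
        if any(score not in VALID_SCORES for score in scores):
            raise ValueError(f"question {question}: score outside 1..5")
        summary[question] = (mean(scores), stdev(scores))
    return summary


# Made-up responses for illustration only (not the survey data).
example_responses = {
    1: [4, 5, 3, 4, 4],
    5: [2, 3, 2, 1, 2],
}

summary = summarize(example_responses)

# Questions whose average response is not above the scale midpoint of 3.
not_above_midpoint = [q for q, (avg, _) in summary.items() if avg <= 3]

for question, (avg, sd) in summary.items():
    print(f"Q{question}: mean = {avg:.2f}, SD = {sd:.2f}")
```

Filtering on the midpoint, as in the last step, is one simple way to single out the questions that call for separate discussion.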

In general, the average responses to all deployed questions were over 3 on a scale of 1 to 5, except for questions 5, 7, 8, 12, and 13. These questions are shown in Table 3 as follows:

Table 3.

Responses that have a scale more than 3.

Answers to question 5 indicated that the instructor should not appear in the videos. On the other hand, the lower response scores for questions 7, 8, and 13 indicated that the deployed material, which was carefully selected with significant effort, was convenient for the students. These responses also showed that technological distractions and complexity were minimal, which meant that the participating students did not lag behind technologically and that it was straightforward for them to use the technology for learning [49,50]. Moreover, for question 12, students considered that the videos were not commercial, did not contain distracting material, and were meant for educational purposes [51,52].

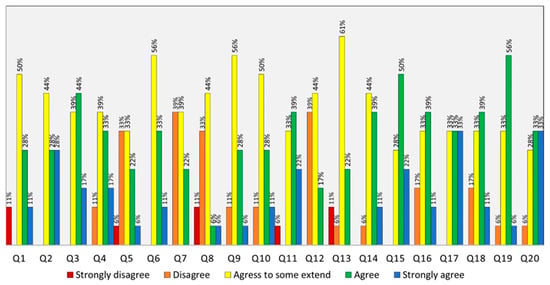

The study revealed that the students were in favor of supplementing the course materials with instructional videos. This was established by performing further statistical analysis of the students’ collected feedback to gauge the satisfaction of the students with using videos in e-learning techniques as shown in Figure 6.

Figure 6.

Statistical analysis of the deployed questionnaire.

Answers to query 1 showed that 11% strongly preferred instructional videos to be part of the delivery method of the course. On the other hand, the answers to question 2 demonstrated that 100% of the participant students considered watching educational videos to be a supportive tool of their study. The answers to question 3 showed that the content of the videos are matching the course subjects and that 89% of the students would favor seeing the course instructor in the videos. However, around 61% of the students do not recommend the presence of the instructor while recording the videos as per the answers to questions 4 and 5. For question 6, responses showed similar feedback to that of question 2, wherein 100% of students agreeing on the importance of the instructional videos as a teaching tool. This reflects students’ contentment of watching videos as a learning tool.

For question 7, 61% of the replies expressed the need to edit some of the videos. The low response to question 8, around 56%, highlighted some irrelevant course material in the videos. Identical responses to questions 9 and 10 (89%) revealed the need for adopting blended learning methods and nominating videos that are technically sound and helpful for learning. All the respondents (100%) preferred videos recorded by the course instructor and made available on the university website, as concluded from the responses to question 11. This could be attributed to several reasons, such as the quality and appropriateness of the deployed material and the opportunity to improve the content at any time.

Responses to question 12 showed that 61% reported poor quality of the recorded videos. In questions 13 and 14, around 83% and 94% of students, respectively, highlighted recording and audio problems associated with the developed videos. Answers to question 15 showed that all students (100%) considered that the videos provided a better understanding of the course concepts. All respondents to question 16 preferred to have videos directly related to topics in the lectures.

Answers to question 17 demonstrated that all students would like to have solved examples related to the lecture materials to enhance their course learning outcomes. Around 83% of respondents to question 18 believed that having similar surveys would help improve the pedagogical technique. This positive feedback was repeated in the responses to question 19, where around 94% of the students’ replies revealed that this study would improve the online teaching method. Finally, 94% of the students considered that other engineering courses should also take the initiative to use videos in teaching.

The second stage of this study was to assess the effect of using videos as a learning tool on the students’ performance in the ‘Structural Steel Design’ undergraduate course and whether or not the course learning outcomes were achieved. For that purpose, the Likert scale and relative importance index were used to find out whether or not an item was desirable, as described by Bolarinwa [46]. The Likert scale is an assessment scheme used to gauge the views of respondents on specific questions, with opinions varying on a scale from ‘negative’ to ‘positive’ [47]. A larger scale offers respondents more choices, while rating scales with fewer categories may not provide sufficient insight. After the respondents’ scores on each question are received, a relative importance index (RII) is computed to show the significance of the responses to a given question relative to the responses to the other questions in the survey.

The question scores were analyzed, and a relative importance index (RII) was generated for each question to examine the significance of its answers. The RII was calculated as follows, based on Peterson et al. (2011):

where:

RII_i: Relative Importance Index of each factor i

k: batch of respondents

n: number of respondents
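As a rough illustration, one commonly used form of the RII divides the sum of the ratings given to a question by the highest possible rating multiplied by the number of respondents. The sketch below follows that common form and is not necessarily the exact expression used in this study; the 5-point scale, the function name `relative_importance_index`, and the sample ratings are illustrative assumptions.

```python
def relative_importance_index(scores, max_score=5):
    """Common RII form: sum of ratings / (highest rating * number of respondents).

    Assumption: ratings are integers from 1 to max_score on a Likert-type scale.
    """
    if not scores:
        raise ValueError("at least one response is required")
    if any(s < 1 or s > max_score for s in scores):
        raise ValueError("ratings must lie between 1 and max_score")
    return sum(scores) / (max_score * len(scores))


# Hypothetical ratings from six respondents for a single survey question.
example_scores = [5, 4, 4, 3, 5, 2]
rii = relative_importance_index(example_scores)
print(f"RII = {rii:.1%}")
```

Under this form the index always lies between 1/max_score and 1, so values for different questions can be compared directly and expressed as percentages.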

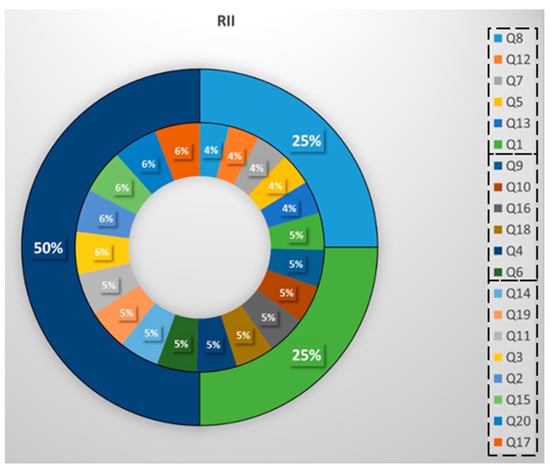

The RII method was used to rank the twenty questions of the survey in terms of their importance, as indicated by their RII values (Figure 7).

Figure 7. Relative Importance Index of the received responses.

The estimated RII values for the twenty questions were clustered into three ranking categories (not sure, recommended, and highly recommended). The ‘highly recommended’ category is for an RII of 71% or more, while the ‘recommended’ category has an RII of more than 59% and less than 71%. Any RII less than 59% falls in the ‘not sure’ category. The students’ feedback for questions 4, 6, 14, 19, 11, 3, 2, 15, 20, and 17 was in the ‘highly recommended’ class. Feedback for questions 1, 9, 10, 16, and 18 was in the ‘recommended’ category, while feedback for questions 8, 12, 7, 5, and 13 was in the ‘not sure’ group.
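The three-way grouping described above can be sketched as a simple threshold check. The cut-offs of 71% and 59% come from the text; the function name and the sample RII values are hypothetical. Because the text does not say where an RII of exactly 59% belongs, this sketch places it in the ‘not sure’ group as an assumption.

```python
def classify_rii(rii_percent):
    """Map an RII percentage to one of the three ranking categories."""
    if rii_percent >= 71:
        return "highly recommended"
    if rii_percent > 59:
        return "recommended"
    # Assumption: a value of exactly 59% is grouped with 'not sure'.
    return "not sure"


# Hypothetical RII values (in percent) for three illustrative questions.
sample_rii = {"Question A": 82.0, "Question B": 65.5, "Question C": 48.0}
categories = {name: classify_rii(value) for name, value in sample_rii.items()}

for name, category in categories.items():
    print(f"{name}: {category}")
```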

As depicted in Figure 7, 50% of the students believed that watching instructional videos in the Structural Steel Design course was an effective tool and would improve the course learning outcomes [53]. Using relevant videos as an instructional tool enhanced the students’ learning skills [54,55,56]. The figure also shows that 25% of the students agreed on the importance of blended learning if augmented with relevant, well-selected instructional videos. Likewise, Figure 7 demonstrates that 25% of the students thought that some of the watched videos covered irrelevant topics or were of low recording quality.

The results of this study show that the most critical constraints for student learning and satisfaction are the course learning outcomes and the related instructional videos, which present the most substantial connection. This observation is, to some extent, in agreement with the literature on the same matter. An efficient survey and assessment not only evaluate the students’ attainment of the course learning outcomes but also give students an incentive to engage in learning activities aimed at achieving those outcomes.

The initiative presented in this paper proved helpful during the COVID-19 pandemic, when the university requested that instructors teach all courses online because the usual face-to-face method of teaching was no longer available [57,58]. Accordingly, all course instructors had to switch to online teaching, and the majority of students were ready to adapt to online learning [59]. This shift reflected positively on academic self-perceptions and course satisfaction among university students and the associated factors during virtual classes [60]. It has been reported that technology management, increased student awareness of e-learning, and demanding a high level of information technology from instructors, students, and universities were the most influential factors for e-learning during the COVID-19 pandemic [61]. Moreover, e-learning is an effective method that can be used even for the training activities provided by universities [62]. It has been concluded that hybrid learning approaches are preferred, but support enabling the implementation of technology-based and pedagogy-informed teaching is necessary to make the process successful [63]. Nevertheless, misconceptions may arise when online media are used for some courses at universities that rely on Moodle-based e-learning as an online learning platform, and the effectiveness of remediating them has not been widely or sufficiently researched [64].

9. Conclusions

This study investigates the advantages of using instructional videos to augment and enhance lecture delivery. The instructional videos were collected, reviewed, filtered, or produced by the course instructor to have the same content as the classroom lecture materials and to match the course learning outcomes. To assess the impact of using educational videos on the students’ understanding of course materials, a sample of sixty-eight students, considered sufficient for a reliable evaluation of the video-based e-learning technique, took part in the assessment.

The benefits of using open source video-based e-learning in teaching the undergraduate Structural Steel Design course were assessed using student surveys and students’ performance in course activities such as assignments, quizzes, and exams. The survey responses and the students’ performance clearly showed that the selected instructional videos gave the students the freedom to watch these videos at any time and as many times as they needed to comprehend the various course contents. Video-based e-learning environments offer a remarkable instructive prospect in times when students cannot attend educational institutions in person. On the other hand, there could be substantial obstacles to education if poor quality e-learning is provided. It is essential that instructors carefully select and produce video-based online materials to make sure that students are equipped with the best possible online learning material. In the classroom, if the students find the lecture subject hard to follow, the instructor would sense this and try explaining it differently, offering real-time explanations and one-on-one support. This is more difficult to accomplish in online education. Moreover, it is important to keep the lecture material of the instructional videos from being too hard at the beginning and to deliver it in manageable video segments, so that the students are not overwhelmed too early in the learning process.

This study concluded that the performance of students, as measured by the different course assessment tools, improved compared with their performance when the course was taught without instructional videos. Other studies reported similar conclusions [65,66]. Furthermore, the responses of the majority of students indicated that instructional videos made the learning process of the course much more comfortable and improved their understanding of the different course topics.

Author Contributions

Conceptualization, B.E.-A., E.Z. and W.A.; methodology, B.E.-A., E.Z. and W.A.; software, W.A.; validation, B.E.-A., E.Z. and W.A.; formal analysis, B.E.-A., E.Z. and W.A.; investigation, B.E.-A., E.Z. and W.A.; resources, B.E.-A.; data curation, B.E.-A., E.Z. and W.A.; writing—original draft preparation, B.E.-A., E.Z. and W.A.; writing—review and editing, B.E.-A.; visualization, B.E.-A., E.Z. and W.A.; supervision, B.E.-A., E.Z. and W.A.; project administration, B.E.-A., E.Z. and W.A.; funding acquisition, B.E.-A. All authors have read and agreed to the published version of the manuscript.

Funding

The APC was funded by the United Arab Emirates University, grant number 31N371.

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

The data that support the findings of this study are available from the authors upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kirkwood, A. Teaching and learning with technology in higher education: Blended and distance education needs ‘joined-up thinking’ rather than technological determinism. Open Learn. J. Open Distance E-Learn. 2014, 29, 206–221.

- Salter, G. Comparing online and traditional teaching—A different approach. Campus-Wide Inf. Syst. 2003.

- Matusovich, H.M.; Streveler, R.A.; Miller, R.L. Why do students choose engineering? A qualitative, longitudinal investigation of students’ motivational values. J. Eng. Educ. 2010, 99, 289–303.

- Aparicio, A.C.; Ruiz-Teran, A.M. Tradition and innovation in teaching structural design in civil engineering. J. Prof. Issues Eng. Educ. Pract. 2007, 133, 340–349.

- Davalos, J.F.; Moran, C.J.; Kodkani, S.S. Neoclassical active learning approach for structural analysis. Age 2003, 8, 1.

- Kitipornchai, S.; Lam, H.; Reichl, T. A new approach to teaching and learning structural analysis. Struct. Granul. Solids Sci. Princ. Eng. Appl. 2008, 247.

- Michael, K. Virtual classroom: Reflections of online learning. Campus-Wide Inf. Syst. 2012.

- Yuan, X.-F.; Teng, J.-G. Interactive Web-based package for computer-aided learning of structural behavior. Comput. Appl. Eng. Educ. 2002, 10, 121–136.

- Pena, N.; Chen, A. Development of structural analysis virtual modules for iPad application. Comput. Appl. Eng. Educ. 2018, 26, 356–369.

- Homan, G.; Macpherson, A. E-learning in the corporate university. J. Eur. Ind. Train. 2005.

- Mohammadi, H.; Monadjemi, S.; Moallem, P.; Olounabadi, A.A. E-Learning System Development using an Open-Source Customization Approach 1. J. Comput. Sci. 2008.

- Iqbal, H.; Sheikh, A.K.; Samad, M.A. Introducing CAD/CAM and CNC machining by using a feature based methodology in a manufacturing lab course, a conceptual frame work. In Proceedings of the 2014 IEEE Global Engineering Education Conference (EDUCON), Istanbul, Turkey, 3–5 April 2014; pp. 811–818.

- Ahmed, W.K.; Zaneldin, E. E-Learning as a Stimulation Methodology to Undergraduate Engineering Students. Int. J. Emerg. Technol. Learn. 2013, 8.

- Al-Marzouqi, A.H.; Ahmed, W.K. Experimenting E-Learning for Postgraduate Courses. Int. J. Emerg. Technol. Learn. 2016, 11.

- Zaneldin, E.; Ahmed, W. Assessing the Impact of Using Educational Videos in Teaching Engineering Courses. In Proceedings of the ASME 2018 International Mechanical Engineering Congress and Exposition, Pittsburgh, PA, USA, 9–15 November 2018.

- Ahmed, W.K.; Marzouqi, A.H.A. Using blended learning for self-learning. Int. J. Technol. Enhanc. Learn. 2015, 7, 91–98.

- Zaneldin, E.; Ahmed, W.; El-Ariss, B. Video-based e-learning for an undergraduate engineering course. E-Learn. Digit. Media 2019, 16, 475–496.

- Merkt, M.; Weigand, S.; Heier, A.; Schwan, S. Learning with videos vs. learning with print: The role of interactive features. Learn. Instr. 2011, 21, 687–704.

- Colasante, M. Using video annotation to reflect on and evaluate physical education pre-service teaching practice. Australas. J. Educ. Technol. 2011, 27.

- Gamoran Sherin, M.; Van Es, E.A. Effects of video club participation on teachers’ professional vision. J. Teach. Educ. 2009, 60, 20–37.

- Zaneldin, E.; Ashur, S. Using spreadsheets as a tool in teaching construction management concepts and applications. In Proceedings of the 2008 Annual Conference and Exposition, Pittsburgh, PA, USA, 17–21 July 2008.

- Zhang, D.; Zhou, L.; Briggs, R.O.; Nunamaker Jr, J.F. Instructional video in e-learning: Assessing the impact of interactive video on learning effectiveness. Inf. Manag. 2006, 43, 15–27.

- Tuong, W.; Larsen, E.R.; Armstrong, A.W. Videos to influence: A systematic review of effectiveness of video-based education in modifying health behaviors. J. Behav. Med. 2014, 37, 218–233.

- Greenberg, A.D.; Zanetis, J. The impact of broadcast and streaming video in education. Cisco Wainhouse Res. 2012, 75, 21.

- Xu, Y.; Li, H.; Yu, L.; Zha, S.; He, W.; Hong, C. Influence of mobile devices’ scalability on individual perceived learning. Behav. Inf. Technol. 2020, 1–17.

- Hills, D.; Thomas, G. Digital technology and outdoor experiential learning. J. Adventure Educ. Outdoor Learn. 2020, 20, 155–169.

- Martínez-Cerdá, J.-F.; Torrent-Sellens, J.; González-González, I. Promoting collaborative skills in online university: Comparing effects of games, mixed reality, social media, and other tools for ICT-supported pedagogical practices. Behav. Inf. Technol. 2018, 37, 1055–1071.

- Ketsman, O.; Daher, T.; Colon Santana, J.A. An investigation of effects of instructional videos in an undergraduate physics course. E-Learn. Digit. Media 2018, 15, 267–289.

- Reyna, J.; Hanham, J.; Meier, P.C. A framework for digital media literacies for teaching and learning in higher education. E-Learn. Digit. Media 2018, 15, 176–190.

- Al-Alwani, A. Evaluation criterion for quality assessment of E-learning content. E-Learn. Digit. Media 2014, 11, 532–542.

- Stewart, O.G. A critical review of the literature of social media’s affordances in the classroom. E-Learn. Digit. Media 2015, 12, 481–501.

- Neo, T.K.; Neo, M. Classroom innovation: Engaging students in interactive multimedia learning. Campus-Wide Inf. Syst. 2004.

- Álvarez-Hornos, F.J.; Sanchis, M.I.; Ortega, A.C. QR codes implementation and evaluation in teaching laboratories of Chemical Engineering. @tic Rev. D’innovació Educ. 2014, 88–96.

- Son, Y. Pre-Service science teachers perception of STEAM education and analysis of science and art STEAM class by pre-service science teachers. Biol. Educ. 2012, 40, 475–493.

- Torres-Jiménez, E.; Rus-Casas, C.; Dorado, R.; Jiménez-Torres, M. Experiences using QR codes for improving the teaching-learning process in industrial engineering subjects. IEEE Rev. Iberoam. Technol. Aprendiz. 2018, 13, 56–62.

- Dorado, R.; Torres-Jiménez, E.; Rus-Casas, C.; Jiménez-Torres, M. Mobile learning: Using QR codes to develop teaching material. In Proceedings of the 2016 Technologies Applied to Electronics Teaching (TAEE), Seville, Spain, 22–24 June 2016; pp. 1–6.

- Tulemissova, G.; Bukenova, I.; Korzhaspayev, A. Sharing of teaching staff information via QR-code usage. In Proceedings of the European Conference on IS Management and Evaluation, ECIME, Evora, Portugal, 8–9 September 2016; pp. 195–203.

- Maia-Lima, C.; Silva, A.; Duarte, P. Geometry teaching, smartphones and QR Codes. In Proceedings of the 2015 International Symposium on Computers in Education (SIIE), Setúbal, Portugal, 25–27 November 2015; pp. 114–119.

- Premalatha, K. Course and program outcomes assessment methods in outcome-based education: A review. J. Educ. 2019, 199, 111–127.

- Crowe, J.A.; Silva, T.; Ceresola, R. The effect of peer review on student learning outcomes in a research methods course. Teach. Sociol. 2015, 43, 201–213.

- Slieman, T.A.; Camarata, T. Case-based group learning using concept maps to achieve multiple educational objectives and behavioral outcomes. J. Med. Educ. Curric. Dev. 2019, 6.

- Hay, L.; Duffy, A.H.; Grealy, M.; Tahsiri, M.; McTeague, C.; Vuletic, T. A novel systematic approach for analysing exploratory design ideation. J. Eng. Des. 2020, 31, 127–149.

- Galimullina, E.; Ljubimova, E.; Ibatullin, R. SMART education technologies in mathematics teacher education-ways to integrate and progress that follows integration. Open Learn. J. Open Distance E-Learn. 2020, 35, 4–23.

- Murray, J.K.; Studer, J.A.; Daly, S.R.; McKilligan, S.; Seifert, C.M. Design by taking perspectives: How engineers explore problems. J. Eng. Educ. 2019, 108, 248–275.

- Sangoseni, O.; Hellman, M.; Hill, C. Development and validation of a questionnaire to assess the effect of online learning on behaviors, attitudes, and clinical practices of physical therapists in the United States regarding evidenced-based clinical practice. Internet J. Allied Health Sci. Pract. 2013, 11, 7.

- Bolarinwa, O.A. Principles and methods of validity and reliability testing of questionnaires used in social and health science researches. Niger. Postgrad. Med. J. 2015, 22, 195–201.

- Holt, G.D. Asking questions, analysing answers: Relative importance revisited. Constr. Innov. 2014.

- Jamieson, S. Likert scales: How to (ab)use them? Med. Educ. 2004, 38, 1217–1218.

- Hannon, K. Utilization of an Educational Web-Based Mobile App for Acquisition and Transfer of Critical Anatomical Knowledge, Thereby Increasing Classroom and Laboratory Preparedness in Veterinary Students. Online Learn. 2017, 21, 201–208.

- van Wermeskerken, M.; Ravensbergen, S.; van Gog, T. Effects of instructor presence in video modeling examples on attention and learning. Comput. Hum. Behav. 2018, 89, 430–438.

- Alden, J. Accommodating mobile learning in college programs. J. Asynchronous Learn. Netw. 2013, 17, 109–122.

- Rasheed, R.A.; Kamsin, A.; Abdullah, N.A. Challenges in the online component of blended learning: A systematic review. Comput. Educ. 2020, 144, 103701.

- Miller, M.V.; CohenMiller, A. Open Video Repositories for College Instruction: A Guide to the Social Sciences. Online Learn. 2019, 23, 40–66.

- Bai, X.; Smith, M. Promoting Hybrid Learning through an Open Source EBook Approach. Online Learn. J. 2010, 14.

- Hegeman, J.S. Using instructor-generated video lectures in online mathematics courses improves student learning. Online Learn. 2015, 19, 70–87.

- Shaw, L.; MacIsaac, J.; Singleton-Jackson, J. The Efficacy of an Online Cognitive Assessment Tool for Enhancing and Improving Student Academic Outcomes. Online Learn. 2019, 23, 124–144.

- Adov, L.; Mäeots, M. What Can We Learn about Science Teachers’ Technology Use during the COVID-19 Pandemic? Educ. Sci. 2021, 11, 255.

- Lauret, D.; Bayram-Jacobs, D. COVID-19 Lockdown Education: The Importance of Structure in a Suddenly Changed Learning Environment. Educ. Sci. 2021, 11, 221.

- Martha, A.S.D.; Junus, K.; Santoso, H.B.; Suhartanto, H. Assessing Undergraduate Students’ e-Learning Competencies: A Case Study of Higher Education Context in Indonesia. Educ. Sci. 2021, 11, 189.

- Hassan, S.u.N.; Algahtani, F.D.; Zrieq, R.; Aldhmadi, B.K.; Atta, A.; Obeidat, R.M.; Kadri, A. Academic Self-Perception and Course Satisfaction among University Students Taking Virtual Classes during the COVID-19 Pandemic in the Kingdom of Saudi-Arabia (KSA). Educ. Sci. 2021, 11, 134.

- Alqahtani, A.Y.; Rajkhan, A.A. E-Learning Critical Success Factors during the COVID-19 Pandemic: A Comprehensive Analysis of E-Learning Managerial Perspectives. Educ. Sci. 2020, 10, 216.

- Lu, D.-N.; Le, H.-Q.; Vu, T.-H. The Factors Affecting Acceptance of E-Learning: A Machine Learning Algorithm Approach. Educ. Sci. 2020, 10, 270.

- Müller, A.M.; Goh, C.; Lim, L.Z.; Gao, X. COVID-19 Emergency eLearning and Beyond: Experiences and Perspectives of University Educators. Educ. Sci. 2021, 11, 19.

- Halim, A.; Mahzum, E.; Yacob, M.; Irwandi, I.; Halim, L. The Impact of Narrative Feedback, E-Learning Modules and Realistic Video and the Reduction of Misconception. Educ. Sci. 2021, 11, 158.

- Tan, P.; Wu, H.; Li, P.; Xu, H. Teaching Management System with Applications of RFID and IoT Technology. Educ. Sci. 2018, 8, 26.

- Meinel, C.; Schweiger, S. A Virtual Social Learner Community—Constitutive Element of MOOCs. Educ. Sci. 2016, 6, 22.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).