Abstract

As the demand for human–automation interaction in intelligent systems increases, the need to adapt to learners’ cognitive traits in adaptive educational hypermedia systems (AEHS) has increased dramatically. AEHS utilize learners’ cognitive processes to attain fair human–automation interaction in their adaptive processes. However, obtaining accurate cognitive traits for the AEHS adaptation process has been a challenge, because it is difficult to determine to what extent human ability, as reflected by such traits, can comprehend system functionalities. Hence, this study explored the correlation among learners’ pupil size dilation, reading time and endogenous blinking rate when using AEHS, so as to enable cognitive load estimation in support of the AEHS adaptive process. An eye-tracking sensor was used, and the study found a correlation among learners’ pupil size dilation, reading time and endogenous blinking rate. Thus, the results suggest that endogenous blinking rate, pupil size and reading time are not only reliable AEHS parameters for cognitive load measurement but can also support human–automation interaction at large.

1. Introduction

The evolution of human automation has had a profound effect on the development of intelligent systems that support adaptive learning. The transformation of intelligent systems development from control-based to open learning adaptive systems has resulted from the inevitable demand for interaction between humans and automation [1]. Even though human–automation interaction has for decades been found to be an effective and productive driver of software systems development, less effort has been made to explore to what extent human ability can comprehend such systems’ functionalities so as to attain a fair distribution of functional tasks between humans and automation [2]. In adaptive educational hypermedia systems (AEHS), such function allocation is more complex, as AEHS rely on learners’ cognitive traits to attain fair task distribution through the adaptive process. Thus, learners’ cognitive traits play a crucial role in sustaining human–automation interaction in AEHS. However, obtaining accurate cognitive traits that can determine to what extent human ability can effectively comprehend system functionalities through the adaptation process is still an open research question. In order to capture accurate cognitive traits that can support effective adaptation, AEHS rely on learners’ cognitive processes such as attention and motivation [3]. Hence, a few recent research studies have started to take a cognitive computing approach towards such cognitive processes [3,4,5,6], so as to enable the AEHS adaptation process to attain productive human–automation interaction. In our previous studies [3,5], we investigated the possibility of incorporating such cognitive processes into e-learning platforms and proposed an adaptive algorithm that can support the AEHS adaptive decision-making process [6].
Therefore, as an extension of these studies [3,5,6], this study explores the correlation among learners’ endogenous blinking rate, pupil size dilation and reading time when using AEHS, so as to enable cognitive load estimation in support of the AEHS adaptive process and to extend the previously proposed bioinformatics-based approach [6]. The study found a correlation between cognitive load and learners’ endogenous blinking rate; hence, the AEHS adaptive process algorithm has been updated. The proposed algorithm supports cognitive load estimation and also enhances adaptive process comprehension in addressing the AEHS system functionalities that support human–automation interaction.

The remainder of this paper is organized as follows: background information and related works are presented in Section 2 and Section 3, respectively. The proposed approach is described in Section 4. The evaluation of the proposed approach is presented in Section 5 and discussed in Section 6. Finally, Section 7 concludes the study with a view to future work.

2. Background Information

As stated earlier, attention and motivation are the cognitive processes considered in this study. During the learning process, learners’ intrinsic motivation states vary. When learners experience difficult learning (work overload), their attention levels reflect their motivation states [3,5]. Hence, alterations of such cognitive processes can not only be utilized to support the AEHS adaptation process [5,6], but also influence learners’ learning efficacy [7].

As previously stated, alterations of learners’ cognitive states influence their learning efficacy. Hence, some recent studies have explored the possibility of using mobile sensors to measure cognitive load [3,5,7], so as to predict learners’ performance [7] and make suitable adjustments for multimedia content personalization [4,6] on a real-time basis. EEG [7,8] and eye-tracking [4,9] sensors have commonly been used for cognitive load measurement [3] and for identification of the learner’s area of interest (AOI) on multimedia content [4,6]. Eye-tracking sensors have been found to be suitable, as they can easily be embedded into systems and are more resilient to noisy environments [6,10,11]. Commonly known eye-tracking parameters for cognitive load measurement are pupil size dilation and endogenous blinking rate [9,10]. Real-time cognitive load estimation in multimedia environments is crucial, as self-rating measurements, which have been widely used by many tutoring systems, cannot capture alterations in learners’ cognitive processes [10]. Hence, the need for real-time cognitive load estimation in AEHS is inevitable [6,10,11,12].

Attempts to use eye-tracking technology to explore various patterns of information processing and to estimate cognitive load started over a decade ago [12,13]. However, the focus was not on AEHS multimedia learning environments [10,13], due to the dynamic nature of AEHS multimedia learning content [6,10,14]. As the challenge evolved, researchers started investigating ways to address it, including multimedia content representation [15], identification of learners’ attention [16] and visual area of interest (AOI), as well as technology that can support e-learning platforms [17,18].

Thus, as most eye-tracking parameters have been found to be reliable indicators [19,20,21,22] for estimation and prediction purposes in intelligent systems, this study focuses on investigating suitable eye-tracking parameters to support real-time cognitive load estimation in AEHS. In addition, as an extension of our previous study [6], this study further investigates the possibility of incorporating endogenous blinking rate and real-time cognitive load estimation into the AEHS adaptive process.

3. Related Works

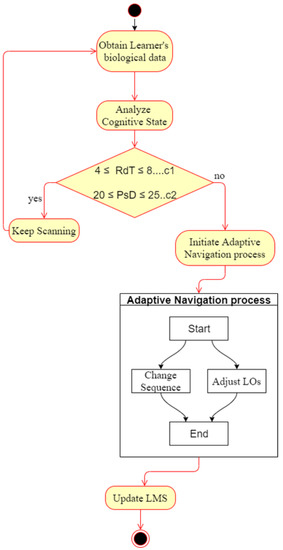

As stated earlier, a few recent studies have focused on the use of eye-tracking sensors to support adaptive learning. Scheiter et al. [4] proposed an adaptive learning approach that uses eye-tracking to detect learners’ emotions. Like many other eye-tracking studies, their approach focuses on identification of the area of interest (AOI) using Euclidean distance proximity. Hence, it focuses more on AOI parameters such as gaze transitions and fixation paths. The approach also targets personalization of multimedia content. However, it is not meant for dynamic multimedia learning content personalization and cannot be applied to real-time adaptation. Kruger and Doherty [12] proposed a multimodal methodology for cognitive load measurement that includes psychometric, eye-tracking and electroencephalography measures. It is a very comprehensive approach, as it covers a wide range of parameters, and this study complies with its instantaneous load construct, which includes the blinking rate parameter. However, most of its proposed constructs rely on an offline learning environment [12], and it does not focus on real-time estimation. Desjarlais [14] has widely explored eye-gaze measures that have been used in many multimedia research studies. The study provides a good interpretation of the eye-tracking parameters in defining learners’ attention levels during the learning process, but does not focus on real-time cognitive load estimation. Jung et al. [19,20] also proposed a mobile eye-tracking approach that relies on AOI to identify learners’ visual attention and interactions in dynamic environments. It demonstrates the feasibility of using mobile eye-tracking sensors to support dynamic multimedia content personalization. However, it does not focus on real-time adaptation. Wang, Tsai and Tsai [21] explored the relationship between learners’ visual behavior and learning outcomes; the study used eye-tracking and included pre- and post-test experiments.
The study found that learners paid more visual attention to the video than to the text on dynamic pages, while the opposite tendency was observed when learners were working on static pages. The study proposed that the total fixation duration parameter is the best indicator for performance prediction. This study complies with their observation, but argues against the use of a single parameter as the key indicator for prediction purposes. In addition, the approach does not focus on real-time cognitive load estimation for AEHS. Mwambe et al. [6] proposed a real-time adaptive learning navigation support approach that utilizes an eye-tracking sensor to detect learners’ attention levels and trigger the AEHS adaptive process. As stated earlier, the approach focuses on learners’ attention levels and motivation; hence, learners’ pupil size parameters were taken into consideration. The proposed algorithm supports the AEHS adaptive navigation process with respect to learners’ cognitive state alterations (as shown in Figure 1). The algorithm operates based on two conditions: c1 (reading time: RdT (seconds)) and c2 (relative pupil size dilation: PsD), whereby, if the defined conditions are met, navigation support is initiated. Adjustment of learning objects (LOs) is handled by the adaptive navigation process using time-locked hidden link navigation supports that operate with respect to the sequential alteration triggered by the learner’s motivation states. Once the adaptive navigation process is complete, the AEHS LMS (learning management system) is updated (as shown in Figure 1).

Figure 1. Adaptive educational hypermedia system (AEHS) adaptive process algorithm [6].

As stated in Section 1, the effectiveness of human–automation interaction in intelligent software systems depends heavily on the extent to which humans can comprehend system functionalities. Determination of efficient adaptive features for AEHS adaptation relies on the cognitive traits used by the AEHS adaptive process. Thus, the previously proposed bioinformatics-based approach for an AI framework [6] is also limited by having few cognitive traits for the prediction of learners’ attention levels and cognitive load estimation. Therefore, this study explores the incorporation of an additional parameter (endogenous blinking rate) into the AEHS adaptive process to support cognitive load estimation.

4. Proposed Approach

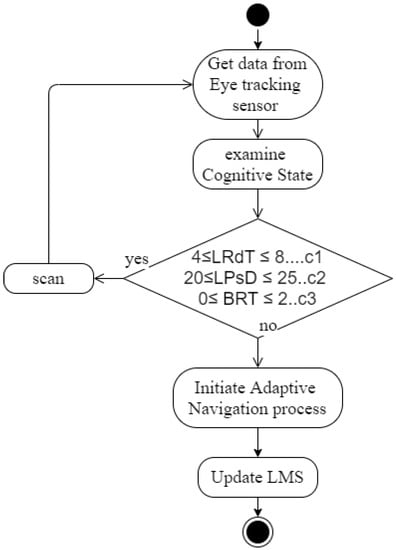

Unlike the previous approaches, the proposed approach accomplishes the adaptation process based on three conditions, c1, c2 and c3, which govern the proposed extended algorithm (as shown in Figure 2). As an extension of the previously described approach, the newly proposed approach supports cognitive load estimation. It includes a new additional parameter that observes the learner’s blinking rate throughout the reading time on multimedia content.

Figure 2. Updated AEHS adaptive process algorithm.

As an extension of our previous studies [3,5,6], blink frequency has been extensively explored based on experiments conducted to implement bioinformatics-based adaptive navigation support in AEHS. Eye-tracking has commonly been used to investigate the relation between visual attention and multimedia content [20]. Pupil size dilation, fixation duration and blink frequency are key eye-tracking parameters associated with the measurement of learners’ cognitive load [9,21], and such parameters have the advantage of being easy to implement. Therefore, considering the demand for suitable cognitive load measurement traits that can support the AEHS adaptive process, as stated in Section 1, such parameters are needed to enable the AEHS decision-making process to manage real-time cognitive load estimation, which has not yet been implemented [6]. Hence, the proposed approach consists of three parameters: learner’s reading time (LRdT), learner’s pupil size dilation (LPsD) and learner’s endogenous blinking rate (BRT). Based on these parameters, AEHS can form an adaptive decision process. The process relies on parameter threshold values: the algorithm is set so that the adaptive process is carried out when the three conditions (c1, c2 and c3) are met. Here, c1 observes the learner’s reading time, with threshold values set within the range 4 s ≤ LRdT ≤ 8 s, while c2 (relative pupil size dilation) is within 20 ≤ LPsD ≤ 25 and c3 (blinking rate) is within 0 ≤ BRT ≤ 2 (number of blinks per LRdT). The c1 and c2 parameters have been adapted from a previous study [6], while c3 is a new additional parameter used to assist AEHS functionalities comprehension through its adaptive process (the thresholds of LRdT and LPsD are defined in reference [6], while the BRT threshold is obtained from the results described in Section 5), as shown in Figure 2.
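The three-condition rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the threshold ranges are the ones quoted in the text, while the names `Range`, `should_adapt` and the constant names are hypothetical and introduced only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Closed interval [low, high] used as a threshold range (hypothetical helper)."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Threshold ranges as quoted in the text.
LRDT_RANGE = Range(4.0, 8.0)    # c1: reading time in seconds
LPSD_RANGE = Range(20.0, 25.0)  # c2: relative pupil size dilation
BRT_RANGE = Range(0.0, 2.0)     # c3: number of blinks per reading interval


def should_adapt(lrdt: float, lpsd: float, brt: float) -> bool:
    """Return True only when all three conditions (c1, c2 and c3) are met."""
    c1 = LRDT_RANGE.contains(lrdt)
    c2 = LPSD_RANGE.contains(lpsd)
    c3 = BRT_RANGE.contains(brt)
    return c1 and c2 and c3


if __name__ == "__main__":
    # All three measurements fall inside their ranges.
    print(should_adapt(lrdt=6.0, lpsd=21.5, brt=1.0))
    # Reading time is outside the c1 range, so no adaptation is triggered.
    print(should_adapt(lrdt=9.5, lpsd=21.5, brt=1.0))
```

Keeping each threshold as a named range makes it straightforward to retune a single condition (for example, the BRT range derived in Section 5) without touching the decision logic.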

The proposed algorithm (as shown in Figure 2) initiates an adjustment of multimedia learning content through the navigation process. The adaptive navigation process is only initiated after the learner’s cognitive state has been carefully examined. The learner’s cognitive state is analyzed based on the proposed parameters (the three described conditions), including the learner’s blinking rate. Once an alteration of the cognitive state (cognitive load), indicating difficult learning, has been detected, AEHS initiates the adaptive navigation process to adjust the learning objects, which in turn supports the learning process. The combination of all three parameters, including the additional proposed parameter (c3), is expected not only to support cognitive load estimation, but also to enhance adaptive process comprehension in addressing the AEHS system functionalities that support human–automation interaction.

5. Evaluation

5.1. Experiment Setup

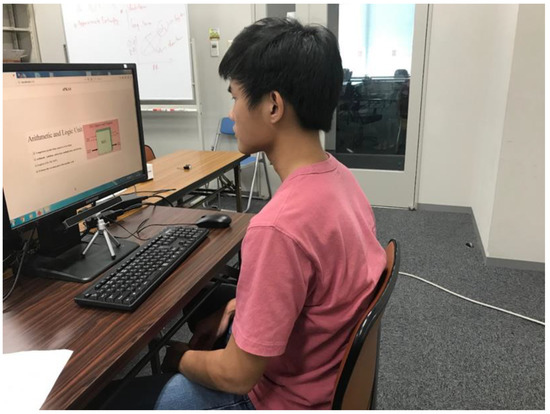

In order to investigate cognitive load measurement with the selected parameters, two experiments were conducted in which computer science students were presented with multimedia lecture content and test sessions. The lecture content consisted of computer science topics that were familiar to all the subjects, and the lecture slides (LOs) were displayed for a limited time interval. The Eye Tribe (Eye Tracking 101) was positioned at a distance of 60 cm in a room with a light intensity of 500 lux. The content was displayed on a 21-inch screen and the sensor was placed at approximately 26 degrees from the subject’s eye position, as shown in Figure 3 below.

Figure 3. Experiment setup.

In Experiment 1, the lecture content consisted of normal content (adaptive e-learning prior knowledge assessment system (AePKAS)), while in Experiment 2, the content included navigation support that was initiated based on changes in cognitive state detected by the eye-tracking sensor (navigated adaptive e-learning prior knowledge assessment system (NePKAS)). The navigation support was intended to reduce the learners’ workload during the lecture sessions. In both cases, learners’ biological information was collected and analyzed based on the selected parameters. The results are presented in the following subsection.

Unlike Experiment 1, which was designed to imitate normal e-learning content using normal navigation supports for content adaptation, including various navigation links such as hypertext, index pages and non-contextual links [23,24], Experiment 2 was designed using the recently proposed bioinformatics-based navigation support [6], which uses bioinformatics with information hiding, sorting and adaptive annotation techniques to impose content-level, presentation-level, learner-level, link-level and path-level adaptation [25]; it imitates future AEHS. Based on the data collected while the learners performed these experiments, the additional parameters could be derived for evaluation and for consideration in the adaptive process.

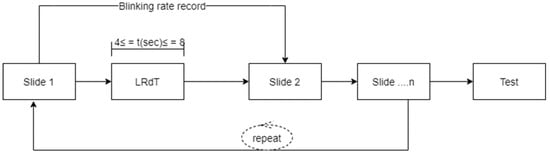

Blinking rate (BRT) data were obtained while learners were going through the lecture slides (as shown in Figure 4) during the lecture sessions, while LRdT and LPsD were also recorded. Subjects were instructed to follow the lecture carefully and complete the tests that followed every lecture session. The experiments were conducted in a noise-free environment and imitated a private online class. A total of 20 subjects participated in the experiment (6 females and 14 males), aged between 20 and 33 years. All of the learners were in healthy condition, as reported in the self-check health questionnaires they completed before beginning the experiment. The main focus of this study was on blinking rate, as it was neglected in the previous study [6]. However, all three parameters are taken into account and discussed in the following sections.

Figure 4. Learning content organization.
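The text does not describe how blinks were extracted from the raw sensor stream. As one plausible sketch, the number of blinks within a slide's reading interval could be counted from a sequence of pupil size samples. The code assumes, purely for illustration, that the tracker reports a pupil size of zero while the eye is closed and that a blink spans at least `min_samples` consecutive such samples; the function name and both assumptions are hypothetical and are not taken from the study.

```python
from typing import Sequence


def count_blinks(pupil_sizes: Sequence[float], min_samples: int = 2) -> int:
    """Count blinks in one reading interval.

    Assumption (not from the source): a closed eye is reported as a pupil
    size of zero, and a run of at least `min_samples` consecutive zero
    samples is treated as one blink. Shorter runs are ignored as noise.
    """
    blinks = 0
    run = 0  # length of the current run of zero-size samples
    for size in pupil_sizes:
        if size <= 0.0:
            run += 1
        else:
            if run >= min_samples:
                blinks += 1
            run = 0
    # A blink may still be in progress when the slide ends.
    if run >= min_samples:
        blinks += 1
    return blinks


if __name__ == "__main__":
    # Hypothetical samples for a single slide: one three-sample blink,
    # one single-sample dropout (ignored) and one two-sample blink at the end.
    samples = [20.1, 20.3, 0.0, 0.0, 0.0, 21.0, 0.0, 22.0, 0.0, 0.0]
    print(count_blinks(samples))
```

The resulting count per slide corresponds to the "number of blinks per LRdT" unit used for the BRT threshold.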

5.2. Experiment Result

The results from both experiments are presented in this subsection, with respect to the observed parameters, namely, BRT, LPsD and LRdT. Due to page limits, data plots for individual cases were included for only five subjects. Data were acquired from both eyes (left and right) of the learners. Hence, the presented results reflect the blinking rate and pupil size changes obtained from both eyes of the learner during the learning process.

5.2.1. Left Eye

Blinking rate (BRT): On average, a blinking rate of 1.87 per second was observed when learners used learning content with ordinary navigation (AePKAS), and a blinking rate of 1.12 per second was observed when using bioinformatics-based adaptive navigation (NePKAS). Hence, the blinking rate observed in Experiment 2 (NePKAS) was about 40% lower than in Experiment 1 (AePKAS).

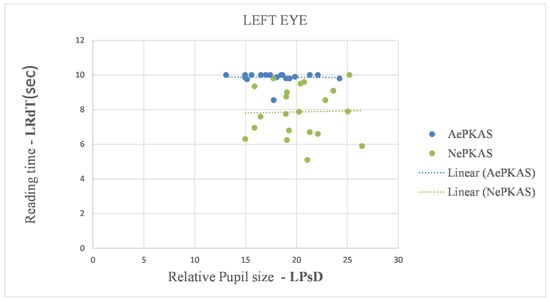

Learner’s pupil size dilation (LPsD): On average, higher relative pupil size (20.24) was observed when learners were going through Experiment 2 (NePKAS), compared to Experiment 1 (AePKAS, 18.05), as shown in Figure 5 below.

Figure 5. Average reading time vs. relative pupil size for the left eye.

Reading time (LRdT) vs. LPsD: Less LRdT with higher LPsD increment was observed when learners were going through Experiment 2, while more time with less LPsD was observed in Experiment 1, as shown in Figure 5.

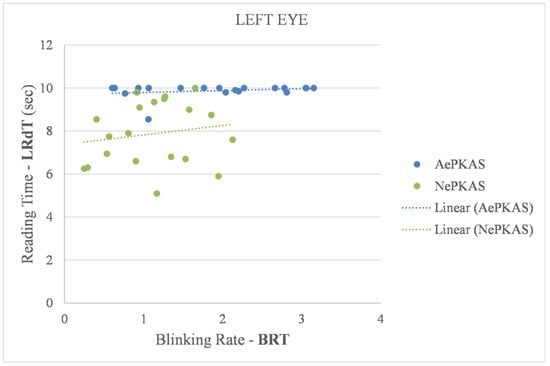

Reading Time (LRdT) vs. BRT: Learners spent more time (9.87 s, Figure 6) going through the learning content in Experiment 1, while it took learners less time (7.88 s, Figure 6) to finish exploring the content in Experiment 2. In Experiment 2, the learners spent less LRdT, with a lower blinking rate and higher accuracy, as shown in Figure 6 below.

Figure 6. Average reading time vs. blinking rate for the left eye.

Individual differences: Little individual difference was observed, as most of the individual data complied with the tendency observed in the averaged data.

5.2.2. Right Eye

Blinking rate (BRT): On average, a blinking rate of 2.16 per second was observed when learners were performing Experiment 1 (AePKAS), and a blinking rate of 1.17 per second was observed when going through Experiment 2 (NePKAS). Therefore, the same tendency as for the left eye was observed: the blinking rate in Experiment 2 was about 46% lower than in Experiment 1.

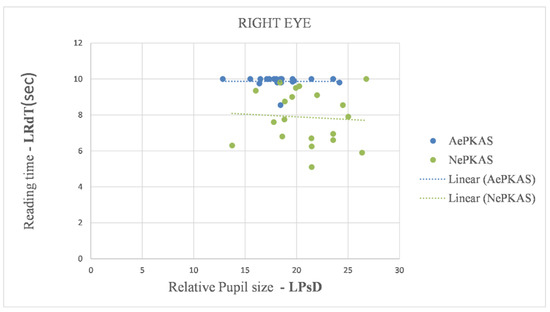

Learner’s pupil size dilation (LPsD): On average, higher relative pupil size LPsD (20.89) was observed when learners were going through Experiment 2 (NePKAS), compared to Experiment 1 (18.75), as shown in Figure 7 below.

Figure 7. Average reading time vs. relative pupil size for the right eye.

LRdT vs. LPsD: Less LRdT with a higher LPsD increment was observed when learners were going through Experiment 2, while more time with less LPsD was observed in Experiment 1, as shown in Figure 7.

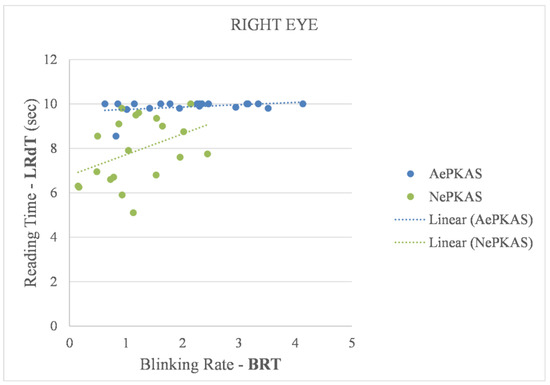

Figure 8. Average reading time vs. blinking rate for the right eye.

LRdT vs. BRT: Learners spent more reading time (9.87 s) when performing Experiment 1 and less LRdT (7.88 s) during Experiment 2. In Experiment 2, the decrease in LRdT was accompanied by an increase in performance, as shown in Figure 8 and Figure 9, respectively.

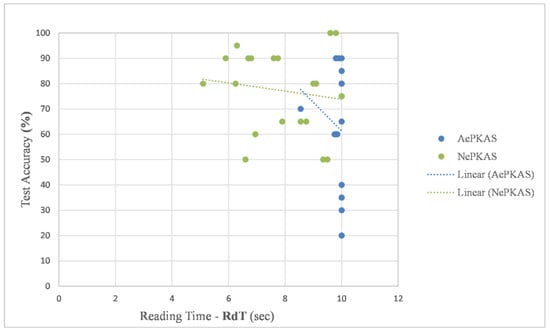

Figure 9. Performance analysis.

Individual differences: Little individual difference was observed, as most of the individual data complied with the tendency observed in the averaged data.

The results also show that the learners’ overall test accuracy was higher in Experiment 2 than in Experiment 1. In addition, an increase in performance (percentage of accurate answers during the test), together with a decrease in LRdT, was observed when learners were performing Experiment 2, as shown in Figure 9.
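To make the size of the differences between the two experiments easier to compare across parameters, the reported averages can be expressed as relative changes from Experiment 1 to Experiment 2. The sketch below uses only the averages quoted in this section; the function and variable names are hypothetical and introduced for illustration.

```python
def relative_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after` (negative means a decrease)."""
    return (after - before) / before * 100.0


# Averages reported in Section 5.2 as (Experiment 1, Experiment 2).
REPORTED_AVERAGES = {
    "BRT, left eye": (1.87, 1.12),
    "BRT, right eye": (2.16, 1.17),
    "LPsD, left eye": (18.05, 20.24),
    "LPsD, right eye": (18.75, 20.89),
    "LRdT (s)": (9.87, 7.88),
}

if __name__ == "__main__":
    for name, (exp1, exp2) in REPORTED_AVERAGES.items():
        print(f"{name}: {relative_change(exp1, exp2):+.1f}%")
    # BRT, left eye: -40.1%
    # BRT, right eye: -45.8%
    # LPsD, left eye: +12.1%
    # LPsD, right eye: +11.4%
    # LRdT (s): -20.2%
```

On these figures, the blinking rate changes far more between the two experiments than the relative pupil size does, which is consistent with the role the discussion assigns to BRT as a discriminating parameter.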

6. Discussion

The decrease in blinking rate (BRT) in Experiment 2 is interpreted as the result of an increase in attention level, whereby learners were able to pay better attention with the help of the visual aid (NePKAS) provided by the navigation support, which stimulated their information processing and, in turn, supported the learning process. Hence, the increase in pupil size (LPsD) in Experiment 2 is also interpreted as an increase in cognition. Learners seem to have been more attentive when going through Experiment 2, in which less reading time was observed alongside improved performance.

As previously explained, the better performance with less reading time (LRdT) observed in Experiment 2, compared to Experiment 1, is interpreted as an increase in the learners’ cognition, as they experienced a high attention level when performing Experiment 2. This is because the learners spent less reading time to accomplish the task provided in Experiment 2, with high performance, while their blinking rate (BRT) decreased and pupil size slightly increased. This is also interpreted as the result of the cognitive load support offered by the bioinformatics-based navigation support and of the increase in learners’ attention.

Overall, the increase in LPsD together with the decrease in BRT (as summarized in Table 1) is interpreted as a negative correlation between the two parameters. In addition, as shown in Table 2, it is very difficult to draw strong conclusions using LPsD alone, as LPsD alone shows no significant difference. Hence, this study suggests that endogenous BRT is not only an essential parameter for cognitive load estimation, but also a good indicator of attention level variation and a reliable parameter for AEHS adaptation. Therefore, this study recommends that the two parameters (BRT and LPsD) be used together during evaluation and adaptation.

Table 1. Comparison of blinking rate (BRT) and learner’s pupil size dilation (LPsD).

Table 2. Comparison of proposed version and previous algorithm.
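The negative relationship between LPsD and BRT noted above could be quantified with Pearson's correlation coefficient if paired per-learner (or per-slide) measurements are available. The sketch below shows one way to compute it in plain Python. The sample values are invented purely for illustration and are not data from this study; the function name is likewise hypothetical.

```python
from math import sqrt
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0.0 or var_y == 0.0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical paired measurements, NOT taken from the study:
    # pupil size dilation rises while blinking rate falls.
    lpsd = [18.0, 19.0, 20.0, 21.0, 22.0]
    brt = [2.2, 1.9, 1.6, 1.3, 1.0]
    print(f"r = {pearson_r(lpsd, brt):.3f}")  # a value close to -1
```

A coefficient near -1 would indicate a strong negative linear relationship, whereas values near 0 would suggest that the two parameters vary independently.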

The observed individual cases are interpreted as individual differences among learners. However, most of the individual data still showed similar tendencies; the study interprets such observed negative and positive correlations as strong reflections of the proposed parameters, supporting cognitive load measurement and attention level indication. In addition, individual differences were interpreted as reflections of learners’ knowledge level differences and metacognitive experiences during their growth. This signifies the need for the constant monitoring of knowledge level updates that are observed to be influencing learners’ learning styles.

Because they can indicate learners’ cognition and attention levels, the study results also imply that LRdT and BRT qualify as reliable determinants for AEHS multimedia content adaptation, as they can predict learners’ test performance levels. The results of this study also agree with previous studies, which showed that learners’ endogenous blinking rates decrease with an increase in cognition [21,22].

Compared with the previously proposed algorithm [6], the cognitive load measurement approach proposed in this study uses an additional parameter (learners’ blinking rate) alongside learners’ pupil size dilation and reading time to estimate learners’ cognitive load, as shown in Table 2.

Table 2 shows very similar outputs from the two algorithms, except for the additional parameter included in the newly proposed version of the algorithm. With respect to the results summarized in Table 1, it is very difficult to identify clear differences between the two experiments using a single bioinformatics parameter (LPsD). However, with the addition of BRT, it is easier to differentiate the results of the two experiments. This was observed despite the fact that LRdT and LPsD played a great role in the prediction of the learners’ attention levels and performance [6]. Therefore, the study results show the necessity of the newly proposed parameter in cognitive load estimation. Hence, the study finds the proposed version of the algorithm stronger than the previous one, as it includes the additional bioinformatics parameter, as shown in Table 2. This is interpreted as a demand for additional bioinformatics parameters to support AEHS real-time cognitive load estimation [26].

Therefore, the study suggests that endogenous blinking rate (BRT), LPsD and reading time (LRdT) are, together, reliable parameters to support cognitive load estimation and can also determine to what extent the AEHS adaptive process can support AEHS human–automation interaction. In addition, the study agrees with previous studies in their demand for further investigation of additional bioinformatics parameters in order to support real-time cognitive load estimation [4,6,26] and the need for a better e-learning platform that can deliver learning content in consideration of these cognitive traits [3,4,5,6,25,27,28].

7. Conclusions

In this study, a real-time cognitive load estimation approach was proposed to support the adaptive process of adaptive educational hypermedia systems (AEHS). The study also demonstrated the application of the newly proposed approach in AEHS. The proposed algorithm uses learners’ pupil size dilation (LPsD), endogenous blinking rate (BRT) and reading time (LRdT) to estimate cognitive load. The study results suggest that BRT, LPsD and LRdT are not only reliable parameters for real-time cognitive load estimation, but can also enhance AEHS adaptive process comprehension in order to determine the extent to which learners can comprehend the AEHS e-learning multimedia content specification.

Endogenous BRT, LPsD and LRdT were found to be reliable determinants for real-time cognitive load measurement. Nevertheless, further investigation is recommended in order to find additional bioinformatics parameters for real-time cognitive load estimation. We also recommend further research and development in e-learning multimedia content adaptation and real-time cognitive load estimation, in order to enable AEHS to correspond to learners’ metacognitive learning styles on a real-time basis. A head-stabilized, desktop-mounted eye-tracker was used in this study, which compelled the subjects to maintain a still position; thus, a more user-friendly, wearable mobile eye-tracker is recommended for future experiments.

Better acknowledgement of the usefulness of metacognitive experiences, prior knowledge levels and cognitive processes’ alterations regarding learner’s attention levels prediction and real-time cognitive load estimation would also be useful in future experiments. Hence, we are looking forward to developing a comprehensive AEHS conceptual model/framework for e-learning platforms that can assist dynamic multimedia content personalization.

Author Contributions

Conceptualization, O.O.M.; Formal analysis, O.O.M.; Supervision, P.X.T. and E.K.; Writing—review and editing, O.O.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Ethics Committee of Shibaura Institute of Technology.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to acknowledge financial support from Shibaura Institute of Technology (SIT).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Eklund, J.; Sinclair, K. An empirical appraisal of the effectiveness of adaptive interfaces for instructional systems. Educ. Technol. Soc. 2000, 3, 165–177.

- Janssen, C.P.; Donker, S.F.; Brumby, D.P.; Kun, A.L. History and future of human-automation interaction. Int. J. Hum. Comput. Stud. 2019, 131, 99–107.

- Mwambe, O.O.; Kamioka, E. EEG cognition detection to support aptitude-treatment interaction in e-learning platforms. In Proceedings of the 12th South East Asian Technical University Consortium (SEATUC), Yogyakarta, Indonesia, 12–13 March 2018; Volume 1, pp. 1–4.

- Scheiter, K.; Schubert, C.; Schüler, A.; Schmidt, H.; Zimmermann, G.; Wassermann, B.; Krebs, M.-C.; Eder, T. Adaptive multimedia: Using gaze-contingent instructional guidance to provide personalized processing support. Comput. Educ. 2019, 139, 31–47.

- Mwambe, O.; Kamioka, E. Utilization of Learners’ Metacognitive Experiences to Monitor Learners’ Cognition States in e-Learning Platforms. Int. J. Inf. Educ. Technol. 2019, 9, 362–365.

- Mwambe, O.O.; Tan, P.X.; Kamioka, E. Bioinformatics-Based Adaptive System towards Real-Time Dynamic E-learning Content Personalization. Educ. Sci. 2020, 10, 42.

- Liu, N.-H.; Chiang, C.-Y.; Chu, H.-C. Recognizing the Degree of Human Attention Using EEG Signals from Mobile Sensors. Sensors 2013, 13, 10273–10286.

- Myrden, A.; Chau, T.T. A Passive EEG-BCI for Single-Trial Detection of Changes in Mental State. IEEE Trans. Neural Syst. Rehabilitation Eng. 2017, 25, 345–356.

- El Haddioui, I. Eye Tracking Applications for E-Learning Purposes: An Overview and Perspectives. In Advances in Educational Technologies and Instructional Design; IGI Global: Hershey, PA, USA, 2019; pp. 151–174.

- Alemdag, E.; Cagiltay, K. A systematic review of eye tracking research on multimedia learning. Comput. Educ. 2018, 125, 413–428.

- Mayer, R.E. Using multimedia for e-learning. J. Comput. Assist. Learn. 2017, 33, 403–423.

- Kruger, J.-L.; Doherty, S. Measuring cognitive load in the presence of educational video: Towards a multimodal methodology. Australas. J. Educ. Technol. 2016, 32, 19–31.

- Lai, M.-L.; Tsai, M.-J.; Yang, F.-Y.; Hsu, C.-Y.; Liu, T.-C.; Lee, S.W.-Y.; Lee, M.-H.; Chiou, G.-L.; Liang, J.-C.; Tsai, C.-C. A review of using eye-tracking technology in exploring learning from 2000 to 2012. Educ. Res. Rev. 2013, 10, 90–115.

- Desjarlais, M. The use of eye gaze to understand multimedia learning. In Eye-Tracking Technology Applications in Educational Research; Was, C., Sansost, F., Morris, B., Eds.; Information Science Reference: Hershey, PA, USA, 2017; pp. 122–142.

- O’Keefe, P.A.; Letourneau, S.M.; Homer, B.D.; Schwartz, R.N.; Plass, J.L. Learning from multiple representations: An examination of fixation patterns in a science simulation. Comput. Hum. Behav. 2014, 35, 234–242.

- Copeland, L.; Gedeon, T. What are You Reading Most: Attention in eLearning. Procedia Comput. Sci. 2014, 39, 67–74.

- Cantoni, V.; Perez, C.J.; Porta, M.; Ricotti, S. Exploiting eye tracking in advanced e learning systems. In Proceedings of the 13th International Conference on Computer Systems and Technologies - CompSysTech12, Ruse, Bulgaria, 22–23 June 2012; pp. 376–383.

- Drusch, G.; Bastien, J.C.; Paris, S. Analysing eye-tracking data: From scanpaths and heatmaps to the dynamic visualisation of areas of interest. In Advances in Science, Technology, Higher Education and Society in the Conceptual Age: STHESCA; AHFE: Louisville, KY, USA, 2014; Volume 20, p. 25.

- Jung, Y.J.; Zimmerman, H.T.; Pérez-Edgar, K. A Methodological Case Study with Mobile eye-tracking of Child Interaction in a Science Museum. TechTrends 2018, 62, 509–517.

- Jung, Y.J.; Zimmerman, H.T.; Pérez-Edgar, K. Mobile Eye-tracking for Research in Diverse Educational Settings. In Press: Research Methods in Learning Design and Technology; Romero-Hall, E., Ed.; Routledge: Abingdon, UK, 2020.

- Wang, C.-Y.; Tsai, M.-J.; Tsai, C. Multimedia recipe reading: Predicting learning outcomes and diagnosing cooking interest using eye-tracking measures. Comput. Hum. Behav. 2016, 62, 9–18.

- Ledger, H. The effect cognitive load has on eye blinking. Plymouth Stud. Sci. 2013, 6, 206–223. Available online: http://hdl.handle.net/10026.1/14015 (accessed on 26 January 2021).

- Wascher, E.; Heppner, H.; Möckel, T.; Kobald, S.O.; Getzmann, S. Eye-blinks in choice response tasks uncover hidden aspects of information processing. EXCLI J. 2015, 14, 1207–1218.

- Brusilovsky, P.; Sosnovsky, S.; Yudelson, M. Addictive links: The motivational value of adaptive link annotation. New Rev. Hypermedia Multimed. 2009, 15, 97–118.

- Du Boulay, B.; Luckin, R. Modelling Human Teaching Tactics and Strategies for Tutoring Systems: 14 Years On. Int. J. Artif. Intell. Educ. 2015, 26, 393–404.

- Premlatha, K.R.; Geetha, T.V. Learning content design and learner adaptation for adaptive e-learning environment: A survey. Artif. Intell. Rev. 2015, 44, 443–465.

- Van Orden, K.F.; Limbert, W.; Makeig, S.; Jung, T.-P. Eye Activity Correlates of Workload during a Visuospatial Memory Task. Hum. Factors J. Hum. Factors Ergon. Soc. 2001, 43, 111–121.

- Akputu, O.K.; Seng, K.P.; Lee, Y.; Ang, K.L.-M. Emotion Recognition Using Multiple Kernel Learning toward E-learning Applications. ACM Trans. Multimedia Comput. Commun. Appl. 2018, 14, 1–20.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).