The Framework DiKoLAN (Digital Competencies for Teaching in Science Education) as Basis for the Self-Assessment Tool DiKoLAN-Grid

Abstract

1. Introduction

1.1. ICT Frameworks and Models for the Description of Teacher Digital Competencies

1.2. The Framework DiKoLAN

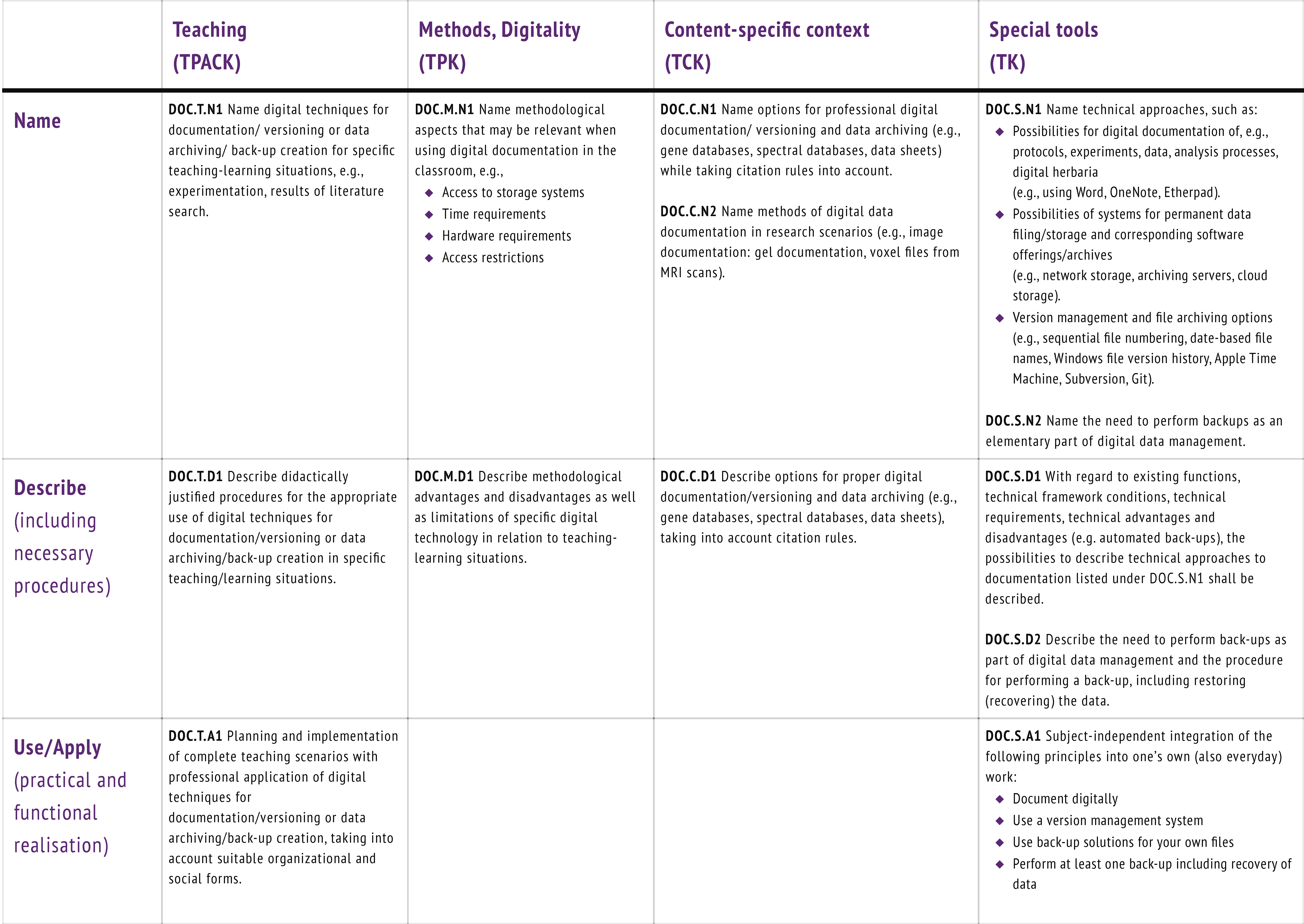

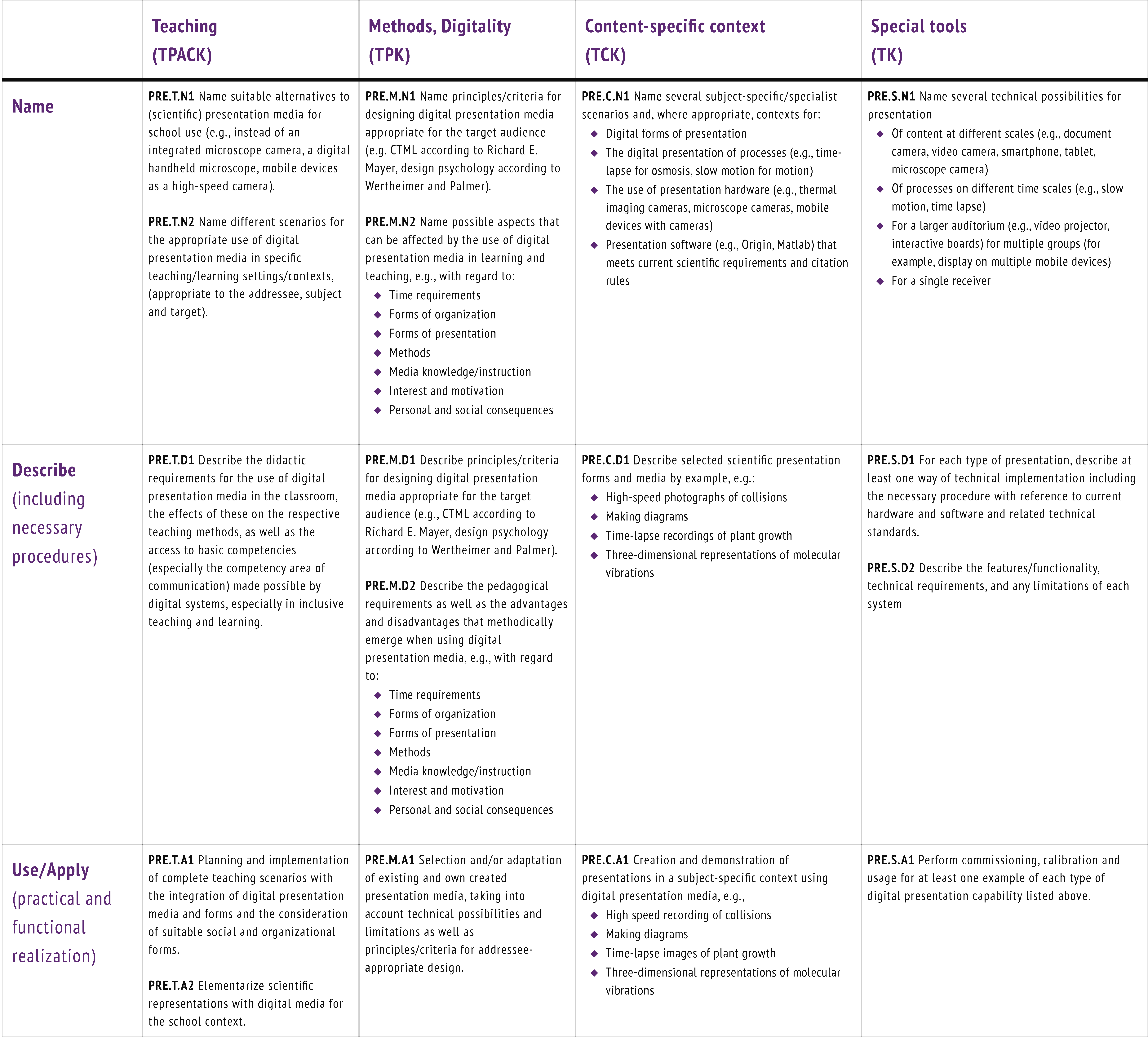

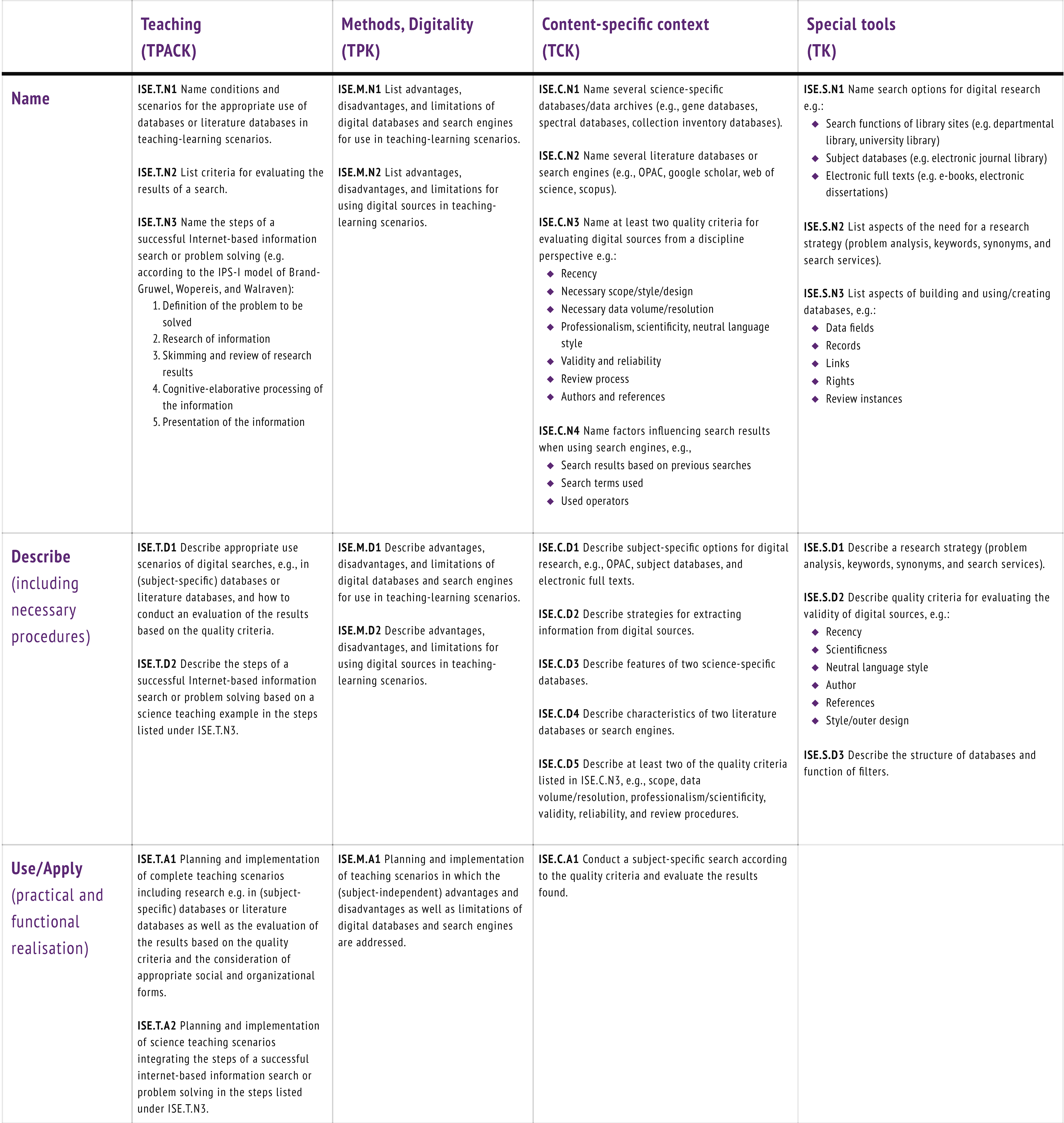

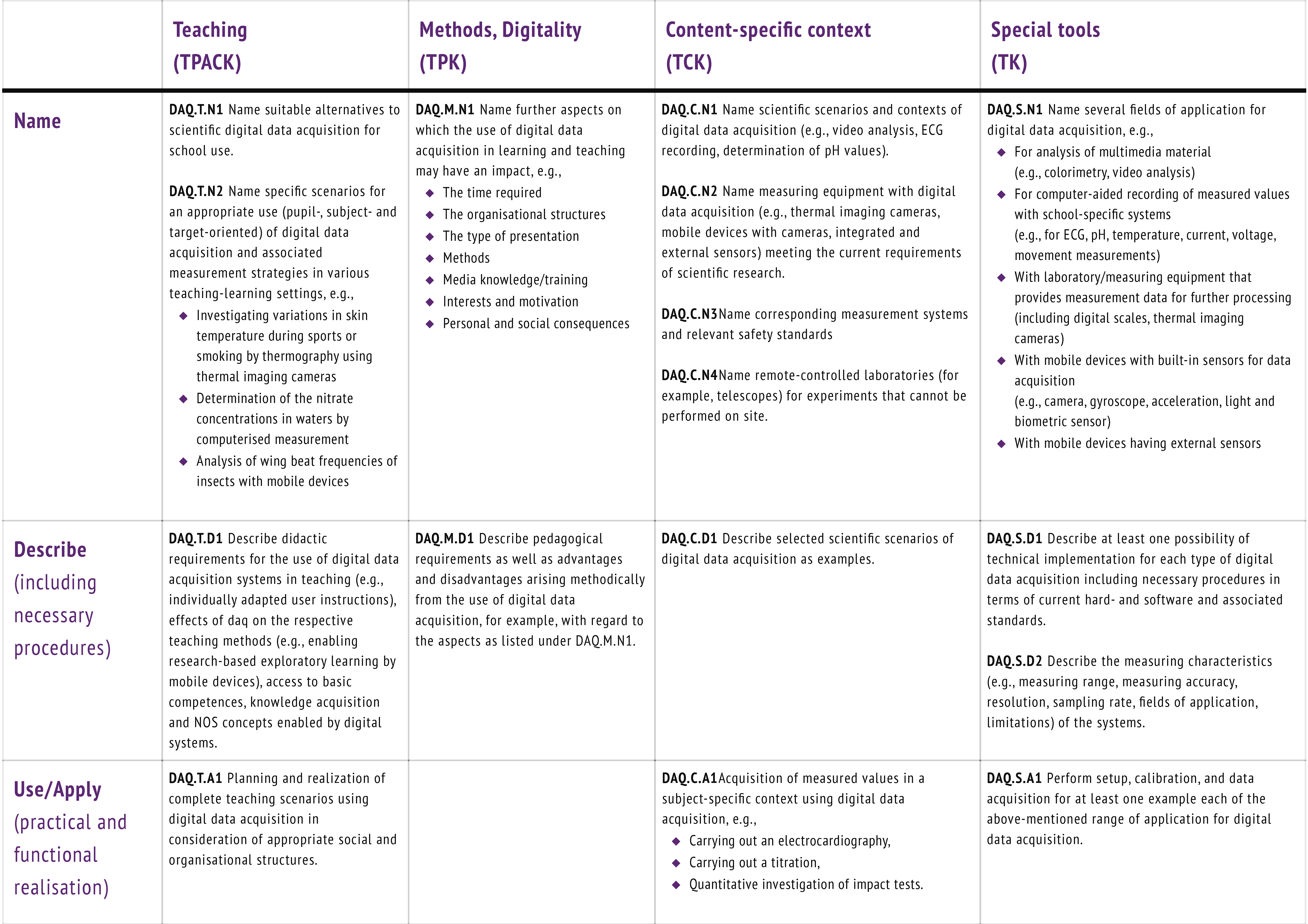

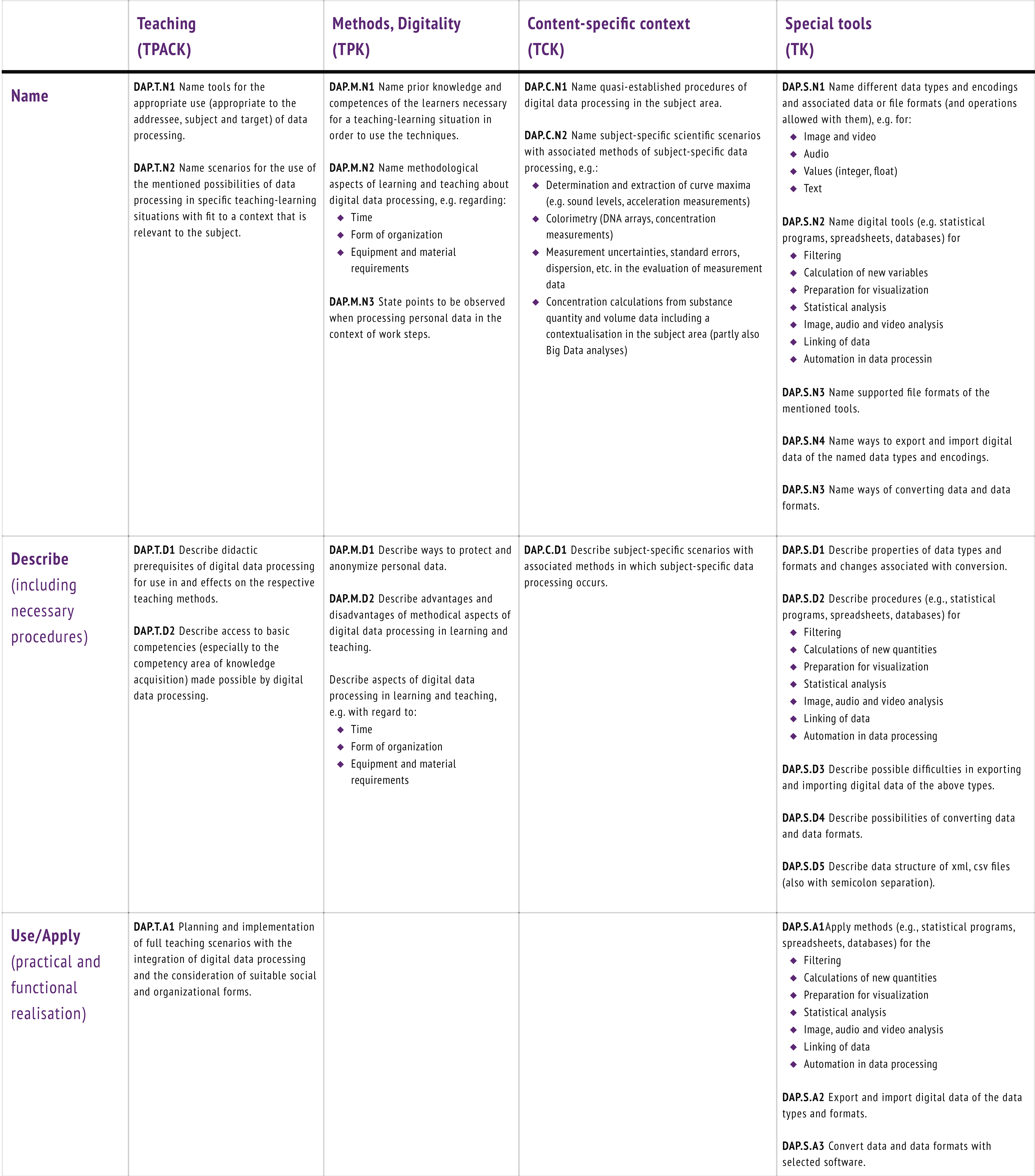

1.2.1. Central Content-Related Competency Areas of DiKoLAN

1.2.2. Structure and Gradations in the Competency Areas of DiKoLAN

1.3. Aims and Research Questions

- How do biology student teachers rate their competencies in Presentation, as well as in Information Search and Evaluation, in the four components of Teaching (T/TPACK), Methods and Digitality (M/TPK), Content-specific context (C/TCK), and Special Tools (S/TK)? Which parallels and differences appear in the level of competency assessment between the four components?

- Which correlations of the four components can be seen within the two competency areas Presentation and Information Search and Evaluation?

2. Materials and Methods

2.1. Participants

2.2. Procedure

2.3. Data Analysis

3. Results

3.1. Descriptive Statistics

3.2. Path Models

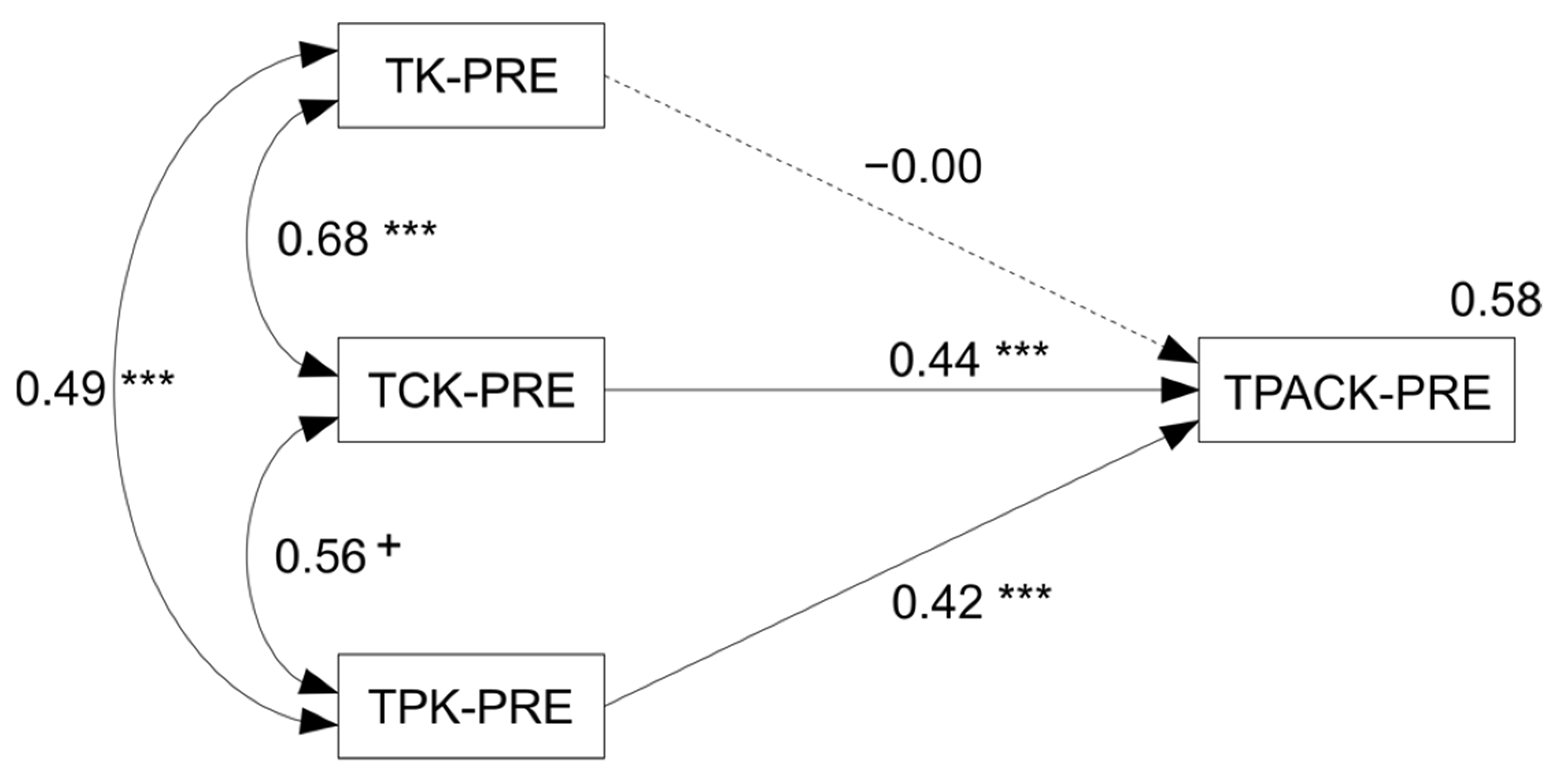

3.2.1. Competency Area Presentation

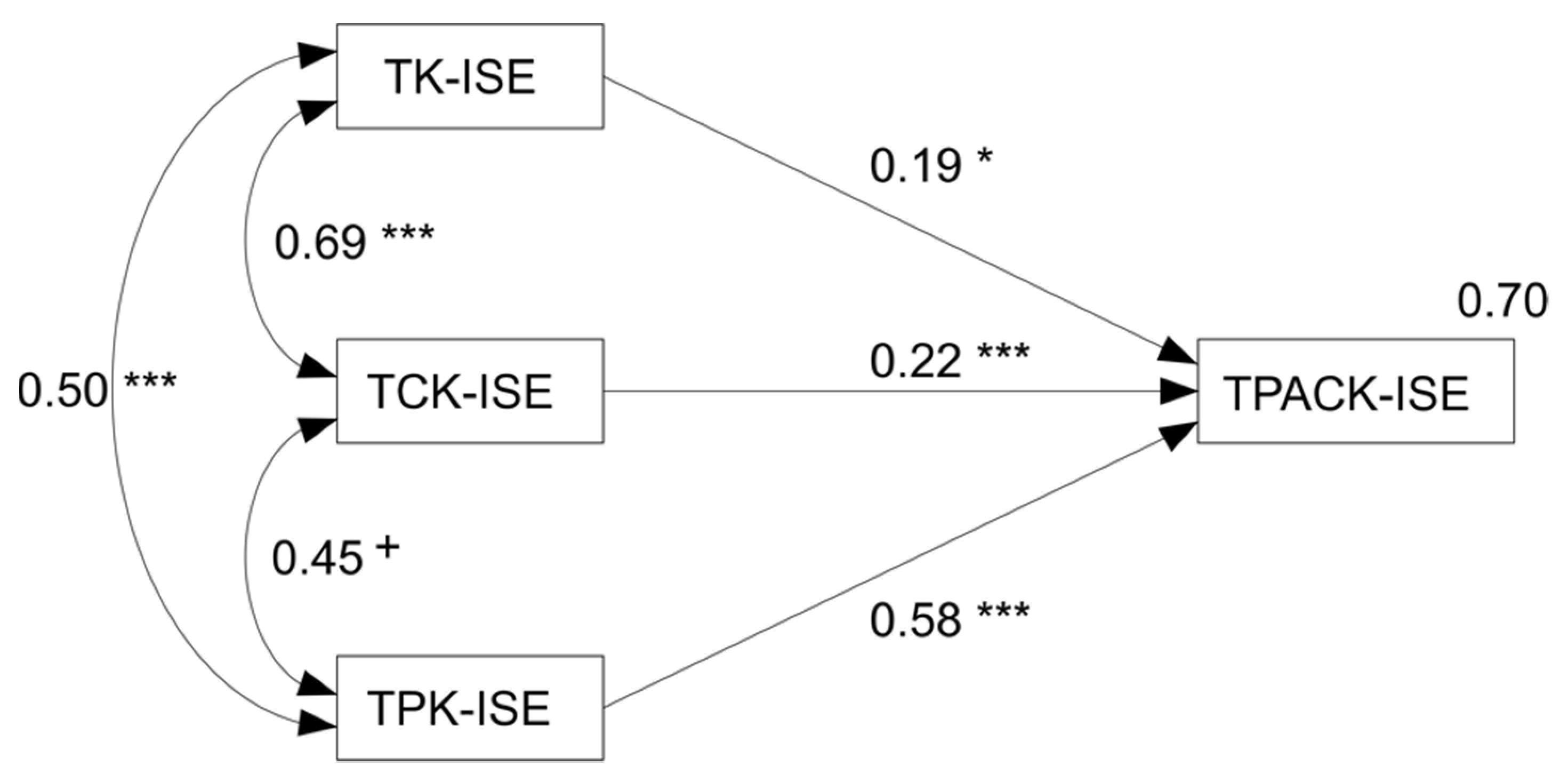

3.2.2. Competency Area Information Search and Evaluation

4. Discussion of the Research Questions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Component | Number of Items | Example | Cronbach’s Alpha |
|---|---|---|---|
| TK-PRE | 5 | I can describe at least one possibility of technical implementation for each type of presentation (e.g., of content, processes/for several groups or individual recipients), including the necessary procedure with reference to current hardware and software, as well as related technical standards. (PRE.S.D1) | 0.85 |
| TCK-PRE | 4 | I can name several subject-specific/specialist scenarios and contexts, as appropriate for digital forms of presentation and digital presentation of processes (e.g., time-lapse for osmosis) and for the use of presentation hardware (e.g., microscope cameras, mobile devices with cameras). (PRE.C.N1) | 0.81 |
| TPK-PRE | 5 | I can select, adapt, and use existing and created presentation media of my own, taking into account technical possibilities and limitations, as well as principles/criteria for audience-appropriate design. (PRE.M.A1) | 0.85 |
| TPACK-PRE | 6 | I can use digital media to simplify subject matter for the school context and make them easier to understand. (PRE.T.A1) | 0.91 |

| Component | Number of Items | Example | Cronbach’s Alpha |
|---|---|---|---|
| TK-ISE | 4 | I can name search options for digital research, e.g., search functions of library sites (including university library); subject databases (including electronic journal library); electronic full texts (including e-books, electronic dissertations). (ISE.S.N1) | 0.80 |
| TCK-ISE | 9 | I can name at least two quality criteria for evaluating digital sources from a subject-specific perspective, e.g., recency; necessary scope/style/design; professionalism, scientificity, neutral language style; validity and reliability; review procedures, references. (ISE.C.N3) | 0.86 |
| TPK-ISE | 5 | I can describe advantages, disadvantages, and limitations of digital databases and search engines for use in teaching-learning scenarios. (ISE.M.D1) | 0.94 |
| TPACK-ISE | 8 | I can plan and implement science instructional scenarios incorporating the steps of a successful internet-based information search or problem solving, such as defining the problem to be solved, researching information, presenting the information. (ISE.T.A2) | 0.94 |
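The Cronbach’s alpha values in the tables above are internal-consistency estimates for each component scale. As a reminder of how such a value is usually obtained, the following is a minimal sketch of the standard formula, alpha = k/(k − 1) · (1 − Σ item variances / variance of the sum score), where k is the number of items. The function name `cronbach_alpha` and the example ratings are hypothetical and purely illustrative; they are not the study's data, and the software the authors actually used is not stated here.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents' item ratings.

    scores: list of rows, one row per respondent, one value per item.
    Uses sample variances (n - 1 in the denominator).
    """
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    # Variance of each item across respondents (columns of the matrix).
    item_variances = [variance(column) for column in zip(*scores)]
    # Variance of each respondent's sum score across all items.
    total_variance = variance([sum(row) for row in scores])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings: three respondents, two items (illustration only).
example_ratings = [[4, 5], [2, 3], [3, 3]]
alpha = cronbach_alpha(example_ratings)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items of a scale vary together, which is the sense in which the reported coefficients between 0.80 and 0.94 are read.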

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kotzebue, L.v.; Meier, M.; Finger, A.; Kremser, E.; Huwer, J.; Thoms, L.-J.; Becker, S.; Bruckermann, T.; Thyssen, C. The Framework DiKoLAN (Digital Competencies for Teaching in Science Education) as Basis for the Self-Assessment Tool DiKoLAN-Grid. Educ. Sci. 2021, 11, 775. https://doi.org/10.3390/educsci11120775
