The Continuous Intention to Use E-Learning, from Two Different Perspectives

Abstract

1. Introduction

2. Theoretical Framework and Hypotheses

2.1. Technological Pedagogical Content Knowledge (TPACK)

2.2. Technology Self-Efficacy (TSE)

2.3. Technology Acceptance Model (TAM)

2.4. Perceived Organizational Support (POS)

2.5. Controlled Motivation (CTRLM)

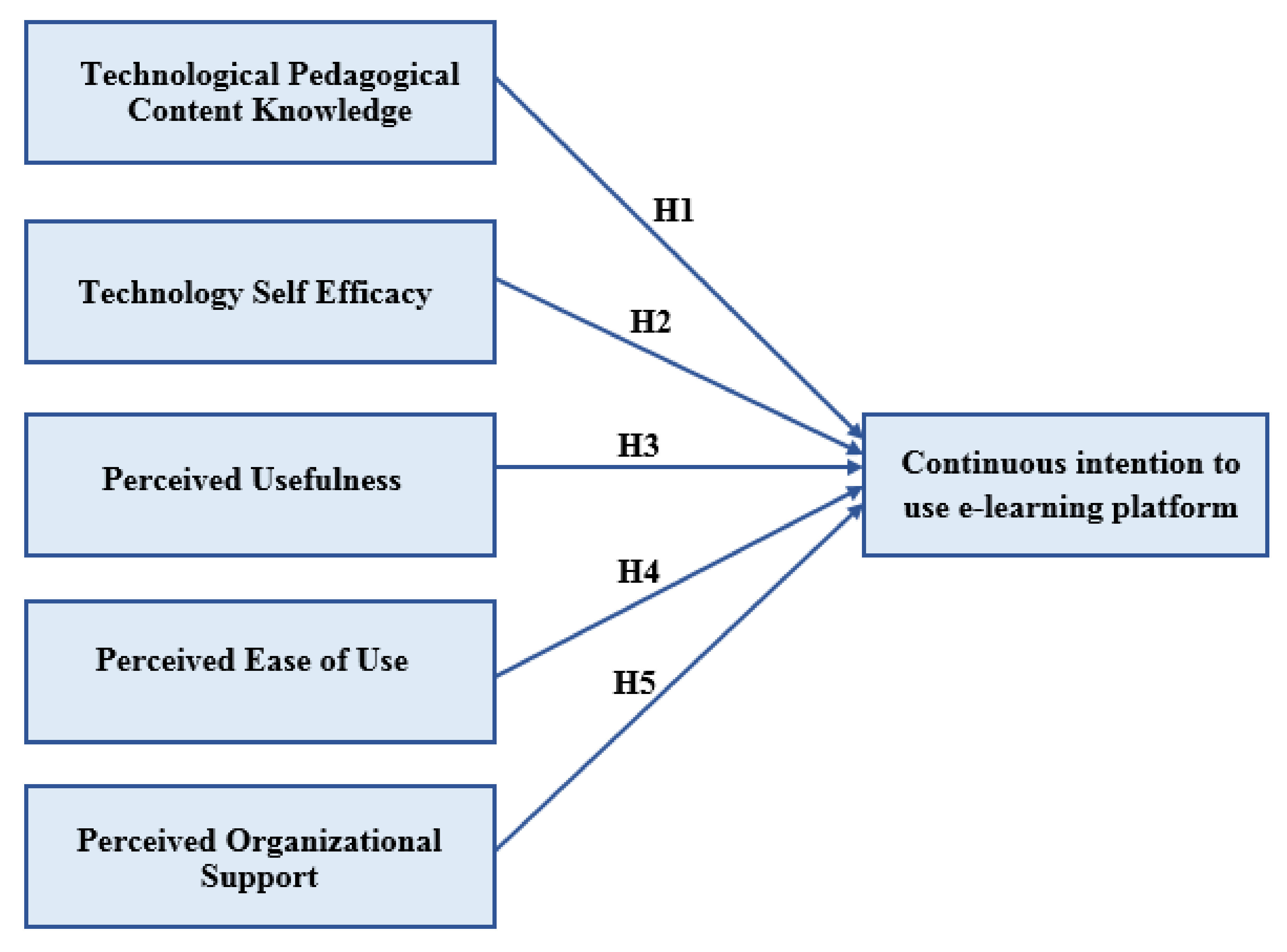

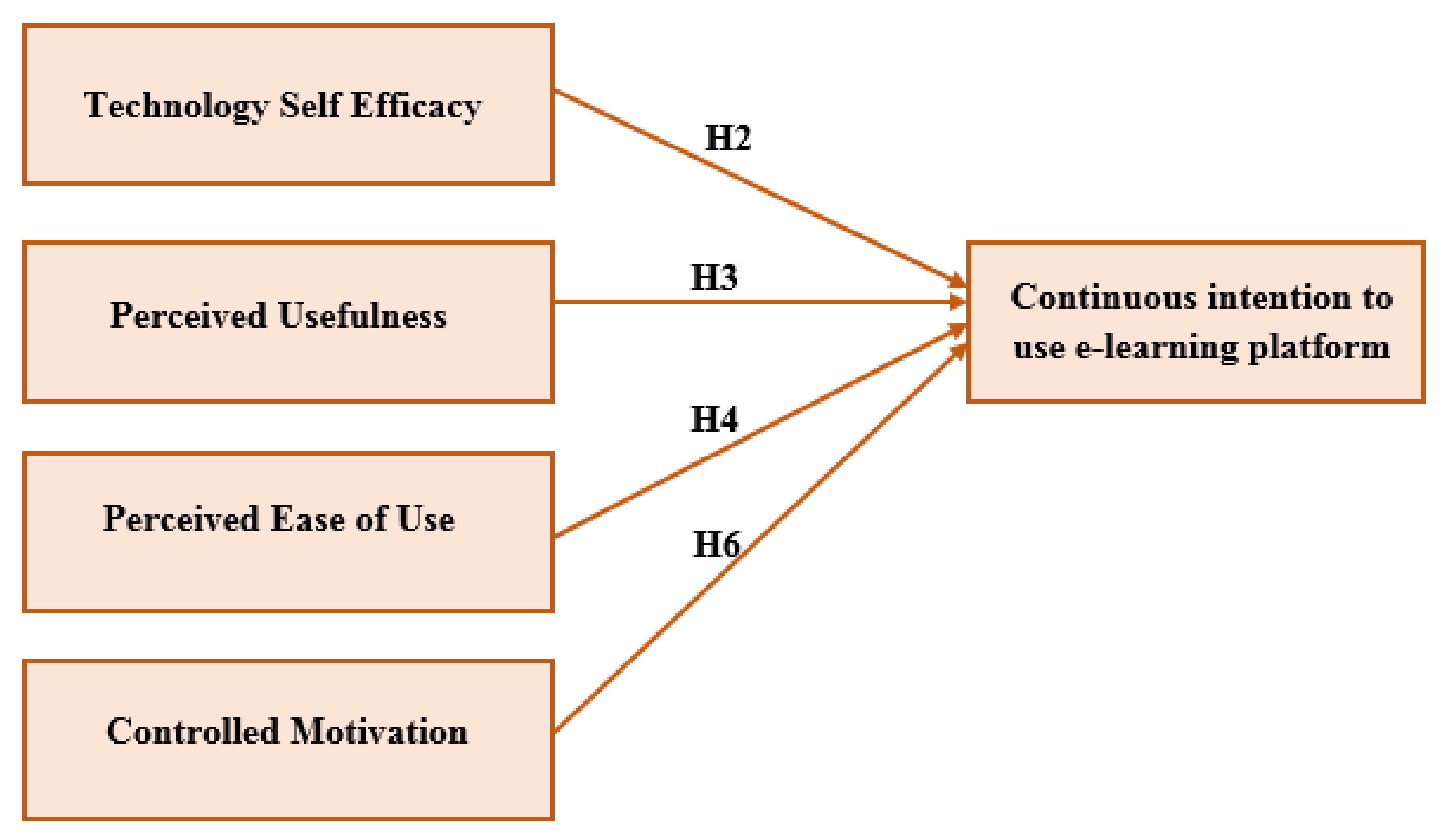

2.6. The Proposed Research Models

3. Methodology

3.1. Participants

3.2. Data Collection

3.3. Students’ Personal Information/Demographic Data

3.4. Study Instrument

3.5. Pilot Study for the Questionnaire

3.6. Survey Structure

4. Findings and Discussion

4.1. Data Analysis

4.2. Convergent Validity

4.3. Discriminant Validity

4.4. Model Fit

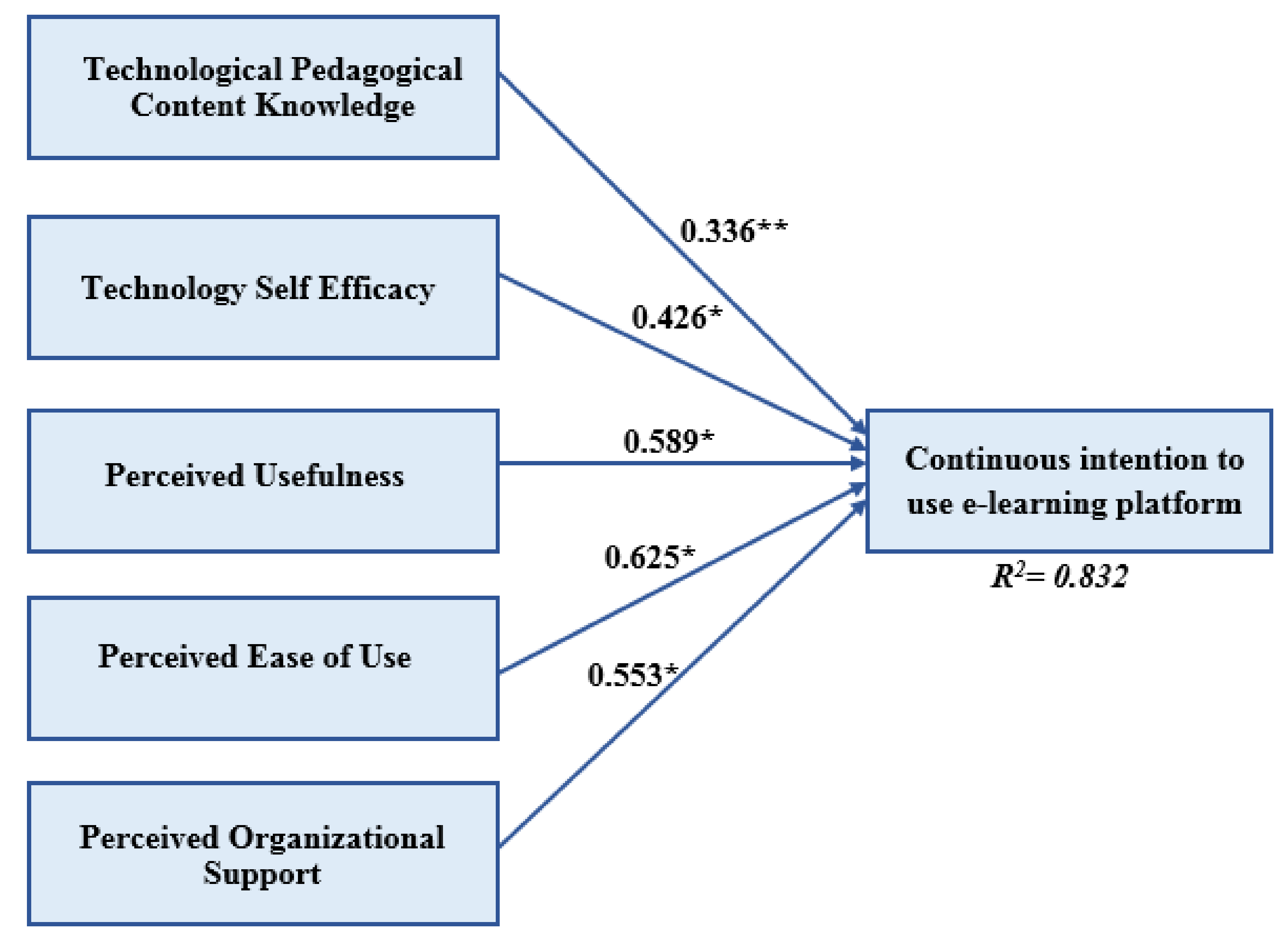

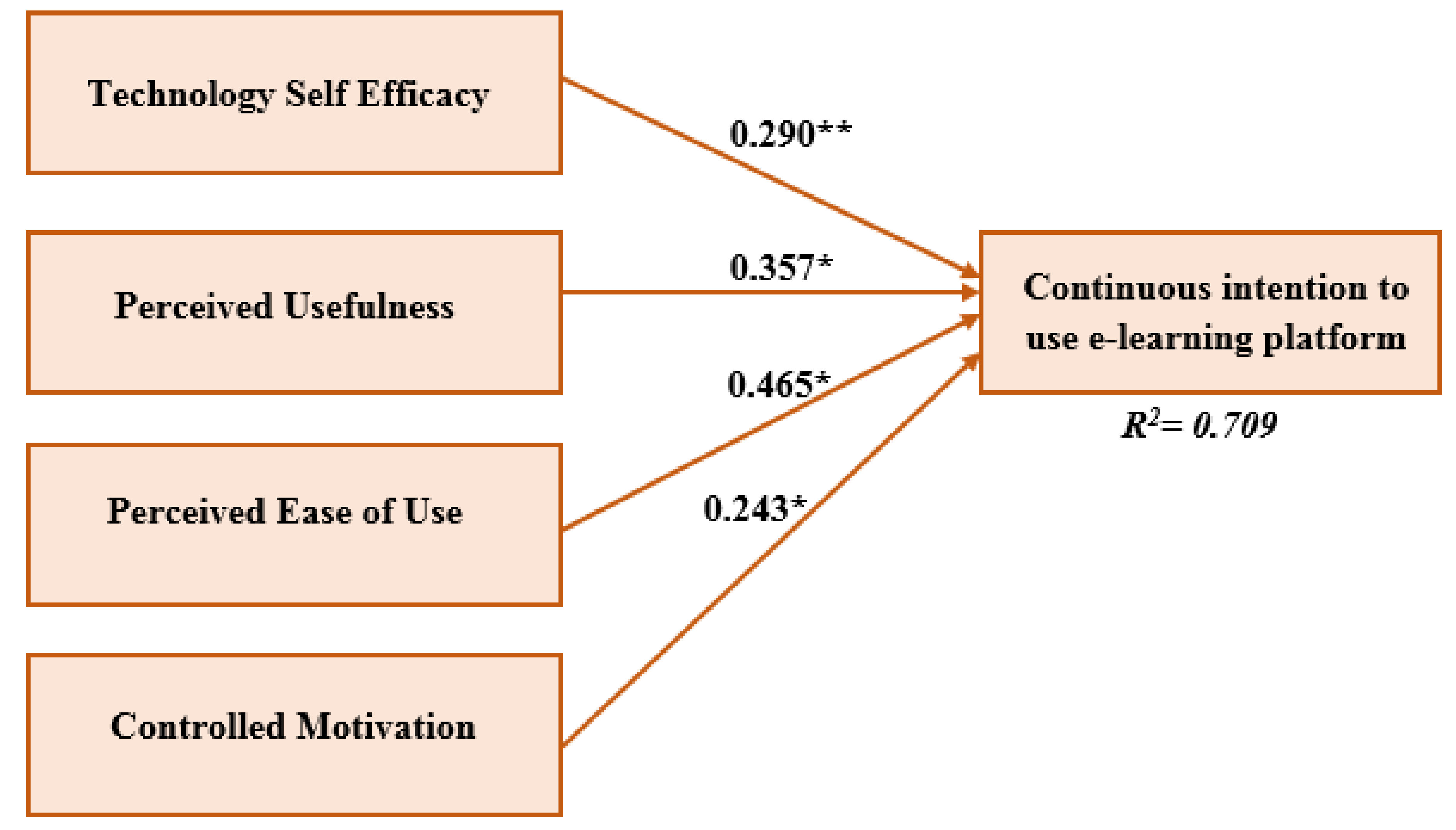

4.5. Hypotheses Testing Using PLS-SEM

5. Discussion and Conclusions

5.1. Practical Implications

5.2. Limitations and Further Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Wagner, N.; Hassanein, K.; Head, M. Who is responsible for e-learning success in higher education? A stakeholders’ analysis. J. Educ. Technol. Soc. 2008, 11, 26–36. [Google Scholar]

- Kituyi, G.; Tusubira, I. A Framework for the Integration of E-learning in Higher Education Institutions in Developing Countries. Int. J. Educ. Dev. Using ICT 2013, 9, 19–36. [Google Scholar]

- Luo, N.; Zhang, M.; Qi, D. Effects of Different Interactions on Students’ Sense of Community in E-learning Environment. Comput. Educ. 2017, 115, 153–160. [Google Scholar] [CrossRef]

- Hong, J.-C.; Tai, K.-H.; Hwang, M.-Y.; Kuo, Y.-C.; Chen, J.-S. Internet Cognitive Failure Relevant to Users’ Satisfaction with Content and Interface Design to Reflect Continuance Intention to Use a Government E-learning System. Comput. Human Behav. 2017, 66, 353–362. [Google Scholar] [CrossRef]

- Alghizzawi, M.; Habes, M.; Salloum, S.A.; Ghani, M.A.; Mhamdi, C.; Shaalan, K. The Effect of Social Media Usage on Students’ E-learning Acceptance in Higher Education: A case study from the United Arab Emirates. Int. J. Inf. Technol. Lang. Stud. 2019, 3, 13–26. [Google Scholar]

- Alshurideh, M.; al Kurdi, B.; Salloum, S.A. Examining the Main Mobile Learning System Drivers’ Effects: A Mix Empirical Examination of Both the Expectation-Confirmation Model (ECM) and the Technology Acceptance Model (TAM). In Proceedings of the 5th International Conference on Advanced Intelligent Systems and Informatics (AISI’19), Cairo, Egypt, 26–28 October 2019; Volume 1058. [Google Scholar]

- Salloum, S.A.; Al-Emran, M.; Habes, M.; Alghizzawi, M.; Ghani, M.A.; Shaalan, K. Understanding the Impact of Social Media Practices on E-Learning Systems Acceptance. In Proceedings of the 5th International Conference on Advanced Intelligent Systems and Informatics (AISI’19), Cairo, Egypt, 26–28 October 2019; Volume 1058. [Google Scholar]

- Alshurideh, M.; Salloum, S.A.; al Kurdi, B.; Monem, A.A.; Shaalan, K. Understanding the quality determinants that influence the intention to use the mobile learning platforms: A practical study. Int. J. Interact. Mob. Technol. 2019, 13, 157–183. [Google Scholar] [CrossRef]

- Al-Emran, M.; Arpaci, I.; Salloum, S.A. An empirical examination of continuous intention to use m-learning: An integrated model. Educ. Inf. Technol. 2020, 25, 2899–2918. [Google Scholar] [CrossRef]

- Salloum, S.A.; Shaalan, K. Investigating Students’ Acceptance of E-Learning System in Higher Educational Environments in the UAE: Applying the Extended Technology Acceptance Model (TAM); The British University in Dubai: Dubai, UAE, 2018. [Google Scholar]

- Salloum, S.A.; Alhamad, A.Q.M.; Al-Emran, M.; Monem, A.A.; Shaalan, K. Exploring Students’ Acceptance of E-Learning Through the Development of a Comprehensive Technology Acceptance Model. IEEE Access 2019, 7, 128445–128462. [Google Scholar] [CrossRef]

- Muqtadiroh, F.A.; Nisafani, A.S.; Saraswati, R.M.; Herdiyanti, A. Analysis of User Resistance Towards Adopting E-Learning. Procedia Comput. Sci. 2019, 161, 123–132. [Google Scholar] [CrossRef]

- Tawafak, R.M.; Romli, A.B.T.; Arshah, R.b.A.; Malik, S.I. Framework design of university communication model (UCOM) to enhance continuous intentions in teaching and e-learning process. Educ. Inf. Technol. 2019, 25, 817–843. [Google Scholar] [CrossRef]

- al Kurdi, B.; Alshurideh, M.; Salloum, S.A. Investigating a theoretical framework for e-learning technology acceptance. Int. J. Electr. Comput. Eng. 2020, 10, 6484–6496. [Google Scholar] [CrossRef]

- Al-Maroof, R.S.; Salloum, S.A. An Integrated Model of Continuous Intention to Use of Google Classroom. In Recent Advances in Intelligent Systems and Smart Applications; Springer: Cham, Switzerland, 2020; Volume 295. [Google Scholar]

- Al-Maroof, R.A.; Arpaci, I.; Al-Emran, M.; Salloum, S.A.; Shaalan, K. Examining the Acceptance of WhatsApp Stickers Through Machine Learning Algorithms. In Recent Advances in Intelligent Systems and Smart Applications; Springer: Cham, Switzerland, 2020; Volume 295. [Google Scholar]

- Al-Maroof, R.S.; Salloum, S.A.; AlHamadand, A.Q.M.; Shaalan, K. A Unified Model for the Use and Acceptance of Stickers in Social Media Messaging. In International Conference on Advanced Intelligent Systems and Informatics; Springer: Cham, Switzerland, 2019; pp. 370–381. [Google Scholar]

- Al Kurdi, B.; Alshurideh, M.; Salloum, S.A.; Obeidat, Z.M.; Al-dweeri, R.M. An Empirical Investigation into Examination of Factors Influencing University Students’ Behavior towards Elearning Acceptance Using SEM Approach. Int. J. Interact. Mob. Technol. 2020, 14, 19–41. [Google Scholar] [CrossRef]

- Al-Maroof, R.S.; Salloum, S.A.; Al Hamadand, A.Q.; Shaalan, K. Understanding an Extension Technology Acceptance Model of Google Translation: A Multi-Cultural Study in United Arab Emirates. Int. J. Interact. Mob. Technol. 2020, 14, 157–178. [Google Scholar] [CrossRef]

- Demetriadis, S.; Barbas, A.; Molohides, A.; Palaigeorgiou, G.; Psillos, D.; Vlahavas, I.; Tsoukalas, I.; Pombortsis, A. ‘Cultures in negotiation’: Teachers’ acceptance/resistance attitudes considering the infusion of technology into schools. Comput. Educ. 2003, 41, 19–37. [Google Scholar] [CrossRef]

- Buckenmeyer, J. Revisiting teacher adoption of technology: Research implications and recommendations for successful full technology integration. Coll. Teach. Methods Styles J. 2008, 4, 7–10. [Google Scholar] [CrossRef]

- Aldunate, R.; Nussbaum, M. Teacher adoption of technology. Comput. Human Behav. 2013, 29, 519–524. [Google Scholar] [CrossRef]

- Eyyam, R.; Yaratan, H.S. Impact of use of technology in mathematics lessons on student achievement and attitudes. Soc. Behav. Personal. Int. J. 2014, 42, 31S–42S. [Google Scholar] [CrossRef]

- Nelson, M.J.; Hawk, N.A. The impact of field experiences on prospective preservice teachers’ technology integration beliefs and intentions. Teach. Teach. Educ. 2020, 89, 103006. [Google Scholar] [CrossRef]

- Scherer, R.; Siddiq, F.; Tondeur, J. All the same or different? Revisiting measures of teachers’ technology acceptance. Comput. Educ. 2020, 143, 103656. [Google Scholar] [CrossRef]

- Raes, A.; Depaepe, F. A longitudinal study to understand students’ acceptance of technological reform. When experiences exceed expectations. Educ. Inf. Technol. 2020, 25, 533–552. [Google Scholar] [CrossRef]

- Salloum, S.A.; Al-Emran, M.; Shaalan, K.; Tarhini, A. Factors affecting the E-learning acceptance: A case study from UAE. Educ. Inf. Technol. 2019, 24, 509–530. [Google Scholar] [CrossRef]

- Cheng, Y. Antecedents and consequences of e-learning acceptance. Inf. Syst. J. 2011, 21, 269–299. [Google Scholar] [CrossRef]

- Al-Gahtani, S.S. Empirical investigation of e-learning acceptance and assimilation: A structural equation model. Appl. Comput. Inform. 2016, 12, 27–50. [Google Scholar] [CrossRef]

- Sunny, S.; Patrick, L.; Rob, L. Impact of cultural values on technology acceptance and technology readiness. Int. J. Hosp. Manag. 2019, 77, 89–96. [Google Scholar] [CrossRef]

- Jacobs, J.V.; Hettinger, L.J.; Huang, Y.H.; Jeffries, S.; Lesch, M.F.; Simmons, L.A.; Verma, S.K.; Willetts, J.L. Employee acceptance of wearable technology in the workplace. Appl. Ergon. 2019, 78, 148–156. [Google Scholar] [CrossRef]

- Scherer, R.; Siddiq, F.; Tondeur, J. The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education. Comput. Educ. 2019, 128, 13–35. [Google Scholar] [CrossRef]

- Estriegana, R.; Medina-Merodio, J.-A.; Barchino, R. Student acceptance of virtual laboratory and practical work: An extension of the technology acceptance model. Comput. Educ. 2019, 135, 1–14. [Google Scholar] [CrossRef]

- Taherdoost, H. A review of technology acceptance and adoption models and theories. Procedia Manuf. 2018, 22, 960–967. [Google Scholar] [CrossRef]

- Dehghani, M.; Kim, K.J.; Dangelico, R.M. Will smartwatches last? Factors contributing to intention to keep using smart wearable technology. Telemat. Inform. 2018, 35, 480–490. [Google Scholar] [CrossRef]

- Obal, M. What drives post-adoption usage? Investigating the negative and positive antecedents of disruptive technology continuous adoption intentions. Ind. Mark. Manag. 2017, 63, 42–52. [Google Scholar] [CrossRef]

- Choi, B.K.; Yeo, W.-D.; Won, D. The implication of ANT (Actor-Network-Theory) methodology for R&D policy in open innovation paradigm. Knowl. Manag. Res. Pract. 2018, 16, 315–326. [Google Scholar]

- Ifinedo, P. Examining students’ intention to continue using blogs for learning: Perspectives from technology acceptance, motivational, and social-cognitive frameworks. Comput. Human Behav. 2017, 72, 189–199. [Google Scholar] [CrossRef]

- Mishra, P.; Koehler, M.J. Technological pedagogical content knowledge: A framework for teacher knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054. [Google Scholar] [CrossRef]

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Bandura, A. Social Foundations of Thought and Action. In The Health Psychology Reader; SAGE: Englewood Cliffs, NJ, USA, 1986. [Google Scholar]

- Eisenberger, R.; Huntington, R.; Hutchison, S.; Sowa, D. Perceived organizational support. J. Appl. Psychol. 1986, 71, 500. [Google Scholar] [CrossRef]

- Vallerand, R.J. Toward a hierarchical model of intrinsic and extrinsic motivation. In Advances in Experimental Social Psychology; Elsevier: Amsterdam, The Netherlands, 1997; Volume 29, pp. 271–360. [Google Scholar]

- Ryan, R.M.; Deci, E.L. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 2000, 55, 68. [Google Scholar] [CrossRef]

- Al-Emran, M.; Salloum, S.A. Students’ Attitudes Towards the Use of Mobile Technologies in e-Evaluation. Int. J. Interact. Mob. Technol. 2017, 11, 195–202. [Google Scholar] [CrossRef]

- Sabah, N.M. Motivation factors and barriers to the continuous use of blended learning approach using Moodle: Students’ perceptions and individual differences. Behav. Inf. Technol. 2020, 39, 875–898. [Google Scholar] [CrossRef]

- Al-Busaidi, K.A. An empirical investigation linking learners’ adoption of blended learning to their intention of full e-learning. Behav. Inf. Technol. 2013, 32, 1168–1176. [Google Scholar] [CrossRef]

- Al-Busaidi, K.A.; Al-Shihi, H. Key factors to instructors’ satisfaction of learning management systems in blended learning. J. Comput. High. Educ. 2012, 24, 18–39. [Google Scholar] [CrossRef]

- Hwang, I.-H.; Tsai, S.-J.; Yu, C.-C.; Lin, C.-H. An empirical study on the factors affecting continuous usage intention of double reinforcement interactive e-portfolio learning system. In Proceedings of the 2011 6th IEEE Joint International Information Technology and Artificial Intelligence Conference, Chongqing, China, 20–22 August 2011; Volume 1, pp. 246–249. [Google Scholar]

- Lin, K.-M. e-Learning continuance intention: Moderating effects of user e-learning experience. Comput. Educ. 2011, 56, 515–526. [Google Scholar] [CrossRef]

- Ho, C.-H. Continuance intention of e-learning platform: Toward an integrated model. Int. J. Electron. Bus. Manag. 2010, 8, 206. [Google Scholar]

- Lee, M.-C. Explaining and predicting users’ continuance intention toward e-learning: An extension of the expectation–confirmation model. Comput. Educ. 2010, 54, 506–516. [Google Scholar] [CrossRef]

- Shulman, L. Knowledge and teaching: Foundations of the new reform. Harv. Educ. Rev. 1987, 57, 1–23. [Google Scholar] [CrossRef]

- Schmidt, D.A.; Baran, E.; Thompson, A.D.; Mishra, P.; Koehler, M.J.; Shin, T.S. Technological pedagogical content knowledge (TPACK) the development and validation of an assessment instrument for preservice teachers. J. Res. Technol. Educ. 2009, 42, 123–149. [Google Scholar] [CrossRef]

- Chai, C.S.; Koh, J.H.L.; Tsai, C.-C.; Tan, L.L.W. Modeling primary school pre-service teachers’ Technological Pedagogical Content Knowledge (TPACK) for meaningful learning with information and communication technology (ICT). Comput. Educ. 2011, 57, 1184–1193. [Google Scholar] [CrossRef]

- Oner, D. A virtual internship for developing technological pedagogical content knowledge. Australas. J. Educ. Technol. 2020, 36, 27–42. [Google Scholar] [CrossRef]

- Koehler, M.; Mishra, P. What is technological pedagogical content knowledge (TPACK)? Contemp. Issues Technol. Teach. Educ. 2009, 9, 60–70. [Google Scholar] [CrossRef]

- Koehler, M.J.; Shin, T.S.; Mishra, P. How do we measure TPACK? Let me count the ways. In Educational Technology, Teacher Knowledge, and Classroom Impact: A Research Handbook on Frameworks and Approaches; IGI Global: Hershey, PA, USA, 2012; pp. 16–31. [Google Scholar]

- Lu, Y.-L.; Lien, C.-J. Are they learning or playing? Students’ perception traits and their learning self-efficacy in a game-based learning environment. J. Educ. Comput. Res. 2020, 57, 1879–1909. [Google Scholar] [CrossRef]

- Cai, J.; Yang, H.H.; Gong, D.; MacLeod, J.; Zhu, S. Understanding the continued use of flipped classroom instruction: A personal beliefs model in Chinese higher education. J. Comput. High. Educ. 2019, 31, 137–155. [Google Scholar] [CrossRef]

- Venkatesh, V. Determinants of perceived ease of use: Integrating control, intrinsic motivation, and emotion into the technology acceptance model. Inf. Syst. Res. 2000, 11, 342–365. [Google Scholar] [CrossRef]

- Chen, K.; Chen, J.V.; Yen, D.C. Dimensions of self-efficacy in the study of smart phone acceptance. Comput. Stand. Interfaces 2011, 33, 422–431. [Google Scholar] [CrossRef]

- Cebeci, U.; Ertug, A.; Turkcan, H. Exploring the determinants of intention to use self-checkout systems in super market chain and its application. Manag. Sci. Lett. 2020, 10, 1027–1036. [Google Scholar] [CrossRef]

- Bailey, A.A.; Pentina, I.; Mishra, A.S.; Mimoun, M.S.B. Mobile payments adoption by US consumers: An extended TAM. Int. J. Retail Distrib. Manag. 2017, 45, 626–640. [Google Scholar] [CrossRef]

- Fathema, N.; Shannon, D.; Ross, M. Expanding the Technology Acceptance Model (TAM) to Examine Faculty Use of Learning Management Systems (LMSs) In Higher Education Institutions. J. Online Learn. Teach. 2015, 11, 210–232. [Google Scholar]

- Lam, S.; Cheng, R.W.; Choy, H.C. School support and teacher motivation to implement project-based learning. Learn. Instr. 2010, 20, 487–497. [Google Scholar] [CrossRef]

- Kurup, V.; Hersey, D. The changing landscape of anesthesia education: Is Flipped Classroom the answer? Curr. Opin. Anesthesiol. 2013, 26, 726–731. [Google Scholar] [CrossRef]

- Missildine, K.; Fountain, R.; Summers, L.; Gosselin, K. Flipping the classroom to improve student performance and satisfaction. J. Nurs. Educ. 2013, 52, 597–599. [Google Scholar] [CrossRef]

- Bøe, T.; Gulbrandsen, B.; Sørebø, Ø. How to stimulate the continued use of ICT in higher education: Integrating information systems continuance theory and agency theory. Comput. Human Behav. 2015, 50, 375–384. [Google Scholar] [CrossRef]

- Bölen, M.C. Exploring the determinants of users’ continuance intention in smartwatches. Technol. Soc. 2020, 60, 101209. [Google Scholar] [CrossRef]

- Krejcie, R.V.; Morgan, D.W. Determining sample size for research activities. Educ. Psychol. Meas. 1970, 30, 607–610. [Google Scholar] [CrossRef]

- Chuan, C.L.; Penyelidikan, J. Sample size estimation using Krejcie and Morgan and Cohen statistical power analysis: A comparison. J. Penyelid. IPBL 2006, 7, 78–86. [Google Scholar]

- Bhattacherjee, A. Understanding information systems continuance: An expectation-confirmation model. MIS Q. 2001, 25, 351–370. [Google Scholar] [CrossRef]

- Almahamid, S.; Rub, F.A. Factors that determine continuance intention to use e-learning system: An empirical investigation. Int. Conf. Telecommun. Tech. Appl. Proc. CSIT 2011, 5, 242–246. [Google Scholar]

- Thiruchelvi, A.; Koteeswari, S. A conceptual framework of employees’ continuance intention to use e-learning system. Asian J. Res. Bus. Econ. Manag. 2013, 3, 14–20. [Google Scholar]

- Grandgenett, N.F. Perhaps a matter of imagination: TPCK in mathematics education. In Handbook of Technological Pedagogical Content Knowledge (TPCK) for Educators; Routledge: London, UK, 2008; pp. 145–166. [Google Scholar]

- Compeau, D.R.; Higgins, C.A. Computer self-efficacy: Development of a measure and initial test. MIS Q. 1995, 19, 189–211. [Google Scholar] [CrossRef]

- Nunnally, J.C.; Bernstein, I.H. Psychometric Theory; McGraw-Hill: New York, NY, USA, 1994. [Google Scholar]

- Kline, R.B. Principles and Practice of Structural Equation Modeling; Guilford Publications: New York, NY, USA, 2015. [Google Scholar]

- Dijkstra, T.K.; Henseler, J. Consistent and asymptotically normal PLS estimators for linear structural equations. Comput. Stat. Data Anal. 2015, 81, 10–23. [Google Scholar] [CrossRef]

- Hair, J.F.; Ringle, C.M.; Sarstedt, M. PLS-SEM: Indeed a silver bullet. J. Mark. Theory Pract. 2011, 19, 139–152. [Google Scholar] [CrossRef]

- Henseler, J.; Ringle, C.M.; Sinkovics, R.R. The use of partial least squares path modeling in international marketing. In New Challenges to International Marketing; Emerald Group Publishing Limited: Bingley, UK, 2009; pp. 277–319. [Google Scholar]

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models With Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39–50. [Google Scholar] [CrossRef]

- Trial, D. Model Fit. Available online: https://www.modelfit.com/ (accessed on 20 December 2020).

- Hair, J.F., Jr.; Hult, G.T.M.; Ringle, C.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: New York, NY, USA, 2016. [Google Scholar]

- Hu, L.; Bentler, P.M. Fit indices in covariance structure modeling: Sensitivity to underparameterized model misspecification. Psychol. Methods 1998, 3, 424. [Google Scholar] [CrossRef]

- Bentler, P.M.; Bonett, D.G. Significance tests and goodness of fit in the analysis of covariance structures. Psychol. Bull. 1980, 88, 588. [Google Scholar] [CrossRef]

- Lohmöller, J.B. Latent Variable Path Modeling with Partial Least Squares; Physica: Heidelberg, Germany, 1989. [Google Scholar]

- Henseler, J.; Dijkstra, T.K.; Sarstedt, M.; Ringle, C.M.; Diamantopoulos, A.; Straub, D.W.; Ketchen, D.J., Jr.; Hair, J.F.; Hult, G.T.M.; Calantone, R.J. Common beliefs and reality about PLS: Comments on Rönkkö and Evermann (2013). Organ. Res. Methods 2014, 17, 182–209. [Google Scholar] [CrossRef]

- Baranik, L.E.; Roling, E.A.; Eby, L.T. Why does mentoring work? The role of perceived organizational support. J. Vocat. Behav. 2010, 76, 366–373. [Google Scholar] [CrossRef] [PubMed]

- Aselage, J.; Eisenberger, R. Perceived organizational support and psychological contracts: A theoretical integration. J. Organ. Behav. Int. J. Ind. Occup. Organ. Psychol. Behav. 2003, 24, 491–509. [Google Scholar] [CrossRef]

- Stamper, C.L.; Johlke, M.C. The impact of perceived organizational support on the relationship between boundary spanner role stress and work outcomes. J. Manag. 2003, 29, 569–588. [Google Scholar]

- Mitchell, J.I.; Gagné, M.; Beaudry, A.; Dyer, L. The role of perceived organizational support, distributive justice and motivation in reactions to new information technology. Comput. Human Behav. 2012, 28, 729–738. [Google Scholar] [CrossRef]

- Alsofyani, M.M.; Aris, B.b.; Eynon, R.; Majid, N.A. A preliminary evaluation of short blended online training workshop for TPACK development using technology acceptance model. Turkish Online J. Educ. Technol. 2012, 11, 20–32. [Google Scholar]

- Siahaan, A.U.; Aji, S.B.; Antoni, C.; Handayani, Y. Online Social Learning Platform vs E-Learning for Higher Vocational Education in Purpose to English Test Preparation. In Proceedings of the 7th International Conference on English Language and Teaching (ICOELT 2019), Padang, Indonesia, 4–5 November 2019; pp. 76–82. [Google Scholar]

- Heidig, S.; Clarebout, G. Do pedagogical agents make a difference to student motivation and learning? Educ. Res. Rev. 2011, 6, 27–54. [Google Scholar] [CrossRef]

- Corbalan, G.; Kester, L.; van Merriënboer, J.J.G. Towards a personalized task selection model with shared instructional control. Instr. Sci. 2006, 34, 399–422. [Google Scholar] [CrossRef]

- Zaric, N.; Lukarov, V.; Schroder, U. A Fundamental Study for Gamification Design: Exploring Learning Tendencies’ Effects. Int. J. Serious Games 2020, 7, 3–25. [Google Scholar] [CrossRef]

- Hanif, M. Students’ Self-Regulated Learning in Iconic Mobile Learning System in English Cross-Disciplined Program. Anatol. J. Educ. 2020, 5, 121–130. [Google Scholar] [CrossRef]

- Fathema, N.; Sutton, K.L. Factors influencing faculty members’ Learning Management Systems adoption behavior: An analysis using the Technology Acceptance Model. Int. J. Trends Econ. Manag. Technol. 2013, 2, 20–28. [Google Scholar]

- Panda, S.; Mishra, S. E-Learning in a Mega Open University: Faculty attitude, barriers and motivators. EMI Educ. Media Int. 2007, 44, 323–338. [Google Scholar] [CrossRef]

| Authors/Reference | Target Population | Objective/Goal | Models Adopted |
|---|---|---|---|
| [45] | Students | To explain the f-variables that affect continued use of m-learning. | TAM, Theory of Planned Behavior (TPB), and Expectation Confirmation Model (ECM). |
| [46] | Students | To examine students’ continuous use of blended learning, with reference to behavioral attitudes, motivations, and barriers. | TAM, TPB and self-determination theory (SDT). |
| [47] | Students | To make a connection between learners’ adoption and satisfaction with LMS in blended learning in relation to certain learners’ personal characteristics in terms of continuous use of the e-learning environment. | TAM and satisfaction factor (SAT). |
| [48] | Instructors | To examine the influential factors which may contribute to instructors’ satisfaction with LMS use in a blended learning atmosphere. | LMS, system and instructors’ characteristics that are derived from well-established factors. |
| [49] | Students | To investigate students’ behavior of continuance intentions to use the double reinforcement interactive e-portfolio learning system. | TAM and IS continuance post-acceptance model (IS-TAM). |
| [50] | Learners | To investigate the basic determinants behind the continuous intention to use e-learning. | TAM and Negative Critical Incident (NCI). |
| [51] | People chosen randomly through a high-traffic website | To investigate the motivational factors that affect the synthesized model that is composed of a combination of TAM, ECM, COGM and SDM. | TAM, ECM and cognitive model (COGM). |
| [52] | Technology users | To investigate and predict the main reason behind users’ intentions to continue using e-learning. | ECM, TAM, and TPB. |

| Criterion | Factor | Frequency | Percentage |
|---|---|---|---|
| Gender | Female | 175 | 47% |
| | Male | 197 | 53% |
| Age | Between 18 and 29 | 122 | 33% |
| | Between 30 and 39 | 98 | 26% |
| | Between 40 and 49 | 88 | 24% |
| | Between 50 and 59 | 64 | 17% |
| Faculties | Faculty of Engineering and IT | 145 | 39% |
| | Faculty of Education | 129 | 35% |
| | Faculty of Business and Law | 98 | 26% |
| Education qualification | Bachelor | 182 | 49% |
| | Master | 157 | 42% |
| | Doctorate | 33 | 9% |
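
The percentages in the demographics table can be cross-checked against the reported frequencies. The sketch below is only an illustration: the dictionary layout, the shortened labels, and the `percentages` helper are assumptions introduced here, and rounding to the nearest whole percent is assumed because the table reports whole numbers.

```python
# Frequencies copied from the demographics table above.
# Group labels are shortened for readability (an assumption of this sketch).
groups = {
    "Gender": {"Female": 175, "Male": 197},
    "Age": {"18-29": 122, "30-39": 98, "40-49": 88, "50-59": 64},
    "Faculty": {"Engineering and IT": 145, "Education": 129, "Business and Law": 98},
    "Qualification": {"Bachelor": 182, "Master": 157, "Doctorate": 33},
}


def percentages(counts):
    """Convert raw frequencies into whole-number percentages of the group total."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}


for criterion, counts in groups.items():
    # Print the group total next to the recomputed percentages.
    print(criterion, sum(counts.values()), percentages(counts))
```

Each criterion sums to the same number of respondents, which is a quick way to confirm that no category was dropped when the table was transcribed.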

| Constructs | Number of Items | Source |
|---|---|---|
| CU | 2 | [73,74,75] |
| CTRLM | 5 | [43] |
| TPACK | 4 | [39,76] |
| TSE | 7 | [41,77] |
| PEOU | 3 | [40] |
| PU | 4 | [40] |
| POS | 5 | [42] |

| Constructs | Cronbach’s Alpha |
|---|---|
| CU | 0.756 |
| TPACK | 0.779 |
| TSE | 0.864 |
| PEOU | 0.889 |
| PU | 0.734 |
| POS | 0.852 |

| Constructs | Cronbach’s Alpha |
|---|---|
| CU | 0.872 |
| CTRLM | 0.881 |
| TSE | 0.798 |
| PEOU | 0.736 |
| PU | 0.797 |

| Constructs | Items | Factor Loading | Cronbach’s Alpha | CR | ρA | AVE |
|---|---|---|---|---|---|---|
| Technology Self-Efficacy | TSE1 | 0.775 | 0.874 | 0.799 | 0.832 | 0.536 |
| | TSE2 | 0.736 | | | | |
| | TSE3 | 0.820 | | | | |
| | TSE4 | 0.901 | | | | |
| | TSE5 | 0.756 | | | | |
| | TSE6 | 0.723 | | | | |
| | TSE7 | 0.797 | | | | |
| Technological Pedagogical Content Knowledge | TPACK1 | 0.711 | 0.829 | 0.882 | 0.791 | 0.552 |
| | TPACK2 | 0.869 | | | | |
| | TPACK3 | 0.909 | | | | |
| | TPACK4 | 0.790 | | | | |
| Perceived Ease of Use | PEOU1 | 0.829 | 0.844 | 0.812 | 0.817 | 0.661 |
| | PEOU2 | 0.847 | | | | |
| | PEOU3 | 0.746 | | | | |
| Perceived Usefulness | PU1 | 0.734 | 0.816 | 0.828 | 0.825 | 0.623 |
| | PU2 | 0.766 | | | | |
| | PU3 | 0.889 | | | | |
| | PU4 | 0.850 | | | | |
| Perceived Organizational Support | POS1 | 0.729 | 0.863 | 0.814 | 0.883 | 0.718 |
| | POS2 | 0.848 | | | | |
| | POS3 | 0.758 | | | | |
| | POS4 | 0.819 | | | | |
| | POS5 | 0.878 | | | | |
| Continuous intention to use e-learning platform | CU1 | 0.796 | 0.815 | 0.876 | 0.898 | 0.673 |
| | CU2 | 0.801 | | | | |
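
A table like the one above is usually read against a few rule-of-thumb cut-offs. The following sketch screens the reported values; the cut-offs (0.70 for loadings, Cronbach's alpha and CR, 0.50 for AVE) are assumed here as commonly cited conventions and are not stated in this section, and the dictionary layout and function name are illustrative only.

```python
# Per-construct statistics copied from the table above.
# Cut-offs are assumed rules of thumb, not values taken from this section.
THRESHOLDS = {"alpha": 0.70, "cr": 0.70, "ave": 0.50}
MIN_LOADING = 0.70

constructs = {
    "TSE": {"loadings": [0.775, 0.736, 0.820, 0.901, 0.756, 0.723, 0.797],
            "alpha": 0.874, "cr": 0.799, "ave": 0.536},
    "TPACK": {"loadings": [0.711, 0.869, 0.909, 0.790],
              "alpha": 0.829, "cr": 0.882, "ave": 0.552},
    "PEOU": {"loadings": [0.829, 0.847, 0.746],
             "alpha": 0.844, "cr": 0.812, "ave": 0.661},
    "PU": {"loadings": [0.734, 0.766, 0.889, 0.850],
           "alpha": 0.816, "cr": 0.828, "ave": 0.623},
    "POS": {"loadings": [0.729, 0.848, 0.758, 0.819, 0.878],
            "alpha": 0.863, "cr": 0.814, "ave": 0.718},
    "CU": {"loadings": [0.796, 0.801],
           "alpha": 0.815, "cr": 0.876, "ave": 0.673},
}


def convergent_validity_issues(data):
    """Return (construct, statistic, value) for every value below its cut-off."""
    issues = []
    for name, stats in data.items():
        for loading in stats["loadings"]:
            if loading < MIN_LOADING:
                issues.append((name, "loading", loading))
        for key, cutoff in THRESHOLDS.items():
            if stats[key] < cutoff:
                issues.append((name, key, stats[key]))
    return issues


issues = convergent_validity_issues(constructs)
print(issues)
```

With the values reported above the list comes back empty, meaning every loading and reliability statistic clears the assumed cut-offs.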

| Constructs | Items | Factor Loading | Cronbach’s Alpha | CR | ρA | AVE |
|---|---|---|---|---|---|---|
| Technology Self-Efficacy | TSE1 | 0.726 | 0.782 | 0.833 | 0.823 | 0.705 |
| | TSE2 | 0.826 | | | | |
| | TSE3 | 0.710 | | | | |
| | TSE4 | 0.868 | | | | |
| | TSE5 | 0.746 | | | | |
| | TSE6 | 0.733 | | | | |
| Perceived Ease of Use | PEOU1 | 0.763 | 0.895 | 0.800 | 0.836 | 0.559 |
| | PEOU2 | 0.890 | | | | |
| | PEOU3 | 0.849 | | | | |
| Perceived Usefulness | PU1 | 0.793 | 0.856 | 0.879 | 0.808 | 0.696 |
| | PU2 | 0.709 | | | | |
| | PU3 | 0.873 | | | | |
| | PU4 | 0.821 | | | | |
| Controlled Motivation | CTRL1 | 0.832 | 0.805 | 0.796 | 0.807 | 0.700 |
| | CTRL2 | 0.802 | | | | |
| | CTRL3 | 0.875 | | | | |
| | CTRL4 | 0.810 | | | | |
| | CTRL5 | 0.796 | | | | |
| Continuous intention to use e-learning platform | CU1 | 0.725 | 0.878 | 0.818 | 0.816 | 0.509 |
| | CU2 | 0.878 | | | | |

| | TSE | TPACK | PEOU | PU | POS | CU |
|---|---|---|---|---|---|---|
| TSE | 0.876 | | | | | |
| TPACK | 0.165 | 0.845 | | | | |
| PEOU | 0.125 | 0.253 | 0.802 | | | |
| PU | 0.569 | 0.487 | 0.558 | 0.790 | | |
| POS | 0.187 | 0.202 | 0.291 | 0.115 | 0.787 | |
| CU | 0.369 | 0.198 | 0.378 | 0.383 | 0.178 | 0.803 |
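
Under the Fornell-Larcker criterion [87], each diagonal entry of the matrix above should exceed every correlation in its row and column. A minimal sketch of that comparison, using the values as reported (the list layout and the function name are assumptions made for illustration):

```python
# Lower-triangular matrix copied from the table above:
# diagonal entries on the main diagonal, inter-construct correlations below it.
labels = ["TSE", "TPACK", "PEOU", "PU", "POS", "CU"]
lower = [
    [0.876],
    [0.165, 0.845],
    [0.125, 0.253, 0.802],
    [0.569, 0.487, 0.558, 0.790],
    [0.187, 0.202, 0.291, 0.115, 0.787],
    [0.369, 0.198, 0.378, 0.383, 0.178, 0.803],
]


def fornell_larcker_violations(names, tri):
    """List construct pairs whose correlation is not below both diagonal entries."""
    violations = []
    for i, row in enumerate(tri):
        for j in range(i):
            corr = abs(row[j])
            # Compare against the diagonal of both constructs in the pair.
            if corr >= tri[i][i] or corr >= tri[j][j]:
                violations.append((names[i], names[j], row[j]))
    return violations


violations = fornell_larcker_violations(labels, lower)
print(violations)
```

No pair is flagged for the matrix above. The same function can be applied unchanged to any other lower-triangular matrix of this shape.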

| | TSE | PEOU | PU | CTRL | CU |
|---|---|---|---|---|---|
| TSE | 0.768 | | | | |
| PEOU | 0.368 | 0.801 | | | |
| PU | 0.267 | 0.229 | 0.887 | | |
| CTRL | 0.649 | 0.492 | 0.399 | 0.844 | |
| CU | 0.422 | 0.327 | 0.302 | 0.188 | 0.870 |

| | TSE | TPACK | PEOU | PU | POS | CU |
|---|---|---|---|---|---|---|
| TSE | | | | | | |
| TPACK | 0.560 | | | | | |
| PEOU | 0.136 | 0.487 | | | | |
| PU | 0.266 | 0.363 | 0.556 | | | |
| POS | 0.296 | 0.200 | 0.270 | 0.544 | | |
| CU | 0.389 | 0.635 | 0.378 | 0.638 | 0.555 | |
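
Heterotrait-monotrait (HTMT) ratios such as those above are typically compared with a fixed cut-off. The sketch below assumes the conservative value of 0.85, which is a common convention and not a figure stated in this section; the pair-keyed dictionary and the `htmt_flags` name are likewise illustrative.

```python
# HTMT ratios copied from the table above, keyed by (row, column) construct pair.
htmt = {
    ("TPACK", "TSE"): 0.560,
    ("PEOU", "TSE"): 0.136, ("PEOU", "TPACK"): 0.487,
    ("PU", "TSE"): 0.266, ("PU", "TPACK"): 0.363, ("PU", "PEOU"): 0.556,
    ("POS", "TSE"): 0.296, ("POS", "TPACK"): 0.200,
    ("POS", "PEOU"): 0.270, ("POS", "PU"): 0.544,
    ("CU", "TSE"): 0.389, ("CU", "TPACK"): 0.635, ("CU", "PEOU"): 0.378,
    ("CU", "PU"): 0.638, ("CU", "POS"): 0.555,
}


def htmt_flags(ratios, cutoff=0.85):
    """Return the construct pairs whose HTMT ratio reaches the assumed cut-off."""
    return sorted(pair for pair, value in ratios.items() if value >= cutoff)


flagged = htmt_flags(htmt)
worst_pair = max(htmt, key=htmt.get)  # pair with the largest ratio
print(flagged, worst_pair, htmt[worst_pair])
```

The largest ratio in the table is the CU and PU pair at 0.638, so nothing is flagged at the assumed cut-off.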

| | TSE | PEOU | PU | CTRL | CU |
|---|---|---|---|---|---|
| TSE | | | | | |
| PEOU | 0.232 | | | | |
| PU | 0.506 | 0.436 | | | |
| CTRL | 0.392 | 0.457 | 0.503 | | |
| CU | 0.697 | 0.609 | 0.210 | 0.264 | |

| | Complete Model | |
|---|---|---|
| | Saturated Model | Estimated Model |
| SRMR | 0.031 | 0.041 |
| d_ULS | 0.786 | 3.216 |
| d_G | 0.565 | 0.535 |
| Chi-Square | 466.736 | 473.348 |
| NFI | 0.624 | 0.627 |
| RMS Theta | 0.073 | |

| | Complete Model | |
|---|---|---|
| | Saturated Model | Estimated Model |
| SRMR | 0.012 | 0.031 |
| d_ULS | 0.605 | 2.317 |
| d_G | 0.516 | 0.506 |
| Chi-Square | 461.646 | 472.347 |
| NFI | 0.633 | 0.642 |
| RMS Theta | 0.061 | |

| Constructs | R² | Results |
|---|---|---|
| Continuous intention to use e-learning platform | 0.832 | High |

| Constructs | R² | Results |
|---|---|---|
| Continuous intention to use e-learning platform | 0.709 | High |

| H | Relationship | Path | t-Value | p-Value | Direction | Decision |
|---|---|---|---|---|---|---|
| H1 | TPACK -> CU | 0.336 | 12.223 | 0.001 | Positive | Supported ** |
| H2 | TSE -> CU | 0.426 | 5.269 | 0.026 | Positive | Supported * |
| H3 | PU -> CU | 0.589 | 6.716 | 0.018 | Positive | Supported * |
| H4 | PEOU -> CU | 0.625 | 5.584 | 0.023 | Positive | Supported * |
| H5 | POS -> CU | 0.553 | 16.108 | 0.000 | Positive | Supported ** |

| H | Relationship | Path | t-Value | p-Value | Direction | Decision |
|---|---|---|---|---|---|---|
| H2 | TSE -> CU | 0.290 | 14.578 | 0.000 | Positive | Supported ** |
| H3 | PEOU -> CU | 0.357 | 3.116 | 0.043 | Positive | Supported * |
| H4 | PU -> CU | 0.465 | 2.646 | 0.035 | Positive | Supported * |
| H6 | CTRLM -> CU | 0.243 | 4.361 | 0.033 | Positive | Supported * |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Saeed Al-Maroof, R.; Alhumaid, K.; Salloum, S. The Continuous Intention to Use E-Learning, from Two Different Perspectives. Educ. Sci. 2021, 11, 6. https://doi.org/10.3390/educsci11010006
