Patrimonializarte: A Heritage Education Program Based on New Technologies and Local Heritage

Abstract

1. Introduction

1.1. The Evaluation of Heritage Education Programs

1.2. An Evaluation of New Technologies in Heritage Education

1.3. An Approach to Evaluation Research in Education

1.4. The Present Study

- An evaluation of the design of a heritage education program based on the use of ICT and local heritage. Is the design of the program coherent and relevant?

- The effectiveness of the use of ICT in the development of heritage education programs. What are students’ assessments of the methodology used?

2. Materials and Methods

2.1. Context and Participants

2.2. Procedure Prior to Implementation

- Information and planning: The teachers inform the pupils of the plan and methodology to be followed throughout the academic year.

- Performance of classroom tasks: The pupils carry out the planned activities in order to achieve the aims proposed in the program.

- Carrying out of joint tasks: Activities in which the three levels converge (transmitting results from one year group to another, school celebrations, exhibitions open to the school and local community).

- Evaluation: Evaluation of the knowledge acquired by the pupils and of the program by both pupils and teachers.

- Knowledge transfer: The final products of the program are presented to both the school and the local community via digital resources.

2.3. The Design of the Program

- The needs assessment (detection, prioritisation, and selection of primary needs, and the design of the program based on them);

- The initial evaluation (of the design and the tools);

- The implementation of the program;

- The formative evaluation (changes, adjustments and improvements to the program);

- The summative evaluation (analysis of the results and effects of the program);

- Meta-evaluation.

- To design activities based on heritage elements close to the students in order to stimulate their motivation and sense of identification.

- To include activities that involve the use of digital resources in order to encourage the pupils’ motivation.

2.4. Tools

3. Results

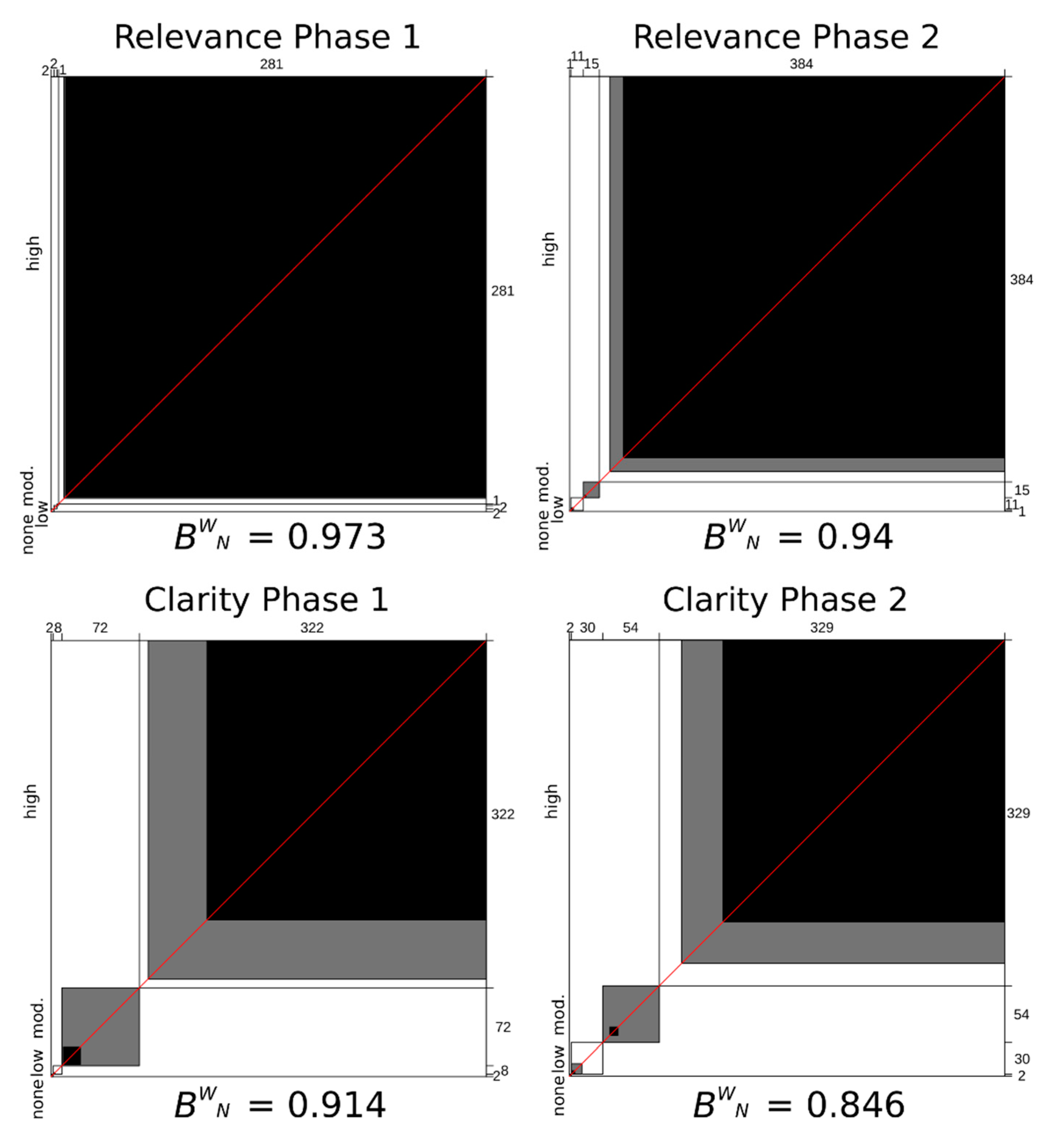

3.1. Analysis

3.2. Evaluation of the Design

3.3. Evaluation of Digital Resources by the Students

3.4. Evaluation of Digital Resources by Teaching Staff

4. Discussion and Conclusions

4.1. Program Design

4.2. Digital Resources

4.3. Main Conclusions

Author Contributions

Funding

Conflicts of Interest

| Phase | Components |
|---|---|
| Needs assessment (detection, prioritisation and selection of primary needs) | Planning: contact with schools |
| | Meeting with participating teachers |
| | Design of the program based on needs |
| Initial evaluation | Coherence and relevance of the program |
| | Tools |
| Implementation (one academic year, September to June) | Infant education |
| | Primary education |
| | Secondary education |
| Formative evaluation | Assessment of student knowledge |
| | Evaluation of partial achievement of the program by teachers and families |
| | Changes, adjustments and improvements to the program |
| Summative evaluation | Assessment of student knowledge |
| | Evaluation of the final results and effects of the program by teachers, families and local authorities |
| Meta-evaluation | |

| School | Year and level | Pupils | Teachers | External agents: fathers, mothers, legal guardians | External agents: local authorities |
|---|---|---|---|---|---|
| School 1 | 6th infants | 15 | 1 | 22 | 2 |
| | 5th primary | 10 | 1 | | |
| | 2nd secondary | 12 | 7 | | |
| School 2 | 6th infants | 24 | 1 | 21 | 2 |
| | 2nd primary | 25 | 1 | | |
| | 3rd primary | 25 | 1 | | |

| Level | Total of pupils per level | Total of teachers per level |
|---|---|---|
| Infants | 39 | 2 |
| Primary | 60 | 3 |
| Secondary | 12 | 7 |

| Agent | Role in the program |
|---|---|
| Teaching staff | To review the content guide, activities, competences, teaching aims, criteria and standards of evaluation provided by the external evaluator in order to propose changes and improvements. |
| | To establish the number of work sessions and the annual calendar, to review the tasks and grouping of pupils for the work of the program. |
| | To monitor the degree to which the objectives of their part of the program are achieved. |
| | To guide the pupils towards independent learning, motivating them to work autonomously and offering all the information and resources needed during the implementation of the program. |
| | To evaluate the program according to the criteria and standards set out in the evaluation tools. |
| Coordinator of the program in the school | To call the participating teaching staff to the preparatory and monitoring meetings of the program and advise of any possible changes in the schedule. |
| | To collect the evaluation tools from the teachers. |
| | To resolve any doubts which may arise during the course of the program in collaboration with the external evaluator. |
| External evaluator | To draw up a guide to the program, with contents, activities, competences, teaching aims, criteria and standards of evaluation for each year group. |
| | To carry out participant observation during the implementation in order to check the evolution of the program, its problems and possible improvements. |
| | To provide all necessary help at each stage of the application of the education program and evaluate the entire process. |

| | Phase 1 | Phase 2 |
|---|---|---|
| Agents | Students; teachers; family; education community | Students; teachers; family; education community; local community; heritage managers |
| Objectives | Know; respect; preserve; understand; value; enjoy | Transmit; act; disseminate |
| Methodology | Activities to raise awareness and processes of identification; opening up to the family and education community | Activities for taking action regarding heritage and dissemination; general opening up |

Evaluation across the two phases: evaluation of needs and initial evaluation, formative evaluation, summative evaluation and meta-evaluation.

| Level | Activities Phase 1 | Activities Phase 2 |
|---|---|---|
| Infant Education (IE) | A1. Classroom work with digitalised old photographs: School, the passing of time | No activities relating to ICT were carried out |
| Primary Education (PE) | A2. Classroom work with digitalised old photographs: The local area and traditional professions | A6. Field trip to record audio-visual material: forgotten heritage |
| | A3. Field trip to work with photography: Perspectives and shots, changes and continuities in the local area | A7. Editing of audio-visual materials for dissemination with the CyberLink PowerDirector program |
| Secondary Education (SE) | A4. Classroom work with digitalised old photographs: Changes and continuities in the local area | A8. Work with the Geoaumentaty program: geolocation and geo-referencing of heritage elements researched by all the groups |
| | A5. Editing of audio-visual materials with the CyberLink PowerDirector program: Changes and continuities in the local area | A9. Work with the BlocksCAD and Ultimaker Cura programs for the editing and design of a 3D dolmen |

| Tool 1-Pretest | Tool 2-Posttest |
|---|---|
| C1. Perceptions regarding heritage | C1. Perceptions regarding heritage |
| C2. The passing of time | C2. The passing of time |
| C3. Evaluation of the heritage | C3. Evaluation of the heritage |
| | C4. Significant learning |
| | C5. Evaluation of the methodology |

| Tool 1 | Tool 2 (10 Items) |
|---|---|
| C1. Signage | C1. Knowledge acquired regarding the heritage |
| C2. Preservation | C2. Awareness of preservation |
| C3. Human action | C3. Affective and emotional capacities |
| C4. Additional information | C4. Evaluation of the methodology |

| Phase 1 | Phase 2 |
|---|---|
| C1. Partial achievements of the program | C1. Efficiency. Final achievements |
| C2. Relevance of the development of the contents | C2. Effectiveness. Achievements of the process |
| C3. Usefulness of the activities | C3. Efficiency. Achievements in the improvement of the teaching |
| C4. Suitability of the resources | C4. Impact: overall results |
| C5. Suitability of the methodology | C5. Degree of satisfaction |
| C6. Degree of satisfaction | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Castro-Calviño, L.; Rodríguez-Medina, J.; Gómez-Carrasco, C.J.; López-Facal, R. Patrimonializarte: A Heritage Education Program Based on New Technologies and Local Heritage. Educ. Sci. 2020, 10, 176. https://doi.org/10.3390/educsci10070176
