Investigating Network Coherence to Assess Students’ Conceptual Understanding of Energy

Abstract

1. Introduction

2. Literature Review

2.1. Network Analysis

2.2. Network Analyses of Conceptual Knowledge

2.3. Energy as a Concept

3. Research Objectives and Hypotheses

- To what extent can network parameters derived from transcripts of spoken language explain students’ test scores after working on a series of energy-related experiments (taking into account students’ prior knowledge)?

- Do network parameters (e.g., coherence) and static parameters (e.g., number of nodes) differ in their predictive power regarding students’ test scores?

4. Materials and Methods

4.1. Setting and Sample

4.2. Methodology

| Initial statement | Cleaned statement |
|---|---|
| Yes, wait, well in the flashlight it is transformed from the chemical energy to electric energy. | flashlight transform chemical energy electric energy |
| Well it comes out of the plug as electric energy. | plug electric energy |
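The two examples suggest a cleaning step that drops filler and function words, reduces inflected forms to a base form ("transformed" becomes "transform"), and keeps the remaining domain terms in their original order. The exact cleaning rules and term list are not given here, so the following Python sketch is only an illustration: the lemma map `LEMMAS` and the whitelist `VOCABULARY` are hypothetical and were chosen solely to reproduce the two examples.

```python
import re

# Hypothetical lemma map: only the inflected forms needed for the two examples.
# A real pipeline would rely on a full lemmatizer or a curated dictionary.
LEMMAS = {"transformed": "transform", "transforms": "transform"}

# Hypothetical whitelist of concept and context terms that survive cleaning.
VOCABULARY = {"flashlight", "transform", "chemical", "energy", "electric", "plug"}


def clean_statement(text: str) -> str:
    """Reduce a transcribed statement to its domain terms, keeping word order."""
    tokens = re.findall(r"[a-z]+", text.lower())  # lowercase words, no punctuation
    lemmas = (LEMMAS.get(tok, tok) for tok in tokens)  # map inflected forms to base form
    return " ".join(lem for lem in lemmas if lem in VOCABULARY)  # drop non-domain words


if __name__ == "__main__":
    print(clean_statement("Yes, wait, well in the flashlight it is transformed "
                          "from the chemical energy to electric energy."))
    print(clean_statement("Well it comes out of the plug as electric energy."))
```

Repeated terms (here "energy") are kept at this stage; whether they matter depends on how the later network step counts them.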

5. Results

6. Discussion

6.1. Methodological Discussion

6.2. Theoretical Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Term | Frequency | Term | Frequency | Term | Frequency | Term | Frequency |
|---|---|---|---|---|---|---|---|
| energy | 954 | fall | 50 | wind generator | 23 | hydrogen | 16 |
| wind | 205 | expenditure | 48 | automobile | 22 | fossil | 15 |
| water | 202 | solar | 48 | glucose | 22 | fuel | 15 |
| convert | 191 | Naturally | 47 | submit | 21 | happen | 15 |
| electric | 187 | oxygen | 45 | drive | 21 | physics | 15 |
| kinetic | 147 | burn | 45 | steam | 21 | use | 15 |
| thermal | 141 | volt | 43 | natural gas | 21 | take up | 14 |
| efficiency | 135 | produce | 41 | transport | 21 | Fire | 14 |
| radiation | 122 | rotate | 38 | environment | 21 | electric wire | 14 |
| power plant | 115 | socket | 38 | steam engine | 20 | long-wave | 14 |
| chemically | 113 | to use | 34 | to get | 19 | alga | 13 |
| consume | 109 | potentially | 34 | shortwave | 19 | ecologically | 13 |
| usable | 93 | gas | 33 | to produce | 19 | earth | 12 |
| warmth | 92 | plant | 33 | resistance | 19 | plane | 12 |
| radiation | 89 | alcohol | 32 | effectively | 18 | power-to-gas | 12 |
| electricity | 78 | amp | 29 | sea | 18 | chlorophyll | 11 |
| need | 76 | | 29 | rub | 18 | formula | 11 |
| to save | 69 | petrol | 28 | sailing | 18 | lie | 11 |
| coal | 67 | light bulb | 28 | go | 17 | source | 11 |
| use | 67 | north | 28 | man | 17 | blow | 11 |
| move | 65 | warm | 28 | react | 17 | battery | 10 |
| fuel | 62 | to take | 27 | see | 17 | organic | 10 |
| arise | 59 | photosynthesis | 25 | pass | 17 | renewable | 10 |
| Sun | 54 | solar system | 24 | form of energy | 16 | dye | 10 |
| air | 52 | differentiate | 24 | put in | 16 | area | 10 |
| generator | 51 | calorific value | 23 | Life | 16 | release | 10 |
| high | 51 | force | 23 | material | 16 | conduct | 10 |
| methane | 10 | Wood | 7 | Kelvin | 5 | reflect | 3 |
| optimal | 10 | Kerosene | 7 | condense | 5 | south | 3 |
| spare | 10 | candle | 7 | Mix | 5 | gravure | 3 |
| temperature | 10 | vacuum cleaner | 7 | engine | 5 | separate | 3 |
| transfer | 10 | current | 7 | solarium | 5 | evaporate | 3 |
| absorb | 9 | work | 6 | columns | 5 | block | 2 |
| alternative | 9 | nuclear power | 6 | surfing | 5 | seal | 2 |
| spend | 9 | form | 6 | cover | 4 | plant | 2 |
| fishing | 9 | chloroplast | 6 | set fire | 4 | arrive | 2 |
| to run | 9 | heat | 6 | hit | 4 | warm up | 2 |
| food | 9 | device | 6 | run through | 4 | broadcast | 2 |
| network | 9 | global | 6 | to fly | 4 | camping | 2 |
| photovoltaics | 9 | House | 6 | free | 4 | feed | 2 |
| ship | 9 | Cook | 6 | focus | 4 | explode | 2 |
| turbine | 9 | carbon dioxide | 6 | start up | 3 | field | 2 |
| decompose | 9 | oven | 6 | bounce | 3 | climate change | 2 |
| burn | 8 | rotor | 6 | strain | 3 | carbon | 2 |
| jet | 8 | visible | 6 | tie | 3 | power | 2 |
| win | 8 | Act | 6 | biomass | 3 | by-product | 2 |
| heater | 8 | battery pack | 5 | butane | 3 | raw material | 2 |
| joule | 8 | to breathe | 5 | receive | 3 | begin | 2 |
| deliver | 8 | align | 5 | liquid | 3 | greenhouse effect | 2 |
| pump | 8 | dynamo | 5 | coast | 3 | change | 2 |
| seem to be | 8 | devalue | 5 | Afford | 3 | oil | 2 |
| dam | 8 | oil | 5 | metal | 3 | | |
| sheet | 7 | drive | 5 | molecule | 3 | | |
| produce | 7 | bicycle | 5 | product | 3 | | |
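The frequency table lists how often each term occurs across the cleaned transcripts. A minimal Python sketch of such a count is shown below. It rests on two assumptions. First, terms are separated by whitespace, so multi-word entries such as "power plant" would have to be merged into single tokens beforehand. Second, every occurrence counts, including repeats within one statement. Neither assumption is confirmed by the source. The helper name `term_frequencies` is hypothetical.

```python
from collections import Counter


def term_frequencies(cleaned_statements):
    """Count every occurrence of every term across all cleaned statements."""
    counts = Counter()
    for statement in cleaned_statements:
        counts.update(statement.split())  # repeats within a statement are all counted
    return counts


if __name__ == "__main__":
    freq = term_frequencies([
        "flashlight transform chemical energy electric energy",
        "plug electric energy",
    ])
    # Sorted by descending frequency, as in the table.
    for term, n in freq.most_common():
        print(term, n)
```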

Appendix C


| | Chemical | Electric | Energy | Flashlight | Transform |
|---|---|---|---|---|---|
| Chemical | 0 | 1 | 1 | 1 | 1 |
| Electric | - | 0 | 1 | 1 | 1 |
| Energy | - | - | 0 | 1 | 1 |
| Flashlight | - | - | - | 0 | 1 |
| Transform | - | - | - | - | 0 |

| | Chemical | Electric | Energy | Flashlight | Plug | Transform |
|---|---|---|---|---|---|---|
| Chemical | 0 | 1 | 1 | 1 | 0 | 1 |
| Electric | - | 0 | 2 | 1 | 1 | 1 |
| Energy | - | - | 0 | 1 | 1 | 1 |
| Flashlight | - | - | - | 0 | 0 | 1 |
| Plug | - | - | - | - | 0 | 0 |
| Transform | - | - | - | - | - | 0 |
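The two matrices appear to record, for each pair of distinct terms, the number of cleaned statements in which both terms occur. After the first statement every pair has weight 1. The second statement ("plug electric energy") adds the vertex "plug" and raises the electric/energy pair to 2. "Energy" occurs twice in the first statement but produces neither a self-loop nor a double count. The following Python sketch reproduces that counting rule. It assumes single-word, whitespace-separated terms, and the function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_network(statements):
    """Return (sorted vertices, pair weights) for a list of cleaned statements.

    Each statement adds 1 to every pair of distinct terms it contains.
    Repeated terms count once per statement and self-pairs are skipped,
    so the diagonal stays 0.
    """
    vertices = set()
    weights = Counter()
    for statement in statements:
        terms = sorted(set(statement.split()))
        vertices.update(terms)
        # combinations() on a sorted list yields pairs (a, b) with a < b.
        for a, b in combinations(terms, 2):
            weights[(a, b)] += 1
    return sorted(vertices), weights


def print_upper_triangle(vertices, weights):
    """Print in the tables' layout: 0 on the diagonal, '-' below it."""
    print(" | ".join([""] + vertices))
    for i, a in enumerate(vertices):
        cells = ["-" if j < i else "0" if j == i else str(weights[(a, b)])
                 for j, b in enumerate(vertices)]
        print(" | ".join([a] + cells))


if __name__ == "__main__":
    statements = [
        "flashlight transform chemical energy electric energy",
        "plug electric energy",
    ]
    vertices, weights = build_network(statements)
    print_upper_triangle(vertices, weights)
```

The length of `vertices` corresponds to the "Vertices" (number of nodes) parameter used later. Reading a missing pair from a `Counter` returns 0 without inserting it, which gives the zero cells.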

| | mean | sd | median | min | max | skew | kurtosis |
|---|---|---|---|---|---|---|---|
| Pre-test | 0.45 | 0.17 | 0.42 | 0.20 | 0.92 | 0.52 | −0.13 |
| Post-test | 0.61 | 0.16 | 0.64 | 0.28 | 0.92 | −0.30 | −0.53 |
| N words | 682.76 | 284.96 | 604.00 | 197 | 1456 | 0.66 | −0.25 |
| Vertices | 12.31 | 5.70 | 10.50 | 5 | 29 | 0.90 | −0.03 |
| Cnet | 316.66 | 355.84 | 159.43 | 7.32 | 1900.23 | 1.82 | 3.86 |

| | mean | sd | median | min | max | skew | kurtosis |
|---|---|---|---|---|---|---|---|
| N words | 2468.37 | 958.72 | 2425.00 | 969.00 | 4518.00 | 0.44 | −0.67 |
| Vertices | 35.33 | 16.48 | 35.00 | 6.00 | 65.00 | 0.01 | −1.19 |
| Cnet | 3297.32 | 2671.19 | 2664.93 | 1.00 | 9114.42 | 0.61 | −0.76 |
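The negative kurtosis values in these tables can only be excess kurtosis (raw kurtosis minus 3), because raw kurtosis is never below 1. The following Python sketch computes the reported columns with the moment-based estimators (sample skewness g1 and excess kurtosis g2). The source does not say which estimator was used, and bias-adjusted variants in statistical software give slightly different values for small samples. The function name `describe` is hypothetical.

```python
import statistics


def describe(values):
    """Mean, SD, median, range, skew, and excess kurtosis of a sample."""
    n = len(values)
    mean = statistics.fmean(values)
    # Central moments with divisor n (moment-based estimators g1 and g2).
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    return {
        "mean": mean,
        "sd": statistics.stdev(values),  # sample SD, divisor n - 1
        "median": statistics.median(values),
        "min": min(values),
        "max": max(values),
        "skew": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2 - 3,  # excess kurtosis: 0 for a normal distribution
    }


if __name__ == "__main__":
    # Hypothetical scores, not the study's data.
    print(describe([0.20, 0.35, 0.42, 0.48, 0.55, 0.92]))
```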

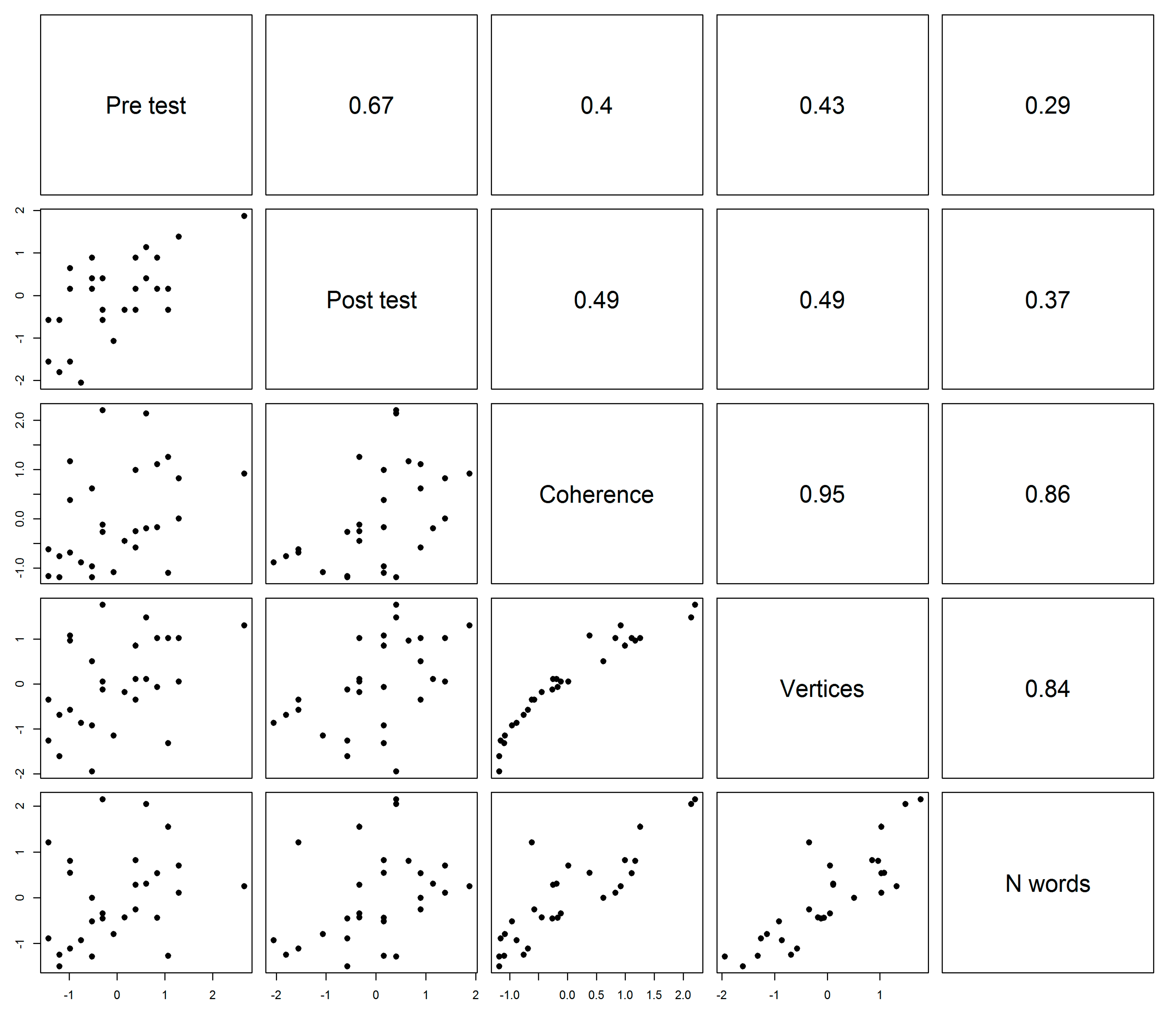

| | | Pre-test | Post-test | Coherence (concept + context) | Vertices (concept + context) | N words (concept + context) | Coherence (concept only) |
|---|---|---|---|---|---|---|---|
| Test scores | Post-test | 0.67 *** | - | - | - | - | - |
| Concept + context terms | Coherence | 0.40 * | 0.49 ** | - | - | - | - |
| | Vertices | 0.43 * | 0.49 ** | 0.95 *** | - | - | - |
| | N words | 0.29 | 0.37 | 0.86 *** | 0.84 *** | - | - |
| Concept terms only | Coherence | 0.34 | 0.49 ** | 0.99 *** | 0.96 *** | 0.84 *** | - |
| | Vertices | 0.40 * | 0.34 | 0.71 *** | 0.85 *** | 0.65 *** | 0.73 *** |
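Coherence and number of vertices correlate at r = 0.95 in the concept + context network, so a regression containing both would be strongly collinear. This may explain why they are entered in separate models. For a model with exactly two predictors, the variance inflation factor of each is 1 / (1 − r²). The following Python sketch computes Pearson's r and that two-predictor VIF. The function names are hypothetical, and the widely cited VIF threshold of 10 is a rule of thumb, not a criterion stated in the source.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length samples."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(r):
    """VIF of each predictor in a model with exactly two predictors correlated at r."""
    return 1.0 / (1.0 - r ** 2)


if __name__ == "__main__":
    # Correlations taken from the table (concept + context terms).
    print(round(vif_two_predictors(0.95), 2))  # coherence with vertices: 10.26
    print(round(vif_two_predictors(0.40), 2))  # pre-test with coherence: 1.19
```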

| | Estimate | Std. Error | β [95% CI] | p | |
|---|---|---|---|---|---|
| (Intercept) | 0.61 | 0.02 | | <0.001 | *** |
| Pre-Test Score | 0.09 | 0.02 | 0.57 [0.28, 0.86] | <0.001 | *** |
| Network Coherence | 0.05 | 0.02 | 0.31 [0.02, 0.60] | 0.048 | * |
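The regression tables list raw estimates alongside standardized β. Several values are consistent with z-standardized predictors and a post-test score kept on its original scale. The intercept (0.61) matches the post-test mean in the descriptive statistics. The ratio of estimate to β is close to the post-test SD of 0.16 (0.09 / 0.57 ≈ 0.16; 0.05 / 0.31 ≈ 0.16). This reading is an inference, not a statement from the source. The following Python sketch fits such a model with ordinary least squares. It uses hypothetical toy data and hypothetical function names, and it omits the confidence intervals.

```python
import numpy as np


def zscore(values):
    """Center on the mean and scale to unit sample SD (ddof=1)."""
    v = np.asarray(values, dtype=float)
    return (v - v.mean()) / v.std(ddof=1)


def fit_ols(y, *predictors):
    """OLS of y on z-standardized predictors.

    Returns (estimates, standard errors, standardized betas). Estimates and
    SEs list the intercept first; betas cover the predictors only.
    """
    y = np.asarray(y, dtype=float)
    X = np.column_stack([np.ones_like(y)] + [zscore(p) for p in predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    dof = len(y) - X.shape[1]
    sigma2 = (resid @ resid) / dof  # residual variance
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    # Predictors already have SD 1, so beta = b / sd(y).
    beta = coef[1:] / y.std(ddof=1)
    return coef, se, beta


if __name__ == "__main__":
    # Hypothetical toy data, not the study's data.
    pre = [0.30, 0.45, 0.40, 0.60, 0.55, 0.25, 0.70, 0.50]
    coh = [120.0, 300.0, 150.0, 600.0, 420.0, 80.0, 900.0, 200.0]
    post = [0.45, 0.60, 0.55, 0.75, 0.70, 0.40, 0.85, 0.60]
    est, se, beta = fit_ols(post, pre, coh)
    print("estimates:", est.round(3))
    print("SE:", se.round(3))
    print("beta:", beta.round(2))
```

Because the standardized predictors are centered, the fitted intercept always equals the mean of the outcome. With a single predictor, β reduces to Pearson's r.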

| | Estimate | Std. Error | β [95% CI] | p | |
|---|---|---|---|---|---|
| (Intercept) | 0.61 | 0.02 | | <0.001 | *** |
| Pre-Test Score | 0.09 | 0.02 | 0.54 [0.25, 0.84] | 0.001 | ** |
| Number of nodes | 0.06 | 0.03 | 0.34 [0.04, 0.63] | 0.034 | * |

| | Estimate | Std. Error | β [95% CI] | p | |
|---|---|---|---|---|---|
| (Intercept) | 0.61 | 0.02 | | <0.001 | *** |
| Pre-Test Score | 0.10 | 0.02 | 0.62 [0.34, 0.91] | <0.001 | *** |
| Number of words | 0.04 | 0.02 | 0.23 [−0.06, 0.52] | 0.127 | |

| | Estimate | Std. Error | β [95% CI] | p | |
|---|---|---|---|---|---|
| (Intercept) | 0.59 | 0.02 | | <0.001 | *** |
| Pre-Test Score | 0.10 | 0.02 | 0.60 [0.33, 0.87] | <0.001 | *** |
| Network Coherence | 0.08 | 0.03 | 0.40 [0.13, 0.67] | 0.005 | ** |

| | Estimate | Std. Error | β [95% CI] | p | |
|---|---|---|---|---|---|
| (Intercept) | 0.61 | 0.02 | | <0.001 | *** |
| Pre-Test Score | 0.10 | 0.03 | 0.61 [0.30, 0.92] | <0.001 | *** |
| Number of nodes | 0.04 | 0.03 | 0.20 [−0.11, 0.50] | 0.226 | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Podschuweit, S.; Bernholt, S. Investigating Network Coherence to Assess Students’ Conceptual Understanding of Energy. Educ. Sci. 2020, 10, 103. https://doi.org/10.3390/educsci10040103