Digital Learning Environments in Higher Education: A Literature Review of the Role of Individual vs. Social Settings for Measuring Learning Outcomes

Abstract

1. Introduction

2. Theoretical Perspectives on Learning and Research Question

2.1. Individual Perspectives on Learning

2.2. Social Perspectives on Learning

2.3. The Research Presented Here

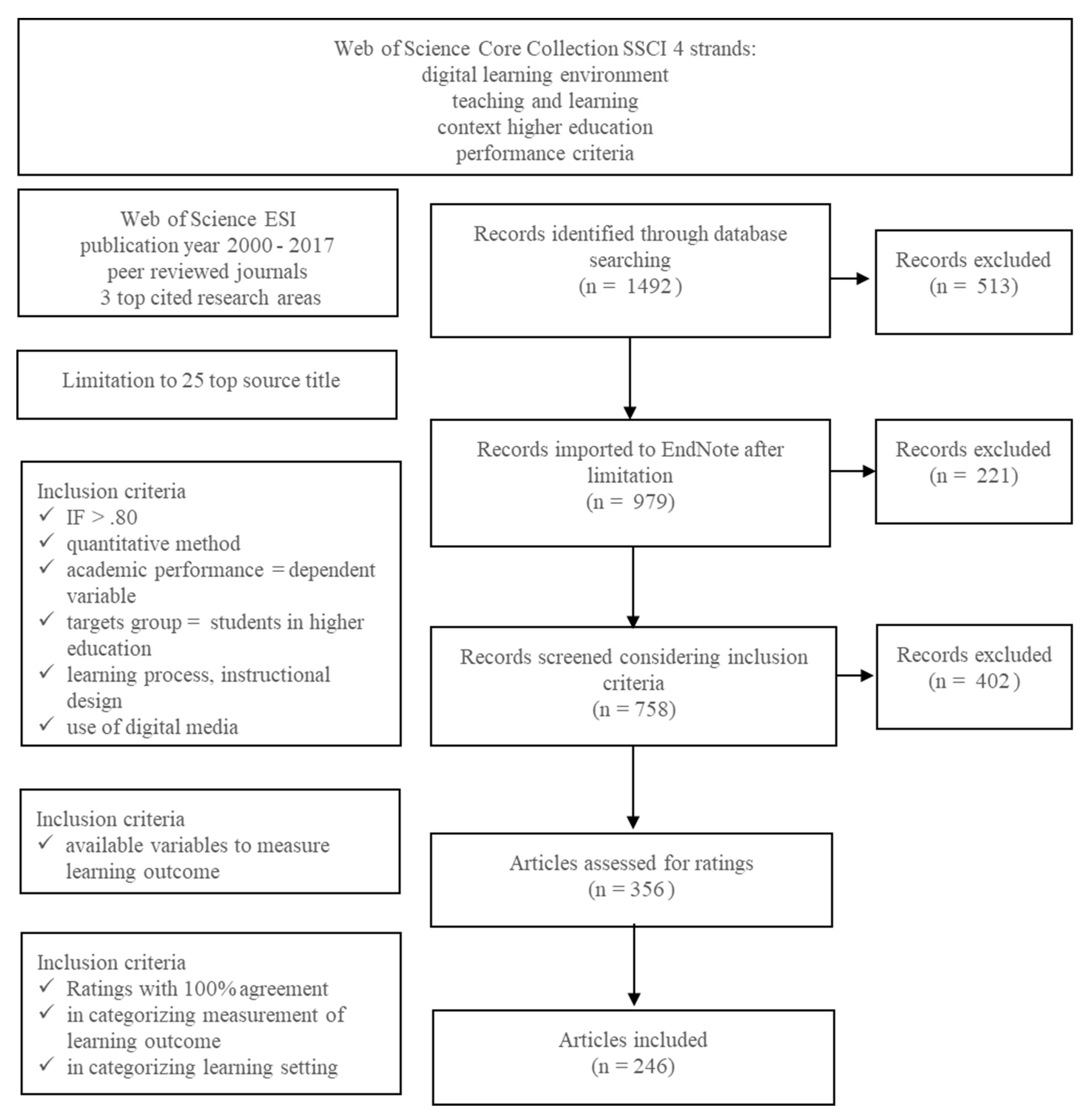

3. Method

3.1. Searching the Literature: Article Selection

3.2. Gathering Information from Studies: Coding Guide

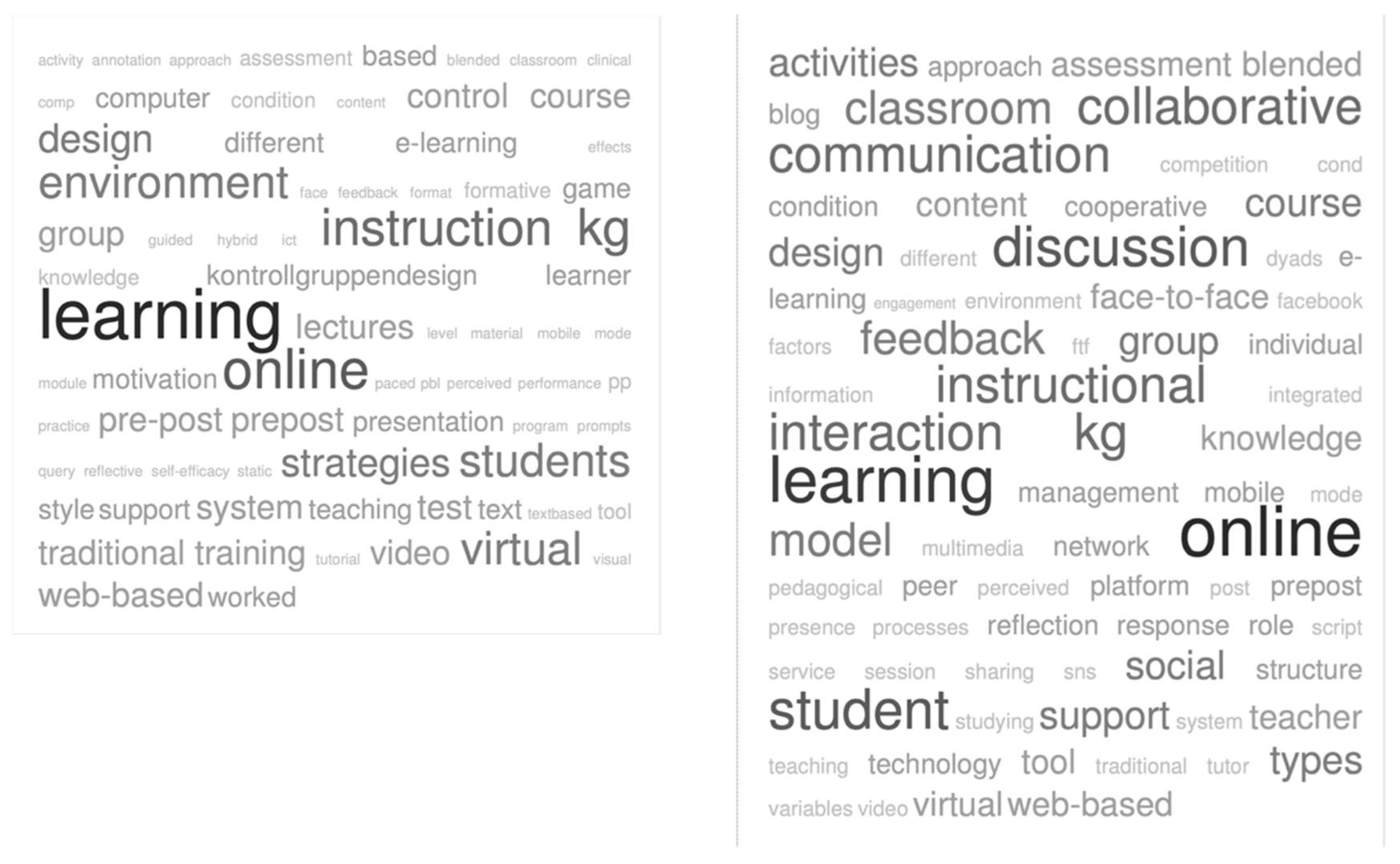

3.2.1. Learning Setting: Individual vs. Social Orientation

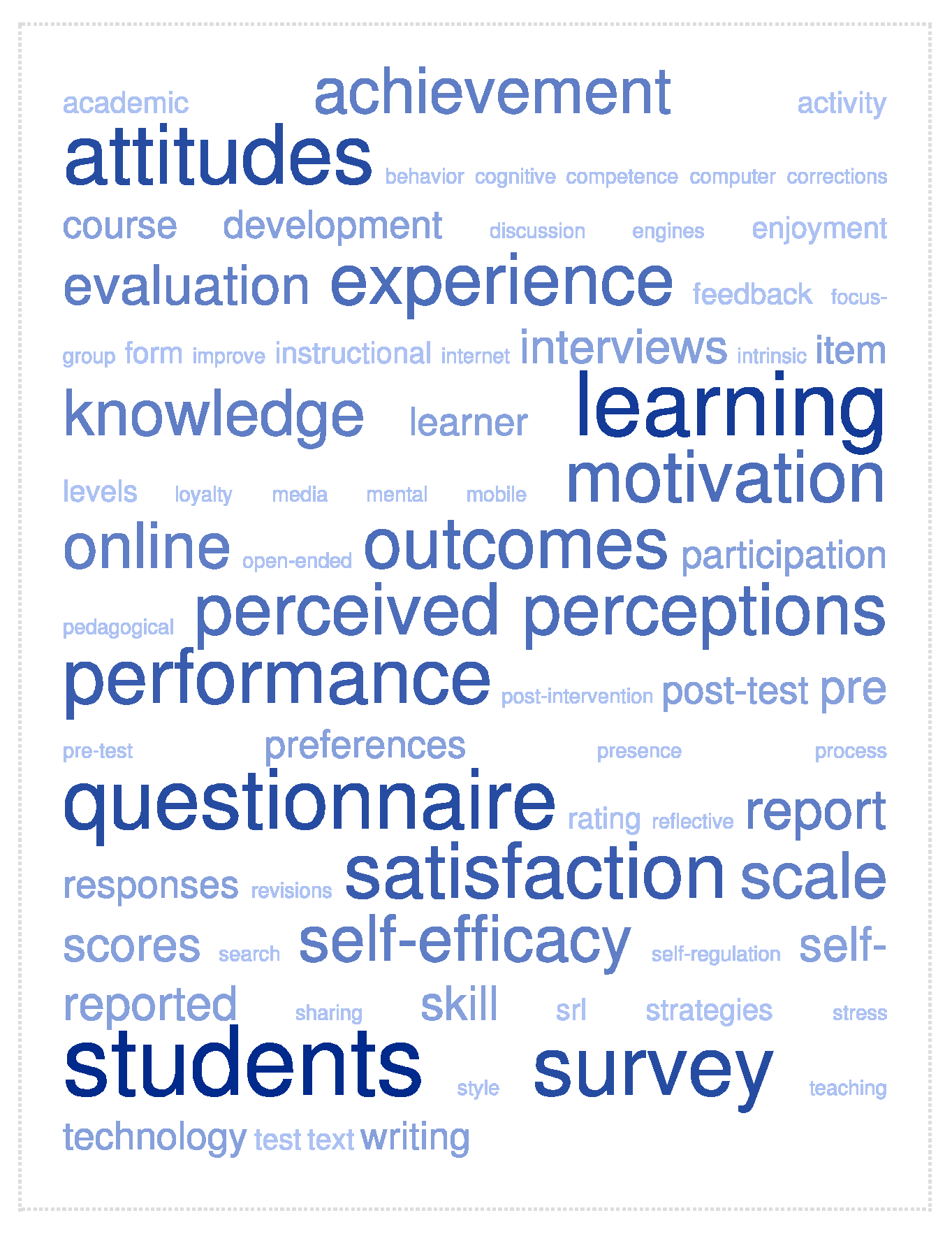

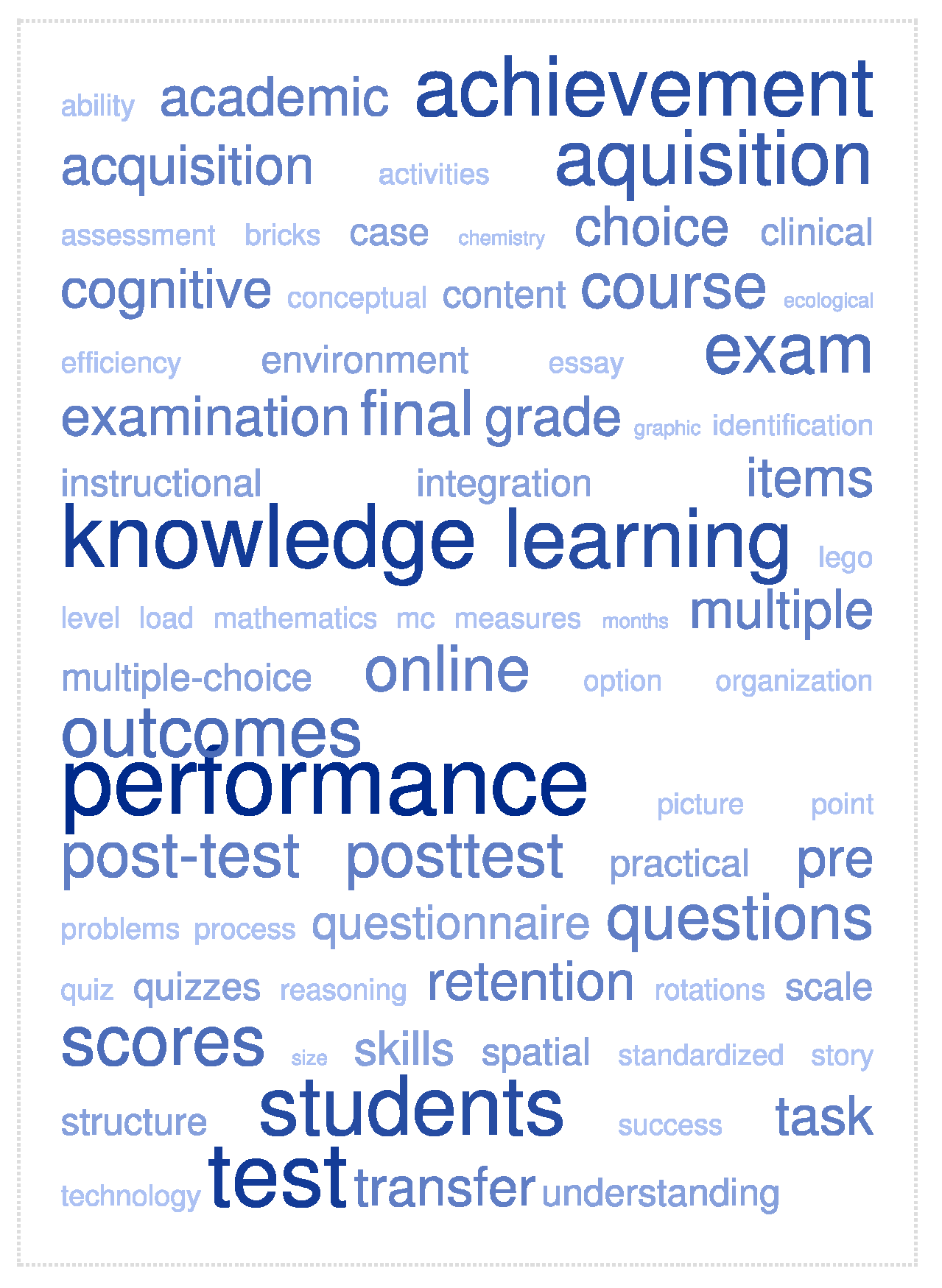

3.2.2. Measurements of Learning Outcomes

3.3. Evaluating the Quality of Studies: Rating Procedure

4. Results

4.1. Learning Settings

4.2. Measurements of Learning Outcomes

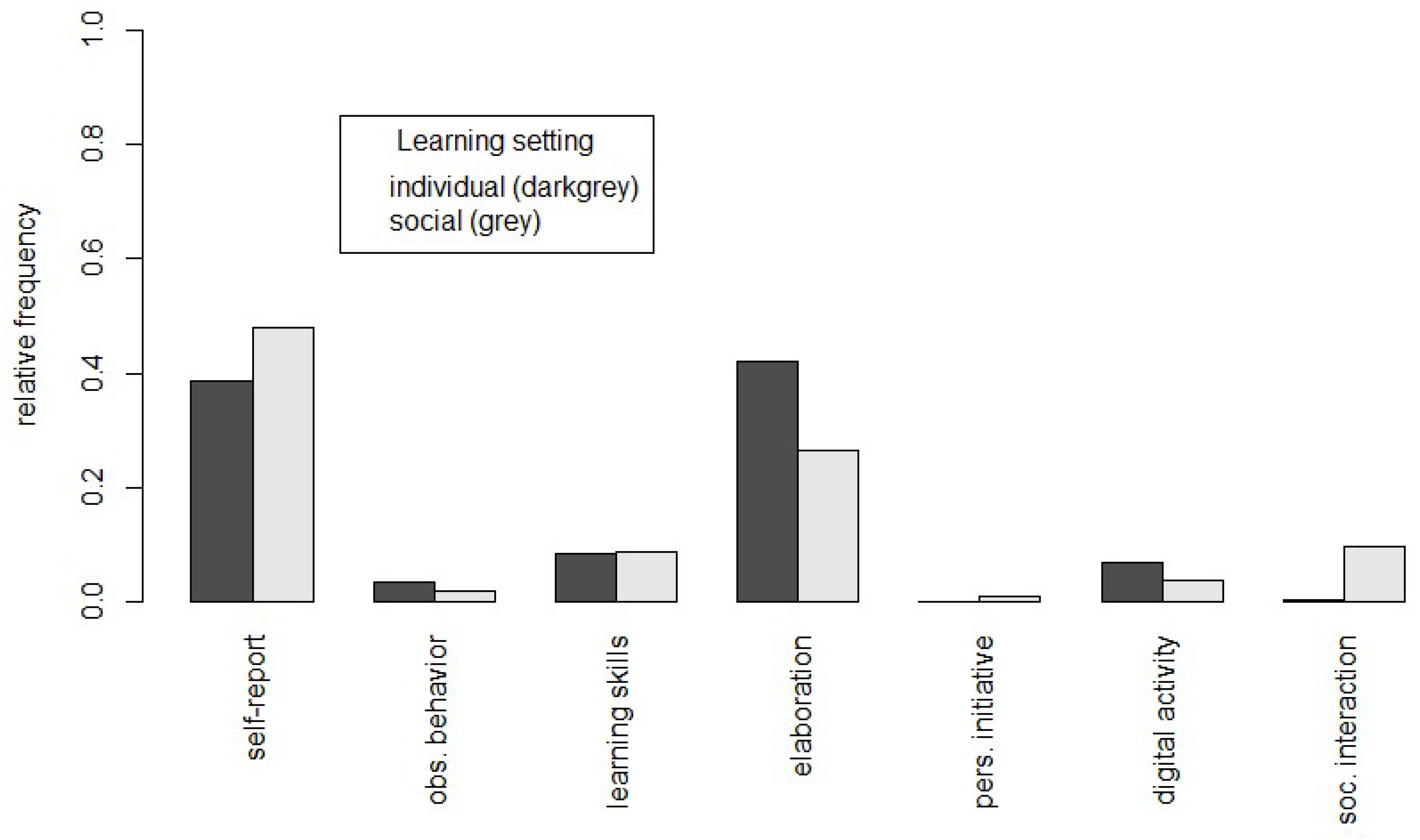

4.3. Measures of Learning Outcomes in Individual and Social Learning Settings

5. Discussion

5.1. Heterogeneous Evaluation of Learning Outcomes

5.2. Learning Outcomes in Learning Settings

5.3. Limitations

5.4. Balancing Perspectives

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Category | Examples | Learning Outcome Is Evaluated on the Basis of … |
|---|---|---|
| Method | | |
| Self-report | Students report about their satisfaction, motivation or attitude | … experience, perception, or values of a learner. |
| Observable behavior | Enrollment or final completion of lectures or seminars | … intention, persistence or effectiveness of a learner’s behavior. |
| Cognition | | |
| Learning skills | Self-regulation, awareness or writing skills | … meta-cognition. |
| Elaboration | Vocabulary tests or transfer tasks | … cognitive measurements. |
| Activities | | |
| Personal initiative | Number of contributions to discussions or frequency of use | … mere participation or pro-activeness of a learner. |
| Digital activity | Sourcing and searching behavior | … digital maturity level or active usage of digital tools. |
| Social interaction | Collaboration with peers or communication with professors | … social influence on activities of a learner. |

| Journal | Learning Setting: Individual | Learning Setting: Social | Total |
|---|---|---|---|
| Advances in Health Sciences Education | 2 | 3 | 5 |
| Assessment & Evaluation in Higher Education | 0 | 3 | 3 |
| Australasian Journal of Educational Technology | 6 | 12 | 18 |
| BMC Medical Education | 35 | 9 | 44 |
| British Journal of Educational Technology | 13 | 11 | 24 |
| Computers & Education | 79 | 23 | 102 |
| Educational Technology & Society | 16 | 12 | 28 |
| Educational Technology Research and Development | 8 | 0 | 8 |
| Instructional Science | 5 | 1 | 6 |
| Interactive Learning Environments | 11 | 6 | 17 |
| International Review of Research in Open and Distance Learning | 6 | 2 | 8 |
| Internet and Higher Education | 8 | 12 | 20 |
| Journal of Computer Assisted Learning | 8 | 7 | 15 |
| Journal of Science Education and Technology | 7 | 1 | 8 |
| Total | 204 | 102 | 306 |

| Learning Setting | Outcome 1 | Outcome 2 | Outcome 3 | Outcome 4 | Outcome 5 | Outcome 6 | Outcome 7 | Total |
|---|---|---|---|---|---|---|---|---|
| Individual | 79 | 7 | 17 | 86 | 0 | 14 | 1 | 204 |
| Social | 49 | 2 | 9 | 27 | 1 | 4 | 10 | 102 |
| Total | 128 | 9 | 26 | 113 | 1 | 18 | 11 | 306 |

| Learning Setting | Outcome 1 (%) | Outcome 2 (%) | Outcome 3 (%) | Outcome 4 (%) | Outcome 5 (%) | Outcome 6 (%) | Outcome 7 (%) |
|---|---|---|---|---|---|---|---|
| Individual | 38.73 | 3.43 | 8.33 | 42.16 | 0 | 6.86 | 0.49 |
| Social | 48.04 | 1.96 | 8.82 | 26.47 | 0.98 | 3.92 | 9.80 |
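
The percentages in the table above appear to be row percentages of the counts table that precedes it, that is, each cell divided by the total number of studies in that learning setting (204 individual, 102 social). The following minimal Python sketch checks that reading. The names `counts`, `row_percentages`, and `column_totals` are illustrative and do not come from the article.

```python
# Study counts per learning setting for learning-outcome categories 1-7,
# copied from the counts table above (row totals: 204 and 102).
counts = {
    "individual": [79, 7, 17, 86, 0, 14, 1],
    "social": [49, 2, 9, 27, 1, 4, 10],
}


def row_percentages(row):
    """Return each cell as a percentage of its row total, rounded to 2 decimals."""
    total = sum(row)
    return [round(100 * n / total, 2) for n in row]


# Row percentages per learning setting (assumed to be how the percentage table was built).
percentages = {setting: row_percentages(row) for setting, row in counts.items()}

# Column totals across both settings, for comparison with the "Total" row of the counts table.
column_totals = [sum(col) for col in zip(*counts.values())]

for setting, values in percentages.items():
    print(setting, values)
print("column totals:", column_totals, "overall:", sum(column_totals))
```

Under this assumption, the computed values match the reported percentages, and the column totals sum to the 306 studies in the sample.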

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kümmel, E.; Moskaliuk, J.; Cress, U.; Kimmerle, J. Digital Learning Environments in Higher Education: A Literature Review of the Role of Individual vs. Social Settings for Measuring Learning Outcomes. Educ. Sci. 2020, 10, 78. https://doi.org/10.3390/educsci10030078
