Does Project-Based Learning (PBL) Promote Student Learning? A Performance Evaluation

Abstract

1. Introduction

- Research Question 1: Why should the effectiveness of instructional tools (i.e., PBL) in higher learning institutions be measured?

- Research Question 2: How can instructors measure the impact of instructional tools (i.e., PBL) as an effort towards facilitating student learning?

2. Theoretical Background

2.1. The Role of Project-Based Learning (PBL) in Student Learning

2.2. Performance Measurement in the Learning Context

- Analytic/synthetic approach, which relates to scholarship, with emphasis on breadth, analytic ability, and conceptual understanding;

- Organization/clarity, which relates to skills at presentation but is subject-related, not student-related;

- Instructor group interaction, which relates to rapport with the class as a whole, sensitivity to class response, and skill in securing active class participation;

- Instructor individual interaction, which relates to mutual respect and rapport between the instructor and the individual student;

- Dynamism/enthusiasm, which relates to the flair and infectious enthusiasm that comes with confidence, excitement for the subject, and pleasure in teaching.

- Perceived learning, which is associated with increases in knowledge and skills;

- Perceived challenge, which is associated with stimulation and motivation;

- Perceived task difficulty, which is associated with the complexity and difficulty of concepts;

- Perceived performance, which is associated with self-evaluating performance.

- The quality of instruction, which refers to instructors’ skills and ability as well as their friendliness, enthusiasm, and approachability;

- Task difficulty in terms of demands and effort required by students to achieve their desired result;

- Academic development and stimulation, which refers to how stimulated and motivated a student feels and whether they believe they are increasing and developing their academic skills.

3. Method

3.1. Project-Based Assignment

- Providing students with an authentic learning environment that introduces them to the concept of digitalization;

- Providing students with a learning environment that enables them to comprehend the interrelationship between organization, value creation, and technology in digitalization projects.

- An animation of a real-life project case, explaining the main events, the challenges encountered, and useful insights gained from the real-life case;

- A computer simulation that shows how certain project variables influence one another and the dynamics of their interaction;

- A gamified experience of a problem or a project situation using computer games;

- Gamified tests and quizzes to support learning.

3.2. Choice of Data Collection Method: Survey

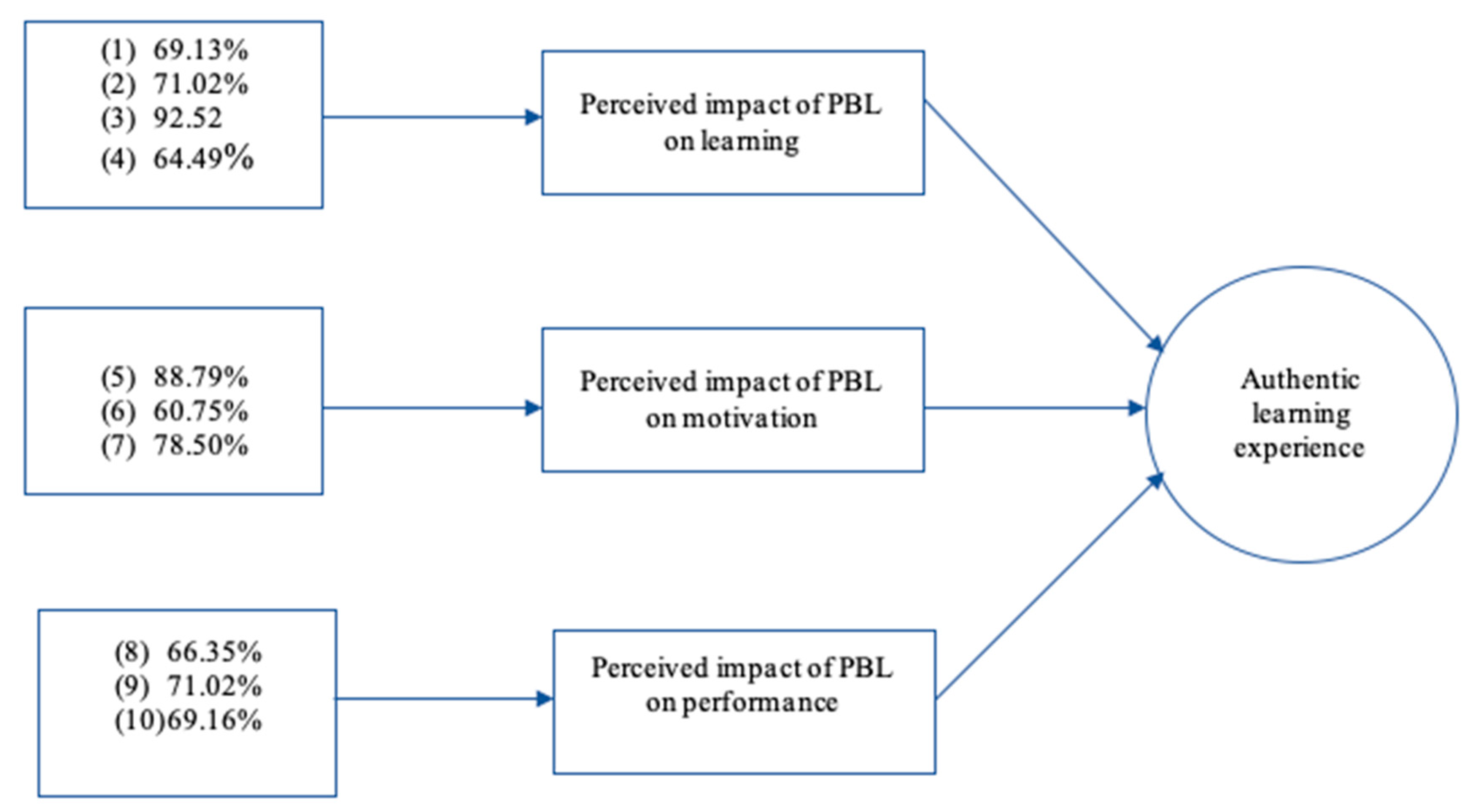

- Learning;

- Motivation;

- Performance.

3.3. Reliability, Validity, and Generalizability of the Scale

3.3.1. Reliability
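
The reference list includes a guide to calculating Cronbach's alpha for Likert-type scales, which suggests that internal consistency of the scale was assessed with that coefficient. As a minimal sketch under that assumption, the function below computes alpha from raw item scores. The function name `cronbach_alpha` and the `EXAMPLE` responses are hypothetical and are not taken from the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("At least two items are needed.")
    # Transpose rows (respondents) into columns (items).
    items = list(zip(*responses))
    item_variance_sum = sum(variance(column) for column in items)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 5-point Likert answers: 5 respondents x 3 items (illustration only).
EXAMPLE = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]

if __name__ == "__main__":
    print(f"alpha = {cronbach_alpha(EXAMPLE):.3f}")
```

Sample variance is used for both the items and the totals; using population variance instead gives the same alpha because the scaling factor cancels in the ratio.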

3.3.2. Validity and Generalizability

4. Results

- Students experienced a significant learning outcome;

- High correspondence in the degree of learning between students in both samples.

4.1. Correspondence in the Degree of Learning Performance Exhibited in Samples 1 and 2

4.2. Combined Samples Results

4.2.1. Perceived Learning Performance

4.2.2. The Importance of Instructional Methods

5. Discussion

6. Conclusions

- Research Question 1: Why should we measure the effectiveness of instructional tools (i.e., PBL) in higher learning institutions?

- Research Question 2: How can instructors measure the impact of instructional tools (i.e., PBL) as an effort towards facilitating student learning?

1. What is the intended learning outcome? In this step, identify the overall outcome the course intends to achieve (e.g., create an authentic learning experience, create good communicators, develop sustainable business skills).

2. How do you intend to achieve this outcome? Decide on the instructional methods to achieve the overall outcome. Although PBL was evaluated in this study, this process is applicable to any instructional tool. Because not all methods enable the attainment of certain outcomes, it is important to ensure the method is a facilitator of the intended outcome rather than a hindrance.

3. What are the criteria to be measured? Determine which constructs/criteria are to be examined. Numerous studies of criteria pertaining to student evaluations have been conducted. Revisiting some of the evaluations and using them as a foundation for modifications is worth consideration.

4. What are the specific questions you want evaluated by students? Develop the scale questions (measurement scales) for each construct and ensure that all questions are related to attaining the overall outcome.

Author Contributions

Funding

Conflicts of Interest

References

1. Spitzer, D.R. Transforming Performance Measurement: Rethinking the Way We Measure and Drive Organizational Success, Illustrated ed.; Amacom: New York, NY, USA, 2007; p. 288.

2. Bedggood, R.E.; Donovan, J.D. University performance evaluations: What are we really measuring? Stud. High. Educ. 2012, 37, 825–842.

3. Perrone, V. Expanding Student Assessment; Association for Supervision and Curriculum Development: Alexandria, VA, USA, 1991; pp. 9–45.

4. Bedggood, R.E.; Pollard, R. Customer satisfaction and teacher evaluation in higher education. In Global Marketing Issues at the Turn of the Millenium: Proceedings of the Tenth Biennial World Marketing Congress, 1st ed.; Spotts, H.E., Meadow, H.L., Scott, S.M., Eds.; Cardiff Business School: Cardiff, Wales, UK, 2001.

5. Hildebrand, M.; Wilson, R.C.; Dienst, E.R. Evaluating University Teaching; Center for Research and Development in Higher Education, University of California: Berkeley, CA, USA, 1971.

6. Richardson, J.T.E. Instruments for obtaining student feedback: A review of the literature. Assess. Eval. High. Educ. 2005, 30, 387–415.

7. Marsh, H.W. Students’ evaluations of university teaching: Research findings, methodological issues, and directions for future research. Int. J. Educ. Res. 1987, 11, 253–388.

8. SIRS Research Report 2. Student Instructional Rating System, Stability of Factor Structure; Michigan State University, Office of Evaluation Services: East Lansing, MI, USA, 1971.

9. Ramsden, P. A performance indicator of teaching quality in higher education: The course experience questionnaire. Stud. High. Educ. 1991, 16, 129–150.

10. Jimaa, S. Students’ rating: Is it a measure of an effective teaching or best gauge of learning? Proc. Soc. Behav. Sci. 2013, 83, 30–34.

11. Clanchy, J.; Ballard, B. Generic skills in the context of higher education. High. Educ. Res. Dev. 1995, 14, 155–166.

12. Bell, S. Project-based learning for the 21st century: Skills for the future. Clear. House J. Educ. Strateg. Issues Ideas 2010, 83, 39–43.

13. Chang, C.-C.; Kuo, C.-G.; Chang, Y.-H. An assessment tool predicts learning effectiveness for project-based learning in enhancing education of sustainability. Sustainability 2018, 10, 3595.

14. Pombo, L.; Marques, M.M. The potential educational value of mobile augmented reality games: The case of EduPARK app. Educ. Sci. 2020, 10, 287.

15. Hansen, P.K.; Fradinho, M.; Andersen, B.; Lefrere, P. Changing the way we learn: Towards agile learning and co-operation. In Proceedings of the Learning and Innovation in Value Added Networks—The Annual Workshop of the IFIP Working Group 5.7 on Experimental Interactive Learning in Industrial Management, ETH Zurich, Switzerland, 25–26 May 2009.

16. Prensky, M. Digital game-based learning. Comput. Entertain. 2003, 1, 1–4.

17. Grant, M.M. Getting a grip on project based-learning: Theory, cases and recommendations. Middle Sch. Comput. Technol. J. 2002, 5, 83.

18. Harlen, W.; James, M. Assessment and learning: Differences and relationships between formative and summative assessment. Assess. Educ. Princ. Policy Pract. 1997, 4, 365–379.

19. Entwistle, N.; Smith, C. Personal understanding and target understanding: Mapping influences on the outcome of learning. Br. J. Educ. Psychol. 2010, 72, 321–342.

20. Espeland, V.; Indrehus, O. Evaluation of students’ satisfaction with nursing education in Norway. J. Adv. Nurs. 2003, 42, 226–236.

21. Boyle, P.; Trevitt, C. Enhancing the quality of student learning through the use of subject learning plans. High. Educ. Res. Dev. 1997, 16, 293–308.

22. Chen, C.J.; Lin, B.W. The effects of environment, knowledge attribute, organizational climate and firm characteristics on knowledge sourcing decisions. R D Manag. 2004, 34, 137–146.

23. Boaler, J. Mathematics for the moment, or the millennium? Educ. Week 1999, 17, 30–34.

24. Petrosino, A.J. The Use of Reflection and Revision in Hands-on Experimental Activities by at-Risk Children. Ph.D. Thesis, Vanderbilt University, Nashville, TN, USA, 1988. Unpublished work.

25. Andersen, B.; Cassina, J.; Duin, H.; Fradinho, M. The Creation of a Serious Game: Lessons Learned from the PRIME Project. In Proceedings of the Learning and Innovation in Value Added Networks: Proceedings of the 13th IFIP 5.7 Special Interest Group Workshop on Experimental Interactive Learning in Industrial Management, ETH Zurich, Center for Enterprise Sciences, Zurich, Switzerland, 24–25 May 2009; pp. 99–108.

26. Bititci, U.; Garengo, P.; Dörfler, V.; Nudurupati, S. Performance measurement: Challenges for tomorrow. Int. J. Manag. Rev. 2012, 14, 305–327.

27. Lebas, M.J. Performance measurement and performance management. Int. J. Prod. Econ. 1995, 41, 23–35.

28. Choong, K.K. Has this large number of performance measurement publications contributed to its better understanding? A systematic review for research and applications. Int. J. Prod. Res. 2013, 52, 4174–4197.

29. Andersen, B.; Fagerhaug, T. Performance Measurement Explained: Designing and Implementing Your State-of-the-Art System; ASQ Quality Press: Milwaukee, WI, USA, 2002.

30. Hughes, M.D.; Bartlett, R.M. The use of performance indicators in performance analysis. J. Sports Sci. 2002, 20, 739–754.

31. Fortuin, L. Performance indicators—Why, where and how? Eur. J. Oper. Res. 1988, 34, 1–9.

32. Euske, K.; Lebas, M.J. Performance measurement in maintenance depots. Adv. Manag. Account. 1999, 7, 89–110.

33. Winberg, T.M.; Hedman, L. Student attitudes toward learning, level of pre-knowledge and instruction type in a computer-simulation: Effects on flow experiences and perceived learning outcomes. Instr. Sci. 2008, 36, 269–287.

34. Fornell, C. A national customer satisfaction barometer: The Swedish experience. J. Mark. 1992, 56, 6–21.

35. Anderson, E.W.; Fornell, C.; Lehmann, D.R. Customer satisfaction, market share, and profitability: Findings from Sweden. J. Mark. 1994, 58, 53–66.

36. Guolla, M. Assessing the teaching quality to student satisfaction relationship: Applied customer satisfaction research in the classroom. J. Mark. Theory Pract. 1999, 7, 87–97.

37. Wiers-Jenssen, J.; Stensaker, B.; Grogaard, J. Student-satisfaction: Towards an empirical decomposition of the concept. Qual. High. Educ. 2002, 8, 183–195.

38. Lo, C.C. How student satisfaction factors affect perceived learning. J. Scholarsh. Teach. Learn. 2010, 10, 47–54.

39. Hussein, B. A blended learning approach to teaching project management: A model for active participation and involvement: Insights from Norway. Educ. Sci. 2015, 5, 104–125.

40. Hussein, B.; Ngereja, B.; Hafseld, K.H.J.; Mikhridinova, N. Insights on using project-based learning to create an authentic learning experience of digitalization projects. In Proceedings of the 2020 IEEE European Technology and Engineering Management Summit (E-TEMS), Dortmund, Germany, 5–7 March 2020; pp. 1–6.

41. Saunders, M.; Lewis, P. Doing Research in Business & Management: An Essential Guide to Planning Your Project; Pearson: Harlow, UK, 2012.

42. Baruch, Y. Response Rate in academic studies—A comparative analysis. Hum. Relat. 1999, 52, 421–438.

43. Giles, T.; Cormican, K. Best practice project management: An analysis of the front end of the innovation process in the medical technology industry. Int. J. Inf. Syst. Proj. Manag. 2014, 2, 5–20.

44. Gliem, J.A.; Gliem, R.R. Calculating, interpreting, and reporting Cronbach’s alpha reliability coefficient for Likert-type scales. In Proceedings of the Midwest Research-to-Practice Conference in Adult, Continuing, and Community Education, Columbus, OH, USA, 8–10 October 2003.

45. Gravetter, F.J.; Forzano, L.-A.B. Research Methods for the Behavioral Sciences, 4th ed.; Wadsworth: Belmont, CA, USA, 2012.

46. Yong, A.G.; Pearce, S. A Beginner’s guide to factor analysis: Focusing on exploratory factor analysis. Tutor. Quant. Methods Psychol. 2013, 9, 79–94.

47. Rentz, J.O. Generalizability theory: A comprehensive method for assessing and improving the dependability of marketing measures. J. Mark. Res. 1987, 24, 19–28.

48. Mann, H.B.; Whitney, D.R. On a test of whether one of two random variables is stochastically larger than the other. Ann. Math. Stat. 1947, 18, 50–60.

49. Wiggins, G.P. The Jossey-Bass Education Series. Assessing Student Performance: Exploring the Purpose and Limits of Testing; Jossey-Bass: San Francisco, CA, USA, 1993.

50. Nair, C.; Sid, P.; Patil, A.; Mertova, P. Re-engineering graduate skills—A case study. Eur. J. Eng. Educ. 2009, 34, 131–139.

51. Barker, R. No, management is not a profession. Harv. Bus. Rev. 2010, 88, 52–60.

52. Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; Prentice-Hall: Englewood Cliffs, NJ, USA, 1984.

53. Smith, G. How does student performance on formative assessments relate to learning assessed by exams? J. Coll. Sci. Teach. 2007, 36, 28–34.

54. Oliveira, M.F.; Andersen, B.; Pereira, J.; Seager, W.; Ribeiro, C. The use of integrative framework to support the development of competences. In Proceedings of the International Conference on Serious Games Development and Applications, Berlin, Germany, 9–10 October 2014; pp. 117–128.

| Student Evaluation Tools (with References) | Criterion: Learning | Criterion: Motivation | Criterion: Performance |
|---|---|---|---|
| Context (what the criterion focuses on measuring) | Emphasizes the direct learning outcome (i.e., knowledge and competencies gained) | Emphasizes whether the conditions are favorable to support learning | Emphasizes whether knowledge gained will be beneficial in the long term |
| [7] | | | |
| [5] | | | |
| [9] | | | |
| [2] | | | |

| Measurement Criteria | Measurement Scales |
|---|---|
| Learning | A: Perceived impact of project-based learning (PBL) on learning |
| Motivation | B: Perceived impact of PBL on motivation |
| Performance | C: Perceived impact of PBL on performance |

Participants (%) per response category; the prefixes S1 and S2 denote Sample 1 and Sample 2.

| Measure | S1 n | S1 SA | S1 A | S1 N | S1 D | S1 SD | S2 n | S2 SA | S2 A | S2 N | S2 D | S2 SD |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 55 | 20 | 47.27 | 20 | 12.73 | 0 | 52 | 32.69 | 38.46 | 19.23 | 7.69 | 1.92 |
| 2 | 55 | 27.27 | 36.36 | 25.45 | 10.91 | 0 | 52 | 17.31 | 61.54 | 13.46 | 5.77 | 1.92 |
| 3 | 55 | 25.45 | 40 | 25.45 | 9.09 | 0 | 52 | 15.38 | 44.23 | 34.62 | 1.92 | 3.85 |
| 4 | 55 | 25.45 | 34.55 | 25.45 | 14.55 | 0 | 52 | 15.38 | 53.85 | 21.15 | 5.77 | 3.85 |
| 5 | 55 | 54.55 | 34.55 | 7.27 | 3.64 | 0 | 52 | 61.54 | 26.92 | 5.77 | 5.77 | 0 |
| 6 | 55 | 20 | 50.91 | 25.45 | 1.82 | 1.82 | 52 | 17.31 | 32.69 | 21.15 | 23.08 | 5.77 |
| 7 | 55 | 38.18 | 40 | 10.91 | 5.45 | 5.45 | 52 | 44.23 | 34.62 | 17.31 | 0 | 3.85 |
| 8 | 55 | 32.73 | 34.55 | 21.82 | 9.09 | 1.82 | 52 | 15.38 | 50 | 25 | 5.77 | 3.85 |
| 9 | 55 | 25.45 | 47.27 | 20 | 7.27 | 0 | 52 | 21.15 | 48.08 | 23.08 | 3.85 | 3.85 |
| 10 | 55 | 27.27 | 43.64 | 16.36 | 10.91 | 1.82 | 52 | 19.23 | 48.08 | 21.15 | 9.62 | 1.92 |
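
One way to read the response table above is to combine the SA and A columns into a single agreement share per measure and to check that each row of percentages sums to roughly 100. The sketch below does this with the table's own figures; the names `SAMPLE_1`, `SAMPLE_2`, `agreement_share`, and `rows_not_summing_to_100` are illustrative choices, and combining SA and A is an assumption about how the table might be summarized, not a step reported by the authors.

```python
# Percentages copied from the response table: (SA, A, N, D, SD) per measure.
SAMPLE_1 = {
    1: (20, 47.27, 20, 12.73, 0),
    2: (27.27, 36.36, 25.45, 10.91, 0),
    3: (25.45, 40, 25.45, 9.09, 0),
    4: (25.45, 34.55, 25.45, 14.55, 0),
    5: (54.55, 34.55, 7.27, 3.64, 0),
    6: (20, 50.91, 25.45, 1.82, 1.82),
    7: (38.18, 40, 10.91, 5.45, 5.45),
    8: (32.73, 34.55, 21.82, 9.09, 1.82),
    9: (25.45, 47.27, 20, 7.27, 0),
    10: (27.27, 43.64, 16.36, 10.91, 1.82),
}
SAMPLE_2 = {
    1: (32.69, 38.46, 19.23, 7.69, 1.92),
    2: (17.31, 61.54, 13.46, 5.77, 1.92),
    3: (15.38, 44.23, 34.62, 1.92, 3.85),
    4: (15.38, 53.85, 21.15, 5.77, 3.85),
    5: (61.54, 26.92, 5.77, 5.77, 0),
    6: (17.31, 32.69, 21.15, 23.08, 5.77),
    7: (44.23, 34.62, 17.31, 0, 3.85),
    8: (15.38, 50, 25, 5.77, 3.85),
    9: (21.15, 48.08, 23.08, 3.85, 3.85),
    10: (19.23, 48.08, 21.15, 9.62, 1.92),
}


def agreement_share(row):
    """Combined percentage of SA and A responses, rounded to two decimals."""
    sa, a, *_ = row
    return round(sa + a, 2)


def rows_not_summing_to_100(sample, tolerance=0.05):
    """Measures whose five percentages deviate from 100 by more than the tolerance."""
    return [m for m, row in sample.items() if abs(sum(row) - 100) > tolerance]


if __name__ == "__main__":
    for measure in SAMPLE_1:
        s1 = agreement_share(SAMPLE_1[measure])
        s2 = agreement_share(SAMPLE_2[measure])
        print(f"Measure {measure}: Sample 1 = {s1}%, Sample 2 = {s2}%")
```

The tolerance of 0.05 percentage points only absorbs the rounding in the published figures.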

| Variable | Sample 1 (Mean Value) | Sample 2 (Mean Value) | Mann-Whitney U | Significance (p-Value) | Decision |
|---|---|---|---|---|---|
| Q1 | 50.9 | 57.28 | 1600.50 | 0.261 | Retain H0 |
| Q2 | 55.11 | 52.95 | 1487.50 | 0.700 | Retain H0 |
| Q3 | 41.94 | 65.40 | 803.00 | 0.000 | Reject H0 |
| Q4 | 45.21 | 62.31 | 973.00 | 0.002 | Reject H0 |
| Q5 | 55.58 | 52.51 | 1512.00 | 0.562 | Retain H0 |
| Q6 | 47.15 | 60.47 | 1074.00 | 0.020 | Reject H0 |
| Q7 | 57.74 | 50.46 | 1624.50 | 0.193 | Retain H0 |
| Q8 | 50.55 | 57.26 | 1250.50 | 0.238 | Retain H0 |
| Q9 | 52.38 | 55.53 | 1346.00 | 0.574 | Retain H0 |
| Q10 | 51.92 | 55.96 | 1322.00 | 0.474 | Retain H0 |
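
The Decision column of the Mann-Whitney table above is consistent with the usual rule of rejecting H0 when the p-value falls below a significance level. The sketch below applies that rule to the reported p-values and checks it against the reported decisions. The threshold of 0.05 is an assumption (the table itself does not state it), and `REPORTED`, `decide`, and `mismatches` are illustrative names.

```python
ALPHA = 0.05  # assumed significance level; not stated in the table itself

# (p-value, reported decision) pairs copied from the Mann-Whitney table.
REPORTED = {
    "Q1": (0.261, "Retain H0"),
    "Q2": (0.700, "Retain H0"),
    "Q3": (0.000, "Reject H0"),
    "Q4": (0.002, "Reject H0"),
    "Q5": (0.562, "Retain H0"),
    "Q6": (0.020, "Reject H0"),
    "Q7": (0.193, "Retain H0"),
    "Q8": (0.238, "Retain H0"),
    "Q9": (0.574, "Retain H0"),
    "Q10": (0.474, "Retain H0"),
}


def decide(p_value, alpha=ALPHA):
    """Reject H0 when the p-value is below alpha; otherwise retain it."""
    return "Reject H0" if p_value < alpha else "Retain H0"


def mismatches(reported, alpha=ALPHA):
    """Questions whose reported decision differs from the rule's decision."""
    return [q for q, (p, decision) in reported.items() if decide(p, alpha) != decision]


if __name__ == "__main__":
    rejected = [q for q, (p, _) in REPORTED.items() if decide(p) == "Reject H0"]
    print("H0 rejected for:", ", ".join(rejected))
    print("Mismatches with the table:", mismatches(REPORTED))
```

Under this assumed threshold the rule reproduces every reported decision, with H0 rejected only for Q3, Q4, and Q6.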

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ngereja, B.; Hussein, B.; Andersen, B. Does Project-Based Learning (PBL) Promote Student Learning? A Performance Evaluation. Educ. Sci. 2020, 10, 330. https://doi.org/10.3390/educsci10110330
