Abstract

The purpose of the study on which this paper is based was to evaluate student learning in an introductory project management course at a higher education institution in Norway. Drawing on performance measurement philosophy, we evaluated perceived student learning after a project-based assignment had been applied as an instructional tool. The evaluation was conducted at the end of the semester to determine whether the assignment facilitated effective learning by providing an authentic learning experience. Relevant learning criteria were identified from the existing literature and measured by means of a questionnaire survey. Ten measurement scales were established using a 5-point Likert scale. The survey was administered in the same course over two consecutive semesters to just over 100 project management students. The results indicated that the incorporation of project-based assignments has a positive impact on student learning, motivation, and performance in both the short and the long term. Finally, the study revealed that project-based assignments enable the creation of real-life experiences, which in turn stimulates the development of real-life competencies.

1. Introduction

“Unmeasured things cannot be easily replicated, or managed, or appreciated [1].”

It is common knowledge that the ultimate goal of education is for students to learn [2], but this raises the question: how do we know that learning has been achieved? Student evaluation is necessary if the goal is to ensure learning quality [3]. Student evaluation research has gained popularity and has become more prominent in academic institutions since it began to be used to measure teaching quality, learning quality, and/or student and teacher satisfaction [2,4,5,6,7].

According to Marsh [7], Remmers can be recognized as the founder of student evaluation research, as his work dates back to the 1920s. In his early research, however, Remmers cautioned against the adoption of his evaluation scale, emphasizing that it should not be used to measure teaching effectiveness but rather to measure students’ opinions on their learning and on the relationship between student and instructor [7]. Since then, the field has advanced considerably: related studies have challenged Remmers’ arguments and led to the development of numerous other evaluation scales. Examples include the Student Instructional Rating System (SIRS) [8]; the Course Experience Questionnaire (CEQ), developed to measure the perceived quality of teaching [9]; and the Student Evaluation of Teaching Effectiveness (SETE) [10], developed to evaluate teaching quality.

The biggest difference between higher education and other educational levels is that graduates from higher education institutions are expected to be ready to compete in the job market. Therefore, the most challenging issue in higher education is ensuring that students develop both generic attributes and competencies, such as the ability to think clearly, to work independently, and to communicate and collaborate well with others [11]. These challenges have stimulated the adoption of additional instructional tools, such as project-based learning (PBL) [12,13], augmented reality (AR) [14], and serious games [15,16], to facilitate the achievement of such attributes and competencies.

For many years, teaching in project management (the course evaluated in our study) has used various instructional tools to facilitate student learning. With the ongoing rapid transformation of the business environment, of projects, and of the field of project management, it is imperative to build a strong foundation for the development of students’ skills, knowledge, and experience relating to digitalization, and to highlight what is expected of them as future project managers if they are to excel in the business environment. In response to this situation, a hands-on, project-based digital assignment was introduced as an additional learning aid in the introductory project management course at a university in Norway. The aim was to provide students with an authentic experience of learning project management. Through the assignment, the students were expected to take full control of their learning process by using the course material to produce a working digital learning aid for future students taking the same subject. A project-based assignment was chosen because it gives students an opportunity to showcase what they have learnt by creating outputs that are meaningful to them [17].

Because the assignment constituted a new element in the course curriculum, an evaluation was needed to identify whether it would have a positive impact on the students’ development of required skills, knowledge, and real-life experience. A summative evaluation was proposed to identify its impact on student learning, in order to inform improvement decisions [18]. Learning outcomes can be measured from the teacher’s perspective, from the students’ perspective, or from a university perspective [19]. For the study reported in this paper, we adopted a student-centered approach by focusing on students’ perceptions of their own learning, as recommended by Espeland and Indrehus [20]. Accordingly, in this paper, we aim to answer the question “How can performance measurement methods be utilized to measure the effectiveness of Project-Based Learning in higher education institutions?” This overarching question is broken down into two research questions.

- Research Question 1: Why should the effectiveness of instructional tools (i.e., PBL) in higher learning institutions be measured?

- Research Question 2: How can instructors measure the impact of instructional tools (i.e., PBL) as an effort towards facilitating student learning?

Although learning quality and teaching quality have not been clearly distinguished, it is important to highlight that this paper focuses on students’ learning and factors that support their learning. In the context of this study, we identify teaching quality as relevant for facilitating student learning and would argue that it should not be regarded as an independent factor.

2. Theoretical Background

2.1. The Role of Project-based Learning (PBL) in Student Learning

The main difference between universities and other educational institutions is that universities aim to enable students to move from a dependent mode of learning to an autonomous one [2]. As stated by Change et al. [13], it is very important for students to be able to apply the knowledge they have acquired in higher education. To achieve this, learning activities that encourage interaction with others, active hands-on experience, the search for information from various sources, and autonomous working and responsibility should be encouraged [21]. The incorporation of project-based learning in the curriculum can facilitate the attainment of these outcomes [13].

Project-based learning (PBL) is a pedagogical means by which the student controls the learning process, whereas the teacher acts more in the capacity of a facilitator [12]. Some of the ways in which PBL can be applied include involving students in the task of creating a new product or engaging their participation in a real task [13]. PBL enables students to improve their ability to learn effectively, stimulates their motivation to learn, and facilitates the implementation of their capabilities [13]. In addition, PBL enables students to acquire autonomy and some control over their own learning. The latter point is in line with Chen and Lin’s suggestion that higher levels of autonomy are needed for one to generate knowledge [22].

Students tend to acquire a different kind of knowledge when taught using PBL as opposed to traditional programs [23], because PBL provides them with an opportunity to integrate knowledge with real-world experiences [21]. The incorporation of PBL in the learning process has been associated with the development of much deeper learning, greater understanding and higher motivation to learn, increased implementation capability, and improvement in learning effectiveness [12,13]. Furthermore, to achieve the positive outcomes of PBL, it is crucial to provide a suitable environment in which students can both acquire the intended knowledge and develop their skills [13].

However, for PBL to be effective, students need a clearly defined and specific goal to achieve. Petrosino [24] found that when students were given a specific goal to achieve through PBL, they developed far greater competencies than when they were asked to produce an output with no direct goal. This is in line with the findings of an earlier study by Boyle and Trevitt [21], who highlight that one of the important requirements for quality teaching and learning is communicating learning goals and values clearly. Petrosino [24] also found that students who were given a focused goal were able to point out their own mistakes and even those made by other students, whereas the group of students that was not given a focused goal did not. Similarly, in serious games, a clear goal must be defined so that the player knows exactly what is to be achieved [25]. Thus, goal setting is an important process in the implementation of PBL.

2.2. Performance Measurement in the Learning Context

Almost all aspects of modern life are subject to some form of measurement [1]. Performance measurement has become common practice across the private and public sectors [26]. The term performance measurement comprises two elements: performance and measurement. According to Lebas [27], performance is about the future, whereas measures are about the past, which implies using data collected in the past to make decisions about the future. In an extensive review, performance measurement is characterized in terms of four attributes: (1) improvement; (2) setting criteria; (3) detection of variances and taking corrective measures; and (4) making information processing more efficient [28]. The author of the review further highlights the importance of data, measuring methods, and measuring attributes, such as the measures, metrics, and indicators used to implement the measurement.

Why should performance be measured? One reason for conducting performance measurement is to enable improvements [1,29]. In an academic environment, two reasons for conducting measurements are to gather data that can improve decision-making [7] and to explore ways to facilitate students’ learning [10]. In academic environments, teaching and learning quality are commonly measured through student surveys [2]. However, it has been argued that a relationship between student learning and the teacher exists but is not very strong, and that this relationship holds between independent measures of student learning and measures of teaching effectiveness [10]. A number of factors have been identified as important for achieving quality teaching and learning: clear communication of expectations, the assessment method, the assessment criteria, the need for feedback, and a suitable workload; in addition, the chosen learning activities and materials should stimulate students’ interests [21].

If learning is regarded as an abstract concept, then measuring whether learning has occurred requires identifying the indicators with which it can be measured. A performance indicator is defined as “a selection, or combination, of action variables that aim to define some or all aspects of a performance” [30]. Numerous indicators, criteria, measurement instruments, and scales can be used to measure student learning in higher education institutions. These performance indicators or criteria are not the ultimate goal but rather a means to an end, because they make it possible to monitor and control progress [31]. One might expect similar organizations (in this case, educational institutions) to share similar concepts of performance and therefore similar measures, but this is not the case [31]. There has been a lack of agreement among similar businesses about the measures that define performance [32].

Hildebrand et al. [5] highlight the importance of measuring the quality of teaching in facilitating learning and they emphasize that the right question to ask is not ‘if’ but rather ‘how’ we should conduct such evaluations. Furthermore, they identify five components of effective teaching as follows:

- Analytic/synthetic approach, which relates to scholarship, with emphasis on breadth, analytic ability, and conceptual understanding;

- Organization/clarity, which relates to skills at presentation but is subject-related, not student-related;

- Instructor group interaction, which relates to rapport with the class as a whole, sensitivity to class response, and skill in securing active class participation;

- Instructor individual interaction, which relates to mutual respect and rapport between the instructor and the individual student;

- Dynamism/enthusiasm, which relates to the flair and infectious enthusiasm that comes with confidence, excitement for the subject, and pleasure in teaching.

Marsh [7] developed the Student Evaluation of Educational Quality (SEEQ) instrument and identified the measurement factors in student evaluations of teaching quality as learning, enthusiasm, organization, rapport, breadth, assignments, material, workload, and group interaction. Furthermore, he recommends the use of multidimensional measurement tools comprising multiple criteria in order to obtain useful results. By contrast, Ramsden [9] identifies the factors for measuring perceived teaching quality in higher education as good teaching, clear goals, an appropriate workload, appropriate assessment, and an emphasis on student independence. Although these approaches differ, the factors they measure are largely similar.

However, most of the literature to date on performance measurement in the context of learning focuses on student satisfaction rather than on the actual objective of student learning [4]. According to Winberg and Hedman [33], student satisfaction “is the subjective perceptions, on the students’ part, of how well a learning environment supports academic success.” There are two types of satisfaction: transaction satisfaction, which is obtained after a one-time experience, and cumulative satisfaction, which is obtained after repeated transactions [34]. In one study, satisfaction measured at the end of a course was treated as cumulative because the quality of the teacher’s delivery and the receptiveness of the students vary throughout a course [35]. We adopted the same reasoning in our study and measured satisfaction as a cumulative rather than a transactional experience.

Although performance measures that rely on students’ perceptions are well suited to measuring satisfaction [10], the findings of one study indicate that learning is strongly related to course satisfaction [36]. Winberg and Hedman [33] support this finding and speculate that some indicators of student satisfaction are positively related to student learning.

In the context of higher education institutions, it is imperative to incorporate aspects of both learning and student satisfaction in order to measure accurately the extent to which courses fulfill their objectives [2]. This argument is in line with an earlier study that found a close overlap between student satisfaction and teaching evaluation surveys [37]. However, in the study by Bedggood and Donovan [2], both student satisfaction and student learning were identified as valid constructs for measuring performance, despite the use of one (i.e., student satisfaction) to measure the other (i.e., learning). Similarly, an exploration of the association between factors relating to student satisfaction and perceived learning indicated a high degree of association between the two constructs [38].

Furthermore, Bedggood and Donovan [2] argue that the dimensions for measuring student learning are as follows:

- Perceived learning, which is associated with increases in knowledge and skills;

- Perceived challenge, which is associated with stimulation and motivation;

- Perceived task difficulty, which is associated with the complexity and difficulty of concepts;

- Perceived performance, which is associated with self-evaluating performance.

The authors argue that the dimensions for measuring satisfaction are:

- The quality of instruction, which refers to instructors’ skills and ability as well as their friendliness, enthusiasm, and approachability;

- Task difficulty in terms of demands and effort required by students to achieve their desired result;

- Academic development and stimulation, which refers to how stimulated and motivated a student feels and whether they believe they are increasing and developing their academic skills.

Bedggood and Donovan [2] found that task difficulty could be used both as a learning measure and as a satisfaction measure.

Although the main aim of the student evaluation tools discussed above is to assess student learning, other factors should be included due to their strong association with student learning. We examined the factors included in the evaluation tools and our findings showed that most of the factors fall into two categories, namely learning and motivation constructs, while the performance construct appeared to be incorporated overall but not specifically itemized. In this paper, we consider all three constructs because they all appear to be significantly related to the facilitation of overall learning effectiveness. The findings are summarized in Table 1.

Table 1.

Student evaluation factors in relation to learning, motivation, and performance.

3. Method

3.1. Project-Based Assignment

A project assignment was introduced into an introductory course in project management, in addition to the instructional tools already in use. These tools included normal lectures, guest lectures, Kahoot sessions (Kahoot is a game-based learning platform), hand-in assignments, and visual aids such as animations of project cases on YouTube. An overview of the other instructional methods used in the introductory course can be found in an article by Hussein [39]. The assignment was designed to offer students an authentic project management experience. Its learning objectives included the following [40]:

- Providing students with an authentic learning environment that introduces them to the concept of digitalization;

- Providing students with a learning environment that enables them to comprehend the interrelationship between organization, value creation, and technology in digitalization projects.

The main idea of the proposed assignment was to organize student learning around developing digital resources and then using these resources to support self-paced learning outside the classroom. Students taking the course were required to work together on smaller project assignments, each of which was to develop a digital learning resource. The assignment was conducted in self-enrolled groups of 4–6 students. Examples of these digital learning resources included, but were not limited to:

- An animation of a real-life project case, explaining the main events, the challenges encountered, and useful insights gained from the real-life case;

- A computer simulation that shows how certain project variables influence one another and how their interaction evolves;

- A gamified experience of a problem or a project situation using computer games;

- Gamified tests and quizzes to support learning.

The project assignment included deliverables at various stages of product development, such as a review of the literature on digitalization projects, a project plan, the final product, and a peer review of products developed by other groups. Students were assessed on the final product and on the peer reviews carried out by other groups.

The assignment aimed to enable students to think critically and to search for relevant information on the impact of digital transformation on project management, in order to prepare them for the current business environment. To yield the required benefits, the students were given a focused goal: to produce a digital learning tool that would be used by future students taking the same course. The students were given full autonomy in their choice of output. After the assignment was completed towards the end of the semester, a survey instrument was administered to the students as a summative evaluation of how well the assignment facilitated student learning. A summative evaluation was selected because it is better suited to providing an overall assessment of learning outcomes than a formative assessment, which is carried out at various points during the learning process to inform future plans and improvements [18].

3.2. Choice of Data Collection Method: Survey

The choice of data collection method is one of several major aspects that require significant consideration by researchers [41]. The choice of methodology is linked to the research question. Our study was explanatory and focused on a particular situation in order to explain the relationships between variables. A survey instrument was developed to uncover the impact of project-based learning on the following criteria:

- Learning;

- Motivation;

- Performance.

Furthermore, the survey was conducted using a similar set of questions in two consecutive semesters with two separate student samples. The first sample comprised students taking the course in the fall semester of 2019, and the second comprised students taking the same course in the spring semester of 2020. The survey was administered in class at the end of the semester, once the course was completed. All conditions, such as workload, duration, goal, and instructions, were kept equal for both samples to control variability. In total, 107 responses were received out of a possible 162 (sample 1, n = 52; sample 2, n = 55), a response rate of 66%, which is considered acceptable [42]. To ensure that the survey was conducted ethically, students’ participation was voluntary and not coerced in any way. In addition, to maintain confidentiality, the responses were anonymized by not asking for any personal information that could link a response to a particular student.
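As a quick arithmetic check of the response rate reported above, the short Python sketch below pools the two sample sizes and divides by the 162 possible respondents. The function name `response_rate` is an illustrative assumption, not part of the study's own tooling.

```python
def response_rate(responses, possible):
    """Return the response rate as a percentage of possible respondents."""
    if possible <= 0:
        raise ValueError("possible must be positive")
    return 100.0 * responses / possible

# Figures reported in the text: sample 1 (n = 52) and sample 2 (n = 55), 162 possible.
sample_sizes = {"fall 2019": 52, "spring 2020": 55}
total_responses = sum(sample_sizes.values())
rate = response_rate(total_responses, 162)
print(f"{total_responses} of 162 responses -> {rate:.1f}%")  # 107 of 162 responses -> 66.0%
```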

The survey tool adopted in the study built, with some modifications, on the approach to measuring student learning described by Bedggood and Donovan [2]. Bedggood and Donovan [2] used six measurement items derived from two constructs, learning quality and student satisfaction, whereas we used three measurement items derived from three constructs: learning, motivation, and performance. Measurement scales were generated for each criterion to reflect the measurement environment under investigation. Ten measurement scales were generated in total, each using the same 5-point Likert scale: 5 = strongly agree, 4 = agree, 3 = neutral, 2 = disagree, and 1 = strongly disagree (see Table 2). The survey tool did not include factors such as age, race, and gender, because such factors have been found not to affect student evaluations [10] and they were irrelevant in the context of our study.
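A minimal sketch, assuming responses were recorded as verbal labels, of how the 5-point coding described above could be applied before analysis. The dictionary `LIKERT_CODES` and the helper `encode` are hypothetical; the study itself used Excel and SPSS for this step.

```python
# Hypothetical mapping that follows the 5-point scale described in the text.
LIKERT_CODES = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}

def encode(labels):
    """Convert verbal Likert labels into numeric codes (case- and whitespace-insensitive)."""
    return [LIKERT_CODES[label.strip().lower()] for label in labels]

answers = ["Agree", "Strongly agree", "Neutral", "agree"]
print(encode(answers))  # [4, 5, 3, 4]
```

An unrecognized label raises a `KeyError`, which surfaces data-entry problems instead of silently coding them.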

Table 2.

Measurement criteria, scales, and items.

The collected data were then imported into Microsoft Excel, with the data for each sample in a separate worksheet, and formatted. The formatted data were then imported into SPSS for further analysis. The internal consistency of each sample was checked using analysis of variance (ANOVA), and the equality of the samples (groups) was checked using the Mann–Whitney U test. Thereafter, a descriptive analysis was performed for each sample separately and for the combined sample to analyze how each criterion contributed to overall student learning.
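The descriptive step (each sample separately, then the combined sample) could be sketched in Python as follows. The response lists are hypothetical placeholders, not the study's data, and the helper `describe` is an illustrative assumption rather than the SPSS procedure actually used.

```python
from statistics import mean, median, stdev

def describe(scores):
    """Summarize one item's Likert scores (1-5) for a given sample."""
    return {
        "n": len(scores),
        "mean": round(mean(scores), 2),
        "sd": round(stdev(scores), 2),  # sample standard deviation
        "median": median(scores),
    }

# Hypothetical Q1 responses for each sample (NOT the study's data).
sample1_q1 = [4, 5, 3, 4, 4]
sample2_q1 = [5, 4, 4, 2, 5]
combined_q1 = sample1_q1 + sample2_q1  # pooling the two samples

for label, scores in [("sample 1", sample1_q1),
                      ("sample 2", sample2_q1),
                      ("combined", combined_q1)]:
    print(label, describe(scores))
```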

3.3. Reliability, Validity, and Generalizability of the Scale

3.3.1. Reliability

It is imperative to report Cronbach’s alpha coefficient of internal consistency reliability for any scales or subscales used in research [43,44]. Although there are several methods for testing the reliability of scales, we used Cronbach’s alpha, following other studies that developed similar scales for evaluating learning-related performance [2,9]. We used the overall alpha instead of individual alphas for each scale because all questions were related and used the same Likert scale; we therefore assumed that the individual alphas would not differ much. The scale was administered to two sets of project management students in two consecutive semesters, and the overall Cronbach’s alpha was 0.847 for sample 1 and 0.894 for sample 2. Because both values exceeded 0.8, the scale showed good internal consistency.
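For readers who want to reproduce a reliability check outside SPSS, the Python sketch below computes Cronbach's alpha from its standard definition, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The helper name `cronbach_alpha` and the example scores are hypothetical; they are not the study's data or code.

```python
from statistics import pvariance

def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    `items` holds one list per item, each containing one score per respondent.
    Population variances are used throughout; the ratio is unchanged if sample
    variances are used instead, because both scale by the same factor n/(n-1).
    """
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("every item needs the same number (>= 2) of respondents")
    totals = [sum(item[r] for item in items) for r in range(n)]  # total score per respondent
    sum_item_var = sum(pvariance(item) for item in items)
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Hypothetical scores: 3 items answered by 5 respondents (NOT the study's data).
example_items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
print(round(cronbach_alpha(example_items), 3))  # 0.886
```

Values above 0.8, as with the 0.847 and 0.894 reported for the two samples, are conventionally read as good internal consistency.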

3.3.2. Validity and generalizability

Validity indicates that a test measures what it claims to measure [45]. Researchers use several types of validity to validate their tests; in our study, face validity and content validity were used. Face validity refers to whether a test appears to measure what it claims to measure [45], whereas content validity is a non-statistical type of validity that can be determined using expert opinion. Applying these two types of validity, we examined the questions closely to ensure that they measured what we intended them to measure. This close examination was motivated partly by the nature of the questions, most of which were designed as statements of individual perceptions, and partly by the number of participants (n < 300), which meant that validation using methods such as factor analysis would not have been advisable [46]. Generalizability is the degree to which findings from the collected data can be generalized to a larger population [47]. With perception-based questions, it can be challenging to generalize data to a larger population. Our questionnaire is suitable for study environments similar to the one in our study; for other study environments, it could be adopted with modifications to suit the environment in question.

4. Results

The results from both samples are shown in Table 3. A descriptive analysis of the data revealed two important results:

- Students experienced a significant learning outcome;

- The degree of learning corresponded closely between students in the two samples.

Table 3.

Study samples 1 and 2 survey results.

4.1. Correspondence in the Degree of Learning Performance Exhibited in Samples 1 and 2

A Mann–Whitney U test was conducted to test the equality of sample 1 and sample 2. This test was selected because it is non-parametric and enables two independent groups to be compared without assuming that the values are normally distributed [48]. The null and alternative hypotheses were set as follows:

H0 (null hypothesis): Sample 1 and sample 2 are equal.

H1 (alternative hypothesis): Sample 1 and sample 2 are not equal.

The Mann–Whitney U test results, presented in Table 4, indicated that the null hypothesis could be rejected for only three variables (Q3, Q4, and Q6), which had a p-value < 0.05. For the remaining seven variables (Q1, Q2, Q5, Q7, Q8, Q9, and Q10), the null hypothesis was retained because their p-values were > 0.05.
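To make the per-item decision rule concrete, the following Python sketch implements a two-sided Mann–Whitney U test using average ranks for ties and the tie-corrected normal approximation, without a continuity correction. It is an illustrative sketch, not the SPSS routine used in the study; the helper names and the sample responses are hypothetical.

```python
import math
from collections import Counter

def average_ranks(values):
    """Rank values 1..N; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks

def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test with a tie-corrected normal approximation.

    Returns (U, p), where U = min(U1, U2). No continuity correction is applied.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over each group of t tied values.
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return min(u1, u2), 1.0  # every value identical: no evidence of a difference
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value from the standard normal
    return min(u1, u2), p

# Hypothetical 5-point responses to one item in each sample (NOT the study's data).
sample1 = [4, 5, 4, 3, 4, 5, 2, 4]
sample2 = [3, 4, 3, 2, 4, 3, 3, 5]
u, p = mann_whitney_u(sample1, sample2)
decision = "reject H0" if p < 0.05 else "retain H0"
print(f"U = {u:.1f}, p = {p:.3f} -> {decision}")
```

As a cross-check, `scipy.stats.mannwhitneyu(x, y, use_continuity=False, alternative="two-sided", method="asymptotic")` should give a closely matching p-value; note that recent SciPy versions report U for the first sample rather than min(U1, U2).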

Table 4.

Mann–Whitney U test results.

4.2. Combined Samples Results

4.2.1. Perceived Learning Performance

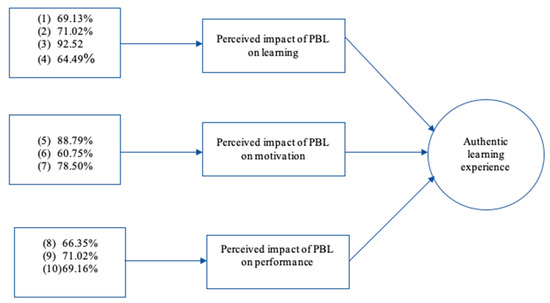

With regard to the perceived impact of PBL on learning, 69% of all students in both samples agreed or strongly agreed that the project-based assignment enabled them to gain an in-depth understanding of project management concepts, 71% agreed or strongly agreed that the assignment provided them with an opportunity to relate better to project management concepts, 92% agreed or strongly agreed that the assignment enabled them to recognize the triple tasks of digitalization projects, and 64% agreed or strongly agreed that the assignment provided them with an authentic learning experience.

With regard to the perceived impact of PBL on motivation, 88% of students agreed or strongly agreed that knowing that the assignment counted for 40% of their final grade had a high impact on their motivation, 60% agreed or strongly agreed that knowing that the output of the assignment would be used as a learning aid by future students had a positive impact on their motivation, and 78% agreed or strongly agreed that the working environment in their team was quite enjoyable and that this had an impact on their motivation.

With regard to the perceived impact of PBL on performance, 66% of students agreed or strongly agreed that, based on the experiences they had gained, they would be able to manage projects better in the future, 71% of students agreed or strongly agreed that their teams had produced an excellent learning aid in project management, and 69% of students agreed or strongly agreed that they would evaluate their team efforts as outstanding with regard to collaboration, proper communication, and knowledge sharing. A summary is presented in Figure 1.
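Each percentage above is the share of responses coded 4 (agree) or 5 (strongly agree). A minimal Python sketch of that calculation follows; the helper `percent_agree` and the response list are hypothetical illustrations, not the study's data.

```python
def percent_agree(scores, threshold=4):
    """Percentage of responses coded at or above `threshold` (4 = agree, 5 = strongly agree)."""
    if not scores:
        raise ValueError("no responses to summarize")
    agreeing = sum(1 for s in scores if s >= threshold)
    return 100.0 * agreeing / len(scores)

# Hypothetical responses to one item, pooled across both samples (NOT the study's data).
item_scores = [5, 4, 4, 3, 2, 4, 5, 3, 4, 1]
print(f"{percent_agree(item_scores):.0f}% agreed or strongly agreed")  # 60%
```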

Figure 1.

Combined samples results.

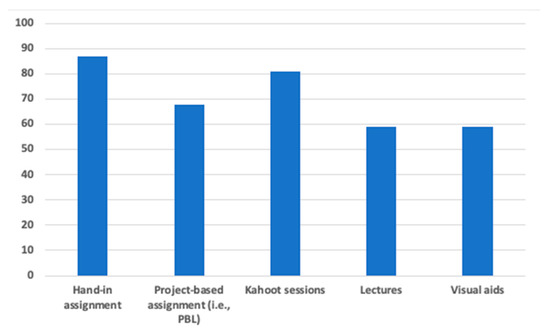

4.2.2. The Importance of Instructional Methods

The students were asked to rate how much each instructional method used in the course helped to facilitate their learning. The results indicated that all five instructional tools were perceived as significant in facilitating learning, with each tool preferred by at least 60% of the students, as shown in Figure 2. The project-based assignment was the third most preferred tool (68%), after hand-in assignments (87%) and Kahoot sessions (81%). Although students’ preferences varied considerably, they agreed on the importance of PBL in facilitating learning.

Figure 2.

Combined results for the importance of instructional methods.

5. Discussion

The purpose of our study was to measure student learning through a project-based assignment by utilizing performance measurement philosophy. Accordingly, a student evaluation tool was developed. The methodology built upon the idea of incorporating PBL as an additional instructional method for facilitating student learning and ultimately assessing the outcome by using the self-designed student evaluation tool.

Although performance evaluations based on students’ perceptions may not provide exact numbers for quantification, they provide feedback that can stimulate improvements in student learning; any good evaluation or assessment will provide some feedback [49]. Evaluating students using standardized assessments (i.e., examinations) is the most common form of evaluation, but the method offers little opportunity to understand students’ perceptions of the taught course [3]. A student may achieve a high grade without necessarily having sufficient real experience to apply their learning to real-life problems. This is consistent with Wiggins [49], who states that intellectual performance is not only about giving the correct answers. Therefore, evaluating students on the basis of exam results alone is challenging for the instructor, who may not be able to understand how the students perceived the course, whether they actually learned from it, or whether the intended outcome was achieved.

The evaluation conducted in our study produced results similar to those of existing studies, which have shown that using PBL as an instructional method can create authentic real-life experiences for students in higher education institutions (e.g., [12,13,21,23]). To succeed in the current job market, especially given the rapid digital transformation and the new competency requirements it brings, university graduates are expected to demonstrate more than the ability to pass exams with good grades. Perrone [3] also argues that quality work, rather than grades, is what students should be encouraged to showcase. Employers have reported shortfalls in graduates’ competencies, such as communication, decision-making, problem-solving, leadership, emotional intelligence, and social ethics skills [50]. This underlines the need to consider additional instructional methods that include practice in solving real-life problems. This recommendation is in line with studies finding that some management skills can only be gained through first-hand experience [51] and through learning that extends beyond the usual classroom environment [14].

Additionally, the findings from our study suggest that students prefer to learn through more interactive instructional methods. The methods most preferred by the participating students involved some form of communication, collaboration, and teamwork, as well as the direct involvement of both the instructor and the students. This indicates that effective learning may require some form of interaction and/or collaboration, in line with Kolb [52], whose work emphasized the importance of reflecting on new knowledge, building concepts from it, and testing it in real situations. By contrast, the least preferred instructional methods in our study were lectures and visual aids (e.g., watching animated video lessons on YouTube), which involved limited or no direct collaboration or direct involvement of student and instructor. This finding suggests that the acquisition, reflection, conceptualization, and testing (experience) of knowledge may best be achieved through interaction rather than individually. This is consistent with studies that advocate games and PBL for developing knowledge, as both require some level of interaction [12,13,15,16].

Furthermore, our findings suggest that relying on a single instructional method is not an effective way to facilitate student learning. Different methods stimulate different aspects of learning, so integrating several methods in a course or program can facilitate learning better than using one method alone. Although using different methods may create more work for students and teachers, it can improve students’ attitudes towards the course [53]. Similarly, Oliveira et al. [54] state that each person’s learning is unique, as is their ability to learn, and therefore recommend integrating various methods to cater for this uniqueness. To include an entire group, integrating several methods may thus be preferable, as some students are more familiar with certain methods than with others.

Numerous student evaluation tools exist (e.g., [8,9,10]), but not all of them are applicable in every scenario, because different contexts require different criteria to be examined and measured. Teachers who would like feedback on the teaching of their courses with regard to their students’ learning outcomes can therefore either build on an existing evaluation tool with a few modifications or design and implement their own.

6. Conclusions

Our aim in this paper has been to examine how performance measurement methods can be used to evaluate the effectiveness of project-based learning (PBL) in higher education institutions. We addressed this aim by answering two research questions:

- Research Question 1: Why should we measure the effectiveness of instructional tools (e.g., PBL) in higher learning institutions?

Conclusion: Measuring student learning at university level helps identify whether students are ready to succeed in a dynamic and competitive business market. Furthermore, employers have reported that employees lack the competencies needed to solve real-life problems; our performance evaluation study indicated that PBL can have a positive long-term impact on students, even after they have graduated, and can therefore be considered one way of building real-life competencies such as communication, collaboration, emotional intelligence, and problem solving. The study further indicated that students preferred PBL as an instructional tool because of the level of interaction it provided and the positive attitude towards learning it fostered.

- Research Question 2: How can instructors measure the impact of instructional tools (e.g., PBL) as an effort towards facilitating student learning?

Conclusion: For instructors who aim to obtain feedback on the outcomes of their courses, we recommend a four-step measurement process built around the following questions:

1. What is the intended learning outcome? In this step, identify the overall outcome the course intends to achieve (e.g., create an authentic learning experience, create good communicators, develop sustainable business skills).

2. How do you intend to achieve this outcome? Decide on the instructional methods to achieve the overall outcome. Although PBL was evaluated in this study, this process is applicable to any instructional tool. Because not every method supports every outcome, it is important to ensure that the chosen method facilitates the intended outcome rather than hinders it.

3. What are the criteria to be measured? Determine which constructs/criteria are to be examined. Numerous studies of criteria for student evaluations have been conducted, so revisiting existing evaluations and using them as a foundation for modifications is worth considering.

4. What specific questions do you want students to evaluate? Develop the scale questions (measurement scales) for each construct and ensure that all questions relate to attaining the overall outcome.
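As an illustration, the four steps could be recorded in a small evaluation plan that flags constructs from step 3 without any scale questions and questions filed under undefined constructs (step 4). The sketch also adds a reliability check on each construct's Likert items using Cronbach's alpha, the coefficient discussed by Gliem and Gliem [44]; this check is a common complement to, not one of, the four steps. Everything here is a minimal sketch under our own assumptions: the names `EvaluationPlan` and `cronbach_alpha`, the example constructs and questions, and the toy answers are hypothetical and are not the instrument used in this study.

```python
import statistics
from dataclasses import dataclass, field


@dataclass
class EvaluationPlan:
    """Hypothetical record of the four-step measurement process."""
    outcome: str            # step 1: intended learning outcome
    methods: list[str]      # step 2: instructional methods
    constructs: list[str]   # step 3: criteria to be measured
    # step 4: scale questions, grouped by the construct each one measures
    questions: dict[str, list[str]] = field(default_factory=dict)

    def unlinked_constructs(self) -> list[str]:
        """Step-3 constructs that have no scale questions yet."""
        return [c for c in self.constructs if not self.questions.get(c)]

    def orphan_questions(self) -> list[str]:
        """Questions filed under a construct that step 3 never defined."""
        return [q for c, qs in self.questions.items()
                if c not in self.constructs for q in qs]


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for one construct's scale.

    `responses` has one row per student and one column per question
    (e.g., 5-point Likert ratings).
    """
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need at least two questions, answered by every respondent")
    # Sample variances of each question and of the summed scale score.
    item_vars = [statistics.variance(col) for col in zip(*responses)]
    total_var = statistics.variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("summed scores have zero variance")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


plan = EvaluationPlan(
    outcome="create an authentic learning experience",
    methods=["project-based assignment"],
    constructs=["motivation", "real-life relevance"],  # hypothetical constructs
    questions={"motivation": ["The project made me want to learn more.",
                              "I put extra effort into the project."]},
)
print(plan.unlinked_constructs())  # ['real-life relevance']

# Hypothetical toy answers: four students x two motivation questions.
print(round(cronbach_alpha([[4, 5], [3, 3], [5, 5], [2, 3]]), 2))  # 0.94
```

A low alpha would suggest that the questions written in step 4 do not measure a single construct consistently, which is a cue to revise them before the survey is rolled out.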

Author Contributions

Conceptualization, B.N., B.H., and B.A.; methodology, B.N., B.A., and B.H.; validation, B.N., B.H., and B.A.; formal analysis, B.N., B.A., and B.H.; investigation, B.H. and B.N.; resources including accessibility, B.H.; data curation, B.H.; writing—original draft preparation, B.N.; writing—review and editing, B.H. and B.A.; visualization, B.N.; supervision, B.H. and B.A.; project administration, B.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Spitzer, D.R. Transforming Performance Measurement: Rethinking the Way We Measure and Drive Organizational Success, Illustrated ed.; Amacom: New York, NY, USA, 2007; p. 288.

- Bedggood, R.E.; Donovan, J.D. University performance evaluations: What are we really measuring? Stud. High. Educ. 2012, 37, 825–842.

- Perrone, V. Expanding Student Assessment; Association for Supervision and Curriculum Development: Alexandria, VA, USA, 1991; pp. 9–45.

- Bedggood, R.E.; Pollard, R. Customer satisfaction and teacher evaluation in higher education. In Global Marketing Issues at the Turn of the Millennium: Proceedings of the Tenth Biennial World Marketing Congress, 1st ed.; Spotts, H.E., Meadow, H.L., Scott, S.M., Eds.; Cardiff Business School: Cardiff, Wales, UK, 2001.

- Hildebrand, M.; Wilson, R.C.; Dienst, E.R. Evaluating University Teaching; Center for Research and Development in Higher Education, University of California: Berkeley, CA, USA, 1971.

- Richardson, J.T.E. Instruments for obtaining student feedback: A review of the literature. Assess. Eval. High. Educ. 2005, 30, 387–415.

- Marsh, H.W. Students’ evaluations of university teaching: Research findings, methodological issues, and directions for future research. Int. J. Educ. Res. 1987, 11, 253–388.

- SIRS Research Report 2. Student Instructional Rating System, Stability of Factor Structure; Michigan State University, Office of Evaluation Services: East Lansing, MI, USA, 1971.

- Ramsden, P. A performance indicator of teaching quality in higher education: The course experience questionnaire. Stud. High. Educ. 1991, 16, 129–150.

- Jimaa, S. Students’ rating: Is it a measure of an effective teaching or best gauge of learning? Proc. Soc. Behav. Sci. 2013, 83, 30–34.

- Clanchy, J.; Ballard, B. Generic skills in the context of higher education. High. Educ. Res. Dev. 1995, 14, 155–166.

- Bell, S. Project-based learning for the 21st century: Skills for the future. Clear. House J. Educ. Strateg. Issues Ideas 2010, 83, 39–43.

- Chang, C.-C.; Kuo, C.-G.; Chang, Y.-H. An assessment tool predicts learning effectiveness for project-based learning in enhancing education of sustainability. Sustainability 2018, 10, 3595.

- Pombo, L.; Marques, M.M. The potential educational value of mobile augmented reality games: The case of EduPARK app. Educ. Sci. 2020, 10, 287.

- Hansen, P.K.; Fradinho, M.; Andersen, B.; Lefrere, P. Changing the way we learn: Towards agile learning and co-operation. In Proceedings of the Learning and Innovation in Value Added Networks—The Annual Workshop of the IFIP Working Group 5.7 on Experimental Interactive Learning in Industrial Management, ETH Zurich, Switzerland, 25–26 May 2009.

- Prensky, M. Digital game-based learning. Comput. Entertain. 2003, 1, 1–4.

- Grant, M.M. Getting a grip on project-based learning: Theory, cases and recommendations. Middle Sch. Comput. Technol. J. 2002, 5, 83.

- Harlen, W.; James, M. Assessment and learning: Differences and relationships between formative and summative assessment. Assess. Educ. Princ. Policy Pract. 1997, 4, 365–379.

- Entwistle, N.; Smith, C. Personal understanding and target understanding: Mapping influences on the outcome of learning. Br. J. Educ. Psychol. 2010, 72, 321–342.

- Espeland, V.; Indrehus, O. Evaluation of students’ satisfaction with nursing education in Norway. J. Adv. Nurs. 2003, 42, 226–236.

- Boyle, P.; Trevitt, C. Enhancing the quality of student learning through the use of subject learning plans. High. Educ. Res. Dev. 1997, 16, 293–308.

- Chen, C.J.; Lin, B.W. The effects of environment, knowledge attribute, organizational climate and firm characteristics on knowledge sourcing decisions. R D Manag. 2004, 34, 137–146.

- Boaler, J. Mathematics for the moment, or the millennium? Educ. Week 1999, 17, 30–34.

- Petrosino, A.J. The Use of Reflection and Revision in Hands-on Experimental Activities by at-Risk Children. Ph.D. Thesis, Vanderbilt University, Nashville, TN, USA, 1988. Unpublished work.

- Andersen, B.; Cassina, J.; Duin, H.; Fradinho, M. The Creation of a Serious Game: Lessons Learned from the PRIME Project. In Proceedings of the Learning and Innovation in Value Added Networks: Proceedings of the 13th IFIP 5.7 Special Interest Group Workshop on Experimental Interactive Learning in Industrial Management, ETH Zurich, Center for Enterprise Sciences, Zurich, Switzerland, 24–25 May 2009; pp. 99–108.

- Bititci, U.; Garengo, P.; Dörfler, V.; Nudurupati, S. Performance measurement: Challenges for tomorrow. Int. J. Manag. Rev. 2012, 14, 305–327.

- Lebas, M.J. Performance measurement and performance management. Int. J. Prod. Econ. 1995, 41, 23–35.

- Choong, K.K. Has this large number of performance measurement publications contributed to its better understanding? A systematic review for research and applications. Int. J. Prod. Res. 2013, 52, 4174–4197.

- Andersen, B.; Fagerhaug, T. Performance Measurement Explained: Designing and Implementing Your State-of-the-Art System; ASQ Quality Press: Milwaukee, WI, USA, 2002.

- Hughes, M.D.; Bartlett, R.M. The use of performance indicators in performance analysis. J. Sports Sci. 2002, 20, 739–754.

- Fortuin, L. Performance indicators—Why, where and how? Eur. J. Oper. Res. 1988, 34, 1–9.

- Euske, K.; Lebas, M.J. Performance measurement in maintenance depots. Adv. Manag. Account. 1999, 7, 89–110.

- Winberg, T.M.; Hedman, L. Student attitudes toward learning, level of pre-knowledge and instruction type in a computer-simulation: Effects on flow experiences and perceived learning outcomes. Instr. Sci. 2008, 36, 269–287.

- Fornell, C. A national customer satisfaction barometer: The Swedish experience. J. Mark. 1992, 56, 6–21.

- Anderson, E.W.; Fornell, C.; Lehmann, D.R. Customer satisfaction, market share, and profitability: Findings from Sweden. J. Mark. 1994, 58, 53–66.

- Guolla, M. Assessing the teaching quality to student satisfaction relationship: Applied customer satisfaction research in the classroom. J. Mark. Theory Pract. 1999, 7, 87–97.

- Wiers-Jenssen, J.; Stensaker, B.; Grogaard, J. Student-satisfaction: Towards an empirical decomposition of the concept. Qual. High. Educ. 2002, 8, 183–195.

- Lo, C.C. How student satisfaction factors affect perceived learning. J. Scholarsh. Teach. Learn. 2010, 10, 47–54.

- Hussein, B. A blended learning approach to teaching project management: A model for active participation and involvement: Insights from Norway. Educ. Sci. 2015, 5, 104–125.

- Hussein, B.; Ngereja, B.; Hafseld, K.H.J.; Mikhridinova, N. Insights on using project-based learning to create an authentic learning experience of digitalization projects. In Proceedings of the 2020 IEEE European Technology and Engineering Management Summit (E-TEMS), Dortmund, Germany, 5–7 March 2020; pp. 1–6.

- Saunders, M.; Lewis, P. Doing Research in Business & Management: An Essential Guide to Planning Your Project; Pearson: Harlow, UK, 2012.

- Baruch, Y. Response Rate in academic studies—A comparative analysis. Hum. Relat. 1999, 52, 421–438.

- Giles, T.; Cormican, K. Best practice project management: An analysis of the front end of the innovation process in the medical technology industry. Int. J. Inf. Syst. Proj. Manag. 2014, 2, 5–20.

- Gliem, J.A.; Gliem, R.R. Calculating, interpreting, and reporting Cronbach’s alpha reliability coefficient for Likert-type scales. In Proceedings of the Midwest Research-to-Practice Conference in Adult, Continuing, and Community Education, Columbus, OH, USA, 8–10 October 2003.

- Gravetter, F.J.; Forzano, L.-A.B. Research Methods for the Behavioral Sciences, 4th ed.; Wadsworth: Belmont, CA, USA, 2012.

- Yong, A.G.; Pearce, S. A Beginner’s guide to factor analysis: Focusing on exploratory factor analysis. Tutor. Quant. Methods Psychol. 2013, 9, 79–94.

- Rentz, J.O. Generalizability theory: A comprehensive method for assessing and improving the dependability of marketing measures. J. Mark. Res. 1987, 24, 19–28.

- Mann, H.B.; Whitney, D.R. On a test of whether one of two random variables is stochastically larger than the other. Ann. Math. Stat. 1947, 18, 50–60.

- Wiggins, G.P. The Jossey-Bass Education Series. Assessing Student Performance: Exploring the Purpose and Limits of Testing; Jossey-Bass: San Francisco, CA, USA, 1993.

- Nair, C.S.; Patil, A.; Mertova, P. Re-engineering graduate skills—A case study. Eur. J. Eng. Educ. 2009, 34, 131–139.

- Barker, R. No, management is not a profession. Harv. Bus. Rev. 2010, 88, 52–60.

- Kolb, D.A. Experiential Learning: Experience as the Source of Learning and Development; Prentice-Hall: Englewood Cliffs, NJ, USA, 1984.

- Smith, G. How does student performance on formative assessments relate to learning assessed by exams? J. Coll. Sci. Teach. 2007, 36, 28–34.

- Oliveira, M.F.; Andersen, B.; Pereira, J.; Seager, W.; Ribeiro, C. The use of integrative framework to support the development of competences. In Proceedings of the International Conference on Serious Games Development and Applications, Berlin, Germany, 9–10 October 2014; pp. 117–128.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).