Introducing Tagasaurus, an Approach to Reduce Cognitive Fatigue from Long-Term Interface Usage When Storing Descriptions and Impressions from Photographs

Abstract

1. Introduction

Background Literature

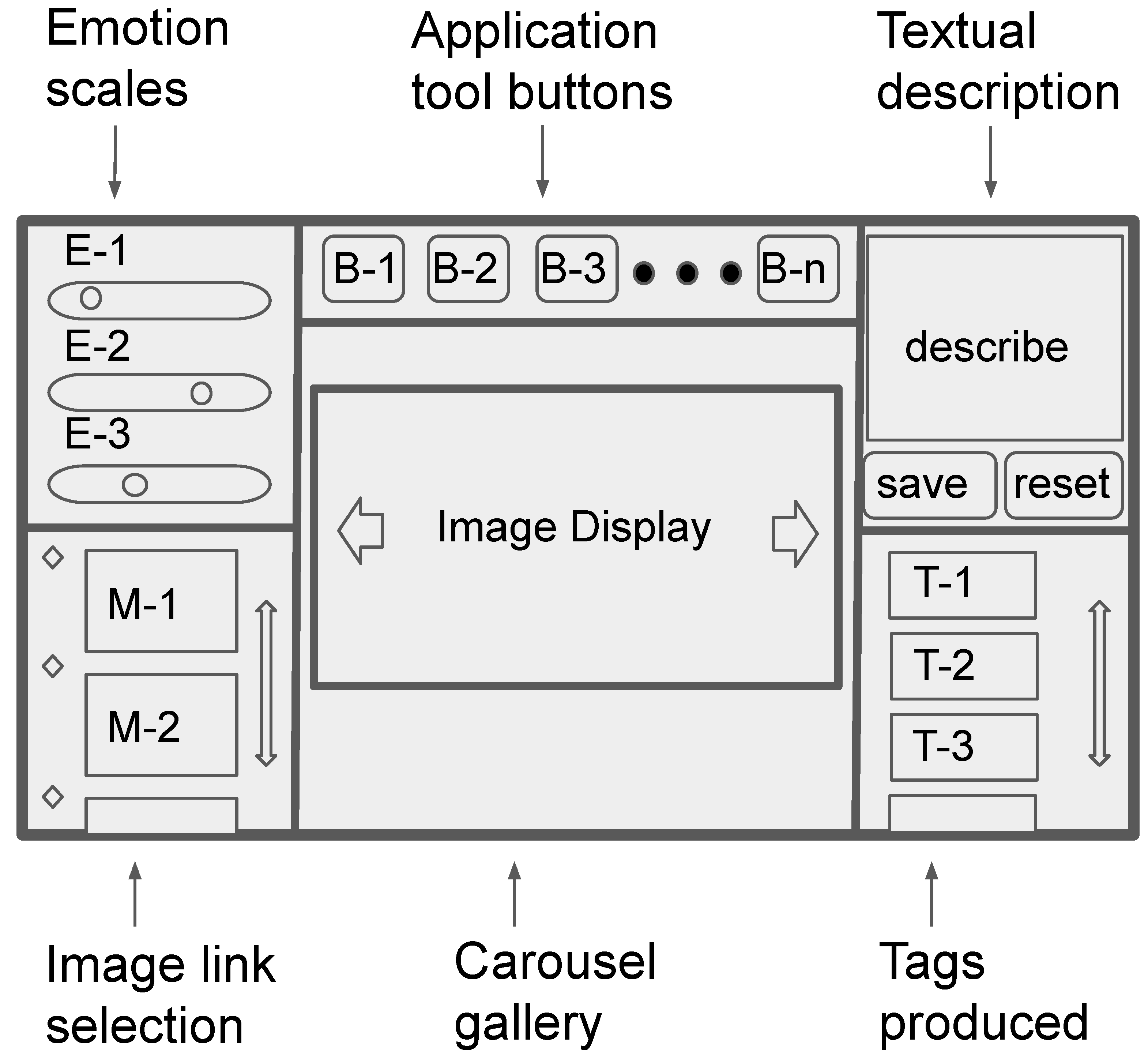

2. Materials and Methods

Algorithm 1. Tagasaurus Item Addition

Score Metrics

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| UI | User Interface |
| JS | JavaScript |
| ML | Machine Learning |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mantzaris, A.V.; Pandohie, R.; Hopwood, M.; Pho, P.; Ehling, D.; Walker, T.G. Introducing Tagasaurus, an Approach to Reduce Cognitive Fatigue from Long-Term Interface Usage When Storing Descriptions and Impressions from Photographs. Technologies 2021, 9, 45. https://doi.org/10.3390/technologies9030045
