1. Introduction

Social media occupies a significant part of people’s everyday lives, allowing users to express and share their views regarding life and their timelines. Social media platforms have also become a key medium that people use daily to voice their opinions on public matters or to access information. As a result, multiple online social networks are formed, comprising users with common interests. Unfortunately, in the current threat landscape, malicious social media users exist who wish to exploit these social networks as a tool to achieve their objectives. One notable example is the 2016 US presidential election, in which Russian users used fake identities to populate Twitter in order to influence users’ opinions about the candidates. A year later, fake news was spread about a French presidential candidate during the French presidential election, creating a cyberattack framework for dividing, destabilizing, and deceiving users and public opinion [1].

Nowadays, online social media suffers from the proliferation of fake accounts, which are created either manually or automatically. Fake accounts are used as a vector to interact with the population of a social network, aiming to deceive or mislead them by posting malicious or misleading content. Such activity includes (i) posting shortened URLs that lead to malware and tricking innocent users into clicking them, (ii) identity theft, (iii) data leakage, and (iv) generic social engineering techniques. Additionally, malicious social media users create and spread rumors, which may affect businesses and society on a large scale. To ensure that fraudulent accounts remain on the social network for longer, fake users consistently follow a deliberate strategy of imitating legitimate profiles, i.e., mimicking real users. This impairs the ability of social media platforms to identify these accounts and stop the distribution of malicious content from them.

Undoubtedly, identifying and eliminating fake accounts preserves the integrity of social media and can reduce malicious behavior. As such, a variety of techniques have been proposed in the literature to detect fake accounts on Twitter [2,3]. Amongst them, machine learning has been used to optimize and strengthen the detection of fake accounts. Considering that the volume of data generated by social media constantly grows, there is a need for efficient and robust detection of malicious behavior on these platforms, which will make them more secure and reliable for users. Nonetheless, threat actors have updated their tactics and have started to target the machine learning algorithms themselves with adversarial attacks [4]. Adversarial attacks, which can take the form of poisoning, evasion, or mixed-mode attacks, weaken the reliability and robustness of fake profile detection. Therefore, there is a continuing need for an in-depth understanding of the effect of adversarial attacks on machine learning based detection.

To this end, this work studies the feasibility of adversarial attacks on fake Twitter account detection systems that are based on supervised machine learning. We propose and implement two adversarial attacks that aim to poison a classifier used to detect fake accounts on Twitter. We evaluate our attacks in two different scenarios, in which they are mounted against a fake account detection classifier trained on a real dataset. We also propose and evaluate a countermeasure that mitigates the adversarial attacks proposed herein.

The contributions of our work can be summarised as follows:

We mount adversarial attacks against a supervised machine learning classifier that detects fake accounts on Twitter and has been trained on a real dataset. We explore two types of attacks: one in which existing entries in the training dataset are poisoned, and one in which new malicious entries are appended to the dataset.

We study the effect of these adversarial attacks on the classification accuracy of the detection system in two scenarios, i.e., static adversarial attacks and dynamic adversarial attacks.

We propose and evaluate a countermeasure that protects against these attacks by detecting potential poisoning of the training dataset. We demonstrate that our countermeasure is able to mitigate the types of adversarial attacks that we focus on in this work.

The rest of the paper is organized as follows:

Section 2 includes related work.

Section 3 describes our methodology.

Section 4 provides our experimental results.

Section 5 concludes the paper and provides pointers for future work.

2. Related Work

Profiling a Twitter user is possible by analyzing the tweets and feeds to which the user is subscribed; however, this process is not trivial [5]. Past literature has focused on profiling users based on their social traffic, on comparing their profile description or demographic data with the topic, or even on using frequent patterns along with term frequency [6], a process that needs to be completed in a timely manner [7]. Other studies focused on detecting malicious Twitter content arising from malicious URLs posted by non-legitimate users. According to [8], as Twitter had a limit of 140 characters per tweet (the current limit is 280 characters), malicious users shortened URLs, which masks the destination and facilitates phishing links posted alongside a popular hashtag or in replies with enticing lexical content.

Machine learning has been used in the past to detect fake accounts on social media. On Twitter, detecting fake accounts involves building models based on the user profile and behavior, as well as on language processing. Fake accounts can be spotted in various ways, such as finding posts with malicious content, an unbalanced ratio between followers and followed accounts, their social behavior when posting new information or reproducing posted content, and their social network interactions. These data can be used as features to train and test machine learning models that identify fake accounts on Twitter.
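As a rough illustration of how such profile metadata can be turned into classifier features, the sketch below derives a follower-to-following ratio and a retweet share for a single account. The `Account` record, its field names, and the derived features are hypothetical choices made for illustration; they are not the feature set used by any of the cited works.

```python
import math
from dataclasses import dataclass


@dataclass
class Account:
    # Hypothetical metadata fields, chosen only for this illustration.
    following: int   # number of accounts this user follows
    followers: int   # number of accounts following this user
    updates: int     # total number of posts
    retweets: int    # how many of those posts are retweets


def profile_features(acc: Account) -> dict:
    """Derive simple profile-based features of the kind discussed above."""
    # Adding 1 avoids division by zero for accounts that follow nobody.
    ratio = acc.followers / (acc.following + 1)
    # Share of activity that merely reproduces content posted by others.
    retweet_share = acc.retweets / acc.updates if acc.updates else 0.0
    return {
        "follower_following_ratio": ratio,
        "retweet_share": retweet_share,
        # log1p compresses heavy-tailed activity counts.
        "log_updates": math.log1p(acc.updates),
    }


# An account that follows many users, has few followers and mostly retweets.
print(profile_features(Account(following=999, followers=10, updates=200, retweets=180)))
```

A very low ratio combined with a high retweet share is the kind of unbalanced profile the paragraph above describes; a real detector would learn how much weight such features deserve.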

In this regard, the majority of past literature analyzes user activities using attributes based on the user profile, such as friends and followers, region, and language features. Related work also performs an elaborate investigation of the content of posts, the users’ creation time, and the users’ responsiveness, aiming to build a timeline of user activity that strengthens the detection process. Stringhini et al. [9] analyzed how spammers operate on social media platforms, reporting more than 15,000 suspicious accounts. Yang et al. [10] proposed taxonomy criteria for detecting Twitter spammers and provided experiments showing that their designed measures achieve higher detection rates. Liu et al. [11] developed a hybrid model for detecting spammers on Weibo, a Chinese online platform resembling Twitter, by combining behavioral, network, and content-based features.

Moreover, different algorithms have been proposed to identify malicious Twitter accounts as quickly as possible. Ensemble learning [12], which makes predictions by combining different models, has become popular. One of the most popular choices is Random Forest [13], despite the fact that it is sensitive to noise [14]. Another popular option is AdaBoost, which uses an iterative approach [15] to learn from the mistakes of weak classifiers and strengthen them [16]. Miller et al. [17] used anomaly detection techniques for spam detection on Twitter, applying clustering to tweet text and a real-time tweet stream. Similarly, Song et al. [18] applied clustering [19] to group accounts sharing similar name-based features, identifying malicious accounts created within a short period of time. Finally, Im et al. [20] aimed to automatically create a machine learning model for detecting fake Twitter accounts, relying on features of users’ profiles, such as language and region, combined with the accounts’ following and follower relationships, as well as the tweet or retweet feature of Twitter.

Detection of fake accounts with machine learning algorithms is nonetheless prone to adversarial attacks, in which carefully crafted input data, either at training or at test time, can subvert the predictions of the models [21]. There are various types of attacks against machine learning. Evasion attacks, in which the attacker uses malicious samples at test time to evade detection, are the more popular; in poisoning attacks, the attacker injects carefully crafted data into the training dataset, degrading the model’s performance through false negatives [4]. The attack type selected depends on the attacker’s knowledge of the system and its data. However, poisoning attacks are often more effective, as injecting misleading samples directly into the training data can cause the system to produce more classification errors. In addition, poisoning attacks target the training data, and since machine learning models need periodic retraining, poisoning attacks can be used more often and in multiple steps [21].

Prior work on data poisoning has mostly focused on attacking spam filters, malware detection, collaborative filtering, and sentiment analysis. Shafahi et al. [22] proposed a training-time attack that manipulates test-time behavior by crafting poison images that collide with a target image, demonstrating the power of these attacks in transfer learning scenarios. Wang et al. [23] performed a systematic investigation of data poisoning attacks on online learning, reporting that Incremental and Interval attacks perform better than Label Flip attacks. Brendel et al. [24] introduced the Boundary Attack, a decision-based attack that requires no hyperparameter tuning and does not rely on substitute models, suggesting that such attacks are highly relevant for many real-world deployed machine learning systems. Furthermore, Zhang et al. [25] proposed a poisoning attack against federated learning systems, showing that it can be launched by any internal participant mimicking other participants’ training samples.

Chen et al. [26] explored an automated approach for constructing malware samples under three different threat models, significantly reducing the detection accuracy of machine learning classifiers. With regard to social media, Yu et al. [27] conducted adversarial poisoning attacks on the training data using two methods, namely: (i) relabeling existing data and (ii) adding crafted data as new entries. The crafted data can resemble a legitimate user with a decent number of followers and friends, while the crafted tweet can mimic a legitimate one by avoiding any sensitive words or hashtags. Moreover, the crafted data can be marked as available for future retraining of the machine learning model, reinforcing the poisoning of the dataset.

A starting point for defending against adversarial attacks is to hide important information about the system from the adversary. Although determining the features used by the classifier is not difficult, manipulating all of those features may be impossible. Another defense strategy is randomizing the system’s feedback, as this makes it harder for the adversary to gain information, especially on Twitter, where the adversary and benign users use the same channel [21]. Yet another strategy is to create an adversarial classifier and train it with adversarial attack patterns. In that case, as with any classifier, there is a constant need for retraining, both to avoid poisoning attacks and to adjust to new adversarial attack patterns.

Laishram et al. [28] examined poisoning attacks against Support Vector Machine algorithms and proposed a method, Curie, to protect the classifier from such attacks. Curie works as a filter before the buffered data are used to retrain the classifier, removing most of the malicious data points from the training data. Nevertheless, an attacker might bypass this detection system using a combination of poisoning and evasion attacks. Biggio et al. [29] showed experimentally that bagging is a general and effective technique for addressing poisoning attacks in specific applications, such as spam filtering and web-based intrusion detection systems. They noted that using a good kernel density estimator requires more effort from the adversary, who may need to control a much larger percentage of the training data to strengthen the attack. Paudice et al. [30] proposed an outlier detection based scheme capable of detecting the attack points against linear classifiers. However, in less aggressive attacks, such as label flipping, detection can become infeasible, since the attack points are on average closer to the genuine points.

3. Methodology

In this section we present our approach to impair the performance of machine learning based detection of fake accounts on Twitter. In doing so we present different scenarios, with the use of dynamic and static thresholds, where adversarial machine learning attacks are mounted against a classifier that is trained on a real dataset in order to be used for the fake account detection.

This work assumes that the attacker has the ability to poison the training set of the machine learning classifier that a defender uses to identify and block fake accounts on Twitter. The goal of the defender is to achieve the highest accuracy in detecting fake accounts on Twitter with machine learning. The goal of the attacker is to: (i) introduce confusion into the classifier, thus decreasing its performance (i.e., increasing false positives and false negatives), and (ii) make the minimum possible modifications to the training set.

We assume that the attacker poisons the training dataset by: (i) randomly relabeling data, i.e., changing the label of an entry from the fake account class to the legitimate class, or (ii) adding new crafted entries that follow the format of the tweets in the dataset. We also assume that the attacker is bound by a poisoning threshold, i.e., the percentage of records that are randomly poisoned. The attacker’s goal is to stay within this poisoning threshold in order to remain undetected while reducing the classifier’s accuracy as much as possible. Finally, we assume that the detection mechanism is capable of detecting whether the training dataset has been poisoned beyond a specific threshold.

3.1. Adversarial Attacks

The first type of adversarial attack that we examine in this work is a relabeling attack, in which the attacker relabels entries that belong to fake identities in the training set [31]. The outcome of the attack is a poisoned training set that impairs the classification accuracy of the classifier. As per Algorithm 1, the poisoning function aims to lower the classifier’s accuracy to less than 60% in every data frame, with the threshold initialized to 1. The threshold is then adjusted until the poisoning target is reached. Specifically, the threshold is increased by three if the classifier’s accuracy is much higher than 60%, and by one if the accuracy is higher than but close to 60% and the threshold is greater than six. In both scenarios, we consider this to be a white-box attack, i.e., the attacker has knowledge of the targeted system and its data.

Algorithm 1: Poisoning Relabeling Algorithm.

![Technologies 08 00064 i001]()
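The threshold-adjustment loop described for Algorithm 1 can be sketched as follows. This is a hedged reconstruction from the prose, not the authors' code: label 1 is assumed to mean "fake" and 0 "legitimate"; `evaluate` is a hypothetical callable standing in for "train the classifier on the poisoned set and measure accuracy on a clean test set"; the 10-point `margin` is an assumed reading of "close to 60%"; and because the text does not say what happens when accuracy is close to the target but the threshold is six or less, the sketch assumes the coarse step of three in that case.

```python
import random
from typing import Callable, List, Tuple

# (feature vector, label), with label 1 = fake account and 0 = legitimate (assumed)
Record = Tuple[list, int]


def relabel(train: List[Record], threshold_pct: int, rng: random.Random) -> List[Record]:
    """Flip up to threshold_pct% of the records from the fake to the legitimate class."""
    poisoned = list(train)
    fake_idx = [i for i, (_, label) in enumerate(poisoned) if label == 1]
    budget = min(len(fake_idx), len(poisoned) * threshold_pct // 100)
    for i in rng.sample(fake_idx, budget):
        features, _ = poisoned[i]
        poisoned[i] = (features, 0)  # a fake account is now labelled legitimate
    return poisoned


def adaptive_relabel_attack(
    train: List[Record],
    evaluate: Callable[[List[Record]], float],  # hypothetical train-and-test callable
    target: float = 0.60,
    margin: float = 0.10,  # hypothetical meaning of "close to 60%"
    max_threshold: int = 100,
    seed: int = 0,
) -> Tuple[List[Record], int, float]:
    """Raise the poisoning threshold until accuracy drops below `target`."""
    rng = random.Random(seed)
    threshold = 1  # initial threshold, as in the text
    while True:
        poisoned = relabel(train, threshold, rng)
        acc = evaluate(poisoned)
        if acc < target or threshold >= max_threshold:
            return poisoned, threshold, acc
        if acc - target <= margin and threshold > 6:
            threshold += 1  # close to the target: fine-grained step
        else:
            threshold += 3  # far from the target (assumed default): coarse step
        threshold = min(threshold, max_threshold)


if __name__ == "__main__":
    data = [([i], i % 2) for i in range(200)]
    original = [label for _, label in data]

    def toy_accuracy(poisoned: List[Record]) -> float:
        # Toy stand-in: fraction of labels that still match the clean labels.
        return sum(p == o for (_, p), o in zip(poisoned, original)) / len(original)

    _, t, acc = adaptive_relabel_attack(data, toy_accuracy)
    print(t, acc)
```

In a real evaluation, `evaluate` would retrain the detector on each candidate poisoned set, which is why the coarse-then-fine step schedule is useful: it limits the number of costly retraining runs.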

The second adversarial attack proposed herein is the new entries attack. In this attack, we assume that the attacker adds new entries to the dataset without disrupting its pre-existing structure, with the aim of decreasing the classifier’s performance. To this end, the attacker copies a subset of the dataset, whose size is set by the poisoning threshold, and modifies the author, account type, region, updates, and retweet status accordingly. This subset of newly crafted entries is then appended to the original dataset before the classifier is trained.

More specifically, the poisoning is semi-random and follows the approach described in Algorithm 2. A row is selected at random, and its account name is replaced with a random author taken from another row. The region is set to Unknown, the account type is replaced with a legitimate one, the updates field is set to the value of the randomly selected row, and the retweet field is set to zero.

Algorithm 2: Poisoning New Entries Algorithm.

![Technologies 08 00064 i002]()
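A minimal sketch of the new entries attack, as described for Algorithm 2, is shown below. It assumes rows are dictionaries with hypothetical keys `author`, `region`, `account_type`, `updates`, and `retweet`, and that the legitimate account type is the hypothetical string `"legitimate"`. Because the text is ambiguous about which row supplies the updates value, the sketch assumes it is copied from the same donor row that supplies the author.

```python
import copy
import random
from typing import Dict, List

Row = Dict[str, object]  # hypothetical row layout, keyed by column name


def craft_new_entries(rows: List[Row], threshold_pct: int,
                      legit_type: str = "legitimate", seed: int = 0) -> List[Row]:
    """Return the dataset with threshold_pct% crafted rows appended."""
    rng = random.Random(seed)
    n_new = len(rows) * threshold_pct // 100
    crafted = []
    for _ in range(n_new):
        base = copy.deepcopy(rows[rng.randrange(len(rows))])  # random row pointer
        donor = rows[rng.randrange(len(rows))]                 # another random row
        base["author"] = donor["author"]    # borrow an existing author name
        base["region"] = "Unknown"
        base["account_type"] = legit_type   # pose as a legitimate account
        base["updates"] = donor["updates"]  # assumption: taken from the donor row
        base["retweet"] = 0
        crafted.append(base)
    # Original rows are left untouched; crafted rows are appended at the end.
    return rows + crafted
```

Deep-copying the selected row keeps the pre-existing structure of the dataset intact, which matches the stated aim of adding entries without disrupting existing ones.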

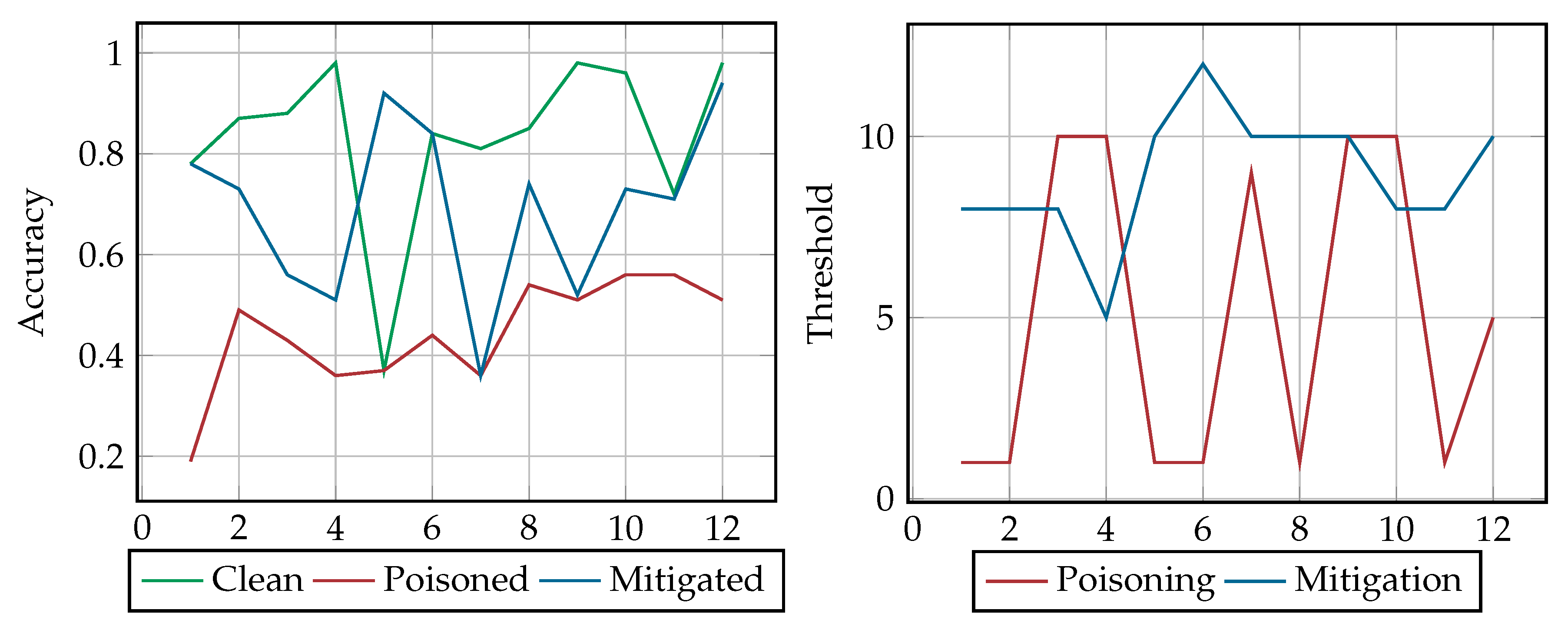

Finally, in this work we study adversarial attacks that are mounted with static and dynamic thresholds. In the static scenario, the training dataset is available as a whole, and therefore training and poisoning take place with a static threshold. In contrast, in the dynamic scenario we assume that the data arrive in chunks; the classifier is therefore trained periodically and independently on each chunk, and the threshold used is dynamic. This could happen when the defender cannot process all the available Twitter data as they appear, and therefore the detection engine is trained on the latest chunks of data. This would allow the defender to periodically adjust the detection engine to match the trends of the tweets from malicious and benign users.
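The difference between the two scenarios can be sketched as a difference in how the training data are handed to the attack. Here `attack` is a hypothetical callable (for example, an adaptive relabeling loop) that poisons one training set and returns the threshold it settled on together with the resulting accuracy; the chunk size is likewise an assumed parameter.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

# Hypothetical attack interface: given one training set, return the poisoning
# threshold it settled on and the accuracy obtained after poisoning.
Attack = Callable[[Sequence], Tuple[int, float]]


def chunks(data: Sequence, size: int) -> Iterator[Sequence]:
    """Yield consecutive chunks, mimicking training data that arrives over time."""
    for start in range(0, len(data), size):
        yield data[start:start + size]


def static_scenario(data: Sequence, attack: Attack) -> List[Tuple[int, float]]:
    # The whole training set is available: one poisoning pass, one threshold.
    return [attack(data)]


def dynamic_scenario(data: Sequence, chunk_size: int,
                     attack: Attack) -> List[Tuple[int, float]]:
    # Each chunk is poisoned and used to retrain the classifier independently,
    # so a separate threshold is selected for every chunk.
    return [attack(chunk) for chunk in chunks(data, chunk_size)]


if __name__ == "__main__":
    # Toy attack that simply reports the chunk size as its "threshold".
    toy_attack = lambda chunk: (len(chunk), 0.5)
    print(static_scenario(list(range(10)), toy_attack))
    print(dynamic_scenario(list(range(10)), 4, toy_attack))
```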

3.2. Defensive Mechanisms

In this work we use AdaBoost for the detection of fake Twitter accounts. AdaBoost is an ensemble boosting algorithm that combines multiple weak classifiers, typically short decision trees, to increase classification accuracy. The weak classifiers are trained sequentially, and at each iteration the weights of the incorrectly classified observations in the training dataset are increased, so that subsequent classifiers focus on them. AdaBoost was used in this work as it is, in general, not prone to overfitting and noise [16,32], which is present in our dataset.
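A single boosting round can be sketched as follows. This is the textbook discrete AdaBoost update for labels in {-1, +1}; scikit-learn's `AdaBoostClassifier` implements a multi-class variant (SAMME) whose estimator weight is computed slightly differently, so the sketch illustrates the reweighting idea rather than the exact computation behind our experiments.

```python
import math
from typing import List, Sequence, Tuple


def adaboost_round(weights: Sequence[float], y_true: Sequence[int],
                   y_pred: Sequence[int]) -> Tuple[float, List[float]]:
    """One round of discrete AdaBoost with labels in {-1, +1}.

    Returns the weak classifier's vote weight (alpha) and the renormalised
    sample weights for the next round.
    """
    total = sum(weights)
    # Weighted error of the current weak classifier.
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / total
    err = min(max(err, 1e-12), 1 - 1e-12)  # guard against log(0)
    alpha = 0.5 * math.log((1 - err) / err)
    # Misclassified samples (t * p = -1) are up-weighted, correct ones down-weighted.
    updated = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    z = sum(updated)
    return alpha, [w / z for w in updated]


# Four equally weighted samples; the weak classifier misclassifies only the first.
alpha, new_weights = adaboost_round([0.25] * 4, [1, 1, -1, -1], [-1, 1, -1, -1])
print(round(alpha, 4), [round(w, 4) for w in new_weights])
```

In this example the weighted error is 0.25, so alpha equals 0.5 ln 3; after renormalisation the misclassified sample carries half of the total weight and each correctly classified sample carries 1/6, which is how the next weak classifier is pushed towards the hard cases.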

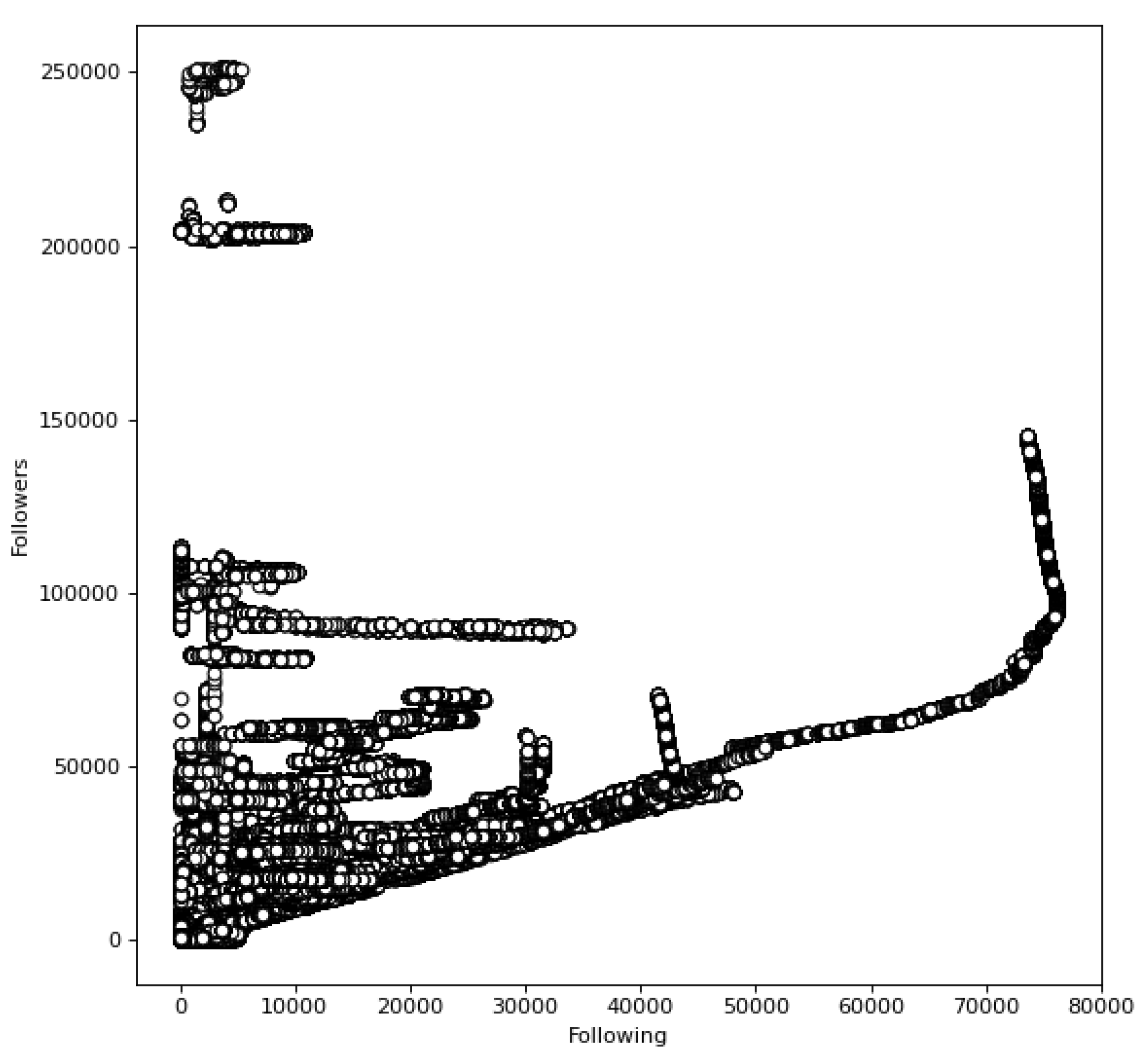

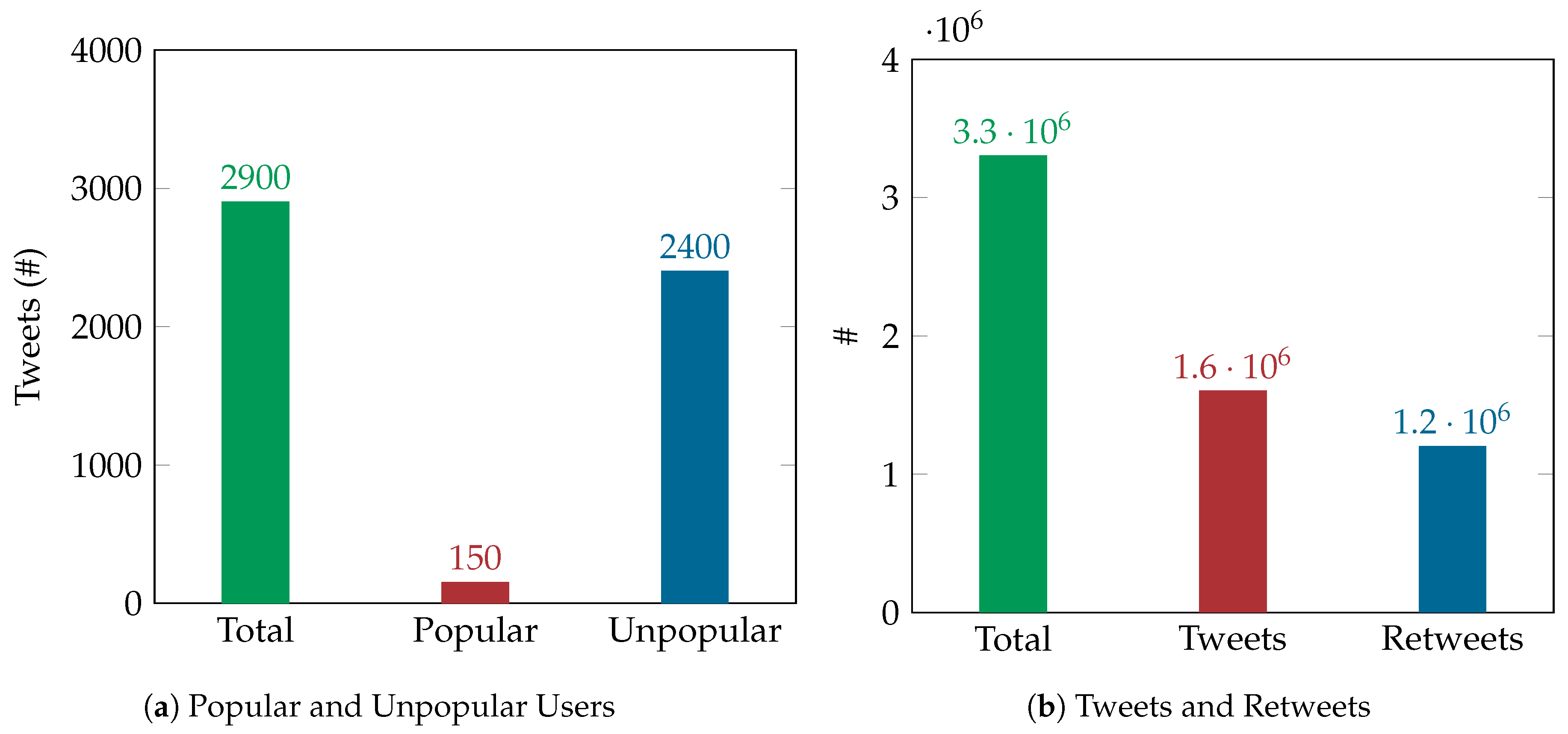

To train the classifier we used the Twitter dataset from [33]. We used an 80–20 split (i.e., 80% of the data for training and 20% for testing), which is commonly used in machine learning. After normalization, the classifier uses the following features, which correspond to metadata of the Twitter accounts contained in the dataset, namely: (i) region, (ii) language, (iii) number of accounts following, (iv) number of followers, (v) updates, (vi) type (tweet or retweet), and (vii) account type (i.e., malicious or benign). In our experiments we used the default AdaBoost parameters from Scikit-learn, namely: a DecisionTreeClassifier with max_depth = 1 as the base estimator; maximum number of estimators n_estimators = 50; linear loss function; and learning_rate = 1.
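The data preparation step can be sketched without external dependencies as below. The paper does not state which normalization was applied, so min-max scaling fitted on the training split is an assumption here, as is the premise that categorical features such as region and language have already been numerically encoded. With scikit-learn, `train_test_split(X, y, test_size=0.2)` and `MinMaxScaler` serve the same purpose, and the classifier would be `AdaBoostClassifier(n_estimators=50, learning_rate=1.0)`.

```python
import random
from typing import List, Sequence, Tuple


def split_80_20(X: Sequence[list], y: Sequence[int], seed: int = 0):
    """Shuffle once, then keep 80% of the rows for training and 20% for testing."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    cut = int(0.8 * len(idx))
    train, test = idx[:cut], idx[cut:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])


def min_max_normalise(train_X: List[list], test_X: List[list]) -> Tuple[List[list], List[list]]:
    """Min-max scale each feature using statistics from the training split only."""
    columns = list(zip(*train_X))
    lo = [min(c) for c in columns]
    hi = [max(c) for c in columns]

    def scale(row: list) -> list:
        # Constant columns are mapped to 0.0 to avoid dividing by zero.
        return [(v - l) / (h - l) if h > l else 0.0 for v, l, h in zip(row, lo, hi)]

    return [scale(r) for r in train_X], [scale(r) for r in test_X]
```

Fitting the scaling statistics on the training split only keeps information from the test set from leaking into training; test values may therefore fall slightly outside [0, 1].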

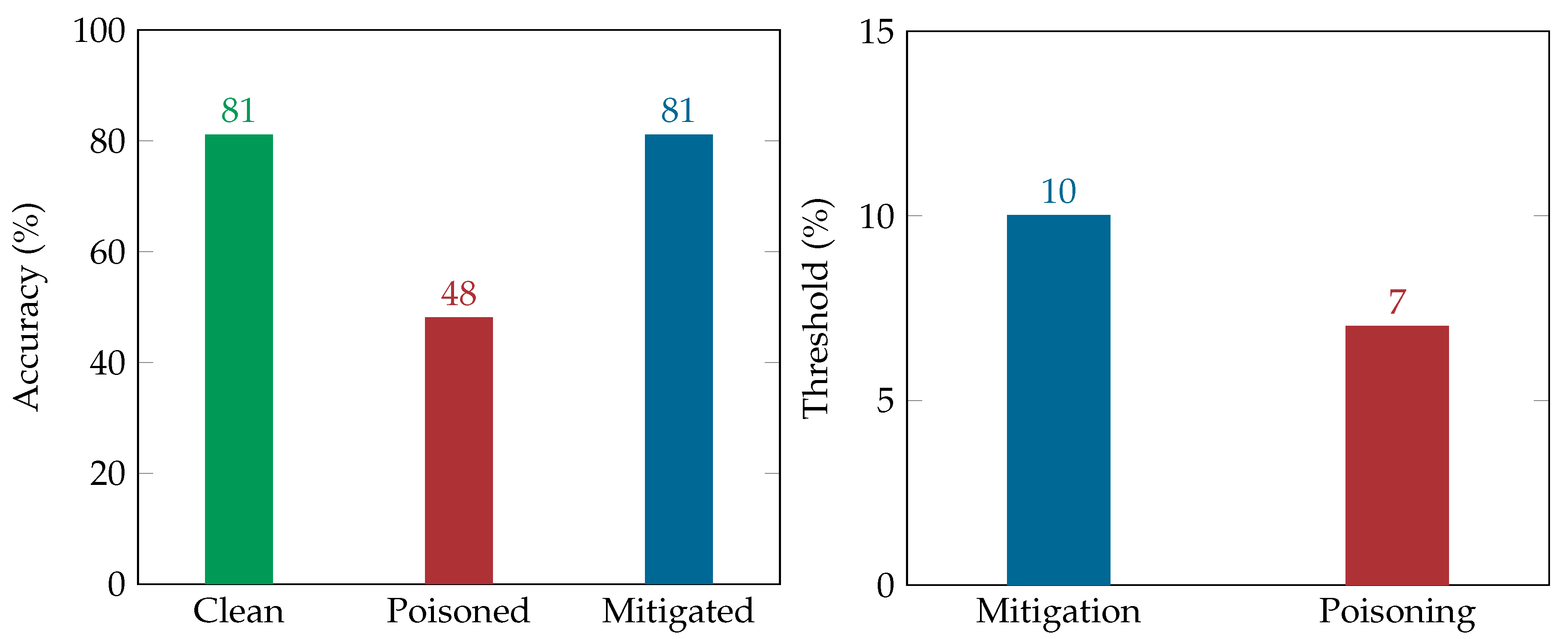

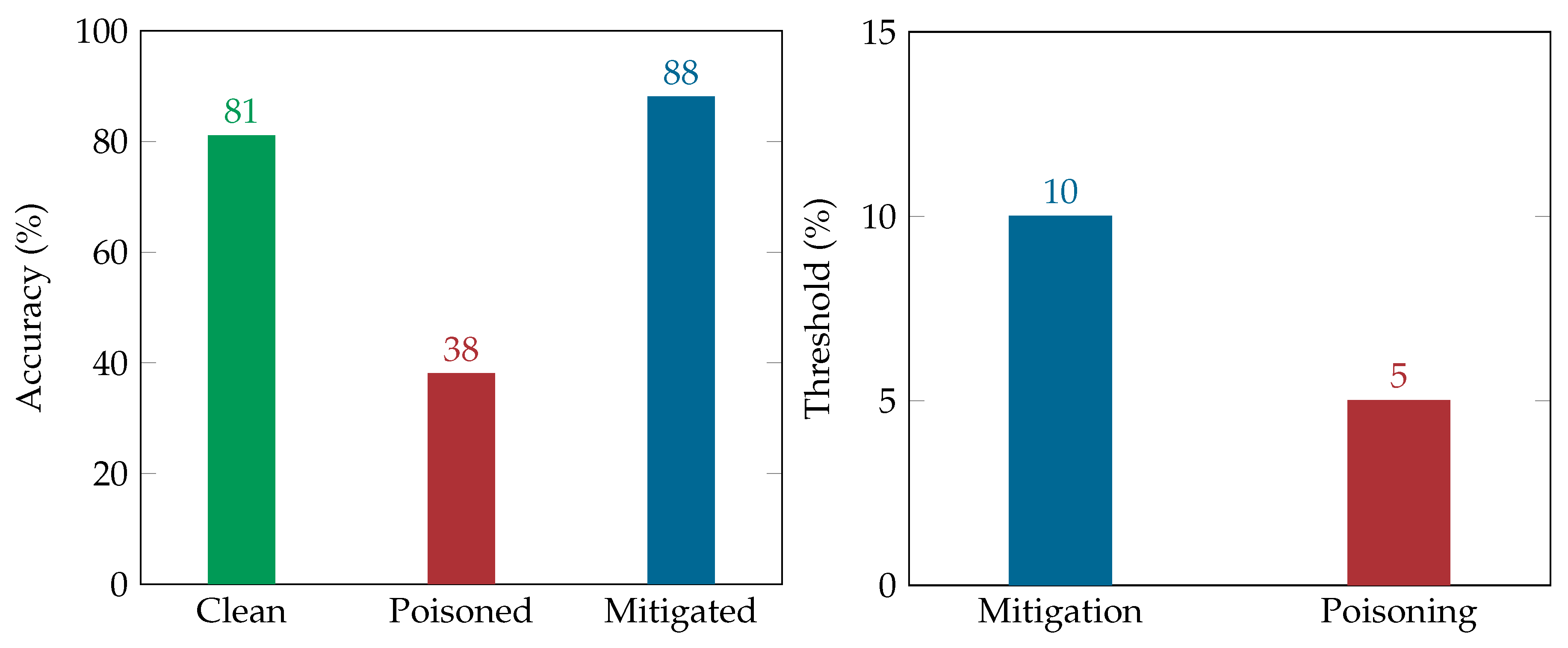

Even without any tuning or feature engineering, and by solely using the account metadata mentioned above, AdaBoost achieves an accuracy of over 80% in detecting fake Twitter accounts. It is worth noting that optimizing the classification performance in identifying fake accounts is beyond the scope of this work.

Moreover, in this work we propose a defensive algorithm that mitigates the adversarial attacks studied herein. We assume that, during the above-mentioned adversarial attacks, the defender uses Algorithm 3 as a detection mechanism, which scans the classifier’s training set and reports whether it is poisoned, along with a poisoning threshold.

Algorithm 3: Defence Algorithm.

![Technologies 08 00064 i003]()

The algorithm uses k-NN to find the closest neighbors of each sample in the training dataset, with the aim of spotting outliers that might be malicious and computing the poisoning percentage. For each sample in a potentially poisoned training dataset, the closest neighbors are found using the Euclidean distance and are then used for majority voting. If the confidence of the vote is over 60% and the majority label differs from the sample’s label in the training set, the instance is marked as poisoned. Then, the percentage of such records is calculated, informing the mitigation function of the amount of relabeling that is needed, as per Algorithm 3. Finally, the output of the defense algorithm is passed to the mitigation function, which relabels the records accordingly. It is worth noting that for k-NN we use the default parameters from the Scikit-learn library (namely: n_neighbors = 5; algorithm = auto; leaf_size = 30; p = 2, i.e., Euclidean distance; and metric = minkowski), without optimizing the classification performance, which we consider out of the scope of this work.
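The scan-and-relabel procedure can be sketched with a brute-force k-NN as follows. This is an illustrative reconstruction, not Algorithm 3 itself: it uses k = 5 and the 60% confidence rule from the text, excludes each sample from its own neighborhood (an assumption), and the function names are hypothetical. Note that with five neighbors, "over 60%" means at least four of the five votes must agree.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def knn_poison_scan(X: Sequence[Sequence[float]], y: Sequence[int], k: int = 5,
                    confidence: float = 0.6) -> Tuple[List[Tuple[int, int]], float]:
    """Flag samples whose label disagrees with a confident k-NN majority vote.

    Returns the flagged (index, majority_label) pairs and the estimated
    poisoning percentage of the training set.
    """
    flagged = []
    for i, xi in enumerate(X):
        # Euclidean distance to every other sample (the sample itself is excluded).
        dists = sorted((math.dist(xi, xj), j) for j, xj in enumerate(X) if j != i)
        neighbours = dists[:k]
        if not neighbours:
            continue
        label, count = Counter(y[j] for _, j in neighbours).most_common(1)[0]
        if count / len(neighbours) > confidence and label != y[i]:
            flagged.append((i, label))
    return flagged, 100.0 * len(flagged) / len(X)


def mitigate(y: Sequence[int], flagged: List[Tuple[int, int]]) -> List[int]:
    """Relabel every flagged sample with its neighbours' majority label."""
    fixed = list(y)
    for i, majority in flagged:
        fixed[i] = majority
    return fixed
```

The brute-force search is quadratic in the number of samples; for larger datasets, scikit-learn's `KNeighborsClassifier` or `NearestNeighbors` with the parameters listed above would provide the same neighbor queries more efficiently.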

5. Conclusions

During the past decade, social media has become a vital service for people commenting on news and significant global events. Even politicians use it to campaign for elections and communicate directly with voters, giving social media users a sense of direct interaction with society’s prominent personalities about current affairs. As social media interconnects users across the world, it introduces threats to their security and privacy. This holds true because data about companies and individuals can be found on online social networking platforms, and because these platforms are largely unable to distinguish between fake and legitimate accounts. The popularity of social media, such as Twitter, allows users to disseminate incorrect information through fake accounts, which results in the spread of malicious or fake content as well as in the realization of various cyberattack methods. The fact that anyone can create an account and interact with others blurs the line between legitimate and illegitimate use. This has attracted the attention of cybercriminals, who use social media as a vector to perform a variety of actions that serve their objectives, threatening users or even national security.

In this paper we have conducted adversarial attacks against a detection engine that uses machine learning to uncover fake accounts on Twitter. To this end, a label flipping poisoning attack strategy was proposed and implemented, effectively impairing the accuracy of the detection engine. A new entries poisoning attack was also proposed and implemented, and was almost equally effective in decreasing the classifier’s accuracy. To mitigate these attacks, a defense mechanism based on a k-NN algorithm and relabeling techniques was proposed. Our work empirically showed the significant degradation of classification accuracy caused by the attacks, as well as the effectiveness of the proposed countermeasure in mitigating them. While these results are relevant to the classifiers that we used to implement the detection and defensive mechanisms (i.e., AdaBoost and k-NN), we consider them applicable to other boosting algorithms or their respective variants.

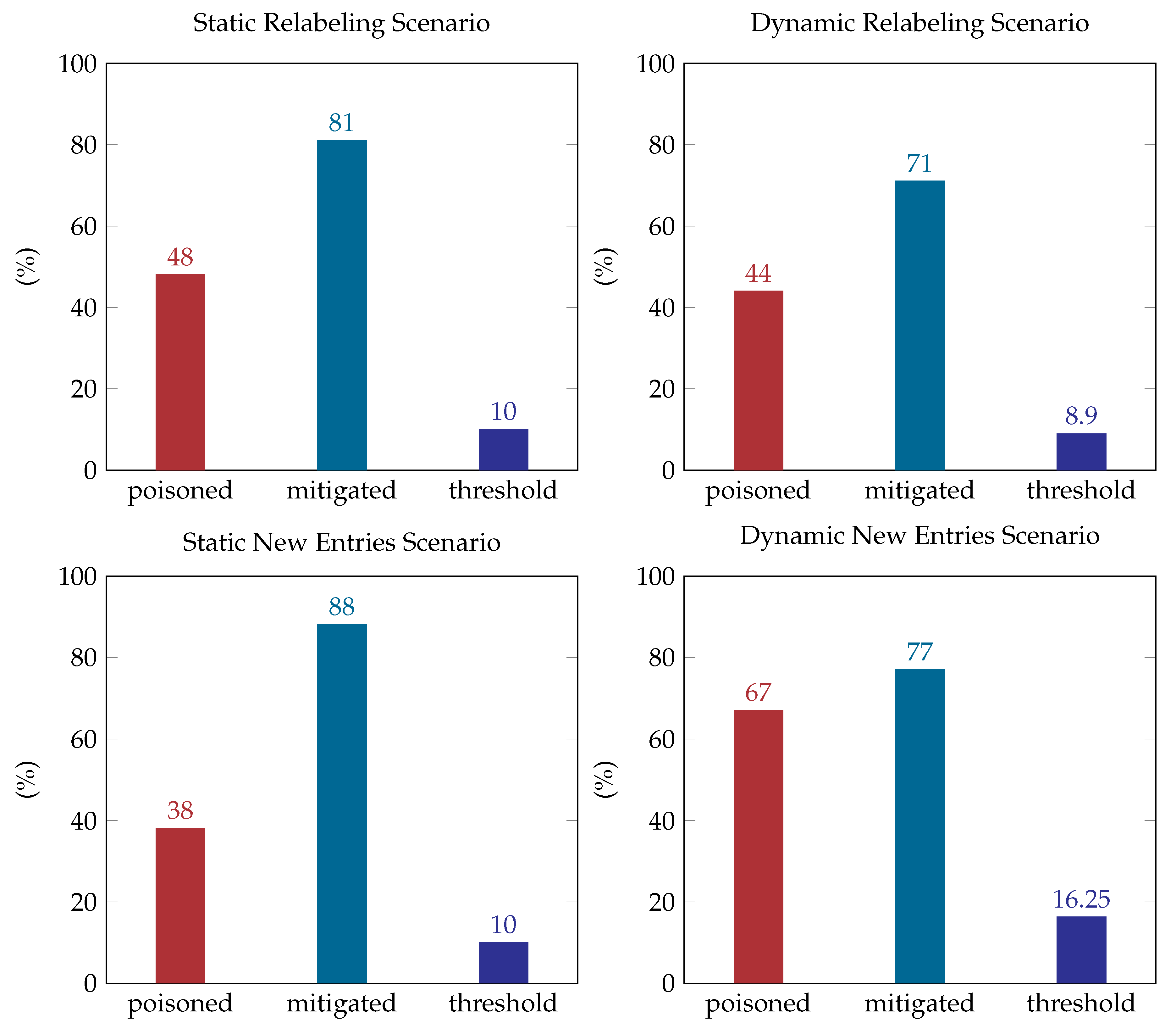

More specifically, regarding the implications of the adversarial attacks for the detection system, our results have shown that in every scenario (static or dynamic) explored herein, poisoning decreases the classifier’s accuracy by 13% to 53%, with a 30% reduction on average. Specifically, in the static relabeling attack scenario, poisoning decreased the accuracy of the classifier to under 50% with a low poisoning threshold. Moreover, in the static new entries scenario, the accuracy dropped under 40% with a lower poisoning threshold. This shows that, in the static scenario, the attacker is more successful in impairing classification accuracy by adding new crafted data to the training dataset than by relabeling existing entries. On the other hand, in the dynamic scenarios, poisoning the classifier with relabeling impairs its accuracy more (namely, to 47% on average) than in the new entries scenario, where poisoning decreases accuracy by only 13% on average, even with twice the poisoning threshold.

Our plans for future work include evaluating the adversarial attacks and the mitigation strategy on additional datasets, as they become available. In addition, we will investigate other potential scenarios that could be used by the defender or the attacker (such as adding new entries with fake profiles whose features resemble those of real users, or with legitimate users whose features normally appear in entries created by fake users). We also plan to explore the effectiveness of our attack and defense strategies on other social media datasets. Finally, we plan to investigate the effects of our proposed adversarial attacks on other similar supervised machine learning algorithms.