Robustness Enhancement of Self-Localization for Drone-View Mixed Reality via Adaptive RGB-Thermal Integration

Abstract

1. Introduction

- Decoupled and Adaptive Fusion Architecture: We present a decoupled localization architecture for drone-view MR that separates RGB map-based absolute pose correction from RGB–thermal relative tracking, enabling stable overlays under texture-poor facade flights without relying on proprietary drone SDKs or GNSS/RTK.

- Empirical Validation in AEC Scenarios: We implemented the proposed system and quantified its tracking robustness in real-world AEC scenarios. The results show that the adaptive fusion maintains overlay stability on texture-less exterior walls where a conventional RGB-only baseline fails, validating the system’s practical survivability in close-range inspection tasks.

2. Literature Review

2.1. Drone-Based Mixed Reality in AEC

2.2. Visual Localization Challenges: Texture-Less & Geometric Burstiness

2.2.1. VPS and SLAM

2.2.2. Visual Localization Challenges in AEC

2.3. Thermal Imaging for Visual Localization

2.4. Multi-Modal Sensor Fusion and Uncertainty Estimation Strategies

2.4.1. Tightly-Coupled vs. Loosely-Coupled Architectures

2.4.2. Uncertainty Estimation: Deep Learning vs. Geometric Approaches

3. Proposed Methods

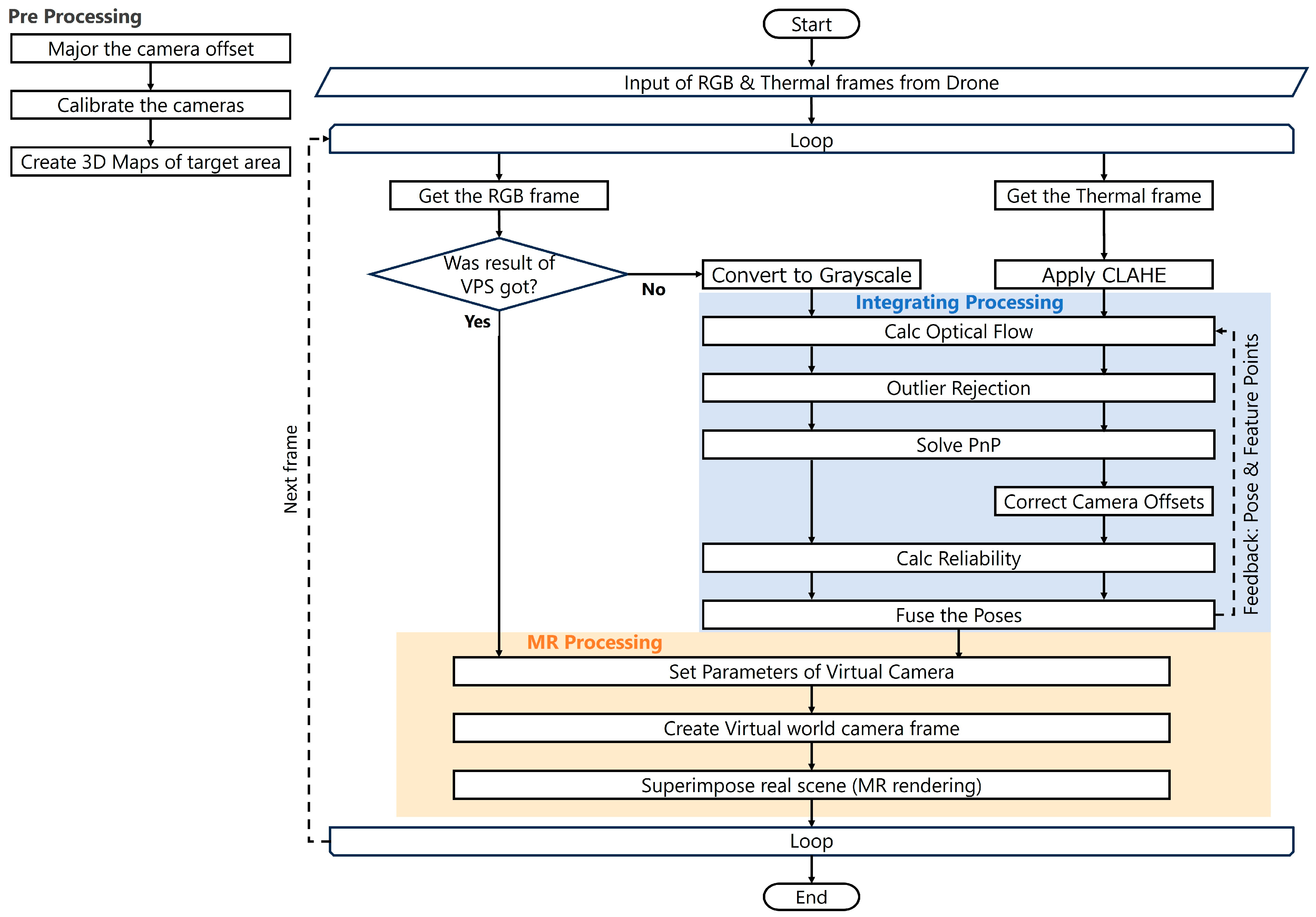

3.1. System Overview

- Global Initialization Loop: To reset accumulated drift, VPS is executed asynchronously on RGB keyframes to estimate the 6DoF pose in the absolute coordinate system.

- Adaptive Dual-Tracking Loop: To bridge the update interval (latency) between VPS results, the relative pose change between consecutive frames is estimated by two independent pipelines, RGB and thermal, whose outputs are fused adaptively according to their reliability.
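The interaction of the two loops can be illustrated with 4 × 4 homogeneous transforms. The following Python sketch is a simplified illustration under stated assumptions, not the prototype's implementation: the class `DecoupledPoseTracker` and its methods are hypothetical, each VPS result is applied at the moment it arrives (latency compensation is omitted), and each fused increment is taken to be the pose of the current camera relative to the previous one.

```python
from typing import List

Mat4 = List[List[float]]


def identity() -> Mat4:
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Mat4:
    m = identity()
    m[0][3], m[1][3], m[2][3] = x, y, z
    return m


def matmul(a: Mat4, b: Mat4) -> Mat4:
    """Product of two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


class DecoupledPoseTracker:
    """Hypothetical sketch: absolute VPS anchor plus fused relative increments."""

    def __init__(self) -> None:
        self.world_from_camera: Mat4 = identity()
        self.has_anchor = False

    def on_vps_pose(self, world_from_camera: Mat4) -> None:
        # Global Initialization Loop: an absolute fix replaces the estimate,
        # discarding the drift accumulated since the previous fix.
        self.world_from_camera = [row[:] for row in world_from_camera]
        self.has_anchor = True

    def on_relative_motion(self, prev_from_curr: Mat4) -> None:
        # Adaptive Dual-Tracking Loop: chain the fused frame-to-frame motion.
        # Increments arriving before the first VPS fix are ignored (assumption).
        if self.has_anchor:
            self.world_from_camera = matmul(self.world_from_camera, prev_from_curr)


tracker = DecoupledPoseTracker()
tracker.on_vps_pose(translation(10.0, 0.0, 5.0))
for _ in range(3):
    tracker.on_relative_motion(translation(0.5, 0.0, 0.0))
print([row[3] for row in tracker.world_from_camera[:3]])  # [11.5, 0.0, 5.0]
```

Right-multiplying the increments keeps them in the camera's own frame, and each new VPS fix simply replaces the chained estimate; this is how the absolute loop bounds the drift of the relative loop.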

3.2. Geometric and Radiometric Pre-Processing

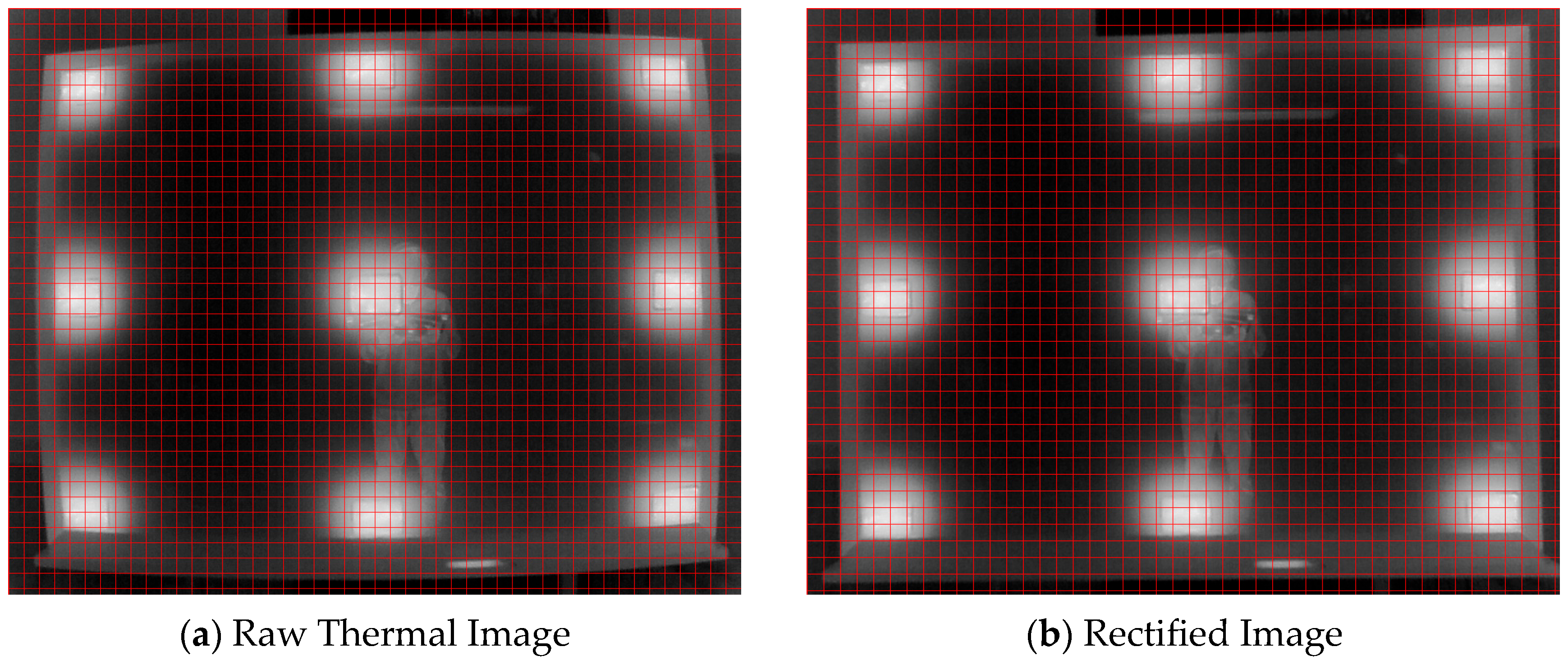

3.2.1. Geometric Rectification and Calibration

3.2.2. Asymmetric Radiometric Enhancement

- RGB Stream: Under typical daytime conditions, RGB sensors have sufficient dynamic range. Because excessive enhancement may violate the brightness constancy assumption of optical flow, only standard grayscale conversion is performed, preserving the raw luminance distribution.

- Thermal Stream: Thermal images of nearly isothermal building surfaces, such as concrete walls, characteristically exhibit histograms concentrated in a narrow intensity range, resulting in extremely low contrast. To address this, we apply Contrast Limited Adaptive Histogram Equalization (CLAHE), chosen specifically to overcome the limitations of standard enhancement methods for visual self-localization. Global Histogram Equalization (GHE) maximizes entropy but indiscriminately amplifies sensor noise (e.g., fixed pattern noise), producing ‘false features’ and geometric burstiness [51]. Restoration methods based on Mean Squared Error (MSE) minimization tend to smooth out high-frequency texture because of the perception-distortion trade-off [52] and fail to capture structural information [53]. CLAHE instead bounds the degree of contrast enhancement with a Clip Limit [54], which reveals the latent thermal gradients (thermal texture) within the material while limiting the amplification of noise artifacts, thereby prioritizing the distinctiveness of local features required for robust matching.
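The effect of the Clip Limit can be seen on a single tile. The following Python sketch is a simplified illustration, not OpenCV's implementation: it builds the contrast-limited lookup table for one tile only and omits the bilinear interpolation between neighboring tiles that full CLAHE performs. Expressing the clip limit relative to the mean count per bin and spreading the remainder of the clipped counts at a regular stride are modelled loosely on common CLAHE implementations; all function names are hypothetical.

```python
from typing import List, Sequence


def tile_histogram(pixels: Sequence[int], bins: int = 256) -> List[int]:
    hist = [0] * bins
    for p in pixels:
        hist[p] += 1
    return hist


def clip_and_redistribute(hist: List[int], clip: int) -> List[int]:
    """Clip every bin at `clip` and spread the excess back over all bins."""
    bins = len(hist)
    clipped = [min(h, clip) for h in hist]
    excess = sum(hist) - sum(clipped)
    batch, residual = divmod(excess, bins)
    out = [h + batch for h in clipped]
    if residual:
        # Hand out the remainder at a regular stride across the intensity range.
        step = max(bins // residual, 1)
        for i in range(0, bins, step)[:residual]:
            out[i] += 1
    return out


def contrast_limited_lut(pixels: Sequence[int], clip_limit: float, bins: int = 256) -> List[int]:
    """Equalization lookup table for one tile; clip_limit <= 0 disables clipping."""
    hist = tile_histogram(pixels, bins)
    n = len(pixels)
    if clip_limit > 0:
        # Clip limit expressed relative to the mean number of pixels per bin.
        clip = max(int(clip_limit * n / bins), 1)
        hist = clip_and_redistribute(hist, clip)
    lut, cumulative = [], 0
    for h in hist:
        cumulative += h
        lut.append(min(bins - 1, int(cumulative * (bins - 1) / n + 0.5)))
    return lut


# A flat 32 x 32 tile whose pixels differ by a single grey level (e.g. noise).
tile = [100] * 512 + [101] * 512
plain = contrast_limited_lut(tile, clip_limit=0.0)
limited = contrast_limited_lut(tile, clip_limit=2.0)
print(plain[101] - plain[100], limited[101] - limited[100])  # 127 3
```

In this example, plain equalization stretches a one-level difference, which on a uniform wall may be pure sensor noise, to 127 grey levels, whereas a clip limit of 2.0 keeps it at 3. Assuming the native 640 × 512 thermal resolution and the 8 × 8 grid reported in Section 4.3, each tile holds 80 × 64 = 5120 pixels, so under this normalization a Clip Limit of 2.0 clips each bin at 2.0 × 5120 / 256 = 40 counts.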

3.3. Independent Dual-Pipeline Tracking

- Optical Flow Estimation: In the undistorted images, feature points from the previous frame are tracked into the current frame using the Lucas-Kanade method [55] or a similar sparse optical-flow method.

- Outlier Rejection: Geometric verification using RANSAC is performed to remove outliers due to moving objects or mismatches.
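The verification step can be illustrated with a deliberately simplified model. The Python sketch below is hypothetical and is not the prototype's pose solver: instead of estimating a camera pose with PnP, it estimates a single 2D image shift from tracked point pairs, so each minimal sample is one match. The iteration budget uses the standard adaptive bound N = log(1 − p) / log(1 − wˢ) (confidence p, inlier ratio w, sample size s), capped at a fixed maximum; the default threshold, cap and confidence mirror the values reported in Section 4.3 (5.0 px, 400 iterations, 0.98).

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def required_iterations(confidence: float, inlier_ratio: float,
                        sample_size: int, max_iters: int) -> int:
    """Standard adaptive RANSAC bound log(1 - p) / log(1 - w^s), capped."""
    p_good = inlier_ratio ** sample_size  # chance that one sample is all-inlier
    if p_good <= 0.0:
        return max_iters
    if p_good >= 1.0:
        return 1
    n = math.log(1.0 - confidence) / math.log1p(-p_good)
    return min(max_iters, math.ceil(n))


def ransac_translation(prev_pts: Sequence[Point], curr_pts: Sequence[Point],
                       threshold: float = 5.0, confidence: float = 0.98,
                       max_iters: int = 400, seed: int = 0) -> Tuple[Point, List[int]]:
    """Hypothetical simplified model curr = prev + t; needs >= 1 point pair."""
    rng = random.Random(seed)
    flows = [(c[0] - p[0], c[1] - p[1]) for p, c in zip(prev_pts, curr_pts)]
    best: List[int] = []
    budget, it = max_iters, 0
    while it < budget:
        it += 1
        tx, ty = flows[rng.randrange(len(flows))]  # minimal sample: one match
        inliers = [i for i, (fx, fy) in enumerate(flows)
                   if math.hypot(fx - tx, fy - ty) <= threshold]
        if len(inliers) > len(best):
            best = inliers
            # A larger consensus set shrinks the remaining iteration budget.
            budget = required_iterations(confidence, len(best) / len(flows), 1, max_iters)
    # Refit on the consensus set (the least-squares shift is the mean flow).
    t = (sum(flows[i][0] for i in best) / len(best),
         sum(flows[i][1] for i in best) / len(best))
    return t, best


prev = [(float(i), float(2 * i)) for i in range(25)]
curr = [(x + 5.0, y - 3.0) for x, y in prev[:20]]  # 20 consistent tracks
curr += [(x + 40.0, y + 25.0 + 7.0 * k) for k, (x, y) in enumerate(prev[20:])]  # 5 outliers
shift, inliers = ransac_translation(prev, curr)
print(shift, len(inliers))  # (5.0, -3.0) 20
print(required_iterations(0.98, 0.5, 5, 400), required_iterations(0.98, 0.3, 5, 400))  # 124 400
```

The second line shows why a cap matters: with a five-point sample, an inlier ratio of 0.5 needs only 124 iterations at 98% confidence, but the bound exceeds 400 once the ratio falls below roughly 0.40, so the cap then limits runtime at the cost of confidence. Readers reproducing the configuration of Section 4.3 may also want to check their OpenCV version, because the OpenCV 4.x documentation states that the SOLVEPNP_UPNP flag falls back to EPnP [26].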

3.4. Adaptive Pose Fusion

3.4.1. Reliability Metric Based on Geometric Consistency

3.4.2. Spatial Pose Fusion Strategy

- Translation: Because the two translation estimates are points in Euclidean space, linear interpolation (Lerp) was used.

- Rotation: Linear interpolation of unit quaternions, which represent 3D rotations, produces a non-constant angular velocity and results that are no longer of unit norm. Therefore, Spherical Linear Interpolation (Slerp), which interpolates along the great-circle arc at constant angular velocity, was adopted [56].
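Both interpolation rules fit in a few lines. The Python sketch below stores quaternions as (w, x, y, z) and, because the mapping from reliability to interpolation weight is not spelled out in this section, assumes a hypothetical weight α = r_T / (r_RGB + r_T), the thermal share of the total reliability. `fuse_poses` and its arguments are illustrative names, not the prototype's API.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit norm


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation between two translation vectors."""
    return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between two unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation; flip one to follow the shorter arc.
        q1, dot = tuple(-c for c in q1), -dot
    if dot > 0.9995:
        # Almost identical rotations: normalized lerp avoids sin(theta) ~ 0.
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        w0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        w1 = math.sin(t * theta) / math.sin(theta)
        q = tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)


def fuse_poses(t_rgb: Vec3, q_rgb: Quat, r_rgb: float,
               t_th: Vec3, q_th: Quat, r_th: float) -> Tuple[Vec3, Quat]:
    """Blend two relative poses; alpha = thermal share of reliability (assumed)."""
    total = r_rgb + r_th
    if total <= 0.0:
        raise ValueError("both pipelines were gated out; no relative pose to fuse")
    alpha = r_th / total
    return lerp(t_rgb, t_th, alpha), slerp(q_rgb, q_th, alpha)


half = math.sqrt(0.5)
q_z90 = (half, 0.0, 0.0, half)  # 90 degrees about z
print([round(c, 4) for c in slerp((1.0, 0.0, 0.0, 0.0), q_z90, 0.5)])  # [0.9239, 0.0, 0.0, 0.3827]
```

Halfway between the identity and a 90° rotation, Slerp returns exactly the 45° rotation. Under this weighting, a sensor whose reliability has been set to zero contributes nothing, so the fused pose reduces to the other pipeline's estimate.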

4. Implementation of Prototype System

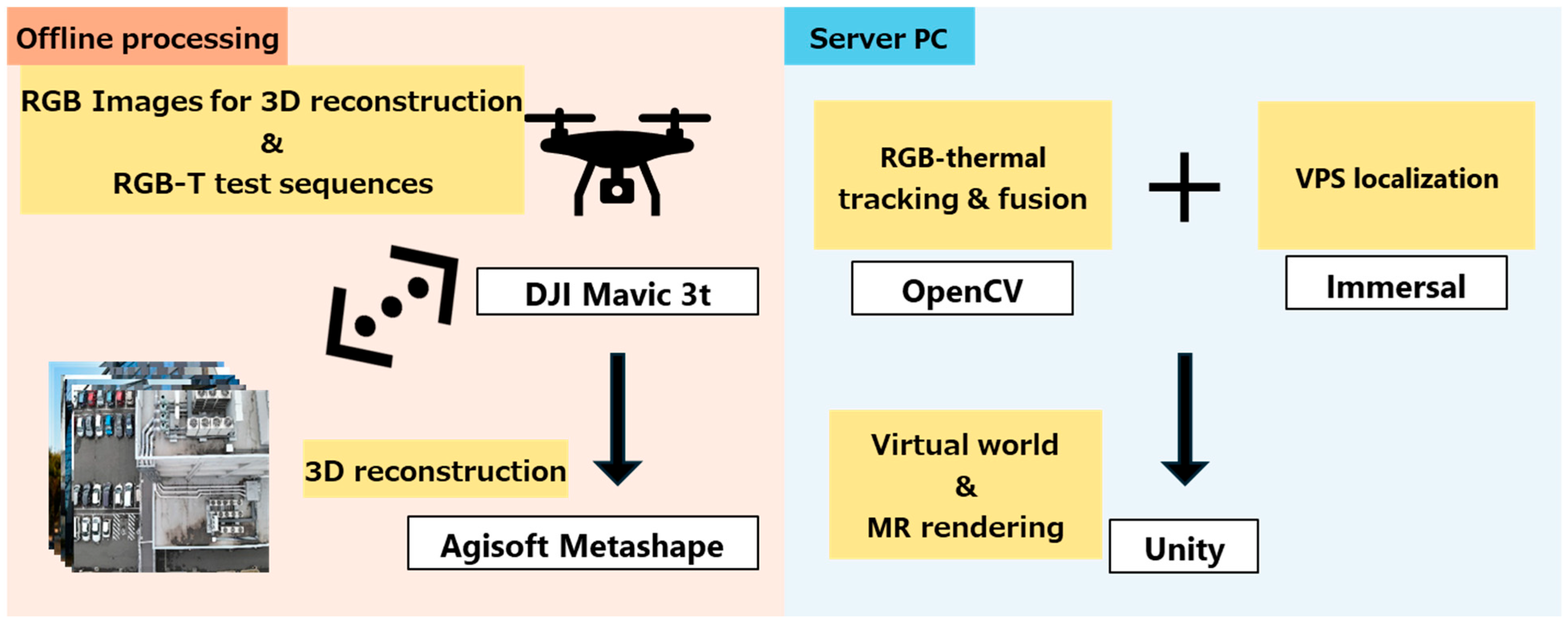

4.1. Overview of Prototype System Implementation

4.2. Dual-Sensor Pre-Processing

- RGB camera: Intrinsic parameters and distortion coefficients were estimated with the OpenCV library using Zhang’s method [57] and a standard chessboard pattern.

- Thermal camera: Standard chessboard calibration is often unreliable for thermal sensors due to low resolution and thermal diffusion [58]. Therefore, we adopted a Structure-from-Motion (SfM) based self-calibration approach. Crucially, to ensure high precision and avoid optimization errors in low-texture experimental scenes, this calibration was conducted as an offline pre-process, distinct from the main data collection. We utilized a dedicated dataset captured over a structure with rich thermal heterogeneity to derive the intrinsic parameters via bundle adjustment. Since these parameters represent the static physical properties of the lens, the estimated values were fixed and applied to all subsequent experimental reconstructions. The detailed methodology and validation are provided in Appendix A and all estimated calibration results are summarized in Table 4.

- Extrinsic parameters: The extrinsic parameters (the rigid-body transformation matrix between the two cameras) were fixed, with the translation vector taken from the physical design blueprints of the gimbal mechanism.
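Because the extrinsic transform is fixed, a relative motion measured by the thermal camera can be expressed in the RGB camera frame before the two estimates are fused. If X maps thermal-camera coordinates into RGB-camera coordinates, the motions of a rigid rig satisfy ΔT_RGB = X · ΔT_T · X⁻¹. The Python sketch below illustrates this relation with hypothetical names and a made-up rig (a 0.1 m offset along the RGB x-axis and no relative rotation); it is not the prototype's code.

```python
import math
from typing import List

Mat4 = List[List[float]]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Mat4:
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def rot_z(angle: float) -> Mat4:
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0, 0.0], [s, c, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]


def rigid_inverse(t: Mat4) -> Mat4:
    """Inverse of [R | p; 0 1] is [R^T | -R^T p; 0 1]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    rows = [rt[i] + [-sum(rt[i][k] * p[k] for k in range(3))] for i in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]


def thermal_motion_in_rgb_frame(delta_thermal: Mat4, rgb_from_thermal: Mat4) -> Mat4:
    """Delta_rgb = X * Delta_thermal * X^-1 for a rigid rig with fixed extrinsic X."""
    return matmul(matmul(rgb_from_thermal, delta_thermal), rigid_inverse(rgb_from_thermal))


X = translation(0.1, 0.0, 0.0)  # made-up extrinsic, for illustration only
delta_rgb = thermal_motion_in_rgb_frame(rot_z(math.pi / 2), X)
print([round(delta_rgb[i][3], 6) for i in range(3)])  # [0.1, -0.1, 0.0]
```

The example shows the lever-arm effect: a pure 90° rotation about the thermal camera center appears to the RGB camera as the same rotation plus a translation of about 0.14 m, so fusing the two estimates without this conversion would bias the translation.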

4.3. Implementation Details of Adaptive Tracking

- Radiometric Enhancement: The CLAHE algorithm described in Section 3 was implemented with the Imgproc.createCLAHE function of OpenCV. Its parameters were tuned empirically for the specific thermal sensor used in this study: a Clip Limit of 2.0 and a grid size of 8 × 8 were selected because preliminary tests showed that these values offered the best trade-off between enhancing local texture for tracking survivability and suppressing sensor-specific noise (e.g., fixed pattern noise).

- Tracking Parameters: The Lucas-Kanade tracker was configured with a search window size of pixels and a pyramid level of 5 to accommodate rapid drone maneuvers. Outlier rejection was performed with the RANSAC algorithm (specifically, the UPnP solver in solvePnPRansac). To guarantee real-time performance and bound the computation time, the maximum number of RANSAC iterations was explicitly set to 400 with a confidence level of 0.98, rather than relying on unbounded adaptive iterations. The reprojection error threshold was set empirically to 5.0 pixels to tolerate the minor sensor noise and drone vibration observed in our system. Furthermore, to mitigate the risk of RANSAC accepting geometrically unstable models (e.g., due to strongly clustered inliers), the proposed Effective Inlier Count served as a post-RANSAC validation metric that automatically down-weighted pose estimates lacking sufficient spatial support in the image.

- Fusion Logic Execution: Reliability was evaluated with the Effective Inlier Count defined in Section 3. Following the methodology of Sattler et al. [25], which suggests a cell size of approximately pixels to ensure spatial diversity, we partitioned our resolution frames into a grid. This resulted in an exact cell size of pixels, providing a balanced spatial constraint across the entire field of view. In this implementation, considering that standard Visual SLAM systems such as ORB-SLAM require 30 to 50 feature points for robust initialization [30], a conservative threshold of 50 was adopted. If the Effective Inlier Count of either sensor fell below 50, the system immediately set that sensor’s reliability to zero, thereby excluding unstable estimates that would otherwise introduce drift into the fusion.
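The gating rule can be sketched as follows. The formal definition of the Effective Inlier Count belongs to Section 3 and is not reproduced here, so this Python sketch assumes one plausible reading consistent with the grid partition described above: the number of grid cells that contain at least one RANSAC inlier, so that many inliers clustered in one cell count only once. The function names, the 32-pixel cell size in the example and the use of the count itself as the reliability above the threshold are hypothetical; only the threshold of 50 comes from the text.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float]


def effective_inlier_count(inliers: Iterable[Point], cell_w: float, cell_h: float) -> int:
    """Assumed reading: number of distinct grid cells holding at least one inlier."""
    cells = {(int(x // cell_w), int(y // cell_h)) for x, y in inliers}
    return len(cells)


def gated_reliability(inliers: Iterable[Point], cell_w: float, cell_h: float,
                      threshold: int = 50) -> float:
    """Zero reliability below the threshold; otherwise the count itself (assumption)."""
    n_eff = effective_inlier_count(inliers, cell_w, cell_h)
    return float(n_eff) if n_eff >= threshold else 0.0


# 225 inliers packed into a 60 x 60 px patch versus 80 inliers spread over the frame.
clustered = [(float(x), float(y)) for x in range(0, 60, 4) for y in range(0, 60, 4)]
spread = [(16.0 + 64 * i, 16.0 + 64 * j) for i in range(10) for j in range(8)]
print(len(clustered), gated_reliability(clustered, 32.0, 32.0))  # 225 0.0
print(len(spread), gated_reliability(spread, 32.0, 32.0))  # 80 80.0
```

The clustered set would pass a raw inlier-count check but occupies only four cells and is gated out, whereas the smaller, well-spread set is accepted; this is the geometric-burstiness case the metric is meant to catch.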

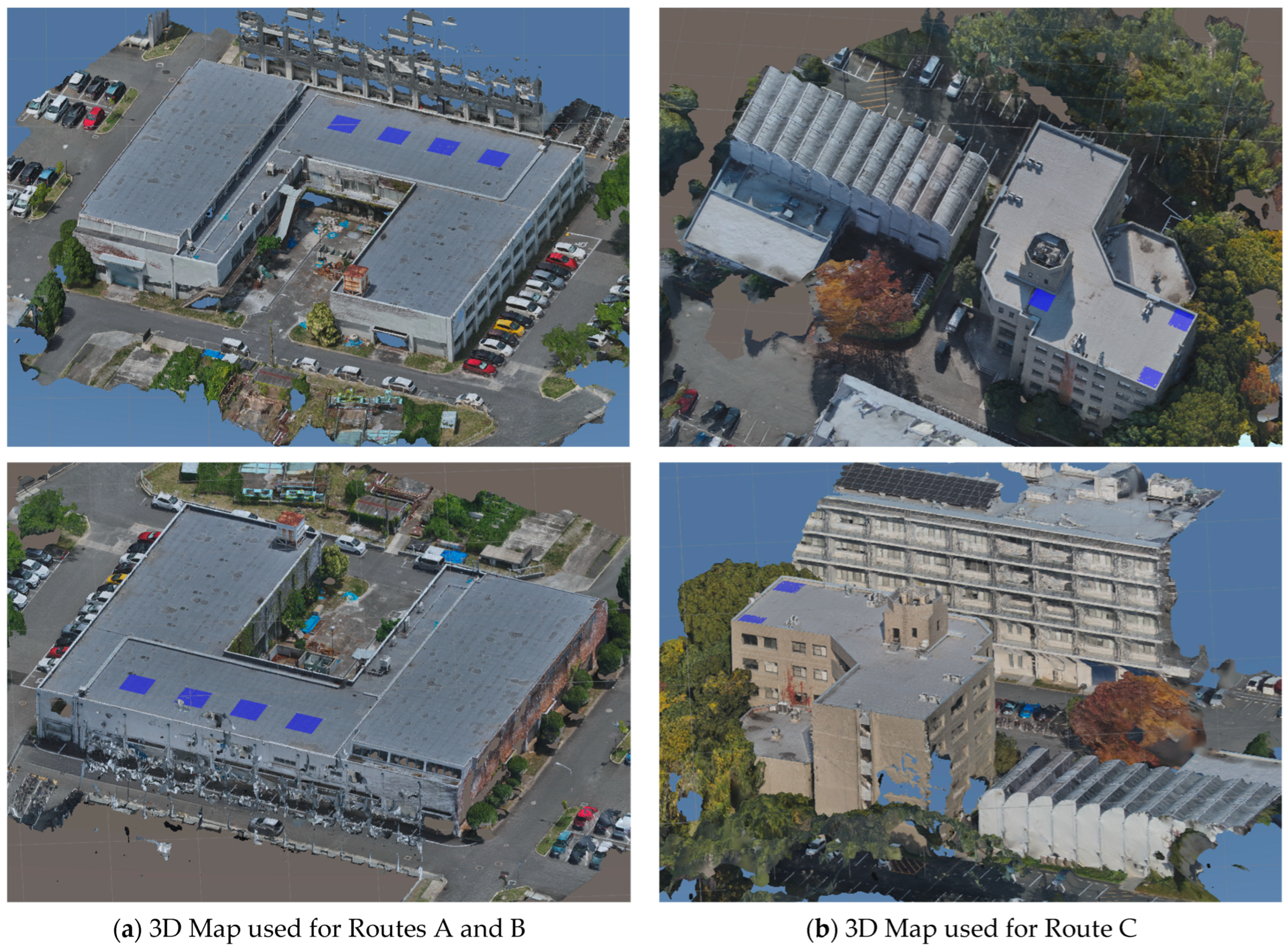

4.4. Experimental Setup Using Pre-Recorded Datasets

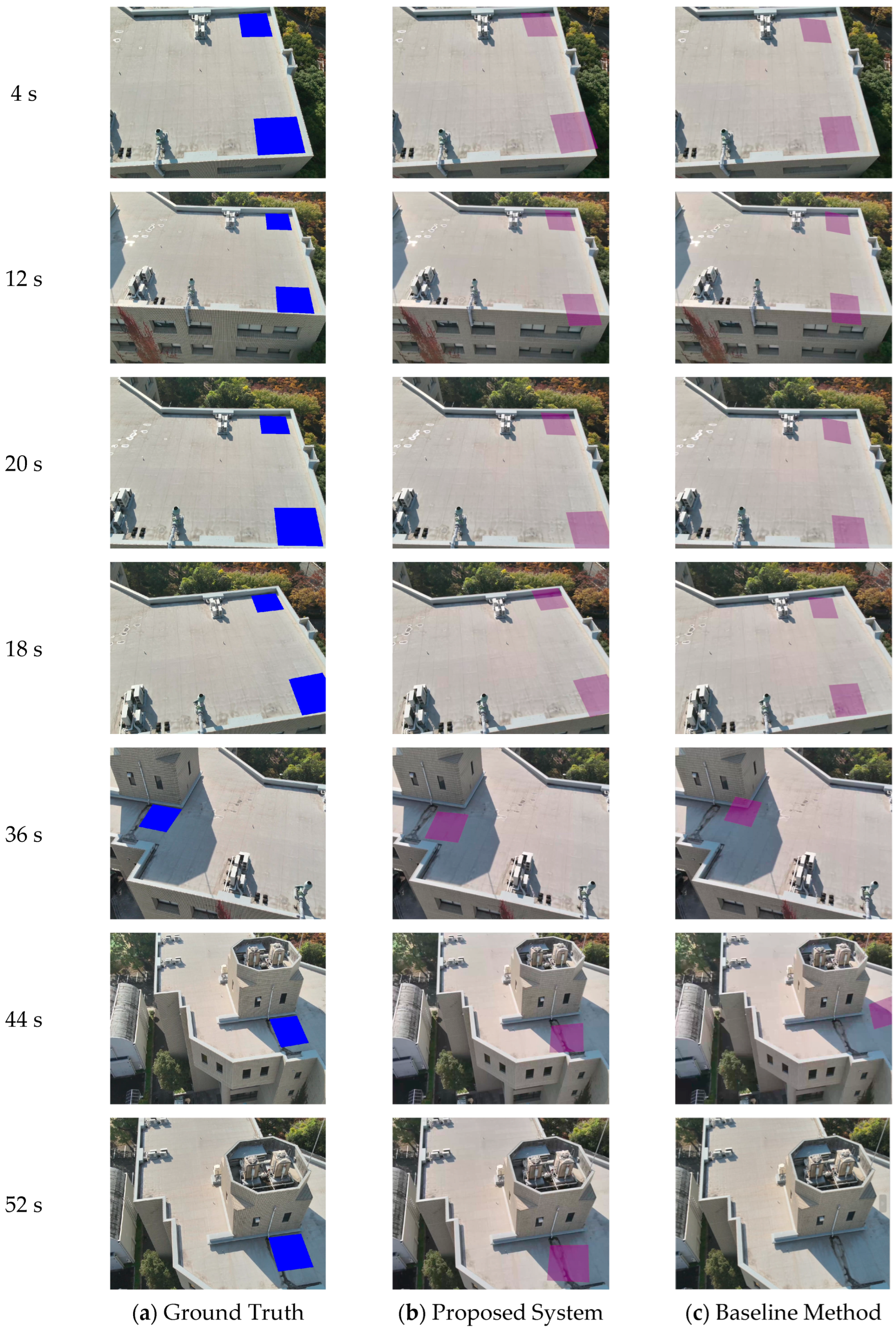

5. Verification Test

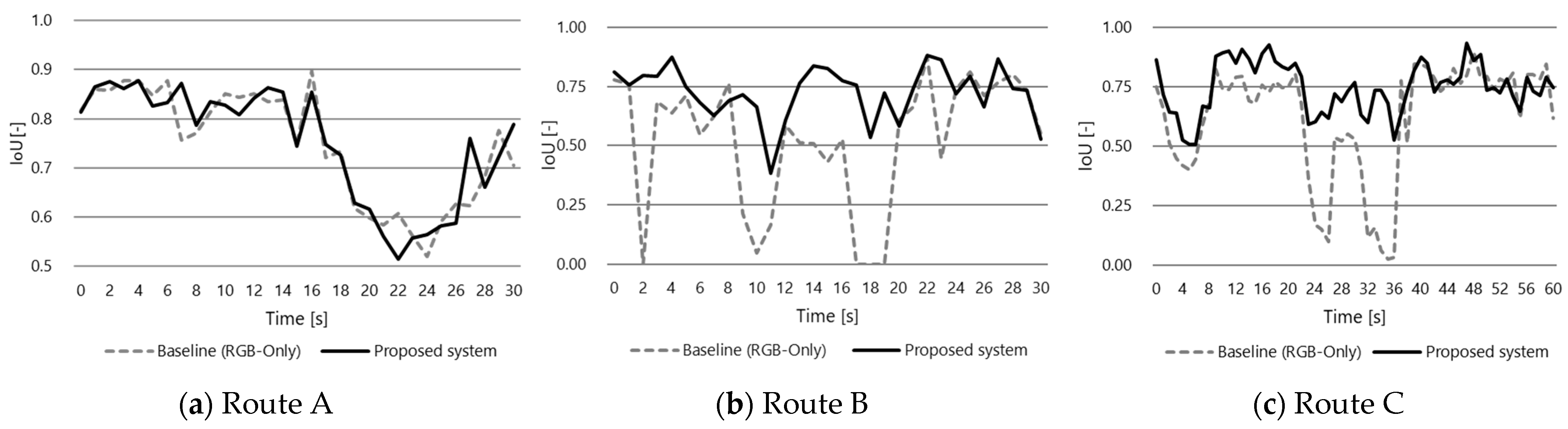

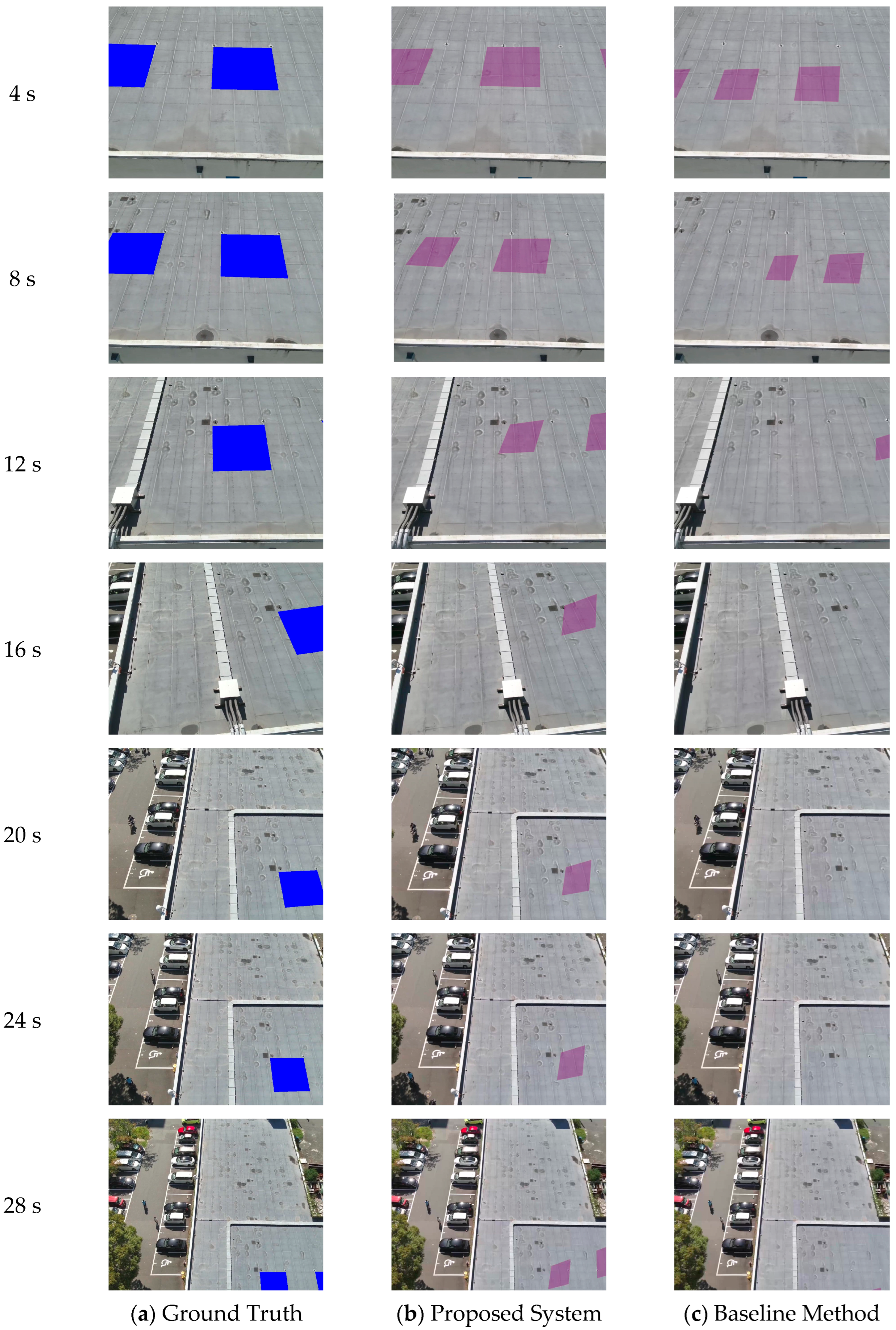

5.1. Comparison of Positioning Accuracy

- Route A (Texture-Rich): An overhead viewpoint above Building S2 in a general environment containing diverse objects such as buildings, roads, and vegetation. The drone first translates and then follows an elliptical orbit.

- Route B (Texture-less/Bursty): A flight in close proximity to the rooftop of Building S2. Homogeneous concrete surfaces occupy most of the field of view, and the drone’s movement causes rapid changes between frames.

- Route C (Long-term/Specular): Close-up photography of the rooftop and walls of Building M4. Although its low-texture characteristics resemble those of Route B, the visible tiled walls and surrounding vegetation make it less challenging than the completely homogeneous environment of Building S2. However, it introduces other difficulties, such as specular reflections from water puddles and repeated similar shapes.

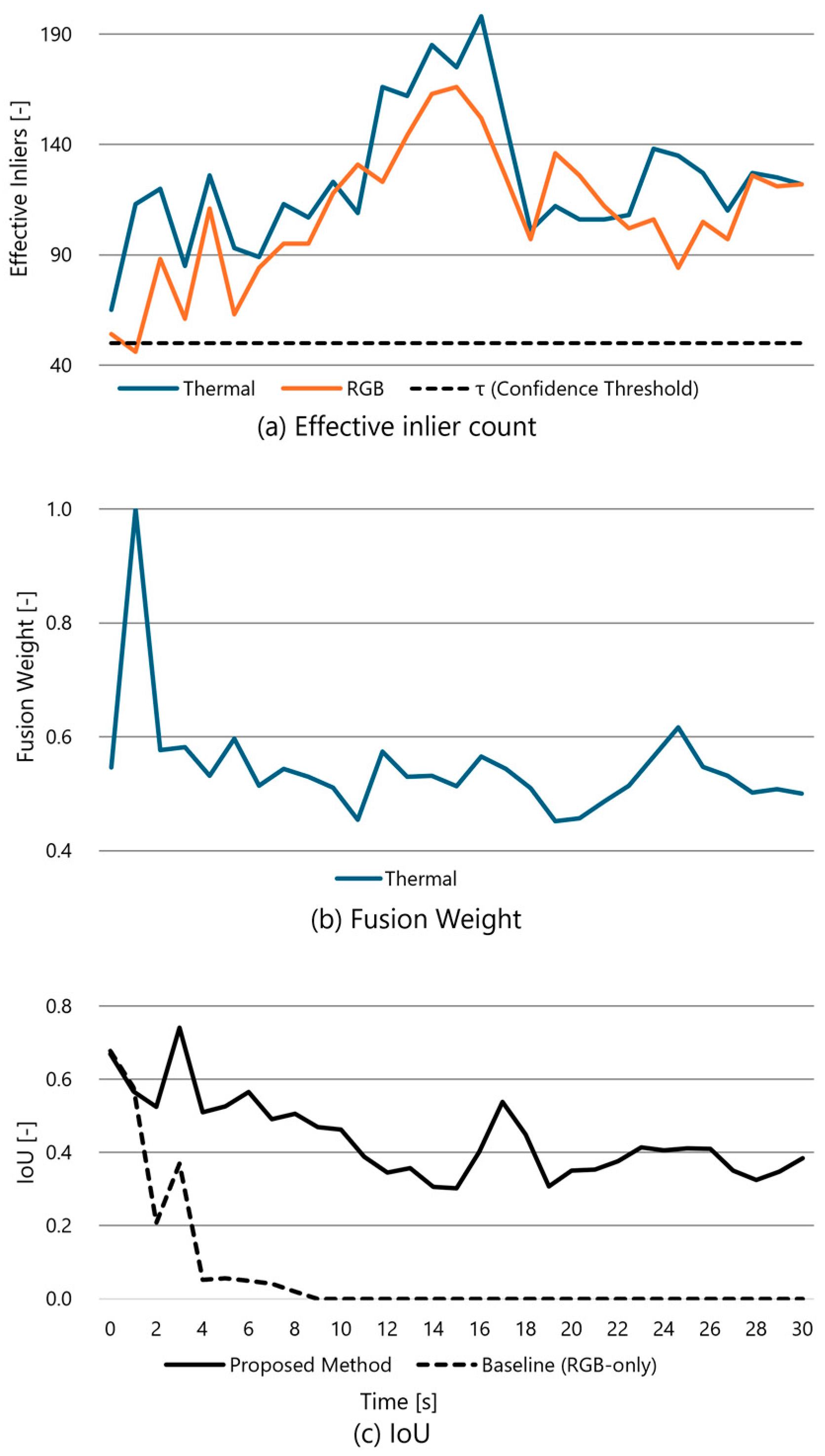

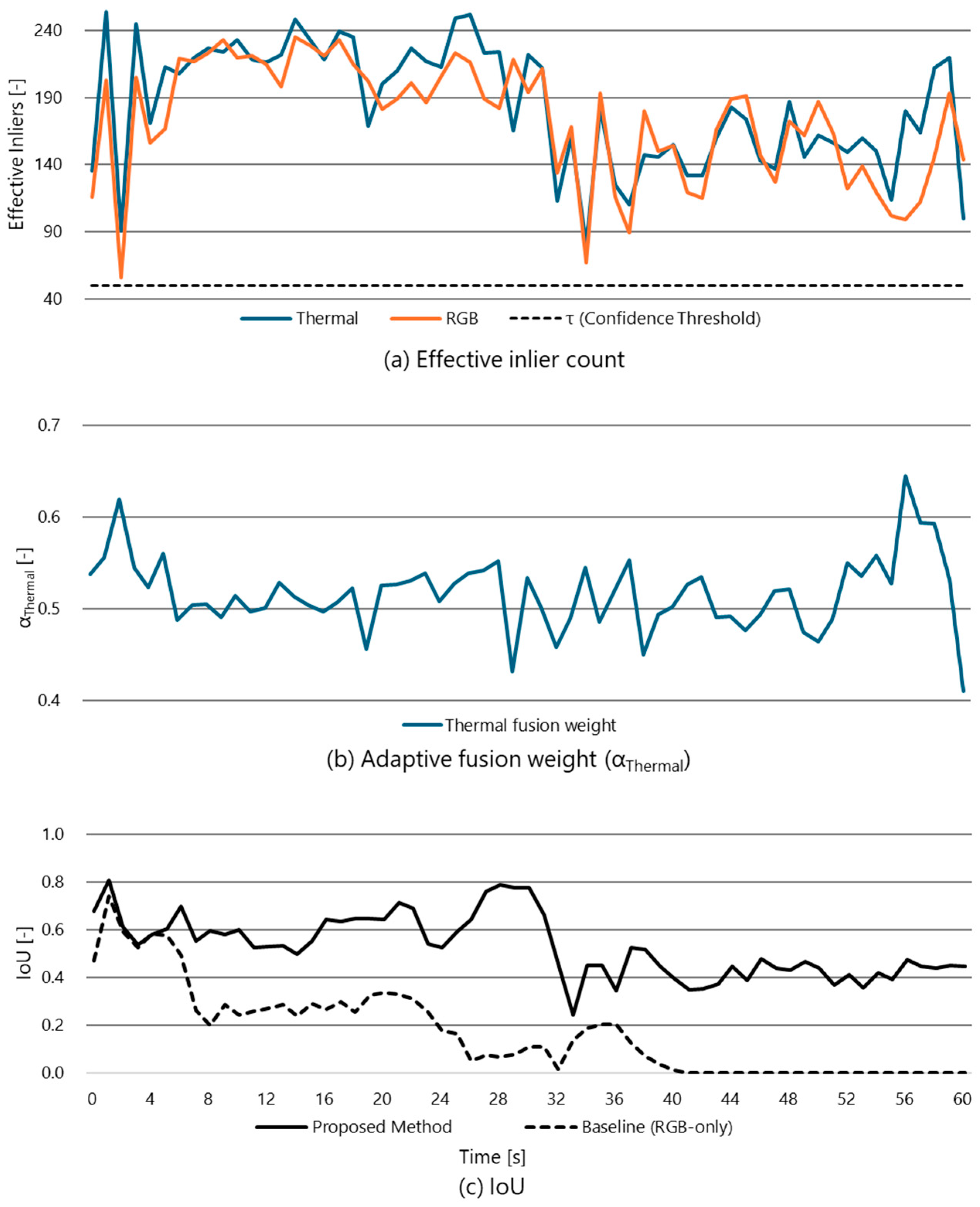

5.2. Durability Analysis in Communication-Denied or VPS-Lost Environments

5.2.1. Analysis of Route B: Mutual Rescue in Geometric Burstiness

5.2.2. Analysis of Route C: Drift Suppression via Consensus

6. Discussion

6.1. Lightweight Spatial Filtering for Real-Time Multimodal Fusion

6.2. Achieving Robust Drone-Based MR via Thermal Integration

6.3. Limitations and Future Work

7. Conclusions

- Establishment of a lightweight geometric gating mechanism: We introduced the Effective Inlier Count as a metric to evaluate the reliability of heterogeneous sensors in real-time and on a unified scale. In drone systems with limited computational resources, this metric effectively eliminates erroneous reliability judgments caused by geometric burstiness (local concentration of feature points) without the need for computationally expensive covariance estimation. This enabled robust sensor selection based on spatial quality rather than mere quantity of feature points.

- Simultaneous achievement of availability and stability: Validation experiments in real-world environments demonstrated that the proposed method exhibits robustness through two distinct mechanisms. First, in critical situations where visible light texture is missing, thermal information functioned as a fail-safe modality to prevent system loss, ensuring operational availability. Second, during long-term flights, the integration effect between sensors with different physical characteristics mitigated drift errors inherent to single modalities, thereby maintaining geometric stability.

- Contribution to Digital Transformation in the AEC industry: The proposed system provides a practical solution for the full automation of infrastructure inspection and construction management by offering an MR experience that remains continuous and accurate even in GPS-denied environments or under adverse conditions. By leveraging existing high-precision RGB map-based foundational technology while complementing its primary weakness through thermal fusion, this approach significantly enhances the reliability of drone utilization within the AEC sector.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 6DoF | 6 Degrees of Freedom |
| AEC | Architecture, Engineering, and Construction |
| BIM | Building Information Modeling |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| GNSS | Global Navigation Satellite System |
| IMU | Inertial Measurement Unit |
| IoU | Intersection over Union |
| Lerp | Linear Interpolation |
| MR | Mixed Reality |
| PnP | Perspective-n-Point |
| RANSAC | Random Sample Consensus |
| ROI | Regions of Interest |
| RTK-GNSS | Real-Time Kinematic GNSS |
| SDKs | Software Development Kits |
| SLAM | Simultaneous Localization and Mapping |
| SfM | Structure-from-Motion |
| Slerp | Spherical Linear Interpolation |
| VPS | Visual Positioning System |

Appendix A. Thermal Camera Calibration Methodology

Appendix A.1. Physical Nature of Lens Distortion

Appendix A.2. SfM-Based Self-Calibration (Thermal Camera)

Appendix A.3. Calibration Workflow and Results

- Data Acquisition: A dedicated flight was conducted over a test site selected for its high thermal contrast (e.g., a structural complex with varied materials) to maximize feature detection and tie-point generation.

- Estimation: The SfM reconstruction pipeline was executed using Agisoft Metashape. During the image alignment phase, the intrinsic parameters were calculated and retrieved as a computational by-product of the optimization process described in Appendix A.2.

- Validation & Application: The derived parameters (shown in Table 4 in the main text) demonstrated a low reprojection error (0.32).
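The reprojection error used to validate the calibration measures how far observed image points lie from 3D points projected with the estimated parameters. The Python sketch below computes a per-point RMS reprojection error for a simple pinhole model with two radial distortion terms (k1, k2). Agisoft Metashape uses its own, richer camera model, and the intrinsic values and points here are made up, so this only illustrates the metric and does not reproduce the reported value.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(p: Point3, fx: float, fy: float, cx: float, cy: float,
            k1: float = 0.0, k2: float = 0.0) -> Pixel:
    """Pinhole projection with two radial distortion terms (camera frame, z > 0)."""
    xn, yn = p[0] / p[2], p[1] / p[2]
    r2 = xn * xn + yn * yn
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    return fx * xn * radial + cx, fy * yn * radial + cy


def rms_reprojection_error(points: Sequence[Point3], observed: Sequence[Pixel],
                           **intr: float) -> float:
    """Root mean square of the per-point 2D distance between projection and observation."""
    sq = 0.0
    for p, (u, v) in zip(points, observed):
        pu, pv = project(p, **intr)
        sq += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(sq / len(observed))


intr = dict(fx=500.0, fy=500.0, cx=320.0, cy=256.0, k1=-0.1, k2=0.01)  # made-up values
pts = [(0.0, 0.0, 5.0), (1.0, 0.5, 5.0), (-1.0, 1.0, 4.0)]
obs = [project(p, **intr) for p in pts]
noisy = [(u + 0.3, v + 0.4) for u, v in obs]  # every point off by 0.5 px
print(round(rms_reprojection_error(pts, obs, **intr), 6),
      round(rms_reprojection_error(pts, noisy, **intr), 6))  # 0.0 0.5
```

Some tools report the RMS per coordinate rather than per point, which differs by a factor of √2, so the definition should be checked before comparing values across software.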

References

- Song, K.; Zhao, Y.; Huang, L.; Yan, Y.; Meng, Q. RGB-T image analysis technology and application: A survey. Eng. Appl. Artif. Intell. 2023, 120, 105919.

- Bonatti, R.; Ho, C.; Wang, W.; Choudhury, S.; Scherer, S. Towards a Robust Aerial Cinematography Platform: Localizing and Tracking Moving Targets in Unstructured Environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 229–236.

- Giordan, D.; Adams, M.S.; Aicardi, I.; Alicandro, M.; Allasia, P.; Baldo, M.; De Berardinis, P.; Dominici, D.; Godone, D.; Hobbs, P.; et al. The use of unmanned aerial vehicles (UAVs) for engineering geology applications. Bull. Eng. Geol. Environ. 2020, 79, 3437–3481.

- Outay, F.; Mengash, H.A.; Adnan, M. Applications of unmanned aerial vehicle (UAV) in road safety, traffic and highway infrastructure management: Recent advances and challenges. Transp. Res. Part A Policy Pract. 2020, 141, 116–129.

- Hobart, M.; Pflanz, M.; Tsoulias, N.; Weltzien, C.; Kopetzky, M.; Schirrmann, M. Fruit Detection and Yield Mass Estimation from a UAV Based RGB Dense Cloud for an Apple Orchard. Drones 2025, 9, 60.

- Liu, T.; Wang, X.; Hu, K.; Zhou, H.; Kang, H.; Chen, C. FF3D: A Rapid and Accurate 3D Fruit Detector for Robotic Harvesting. Sensors 2024, 24, 3858.

- Mogili, U.M.R.; Deepak, B.B.V.L. Review on Application of Drone Systems in Precision Agriculture. Procedia Comput. Sci. 2018, 133, 502–509.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Guan, S.; Zhu, Z.; Wang, G. A Review on UAV-Based Remote Sensing Technologies for Construction and Civil Applications. Drones 2022, 6, 117.

- Videras Rodríguez, M.; Melgar, S.G.; Cordero, A.S.; Márquez, J.M. A Critical Review of Unmanned Aerial Vehicles (UAVs) Use in Architecture and Urbanism: Scientometric and Bibliometric Analysis. Appl. Sci. 2021, 11, 9966.

- Tomita, K.; Chew, M.Y. A Review of Infrared Thermography for Delamination Detection on Infrastructures and Buildings. Sensors 2022, 22, 423.

- Fukuda, R.; Fukuda, T.; Yabuki, N. Advancing Building Facade Inspection: Integration of an infrared camera-equipped drone and mixed reality. In Proceedings of the International Conference on Education and Research in Computer Aided Architectural Design in Europe, Nicosia, Cyprus, 9–13 September 2024; pp. 139–148.

- Yeom, S. Thermal Image Tracking for Search and Rescue Missions with a Drone. Drones 2024, 8, 53.

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Wen, M.-C.; Kang, S.-C. Augmented Reality and Unmanned Aerial Vehicle Assist in Construction Management. In Computing in Civil and Building Engineering (2014); Proceedings; ASCE: Reston, VA, USA, 2014; pp. 1570–1577.

- Raimbaud, P.; Lou, R.; Merienne, F.; Danglade, F.; Figueroa, P.; Hernández, J.T. BIM-based Mixed Reality Application for Supervision of Construction. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), 23–27 March 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1903–1907.

- Cao, Y.; Dong, Y.; Wang, F.; Yang, J.; Cao, Y.; Li, X. Multi-sensor spatial augmented reality for visualizing the invisible thermal information of 3D objects. Opt. Lasers Eng. 2021, 145, 106634.

- Botrugno, M.C.; D’Errico, G.; De Paolis, L.T. Augmented Reality and UAVs in Archaeology: Development of a Location-Based AR Application. In Proceedings of the Augmented Reality, Virtual Reality, and Computer Graphics, Cham; Springer: Berlin/Heidelberg, Germany, 2017; pp. 261–270.

- Kikuchi, N.; Fukuda, T.; Yabuki, N. Future landscape visualization using a city digital twin: Integration of augmented reality and drones with implementation of 3D model-based occlusion handling. J. Comput. Des. Eng. 2022, 9, 837–856.

- Kinoshita, A.; Fukuda, T.; Yabuki, N. Drone-Based Mixed Reality: Enhancing Visualization for Large-Scale Outdoor Simulations with Dynamic Viewpoint Adaptation Using Vision-Based Pose Estimation Methods. Drone Syst. Appl. 2024, 12, 1–19.

- Xie, X.; Yang, T.; Ning, Y.; Zhang, F.; Zhang, Y. A Monocular Visual Odometry Method Based on Virtual-Real Hybrid Map in Low-Texture Outdoor Environment. Sensors 2021, 21, 3394.

- Qin, Y.W.; Bao, N.K. Infrared thermography and its application in the NDT of sandwich structures. Opt. Lasers Eng. 1996, 25, 205–211.

- Wu, Y.; Wang, L.; Zhang, L.; Chen, M.; Zhao, W.; Zheng, D.; Cai, Y. Monocular thermal SLAM with neural radiance fields for 3D scene reconstruction. Neurocomputing 2025, 617, 129041.

- Sarlin, P.; Cadena, C.; Siegwart, R.Y.; Dymczyk, M. From Coarse to Fine: Robust Hierarchical Localization at Large Scale. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: Piscataway, NJ, USA, 2019; pp. 12708–12717.

- Sattler, T.; Havlena, M.; Schindler, K.; Pollefeys, M. Large-Scale Location Recognition and the Geometric Burstiness Problem. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1582–1590.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166.

- Immersal Ltd. Immersal SDK. Available online: https://immersal.com/ (accessed on 20 December 2025).

- Niantic, Inc. Niantic Lightship VPS. Available online: https://lightship.dev/ (accessed on 20 December 2025).

- Google Developers. ARCore Geospatial API. Available online: https://developers.google.com/ar/develop/geospatial (accessed on 20 December 2025).

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J. Past, Present, and Future of Simultaneous Localization and Mapping: Towards the Robust-Perception Age; IEEE: Piscataway, NJ, USA, 2016.

- Behzadan, A.H.; Kamat, V.R. Georeferenced Registration of Construction Graphics in Mobile Outdoor Augmented Reality. J. Comput. Civ. Eng. 2007, 21, 247–258.

- Zollmann, S.; Hoppe, C.; Kluckner, S.; Poglitsch, C.; Bischof, H.; Reitmayr, G. Augmented Reality for Construction Site Monitoring and Documentation. Proc. IEEE 2014, 102, 137–154.

- Zhuang, L.; Zhong, X.; Xu, L.; Tian, C.; Yu, W. Visual SLAM for Unmanned Aerial Vehicles: Localization and Perception. Sensors 2024, 24, 2980.

- Sun, D.; Yang, X.; Liu, M.Y.; Kautz, J. PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 18–23 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 8934–8943.

- Li, S.; Ma, X.; He, R.; Shen, Y.; Guan, H.; Liu, H.; Li, F. WTI-SLAM: A novel thermal infrared visual SLAM algorithm for weak texture thermal infrared images. Complex Intell. Syst. 2025, 11, 242.

- Zhao, X.; Luo, Y.; He, J. Analysis of the Thermal Environment in Pedestrian Space Using 3D Thermal Imaging. Energies 2020, 13, 3674.

- Borges, P.V.K.; Vidas, S. Practical Infrared Visual Odometry. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2205–2213.

- Johansson, J.; Solli, M.; Maki, A. An Evaluation of Local Feature Detectors and Descriptors for Infrared Images. In Proceedings of the Computer Vision – ECCV 2016 Workshops; Springer: Cham, Switzerland, 2016; pp. 711–723.

- Mouats, T.; Aouf, N.; Nam, D.; Vidas, S. Performance Evaluation of Feature Detectors and Descriptors Beyond the Visible. J. Intell. Robot. Syst. 2018, 92, 33–63.

- Delaune, J.; Hewitt, R.; Lytle, L.; Sorice, C.; Thakker, R.; Matthies, L. Thermal-Inertial Odometry for Autonomous Flight Throughout the Night. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1122–1128.

- Deng, Z.; Zhang, Z.; Ding, Z.; Liu, B. Multi-Source, Fault-Tolerant, and Robust Navigation Method for Tightly Coupled GNSS/5G/IMU System. Sensors 2025, 25, 965.

- Shan, T.; Englot, B.; Ratti, C.; Rus, D. LVI-SAM: Tightly-coupled Lidar-Visual-Inertial Odometry via Smoothing and Mapping. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), 30 May–5 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 5692–5698.

- Ebadi, K.; Bernreiter, L.; Biggie, H.; Catt, G.; Chang, Y.; Chatterjee, A.; Denniston, C.E.; Deschênes, S.-P.; Harlow, K.; Khattak, S.; et al. Present and Future of SLAM in Extreme Underground Environments. IEEE Trans. Robot. 2024, 40, 936–959.

- Aguilar, M.T.P.; Sátiro, D.E.A.; Alvarenga, C.B.C.S.; Marçal, V.G.; Amianti, M.; do Bom Conselho Sales, R. Use of Passive Thermography to Detect Detachment and Humidity in Façades Clad with Ceramic Materials of Differing Porosities. J. Nondestruct. Eval. 2023, 42, 92.

- Xu, X.; Zhang, L.; Yang, J.; Cao, C.; Wang, W.; Ran, Y.; Tan, Z.; Luo, M. A Review of Multi-Sensor Fusion SLAM Systems Based on 3D LIDAR. Remote Sens. 2022, 14, 2835.

- Khattak, S.; Homberger, T.; Bernreiter, L.; Andersson, O.; Siegwart, R.; Alexis, K.; Hutter, M. CompSLAM: Complementary Hierarchical Multi-Modal Localization and Mapping for Robot Autonomy in Underground Environments. arXiv 2025, arXiv:2505.06483.

- Khedekar, N.; Kulkarni, M.; Alexis, K. MIMOSA: A Multi-Modal SLAM Framework for Resilient Autonomy against Sensor Degradation. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 7153–7159.

- Shetty, A.; Gao, G. Adaptive covariance estimation of LiDAR-based positioning errors for UAVs. Navigation 2019, 66, 463–476.

- Teed, Z.; Deng, J. DROID-SLAM: Deep Visual SLAM for Monocular, Stereo, and RGB-D Cameras. In Proceedings of the Advances in Neural Information Processing Systems; NeurIPS: San Diego, CA, USA, 2021; pp. 16558–16569.

- Keil, C.; Gupta, A.; Kaveti, P.; Singh, H. Towards Long Term SLAM on Thermal Imagery. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 14–18 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 10174–10181.

- Blau, Y.; Michaeli, T. The Perception-Distortion Tradeoff. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 18–23 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 6228–6237.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zuiderveld, K. VIII.5. Contrast Limited Adaptive Histogram Equalization. In Graphics Gems IV; Heckbert, P.S., Ed.; Academic Press: Cambridge, MA, USA, 1994; pp. 474–485.

- Lucas, B.; Kanade, T. An Iterative Image Registration Technique with an Application to Stereo Vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence (IJCAI), Vancouver, BC, Canada, 1981; Volume 2, pp. 674–679.

- Shoemake, K. Animating rotation with quaternion curves. In Proceedings of the 12th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’85); Association for Computing Machinery: New York, NY, USA, 1985; pp. 245–254.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- ElSheikh, A.; Abu-Nabah, B.A.; Hamdan, M.O.; Tian, G.-Y. Infrared Camera Geometric Calibration: A Review and a Precise Thermal Radiation Checkerboard Target. Sensors 2023, 23, 3479.

- Neumann, U.; You, S. Natural feature tracking for augmented reality. IEEE Trans. Multimed. 1999, 1, 53–64.

- Everingham, M.; Van Gool, L.; Williams, C.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

| Purpose of Use in the Prototype | Name | Item | Specification |
|---|---|---|---|
| Server PC | Home-built desktop PC | OS | Microsoft Windows 11 Education |
| | | CPU | Intel(R) Core(TM) i7-9700 CPU @ 3.00 GHz |
| | | RAM | 32.0 GB |
| | | GPU | GeForce RTX 2070 |
| Drone | DJI Mavic 3T | Weight | 920 g |
| | | Focal length | 24 mm (RGB) |
| | | | 40 mm (Thermal) |
| | | Max resolution | 3840 × 2160 (RGB) |
| | | | 640 × 512 (Thermal) |

| Software/Service | Purpose of Use in the Prototype | General Usage |
|---|---|---|
| Unity 2022.3.34f1 | MR scene rendering and visualization in a virtual environment | Game engine/real-time 3D development platform |
| Immersal SDK 2.2.1 | VPS execution | VPS/localization SDK |
| OpenCV for Unity 3.0.1 | Image processing and feature tracking within the Unity runtime | Computer vision library integration for Unity |
| Agisoft Metashape 2.2.1 | SfM-based camera pose estimation and 3D map reconstruction | Structure-from-Motion (SfM)/photogrammetry software |

| | Native Resolution [px] | Dual-Stream Resolution [px] |
|---|---|---|
| Thermal camera | | |
| RGB camera | | |

| | Focal Length [px] | Principal Point [px] | Distortion |
|---|---|---|---|
| RGB Camera | | | |
| Thermal Camera | | | |

| Route | Baseline Method (IoU) | Proposed System (IoU) |
|---|---|---|
| Route A | 0.747 | 0.750 |
| Route B | 0.510 | 0.725 |
| Route C | 0.62 | 0.735 |
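Intersection over Union (IoU), the overlap criterion popularized by the PASCAL VOC benchmark [60], is the area of overlap divided by the area of union. The Python sketch below computes it for two binary masks, assuming (hypothetically) that the overlay and the reference region have been rasterized to masks of the same size; the evaluation protocol behind the table values is not reproduced here, and `mask_iou` is an illustrative name.

```python
from typing import Sequence


def mask_iou(a: Sequence[Sequence[int]], b: Sequence[Sequence[int]]) -> float:
    """Intersection over Union of two equally sized binary masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += int(bool(pa) and bool(pb))
            union += int(bool(pa) or bool(pb))
    # Convention assumed here: two empty masks agree perfectly.
    return inter / union if union else 1.0


overlay = [[1, 1, 0, 0] for _ in range(4)]    # columns 0-1
reference = [[0, 1, 1, 0] for _ in range(4)]  # columns 1-2
print(round(mask_iou(overlay, reference), 3))  # 0.333
```

Applied to the reported values, the gain of the proposed system over the baseline is 0.003 on Route A, 0.215 on Route B (about 42% relative to the baseline) and 0.115 on Route C (about 18.5%).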

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.

Fukuda, R.; Fukuda, T. Robustness Enhancement of Self-Localization for Drone-View Mixed Reality via Adaptive RGB-Thermal Integration. Technologies 2026, 14, 74. https://doi.org/10.3390/technologies14010074
