1. Introduction

The globally recognized One Health concept emphasizes the importance of animal management focused on controlling zoonotic diseases and ensuring food safety through risk management at the animal source, while also improving animal welfare and productivity [1]. The basis for efficient livestock management lies in the effective control of animal movements [2], which in turn ensures traceability of animal products and supports the monitoring of animal behavior and herd health. Individual animal identification plays a crucial role in modern livestock management, with ear tags and RFID (radio-frequency identification) transponders being the most widely used methods [3]. However, these methods are physically invasive, susceptible to loss or fraud, and prone to errors; therefore, new automated and non-invasive alternatives are being sought [4].

In recent years, computer vision and machine learning have emerged as promising approaches for visual biometric identification of animals. Convolutional neural networks (CNNs) have achieved high accuracy in identifying individual animals of various species, including sheep, pigs, cows, and even wild species [5,6]. They can automatically extract complex visual features and distinguish individual animals even when differences in appearance are small [7].

Nonetheless, numerous studies have shown that models trained in controlled environments often lose accuracy when applied to new or more diverse settings. This occurs because CNN models often learn background features (e.g., walls, lighting, equipment) rather than the intrinsic biometric features of the animal itself. This leads to overfitting to a specific background, which compromises the model’s generalization ability [4,8].

Analogous trends have been observed in general classification tasks, where the background can act as a strong predictive signal that the model exploits. Removing or neutralizing background information can significantly improve the robustness of neural networks to unfamiliar data [9].

Segmentation of image foreground and background has proven to be an effective method for reducing background interference [10]. Ref. [11] developed an algorithm for separating foreground and background regions, showing that independent modeling of the two components can improve object recognition accuracy.

This study explores how background removal influences cow identification with neural networks by evaluating the performance of networks trained on segmented foreground images (cow only), full images (cow plus background), and background-only images. We also compared two training strategies (using ImageNet pre-trained weights only, and fine-tuning) across two architectures: ResNet-50 and the Swin Transformer. The presence of constant backgrounds (e.g., walls and pens) in our dataset may facilitate recognition; however, this reflects the natural conditions of most livestock environments. Unlike artificially created datasets, in which subjects are isolated or backgrounds are randomized, farm environments are inherently repetitive, which can inadvertently train models to rely on contextual features. Accordingly, the experimental goal was to quantify whether constraining the model to rely on foreground features improves robustness to variations in pose and background. By isolating the foreground, we test the model’s ability to focus on the animal’s biometric cues rather than its environmental context, thereby addressing a previously understudied axis of variation in cattle identification. As our background-only experiments demonstrate, over-reliance on such static cues poses a risk to generalization: if an animal is moved to a different stall or farm, a background-dependent model may fail to identify it correctly. Therefore, the goal of this study is not only to assess foreground performance but also to quantify the extent to which replacing the background with white noise can support the development of models for realistic farm settings.

The paper is organized as follows:

Section 2 describes the Materials and Methods, including the dataset composition, data collection protocols, segmentation procedures, and training setup for both ResNet-50 and Swin Transformer models. It also outlines the evaluation strategy using Top-k accuracy and cross-validation.

Section 3 presents the experimental results across different background conditions and model architectures, supported by statistical analysis.

Finally, Section 4 concludes the paper by summarizing the main findings and outlining future directions for improving biometric consistency and reducing contextual bias in livestock identification systems.

2. Materials and Methods

The present study represents the second-stage experiment of an ongoing research project on visual cow face identification [4]. We took the dataset of cow images prepared during the first phase of the project and expanded it with new photographs taken on several farms in southern Bulgaria between August and September 2025. Images were captured in daylight at the farm sites using a Panasonic Lumix DMC-GX8 digital camera (Panasonic Corporation, Osaka, Japan) equipped with a 15 mm f/1.7 lens, resulting in a total of 599 images of 166 dairy cows (all adult individuals with typical breed characteristics). Three to four images were captured per animal, and records were maintained for each cow to ensure accurate traceability and identification. The expanded dataset was then deduplicated as described in Section 2.1. The dataset represents images that can be easily obtained under typical farm conditions. Images of each cow were captured sequentially, with limited variability in pose and background for each individual animal. This uniformity may lead a neural network to rely disproportionately on background similarity rather than on the distinctive biometric features of each animal.

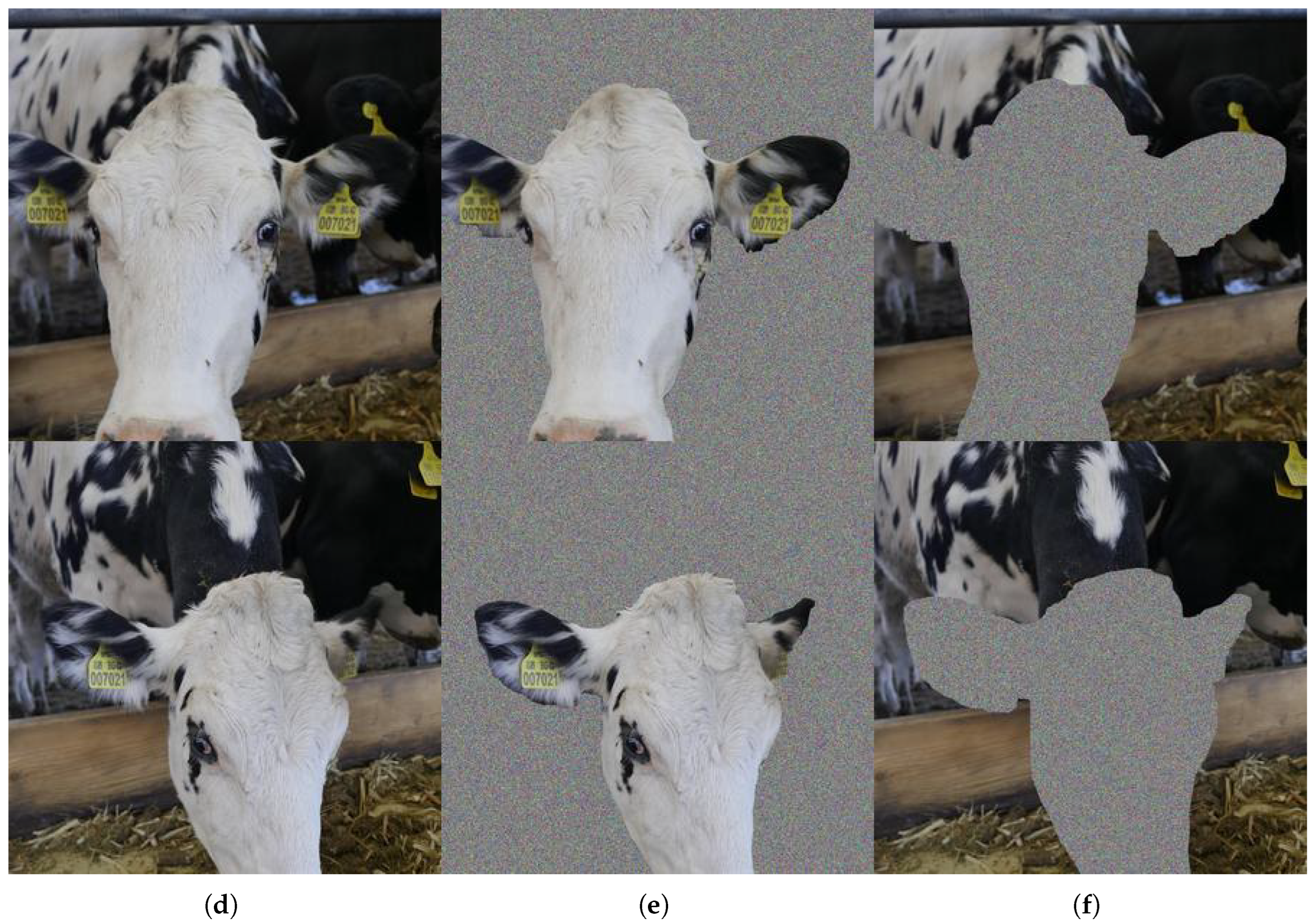

Annotation and segmentation were performed using the computer vision platform Roboflow Pro (version October 2025), where veterinarians manually annotated pixel-accurate masks for two classes: foreground (the cow) and background (the surrounding environment) [12,13]. Roboflow’s Smart Polygon tool uses AI models, including the Segment Anything Model, to generate detailed polygon masks for rapid and precise instance segmentation annotation. The tool provides several refinement options to improve annotation accuracy. Users can click inside the generated mask to remove unwanted regions or outside it to expand the selection, allowing precise adjustments. The tool also supports click-and-drag to define a region of interest, which is especially useful for ensuring precise separation between the animal and surrounding objects such as walls, feeding structures, and equipment. Additionally, users can adjust the polygon’s complexity using the Convex Hull, Smooth, or Complex settings to control vertex density. Vertices can be edited manually, and points can be undone if needed, ensuring full control over the final segmentation mask. The resulting masks were exported in the COCO format [14] for the training workflows.

To generate the foreground-only variant, background pixels were replaced with independently sampled white noise, thereby eliminating environmental cues while preserving the original features of the animal. The background-only images were produced in the same way, by replacing the foreground (cow) pixels with white noise.
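A minimal sketch of the noise replacement, assuming the COCO polygons have already been rasterized into a boolean mask that is `True` on the cow. The noise distribution is not specified in the text; uniform i.i.d. values over 0 to 255 are an assumption here, and `replace_with_noise` is a hypothetical helper name.

```python
import numpy as np

def replace_with_noise(image, mask, keep="foreground", rng=None):
    """Replace every pixel outside the kept region with independent uniform noise.

    image: H x W x C uint8 array; mask: H x W boolean array, True on the cow.
    keep="foreground" keeps the cow (background -> noise);
    keep="background" keeps the surroundings (cow -> noise).
    """
    if keep not in ("foreground", "background"):
        raise ValueError("keep must be 'foreground' or 'background'")
    rng = np.random.default_rng() if rng is None else rng
    keep_mask = mask if keep == "foreground" else ~mask
    # Assumed noise model: i.i.d. uniform values per pixel and per channel.
    noise = rng.integers(0, 256, size=image.shape, dtype=np.uint8)
    out = image.copy()
    out[~keep_mask] = noise[~keep_mask]  # boolean 2-D mask selects (N, C) pixel rows
    return out
```

Because a fresh noise array is drawn for every call, no two images share the same synthetic background, which is what prevents the noise itself from becoming an identity cue.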

ResNet-50 [15] and Swin Transformer [16] were chosen as the model architectures. Both were initialized with ImageNet pre-trained weights, their final classification layers were removed to extract embeddings, and they were then fine-tuned on an Nvidia B200 graphics processing unit (Nvidia, Santa Clara, CA, USA) with 180 GB of memory.

Because the project focuses on identifying individual cows from images, the triplet loss function was used [17,18]. The loss function uses triplets of samples, each containing an anchor (the reference example), a positive sample (an image of the same cow as the anchor), and a negative sample (an image of a different cow). The model was trained to bring images of the same cow closer together in the embedding space while pushing images of different cows farther apart.

As described in [4], the triplet loss function can be expressed mathematically as

$$\mathcal{L}(a, p, n) = \max\left(d(a, p) - d(a, n) + \alpha,\ 0\right),$$

where $d(a, p)$ is the distance between the anchor, $a$, and the positive sample, $p$; $d(a, n)$ is the distance between the anchor and the negative sample, $n$; and $\alpha$ is a positive margin that ensures a gap between the distances of positive and negative pairs.
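The triplet loss described above can be sketched for a batch of embeddings as follows. The Euclidean distance and the margin value of 0.2 are assumptions, because neither is stated in the text.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Batch mean of max(d(a, p) - d(a, n) + margin, 0).

    Inputs are (batch, dim) embedding arrays. d is the Euclidean distance and
    margin=0.2 is a placeholder; both are assumptions, not values from the study.
    """
    d_ap = np.linalg.norm(anchor - positive, axis=1)  # anchor-positive distances
    d_an = np.linalg.norm(anchor - negative, axis=1)  # anchor-negative distances
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))
```

A triplet contributes zero loss once the negative is at least `margin` farther from the anchor than the positive. In PyTorch, `torch.nn.TripletMarginLoss` computes the same hinge on pairwise distances.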

2.1. Deduplication

To identify near-duplicate images, perceptual hashing (pHash) was used. Images whose hashes lay within a Hamming distance threshold of 10 of each other were grouped as near-duplicates, and only one image from each group was retained.

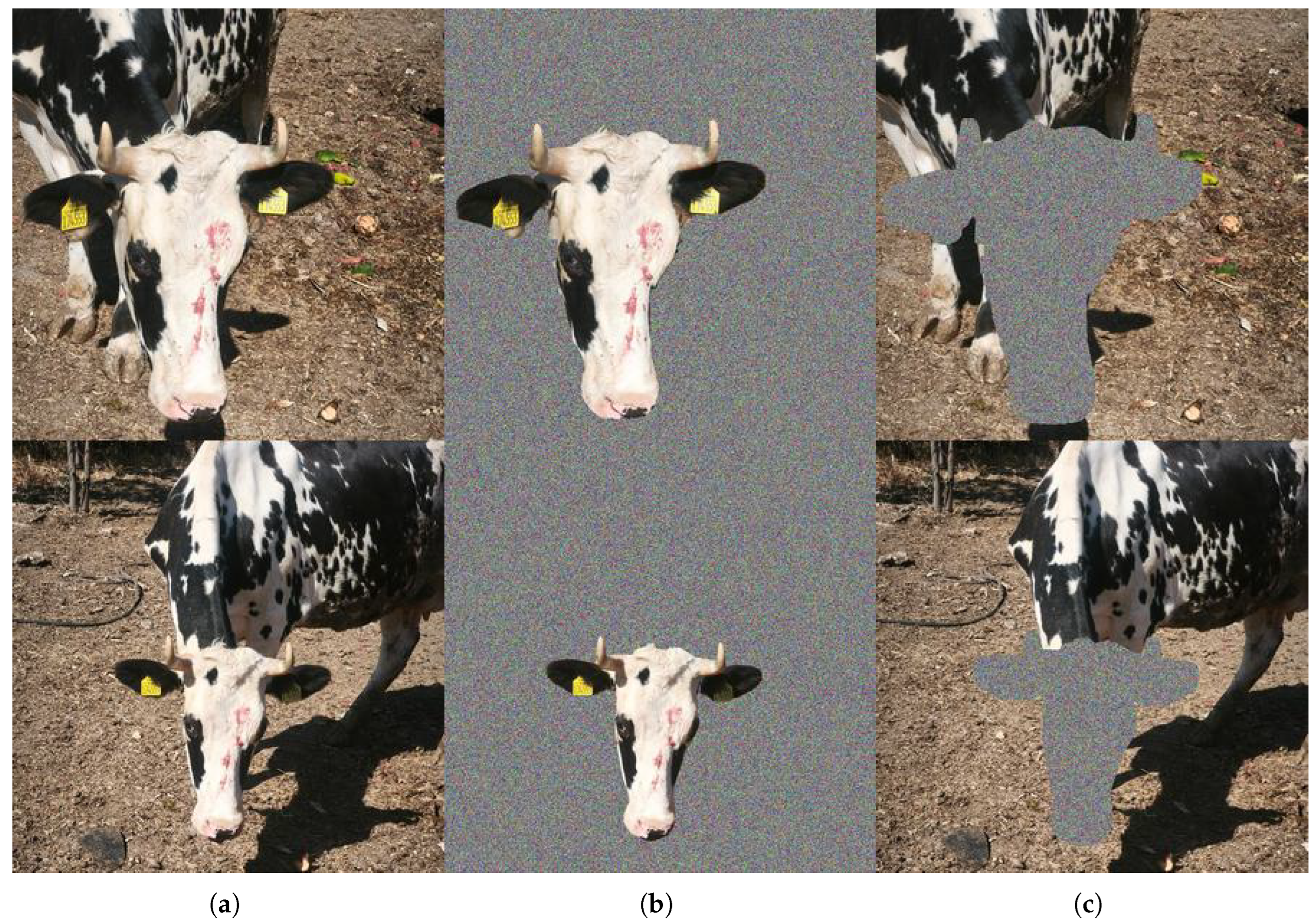

Figure 1 shows an example of images that exceed the similarity threshold and are therefore considered near-duplicates.

The perceptual hash is a 64-bit value generated by resizing an image to 32 × 32 pixels, converting it to grayscale, applying a 2D Discrete Cosine Transform (DCT), and retaining the top-left 8 × 8 block of low-frequency coefficients. The median of these 64 values was computed, and each coefficient was compared with it: if greater, the corresponding bit was set to 1; otherwise, 0. The resulting hash is balanced and robust to minor visual distortions. Similar images were identified using the Hamming distance between hashes [19]. Examples of images from the dataset can be found in Figure 2.

2.2. Data Augmentation and Preprocessing

To enhance model robustness and generalization, we applied distinct transformation pipelines to the training and testing datasets. Training transformations incorporated data augmentation, whereas testing focused on standardization. For training, images were first converted to tensors and then randomly cropped and resized to 1000 × 1000 pixels using a scale between 0.9 and 1.0. Horizontal flips were applied with a 50% probability, followed by random rotations of up to 15 degrees. Small affine transformations were introduced, including rotations of up to 5 degrees, translations of up to 5%, and scaling between 0.95 and 1.05. Finally, tensors were normalized using ImageNet mean and standard deviation values. These augmentations increased dataset diversity and helped reduce overfitting. For testing, images were resized to 1000 × 1000 pixels, converted to tensors, and normalized using the same statistics. This ensured consistent preprocessing without augmentation, enabling fair evaluation.

2.3. Cross-Validation Methodology

To robustly evaluate model performance, three-fold cross-validation on a dataset of 125 individuals was used, dividing the data into three approximately equal groups. In each fold, one group served as the training set, another as the validation set for early stopping to prevent overfitting, and the third as the test set for final evaluation. Specifically, in Fold 1 the groups were assigned as training, validation, and test, respectively; in Fold 2 the assignments were cycled so that the original test group became training, training became validation, and validation became test; in Fold 3 the cycle continued, with the original validation group serving as training, test as validation, and training as test. This ensured that each group was used for testing exactly once, so that the performance estimates cover the entire dataset.
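The role rotation described above can be sketched in a few lines. The random split into groups by individual ID, and the helper names, are illustrative assumptions; the text does not say how the three groups were formed.

```python
import random

def split_into_groups(ids, n_groups=3, seed=0):
    """Hypothetical helper: shuffle IDs and deal them into n_groups near-equal groups."""
    ids = list(ids)
    random.Random(seed).shuffle(ids)
    return [ids[i::n_groups] for i in range(n_groups)]

def rotate_folds(groups):
    """Return the (train, val, test) assignment for each of the three folds.

    Fold 1 uses (g1, g2, g3); each following fold shifts roles so that the previous
    test group becomes training, training becomes validation, and validation becomes test.
    """
    train, val, test = groups
    folds = []
    for _ in range(3):
        folds.append({"train": train, "val": val, "test": test})
        train, val, test = test, train, val
    return folds
```

With 125 individuals, the groups contain 42, 42, and 41 IDs, and every ID appears in exactly one test split.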

2.4. Model Architecture

ResNet-50 and Swin Transformer (Swin-T) were selected as backbones because of their established status in computer vision research and their extensive validation across numerous benchmarks and real-world applications [20]. Despite this commonality, the two architectures represent fundamentally different design paradigms: ResNet-50 is a convolutional neural network that builds hierarchies of local features through residual connections, whereas Swin-T is a hierarchical vision transformer that captures longer-range context through self-attention computed within shifted local windows.

The Swin Transformer embedding backbone was initialized with ImageNet pre-trained weights, and its final classification head was removed so that pooled features were extracted directly. The output dimension was 768, which defines the embedding space for image representations.

For the ResNet-50 embedding backbone, the weights were likewise initialized with ImageNet pre-trained weights. The final classification layer was removed to extract features from the average pooling layer, giving an output dimension of 2048. During the forward pass, input images were processed through the backbone to obtain feature maps, which were then flattened into vectors. These vectors served as embeddings that capture semantic information from the images, and they were compared using cosine similarity.

2.5. Training Methodology

The training process used the PyTorch Lightning trainer framework [21], configured with a maximum of 100 epochs to allow sufficient iterations for convergence. An early-stopping callback monitored the validation loss and halted training if no improvement occurred for 20 consecutive epochs, preventing overfitting and saving computation. The model therefore trained until either convergence or the patience threshold was reached, balancing thorough learning with resource constraints. After each epoch, the trainer evaluated the triplet loss on the validation set to track generalization.

Optimization employed the Adam optimizer with a learning rate of and default values for the remaining hyperparameters. Model checkpointing retained the best-performing version according to the validation triplet loss. This setup promotes stable and efficient training across the cross-validation folds and the two backbone architectures.

Triplets were sampled dynamically during training. For each mini-batch, an anchor-positive pair was selected from the same class, while the negative was selected from a different class as the sample whose embedding was closest to the anchor under the current model. Selecting the closest negative corresponds to a hard-negative mining strategy, which encouraged the model to learn more discriminative embeddings.
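The in-batch mining step can be sketched as follows. The closest-negative rule follows the text. Euclidean distance and choosing the farthest same-class sample as the positive (the "batch-hard" convention) are assumptions, because the text only says the positive comes from the same class.

```python
import numpy as np

def batch_hard_triplets(embeddings, labels):
    """Return (anchor, positive, negative) index triples for one mini-batch.

    Negative: closest sample with a different label (as described in the text).
    Positive: farthest sample with the same label (an assumed batch-hard choice).
    Anchors without another same-label sample in the batch are skipped.
    """
    labels = np.asarray(labels)
    idx = np.arange(len(labels))
    diff = embeddings[:, None, :] - embeddings[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)  # pairwise Euclidean distances
    triplets = []
    for a in idx:
        same = np.flatnonzero((labels == labels[a]) & (idx != a))
        other = np.flatnonzero(labels != labels[a])
        if same.size == 0 or other.size == 0:
            continue
        p = same[np.argmax(dist[a, same])]   # hardest positive
        n = other[np.argmin(dist[a, other])]  # hardest (closest) negative
        triplets.append((int(a), int(p), int(n)))
    return triplets
```

Mining inside each mini-batch keeps the cost at one pairwise distance matrix per step, and the triples are refreshed as the embeddings change during training.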

Training was conducted using PyTorch Lightning (v2.6.0) and PyTorch (v2.8.0) [22].

2.6. Evaluation Metrics

Model performance in identification tasks was evaluated using multiple metrics to assess ranking accuracy and precision. Top-k accuracy (k = 1, 3, and 5) measures the proportion of test queries for which the true identity appears among the k highest-ranked predictions, which makes it suitable for tasks with high inter-class similarity. For retrieval-based evaluation, Mean Average Precision (mAP) sorts the reference images by distance to each test embedding, averages the precision at the positions of the correct identity, and then averages this value over all test queries. The Cumulative Match Characteristic (CMC) reports the cumulative accuracy at specific ranks (e.g., rank-1, rank-5), i.e., the fraction of test queries whose correct identity appears within the top-k; the CMC value at rank k therefore equals the top-k accuracy. 95% confidence intervals (CIs) were calculated for the top-k accuracies and mAP.
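The ranking metrics above can be sketched from a query-versus-reference ranking as follows. Ranking uses cosine distance, matching the cosine similarity used for the embeddings. Two conventions here are assumptions: ranks are counted over reference images rather than unique identities, and a query with no correct match in the reference set receives an AP of 0.

```python
import numpy as np

def rank_references(query_emb, ref_emb, ref_labels):
    """For each query, return the reference labels sorted by increasing cosine distance."""
    q = query_emb / np.linalg.norm(query_emb, axis=1, keepdims=True)
    r = ref_emb / np.linalg.norm(ref_emb, axis=1, keepdims=True)
    dist = 1.0 - q @ r.T
    order = np.argsort(dist, axis=1, kind="stable")
    return np.asarray(ref_labels)[order]

def cmc(ranked_labels, query_labels, max_rank=10):
    """CMC[k-1] = fraction of queries whose identity appears in the top k (= top-k accuracy)."""
    hits = ranked_labels == np.asarray(query_labels)[:, None]
    first_hit = np.where(hits.any(axis=1), hits.argmax(axis=1), np.inf)  # 0-based rank
    return np.array([(first_hit < k).mean() for k in range(1, max_rank + 1)])

def mean_average_precision(ranked_labels, query_labels):
    """AP per query = mean precision at the ranks of correct matches; mAP = mean of AP."""
    hits = ranked_labels == np.asarray(query_labels)[:, None]
    aps = []
    for row in hits:
        ranks = np.flatnonzero(row) + 1  # 1-based ranks of correct matches
        if ranks.size == 0:
            aps.append(0.0)
            continue
        aps.append(float(np.mean(np.arange(1, ranks.size + 1) / ranks)))
    return float(np.mean(aps)), np.array(aps)
```

For example, a query whose identity appears at ranks 1 and 3 has precisions 1/1 and 2/3, giving an AP of about 0.833.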

3. Results and Discussion

The experimental framework aims to evaluate how the removal of background information influences the performance of neural networks in the context of visual cow identification. Four distinct training configurations were implemented for each of the two architectures.

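Three variants of the dataset were subjected to the experimental analysis: full images (cow plus background), foreground-only images (background replaced with white noise), and background-only images (cow replaced with white noise).

Results from the models pretrained only on ImageNet, without fine-tuning, are also reported.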

For each configuration, performance was assessed through the cross-validation procedure described in Section 2.3.

Table 1 and Table 2 show the results of the ResNet-50 and Swin-T models, respectively. The 95% confidence intervals for the top-1, top-3, and top-5 accuracy estimates were calculated using the ordinary Wald interval, a standard normal approximation for binomial proportions. For each accuracy proportion $p$ derived from 125 test examples, the interval is computed as $p \pm z \cdot \mathrm{SE}$, where $z = 1.96$ (the z-score for 95% confidence) and $\mathrm{SE} = \sqrt{p(1-p)/n}$ with $n = 125$. The margins of error reported in the tables represent these standard errors, expressed as percentages.
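As a worked example of the Wald interval described above, consider a top-1 accuracy of 80.00% (the upper end of the ResNet-50 full-image range) with n = 125. The helper name is hypothetical.

```python
import math

def wald_interval(p, n, z=1.96):
    """Return (standard error, lower bound, upper bound) for a binomial proportion."""
    se = math.sqrt(p * (1.0 - p) / n)
    return se, p - z * se, p + z * se

se, lo, hi = wald_interval(0.80, 125)
```

Here SE = sqrt(0.8 × 0.2 / 125) ≈ 0.0358, i.e., about 3.58 percentage points, and the 95% interval is roughly 72.99% to 87.01%. With n = 125, intervals of this width explain why many of the accuracy differences between configurations overlap.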

The ResNet-50 model achieved top-1 accuracies ranging from 78.40% to 80.00% when evaluated on full images, with corresponding top-3 and top-5 accuracies of 82.40% to 86.40%. Comparable accuracy levels have been reported in recent livestock biometrics research [23,24]. Performance on isolated backgrounds was lower, with top-1 accuracies of 69.60–76.00%, while foreground-only tests yielded even lower results (54.40–60.00%). Notably, the ImageNet-pretrained variant performed comparably to the fine-tuned models on full images but struggled more with segmented components.

In contrast, the Swin-T model achieved higher performance, with top-1 accuracies of 80.00–84.80% on full images and backgrounds and 78.40–84.00% on foreground-only images, and top-5 accuracies occasionally exceeding 88.00%. The ImageNet-pretrained Swin-T model maintained strong baseline performance, which may indicate a greater capacity to exploit hierarchical spatial features for cow identification.

In Table 3, mean Average Precision (mAP) was used to evaluate identification performance. For each test image, the reference set was ranked by increasing distance to the test embedding obtained from the triplet-loss-trained network. Average Precision (AP) per test image was calculated as the mean precision at the ranks where correct identities appeared, accounting for multiple correct matches, and mAP was computed as the average AP across all test images. 95% confidence intervals were estimated using bootstrap resampling with 20,000 iterations and the bias-corrected and accelerated (BCa) method, which corrects for bias and skewness in the sampling distribution. This correction is important for mAP given the bounded [0, 1] range and potential non-normality of AP values [25].
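The BCa bootstrap described above can be reproduced with SciPy's `scipy.stats.bootstrap`, applied to the per-query AP values. This is a sketch that assumes SciPy was the tool used. For reproducibility, a seeded generator can be passed through bootstrap's random-state argument (named `random_state` or `rng` depending on the SciPy version).

```python
import numpy as np
from scipy.stats import bootstrap

def map_confidence_interval(ap_values, n_resamples=20_000):
    """Return (mAP, lower, upper) with a 95% BCa bootstrap interval for the mean AP."""
    ap_values = np.asarray(ap_values, dtype=float)
    res = bootstrap(
        (ap_values,),               # data is passed as a sequence of samples
        np.mean,                    # statistic: mean AP, i.e. mAP
        n_resamples=n_resamples,
        confidence_level=0.95,
        method="BCa",
    )
    ci = res.confidence_interval
    return float(ap_values.mean()), float(ci.low), float(ci.high)
```

Because AP values are bounded in [0, 1] and often skewed, the BCa interval is usually asymmetric around the mAP, unlike the symmetric Wald interval used for the top-k accuracies.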

The mAP results show that Swin-T outperforms ResNet-50 across all training and test set combinations, with improvements ranging from 1.9% to 20.3%. Notably, training on foreground regions yields the highest mAP for full-image tests in Swin-T (70.90%, 95% CI: 65.00–76.06%), suggesting that focusing on cow-specific features enhances generalization. Conversely, for foreground-only tests, the ImageNet-pretrained models achieve the highest performance (71.28%, 95% CI: 65.50–76.45% for Swin-T), indicating that pretraining on large-scale, diverse datasets can yield competitive identification accuracy on segmented regions. Overall, non-overlapping confidence intervals indicate statistically significant differences in several comparisons, whereas the remaining differences are inconclusive.

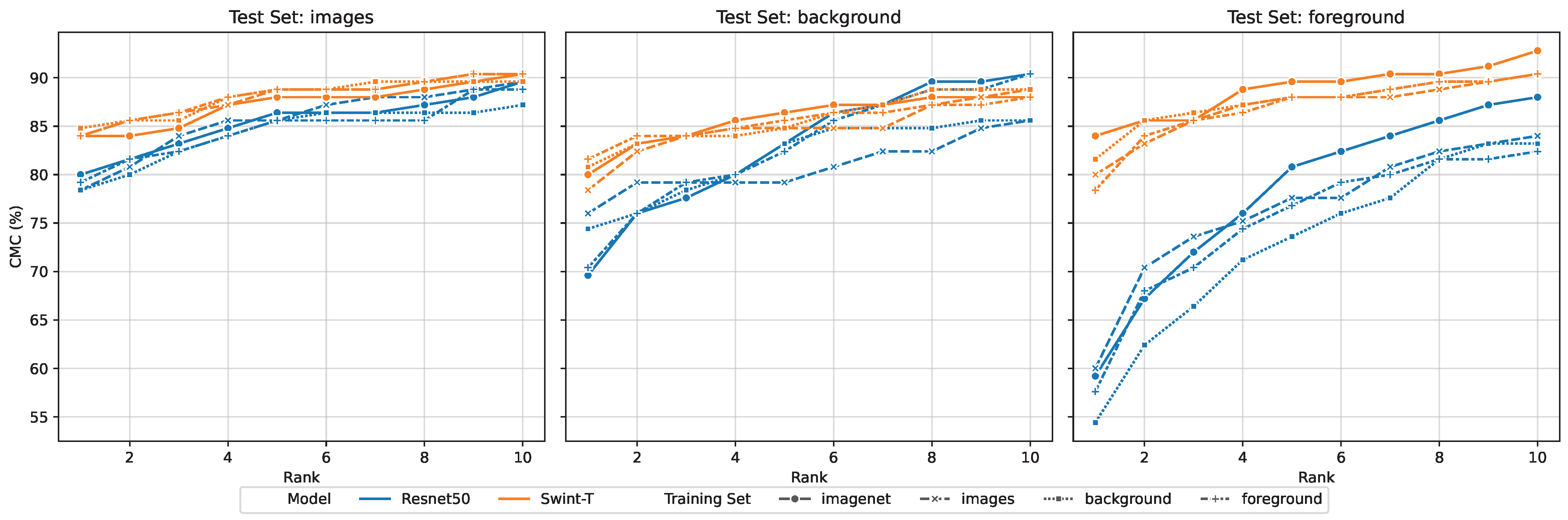

The Cumulative Match Characteristic (CMC) plots in Figure 3 illustrate the ranking performance of the ResNet-50 and Swin-T models across different training and test sets, with each subplot representing one test set (full images, backgrounds, or foregrounds). CMC measures cumulative retrieval accuracy in identification tasks: for a given rank k, it is the fraction of test images for which the correct identity appears within the top-k ranked predictions. Curves were computed by ranking predicted labels by confidence for each test image and then calculating the cumulative accuracy at ranks 1 through 10; the CMC value at rank 1 is therefore the top-1 accuracy. The results show that Swin-T generally outperforms ResNet-50, with higher rank-1 CMC values (e.g., 84% for foreground-trained Swin-T on the full-image test set vs. 79.2% for ResNet-50).

Overall, Swin-T outperformed ResNet-50 in most test scenarios, particularly on segmented datasets, highlighting potential advantages of transformer-based architectures over convolutional networks for this application. However, the reported standard errors reveal overlapping confidence intervals in several configurations, indicating that the observed differences may not be statistically significant. The results are therefore indicative of potential trends rather than conclusive evidence of superiority. Both models showed reduced accuracy on foreground-only inputs, suggesting that substituting the background with white noise does not by itself overcome the challenges of identification in farm environments. This finding corroborates earlier studies on the attribution of cow identification models [4] and on multi-species identification tasks [8], which observed that background information in animal identification datasets often correlates strongly with identity labels.

The survey in [26] likewise discusses environmental factors and background patterns as factors that can influence visual animal analysis.

Comparable patterns of background dependence have been reported in both wildlife re-identification [27] and cattle identification research [28,29]. The variability in performance also highlights the importance of greater diversity in imaging conditions, such as pose, lighting, and multi-environment sampling, as emphasized in recent livestock biometrics reviews [23,24,30].

Ref. [9] found similar trends, showing that removing background often reduces accuracy but improves robustness to novel backgrounds. Ref. [11] likewise demonstrated that foreground-background separation enhances reliability by forcing networks to rely on object-relevant cues rather than environmental artifacts.

The observation that the ImageNet-pretrained models achieve accuracies between 59.2% and 84% without fine-tuning suggests that ImageNet-pretrained backbones already act as fairly general-purpose feature extractors. Texture-bias studies [31] have shown that CNNs trained on natural images preferentially learn textural patterns, which can transfer to farm settings and allow identification based on visual context rather than biometric identity. This behavior matches findings from explainable AI analyses in livestock identification, where CNN attention maps often highlight large background regions, and it reinforces the conclusion of [4] that CNN-based cow identification often relies on background regions even when the animal’s face is prominent. Similar phenomena were documented by [8], who observed that models trained without foreground-background separation on wildlife datasets exhibited substantial contextual overfitting.

In the present experiment, models trained exclusively on background images outperform those trained on foreground images when tested on full images, although the difference is not statistically significant because the confidence intervals overlap. This suggests that neural networks can implicitly use background characteristics as a proxy for identity, especially when background conditions vary across individuals but remain consistent for the same individual. Similar implications have been documented in multi-feature cattle identification systems [29].

4. Conclusions

The experimental results indicate that background information may influence the performance of neural-network-based cow identification models and, in certain settings, may contribute to confounding effects. The higher accuracy observed in background-inclusive configurations suggests that contextual cues can affect identity recognition, pointing to a potential tendency toward contextual reliance in visual animal recognition systems. In contrast, foreground-only segmentation can be viewed as a useful analytical tool for examining the contribution of intrinsic biometric features, rather than as a definitive or deployable preprocessing strategy.

It should be emphasized that replacing the background with white noise produces images that differ from those encountered in natural farm environments. Accordingly, this approach was used solely as a controlled ablation to suppress structured background information, and not as a realistic data representation. Alternative techniques that preserve isolated foregrounds while remaining closer to the natural image manifold—such as blurring, padding, or inpainting—may offer more practical avenues for future investigation, particularly with respect to model interpretability and robustness.

The comparative evaluation of CNN-based (ResNet-50) and transformer-based (Swin-T) architectures under multiple training configurations provides additional perspective on these observations. The stronger performance observed for Swin-T relative to ResNet-50 indicates that architectural choices may affect how biometric and contextual information are utilized.

Overall, this study points to the challenges associated with background uniformity in farm-based image datasets. While background-related features may contribute to improved performance within a single environment, they may also constrain generalization across different farms or camera configurations. Given the limited dataset size and the exploratory scope of the analysis, the findings should be considered indicative rather than conclusive. Future work should focus on larger and more diverse datasets and training strategies designed to reduce sensitivity to background variation, particularly in cross-environment deployment scenarios.