1. Introduction

Electroencephalography (EEG) is a non-invasive neurophysiological technique that measures the electrical activity generated by the brain. It was first introduced by Hans Berger in 1929, who observed that brain activity can be measured via electrodes placed on the scalp [1]. Since then, EEG has become an essential tool in neuroscience, psychology, and clinical diagnostics [2]. Unsurprisingly, the majority of articles studying EEG signals serve purely medical purposes, such as the diagnosis of epileptic seizures, dementia, and mental disorders [3]. However, EEG signals also have significant potential in non-clinical applications, including assistive devices [4].

Recently, EEG sensors have also been viewed as a communication channel between the human brain and a computer system, which led to the concept of the Brain–Computer Interface (BCI). A BCI enables direct communication between the brain and external devices, bypassing traditional neural pathways: it converts brain signals into commands that control computers, prosthetics, or other devices [5]. BCIs are broadly categorized into invasive and non-invasive types. Invasive BCIs involve implanting electrodes directly into the brain, which offers high spatial resolution but poses surgical risks. Non-invasive BCIs, such as the many commercial EEG-based systems, use external sensors placed on the scalp; they are safer and more practical for everyday use, despite their lower spatial resolution.

Non-invasive BCIs have made it possible to take this technology out of the controlled clinical environment, opening opportunities for a wide range of researchers to develop their own EEG recording and analysis systems. Moreover, commercially available EEG readers have made it possible to use EEG signals collected from subjects for purposes beyond clinical applications. This field has grown rapidly in recent years, driven by advances in machine learning (ML): learning models have replaced time-consuming visual signal analysis. Numerous automated solutions have been proposed for various non-medical purposes, and a growing number of prototype systems combining BCI and ML technologies have been developed for gaming, robotics, and the entertainment industry [6,7,8].

The EEG signal represents voltage fluctuations that non-invasive devices detect through electrodes placed on the scalp. These voltage changes are generated by ionic currents within neurons during brain activity [9]. Numerous EEG readers are available on the market today; among them, the best known are probably the NeuroSky MindWave Mobile, the Emotiv EPOC, and the OpenBCI EEG Electrode Cap, mainly due to their relatively low prices [10]. The samples coming from an EEG reader form a discrete time-varying signal. Transformed into the frequency domain, this signal can be decomposed into five main frequency bands: delta (δ: 0.5–4 Hz), theta (θ: 4–8 Hz), alpha (α: 8–13 Hz), beta (β: 13–30 Hz), and gamma (γ: >30 Hz) [1,11]. Each band is associated with specific cognitive or emotional states. For instance, alpha waves are linked to relaxation, while beta waves are associated with concentration and problem-solving. This makes EEG useful for studying mental states such as attention, memory, and emotion, which manifest in distinct brainwave patterns [12].
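To make the band definitions concrete, the following sketch estimates the power of a single-channel signal in each of the five bands from a Welch power spectral density. It is an illustration under our own assumptions (Welch's method, 256-sample segments, a simple rectangle-rule sum over frequency bins), not part of the pipeline described in Section 3.4, which separates the bands with FIR filters instead. The names `BANDS` and `band_powers` are hypothetical.

```python
import numpy as np
from scipy.signal import welch

# Band limits in Hz, following the text; gamma (>30 Hz) is open-ended,
# so its effective upper edge is the Nyquist frequency.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, float("inf")),
}


def band_powers(x: np.ndarray, fs: float) -> dict:
    """Approximate absolute power of a 1-D signal in each EEG band."""
    freqs, psd = welch(x, fs=fs, nperseg=min(len(x), 256))
    df = freqs[1] - freqs[0]  # frequency resolution of the PSD estimate
    powers = {}
    for name, (lo, hi) in BANDS.items():
        # Half-open interval [lo, hi) so a bin on a shared edge is counted once
        mask = (freqs >= lo) & (freqs < hi)
        powers[name] = float(psd[mask].sum() * df)
    return powers
```

For example, a pure 10 Hz tone sampled at 125 Hz puts almost all of its power into the alpha entry of the returned dictionary.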

EEG readers provide real-time data with high temporal resolution, enabling researchers to observe neural processes in response to various stimuli. It is widely accepted that multimedia devices have a significant impact on the human brain: the images and sounds they emit are stimulating and influence human emotions. Researchers have been studying the positive effects of music on mood and behavior for years, and several beneficial effects have been reported, such as improved stress tolerance and the alleviation of depressive states [13].

This study presents a fully self-developed system for examining the effects of different styles of musical stimuli and for recognizing the signal patterns the music may generate, using a machine learning model. The contributions of this paper are as follows:

Using data collected from five volunteers, we achieved a music recognition accuracy of 61–96% with a neural network fed with vectorized time- and frequency-domain features extracted from the EEG.

Using confusion matrices, we demonstrate that the recognition accuracy of individual music tracks varies between subjects, which leads us to conclude that different music tracks affect individuals differently.

The results also indicate that if the EEG data used for testing the model are collected at a different time than the data used for training, the trained model does not perform effectively. In our view, this phenomenon can be traced back to intra-individual variability.

2. Related Work

Because EEG measures brainwave activity across multiple frequency bands, it is valuable for studying the effects of external stimuli such as music, which is well known to significantly influence the listener’s emotions. Several earlier papers have examined the relationship between brainwaves and music from various perspectives. The authors of [8] observed that the power in the gamma and theta bands increases during music listening, depending on whether the sounds are pleasant or unpleasant. Another study found that rock and classical music decrease the power of the alpha and theta bands while increasing the power of the beta band [14]. The study in [13] concluded that different styles of music (such as country and rock) affect the power of the EEG frequency bands differently, so band power varies with the type of music.

With the advancement of EEG readers, more and more researchers have begun exploring how to determine basic emotional states (happiness, surprise, fear, anger, disgust, and sadness) from EEG signals collected while the subject is exposed to visual and/or musical stimuli. In [15], image and sound stimuli were used to elicit emotional reactions, which were classified with k-nearest neighbor (kNN) and support vector machine (SVM) models fed with higher-order spectral (HOS) features. The goal was to compare the emotional states of healthy subjects and subjects with Parkinson’s disease. At the end of the experiment, participants filled out a questionnaire listing six basic emotional states, in which they rated the strength of each emotion on a five-point scale. The authors of [16] used segments cut from film soundtracks to evoke basic emotions (sadness, pleasure, joy, and anger). To recognize these emotions, they employed an artificial neural network trained on data labeled with emotional states determined from self-reports. Other papers have likewise aimed to recognize emotional states with data labeling based on questionnaire responses. For example, the authors of [17] aimed to identify four emotional states and achieved an accuracy of 82% using an SVM, while the authors of [18] reported recognizing two emotional states with 81% accuracy.

The above-mentioned studies show that there is a relationship between EEG signals and various cognitive and emotional states. The problem is that in most studies aimed at recognizing emotional states, data labeling was based on the participants’ self-reports, which can be unreliable because a mental state cannot be directly defined and it changes relatively slowly [19]. In [20], three different methods were used to recognize the neutral, pleasant, and unpleasant emotions of elderly couples while they played a computer game. Various approaches were applied to label the data, and the authors also concluded that recognizing emotional states based on self-reports is not accurate. This issue has been noted by others as well [21,22].

Unlike the studies presented above, the authors of [22,23] examined the effect of music tracks on EEG signals rather than on emotions. Multiple participants listened to 10 music tracks of various styles, including rock, pop, and classical music. The hypothesis was that different music styles would generate distinguishable patterns that could be identified using a neural network. The collected EEG signals were labeled by self-developed software, which gathered data from the EEG reader during music playback and used the currently playing track as the label. In [23], the EEG reader was a NeuroSky MindWave Mobile, and the authors measured a music recognition accuracy below 30%. In contrast, [24] used a 14-channel EMOTIV EPOC+ EEG reader for data collection and determined the title of the music from the recorded EEG signals with an accuracy between 56.3% and 78.9%, depending on the participant. The present study repeats the experiment presented in [22,23,24], but with a 16-channel EEG reader and an improved EEG signal processing method.

3. Materials and Methods

3.1. EEG Reader

In the past decade, several EEG sensor manufacturers have entered the market. One of the well-known ones is OpenBCI (New York, NY, USA), whose electrode cap with Ag/AgCl electrodes was used in this study (Figure 1). The signals from the electrodes were captured using the 16-channel OpenBCI CytonDaisy biosensing board. The electrodes were positioned according to the international 10–20 system, ensuring consistent placement over standard regions of the scalp (Figure 1). The recommended electrode gel was used to reduce the impedance between the scalp and the electrodes.

The CytonDaisy board, powered by a PIC32MX250F128B microcontroller, can sample up to 16 channels at a rate of 125 Hz. The board was powered using 4 AA batteries to avoid noise from external power sources. It transmits raw data via a Bluetooth connection to the receiving unit, which appears as a UART (universal asynchronous receiver transmitter) port on the host machine.

3.2. Data Acquisition Software

Real-time EEG signal acquisition software was developed for this investigation in Python (version 3.12.1). The implementation relies on the following libraries:

BrainFlow (5.18.1): For communication between the OpenBCI CytonDaisy board and the EEG data acquisition software.

NumPy (2.3.3) and SciPy (1.16.2): For numerical operations and signal processing.

Pandas (2.3.2): For organizing and managing EEG data.

Matplotlib and Seaborn (0.13.2): For visualizing signals and model performance.

Keras (TensorFlow backend) (3.0): For building and training the ML model.

Scikit-learn (Sklearn) (1.4.2): For model evaluation metrics and machine learning utilities.

PyQt5 (5.15.10) and Pygame (2.6.1): For the GUI (graphical user interface) development and for audio management.

The GUI manages data acquisition, provides real-time feedback, starts, pauses, and stops the recording, and synchronizes the EEG data with music playback. It was built with PyQt5 and integrated with the BrainFlow API and with Pygame for audio playback. The system automatically starts recording EEG data when a track begins playing and saves the EEG data, together with the track information, to CSV files.
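The labeling idea (every sample recorded while a track plays receives that track as its label) can be sketched as below. The function `append_labeled_samples` and its CSV column layout (`trial`, `track`, `ch1` to `chN`) are hypothetical; audio playback, the GUI, and the BrainFlow streaming calls are deliberately left out so the sketch runs without hardware.

```python
import csv
import os

import numpy as np


def append_labeled_samples(path: str, samples: np.ndarray, track: str, trial: int) -> int:
    """Append one CSV row per sample: trial number, track label, channel values.

    `samples` is a (n_channels, n_samples) array, i.e. channel-major, which is
    how BrainFlow returns board data once the EEG rows have been selected.
    Returns the number of sample rows written (a header row is not counted).
    """
    n_channels, n_samples = samples.shape
    new_file = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        if new_file:
            writer.writerow(["trial", "track"] + [f"ch{i + 1}" for i in range(n_channels)])
        # Transpose so that each row holds one time sample across all channels
        for row in samples.T:
            writer.writerow([trial, track, *row.tolist()])
    return n_samples
```

Calling this once per received data chunk, with the title of the currently playing track, produces a file in which the label travels with every sample.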

3.3. Data Acquisition Procedure

Five participants, four male and one female, took part in the study. Before the data collection began, all participants were informed about the purpose of the experiment and the process of data collection. The participants’ ages were 25, 22, 20, 21, and 22 years, in that order. None of the participants were bald. The female participant (4th subject) had long hair, while the others had medium-length hair. All of them were undergraduate (BSc) students. According to their own statements, they were physiologically and psychologically healthy, right-handed, and they were not under medical treatment.

During the data collection phase, each participant listened to 10 songs. The songs were selected to come from different musical genres and to be popular within their respective genres. The selected songs can be categorized into 9 musical genres: Latin Pop, Techno, Jazz, Heavy Metal, Hip-Hop, Blues, Neoclassic, Classic, and Country. The genre of a song typically indicates its BPM (beats per minute) range: songs in the Classic, Hip-Hop, or Blues categories generally have a low BPM, whereas Techno and Metal songs have a high BPM. BPM is a significant factor because it affects the power of the EEG frequency bands [25]. Similarly to [22], the following songs were used as musical stimuli in this investigation:

Ricky Martin—Vente Pa’ Ca (Latin Pop)

Dj Rush—My palazzo (Techno)

Bobby McFerrin—Don’t worry be happy (Jazz)

Los Del Rio—La Macarena (Latin Pop)

Slipknot—Psychosocial (Heavy Metal)

50 Cent—In da Club (Hip-Hop)

Ray Charles—Hit the road Jack (Blues)

Clint Mansell—Lux aeterna (Neoclassic)

Franz Schubert—The trout (Classic)

Rednex—Cotton Eye Joe (Country)

During music listening (data acquisition), participants sat in a quiet room with minimal external stimuli and were instructed to sit still and keep their eyes closed. Each track lasted one minute and was followed by a 40 s break. Listening to entire songs would take too much time, and our experience suggests that the data collection process could become tiring and monotonous for participants; therefore, we used only a 1 min segment from the middle of each song. Participants repeated this process five times on the same day, resulting in 5 trials per track for each participant. During data acquisition, we followed the ethical principles of the Declaration of Helsinki.

3.4. Signal Processing

In most practical problems, the collected raw data pass through a preprocessing stage before reaching the machine learning model. The first preprocessing step was windowing: the signal coming from the EEG reader is a discretized data stream, which was divided into short segments called windows. The next step was mean subtraction, where the mean value was computed from the training data collected from the subjects. Mean subtraction removes any DC offset and centers the EEG signal around zero.
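Windowing and mean subtraction can be sketched as follows. The text does not state whether consecutive windows overlap, so the hop length is a free parameter here (an assumption); the per-channel means are estimated on the training windows only and then reused for validation and test windows. At the CytonDaisy's 125 Hz, a 2 s window holds 250 samples per channel and a 30 s window 3750, so a 1 min trial yields only two non-overlapping 30 s windows. The function names are hypothetical.

```python
import numpy as np


def make_windows(recording: np.ndarray, fs: float, win_s: float, hop_s: float) -> np.ndarray:
    """Cut a (channels, samples) recording into (n_windows, channels, win_len) windows."""
    win = int(round(win_s * fs))
    hop = int(round(hop_s * fs))
    n_channels, n_samples = recording.shape
    starts = range(0, n_samples - win + 1, hop)
    if len(starts) == 0:  # recording shorter than one window
        return np.empty((0, n_channels, win))
    return np.stack([recording[:, s:s + win] for s in starts])


def fit_channel_means(train_windows: np.ndarray) -> np.ndarray:
    """Per-channel mean over all training windows and samples, shape (1, channels, 1)."""
    return train_windows.mean(axis=(0, 2), keepdims=True)


def subtract_mean(windows: np.ndarray, means: np.ndarray) -> np.ndarray:
    # Broadcasting removes the training-set offset of each channel from every window
    return windows - means
```

Estimating the means on the training set alone keeps information from the test windows out of the preprocessing.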

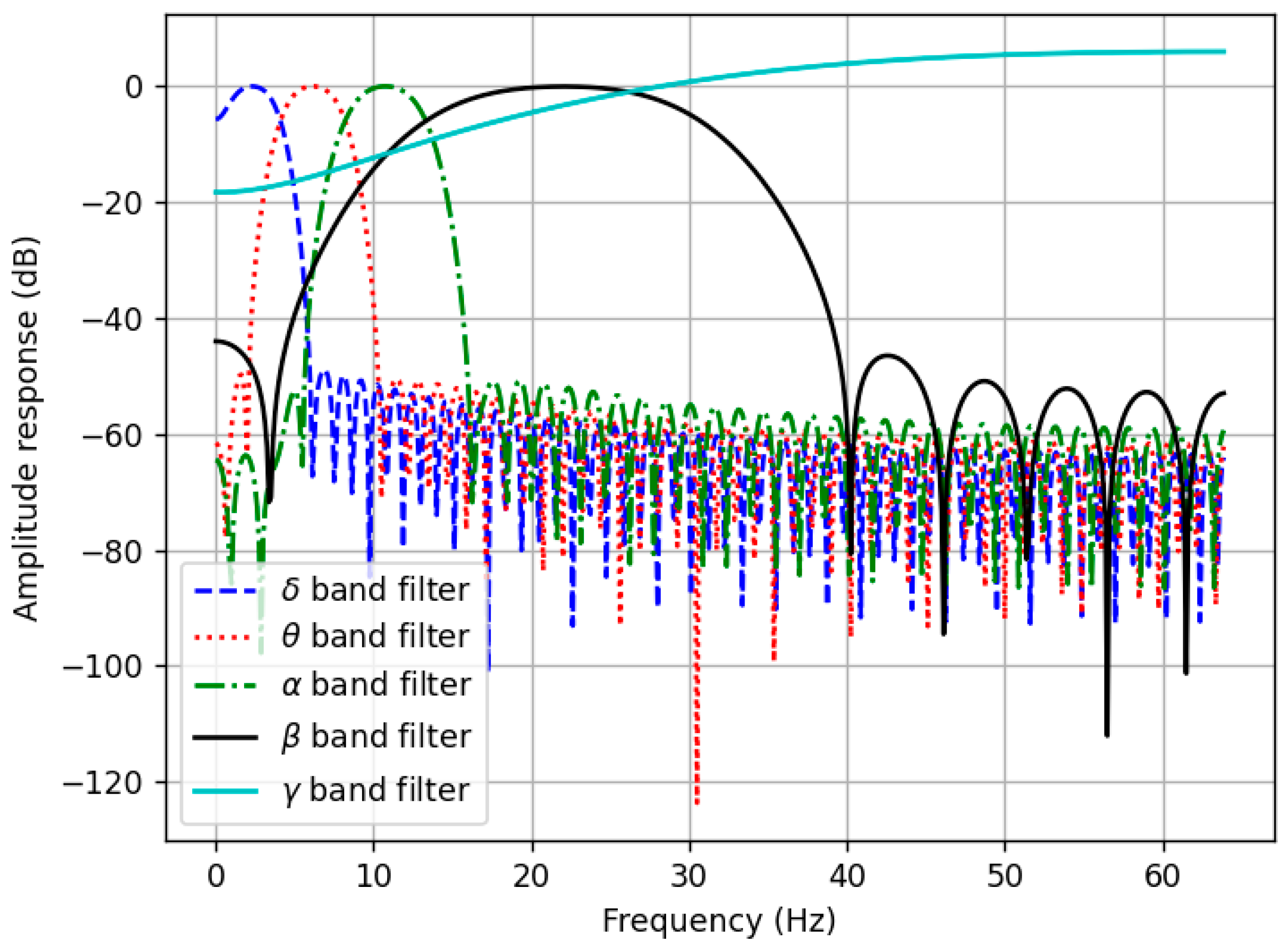

The detection of electrical impulses on the surface of the scalp is influenced by several factors. One of the most significant is muscle movement, which generates substantial noise and distorts the shape of the useful signal. This is why the participants had to listen to the music with their eyes closed while remaining motionless. However, this only reduces the noise added to the signal; it does not eliminate it completely. The most straightforward way to attenuate noise is digital filtering. Digital filters are also necessary in this investigation because they allow us to extract the δ, θ, α, β, and γ bands from the original signal. Splitting the original signal into frequency bands enables us to analyze the individual bands in both the time and frequency domains. To separate the frequency bands, we designed FIR (finite impulse response) filters, whose frequency responses are depicted in Figure 2.

Before filtering, a Hann window was applied to smooth the signal within each window and reduce edge effects. The frequency band decomposition with the FIR bandpass filters was therefore the third step of the preprocessing pipeline. The effect of filtering is illustrated in Figure 3.
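A minimal sketch of this third step, assuming window-method FIR designs (`scipy.signal.firwin`, which uses a Hamming window by default) with 251 taps: the Hann taper is applied first, then each band-limited signal is obtained by convolving with that band's filter. The filter length, the design method, and the delay compensation via `mode="same"` are our assumptions; the article's actual filter responses are the ones shown in Figure 2. At 125 Hz, 251 taps give transition bands of roughly 1.6 Hz (the usual 3.3·fs/N estimate for a Hamming design); shorter filters widen them.

```python
import numpy as np
from scipy.signal import fftconvolve, firwin

# Band edges in Hz as given in the Introduction; None marks the open-ended gamma band.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, None),
}


def design_band_filters(fs: float, numtaps: int = 251) -> dict:
    """Linear-phase FIR filters (window method): band-pass, or high-pass for gamma.

    numtaps must be odd, otherwise firwin rejects the high-pass design.
    """
    filters = {}
    for name, (lo, hi) in BANDS.items():
        cutoff = [lo, hi] if hi is not None else lo
        filters[name] = firwin(numtaps, cutoff, pass_zero=False, fs=fs)
    return filters


def decompose(window: np.ndarray, filters: dict) -> dict:
    """Hann-taper a (channels, samples) window, then filter it into each band."""
    tapered = window * np.hanning(window.shape[-1])
    # mode="same" keeps the input length and, for an odd-length symmetric filter,
    # cancels the (numtaps - 1) / 2 sample group delay
    return {
        name: np.stack([fftconvolve(ch, taps, mode="same") for ch in tapered])
        for name, taps in filters.items()
    }
```

Feeding a 10 Hz test tone through this bank leaves nearly all of its energy in the alpha output, which is a quick sanity check on the band edges.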

In many practical problems, a classifier fed directly with normalized raw data cannot achieve sufficient performance [26]. Therefore, features computed from the EEG signals are used as model inputs in vectorized form instead. We followed this approach by calculating 11 time- and frequency-domain features from each frequency band signal. In the last step of the preprocessing pipeline, the features of all bands are concatenated into a single feature vector. The features are as follows:

Standard deviation of the time-varying signal.

Mean absolute deviation.

Interquartile range (IQR).

75th percentile.

Kurtosis.

Peak-to-peak difference.

Signal power.

Power spectral density (PSD).

Spectral centroid.

Spectral entropy.

Peak frequency.
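The feature list above can be computed for one band-limited channel roughly as follows. Several definitions are our assumptions, since the text names the features without formulas: the spectrum is a Welch estimate, the PSD feature is the mean PSD value, spectral entropy is the Shannon entropy (in bits) of the normalized PSD, and kurtosis is SciPy's default excess kurtosis. `band_features` and `feature_vector` are hypothetical names.

```python
import numpy as np
from scipy.signal import welch
from scipy.stats import kurtosis

FEATURE_NAMES = [
    "std", "mean_abs_dev", "iqr", "p75", "kurtosis", "peak_to_peak",
    "power", "mean_psd", "spectral_centroid", "spectral_entropy", "peak_freq",
]


def band_features(x: np.ndarray, fs: float) -> np.ndarray:
    """The 11 listed features for one band-limited, single-channel signal."""
    freqs, psd = welch(x, fs=fs, nperseg=min(len(x), 256))
    total = psd.sum()
    # Normalized spectrum, treated as a probability distribution over frequency
    p = psd / total if total > 0 else np.full_like(psd, 1.0 / psd.size)
    nz = p[p > 0]
    q25, q75 = np.percentile(x, [25, 75])
    return np.array([
        np.std(x),
        np.mean(np.abs(x - np.mean(x))),  # mean absolute deviation
        q75 - q25,                        # interquartile range
        q75,                              # 75th percentile
        kurtosis(x),                      # excess (Fisher) kurtosis
        x.max() - x.min(),                # peak-to-peak difference
        np.mean(x ** 2),                  # mean signal power
        np.mean(psd),                     # assumption: PSD summarized by its mean
        np.sum(freqs * p),                # spectral centroid in Hz
        -np.sum(nz * np.log2(nz)),        # spectral entropy in bits
        freqs[np.argmax(psd)],            # peak frequency in Hz
    ])


def feature_vector(band_signals: dict, fs: float) -> np.ndarray:
    """Concatenate features over bands and channels into (bands * channels * 11,)."""
    return np.concatenate(
        [band_features(ch, fs) for sig in band_signals.values() for ch in sig]
    )
```

With 5 bands this gives 55 values per channel; multiplied by the number of channels, that is the input width of the classifier.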

3.5. Classifier Model

An artificial neural network (ANN) was used as the classifier. The model was developed with the Keras framework to classify the feature vectors produced by the preprocessing pipeline into one of the 10 music tracks. The network consists of an input layer, two hidden layers, and an output layer. The hidden layers, with 128 and 64 neurons, use ReLU activation functions and include dropout (with a rate of 0.4) and L2 regularization (with a factor of 0.002) to prevent overfitting. The output layer has 10 neurons, one per song, with a softmax activation function. The model was trained using the Adam optimizer with a sparse categorical cross-entropy loss function. Training was limited to 1500 epochs, with early stopping after 80 epochs without improvement (patience). The learning rate was set to 0.001, which provides slow but steady convergence during training.
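The architecture described above can be written in Keras roughly as follows. Where the text is silent we made assumptions, marked in the comments: the early-stopping callback monitors the validation loss and restores the best weights, and a dropout layer follows each hidden layer. `build_classifier` and `train` are hypothetical names.

```python
import keras
import numpy as np
from keras import layers, regularizers


def build_classifier(n_features: int, n_classes: int = 10) -> keras.Model:
    """Two ReLU hidden layers (128, 64) with dropout 0.4 and L2 0.002; softmax output."""
    model = keras.Sequential([
        keras.Input(shape=(n_features,)),
        layers.Dense(128, activation="relu", kernel_regularizer=regularizers.L2(0.002)),
        layers.Dropout(0.4),
        layers.Dense(64, activation="relu", kernel_regularizer=regularizers.L2(0.002)),
        layers.Dropout(0.4),
        layers.Dense(n_classes, activation="softmax"),
    ])
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=0.001),
        loss="sparse_categorical_crossentropy",  # integer track labels 0 to 9
        metrics=["accuracy"],
    )
    return model


def train(model, x_train, y_train, x_val, y_val):
    # Assumption: early stopping watches val_loss and restores the best weights
    early_stop = keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=80, restore_best_weights=True
    )
    return model.fit(
        np.asarray(x_train, dtype="float32"), np.asarray(y_train, dtype="int64"),
        validation_data=(np.asarray(x_val, dtype="float32"), np.asarray(y_val, dtype="int64")),
        epochs=1500, callbacks=[early_stop], verbose=0,
    )
```

Sparse categorical cross-entropy lets the labels stay as plain integers (the track index) instead of one-hot vectors.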

4. Results and Discussion

At the beginning of the investigation, data were collected from each subject in multiple rounds on the same day. The data were split into training (76.5%), validation (13.5%), and test (10%) sets using stratified sampling to maintain class proportions. We paid special attention to ensuring that there was no overlap between the training and test datasets. The model’s performance was evaluated using the accuracy metric and confusion matrices, and learning curves were plotted to monitor the model’s learning behavior over time. The evaluation covers five separate subjects (subject 0 to subject 4) and a merged dataset from all subjects (referred to as “All Subjects”). The “All Subjects” model is trained on the combined EEG data of all five subjects, whereas each individual model is trained on a single subject’s data. The merged dataset is intended to evaluate the model’s ability to generalize across subjects, but it typically yields lower classification accuracy than the individual models due to inter-subject variability. Multiple window sizes were tested to examine the possible relationship between window size and model performance. The findings indicate that classification accuracy increases with longer time windows (Table 1).
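The 76.5/13.5/10 proportions correspond to holding out 10% of the data for testing and then using 15% of the remaining 90% for validation (0.9 × 0.15 = 0.135). A sketch with scikit-learn's `train_test_split`, stratified by track label at both stages; the two-stage procedure and the function name are our assumptions. If windows overlap in time, the split should be made at the trial level rather than the window level so that no samples are shared between sets.

```python
import numpy as np
from sklearn.model_selection import train_test_split


def stratified_three_way_split(X, y, seed: int = 0):
    """Split into 76.5% train, 13.5% validation and 10% test, stratified by label."""
    X, y = np.asarray(X), np.asarray(y)
    # Stage 1: hold out 10% of the data for testing
    X_rest, X_test, y_rest, y_test = train_test_split(
        X, y, test_size=0.10, stratify=y, random_state=seed
    )
    # Stage 2: 15% of the remaining 90% gives 13.5% of all data for validation
    X_train, X_val, y_train, y_val = train_test_split(
        X_rest, y_rest, test_size=0.15, stratify=y_rest, random_state=seed
    )
    return X_train, X_val, X_test, y_train, y_val, y_test
```

With 1000 labeled windows, for example, this yields 765 training, 135 validation, and 100 test windows, with 10 test windows per track.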

Table 1 shows that with a 2 s window, the accuracies ranged from 30% to 46% for the individual subjects, while the model reached 23% on the “All Subjects” dataset. Misclassifications were frequent, particularly between certain classes. With a 10 s window, the accuracies improved to 50.5–70.5%, and the 20 s window yielded accuracies between 58% and 95%. Finally, with the widest window (30 s), the model achieved 96% accuracy for one subject and 61% to 76% for the remaining subjects, while it reached 53% on the “All Subjects” dataset. The widest window produced the best classification performance for all subjects and for the combined dataset.

Long hair is known to hinder the collection of EEG signals. Notably, although the fourth subject had longer hair than the others, this additional hindering factor is not reflected in the results: the recognition rates obtained from the fourth subject’s data were not the lowest for any of the window sizes.

The recognition rate varies by subject. We attribute this to individual characteristics that shape each subject’s EEG response to musical stimuli. The literature refers to this phenomenon as BCI illiteracy [27]. The results show that increasing the window size slightly changes the spread of recognition rates among subjects, but the differences persist even with larger windows. This also explains why the model performed worse on the aggregated “All Subjects” dataset.

Table 1 clearly shows that the song recognition accuracy for all subjects significantly exceeds the 10% probability of guessing the correct song at random among the 10 tracks. This indicates that the songs generate distinctive patterns in the EEG signals. To examine the individual impact of the songs on each subject, we visualized the confusion matrix obtained on each subject’s data. As an example, the confusion matrices for subjects 0, 2, and 4 are shown in Figure 4; they give insight into how the songs affected each individual. Figure 4 shows that for the first subject, the model recognized each song with high accuracy, while for the other two subjects, the recognition accuracy varied from song to song. After the data collection, we asked the participants to rank the musical genres of the songs they had listened to, placing the genre they liked most in the first position and the one they liked least in the last position. Comparing the subjects’ genre rankings with the confusion matrices, we could not establish a clear relationship between the recognition rate of the music pieces and the musical style. This requires further research, in which we will examine the energy changes in the well-known frequency bands of neighboring EEG channels, similar to the methods applied to EEG signals of subjects with Parkinson’s disease [28,29].
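Whether an accuracy is meaningfully above the 10% chance level also depends on how many test windows it was measured on. One way to check this, sketched here as our own addition rather than an analysis from the study, is a one-sided binomial test with `scipy.stats.binomtest`. The test-set sizes in the example are hypothetical, and the test assumes independent test windows, which overlapping windows would violate.

```python
from scipy.stats import binomtest


def p_value_above_chance(n_correct: int, n_test: int, n_classes: int = 10) -> float:
    """One-sided p-value for the accuracy n_correct / n_test exceeding 1 / n_classes."""
    result = binomtest(n_correct, n_test, p=1.0 / n_classes, alternative="greater")
    return float(result.pvalue)
```

For instance, 61 correct out of a hypothetical 100 test windows gives a vanishingly small p-value, whereas 12 out of 100 is not distinguishable from guessing (p is roughly 0.3).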

During our investigations, we also encountered another important issue: a substantial decline in performance when the model is tested on data collected from the same person on a different day. This highlights the significant impact of inter-session variability on EEG-based classification systems. We faced this issue when we collected data from the first three subjects again a few days later and re-tested the models trained for each of them: the models achieved only 12%, 15%, and 19% accuracy, respectively, close to the 10% chance level. Consequently, the models were more sensitive to day-to-day fluctuations in brain activity than to the type of music stimulus. The main question is what causes this phenomenon. We hypothesize that its main source is intra-individual variability: the brain signals of the same person are not stable over time, so EEG data measured at different times may yield different results even when analyzed with the same method [30,31]. Further factors also make it difficult to ensure identical data collection conditions. One of them is electrode positioning, which is hard to reproduce consistently from one measurement to the next. Another potential cause is a change in the subject’s physiological state; for example, the current water content of the skin affects its surface resistance and can therefore influence the amplitude of the detected EEG signals. For these reasons, the method presented in this article can only be applied to investigate the effect of musical stimuli on EEG signals if the training and test datasets are collected consecutively, without changing the position of the EEG reader on the subject’s head.

5. Conclusions

In this study, we presented a framework based on the OpenBCI CytonDaisy board that enables the examination and recognition of music-induced patterns in EEG signals without requiring user feedback. The results demonstrate the feasibility of using EEG signals to classify musical stimuli, with longer time windows yielding higher classification accuracy; the 30 s window gave the best results among the tested window sizes. The presented neural network model, fed with time- and frequency-domain features, achieved 61–96% accuracy depending on the subject.

Since the framework makes it possible to examine the effect of music tracks on the brain activity of an observed person, it can serve as an important starting point for the development of music therapy and music recommendation systems. However, this study also raised two open issues. The first is the significant drop in performance when the trained model is tested on data collected on a different day, which highlights the need to address inter-session variability for practical applications. The second is identifying the factors that influence the recognition rate of individual musical pieces, which must be determined in the continuation of this research.