1. Introduction

Geological implicit modeling is pivotal for constructing three-dimensional (3D) models of geological bodies by using sparse sampling data, thus enabling the integration and interpretation of multi-source heterogeneous geological data. A key challenge in this domain is the dynamic reconstruction of accurate 3D orebody models from complex cross-contour polylines, particularly when updating models with new geological data. Traditional explicit modeling methods, with their low automation and inability to dynamically update, fail to meet the demands of rapid resource estimation and real-time model adjustments during production exploration.

Cross-contour polylines in mine production mostly originate from geological logging. Mine geological logging is a critical process in operational mining, involving systematic documentation of geological phenomena in exploration boreholes, development headings, and production drifts through textual descriptions, standardized charts, and sketch maps. These records provide high-fidelity spatially referenced data that are essential for delineating orebody morphology and ore-rock boundaries, thus supporting mine planning and resource estimation. Integral to orebody model updating workflows, the raw geological sketches and maps generated from geological logging should be further processed. To obtain reliable vectorized polylines, we developed image recognition and digitization techniques based on the pattern recognition of geological sketches. This facilitates the extraction of geologically interpreted polylines that represent local geometry features. These interpreted polylines are then rigorously utilized to update and refine the orebody model, thereby ensuring its ongoing accuracy and reliability for mine planning, reserve estimation, and operational decision-making throughout the mine lifecycle.

Recent advancements in artificial intelligence (AI) and machine learning (ML) [1,2,3,4] have significantly advanced geological data digitalization and model updating. For example, Zhang et al. [1] introduced an AI-based framework for the automated extraction of geological features from borehole data, thus improving model updating efficiency by 30% over manual methods. Similarly, Rotem et al. [2] applied a convolutional neural network (CNN)-based approach to interpret hyperspectral shortwave infrared core scanning data, automating mineral identification and improving geological model accuracy in mineral exploration. Additionally, Lyu et al. [3] developed a multi-scale generative adversarial network (GAN) for generating 3D subsurface geological models from limited borehole data, achieving improved resolution and geological consistency by integrating prior geological knowledge. These studies underscore the potential of AI/ML to improve automation in implicit modeling, particularly for dynamic 3D model updates. However, they are not yet adequate for dynamic orebody modeling based on complex cross-contour polylines.

Modeling geological bodies from a large number of complex cross-contour polylines is an important and difficult problem in digital mine research. Currently, there are several approaches to contour modeling. A common practice is to triangulate the contour polylines using Delaunay triangulation methods [5,6,7,8]. Another common practice is to represent the geometry model using free-form curves and surfaces [9,10]. The most commonly used surface-fitting methods include the Bézier method [11], the B-spline method [12,13], and the NURBS method [14]. However, these two types of approaches have difficulty processing contour polylines with complex topological relationships. The most promising approach is to interpolate the contour polylines using implicit modeling methods [15,16,17]. This type of method interpolates the contours using an implicit function (e.g., the radial basis function [18]) and extracts the isosurface using a surface reconstruction method (e.g., the marching cubes [19,20] and the marching tetrahedra methods [21,22]). There are also other modeling methods [23], including methods [24,25,26] that combine several of the above approaches using new ideas.

In this paper, we mainly focus on the third approach, namely the contour interpolation method. At present, most of the existing studies [27,28] of contour interpolation are suitable for cases in which each contour polyline is coplanar. For non-coplanar polylines, a very important problem for contour interpolation or contour modeling is to determine the orientation of the contour polylines or the topological relationship between them. Though there are some works [29,30] on contour modeling that do not calculate the normal orientations of contour polylines, these methods require determining the interior and exterior position relationships or other prior input polyline information in specific ways (e.g., geometry sampling). Generally, the implicit modeling method needs to convert the original geological sampling data and manually interpreted data into interpolation constraints. The automation of this method still mainly depends on the automatic construction of interpolation constraints. However, there is no robust method to automatically determine the normal direction of multiple sparse and uneven contours.

Our work specifically addresses the critical challenge of reconstructing accurate and geologically plausible 3D orebody models from specific geological data, with a primary focus on cross polylines. To model the orebody from geologically interpreted data of complex cross-contour polylines, we transformed the original contour polylines into oriented polylines and utilized the implicit modeling method to reconstruct the orebody model dynamically. To improve the reliability of the normal estimation results of contour polylines, we propose a normal estimation method for contour polylines that allows manual adjustment of some normals, based on a combination of the point cloud normal dynamic estimation [31] and cross-contour normal estimation methods [32]. Generally speaking, the normal estimation method of contour polylines contains two basic strategies: the approximate estimation rule at intersections, and the manual a priori rule. The approximate estimation rule at intersections is based on the assumption that the local geometric features of the contour polylines near the intersection point satisfy the condition of the least squares fitting plane. However, if the normal estimation at the intersection point is erroneous, the normal reorientation of the adjacent polylines at the corresponding intersection point may fail, which will affect the normal propagation process. In this case, the manual a priori rule can be used to adjust the erroneous estimated normal to improve the reliability of the normal estimation results of cross-contour polylines.

On the basis of transforming the problem of contour normal estimation into a problem of normal estimation of the point cloud at the intersection points, an implicit modeling method of orebody that can dynamically estimate the normals of cross-contour polylines is proposed in this paper. The method allows geological engineers to interactively adjust the normals of some contour polylines according to the geological rules, and thus, automatically update the normals of the corresponding adjacent contour polylines in the process of normal estimation at the intersection points and in the process of normal propagation.

2. Method Overview

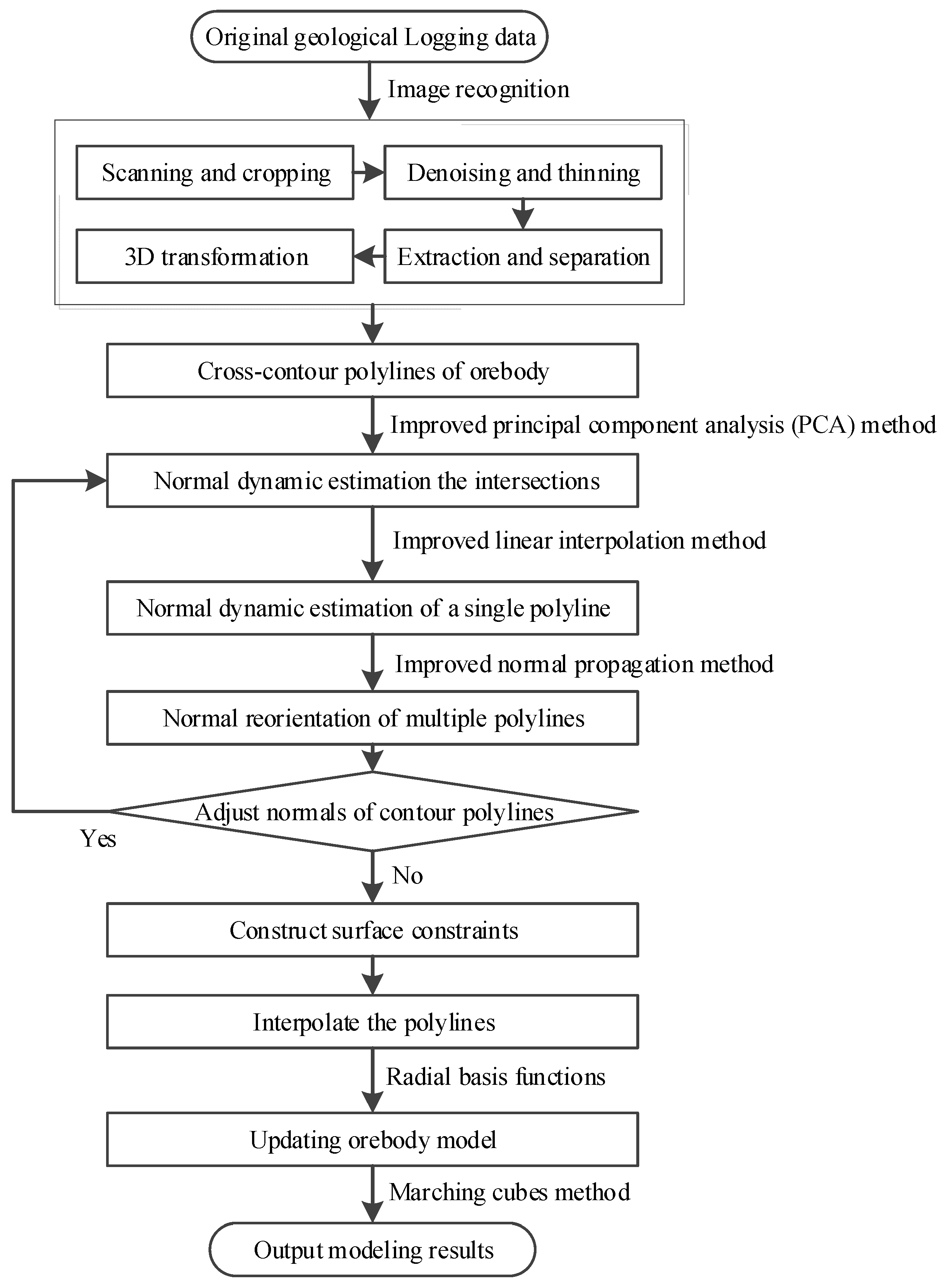

2.1. Method

The overall goal is to reconstruct and dynamically update 3D implicit orebody models directly from geological logging data. The method proposed in this paper consists of the following steps.

- (1) Scan regions from the original geological log drawings and then crop the relevant graphical sections.
- (2) Convert the cropped image to grayscale and perform noise removal.
- (3) Trace the connected non-zero pixels to extract the boundary polylines.
- (4) Transform the vectorized 2D boundaries to true 3D positions via translation, scaling, rotation, and projection.
- (5) Preprocess the data of the geological interpreted contour polylines.
- (6) Interpolate the normals of the intersections of cross-contour polylines.
- (7) Estimate the normals of a single contour polyline.
- (8) Reorient the normals of multiple contour polylines.
- (9) Adjust the normals of some segments according to the a priori geological rule and re-estimate the normals of the contour polylines.
- (10) Convert the directional polylines into surface constraints.
- (11) Interpolate the polylines using radial basis functions.
- (12) Update the orebody model using the marching cubes method.

The overall flow chart of the method is shown in Figure 1.

2.2. Terminology

Cross-Contour Polylines: These are 2D or 3D line segments that are derived from geological sketches and represent the intersections of geological surfaces with sampling planes and are used to reconstruct 3D orebody models.

Normal Vector: This is a unit vector that is perpendicular to an intersection or a polyline segment and is used to define the orientation for interpolation.

Network Graph: This is a graph structure with nodes as intersection points of the polylines and edges as the polyline segments, thus capturing the topological relationships.

Neighborhood Points: These are points within a specified radius around an intersection and are used for normal estimation.

Oriented/Unoriented Neighborhood Points (ONP/UNP): These are points with or without predefined normal vectors, and they are used to distinguish between user-specified and algorithmically estimated normals.

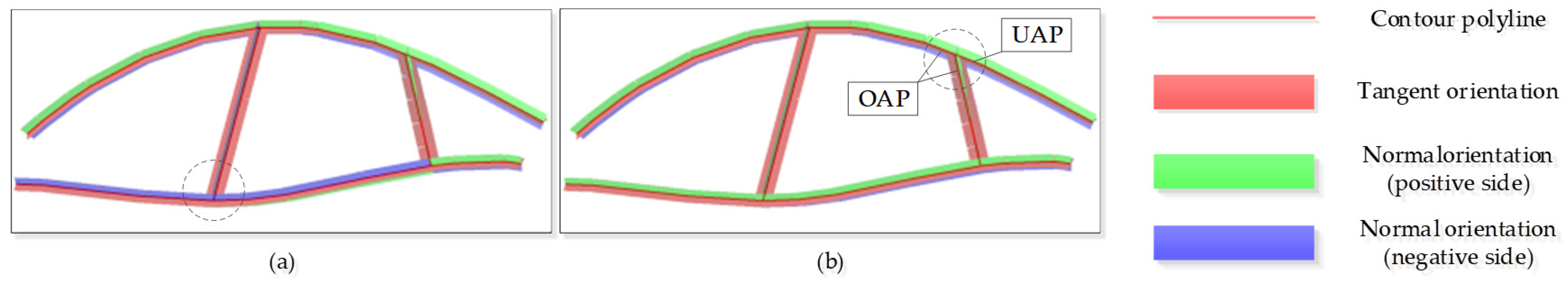

Oriented/Unoriented Adjacent Polylines (OAP/UAP): These are polylines that are adjacent to the intersection with or without predefined normal vectors, and these are used to distinguish between the user-specified and algorithmically estimated normals.

Normal Reorientation Rule: This method employs a strict confidence ranking to define the normal reorientation rule: User-predefined normals (based on prior geological knowledge) > propagated normals (derived from high-confidence anchors via BFS) > PCA-estimated normals (based on local geometric fitting).
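The confidence ranking above can be illustrated with a minimal sketch. The names `NormalSource` and `pick_reference` are hypothetical and only show how an ordered enumeration can encode "user-predefined > propagated > PCA-estimated"; they are not taken from the authors' implementation.

```python
from enum import IntEnum


class NormalSource(IntEnum):
    """Confidence level of a normal; a larger value means higher confidence."""
    PCA_ESTIMATED = 1   # based on local geometric fitting
    PROPAGATED = 2      # derived from high-confidence anchors via BFS
    USER_DEFINED = 3    # based on prior geological knowledge


def pick_reference(candidates):
    """Return the (normal, source) pair with the highest confidence.

    `candidates` is a list of (normal, NormalSource) tuples. On ties,
    the first candidate encountered is kept.
    """
    return max(candidates, key=lambda item: item[1])


candidates = [
    ((0.0, 0.0, 1.0), NormalSource.PCA_ESTIMATED),
    ((0.0, 1.0, 0.0), NormalSource.USER_DEFINED),
    ((1.0, 0.0, 0.0), NormalSource.PROPAGATED),
]
reference = pick_reference(candidates)
```

Encoding the ranking as integers keeps the comparison explicit, so a lower-confidence normal never overrides a higher-confidence one.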

3. Geological Implicit Modeling

The basic process of implicit modeling can be summarized as follows. Following the implicit function field modeling approach, discretely sampled geological data are first converted into spatial interpolation constraints. An implicit function characterizing the spatial potential field distribution of mineralization is then determined through spatial interpolation methods that are governed by specific geological rules. The surface model of the orebody is represented as a specific isosurface of this implicit function (e.g., the zero-isosurface). To recover the orebody surface from the continuous implicit function, the mineralized space is sampled discretely, and the function values at the sampling points are evaluated through the implicit function to extract the target isosurface. During any stage of orebody modeling, sampling data and geological constraints can be added, modified, or updated, enabling dynamic model updates by re-solving and reconstructing the implicit function that characterizes the mineralized domain.

3.1. Radial Basis Functions Interpolation

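The implicit surface $S$ is defined as the zero level set $f(\mathbf{x}) = 0$ of the implicit function $f(\mathbf{x})$. To recover the target implicit surface $S$, the radial basis function (RBF)-based implicit function $f(\mathbf{x})$ should be interpolated as

$$f(\mathbf{x}_i) = f_i, \quad i = 1, 2, \ldots, N,$$

where $f_i$ is the function value of the geological domain, and $N$ is the number of interpolation constraints. These interpolation constraints are constructed from geological logging data.

For the radial basis functions interpolant, the implicit function $f(\mathbf{x})$ is defined as

$$f(\mathbf{x}) = \sum_{j=1}^{N} w_j\,\phi(\mathbf{x}, \mathbf{x}_j) + \sum_{k=1}^{Q} c_k\,p_k(\mathbf{x}),$$

where $\phi(\mathbf{x}, \mathbf{x}_j) = \phi(\|\mathbf{x} - \mathbf{x}_j\|)$ are a type of globally supported radial basis functions, and $p_k(\mathbf{x})$ are low-order polynomials. The unknown coefficients $w_j$ and $c_k$ can be determined by solving the linear system combined with the above interpolation constraints. The first variable $\mathbf{x}$ in $\phi(\mathbf{x}, \mathbf{x}_j)$ is viewed as an evaluation point (or target point), and the second variable $\mathbf{x}_j$ is viewed as a source point.

Taking the polynomial part $p(\mathbf{x}) = c_1 + c_2 x + c_3 y + c_4 z$ as an example, the smoothest interpolant satisfies the orthogonality conditions

$$\sum_{j=1}^{N} w_j = \sum_{j=1}^{N} w_j x_j = \sum_{j=1}^{N} w_j y_j = \sum_{j=1}^{N} w_j z_j = 0.$$

These orthogonality conditions, along with the interpolation conditions, lead to a linear system. The matrix form of the linear system can be written as

$$\begin{pmatrix} \Phi & P \\ P^{T} & 0 \end{pmatrix} \begin{pmatrix} \mathbf{w} \\ \mathbf{c} \end{pmatrix} = \begin{pmatrix} \mathbf{f} \\ \mathbf{0} \end{pmatrix},$$

where $\Phi_{ij} = \phi(\mathbf{x}_i, \mathbf{x}_j)$ and $P_{ik} = p_k(\mathbf{x}_i)$, and the unknown coefficients $\mathbf{w}$ and $\mathbf{c}$ can be determined by solving the equation.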
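As an illustration of the linear system described in this subsection, the following minimal sketch assembles and solves a small RBF system with a linear polynomial part. It makes several assumptions that are not stated in the paper: the kernel is the biharmonic function phi(r) = r, the solver is a plain Gaussian elimination, and the function names (`fit_rbf`, `evaluate`) and the toy constraint set are hypothetical.

```python
import math


def phi(r):
    """Assumed globally supported kernel: biharmonic phi(r) = r."""
    return r


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_rbf(points, values):
    """Assemble the block system [[Phi, P], [P^T, 0]] and solve for (w, c)."""
    n = len(points)
    size = n + 4  # four coefficients of the linear polynomial 1, x, y, z
    a = [[0.0] * size for _ in range(size)]
    for i, pi in enumerate(points):
        for j, pj in enumerate(points):
            a[i][j] = phi(math.dist(pi, pj))
        poly = (1.0,) + tuple(pi)
        for k in range(4):
            a[i][n + k] = poly[k]   # block P
            a[n + k][i] = poly[k]   # block P^T (orthogonality conditions)
    coeffs = solve(a, list(values) + [0.0] * 4)
    return coeffs[:n], coeffs[n:]


def evaluate(x, points, w, c):
    """Evaluate f(x) = sum_j w_j phi(|x - x_j|) + c1 + c2 x + c3 y + c4 z."""
    rbf = sum(wj * phi(math.dist(x, pj)) for wj, pj in zip(w, points))
    return rbf + c[0] + c[1] * x[0] + c[2] * x[1] + c[3] * x[2]


# Toy data: six on-surface points (value 0) and one interior point (value -1).
points = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
          (0.0, -1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0),
          (0.0, 0.0, 0.0)]
values = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, -1.0]
w, c = fit_rbf(points, values)
```

The dense solve is only practical for small constraint sets; it is used here to keep the sketch self-contained.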

3.2. Polyline Constraints

In RBF-based implicit modeling, the polylines cannot be interpolated directly. Polyline constraints are constructed by three basic surface constraints, including on-surface, off-surface, and gradient constraints. Therefore, accurately representing complex geological structures relies heavily on effectively encoding geological structural data through surface constraints.

3.2.1. On-Surface Constraints

On-surface constraints enforce that a geological interface must pass through explicitly defined geological locations.

Given a polyline $L$ representing a geological feature,

$$f(\mathbf{p}_i) = 0, \quad \mathbf{p}_i \in L,$$

where $\mathbf{p}_i$ denotes a point along the polyline; sparse polylines should be resampled. These constraints are “hard conditions”.

Geometric Meaning: forces the implicit surface to interpolate the points $\mathbf{p}_i$ exactly.

3.2.2. Off-Surface Constraints

Off-surface constraints define the inside or outside region of the implicit function relative to the surface, enforcing that points $\mathbf{q}_i$ near the polyline $L$ lie either inside (negative $f$ values) or outside (positive $f$ values) the geological body,

$$f(\mathbf{q}_i) = d_i, \quad d_i \neq 0,$$

where the function value $d_i$ is difficult to determine since the target implicit surface is unknown. In fact, these constraints are generally generated by offsetting the polyline vertices along the user-defined vectors $\mathbf{v}_i$ (e.g., normal direction).

3.2.3. Gradient Constraints

Gradient constraints explicitly specify the directional derivative of the implicit function $f$ at point $\mathbf{p}_i$,

$$\nabla f(\mathbf{p}_i) = \mathbf{g}_i,$$

where $\mathbf{g}_i$ is a known gradient vector (e.g., the normal vector that is perpendicular to a geological surface).

However, standard radial basis function (RBF) formulations cannot directly incorporate vector-valued constraints. We utilized an approximate offsetting strategy to convert the gradient constraints into on-surface and off-surface constraints adaptively.

For a point $\mathbf{p}_i$ on $L$, generate two off-surface points $\mathbf{p}_i^{+}$ and $\mathbf{p}_i^{-}$ by

$$\mathbf{p}_i^{\pm} = \mathbf{p}_i \pm \varepsilon\,\frac{\mathbf{g}_i}{\|\mathbf{g}_i\|},$$

where $\varepsilon$ is a small initial distance (e.g., 0.1% of the extracted mesh size). The off-surface constraints are then constructed as

$$f(\mathbf{p}_i^{\pm}) = \pm\varepsilon.$$
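The offsetting strategy of this subsection can be sketched as follows. This is a minimal illustration under stated assumptions: the normals point toward the outside of the body (positive function values), the signed offset distance is used directly as the off-surface value, and the function name `polyline_constraints` is hypothetical.

```python
import math


def unit(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def polyline_constraints(points, normals, eps):
    """Build (position, value) constraints for oriented polyline points.

    Each point yields one on-surface constraint (value 0) and two
    off-surface constraints at +eps and -eps along its unit normal.
    """
    constraints = []
    for p, n in zip(points, normals):
        n = unit(n)
        outer = tuple(pc + eps * nc for pc, nc in zip(p, n))
        inner = tuple(pc - eps * nc for pc, nc in zip(p, n))
        constraints.append((tuple(p), 0.0))   # on-surface
        constraints.append((outer, eps))      # outside: positive value
        constraints.append((inner, -eps))     # inside: negative value
    return constraints


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
normals = [(0.0, 0.0, 2.0), (0.0, 0.0, 2.0)]  # not yet normalized
constraints = polyline_constraints(points, normals, eps=0.1)
```

The resulting list contains only scalar-valued constraints, so it can be passed to a standard RBF solver without any vector-valued terms.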

4. Image Recognition

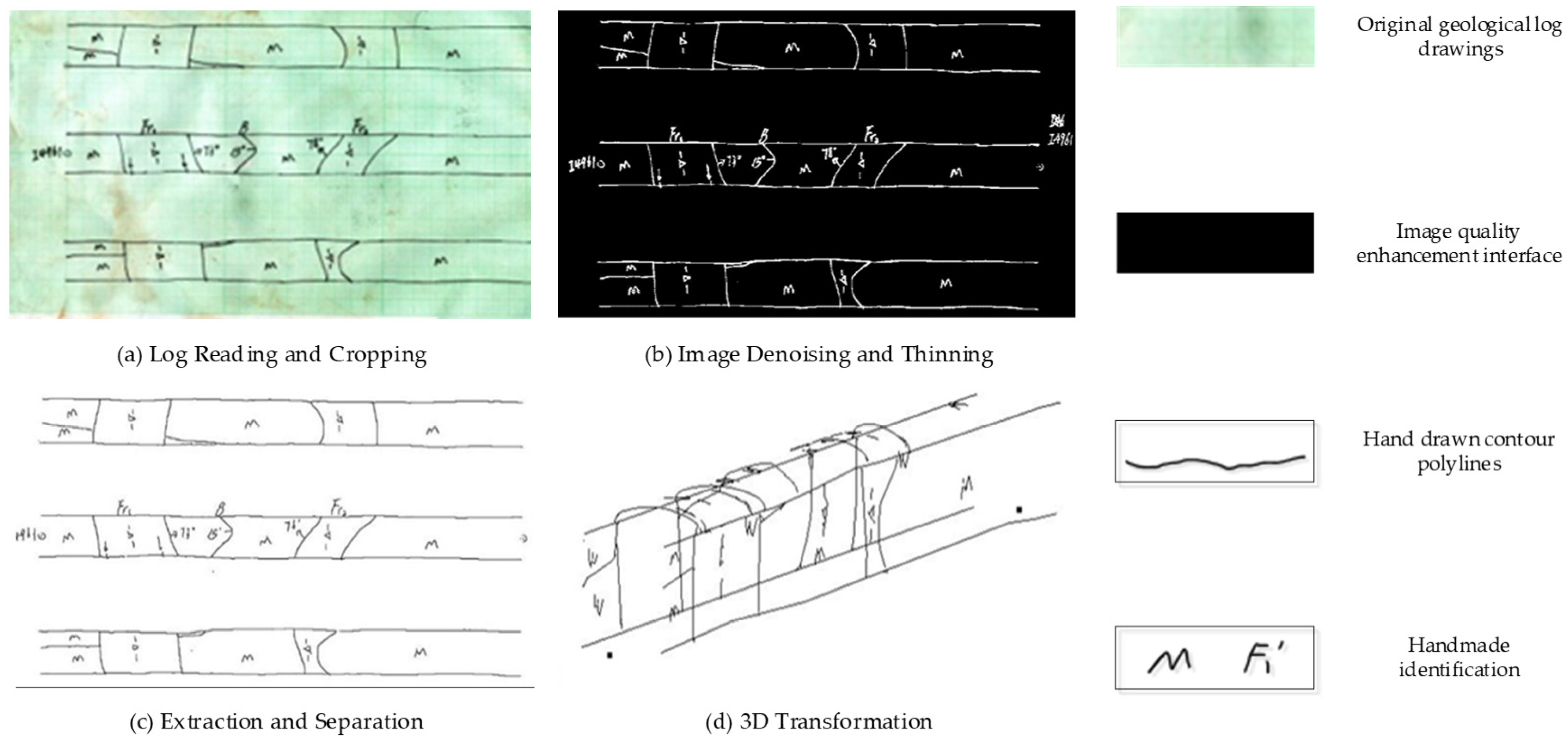

To obtain reliable vectorized polylines, we implemented an image recognition-based vectorization method [33] for raw geological logging data. The method involves four main steps to convert scanned original geological logs into vectorized 3D geological boundaries for implicit modeling. The vectorization process is summarized below and shown in Figure 2.

4.1. Scanning and Cropping

In the initial step, the target region is scanned from the original geological log drawings, and the resulting file is compressed. Since the raw logs contain non-graphical elements such as text and labels, an interactive approach is used to manually crop the specific sections of the image that require vectorization, thus preparing the data for subsequent processing.

4.2. Image Denoising and Thinning

Based on the OpenCV tool, the cropped image undergoes quality enhancement through grayscale conversion, background blurring, and noise removal. The key denoising steps include bimodal threshold binarization after bilateral filtering, followed by single iterations of erosion and dilation. The cleaned image is then thinned to a single-pixel width skeleton using a combination of lookup table pre-processing and Rosenfeld post-processing to precisely represent the geological boundaries.

4.3. Extraction and Separation

Boundaries are extracted from the thinned image by tracing connected non-zero pixels, recording their coordinates, and resetting them to zero. The resulting complex lines are then simplified using the Douglas–Peucker algorithm [34] to reduce redundant points while preserving geometric shape, yielding key boundary points. Additionally, erroneously connected geological boundaries and tunnel roof lines are separated by breaking the line at inflection points, leveraging their characteristic large angle difference.
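The simplification step can be illustrated with a minimal recursive Douglas–Peucker sketch for 2D pixel coordinates. The tolerance value and the sample polyline are assumptions chosen for illustration; the paper does not state the tolerance it uses.

```python
import math


def point_segment_distance(p, a, b):
    """Distance from point p to the segment from a to b (2D)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Clamp the projection parameter so the foot stays on the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def douglas_peucker(points, tolerance):
    """Drop points that deviate from the simplified line by <= tolerance."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    index, max_dist = 0, 0.0
    for i in range(1, len(points) - 1):
        d = point_segment_distance(points[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= tolerance:
        return [first, last]
    # Keep the farthest point and simplify both halves recursively.
    left = douglas_peucker(points[:index + 1], tolerance)
    right = douglas_peucker(points[index:], tolerance)
    return left[:-1] + right


traced = [(0.0, 0.0), (1.0, 0.05), (2.0, 0.0),
          (3.0, 2.0), (4.0, 0.02), (5.0, 0.0)]
simplified = douglas_peucker(traced, tolerance=0.1)
```

In this example only the nearly collinear point `(1.0, 0.05)` is removed; the sharp peak at `(3.0, 2.0)` and its neighbours are preserved.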

4.4. 3D Transformation

Leveraging the spatial relationships defined in the original log, along with actual engineering survey point coordinates, the vectorized 2D boundaries (including roof, floor, sidewalls, and geological features) are transformed into their true 3D positions through coordinate operations (translation, scaling, rotation) and projection, generating accurate 3D geological boundaries.
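A minimal sketch of such a coordinate transformation is given below. It assumes a simplified, hypothetical layout that the paper does not specify: the sketch is drawn on a vertical section whose horizontal axis follows a known azimuth (clockwise from north, with +y as north), the vertical sketch axis maps to elevation, and a single surveyed point fixes the sketch origin. The function name `sketch_to_3d` and all parameters are illustrative.

```python
import math


def sketch_to_3d(points_2d, origin, azimuth_deg, scale):
    """Map 2D sketch coordinates (u, v) onto a vertical section in 3D.

    points_2d   -- (u, v) pairs in drawing units
    origin      -- surveyed 3D coordinates (x, y, z) of the sketch origin
    azimuth_deg -- direction of the u axis, clockwise from north (+y)
    scale       -- real-world length per drawing unit
    """
    az = math.radians(azimuth_deg)
    ux, uy = math.sin(az), math.cos(az)  # rotation of the u axis in plan view
    ox, oy, oz = origin
    result = []
    for u, v in points_2d:
        su, sv = u * scale, v * scale    # scaling
        # Translation to the surveyed origin; v becomes elevation.
        result.append((ox + su * ux, oy + su * uy, oz + sv))
    return result


boundary_3d = sketch_to_3d([(0.0, 0.0), (10.0, 2.0)],
                           origin=(100.0, 200.0, 50.0),
                           azimuth_deg=90.0, scale=0.5)
```

Inclined or horizontal logging planes (for example roof sketches) would need an additional rotation and projection step that this sketch omits.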

5. Cross-Contour Interpolation

The main difficulty of the orebody implicit modeling method based on contour polylines is to find a method that can automatically interpolate the normals of contour polylines according to the geometry shape characteristics of contour polylines. To estimate the normals of contour polylines dynamically, we consider transforming the problem of normal dynamic estimation of contour polylines into the problem of normal dynamic estimation of point clouds. However, compared with the traditional normal estimation problem of point clouds, the normal estimation problem of contour polylines needs to further consider the topological relationship of the contour polylines and the corresponding geometric continuity.

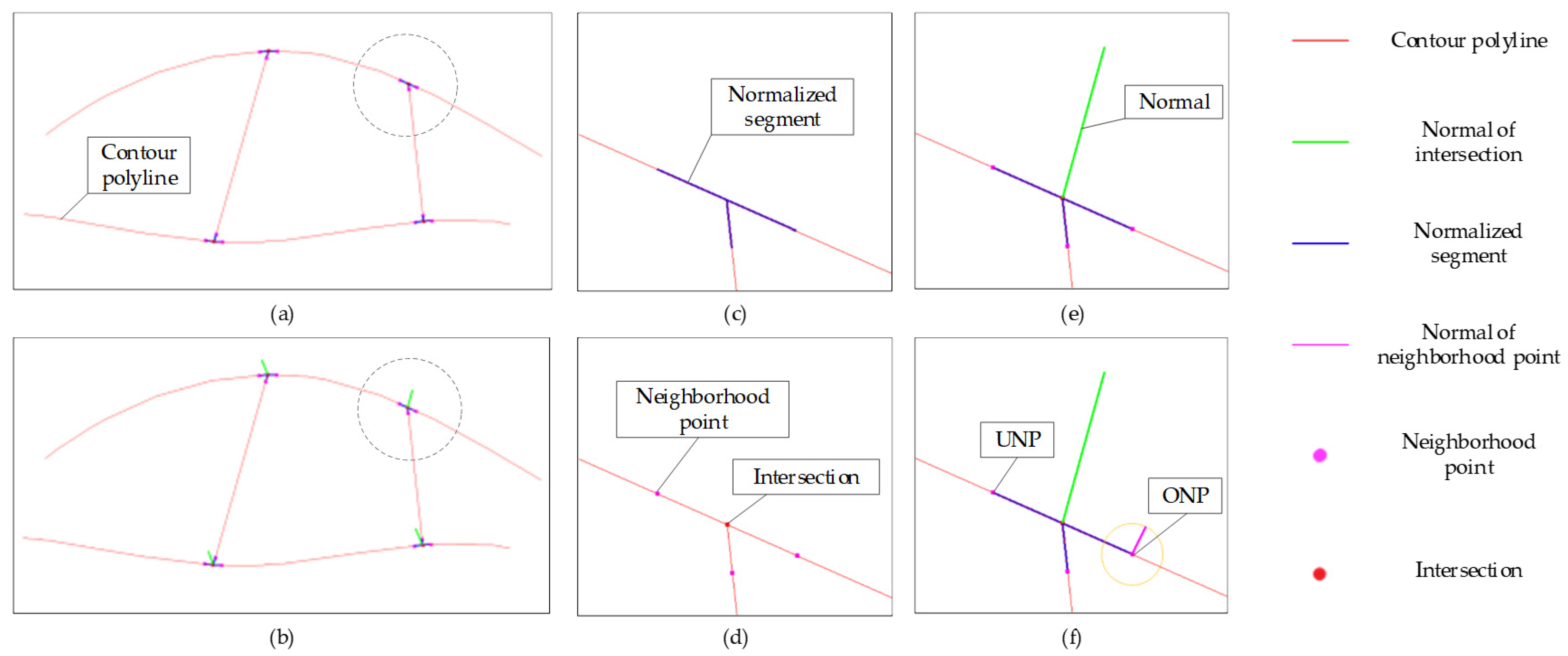

5.1. Normal Estimation

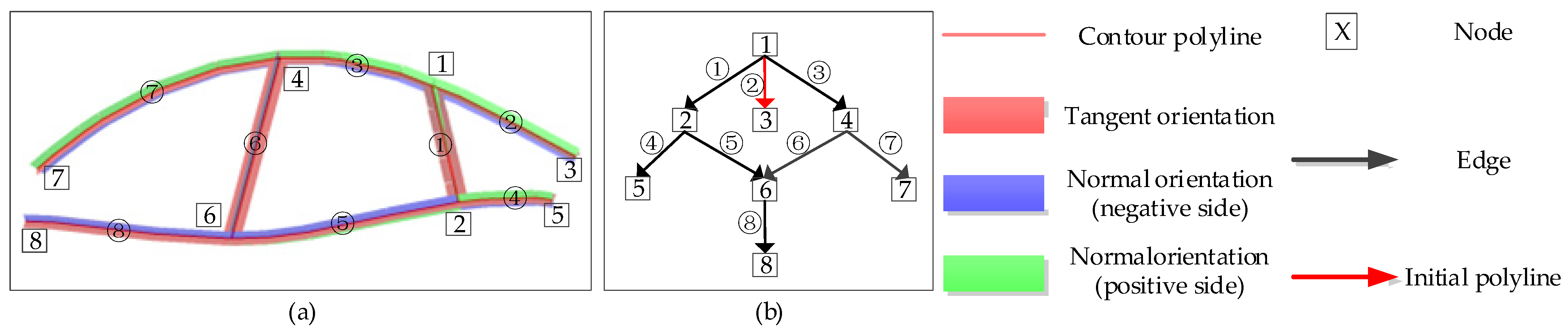

The step of normal estimation at intersection points estimates the normal of each segment using the improved principal component analysis (PCA) method according to the topology relationship of the intersecting polylines. Based on the idea of graph theory, a network graph

representing the topological adjacency relationship between polylines is constructed. The graph is defined by taking the intersection points of polylines as the nodes

of the graph and taking the polylines as the edges

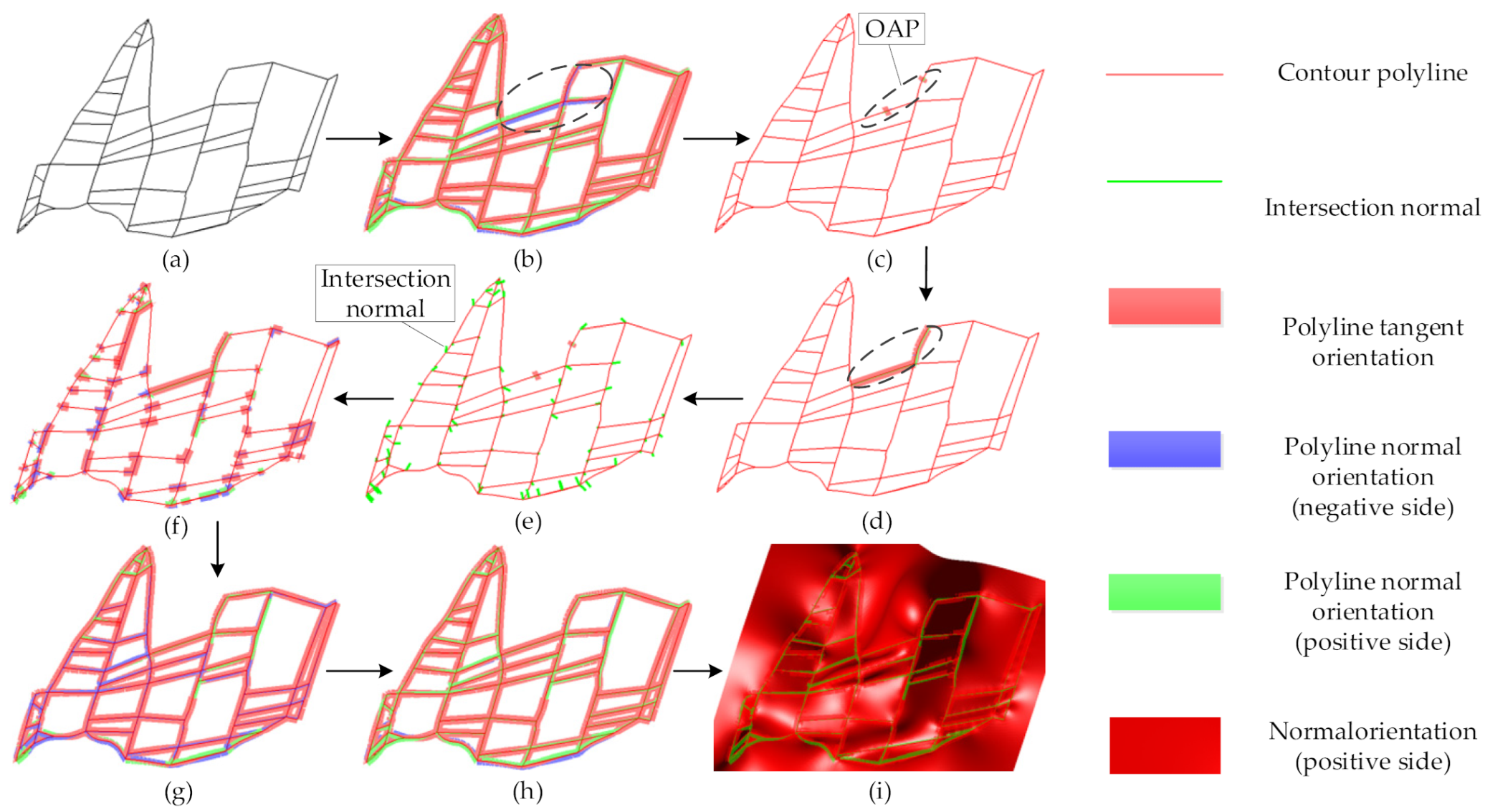

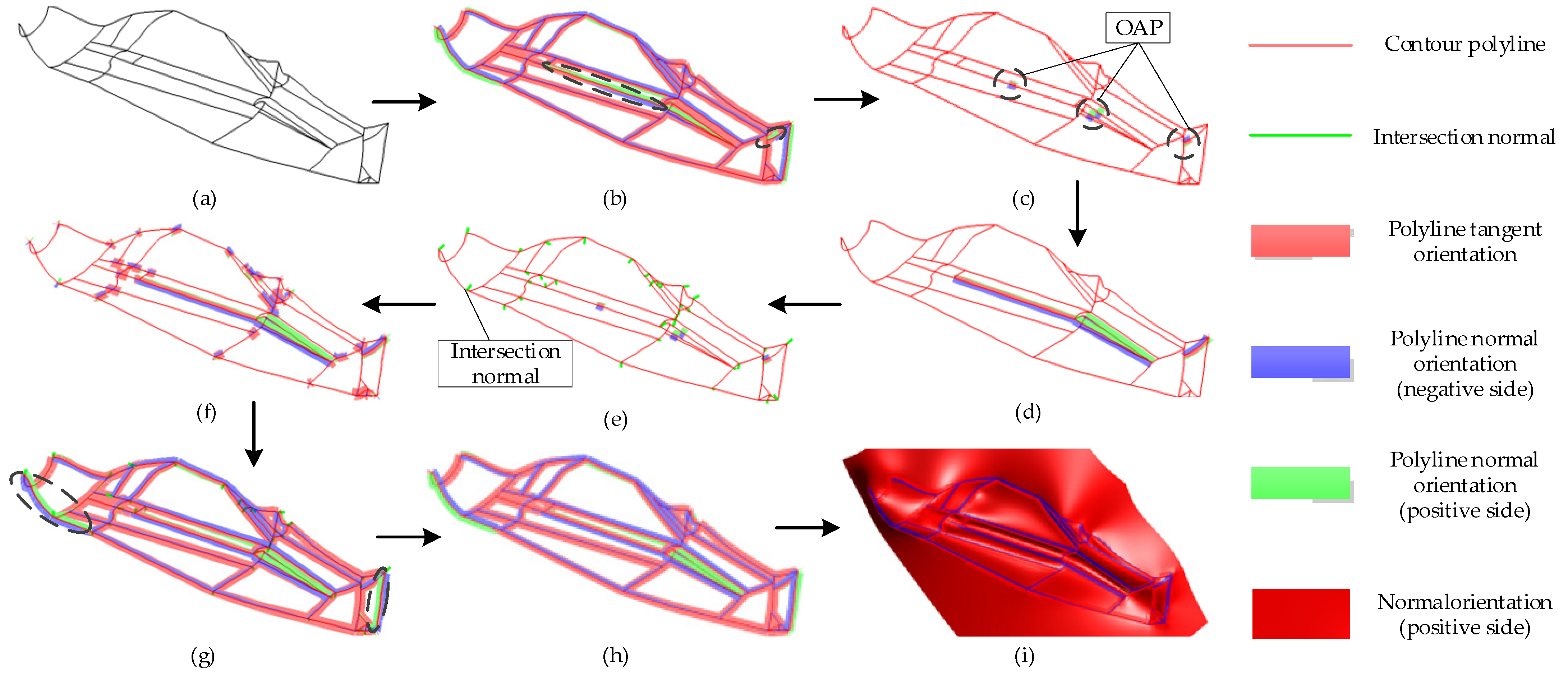

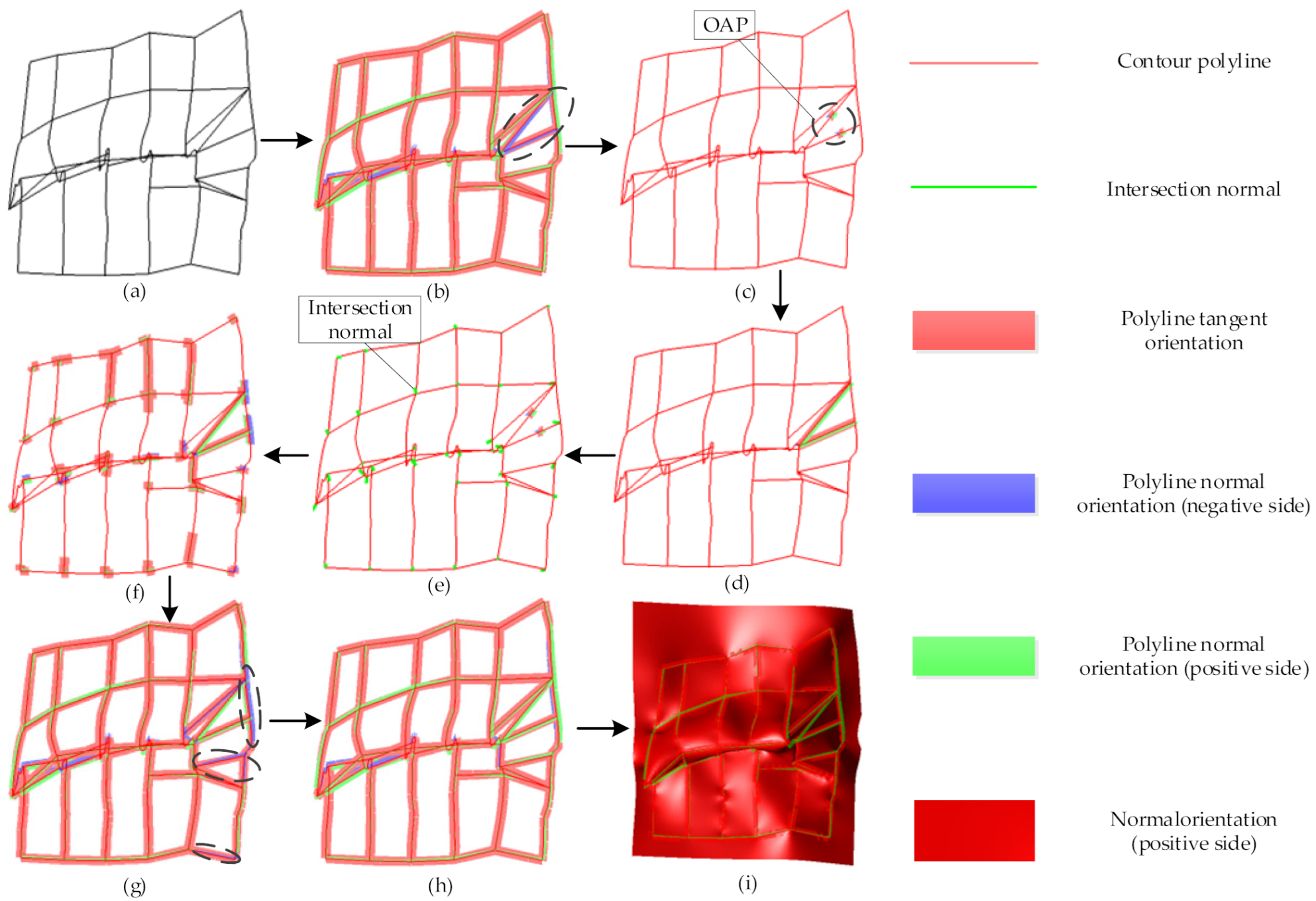

of the graph, as shown in

Figure 3.
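The network graph can be sketched as an adjacency map. This minimal example assumes that the polylines have already been split at their intersection points, so that the first and last vertex of every polyline is a graph node; the function name `build_graph` and the coordinate rounding used to match shared endpoints are assumptions for illustration.

```python
from collections import defaultdict


def build_graph(polylines, ndigits=6):
    """Return node -> list of (polyline index, opposite node).

    Nodes are polyline endpoints, keyed by rounded coordinates so that
    endpoints shared by several polylines map to the same node.
    Assumes open polylines (first and last vertex differ).
    """
    def key(point):
        return tuple(round(c, ndigits) for c in point)

    adjacency = defaultdict(list)
    for index, line in enumerate(polylines):
        head, tail = key(line[0]), key(line[-1])
        adjacency[head].append((index, tail))
        adjacency[tail].append((index, head))
    return dict(adjacency)


polylines = [
    [(0.0, 0.0, 0.0), (0.5, 0.1, 0.0), (1.0, 0.0, 0.0)],
    [(1.0, 0.0, 0.0), (1.0, 1.0, 0.0)],
]
graph = build_graph(polylines)
```

Here the shared endpoint `(1.0, 0.0, 0.0)` becomes a node of degree two that links both polylines.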

Considering that some contour polylines have specified reliable normals, we present a dynamic normal estimation method to determine the normals of contour polylines using an improved PCA method. The basic idea of this method is to extract the neighborhood points normalized by the adjacent line segments at each intersection point to form the point cloud data, so as to transform the contour intersection normal dynamic estimation problem into the point cloud normal dynamic estimation problem. Different from the traditional point cloud estimation method, the point cloud normal dynamic estimation method can consider the influence of the prior oriented points on the normal estimation of the target intersection point.

It is worth noting that the extracted neighborhood points contain oriented and unoriented points. To clearly describe the process of dynamic normal estimation, we divide the neighborhood points of the target intersection point into unoriented neighborhood points (UNP) and oriented neighborhood points (ONP), as shown in Figure 3. Among them, the unoriented neighborhood points are composed of the points on the adjacent line segments without specified orientations at each intersection point, and the oriented neighborhood points are composed of points on the adjacent line segments with specified orientations at each intersection point. For a node (intersection point

) to be solved, suppose that there are

unoriented neighborhood points

and

oriented neighborhood points

.

In the process of normalizing the weight of neighborhood points, the neighborhood point

is determined by the following formula

where

is the unitized line direction vector of the line segment adjacent to the corresponding intersection point.

In the case of normalizing the weight of the neighborhood points, the objective function formula of the improved PCA method can be defined as

where

is the normal vector to be solved and

are the unoriented neighborhood points at the corresponding intersection point.

represents any one unitized vector orthogonal to the normal direction of the oriented neighborhood point

.

satisfies

Set

then

where

,

=

.

where

,

.

Set

Then the final form of the objective function can be defined as

Similar to the original PCA method, the normal vector at the intersection point can be estimated by applying eigenvalue decomposition to the matrix of the objective function. The normal vector to be solved is the eigenvector corresponding to the smallest eigenvalue. After the normal vector of the intersection point is solved, the normal orientation of the line segment adjacent to the intersection can be calculated directly according to the projection relationship.
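The idea of a PCA-style estimate that also respects prior orientations can be sketched as follows. This is not the objective function of the paper, whose exact terms are not reproduced here. The sketch assumes a simplified energy: the normal n should be orthogonal to the unit directions t_i of the unoriented adjacent segments, which contributes the sum of (n · t_i)^2, and aligned with each prior normal n_j, which contributes w (1 - (n · n_j)^2). Both terms form a symmetric 3x3 matrix M, and the eigenvector of the smallest eigenvalue is found with a shifted power iteration instead of a library eigen-solver. All function names and the weight `prior_weight` are hypothetical.

```python
import math


def unit(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def add_outer(m, v, weight=1.0):
    """In place: m += weight * v v^T."""
    for i in range(3):
        for j in range(3):
            m[i][j] += weight * v[i] * v[j]


def estimate_normal(directions, prior_normals=(), prior_weight=1.0,
                    iterations=500):
    """Estimate a unit normal (up to sign) at an intersection point.

    Requires at least one direction or prior normal.
    """
    m = [[0.0] * 3 for _ in range(3)]
    for d in directions:
        add_outer(m, unit(d))                  # term (n . t_i)^2
    for n in prior_normals:
        for i in range(3):
            m[i][i] += prior_weight            # + w * I
        add_outer(m, unit(n), -prior_weight)   # - w * n_j n_j^T
    # M is positive semi-definite, so trace(M) bounds its largest
    # eigenvalue. The dominant eigenvector of B = trace(M) I - M is the
    # eigenvector of M with the smallest eigenvalue.
    shift = m[0][0] + m[1][1] + m[2][2]
    b = [[(shift if i == j else 0.0) - m[i][j] for j in range(3)]
         for i in range(3)]
    x = unit((0.3, 0.5, 0.8))                  # arbitrary start vector
    for _ in range(iterations):
        x = unit(tuple(sum(b[i][j] * x[j] for j in range(3))
                       for i in range(3)))
    return x


# Three coplanar segment directions: the normal is the z axis.
n_plane = estimate_normal([(1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
                           (-1.0, 0.0, 0.0)])
# One direction alone is ambiguous; a prior normal resolves it.
n_prior = estimate_normal([(1.0, 0.0, 0.0)],
                          prior_normals=[(0.0, 0.0, 1.0)])
```

The second call shows the intended effect of oriented neighbors: a single segment direction leaves a whole plane of candidate normals, and the prior selects one of them.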

5.2. Normal Interpolation

The step of normal estimation for a single polyline interpolates the normals of the unoriented line segments according to the oriented line segments for each polyline using the linear interpolation method.

For a single polyline, there may be several line segments that have been assigned directions. To improve the accuracy of the normal estimation, the polylines with specified directions should be interpolated by the linear interpolation method before the normal estimation step at the intersection point. In this case, the polyline with the specified orientation will improve the normal estimation result of the adjacent polyline.

Set the tangent vector specified or estimated by the

-th segment of the polyline to

and the tangent vector specified or estimated by the

-th segment to

. The formula of linear interpolation can be expressed as

where

and

are the tangent vector and direction vector of the

-th segment to be interpolated of the polyline.

is the distance from the

-th segment to the

-th segment, and

is the distance from the

-th segment to the

-th segment.

The normal vector

of the

-th segment to be interpolated can be expressed as

It is worth noting that the normal vector of the line segment calculated by the above method does not consider the positive or negative orientation of the normal, as shown in Figure 4. The step of normal reorientation of a single polyline ensures that the positive or negative normal orientation of each segment is consistent according to the shape trend between two adjacent segments of the polyline. Since some segments already have a specified normal orientation, it is necessary to unify the normal orientation segment by segment.

Set the direction vectors and the estimated normal vectors of two adjacent segments of a polyline to

,

,

and

. Generally, if

is the reference normal vector (or manual specified normal vector), the reoriented normal

should satisfy

where

It is worth noting that the normal reorientation process based on the above method is suitable when the direction vectors and normal vectors involved are approximately coplanar. If these vectors do not satisfy the approximate coplanar condition, the problem can be converted using the vector projection method. In special cases, when the normal reorientation is erroneous, the normal orientation has to be corrected manually and dynamically.
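The two operations of this subsection can be illustrated with a minimal sketch. It rests on assumptions rather than on the paper's exact formulas: the interpolation is a plain inverse-distance blend of two known vectors, and the sign consistency test is a simplified stand-in that flips a normal when its dot product with the already fixed neighboring normal is negative, which is only reasonable when adjacent normals vary smoothly. The names `lerp_vector` and `make_consistent` are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lerp_vector(v_i, v_j, d_i, d_j):
    """Blend two known vectors for a segment lying between them.

    d_i and d_j are the distances from the target segment to the two
    known segments; the closer segment receives the larger weight.
    """
    total = d_i + d_j
    return tuple((d_j * a + d_i * b) / total for a, b in zip(v_i, v_j))


def make_consistent(normals, reference_index=0):
    """Flip normals so that neighbors agree in sign with the reference.

    The reference normal itself is never flipped.
    """
    out = [tuple(n) for n in normals]
    for k in range(reference_index + 1, len(out)):      # forward sweep
        if dot(out[k - 1], out[k]) < 0.0:
            out[k] = tuple(-c for c in out[k])
    for k in range(reference_index - 1, -1, -1):        # backward sweep
        if dot(out[k + 1], out[k]) < 0.0:
            out[k] = tuple(-c for c in out[k])
    return out


blended = lerp_vector((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), d_i=1.0, d_j=3.0)
fixed = make_consistent([(0.0, 0.0, 1.0), (0.0, 0.1, -1.0), (0.0, 0.2, 1.0)])
```

A blended vector is generally not of unit length, so it would be normalized before being used as a normal or tangent.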

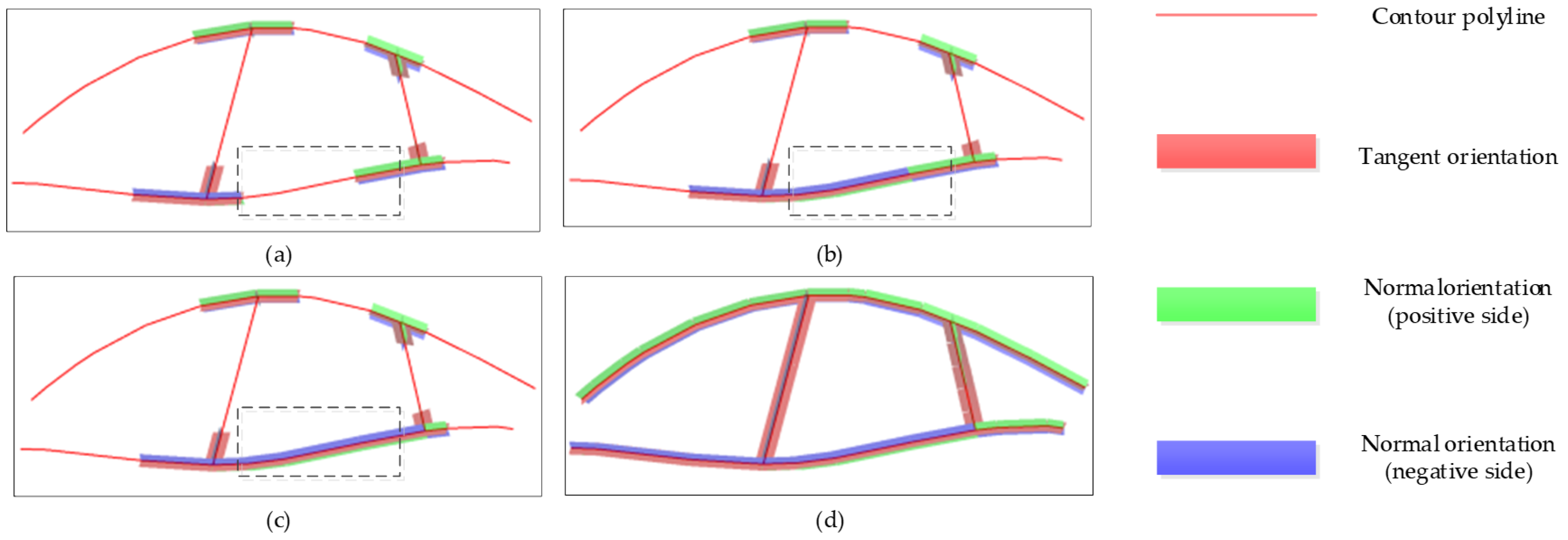

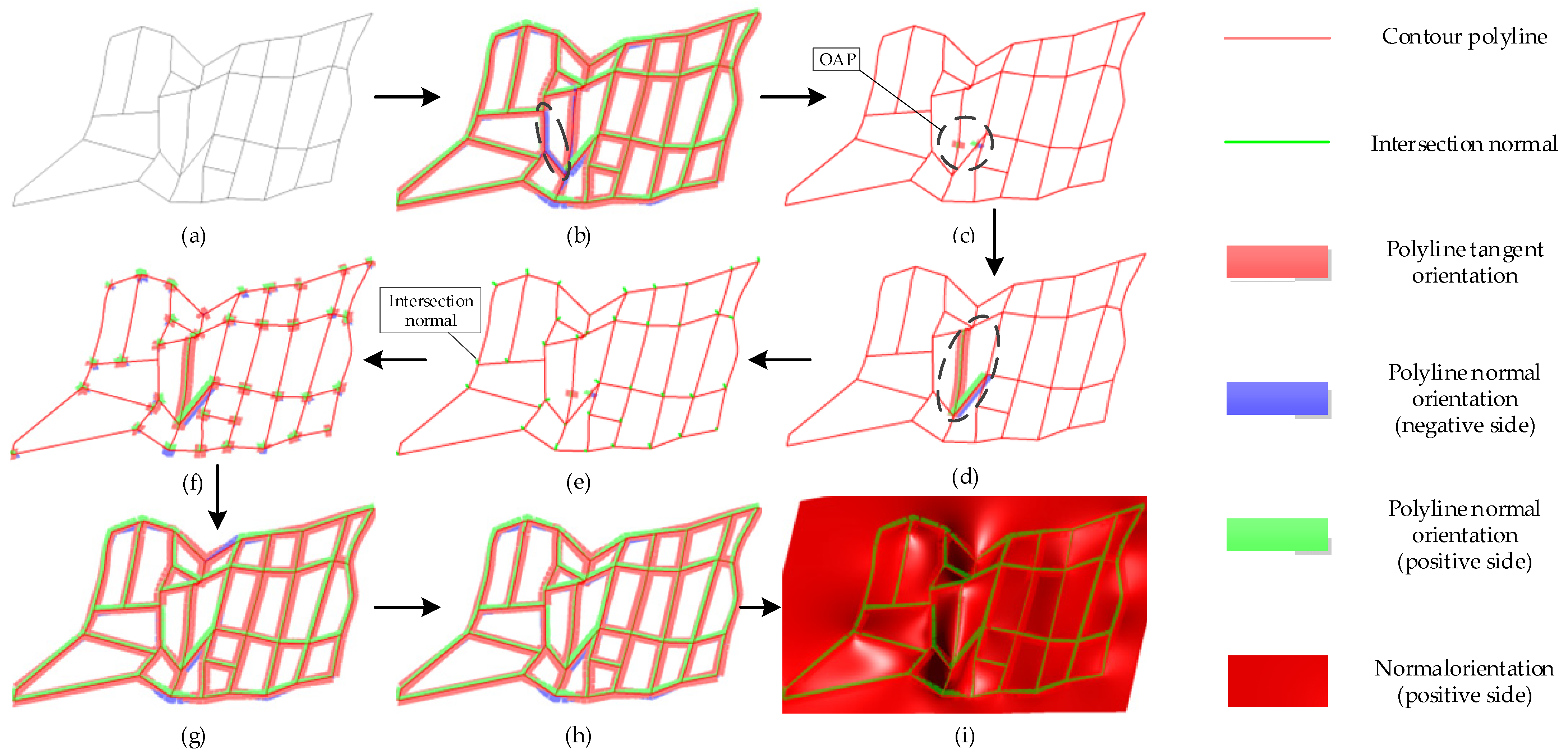

5.3. Normal Reorientation

The step for reorienting normals for all polylines ensures that the normal direction of each polyline at the intersection is consistent according to the estimated normal at each intersection point. We reoriented the normals of the polylines by traversing the network graph by using the normal propagation algorithm.

To clearly describe the process of normal reorientation, we divided the polylines adjacent to the intersection point into unoriented adjacent polylines (UAP) and oriented adjacent polylines (OAP), as shown in Figure 5. Among them, the unoriented adjacent polyline represents a polyline without a specified normal direction that is adjacent to the intersection, and the oriented adjacent polyline represents a polyline with a specified normal direction that is adjacent to the intersection. The normal direction of an oriented adjacent polyline cannot be flipped.

Suppose that the solved normal vector at the intersection point

is

and the calculated normal vector of the

-th adjacent line segment is

; then, the reoriented normal vector

of the

-th adjacent line segment should satisfy

where

is the reference normal of a line segment adjacent to the intersection point.

When considering the existing direction of the polylines, the reference normal is determined based on the following criteria. If there are some oriented polylines that are adjacent to an intersection point, we will select the normal of any one oriented polyline as the reference normal; otherwise, we will select the normal of the polyline propagating to the current intersection point as the reference normal in the process of traversing.

There are two common methods for traversing the network graph: the breadth-first search (BFS) and the depth-first search (DFS). After traversing, the propagation polylines and the intersection points form a spanning tree of the original network graph. The normal propagation process gradually corrects the normal orientations at each node according to the order in which the graph nodes of the spanning tree are traversed, as shown in Figure 6. In general, considering the propagation characteristics of the traversal process in the network graph, it is necessary to ensure that the graph nodes with high reliability are propagated first. This helps prevent erroneous graph nodes from propagating and flipping a large number of normal directions.

Considering the existing orientation of the polylines, we utilized an improved BFS method to unify the normal orientation of all polylines. As this method optimizes the selection of the initial node of the original BFS method, it propagates the graph nodes and graph edges with higher reliability to reduce the erroneous propagation of normals. The specific steps of normal reorientation can be summarized as follows.

Step 1. A queue $Q$ is constructed in the searching process to store the graph nodes to be processed. The head and tail nodes corresponding to all polylines with specified orientation are added to the queue $Q$ as initial nodes.

Step 2. Take out the head element $v$ of the queue $Q$ in turn. Reorient the adjacent polylines at the node $v$ according to the solved normal vector at the corresponding intersection point.

Step 3. Mark the node $v$ and its adjacent polylines as processed to ensure that each polyline is reoriented only once.

Step 4. Obtain the other endpoints $u_i$ of the adjacent polylines of the node $v$. Sort the nodes $u_i$ according to the reliability of the estimation result.

Step 5. Traverse the nodes $u_i$. If $u_i$ is not processed, add $u_i$ to the end of the queue $Q$.

Step 6. Take out the next head element of the queue $Q$. Return to Step 2 and repeat the searching process until all polylines are reoriented.
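The traversal in Steps 1 to 6 can be sketched with a standard queue. This is a minimal illustration under assumptions: the graph is given as a hypothetical adjacency map from a node to `(edge, other_node)` pairs, the reliability of each node is a precomputed number, and the actual flipping of normals is replaced by recording the order in which each polyline (edge) would be reoriented.

```python
from collections import deque


def propagate(adjacency, seeds, reliability):
    """Return (node, edge) pairs in the order the edges are processed.

    adjacency   -- node -> list of (edge id, opposite node)
    seeds       -- end nodes of polylines with specified orientation
    reliability -- node -> score; higher scores are queued first
    """
    queue = deque(seeds)                       # Step 1
    queued = set(seeds)
    done_edges = set()
    order = []
    while queue:
        node = queue.popleft()                 # Steps 2 and 6
        neighbours = []
        for edge, other in adjacency[node]:
            if edge in done_edges:
                continue                       # each polyline only once
            done_edges.add(edge)               # Step 3
            order.append((node, edge))         # stands in for reorientation
            neighbours.append(other)           # Step 4: opposite endpoints
        neighbours.sort(key=lambda n: reliability[n], reverse=True)
        for other in neighbours:               # Step 5
            if other not in queued:
                queued.add(other)
                queue.append(other)
    return order


adjacency = {
    "A": [("e1", "B"), ("e2", "C")],
    "B": [("e1", "A"), ("e3", "D")],
    "C": [("e2", "A"), ("e4", "D")],
    "D": [("e3", "B"), ("e4", "C")],
}
reliability = {"A": 1.0, "B": 0.2, "C": 0.9, "D": 0.5}
order = propagate(adjacency, seeds=["A"], reliability=reliability)
```

Because node `C` is more reliable than node `B`, its polyline `e4` is processed before `e3`, which mirrors the goal of propagating from high-reliability nodes first.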

If the cross-contour normal dynamic estimation method is integrated into the 3D modeling software, some flexible interactive tools can be developed to update the normals of the contours dynamically. For geometrically complex polylines, it is useful to improve the accuracy of the normal estimation results. Taking the step of normal estimation for a single polyline as an example, interactive tools can be developed to adjust the normal orientation of a segment. In addition, for the step of reorienting normals for all polylines, interactive tools for freezing the estimated normals can be provided to ensure that the direction with reliable estimation results is not affected by subsequent update steps.

7. Conclusions and Discussion

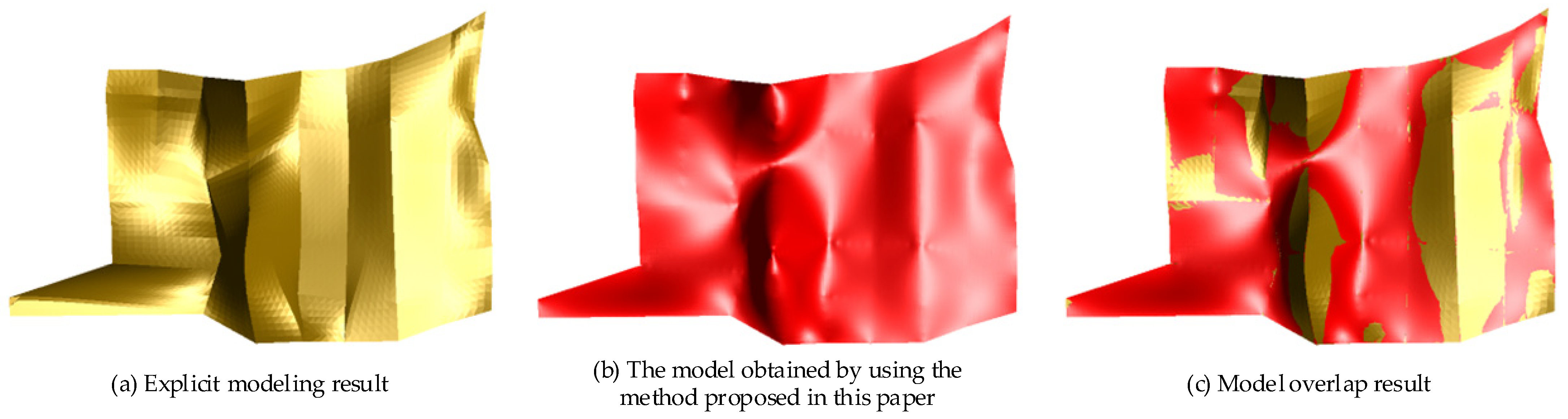

This study presents an innovative framework for dynamically updating 3D orebody models through the direct interpolation of geological logging data. By bridging critical gaps between geological interpretation and computational modeling, the method significantly advances the state-of-the-art in mineral resource estimation and mine planning workflows. As mining progresses toward autonomous operations, such dynamic geological modeling frameworks will form the essential digital substrate for adaptive mine planning and resource optimization.

The dynamic normal estimation algorithm for cross polylines using the improved PCA method enables robust implicit modeling while maintaining geological plausibility during incremental updates. The method can dynamically estimate the normals of contour polylines on the basis of manually specifying the normals of segments step by step, which can greatly improve the reliability of the results of the normal estimation. As the geometry position relationship between contours is integrated into interpolating normals, the original shape of the orebody can be recovered well in implicit modeling. The experimental results of several orebody modeling examples show that the method can be used to dynamically model the geological data set with a large number of complex contour polylines, which is useful for improving the automation of implicit orebody modeling and the reliability of implicit modeling results.

Based on the normal dynamic estimation of contour polylines, we can update the orebody dynamically using the implicit modeling method. However, this method still has some limitations that need to be further improved. Because the normal calculation at intersection points mainly depends on the point cloud normal estimation method based on PCA, the method is suitable for the surface modeling of smooth models. As the contour interpolation integrates the existing normals, the manually specified normals must be reliable enough to avoid affecting the accuracy of the normal estimation of the corresponding adjacent polylines. In the process of normal propagation, the propagation at a node with erroneous estimation results may lead to a large number of normal flipping errors. Therefore, it is necessary to study the method of automatically determining and modifying the nodes with erroneous normals to improve the accuracy of normal dynamic estimation and further improve the automation of implicit modeling.

In practical applications, we find that when the polylines are sparsely distributed or the shape of the orebody is significantly distorted or deformed, the standard estimation and reorientation of the polylines tend to produce errors. This is an inevitable problem when interpolating sparse data. Moreover, the normal estimation method depends on the assumption that the geometry of the contours satisfies the requirement of the local surface-fitting method. It is necessary to further optimize the normal direction estimation method for complex contour polylines in the future. Normal estimation results are more reliable where the contour polylines are dense and the gradient is gentle. Conversely, the normal estimation results are highly uncertain where the contour polylines are sparse and the trend changes greatly. Core failures arise when data violate the PCA assumptions; however, interactive refinement ensures practical applicability. Our future work targets uncertainty-aware sampling to minimize manual inputs.