Web System for Solving the Inverse Kinematics of 6DoF Robotic Arm Using Deep Learning Models: CNN and LSTM

Abstract

1. Introduction

2. Materials and Methods

2.1. Software

2.2. Hardware

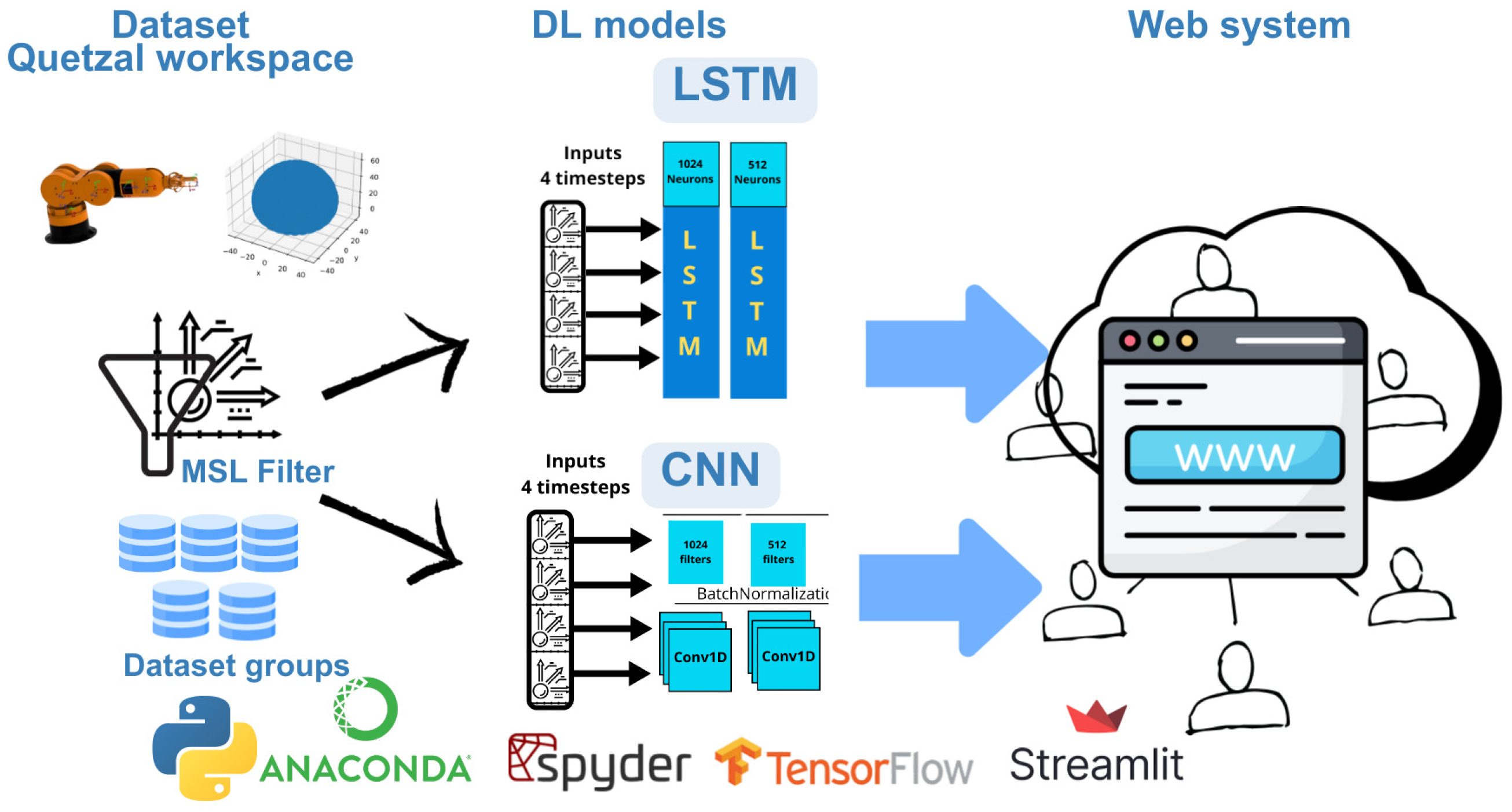

2.3. Methodology

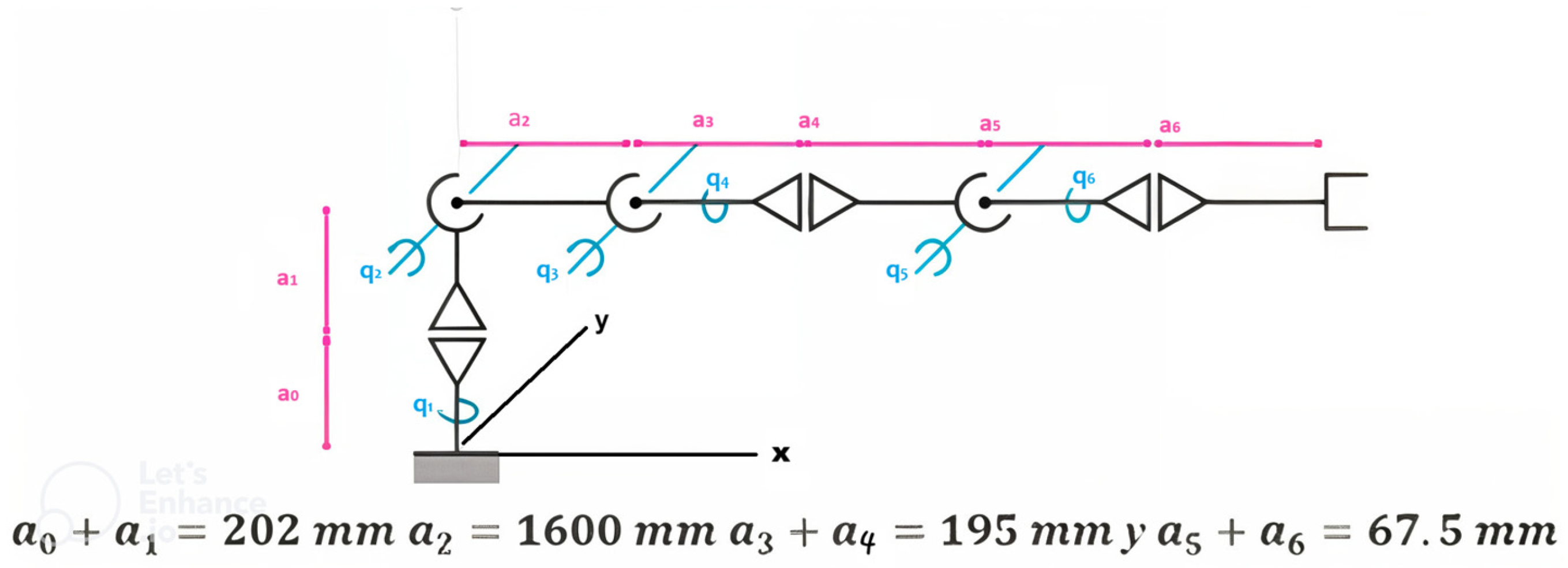

2.3.1. Dataset Quetzal Workspace

2.3.2. Initial Model Design of CNN

2.3.3. Initial Model Design of LSTM

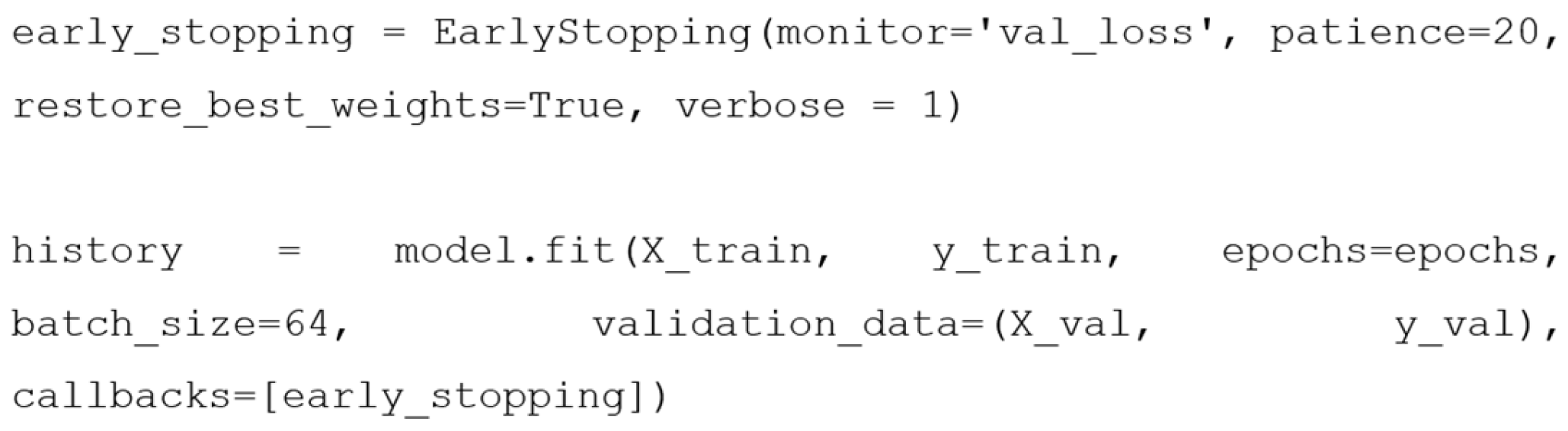

2.3.4. Overfitting Mitigation Techniques

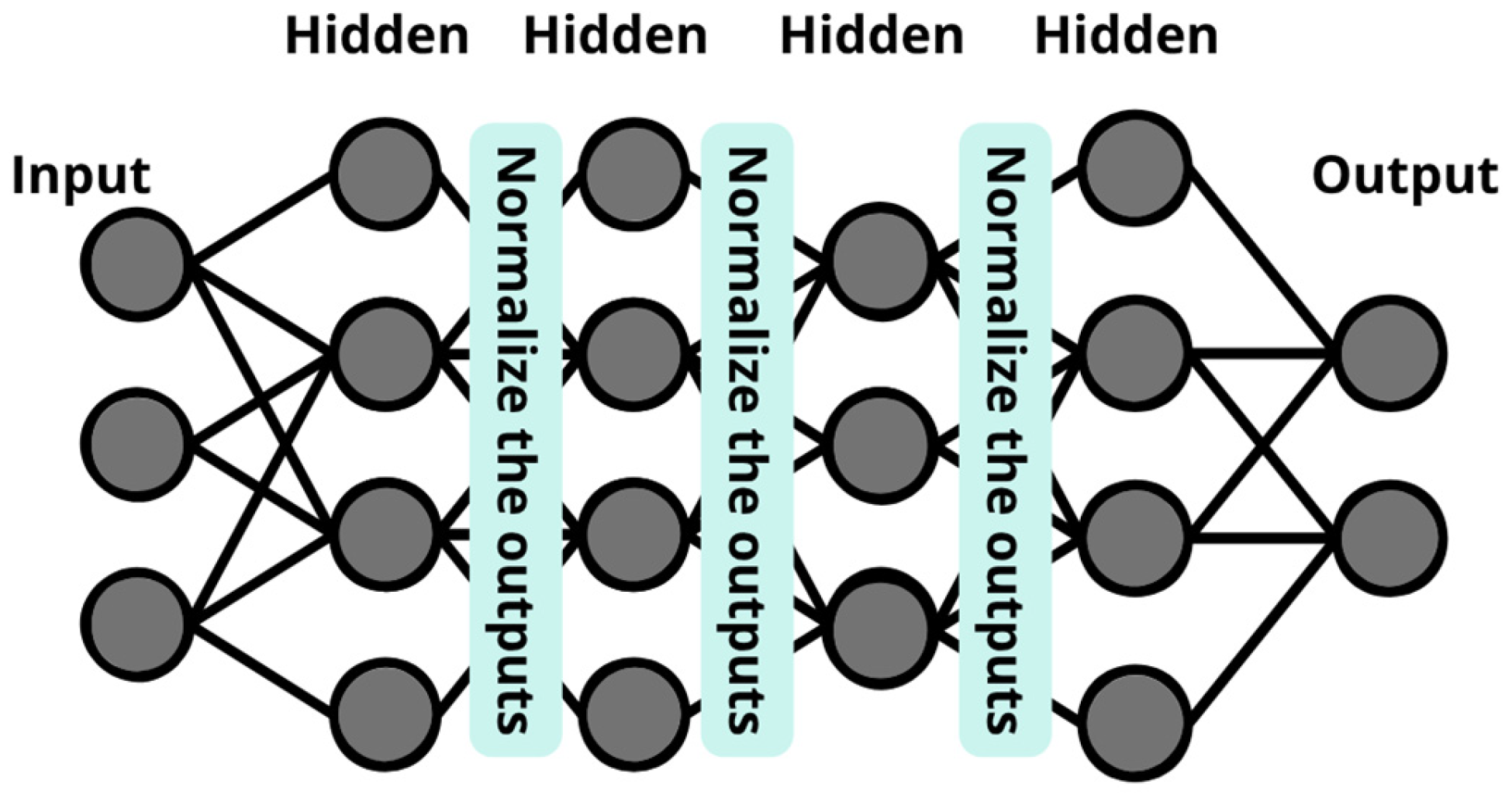

2.3.5. Final CNN Architecture

- Input data: an input shape of (4, 12) was selected to balance model performance and training efficiency, where 4 is the number of timesteps and 12 is the number of features per timestep.

- Convolutional layers (Conv1D): These layers scan through the temporal sequences using a kernel of size 3 to detect local patterns over time. The model includes four Conv1D layers with 1024, 512, 256, and 128 filters. The Swish activation function was applied in the first and third layers, and ReLU in the second and fourth, leveraging the complementary strengths of both activations.

- Batch normalization: this was applied between consecutive Conv1D layers to stabilize and accelerate training by normalizing the activations, which reduces the network's sensitivity to differences in the scale of layer inputs.

- Dropout layers: dropout was used to prevent overfitting, with a rate of 0.1 between convolutional layers and 0.2 in the fully connected layers.

- Fully connected layers: the model includes dense layers with 600, 400, and 200 neurons, each using the Swish activation function.

- Training configuration: the model uses mean squared error (MSE) as the loss function, the Adam optimizer with a learning rate of 0.001, and mean absolute error (MAE) and accuracy as evaluation metrics to assess overall predictive performance.

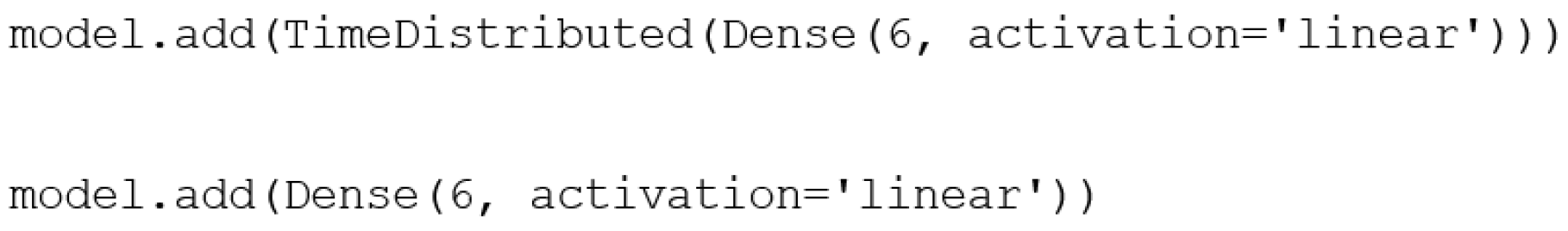

- Output layer: The model outputs six continuous values, representing the predicted joint angles (θ1, θ2, θ3, θ4, θ5, θ6) corresponding to the six degrees of freedom of the robotic arm.
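The list above maps onto a small Keras model. This minimal sketch assumes TensorFlow 2.x with `tf.keras`. The filter counts, kernel size, activations, dropout rates, dense sizes, loss, optimizer and metrics are taken from the list. Three details are assumptions because the text does not specify them: `padding="same"` (four size-3 kernels would otherwise shrink the four timesteps to zero), the `Flatten` layer before the dense block, and batch normalization after every Conv1D layer. `build_cnn` is a hypothetical helper name, not the authors' `IAModelCNN.py`.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def build_cnn(timesteps: int = 4, features: int = 12, n_joints: int = 6) -> tf.keras.Model:
    """Hypothetical builder mirroring the final CNN description (sketch only)."""
    inputs = layers.Input(shape=(timesteps, features))
    x = inputs
    # Four Conv1D blocks: 1024/512/256/128 filters, kernel size 3,
    # Swish in the 1st and 3rd layers, ReLU in the 2nd and 4th.
    conv_spec = [(1024, "swish"), (512, "relu"), (256, "swish"), (128, "relu")]
    for filters, activation in conv_spec:
        # padding="same" is an assumption: it keeps all 4 timesteps.
        x = layers.Conv1D(filters, kernel_size=3, padding="same", activation=activation)(x)
        x = layers.BatchNormalization()(x)  # normalize activations
        x = layers.Dropout(0.1)(x)          # 0.1 after each convolutional block
    # Flatten the (timesteps, 128) feature map before the dense block (assumption).
    x = layers.Flatten()(x)
    for units in (600, 400, 200):
        x = layers.Dense(units, activation="swish")(x)
        x = layers.Dropout(0.2)(x)          # 0.2 in the fully connected part
    # Six linear outputs: predicted joint angles θ1..θ6.
    outputs = layers.Dense(n_joints)(x)
    model = models.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
        loss="mse",
        metrics=["mae", "accuracy"],
    )
    return model


if __name__ == "__main__":
    build_cnn().summary()
```

Swapping the `Flatten` layer for global pooling, or choosing a different padding, changes the parameter count but not the overall layout described in the list.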

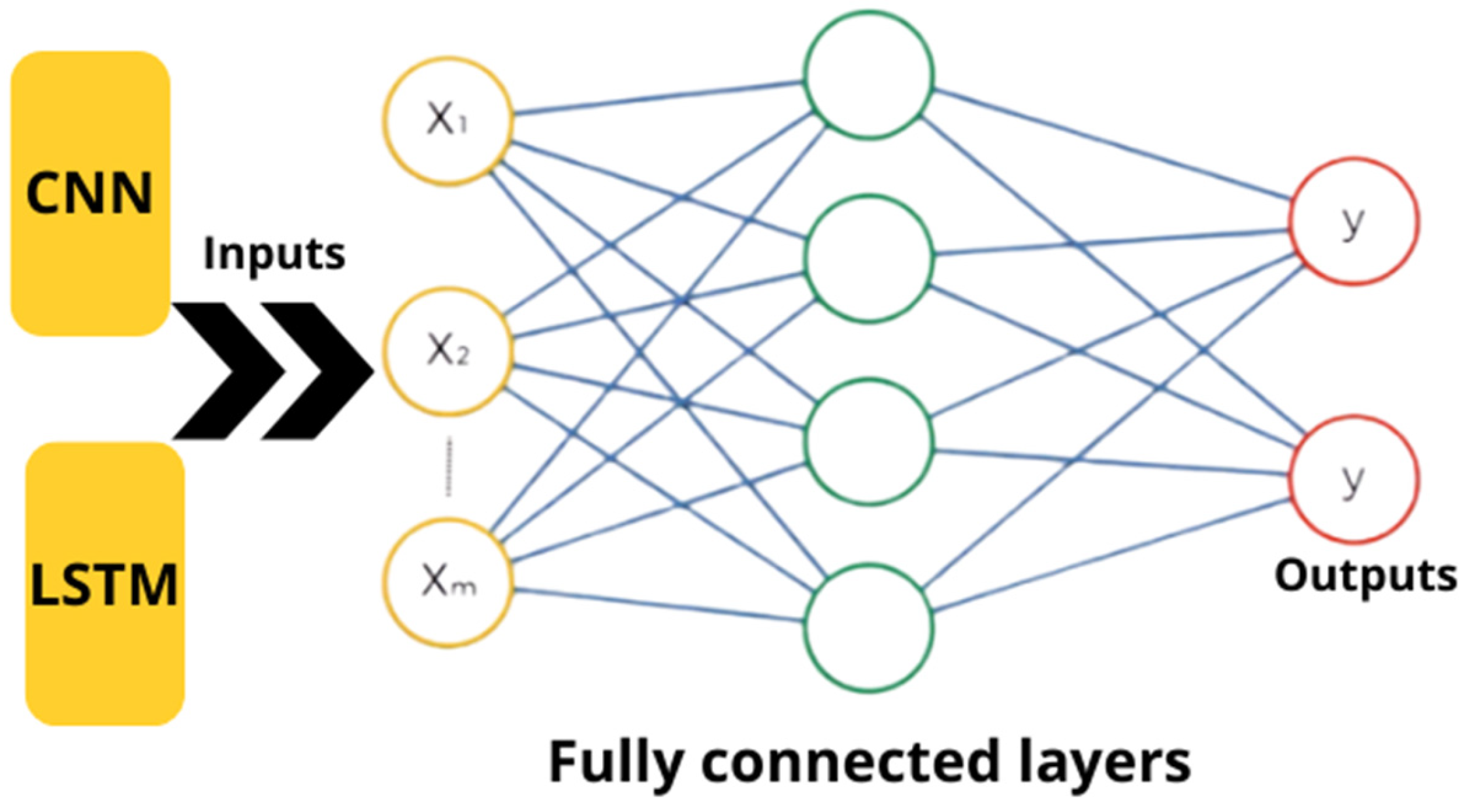

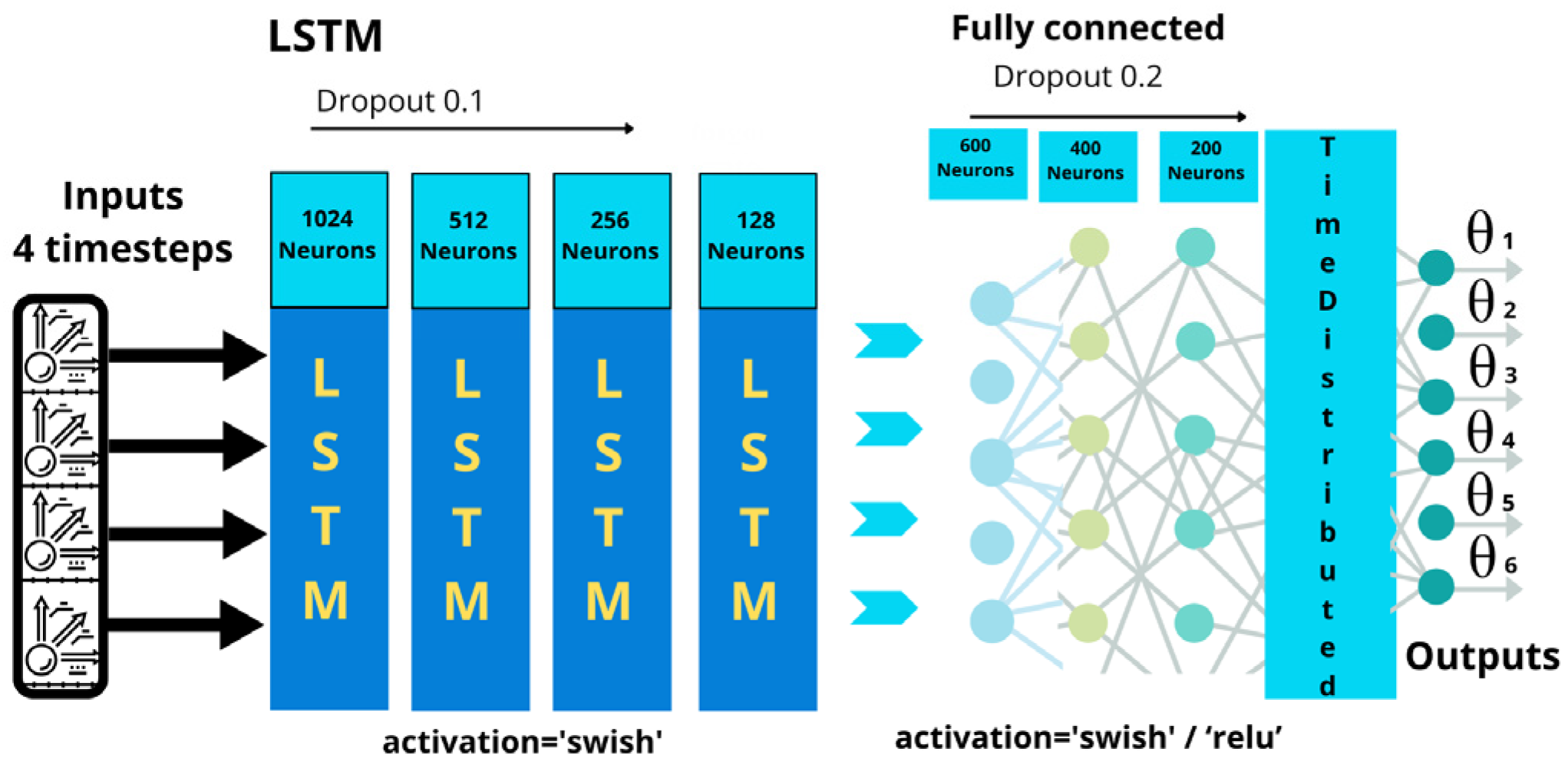

2.3.6. Final LSTM Architecture

- Input data: as in the CNN model, an input shape of (4, 12) was selected to balance training efficiency and model performance.

- Stacked LSTM layers: four LSTM layers were used to allow the model to learn both short-term and long-term temporal dependencies. This deep, hierarchical structure enhances the network’s ability to model complex temporal patterns.

- Fully connected layers: three dense layers with 600, 400, and 200 units were used, employing Swish activation in the first and third layers, and ReLU in the second.

- Dropout layers: dropout rates of 0.1 were applied between LSTM layers and 0.2 between fully connected layers.

- The time distributed layer, loss function, optimizer, evaluation metrics, and output layer are all the same as those in the CNN model.
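This is a comparable Keras sketch of the stacked-LSTM description, again assuming TensorFlow 2.x. The text states that there are four LSTM layers but does not give their sizes, so the `lstm_units` values below are placeholders. The time-distributed layer mentioned above is omitted because its position is not specified. The dense sizes, activations, dropout rates and training settings follow the list. `build_lstm` is a hypothetical name.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def build_lstm(timesteps=4, features=12, n_joints=6, lstm_units=(256, 128, 64, 32)):
    """Hypothetical stacked-LSTM builder; lstm_units are placeholder sizes."""
    inputs = layers.Input(shape=(timesteps, features))
    x = inputs
    for i, units in enumerate(lstm_units):
        last = i == len(lstm_units) - 1
        # Intermediate LSTMs return full sequences so the next LSTM sees every
        # timestep; the last one returns only its final hidden state.
        x = layers.LSTM(units, return_sequences=not last)(x)
        if not last:
            x = layers.Dropout(0.1)(x)  # 0.1 between LSTM layers
    # Dense block: 600 (Swish), 400 (ReLU), 200 (Swish).
    for units, activation in [(600, "swish"), (400, "relu"), (200, "swish")]:
        x = layers.Dense(units, activation=activation)(x)
        x = layers.Dropout(0.2)(x)      # 0.2 after each dense layer
    outputs = layers.Dense(n_joints)(x)  # θ1..θ6
    model = models.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
        loss="mse",
        metrics=["mae", "accuracy"],
    )
    return model
```

Using `return_sequences=True` on all but the last LSTM is what makes the stacking work. Each layer passes a full (timesteps, units) sequence upward.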

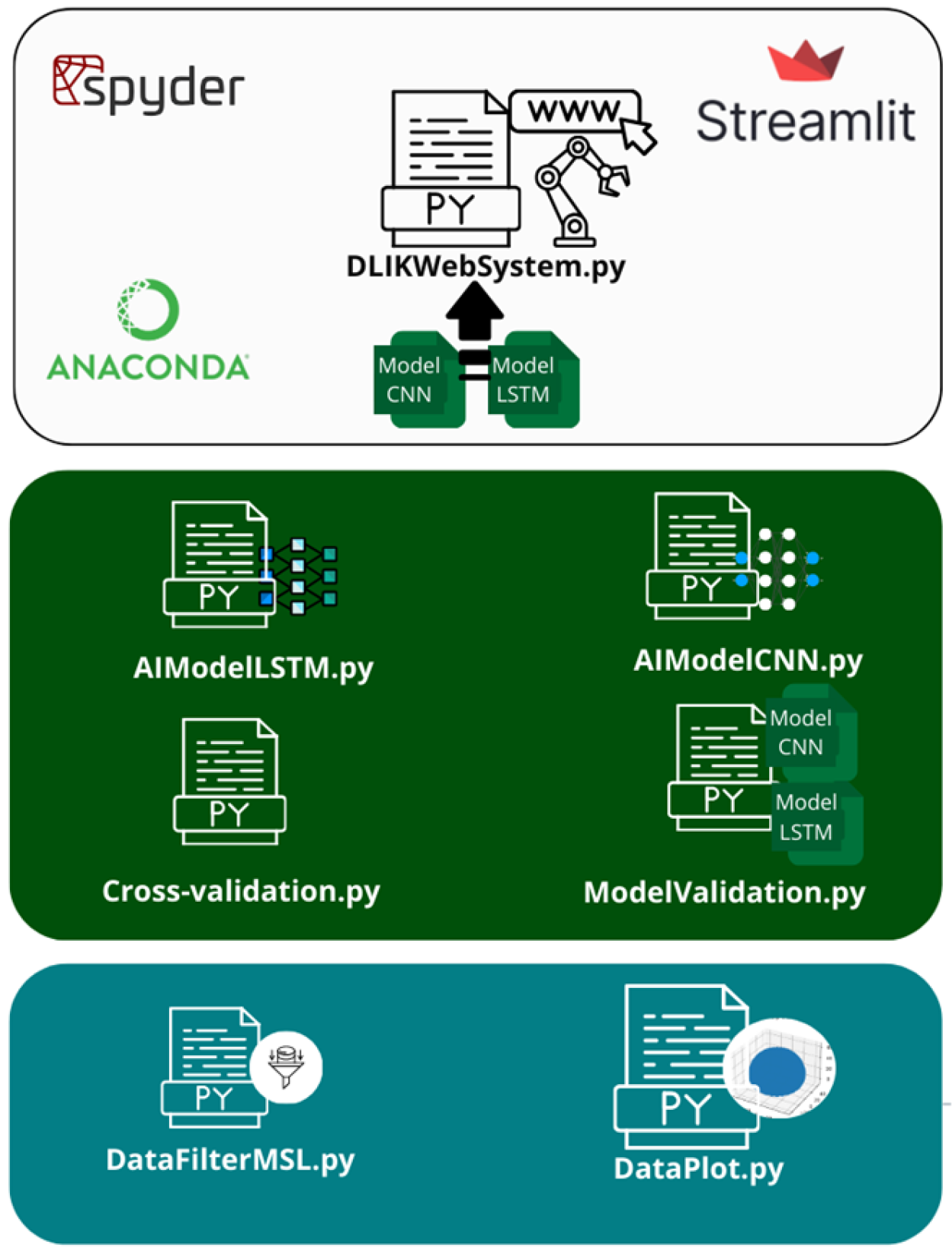

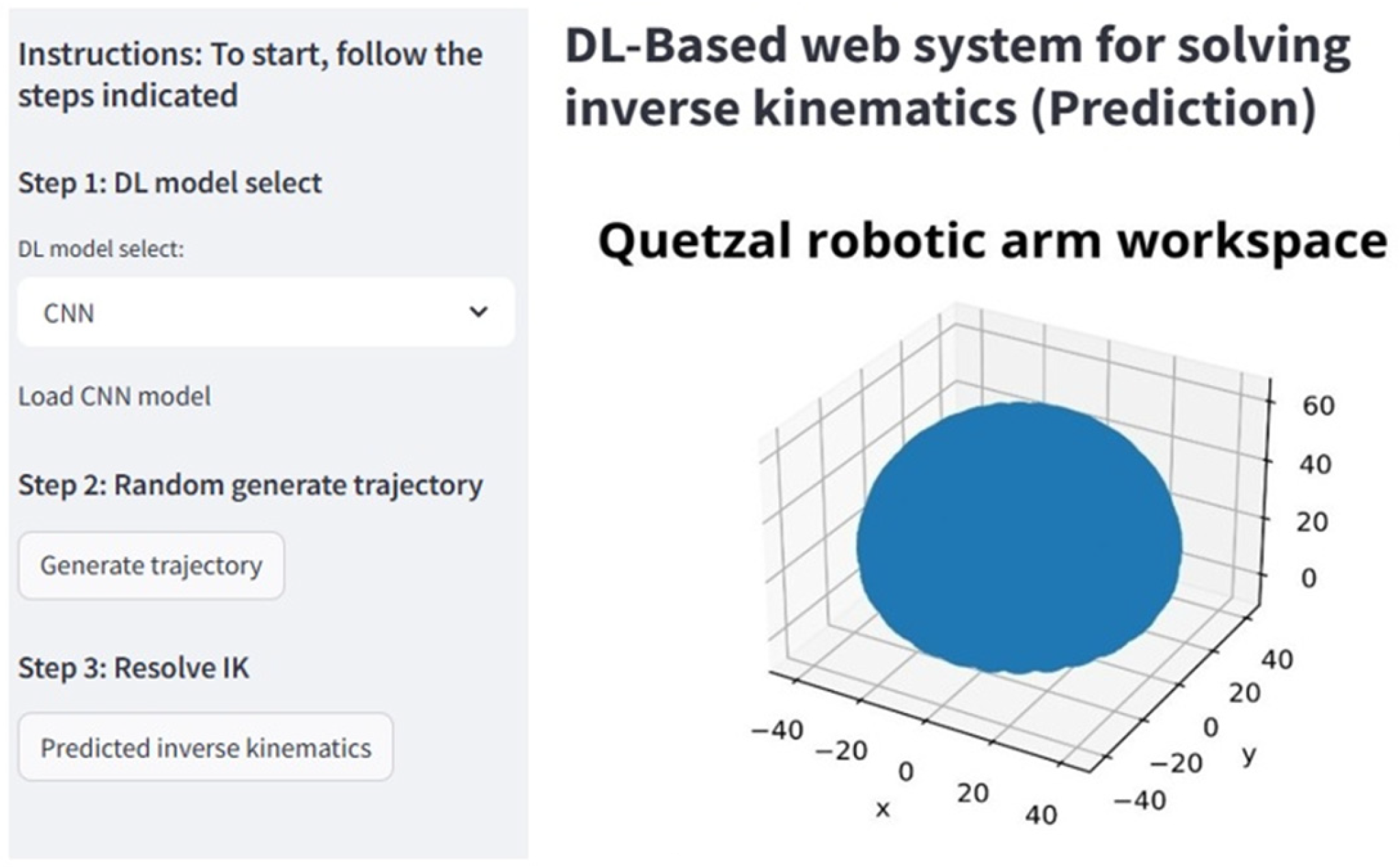

2.3.7. Web System

- DataFilterMSL.py: converts the Quetzal robot workspace from a .mat file to .csv, enabling easier data manipulation in Python. It applies a Linear Systematic Sampling (LSS) method to reduce the dataset size while preserving spatial diversity, which makes DL model training more efficient.

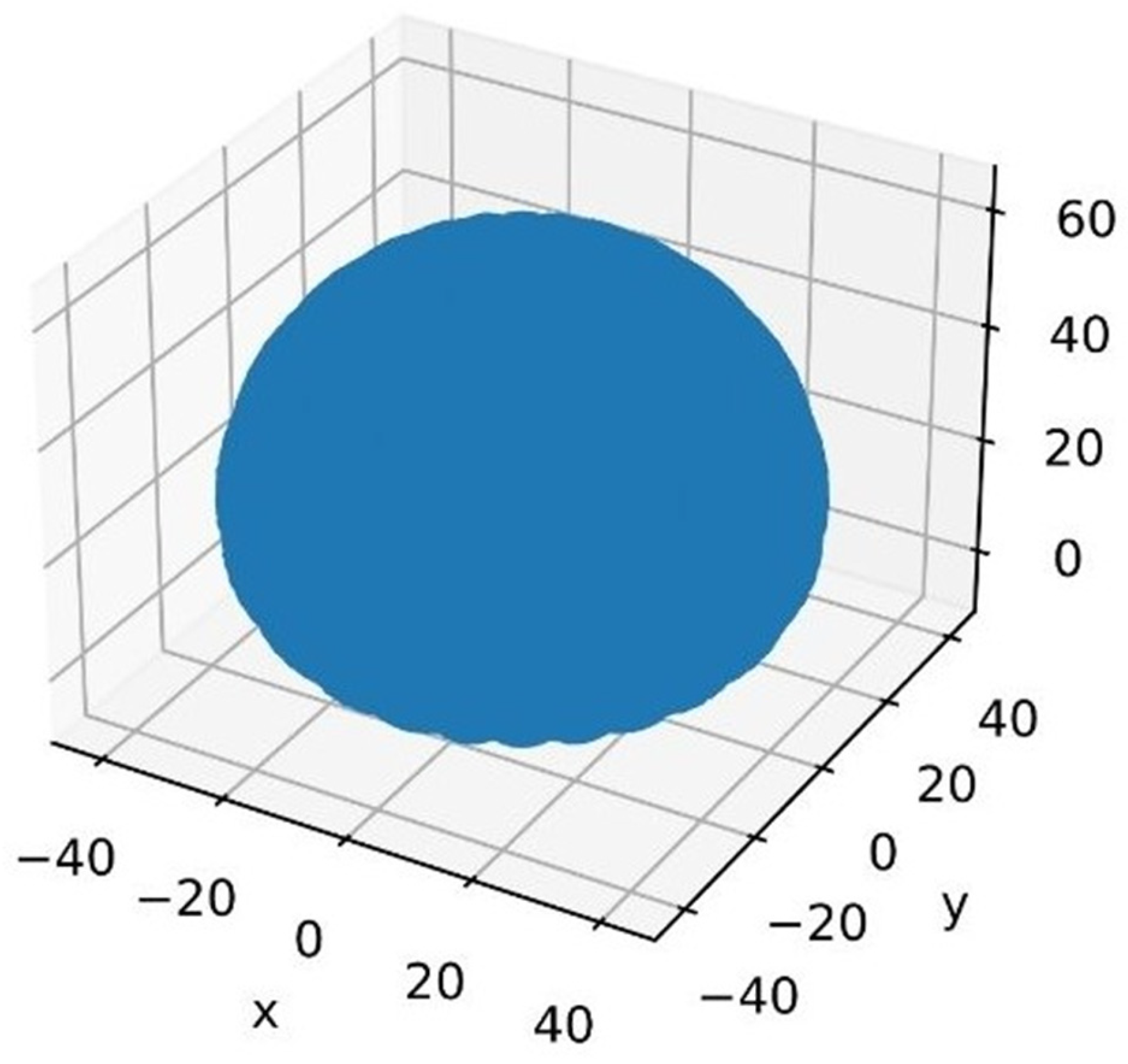

- DataPlot.py: generates a 3D visualization of the filtered dataset using Matplotlib, allowing spatial validation of the robot’s reachable workspace along the X, Y, and Z axes.

- IAModelCNN.py & IAModelLSTM.py: Define the architecture, activation functions, input shapes, and training settings for the CNN and LSTM models. Once trained, the models are saved for real-time deployment in the web system to predict inverse kinematics.

- CrossValidation.py: implements K-fold cross-validation to assess model robustness.

- ModelValidation.py: evaluates model performance using MSE, MAE, R², and Euclidean distance by comparing predictions against a test set of 100,000 unseen samples whose ground truth is generated with the Denavit–Hartenberg (D-H) method.

- DLIKWebSistem.py: The main script that runs the web interface built with Streamlit.
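The following minimal NumPy sketch shows linear systematic sampling, the reduction step attributed to DataFilterMSL.py. It computes a sampling interval k = ⌊N/n⌋, picks a random start in [0, k) and keeps every k-th record. The function name and the random-start convention are assumptions, and loading the .mat file and writing the .csv are not shown.

```python
from typing import Optional

import numpy as np


def linear_systematic_sample(data: np.ndarray, n: int, seed: Optional[int] = None) -> np.ndarray:
    """Keep n evenly spaced rows of `data` using a random start (hypothetical helper)."""
    N = len(data)
    if not 0 < n <= N:
        raise ValueError("n must be between 1 and len(data)")
    k = N // n                                          # sampling interval
    start = np.random.default_rng(seed).integers(0, k)  # random start in [0, k)
    idx = start + k * np.arange(n)                      # start, start+k, start+2k, ...
    return data[idx]


if __name__ == "__main__":
    # Hypothetical stand-in for a workspace table with 12 columns.
    workspace = np.random.default_rng(0).random((10_000, 12))
    subset = linear_systematic_sample(workspace, 1_000, seed=42)
    print(subset.shape)  # (1000, 12)
```

Because the rows are taken at a fixed stride, the subset spans the whole table rather than clustering in one region. This assumes that neighbouring rows in the file are spatially related.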

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement

Abbreviations

| Abbreviation | Definition |
|---|---|
| DL | Deep Learning |
| CNN | Convolutional Neural Networks |
| LSTM | Long Short-Term Memory |
| MSE | Mean Squared Error |
| MAE | Mean Absolute Error |
| FC | Fog Computing |
| CC | Cloud Computing |
| DoF | Degrees of Freedom |
| LSS | Linear Systematic Sampling |
| AI | Artificial Intelligence |


| Joint Limit (rad) | θ1 | θ2 | θ3 | θ4 | θ5 | θ6 |
|---|---|---|---|---|---|---|
| Minimum | 0 | 0 | 2π | 0 | 2π | 0 |
| Maximum | 2π | π | π/2 | 2π | π/2 | 2π |
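The dataset pairs joint angles within these limits with end-effector poses, and the test ground truth is generated with the D-H method. The sketch below illustrates standard (distal) D-H forward kinematics for six joints. It then flattens the pose into 12 values: 3 for position and 9 for the rotation matrix. Reading the 12 input features as this pose layout is an assumption. The link parameters in `PLACEHOLDER_DH` are invented for illustration and are not the Quetzal robot's values.

```python
import numpy as np

# Hypothetical D-H parameters (d, a, alpha) per joint, in metres and radians.
# Placeholders for illustration only, NOT the Quetzal robot's parameters.
PLACEHOLDER_DH = [
    (0.10, 0.00, np.pi / 2),
    (0.00, 0.20, 0.0),
    (0.00, 0.20, 0.0),
    (0.15, 0.00, np.pi / 2),
    (0.00, 0.00, -np.pi / 2),
    (0.05, 0.00, 0.0),
]


def dh_transform(theta, d, a, alpha):
    """Standard D-H transform: Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(thetas, dh_params):
    """Chain the per-joint transforms into the base-to-end-effector pose."""
    T = np.eye(4)
    for theta, (d, a, alpha) in zip(thetas, dh_params):
        T = T @ dh_transform(theta, d, a, alpha)
    return T


def pose_features(T):
    """Flatten a pose into 12 values: position (3) + rotation matrix (9). Assumed layout."""
    return np.concatenate([T[:3, 3], T[:3, :3].ravel()])


if __name__ == "__main__":
    print(pose_features(forward_kinematics(np.zeros(6), PLACEHOLDER_DH)).shape)  # (12,)
```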

| Timesteps | % of Total Set | Total Data | Training (70%) | Test (20%) | Validation (10%) |
|---|---|---|---|---|---|
| 1 | 0.04% | 100,000 | 70,000 | 20,000 | 10,000 |
| 3 | 0.12% | 300,000 (3 series of 100,000) | 210,000 | 60,000 | 30,000 |
| 4 | 0.16% | 400,000 (4 series of 100,000) | 280,000 | 80,000 | 40,000 |
| 5 | 0.20% | 500,000 (5 series of 100,000) | 350,000 | 100,000 | 50,000 |
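The sequence construction and the 70/20/10 split in this table could be reproduced with NumPy as sketched below. The sketch assumes that each sequence groups `timesteps` consecutive rows of 12 features and that the joint angles of the last row are the target. This windowing rule and both helper names are hypothetical. The split is applied to whole sequences. With 100,000 sequences of 4 timesteps, the row counts then match the 4-timestep row: 280,000 training, 80,000 test and 40,000 validation.

```python
import numpy as np


def make_sequences(features, targets, timesteps):
    """Group consecutive rows into non-overlapping windows (hypothetical rule).

    features: (N, 12) array; targets: (N, 6) joint angles.
    Returns X of shape (N // timesteps, timesteps, 12) and y of shape
    (N // timesteps, 6), using each window's last-row angles as the target.
    """
    n_seq = len(features) // timesteps
    usable = n_seq * timesteps
    X = features[:usable].reshape(n_seq, timesteps, features.shape[1])
    y = targets[:usable].reshape(n_seq, timesteps, targets.shape[1])[:, -1, :]
    return X, y


def split_70_20_10(n_sequences, seed=0):
    """Shuffle sequence indices and split them 70% / 20% / 10% (train/test/validation)."""
    idx = np.random.default_rng(seed).permutation(n_sequences)
    n_train = round(0.7 * n_sequences)
    n_test = round(0.2 * n_sequences)
    return idx[:n_train], idx[n_train:n_train + n_test], idx[n_train + n_test:]
```

Splitting by sequence rather than by row keeps all timesteps of one sequence in the same subset.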

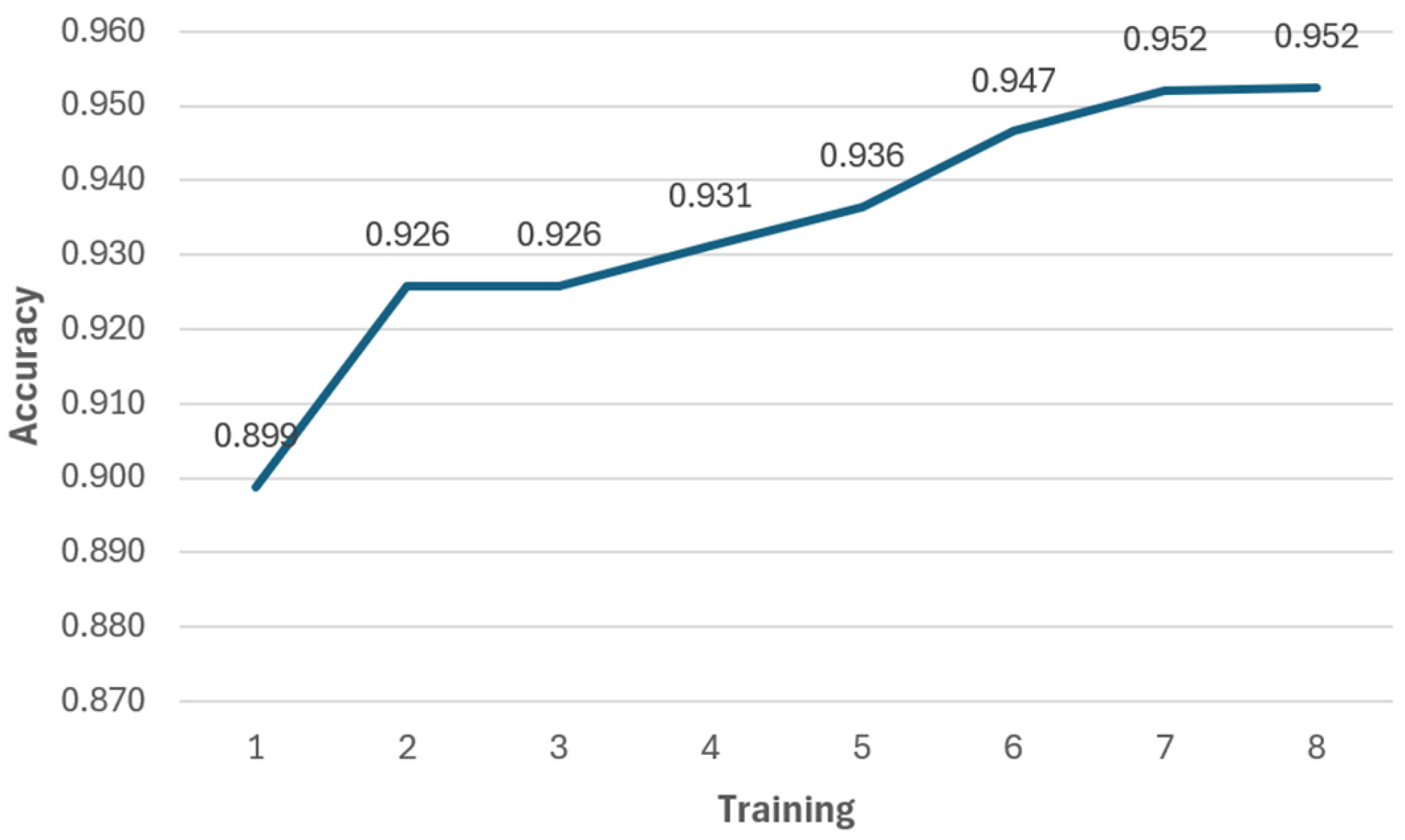

| Training # | Dropout | Conv1D Filters per Layer | Activation Functions | Fully Connected Units |
|---|---|---|---|---|
| 1 | 0.3 | 128-256-512-1024 | relu | 128-64 |
| 2 | 0.3-0.2 | 1000-800-400-600 | relu/swish | 600-400-200 |
| 3 | 0.5-0.3-0.2 | 1024-512-256-128 | swish | 600-400-200 |
| 4 | 0.5-0.3-0.2 | 128-256-512-1024 | swish | 128-256 |
| 5 | 0.3-0.2 | 128-256-512-1024 | relu | 128-256 |
| 6 | 0.3-0.2 | 1024-512-256-128 | relu/swish | 600-400-200 |
| 7 | 0.2 | 1024-512-256-128 | relu/swish | 600-400-200 |
| 8 | 0.1 | 1024-512-256-128 | relu/swish | 600-400-200 |

| Timesteps | Epochs | Time (min) | MSE Loss | MAE | Accuracy |
|---|---|---|---|---|---|
| 4 | 42 | 54 | 0.003 | 0.040 | 95.9% |
| 5 | 75 | 137 | 0.005 | 0.047 | 95.2% |

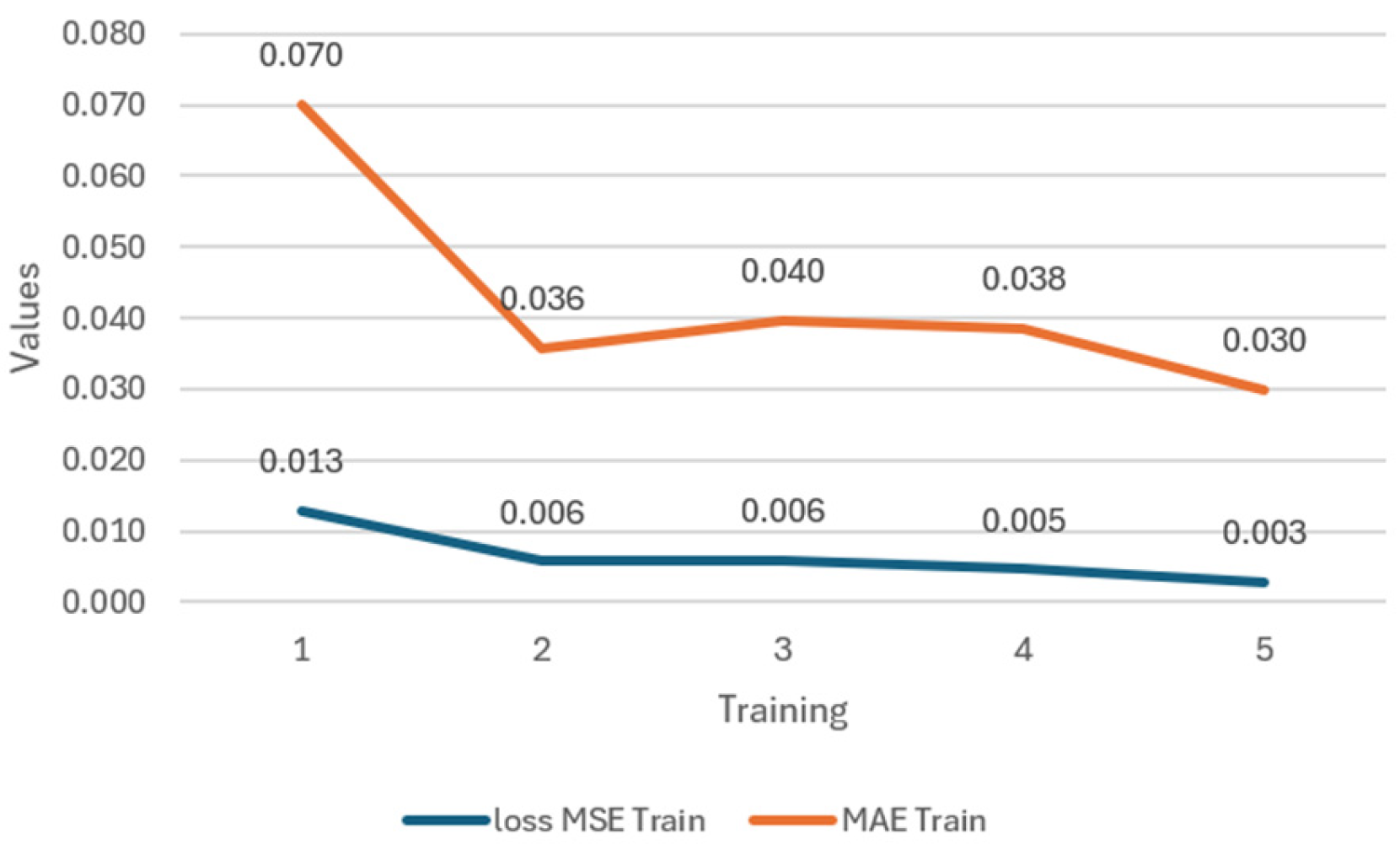

| Training # | Dropout | Batch Size | Activation Functions | LSTM Layer Units | Fully Connected Units |
|---|---|---|---|---|---|
| 1 | 0.3-0.2 | 32 | relu | 128-256-512 | 64-28 |
| 2 | 0.5-0.3 | 64 | swish | 1024-512-256 | 256-512 |
| 3 | 0.3-0.2 | 64 | relu | 1024-512-256 | 256-512 |
| 4 | 0.5-0.3 | 64 | swish | 1024-512-256 | 200-400-600 |
| 5 | 0.1-0.2 | 64 | swish/relu | 1024-512-256 | 600-400-200 |

| Timesteps | Epochs | Time (min) | MSE Loss | MAE | Accuracy |
|---|---|---|---|---|---|
| 4 | 134 | 134.8 | 0.002 | 0.003 | 96.2% |
| 5 | 120 | 196.7 | 0.006 | 0.042 | 95.5% |

| DL Model | Epochs | Time (min) | Train Accuracy | Train MSE Loss | Train MAE | Test Accuracy | Test MSE Loss | Test MAE |
|---|---|---|---|---|---|---|---|---|
| LSTM | 124 | 439 | 97% | 0.003 | 0.030 | 96% | 0.008 | 0.035 |
| CNN | 42 | 54 | 95% | 0.005 | 0.047 | 94% | 0.011 | 0.048 |

| DL Model | Average MSE | Average MAE | Average R² | Average Accuracy | Standard Deviation |
|---|---|---|---|---|---|
| LSTM | 0.014 | 0.057 | 0.996 | 92% | 0.003 |
| CNN | 0.013 | 0.068 | 0.991 | 92% | 0.001 |
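The averages and standard deviations in this table come from the K-fold cross-validation in CrossValidation.py. The minimal NumPy sketch below shows that procedure under several assumptions: K = 5, shuffled folds, the population standard deviation, and a caller-supplied `train_and_score` function. All of these are hypothetical, because the text does not state K or how the deviation was computed.

```python
from typing import Callable, Iterator, Tuple

import numpy as np


def kfold_indices(n: int, k: int = 5, seed: int = 0) -> Iterator[Tuple[np.ndarray, np.ndarray]]:
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    folds = np.array_split(np.random.default_rng(seed).permutation(n), k)
    for i in range(k):
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, folds[i]


def cross_validate(n: int, train_and_score: Callable[[np.ndarray, np.ndarray], float], k: int = 5):
    """Run train_and_score on each fold and return (mean, population std) of the scores."""
    scores = np.array([train_and_score(tr, va) for tr, va in kfold_indices(n, k)])
    return scores.mean(), scores.std()
```

In practice, `train_and_score` would build and fit a fresh model on `train_idx` and return a metric such as R² on `val_idx`. Rebuilding the model for each fold keeps the folds independent.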

| Target Value of the Metric | Indicates That |
|---|---|
| MSE < 0.03 | The prediction errors are small and consistent. |
| MAE ≤ 0.05 | The average magnitude of the prediction errors is low, suggesting high accuracy. |
| R² ≥ 0.9 | The model explains a high proportion of the variance in the target data, reflecting strong predictive capability. |
| Euclidean distance (DE) ≤ 0.5 | The predicted values are close to the actual values in the multidimensional output space, indicating high spatial precision. |
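The four metrics and their thresholds can be computed as shown below. This NumPy sketch is not the authors' ModelValidation.py. It makes three assumptions: R² is averaged uniformly over the six joints, DE is the mean Euclidean distance between predicted and true six-dimensional joint-angle vectors, and the function names are hypothetical.

```python
import numpy as np


def ik_metrics(y_true: np.ndarray, y_pred: np.ndarray) -> dict:
    """MSE, MAE, R² (uniform average over joints) and mean Euclidean distance.

    y_true, y_pred: arrays of shape (n_samples, 6) with joint angles in radians.
    Assumes no joint is constant in y_true (otherwise its R² denominator is zero).
    """
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    mae = float(np.mean(np.abs(err)))
    ss_res = np.sum(err ** 2, axis=0)                        # per-joint residual sum
    ss_tot = np.sum((y_true - y_true.mean(axis=0)) ** 2, axis=0)
    r2 = float(np.mean(1.0 - ss_res / ss_tot))
    de = float(np.mean(np.linalg.norm(err, axis=1)))         # per-sample distance, averaged
    return {"MSE": mse, "MAE": mae, "R2": r2, "DE": de}


def meets_thresholds(m: dict) -> bool:
    """Check the target values listed in the table."""
    return m["MSE"] < 0.03 and m["MAE"] <= 0.05 and m["R2"] >= 0.9 and m["DE"] <= 0.5
```

A uniform offset of 0.1 rad on every joint passes the MSE, R² and DE targets but fails the MAE target. This shows that the thresholds constrain different aspects of the error.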

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Torres-Hernández, M.A.; Ibarra-Pérez, T.; García-Sánchez, E.; Guerrero-Osuna, H.A.; Solís-Sánchez, L.O.; Martínez-Blanco, M.d.R. Web System for Solving the Inverse Kinematics of 6DoF Robotic Arm Using Deep Learning Models: CNN and LSTM. Technologies 2025, 13, 405. https://doi.org/10.3390/technologies13090405
