1. Introduction

Achieving broadband sound absorption towards low frequencies is a recurring theme that is regularly revived by advances in acoustical engineering. Space and mass constraints are the main limitations when addressing the practical design of broadband sound absorbers. Rigidly-backed micro-perforated panels (MPPs) have been proposed [1] as a lightweight, non-fibrous alternative to bulky porous materials. Their acoustical performance is determined by a number of constitutive parameters (hole diameter, pitch, and thickness) that can be optimized to maximize sound absorption. The definition of the optimization problem includes the cost function, the design variables, and the geometrical or physical constraints. However, optimization is a major challenge because the objective function may show non-convex, noisy, or flat behavior and depends on many variables, implying several local optima in the multi-dimensional space of parameters. Trial-and-error methods may be used, but they require a huge amount of time to provide a near-optimal solution, especially in high-dimensional problems. An exhaustive search is often computationally prohibitive and cannot be completed in a reasonable time frame. For the acoustical design of multi-component MPP partitions, global optimization methods that include deterministic and stochastic algorithms have been proposed.

Deterministic algorithms generate a point sequence guided by gradient-based solvers to converge toward the optimal solution [2]. As they rely on analytical properties of the problem, they guarantee a global optimum when applied to convex and monotonic functions. However, most real problems are not described by convex functions, and deterministic algorithms are often trapped in local solutions that depend on the selection of the initial parameter estimates [3]. To avoid this limitation, multiple initial points and trials can be used, but this is also very time-consuming and offers no guarantee of global convergence. Generally, another category of algorithms, stochastic methods, has to be employed. These algorithms reproduce natural phenomena or social behavior, and do not require the usual mathematical conditions of continuity, differentiability, and convexity [4]. This makes them suitable for high-dimensional or noisy problems. Although they do not guarantee optimal solutions, they provide a result in the vicinity of the global optimum in a much shorter convergence time. These methods balance diversification and exploitation to find a reliable solution with computational efficiency. To that end, they use learning strategies at each iteration of the objective function. A brief review of both the deterministic and the stochastic methods applied to the field of phase equilibrium modelling has been presented by Zhang et al. [5]. For deterministic methods, the authors briefly explain the main features of branch and bound global optimization, homotopy continuation methods, Lipschitz optimization, and interval analysis. For stochastic methods, the pseudo-code of random search, simulated annealing, particle swarm optimization, tabu search, genetic algorithms, differential evolution, ant colony optimization, and harmony search is presented.

Concerning the application of stochastic approaches to the optimization of acoustic dissipative materials, genetic algorithms [6,7] have been used successfully in many problems. In this method, the initial population is built up by randomization and the resulting parameter set is encoded to form a string, which represents the chromosome. By evaluation of the objective function, each chromosome is assigned a fitness value. Reproduction includes selection, crossover, and mutation, and the process ends when the number of generations exceeds a pre-selected value. Chang et al. [8] optimized a single-layer sound absorber composed of a perforated panel backed by a homogeneous and isotropic absorbing material with a total length constrained to be less than 0.2 m. A comparison with improved classical gradient methods was successfully established for tonal noise reduction. Kim and Bolton [9] also used genetic algorithms for the optimization of a multilayer MPP partition for both broadband absorption and transmission loss. More recently, genetic algorithms have been applied to the design of a micro-perforated panel situated on the ceiling of a high-speed train [10]. The authors optimized the transmission loss under constraints on the total mass, reducing by 3 dB the noise level measured at one position. Simulated annealing [11,12] has also attracted attention in the field of noise control by MPPs [13,14]. This is a stochastic optimization technique based on an analogy with the physical annealing process of crystals or metals. A slow cooling rate until the material is crystallized allows the minimal energy state to be reached. In contrast, a fast cooling rate results in an imperfect crystal lattice in a higher energy state. The local search is based on hill-climbing algorithms, except that uphill moves, usually rejected because they represent worse solutions, are here accepted with a probability that depends on the thermodynamic Boltzmann law, in order to avoid becoming stuck in local optima. Using this method, the effect of plate vibrations has been accounted for to optimally tune a double-layered flexible MPP in order to maximize the averaged sound absorption coefficient over the frequency band 200 Hz–1 kHz [15]. The optimized absorber improved the sound absorption coefficient by up to 12.5% compared with the model without the vibro-acoustic effect.

Other works [16] have used topology optimization methods to find the optimal arrangement of poroelastic layers to maximize the transmission loss of a foam layout subject to a mass constraint. They selected the method of moving asymptotes as a gradient-based constrained optimizer. This methodology has also been used for the optimization of the sequence and thickness of plain and micro-perforated panels in a multilayer partition when considering the absorption and the transmission loss [17].

The particle swarm approach was successful for the optimization of the normal absorption coefficient of serial, parallel, and serial–parallel MPP absorbers [18]. This method exploits the collective behavior of a biological social system, like a flock of birds, to explore a design space. Within the same category, the cuckoo search algorithm has been used [19] to optimize the absorption coefficient of a multilayer MPP with up to 8 layers between 100 Hz and 6 kHz. This method reproduces the brood-parasitic behavior of some cuckoo species, combined with random walks of the Lévy-flight type observed in fruit flies. A Bayesian inference-based method [20] has the advantage of providing quantitative measures of the interdependence and uncertainties between the optimized MPP parameters across multilayer configurations.

The above works have focused on the optimization of sound absorbers within a prescribed frequency range. Other considerations, generalized from electromagnetism, have appeared recently [21] that account for causality in the acoustic response of the absorber. They led to an equality that relates the absorption spectrum of a material integrated over a given bandwidth to its thickness, which provides a low-frequency bound for broadband optimization processes. This approach was applied to an acoustic metamaterial unit composed of 16 Fabry–Pérot coiled channels producing a flat absorption spectrum starting at 400 Hz, requiring a minimum overall thickness of 12 cm [21].

Although many studies have dealt with the optimal design of one-port absorbers, which allow sound to enter from one side only, such as rigidly-backed MPPs or parallel arrays of wall-mounted resonators, the cost-efficient optimal design of two-port acoustic wall-treatments, which allow sound to enter and exit the system through a traversing waveguide, requires specific strategies. This latter category includes standard silencers [22] made up of Helmholtz resonators or partitioned expansion chambers shielded from the flow by a perforate. Their optimal design aims at producing high transmission loss over narrow bandwidths centered on the disturbance frequency [23]. A powerful strategy is to optimize the silencer parameters at this frequency in order to achieve an optimal Cremer-type wall impedance [24] that maximizes the rate of decay of the plane wave mode propagating through the silencer. This reference solution ensures a maximum transmission loss at this frequency [25], but it does not ensure broadband dissipation of the incident energy.

Advanced fully-opened metasilencers are another category of two-port wall-treatments that have been recently developed [26]. Their complex internal structure, made up of interconnected cavities [27] or coiled channels [28], is engineered at subwavelength scales to control sound transmission over targeted broad bandwidths. Unlike standard silencers, these two-port metasilencers are often multi-resonant [29] and require the challenging optimization of non-convex cost function landscapes [30], typically with a large number of local minima. The current study focuses on the cost-efficient optimization of rainbow-trapping silencers (RTSs) [31] composed of a serial distribution of a large number of side-branch cavities whose depth gradually increases along the sound wave propagation path. They belong to a class of functionally-graded acoustic metamaterials able to trap and fully dissipate incident waves by visco-thermal effects over the cavity ribs, without blocking airflow circulation. They could be used in automotive exhaust pipes, in aerospace nozzles, in heating, ventilation, and air-conditioning systems, or in large industrial duct machinery, in order to reduce noise emissions. A noteworthy feature is that, unlike standard silencers, they avoid the back-reflection of sound waves that triggers thermo-acoustic instabilities inside the combustion chambers of engines [32]. As will be seen, the optimization of RTSs is challenging as it involves cost function landscapes with flat valleys induced by the merging of the individual cavity resonances.

The goal of this paper is to determine which optimization method, model-based or data-driven, is best suited to maximize the broadband acoustical performance of RTSs at the design stage of a prototype, in terms of accuracy, cost-efficiency, and material weight. The main challenges will be to deal with high-dimensional design spaces and cost functions with flat topology, for which gradient-based optimization fails.

Section 2 describes the transfer matrix modelling of RTSs with low- and high-dimensional design parameters as well as the model-based and data-driven approaches used for RTS optimization.

Section 3 presents the optimization results in terms of acoustical performance, cost-efficiency, and bulkiness of the RTS solutions.

Section 4 discusses the pros and cons of the model-based and data-driven strategies for low- and high-dimensional optimization of RTSs, the sensitivity of the optimization processes to input and output noise, and shows the potential of bi-objective weight-performance optimization.

Section 5 provides recommendations on the choice of the best strategy for RTS optimization.

2. Methods

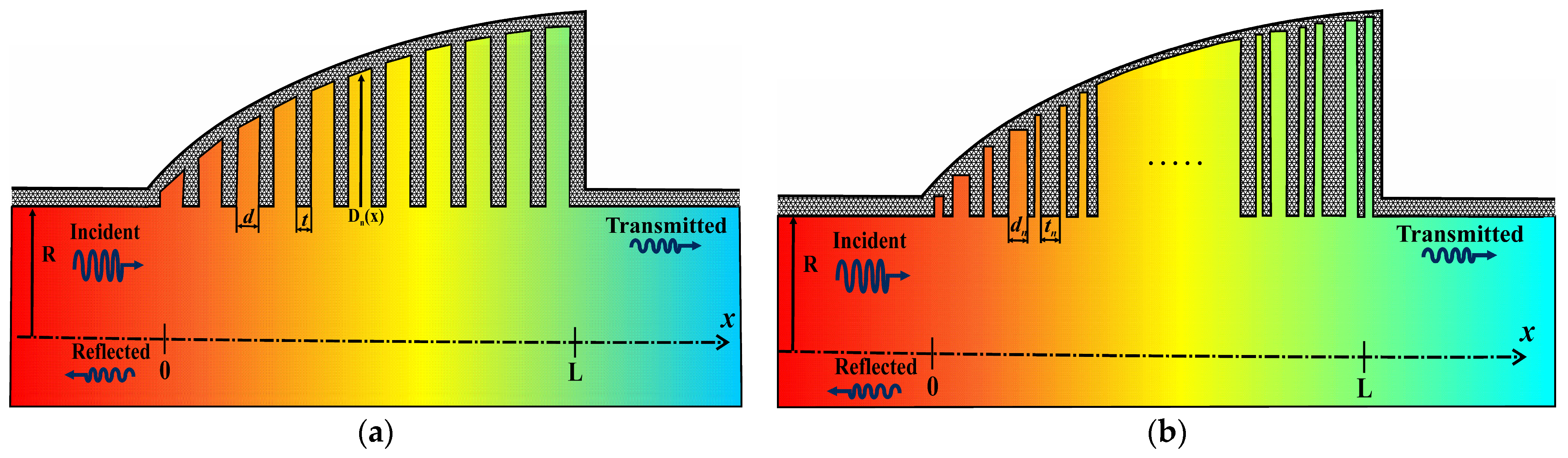

The physical configurations that will be optimized are presented in Figure 1. They correspond to fully-opened RTSs composed of a number of cavity resonators with physical parameters that are adjusted according to different objective functions. The regular RTS shown in Figure 1a is composed of a total number of

cavities of width

distributed over the length

. The cavities are separated by thin walls of thickness

and present axially varying depths

[33] that increase according to the power law

, with

being a positive number that determines the axial rate of increase of the cavity depths.

Figure 1b shows an RTS with variable cavity widths

and rib thickness

such that

. The RTSs are mounted onto a main duct of radius

with cross-sectional area

and a cut-on frequency

below which only plane waves propagate,

being the speed of sound. Constraints on the design impose a maximum axial length equal to

. The largest cavity depth associated to the last resonator is set to

, to ensure axial and radial compactness of the proposed liner.

Assuming an

frequency dependence and axial variation of the RTS wall impedance

, the linearized mass conservation equation reads as follows [31,33]:

where

is the normal acoustic velocity over the RTS cavity mouths,

is the axial acoustic velocity,

is the acoustic pressure, and

is the air density. The constant

is the hydraulic duct radius,

is the circumference, and

is the cross-sectional area of the waveguide. Considering the linearized momentum conservation equation

substituted into Equation (2), one obtains the following:

where

is the wall specific admittance and

is the fluid characteristic impedance.

Considering a continuous distribution of annular cavities and neglecting the visco-thermal losses in the cavities, the wall admittance takes the following form [31]:

where

,

,

and

are the Bessel and Hankel functions of the first kind and orders 0 and 1, respectively, and

is the acoustic wavenumber.

2.1. Transfer-Matrix Modelling of the RTS Acoustical Performance

The transfer-matrix method (TMM) is a cost-efficient analytical approach that has been employed for the prediction of the acoustic performance of rib-designed acoustic black holes composed of a finite number of cavities [34]. It has been successfully validated against a visco-thermal finite element model and impedance tube measurements [31]. Considering an RTS extending from the inlet at

towards the outlet at

, and assuming plane wave propagation (

), one can apply continuity of the acoustic pressure and acoustic flow rate across each cavity-ring unit. It leads to the following relationship

between the left and right pressures and axial velocities

and

, respectively. The transfer matrix can be written as follows:

where

is the localized side-branch volume admittance and

is the cavity entrance area. In case of a regular RTS, one has

and

. The complete transfer matrix results from the product of the individual transfers, as follows:

The RTS reflection and transmission coefficients are calculated from the entries of the complete transfer matrix

as follows:

where

. The RTS dissipation coefficient takes the expression

, and the transmission loss (TL) is calculated as

. The model of Johnson–Champoux–Allard–Lafarge has been used to describe the visco-thermal dissipation effects within the RTS cavities and in the main waveguide. It provides complex acoustic wavenumbers

and impedances

, where

and

are the effective density [35] and compressibility [36,37] of air that account for the visco-inertial and thermal effects over the duct and cavity walls. The d-indexed quantities are substituted into the duct transfer matrices, whereas the c-indexed quantities are inserted into the side-branch transfer matrix of Equation (4).
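The cascade described in this subsection can be sketched numerically. The display equations are not reproduced in this text, so the sketch below relies on standard lossless plane-wave forms taken as assumptions: a duct-segment matrix in pressure and volume-velocity variables, a side-branch matrix with a lumped admittance, and a closed-tube admittance for each cavity. The function names, the air properties, and the geometry are hypothetical, and the visco-thermal (JCAL) corrections are left out, so the dissipation is zero by construction.

```python
import cmath
import math

RHO0, C0 = 1.2, 343.0  # assumed air density (kg/m^3) and sound speed (m/s)

def matmul(a, b):
    """Product of two 2x2 complex matrices stored as nested tuples."""
    return (
        (a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]),
        (a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]),
    )

def duct_matrix(k, length, area):
    """Lossless duct segment relating (pressure, volume velocity) at its two ends."""
    z = RHO0 * C0 / area
    return ((cmath.cos(k * length), 1j * z * cmath.sin(k * length)),
            (1j * cmath.sin(k * length) / z, cmath.cos(k * length)))

def branch_matrix(admittance):
    """Side branch: pressure is continuous, volume velocity changes by Y * p."""
    return ((1.0, 0.0), (admittance, 1.0))

def closed_tube_admittance(k, depth, mouth_area):
    """Assumed lossless closed side tube: Y = j * tan(k * depth) * S / (rho0 * c0)."""
    return 1j * cmath.tan(k * depth) * mouth_area / (RHO0 * C0)

def cascade(freq, depths, pitch, duct_area, mouth_area):
    """Product of unit matrices: a duct segment of length `pitch`, then a side branch."""
    k = 2.0 * math.pi * freq / C0
    total = ((1.0, 0.0), (0.0, 1.0))
    for depth in depths:
        unit = matmul(duct_matrix(k, pitch, duct_area),
                      branch_matrix(closed_tube_admittance(k, depth, mouth_area)))
        total = matmul(total, unit)
    return total

def scattering(total, duct_area):
    """Reflection and transmission coefficients for an anechoic termination."""
    z = RHO0 * C0 / duct_area
    (t11, t12), (t21, t22) = total
    denom = t11 + t12 / z + z * t21 + t22
    return (t11 + t12 / z - z * t21 - t22) / denom, 2.0 / denom

def performance(total, duct_area):
    """Dissipation 1 - |R|^2 - |T|^2 and transmission loss -20*log10|T| (assumed forms)."""
    r, t = scattering(total, duct_area)
    return 1.0 - abs(r) ** 2 - abs(t) ** 2, -20.0 * math.log10(abs(t))

# Hypothetical three-cavity example at 1 kHz
total = cascade(1000.0, [0.01, 0.02, 0.03], 0.01, 1e-3, 2e-4)
dissipation, tl_db = performance(total, 1e-3)
```

Replacing the real wavenumber and impedance by complex, loss-corrected values for the duct and the cavities would be the natural next step; that substitution is what makes the dissipation non-zero in the model described above.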

2.2. Global Optimization Methods

Meta-heuristic global optimization methods currently used in complex engineering design problems have been applied to optimize the dissipative properties of RTSs. The following gradient-free algorithms have been chosen because of the high dimensionality of the design space and the non-convex landscape topology, which includes flat regions of near-zero gradient. A brief description of each method is given below, together with key references:

Inspired by the social behavior of bird flocks, particle swarm optimization (PSO) is a population-based, stochastic optimization algorithm [38]. At each iteration, candidate solutions (so-called particles) are updated based on their own experience and that of neighboring particles, balancing parallel exploration and informed exploitation without relying on gradient calculation [39]. PSO is a priori well-suited for the optimization of an RTS involving low- or high-dimensional design spaces and complex flat cost functions. Its collective particle behavior enables it to drift across a plateau to find the optima. It may, however, require a large number of particles to maintain diversity and to avoid premature convergence.
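The particle update can be illustrated with a minimal sketch. It is not the implementation used in this study: the toy cost `plateau`, the global-best topology (every particle sees the swarm best rather than a neighborhood), and the coefficient values are assumptions chosen for illustration.

```python
import random

def plateau(x):
    """Toy cost (hypothetical): zero on the box |x_i - 0.5| <= 0.2, negative outside."""
    return -sum(max(0.0, abs(xi - 0.5) - 0.2) ** 2 for xi in x)

def pso_maximize(cost, dim, n_particles=30, n_iter=100, seed=0,
                 inertia=0.7, c_self=1.5, c_swarm=1.5):
    """Global-best PSO on the unit box [0, 1]^dim."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # personal best positions
    best_val = [cost(p) for p in pos]           # personal best values
    g = max(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]  # swarm best
    for _ in range(n_iter):
        for i in range(n_particles):
            for j in range(dim):
                # inertia + pull toward personal best + pull toward swarm best
                vel[i][j] = (inertia * vel[i][j]
                             + c_self * rng.random() * (best_pos[i][j] - pos[i][j])
                             + c_swarm * rng.random() * (g_pos[j] - pos[i][j]))
                pos[i][j] = min(1.0, max(0.0, pos[i][j] + vel[i][j]))  # stay in the box
            val = cost(pos[i])
            if val > best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val > g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val

best_x, best_f = pso_maximize(plateau, dim=3)
```

Because no gradient is used, particles that land on the flat top keep moving under inertia and attraction, which is the drifting behavior mentioned above.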

Inspired by thermal annealing in materials, simulated annealing (SA) is a single-solution probabilistic optimization algorithm [11,12]. It explores the design space by accepting worse solutions with a probability following the Boltzmann distribution, whose temperature decreases over time [40]. This allows escape from local minima and progressive convergence toward the global optimum. As a single-solution search, SA is a priori better suited to optimizing an RTS with a low number of parameters and a single flat region. However, careful tuning of the cooling schedule is required to avoid trapping in a local minimum.
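The acceptance rule and the cooling schedule can be sketched as follows. The toy cost `rugged`, the geometric cooling factor, and the step size are hypothetical choices for illustration, not the settings of this study.

```python
import math
import random

def acceptance_probability(delta, temperature):
    """Metropolis rule: always accept improvements, sometimes accept worse moves."""
    return 1.0 if delta <= 0.0 else math.exp(-delta / temperature)

def rugged(x):
    """Toy cost to minimize (hypothetical), with several local minima."""
    return x * x + 2.0 * math.sin(5.0 * x) ** 2

def anneal(cost, x0, t0=5.0, cooling=0.98, n_iter=2000, step=0.5, seed=0):
    """Single-trajectory search with a geometric cooling schedule."""
    rng = random.Random(seed)
    x, fx = x0, cost(x0)
    best_x, best_f = x, fx
    temperature = t0
    for _ in range(n_iter):
        cand = x + rng.uniform(-step, step)   # random neighbor of the current point
        fc = cost(cand)
        if rng.random() < acceptance_probability(fc - fx, temperature):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
        temperature *= cooling                # lower temperature: fewer uphill moves
    return best_x, best_f

best_x, best_f = anneal(rugged, x0=3.0)
```

A higher initial temperature or a cooling factor closer to one keeps the acceptance probability of uphill moves high for longer, which favors exploration at the expense of runtime.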

A genetic algorithm (GA) is an evolutionary optimization approach based on principles of natural selection and genetics [6,7]. It operates on a population of solutions using selection, crossover (recombination), and mutation operators to produce better solutions over successive generations [41]. A GA is able to maintain population diversity and robustness and to explore high-dimensional design spaces, as required by the optimization of an RTS with variable cavity widths, although it may show slow convergence and stagnation in flat regions of the design space.
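A compact real-coded variant illustrates the three operators. Tournament selection, uniform crossover, Gaussian mutation, and single-individual elitism are assumptions made for this sketch, as are the toy `fitness` function and all parameter values.

```python
import random

def fitness(x):
    """Toy fitness (hypothetical): flat top on |x_i - 0.5| <= 0.2, lower elsewhere."""
    return -sum(max(0.0, abs(xi - 0.5) - 0.2) ** 2 for xi in x)

def tournament(pop, scores, rng, size=3):
    """Selection: return the best of a few randomly drawn individuals."""
    picks = rng.sample(range(len(pop)), size)
    return pop[max(picks, key=scores.__getitem__)]

def genetic_maximize(cost, dim, pop_size=30, n_gen=40, seed=0,
                     mutation_rate=0.2, mutation_scale=0.1):
    """Run a fixed number of generations and record the best score of each."""
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(dim)] for _ in range(pop_size)]
    history = []
    for _ in range(n_gen):
        scores = [cost(ind) for ind in pop]
        elite = pop[max(range(pop_size), key=scores.__getitem__)]
        history.append(max(scores))
        children = [elite[:]]                     # elitism: keep the best individual
        while len(children) < pop_size:
            a = tournament(pop, scores, rng)
            b = tournament(pop, scores, rng)
            # uniform crossover: each gene comes from one of the two parents
            child = [ai if rng.random() < 0.5 else bi for ai, bi in zip(a, b)]
            # Gaussian mutation on some genes, clipped to the unit box
            child = [min(1.0, max(0.0, g + rng.gauss(0.0, mutation_scale)))
                     if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        pop = children
    scores = [cost(ind) for ind in pop]
    best = max(range(pop_size), key=scores.__getitem__)
    history.append(scores[best])
    return pop[best], scores[best], history

best_x, best_f, history = genetic_maximize(fitness, dim=3)
```

On a flat region all individuals score the same, so selection exerts no pressure and progress relies on mutation alone, which is one way to read the stagnation mentioned above.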

The surrogate optimization (SUR) method builds an approximate model (a surrogate) of the cost function using radial basis functions [42]. It iteratively refines the surrogate to guide the search toward promising regions, reducing the number of expensive function evaluations [43]. SUR is a priori well-suited for low-dimensional RTS optimization problems as it provides fast approximations of a smooth cost function with few evaluations.
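The fit-then-refine loop can be sketched in one dimension. The Gaussian kernel, its shape parameter, the stand-in `expensive_cost`, and the simple "evaluate where the surrogate is largest" rule are assumptions for illustration; practical surrogate optimizers use more elaborate kernels and sampling rules.

```python
import math

def gaussian_rbf(r, eps=5.0):
    """Gaussian radial basis function (assumed kernel and shape parameter)."""
    return math.exp(-(eps * r) ** 2)

def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_surrogate(xs, ys):
    """Return an RBF interpolant passing through the sampled (x, y) pairs."""
    kernel = [[gaussian_rbf(abs(xi - xj)) for xj in xs] for xi in xs]
    weights = solve(kernel, ys)
    return lambda x: sum(w * gaussian_rbf(abs(x - xi)) for w, xi in zip(weights, xs))

def expensive_cost(x):
    """Hypothetical stand-in for a costly model evaluation (to be maximized)."""
    return math.exp(-((x - 0.6) / 0.3) ** 2)

xs = [0.0, 0.25, 0.5, 0.75, 1.0]           # initial design points
ys = [expensive_cost(x) for x in xs]
grid = [i / 200 for i in range(201)]
for _ in range(2):                          # two refinement rounds
    model = fit_surrogate(xs, ys)
    # skip candidates too close to existing samples to keep the system well conditioned
    candidates = [g for g in grid if min(abs(g - x) for x in xs) > 0.05]
    x_new = max(candidates, key=model)      # most promising point per the surrogate
    xs.append(x_new)
    ys.append(expensive_cost(x_new))
model = fit_surrogate(xs, ys)
```

Only seven evaluations of `expensive_cost` are spent here; the cheap surrogate is what gets searched densely.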

In order to ensure a fair comparison of the algorithms' performance, the consistent hyperparameter settings defined in Table 1 have been considered for the PSO, SA, GA, and SUR optimization algorithms. They are associated with the low- and high-dimensional design spaces described in Section 3.

The choice of the key parameters has been guided by the flatness of the cost function, which requires the algorithms to sample broadly before they can improve the solution locally. This requires a larger swarm size for PSO, especially in the high-dimensional case, while GA needs more population diversity, and SUR must use enough initial points to avoid surrogate bias. A high initial temperature has been chosen for SA with 21 parameters, so that uphill moves (worse solutions) are accepted, promoting exploration. The function tolerance set in Table 1 defines how small the change in the cost function must be between successive iterations for the algorithm to stop. It was set to 10⁻⁶ for all the algorithms.

2.3. Bayesian Regularization Neural Networks

Bayesian regularization neural networks (BRNNs) are efficient surrogate models, typically used for global optimization of computationally expensive functions. As they incorporate a regularization term during training, they are able to balance data fitting and model complexity [44]. By penalizing large weights that connect the input-to-hidden and hidden-to-output layers, as well as large biases, they prevent the network from memorizing the training data and reduce overfitting, which helps approximate the cost function accurately even with limited training data. Another advantage is that their hidden layers use the hyperbolic tangent sigmoid activation function, which is well-suited to modelling the flat behavior of the cost function. In high-dimensional problems, BRNNs require significantly more data to learn useful patterns but, as will be seen in Section 3.2.1, this can be mitigated by the smoothness of the cost function shape.
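As a reminder of how the regularization term enters, the training objective commonly used in Bayesian regularization can be written as below. The symbols $E_D$, $E_W$, $\alpha$, $\beta$, $t_i$, $y_i$, and $w_j$ are notation assumed here for illustration; the text above does not fix them.

```latex
F(\mathbf{w}) = \beta E_D + \alpha E_W, \qquad
E_D = \sum_{i=1}^{n} \bigl(t_i - y_i(\mathbf{w})\bigr)^2, \qquad
E_W = \sum_{j=1}^{p} w_j^2
```

Here $E_D$ is the data misfit between the targets $t_i$ and the network outputs $y_i$, and $E_W$ is the sum of squared weights and biases $w_j$. The ratio $\alpha/\beta$ sets the balance between fitting the data and keeping the network simple; in the Bayesian framework it is inferred from the training data rather than tuned by hand.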

3. Results

The model-based and data-driven optimization methods are applied to two challenging test cases that maximize the total power dissipated by an RTS over the broad frequency band 1.4–2.2 kHz. The first case proceeds with the global optimization of a reduced number of key parameters for a regular RTS. It involves a smooth cost function that exhibits a flat plateau of high performance over a wide range of parameters. The second case requires the global optimization of a large number of parameters for an RTS with variable cavity widths, for which a well-resolved exhaustive exploration of the design space to obtain a reference solution is out of reach. All the models and optimization algorithms were run on a Dell Precision T3630 workstation (Dell Inc., Round Rock, TX, USA) with an Intel Xeon CPU (four cores at 4.01 GHz) and 128 GB of RAM.

3.1. Global Optimization of a Regular RTS

Parametric studies [31] made it possible to identify three key parameters that govern the acoustical performance of a regular RTS with a positive axial gradient of the cavity depths and constant cavity widths, as sketched in Figure 1a. The driving parameters include the increase rate of the cavity depths m, the cavity width d, and the number of cavities N. Their range of variations is set to

,

, and

. Within this range of parameters, the total dissipation

, frequency-averaged over 1.4–2.2 kHz, reaches values up to 0.99. The RTS then ensures nearly full trapping of the incident waves and almost complete dissipation of its energy (more than 99%) by visco-thermal effects. This translates into a performance dissipation factor,

, greater than 20 dB. It corresponds in Figure 2a–c to a flat plateau of high dissipation performance (yellow area).
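The correspondence between a dissipation of 0.99 and a factor of 20 dB suggests a logarithmic measure of the non-dissipated fraction. The sketch below assumes the definition $-10\log_{10}(1-\eta)$, with $\eta$ the frequency-averaged dissipation; this form is inferred from the quoted numbers and is not stated explicitly in the text, and both function names are hypothetical.

```python
import math

def dissipation_factor_db(eta):
    """Assumed definition: -10*log10(1 - eta), eta = frequency-averaged dissipation."""
    if not 0.0 <= eta < 1.0:
        raise ValueError("eta must lie in [0, 1); eta = 1 gives an infinite factor")
    return -10.0 * math.log10(1.0 - eta)

def dissipation_from_db(factor_db):
    """Inverse mapping: fraction of incident power dissipated for a given factor in dB."""
    return 1.0 - 10.0 ** (-factor_db / 10.0)

factor = dissipation_factor_db(0.99)
```

With this assumed definition, a frequency-averaged dissipation of 0.99 maps to 20 dB, which matches the threshold quoted above, and each additional 10 dB divides the non-dissipated fraction by ten.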

Because the TMM is cost-efficient, the RTS dissipation factor can be evaluated with it over a well-resolved grid of parameters with steps,

,

and

, at an acceptable computational time (2650 s). An exhaustive search led to a maximum dissipation factor of 23.99 dB for the RTS with the following optimal parameters:

,

, and

. These reference values are reported in the column OPT of Table 2, and are shown as yellow-filled circles over the response surfaces and within the design space of Figure 2. A weight factor is also computed that corresponds to the volumetric fraction of material filling the RTS. For a regular RTS, it reduces to the axial filling fraction,

.

The response surfaces show cross-dependency of the RTS parameters to achieve a given dissipation performance, so that the three parameters should be simultaneously optimized. However, physical insight is gained by assessing their individual effects on the RTS acoustical properties. If the increase rate m of the cavity depths takes large values, then all the cavities have the same depth. The RTS then behaves as a locally-reacting silencer with high values of the dissipation and TL, and low reflection, but limited to a narrow bandwidth centered on the first quarter-wavelength resonance frequency of the cavities. If m tends to zero, the cavities vanish, and the RTS turns into a straight duct with zero dissipation, TL, and reflection. The width of the cavities drives the amount of visco-thermal losses that occur within the viscous boundary layer over the cavity walls, whose thickness depends on the dynamic viscosity of the air (1.81 × 10⁻⁵ N s m⁻² at 20 °C) and on the sound wave angular frequency. At low frequencies or for very narrow cavities, viscous effects dominate over the cavity width, so that the acoustic waves hardly enter the cavities, whose resonances are over-damped. This results in moderate dissipation and TL values, but still low reflections. At higher frequencies or for large cavity widths, reactive effects overcome the viscous effects. The cavity resonances are under-damped. This results in moderate dissipation, but large TL and reflections at the resonances. Finally, the number N of cavities determines the number of activated resonances over the RTS efficiency range. Too few cavities will result in distinct maxima of dissipation at the cavity resonance frequencies and the inability of these resonances to merge over a broad bandwidth. Too many cavities involve very narrow cavity widths and a drop in dissipation due to over-damped resonances, though these are still able to merge. Hence, a trade-off has to be found between these parameters to achieve a flat plateau of high dissipation.
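To give an order of magnitude for the viscous boundary layer discussed above, the sketch below uses the standard expression $\delta = \sqrt{2\mu/(\rho_0\omega)}$. This form is assumed here, since the expression is not reproduced in the text; the viscosity is the value quoted above, while the air density of 1.2 kg m⁻³ and the function name are assumptions.

```python
import math

MU = 1.81e-5   # dynamic viscosity of air at 20 degC (N s m^-2), value quoted in the text
RHO0 = 1.2     # air density (kg/m^3), assumed

def viscous_boundary_layer(freq_hz, mu=MU, rho0=RHO0):
    """Assumed standard form: delta = sqrt(2*mu / (rho0*omega)), in metres."""
    omega = 2.0 * math.pi * freq_hz
    return math.sqrt(2.0 * mu / (rho0 * omega))

# Thickness at the edges of the 1.4-2.2 kHz optimization band
for f in (1400.0, 2200.0):
    print(f"{f:.0f} Hz: delta = {viscous_boundary_layer(f) * 1e6:.1f} micrometres")
```

Under these assumptions the layer is a few tens of micrometres thick across the optimization band, which gives a scale against which a cavity width can be judged narrow or wide.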

3.1.1. Model-Based Optimization of Regular RTS

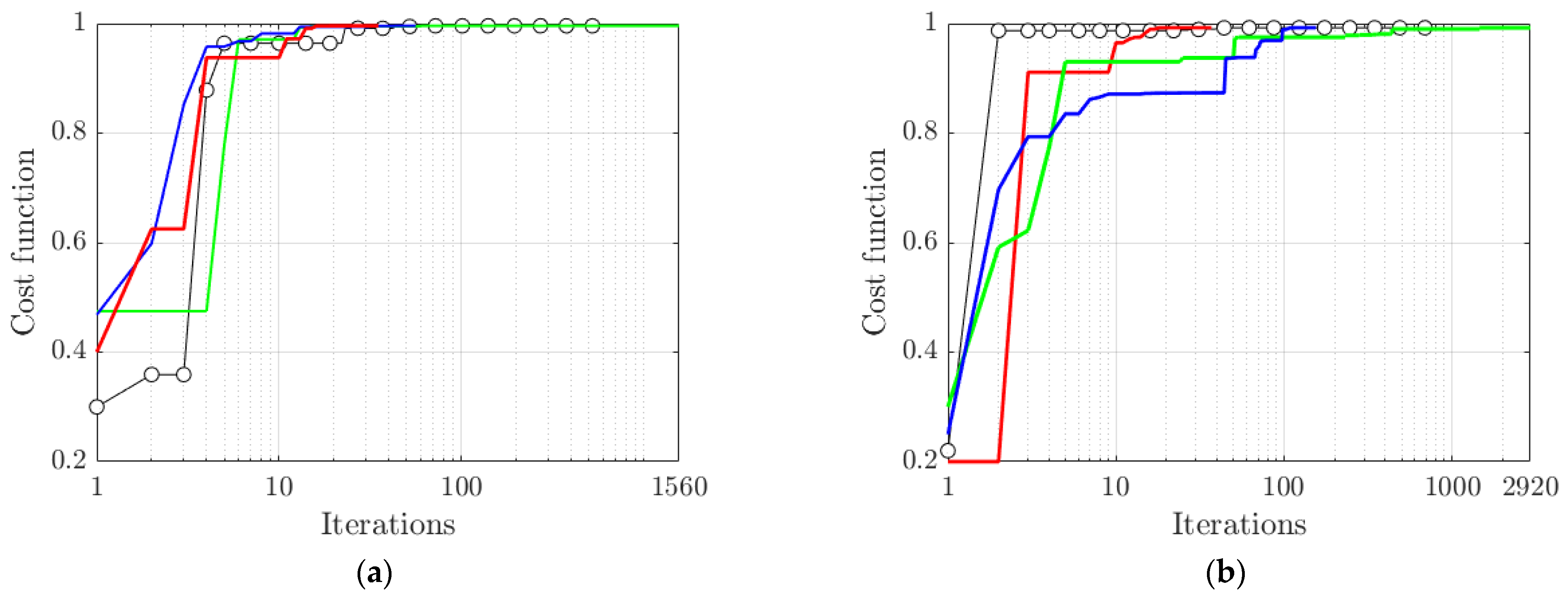

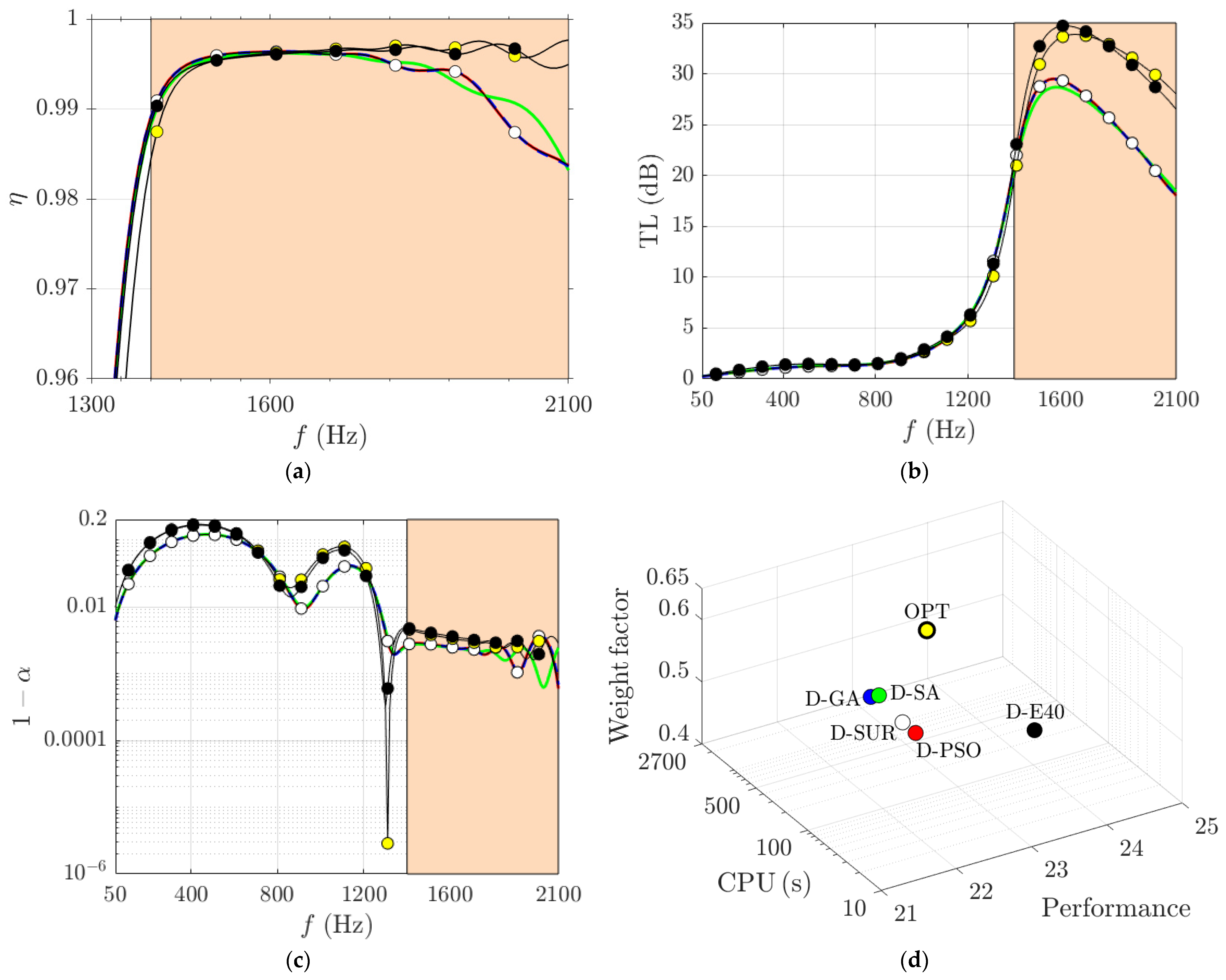

Four model-based optimization methods, PSO, GA, SA, and SUR, have been run to find the global maximum of the RTS total dissipation over the same range of parameters as the one used for the response surfaces shown in Figure 2. The convergence plots in Figure 3 compare the computational efficiency of the optimization methods when maximizing the total dissipation of a regular RTS with three parameters. Note that convergence does not guarantee accuracy. The reference ideal (totally accurate) solution would correspond to a unit cost function

, frequency-averaged over 1.4–2.2 kHz, i.e., an infinite performance dissipation factor.

Figure 3a shows that the convergence rates of SA, SUR, GA, and PSO range from slowest to fastest, requiring 2920, 522, 56, and 37 iterations, respectively, for the model-based optimization processes to converge. PSO quickly converges to the most accurate solution (OPT in Table 2) due to efficient particle spread and exploration of the flat region. The random recombinations in the GA take longer than PSO to find the global optimum in the flat region. SUR approximates the flat region in a few iterations, but then struggles to find the global optimum. SA has the slowest rate of convergence due to its single-solution trajectory, which takes a large number of iterations to reach the flat region of the design space.

Figure 2d shows that the solution produced by PSO is very close (within 0.25%) to the reference optimal solution OPT obtained from the exhaustive search in the design space, whereas GA, SA, and SUR lead to relative errors between 5% and 8%, with SA and SUR leading to similar solutions. However, such differences have a small impact on the total dissipation performance, which stays within 1% of the optimal one, as seen from Table 2 and Figure 4a. This is expected since the cost function exhibits a flat plateau of high values over a wide range of parameters

,

, and

, as seen from

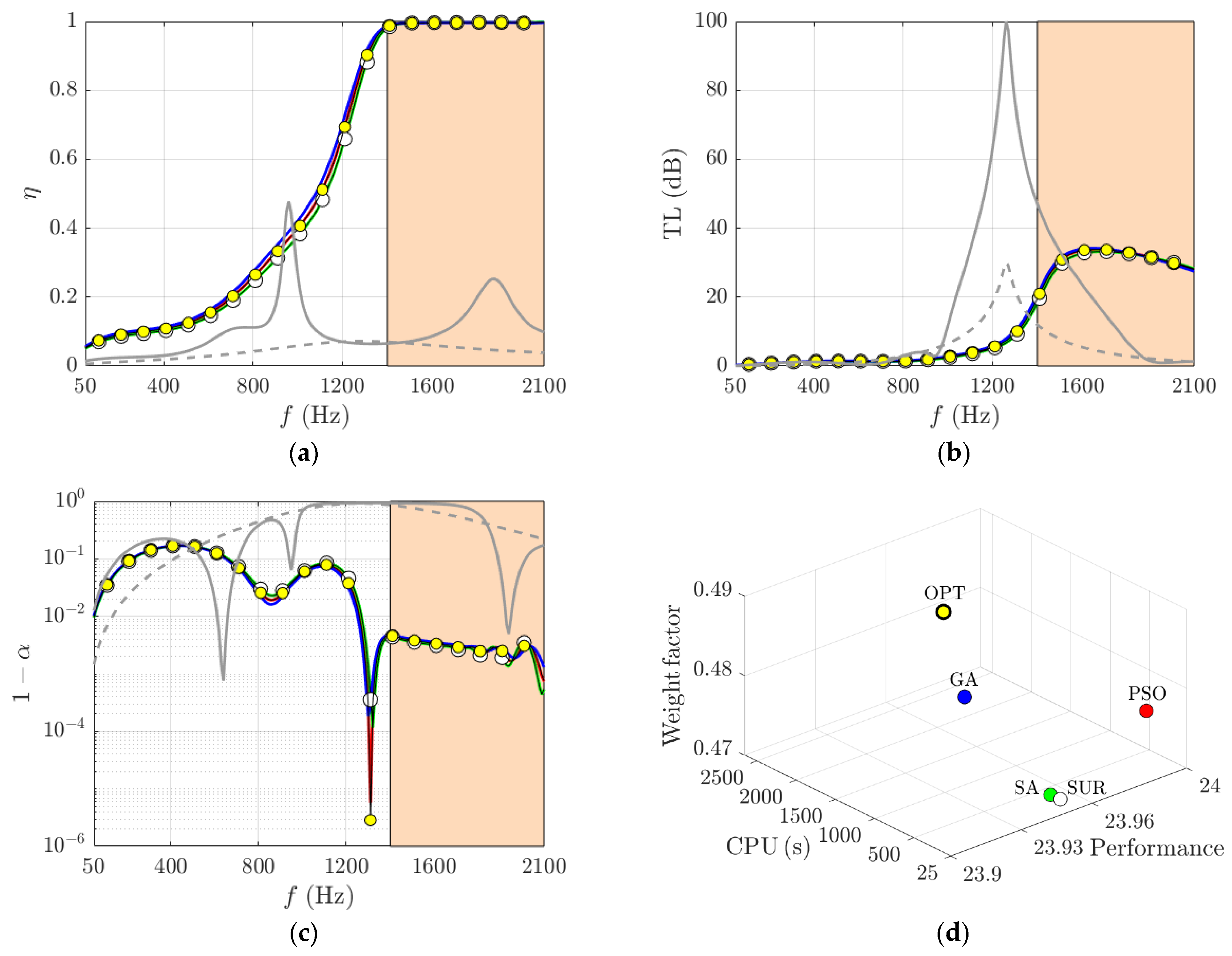

Figure 2a–c. It results in very similar dissipation, TL, and reflection performance over the optimization bandwidth, as seen in

Figure 4a–c. A sufficient number of cavities with suitable width enabled the efficient grouping and merging of the resonances to achieve near-unit dissipation above 1.4 kHz. It led to large TL values between 20 dB and 34 dB, as well as very low values of the reflection coefficient, below 0.4%. The latter performance is favored by excellent impedance matching conditions, due to the optimal values of

m, that enable the full incident wave to gradually enter the RTS for efficient trapping and dissipation.

PSO is thus recommended for the model-based optimization of regular RTS performance, as it provides the most accurate solution at a reasonable computational cost. Surrogate optimization provides a less accurate solution, but with a faster runtime (almost three times faster than PSO). The SUR solution remains acceptable because the cost function varies only slightly around its maximum (yellow area in Figure 2a–c) over a wide range of parameters. The least efficient methods are GA and SA, which provide acceptable solutions but require 2.4 times more runtime than PSO (6.7 times more than SUR) and converge at a slower rate. Note that all four methods provide an RTS solution whose weight penalty stays within 1.5% of the optimal solution.

Table 2 shows that the optimal regular RTS (OPT and PSO columns) requires a quadratic rate of increase (

) of the cavity depths to let the incident wave progressively enter the RTS for efficient trapping. The optimal width of the cavities

corresponds to about 40 viscous boundary layer thicknesses over the cavity wall. It enables the incident wave to enter the cavities while being efficiently dissipated at their resonances. The optimal number of cavities (

) ensures an axial density of sidebranches sufficient to merge the resonances while maintaining not too thin cavities that would prevent sound dissipation. The model-based GA slightly overestimates (resp. underestimates)

and

(resp.

) by less than 6%, while SA and SUR underestimate (resp. overestimate)

and

(resp.

) by the same amount. This results in negligible variations of the dissipation, reflection, and transmission curves, as seen in Figure 4.

Figure 4 shows a quantitative comparison between the optimized performance of the RTS and those of two standard silencers calculated by the TMM and optimized by PSO: a Helmholtz resonator (20 mm neck length and diameter, 40 mm cavity diameter) and a muffler whose cavities are partitioned into three sub-cavities of width 20 mm and of similar axial extent to the RTS. Setting the increase rate m of the RTS cavity depths to a sufficiently large value (m = 100) and reducing the number N of cavities down to three in the TMM turns the RTS into a locally-reacting standard muffler.

Figure 4a shows that, unlike the dissipative RTS that achieves full trapping and dissipation of the incident energy over a broad bandwidth (1.4–2.2 kHz), the standard silencers are reactive as their dissipative properties are low. However, they can achieve high TL values, as seen in Figure 4b, but over a narrow bandwidth that does not exceed 20% around the resonance at 1270 Hz. At this resonance, less than 10% of the incident energy is dissipated, as seen in Figure 4a. Figure 4c shows that 90% of the incident energy is back-reflected by the standard silencers towards the noise source, in practice towards the combustion chamber of an engine. These high-amplitude back-pressures are prone to trigger thermo-acoustic instabilities and to reduce the combustion efficiency. This drawback is avoided by the use of RTSs, as they trap 99% of the incident energy over their efficiency bandwidth, thereby generating minute back-reflections. Moreover, the RTS can be optimized to handle variations of the noise source frequency over specific frequency intervals, which is not the case for standard mufflers that work at fixed frequencies.

PSO has been chosen for optimizing the TL of standard silencers, but the solution is hardly changed if one uses other metaheuristic methods (GA, SA, or SUR) due to the highly convex nature of the cost function that peaks around the single resonance of such silencers. The optimization method should however be chosen carefully when optimizing an RTS whose high performance critically depends on a suitable tuning of a large number of resonances, at least equal to the number of cavities with varying depths. As shown in Section 3.2, this choice is critical in high-dimensional design spaces.

3.1.2. Data-Driven Optimization of Regular RTS

One assumes that optimization of the RTS acoustical performance can only be performed from a limited number of data (say 40 samples) either due to the limited number of prototypes available for experimental characterization or to a limited number of accurate numerical predictions based, for instance, on computationally demanding visco-thermal finite element simulations.

The training dataset has been generated using the Latin hypercube sampling (LHS) approach [45], as it provides diversity in the set of data. It avoids the risk of data clustering, provides a balanced coverage of flat or smooth regions, and enables better learning from fewer samples. The input parameters have been normalized between 0 and 1 using min–max scaling to keep the inputs in the sensitive region of the activation function and to avoid saturation and poor learning. The validity of the BRNN results on low- and high-dimensional design spaces requires a good fit to the training and test data, as well as a high predictive performance. This has been assessed by evaluating the mean squared errors associated with the training

and test

sets, as well as the corresponding coefficients of determination

and

that quantify how well the BRNN predictions match the actual data. The validity of the optimization has been checked by calculating the relative error between the BRNN optimal solution and the true solution obtained by the TMM, corresponding to a unit total dissipation value

, or equivalently to an “infinite” dissipation performance in dB,

. This error reduces to

.
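The sampling and scaling steps described above can be sketched as follows. This is a minimal illustration in plain Python, not the authors' implementation: the helper names and the parameter bounds are hypothetical, and only the sample count (40) and the number of design parameters (3) are taken from the text.

```python
import random

def latin_hypercube(n_samples, n_dims, rng):
    """Return n_samples points in the unit cube, one per stratum along each axis."""
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_samples))
        rng.shuffle(strata)  # independent permutation of the strata per dimension
        # draw one point uniformly inside each stratum [k/n, (k+1)/n)
        columns.append([(k + rng.random()) / n_samples for k in strata])
    # transpose the per-dimension columns into a list of sample points
    return [list(point) for point in zip(*columns)]

def scale_to_bounds(unit_points, bounds):
    """Map unit-cube samples onto physical parameter bounds (lo, hi)."""
    return [[lo + u * (hi - lo) for u, (lo, hi) in zip(p, bounds)]
            for p in unit_points]

def min_max_normalize(points, bounds):
    """Min-max scaling of each parameter to the interval [0, 1]."""
    return [[(x - lo) / (hi - lo) for x, (lo, hi) in zip(p, bounds)]
            for p in points]

# Hypothetical bounds for three design parameters (illustrative values only).
bounds = [(1.0, 3.0), (0.5, 5.0), (10.0, 40.0)]
rng = random.Random(0)
unit = latin_hypercube(40, 3, rng)
design = scale_to_bounds(unit, bounds)          # samples in physical units
normalized = min_max_normalize(design, bounds)  # network inputs in [0, 1]
```

Because each axis is divided into as many strata as there are samples and each stratum is used exactly once, no two samples cluster along any single parameter, which is the property the text relies on when training from few data.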

As shown in

Section 2.3, a BRNN is well-suited for training a regression task over a small dataset. It applies automatic regularization that tends to reduce over-fitting. Although all the data could be used for training the BRNN, 30% were reserved for validation tests. The BRNN uses a hyperbolic tangent sigmoid activation function in the hidden layers, which naturally models saturation and plateau behaviors of the output cost function. A simple architecture of one input layer with three neurons (one neuron per parameter), one hidden layer with six neurons, and one output layer with one neuron (scalar cost function), resulting in a total number of ten neurons, was chosen. Two hidden neurons per parameter were sufficient to achieve a good fit with the training and test datasets with

and

. The BRNN predictions also captured the training and test data well, since

and

are both very close to one.
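The two goodness-of-fit indicators used here, the mean squared error and the coefficient of determination, together with the 70/30 split of the 40 samples, can be sketched as follows. The function names are hypothetical and the target and prediction values are made up for illustration; they are not data from the study.

```python
import random

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# 70% of the 40 samples for training, 30% held out for testing.
indices = list(range(40))
random.Random(0).shuffle(indices)
n_train = round(0.7 * len(indices))
train_idx, test_idx = indices[:n_train], indices[n_train:]

# Made-up targets and predictions (illustration only).
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
mse = mean_squared_error(y_true, y_pred)  # 1 / 4 = 0.25
r2 = r_squared(y_true, y_pred)            # 1 - 1 / 5 = 0.8
```

A coefficient of determination close to one on both subsets indicates that the predictor explains almost all the variance of the data it was trained on and of the data it has not seen.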

Table 1 (column

D-E40) shows that the BRNN optimal solution trained on 40 samples is very close to the reference solution (column

OPT) obtained by an exhaustive search on a finely discretized design space. The relative errors at the optimum are respectively 0.0042 for the BRNN and 0.004 for the reference solution, which shows the validity of the BRNN optimum.

Figure 5a shows that the exhaustive search of the maximum total dissipation (black-filled circles) using a BRNN trained on 40 samples produces a very good match with respect to the optimal configuration (yellow-filled circles), with a minute error on the total dissipation of 2%. It results in an acceptable reproduction of the high values of the dissipation plateau caused by merging between the individual RTS cavities and a suitable balance between the visco-thermal losses and the reflection/transmission leakages. Meanwhile,

Figure 5b,c show that the high TL and low reflection coefficient values of the model-based (

OPT) and the data-driven exhaustive search (

D-E40) RTS solutions are very similar. The D-E40 also produces an RTS solution with a weight penalty comparable to the model-based optimal solution.

A second remarkable point is that the data-driven exhaustive search solution (

D-E40) is obtained at a much lower computational cost (including training duration) than the model-based exhaustive search solution

OPT. The CPU duration is cut by a factor of 50, as seen from

Table 2, without compromising accuracy on the solution. It is comparable to the computational cost required by the model-based global optimization methods, especially the PSO. The computational cost can be further reduced by up to 17% if one uses the trained BRNN as a predictor for the global optimization methods.

Figure 3b shows that the convergence rates of the data-driven SA, SUR, GA, and PSO range from slowest to fastest, requiring 1560, 696, 151, and 35 iterations, respectively, for the optimization processes to converge. A similar trend was found on the model-based convergence rates shown in

Figure 3a, confirming that PSO provides the most accurate solution at the fastest rate in the 3D design space of a regular RTS. The downside is that the data-driven E-40 PSO, GA, SA, and SUR systematically overestimate (resp. underestimate)

m (resp.

d and

N) by at most 29% (resp. 20% and 17%). A sharper rise of the cavity depths results in fewer shallow cavities being activated above 1600 Hz and in a decay of dissipation above this frequency, as shown in

Figure 5a. An underestimate of

d and

N results in fewer and thinner cavities, which leads to a larger sound transmission and a lower TL that does not exceed 30 dB in

Figure 5b. The reflection coefficient in

Figure 5c remains unchanged.

3.2. Global Optimization of an RTS with Variable Cavity Widths

The optimization task now aims at finding 21 RTS design parameters (the thicknesses of the 20 cavity walls and the axial rate of increase of the cavities) that maximize the total dissipation between 1.4 and 2.2 kHz. The model-based exhaustive search of the global optimum is out of reach due to a prohibitively high computational cost of 24. Any reference optimal solution is therefore ruled out, the sole criterion being the maximum total dissipation (cost function) achieved by the different optimization methods that should be as close to one as possible. The weight factor, i.e., the volumetric fraction of material filling the RTS with variable cavity widths, is now calculated as follows: .

3.2.1. Model-Based Optimization

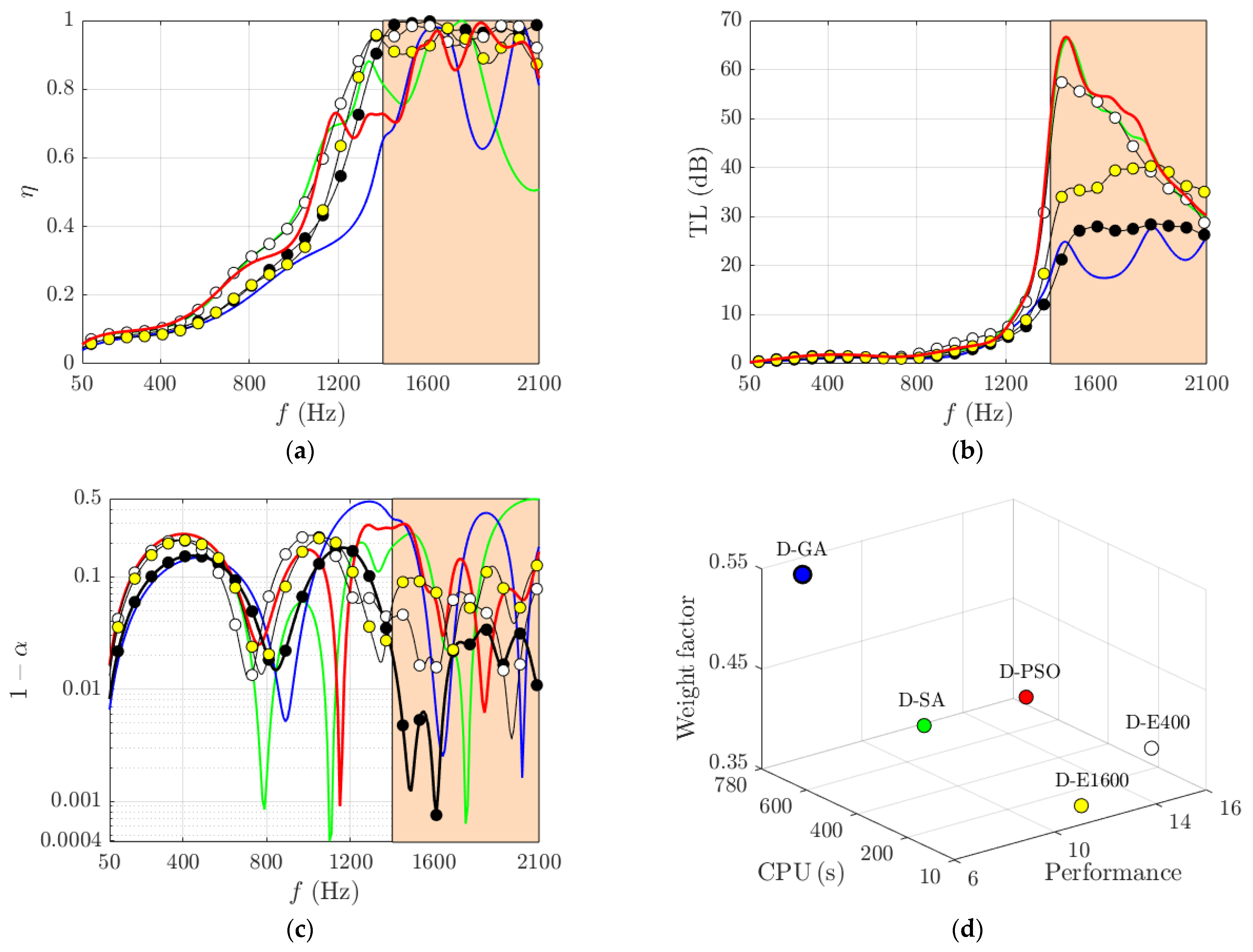

Figure 6a shows that the convergence rates of model-based SA, SUR, PSO, and GA range from slowest to fastest, requiring 26,000, 530, 431, and 181 iterations, respectively, to converge. Although GA converges faster than PSO by a factor of 2.4, it leads to a less accurate solution by 4.6 dB with respect to PSO, as seen in

Table 3. The converged SUR and SA lead to even less accurate solutions, respectively a 6.3 dB and 12.5 dB loss in accuracy with respect to PSO. Hence, PSO provides the best trade-off between convergence rate and accuracy for the model-based optimization of an RTS in high-dimensional design space.

Figure 7 shows that model-based PSO achieves the highest acoustical performance with a near-unit broadband dissipation above 1370 Hz, a TL peak of 85 dB at 1460 Hz, and a very low reflection coefficient dropping down to $10^{-7}$ at 1700 Hz. It provides an RTS solution with the lowest weight factor, which is beneficial to reduce onboard mass. However, it requires a significant computational time (2640 s) to converge towards the global maximum of total dissipation. The next best performance is achieved, in descending order, by the GA, SUR, and SA algorithms.

Figure 7a shows a progressive reduction of the flat dissipation bandwidth—from 700 Hz (GA) to 310 Hz (SA)—together with the growing emergence of local dips, especially for the SA solution for which coherent merging starts to degrade between the activated RTS cavity resonances. The TL peak value monotonically decreases in

Figure 7b from 65 dB (GA) to 40 dB (SA). It is accompanied in

Figure 7c by an overall increase of the reflection coefficient that stays below 1% for the SA over the efficiency bandwidth. SUR provides an acceptable acoustical performance at a computational cost cut by a factor of seven with respect to PSO, but its solution has a large weight factor that exceeds that of the PSO solution by 37%. GA provides an RTS solution with acoustic performance intermediate between SUR and PSO, and with a slightly reduced computational cost with respect to PSO. The weight factor of the GA solution exceeds that of the PSO solution by only 14%. The SA solution provides the worst acoustic performance, a large computational cost that exceeds that of PSO, and an excess weight factor of 32% with respect to PSO. Hence, GA and SUR could be suitable optimization strategies for RTSs with a large number of design parameters, as they provide solutions with an acceptable trade-off in terms of accuracy, computational cost, and mass reduction, the best trade-off being achieved by PSO.

The model-based optimal solutions provide an increase rate of cavity depth ranging from

(SA, worst performance) to

(PSO, best performance) accompanied by a non-monotonic decrease of the cavity width from 5 mm down to 0.2 mm from the shallowest to the deepest cavity (except for SA, which struggles to reach the optimal solution and provides cavity widths that vary between 7 mm and 1 mm). This decrease of the cavity widths for model-based PSO, GA, and SUR compensates for the low damping (or high quality factor) of the deepest cavities so that merging between the cavity resonances is enabled, as verified in

Figure 7a.

3.2.2. Data-Driven Optimization

An architecture of one input layer with 21 neurons (1 neuron per parameter), one hidden layer with 20 neurons, and one output layer with 1 neuron (scalar cost function), resulting in a total number of 42 neurons, was chosen for training the BRNN predictor on 400 samples. The number of hidden neurons roughly follows a criterion of one neuron per parameter. Training on a dataset of 400 samples showed that the number of neurons should not be too high, as BRNN regularization helps generalization but does not eliminate overfitting. However, the risk is low as the cost function is smoothly dependent on each of the 21 parameters. Indeed, well-marked ridges that intersect towards local maxima were observed when plotting the cost function with respect to 2 out of the 21 parameters. The training dataset was obtained using the LHS approach. The min–max scaling law was applied to the input parameters to normalize them between 0 and 1.
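To make the size of the two networks concrete, the short sketch below counts neurons and adjustable parameters for the 3-6-1 and 21-20-1 architectures. It assumes fully connected layers with one bias per hidden and output neuron, which the text does not state explicitly; the function names are hypothetical.

```python
def count_neurons(layer_sizes):
    """Total number of neurons over the input, hidden, and output layers."""
    return sum(layer_sizes)

def count_weights(layer_sizes):
    """Weights plus biases of a fully connected feed-forward network (assumed topology)."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

low_dim = [3, 6, 1]      # regular RTS, three design parameters
high_dim = [21, 20, 1]   # RTS with variable cavity widths

neurons_low, neurons_high = count_neurons(low_dim), count_neurons(high_dim)
weights_low = count_weights(low_dim)    # 3*6 + 6 + 6*1 + 1 = 31
weights_high = count_weights(high_dim)  # 21*20 + 20 + 20*1 + 1 = 461
```

Under this assumption, the high-dimensional network has slightly more adjustable parameters (461) than training samples (400), which is consistent with the remark that regularization helps but cannot fully rule out over-fitting if the hidden layer is enlarged further.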

Table 3 shows that the maximum total dissipation associated with the RTS solution predicted by the BRNN model amongst the training dataset (D-E400) achieves a performance (

) only 1.3 dB lower than the E400 model-based exhaustive search solution.

Figure 8a shows comparable dissipation performance of D-E400 (white-filled circles) and E400 (black-filled circles) associated to high dissipation values that stay greater than 0.92 above 1400 Hz, although they do not achieve full merging of the individual cavity resonances. The slight difference in dissipation performance translates into significantly higher TL values for D-E400 than E400 (

Figure 8b), and a higher reflection coefficient between 0.02 and 0.1 (

Figure 8c). Exploring a larger dataset (D-E1600) degrades the performance by 2.8 dB due to BRNN regularization that cannot capture local variations. However, it maintains dissipation values above 0.85, and a TL above 30 dB.

When high-dimensional optimization is performed from a BRNN predictor trained on 400 samples,

Figure 6b shows that the convergence rates of SA, GA, and PSO range from slowest to fastest, requiring 10,000, 735, and 135 iterations. As shown in

Table 3, this correlates with a gain in accuracy, which still stays lower than that obtained from model-based optimization. The systematic loss in accuracy is due to a smoother cost function landscape with respect to the model-based search region. The BRNN predictor provides an acceptable performance that is, however, lower than that obtained with three parameters. It achieves a good fit with the training and test datasets with

and

. The coefficients of determination stay above 0.9 on both the training and test data since

and

.

Table 3 (column

D-E400) shows that the BRNN optimal solution trained on 400 samples provides a relative error at optimum of 0.04, which is suitable as long as it stays below 5%.

In the high-dimensional design space (21 parameters),

Table 3 shows that the data-driven SA, GA, and PSO using a BRNN predictor trained on 400 samples provide dissipation performance lower than D-E400, as they mostly explore regions of the design space not represented in the training set, and are biased by the over-smoothing of the cost function. Despite these adverse conditions, D-PSO and D-SA manage to provide high, but narrowband performance between 1300 Hz and 1700 Hz with a dissipation around (resp. above) 0.9 for D-SA (resp. D-PSO), as seen in

Figure 8a. Significant reflections between 0.1 and 0.6 translate into high TL values that exceed 50 dB within the above range, as shown in

Figure 8b,c. As indicated in

Table 3, they require a higher computational cost than direct exploration of the test samples by BRNN.

The data-driven PSO, GA, and SA underestimate the optimal rate of increase of the cavity depth as they provide values of

m ranging from 1.3 (SA) to 1.7 (PSO). Moreover, the cavity widths do not exhibit a decreasing trend from the shallowest to the deepest cavity. This explains the inability of the data-driven PSO, GA, and SA solutions to achieve resonance merging above 1.4 kHz in

Figure 8a. However, a quadratic rate of increase of

, as well as an overall reduction of the cavity widths along the RTS axial extent is recovered from an exhaustive search amongst the training dataset evaluated from the BRNN predictor. This leads to near-unit dissipation (

Figure 8a), high TL values above 30 dB (

Figure 8b), and a minute reflection below 9% (

Figure 8c) from 1400 Hz onwards.

4. Discussion

4.1. Model-Based Optimization of an RTS

Model-based PSO performs best with respect to other global optimization algorithms such as SA, GA, and SUR, when maximizing the total dissipation of either a regular RTS with three design parameters or an RTS with 20 cavities of variable width.

In the first case, the cost function exhibits a flat plateau, within which the optimal solution lies, with near-zero gradient with respect to the design parameters. Population-based PSO is well-adapted to near-zero gradient regions due to parallel exploration of the design space. Hence, even if some particles are located within a flat region, the stochastic cognitive and social updates of the particle positions and velocities let other particles explore the plateau instead of being trapped at a zero-slope solution for which gradient-based optimizers cannot provide a search direction. This persistent exploration enables PSO to reach regions of improvement, even within a flat zone.
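The cognitive and social updates mentioned above can be illustrated with a minimal PSO sketch on a toy cost function that has a flat top. The toy cost, the inertia and acceleration coefficients, and the swarm size are illustrative assumptions, not the TMM-based cost function or the hyperparameters used in this study.

```python
import random

def plateau_cost(x):
    """Toy cost in [0, 1] with a flat (zero-gradient) top around the centre of the unit cube."""
    dist = sum((xi - 0.5) ** 2 for xi in x) ** 0.5
    return 1.0 - max(0.0, dist - 0.2) ** 2

def pso_maximize(cost, n_dims, n_particles=20, n_iter=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(n_dims)] for _ in range(n_particles)]
    vel = [[0.0] * n_dims for _ in range(n_particles)]
    pbest = [p[:] for p in pos]              # best position seen by each particle
    pbest_val = [cost(p) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]   # best position seen by the swarm
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(n_dims):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])   # cognitive term
                             + c2 * r2 * (gbest[d] - pos[i][d]))     # social term
                # keep the particle inside the normalized design space [0, 1]
                pos[i][d] = min(1.0, max(0.0, pos[i][d] + vel[i][d]))
            val = cost(pos[i])
            if val > pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val > gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

best_x, best_val = pso_maximize(plateau_cost, n_dims=3)
```

No gradient is evaluated anywhere in the loop: the search direction comes only from the remembered best positions, so particles keep moving even where the cost is locally constant.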

SA explores the design space with a single solution, relying on the relative cost difference to decide whether or not to accept a new solution. Hence, it makes little progress in exploring flat regions with nearly identical costs, and does not perform as well as PSO. Also, as it enters the flat region, the cooling schedule should not be too fast, otherwise SA will stop accepting exploratory moves towards a global optimum. Because SA repeatedly samples similar points in flat regions without obtaining further information at subsequent iterations, it requires significant computational time with respect to PSO, while producing a solution with lower performance. An adaptive slow-down of the cooling process would improve the performance for the three-parameter optimization, but at the expense of increasing the CPU time.
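The role of the cost difference and of the cooling schedule can be sketched with the classical Metropolis acceptance rule and a geometric cooling law. Both are assumptions made for illustration, since the acceptance rule and the schedule actually used are not restated here; the function names and numerical values are hypothetical.

```python
import math

def acceptance_probability(delta, temperature):
    """Metropolis rule for a maximization problem: delta = new_cost - current_cost."""
    if delta >= 0:
        return 1.0
    return math.exp(delta / temperature)

def geometric_cooling(t0, alpha, n_steps):
    """Temperature sequence T_k = t0 * alpha**k (assumed cooling law)."""
    return [t0 * alpha ** k for k in range(n_steps)]

temps = geometric_cooling(t0=1.0, alpha=0.5, n_steps=4)  # [1.0, 0.5, 0.25, 0.125]

# On a plateau the cost difference is tiny: the move is accepted almost surely,
# whatever its direction, so the walk receives no guidance.
flat_move = acceptance_probability(-1e-6, temperature=0.1)

# A clearly worse move is still accepted occasionally at moderate temperature...
exploratory_warm = acceptance_probability(-0.05, temperature=0.1)    # exp(-0.5)

# ...but almost never once the temperature has dropped too quickly.
exploratory_cold = acceptance_probability(-0.05, temperature=0.001)  # exp(-50)
```

The comparison between the last two values illustrates why an overly fast cooling schedule freezes the single SA walker before it has crossed the flat region.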

Flat regions are challenging for the GA as they do not provide sufficiently large fitness variations to guide evolutions. This leads to weak selection pressure without a clear advantage to pass to the next generation. Although cross-over and mutation may help explore more efficiently the flat region, this is not guided by fitness feedback, and the GA finally converges to a suboptimal solution. The SUR algorithm, albeit faster than PSO, SA, and GA, also struggles to find an optimal solution in a flat region due to a lack of informative training data since the training samples provide nearly identical output values in this region. Also, low variance of the predicted values prevents clear guidance for exploration in a flat region.

As shown in

Section 3.1.1, the suboptimal solutions provided by SA, GA, and SUR still provide high performance close to the optimal solution due to the weak sensitivity of the cost function to the design parameters in the flat region. However, they systematically overestimate the weight penalty due to a greater sensitivity of this indicator to the design parameters.

The situation is more challenging in the second case when optimizing a cost function with narrow valleys in a high-dimensional space with 21 design parameters. Once again, PSO performs better than the GA, followed by SUR and SA, as it suitably balances exploitation and exploration to navigate through such a complex landscape. Parallel search and collective memory help PSO to align and guide particles gradually through the valleys towards the global minimum. It is essentially influenced by past trajectories and social learning to smoothly adjust search directions towards promising regions. Also, PSO provides a better scalability in high-dimensional space than the GA or SA, as it searches collectively from information sharing. The GA (resp. SA) relies on mutation/cross-over and recombination tuning (resp. cooling schedules), which is hard to adapt to the shape of narrow valleys in high dimensions. Moreover, mutations and cross-over in the GA can jump out of the valley, whereas the single solution walk in SA may miss the narrow pathway or be slow to align along the valley, as verified from the large computational cost of SA reported in

Table 2. Because PSO is gradient-free, it well accommodates highly-curved valleys or near-zero slope over the valley bottom. By contrast, SUR tends to smooth out the valleys and introduces a bias due to insufficient resolution in high dimensions.

Figure 7a shows that the valley floor in which lies the optimal solution should be sufficiently flat to enable the four meta-heuristic optimization methods to provide a broadband dissipation plateau despite the suboptimal solutions found by GA, SUR, and SA. Greater sensitivity of the TL and reflection coefficient, which quantify reactive effects of the silencer, is observed in

Figure 7b,c with respect to the suboptimal parameters. Hence, model-based PSO is definitely the best candidate to achieve optimal performance in both dissipative and reactive properties of RTS silencers in high- and low-dimensional design spaces.

4.2. Data-Driven Optimization of an RTS

The data-driven methods based on BRNN drastically cut the computational cost compared to model-based methods when performing optimization in high-dimensional design space, whereas the gain in CPU time is more limited in low-dimensional space. In terms of accuracy, the BRNN performs better at finding RTS optimal solutions through direct search of the optimum in flat valleys governed by a small number of parameters, when training is performed on a dataset of typically about 13× the number of parameters. In a high-dimensional space, it clearly requires training on a larger dataset, at least 400 samples, which corresponds to 19× the number of parameters; otherwise, the model struggles to fit useful patterns, as similar cost function values can be generated by different input parameters. As regularized neural networks, BRNNs favor a low-parameter model to avoid over-fitting. In high-dimensional design space, the BRNN trained on a moderate dataset led to smeared predictions across the input space, but with a high dissipation, and acceptable TL and reflection performance of the RTS solutions, due to intrinsically smooth and slow variations of the cost function with respect to the design parameters. Note that this is not generally the case, as the BRNN usually generalizes poorly when trained on a small dataset.

Global optimization using a BRNN predictor in a low-dimensional design space provides RTS solutions with lower performance than direct search of the output optimum over a flat landscape, already smoothed by the BRNN predictor. In other words, as long as the search space is small and tractable, global optimization overhead is unnecessary and direct search of the optimum using a trained model is sufficient. In high-dimensional space, global optimization from a BRNN predictor trained on a moderate dataset cannot fully exploit the BRNN smoothed output and provides limited scores. The RTS solution performance improves only if the BRNN is trained over a sufficiently large dataset (say 1000 samples), but at the expense of a larger computational cost (multiplied by 10).

4.3. Robustness of the Optimization Methods to Input and Output Noises

Robustness of PSO, GA, SA, and SUR to uncertainty on the input parameters and to noise in the output cost function is assessed for low-dimensional model-based (

Figure 9a,b) and BRNN (

Figure 9c,d) predictors, as well as for high-dimensional data-driven predictors (

Figure 9e,f), high-dimensional sensitivity analysis for the model-based predictor being computationally too expensive to be estimated. The percentage error,

, imposed on the input parameters of mean value

is related to the standard deviation

of the perturbed data by

, assuming random normal distribution of the perturbation. The total dissipation is evaluated in an acoustic environment characterized by a signal-to-noise ratio SNR (dB), so that the error imposed on the cost function

reads

.

Obviously, the absence of uncertainty in the data (

) or a high SNR (typically 20 dB) leads to the performance values given in

Table 2 (

Figure 9a–d) and

Table 3 (

Figure 9e,f).

Figure 9 shows that, as expected, the acoustic performance degrades in all cases when increasing the errors on parameters up to 10% or when decreasing the SNR down to 5 dB. For low-dimensional model-based optimization of the RTS, PSO, SA, and GA similarly provide good robustness to errors in parameters with a loss of performance that does not exceed 1 dB (

Figure 9a). The loss of performance is much greater (up to 18 dB) when decreasing the SNR in the following order PSO, GA, SA from the least to the largest loss. The SUR method provides the lowest robustness to input noise, especially for

, and with a low SNR.

For low-dimensional data-driven optimization of the RTS, the robustness of SUR is higher than the model-based optimization, and comparable to the other optimization methods. PSO and GA lead to 1.2 dB performance loss when increasing the error on input noise up to 10%, which is similar to the robustness of the model-based optimization. SA is highly sensitive to small errors in the parameters, although it reaches the same loss performance as PSO and GA for . The loss of performance (12 dB) when decreasing the SNR down to 5 dB is similar for the four methods and lower than that observed for the model-based methods (18 dB). Overall, the robustness of data-driven optimization to input noise (resp. output noise) is comparable (resp. higher) with respect to model-based optimization. This is due to the BRNN predictor which can capture cost function trends with fewer data points, leading to better generalization.

Figure 9e,f show that the robustness to input and output noise decreases for high-dimensional data-driven optimization of the RTS, with losses of performance of 4 dB (PSO, GA) and 1.5 dB (SA) when adding at most 10% errors on the parameters. They reach 7 dB (PSO), 5 dB (GA), and 3 dB (SA) when evaluating the cost function in a noisy environment (SNR = 5 dB). Errors in input parameters during training can be amplified by the BRNN, leading to misleading gradients or plateaus. More training data is then required, as similar cost values can arise from different combinations of inputs.

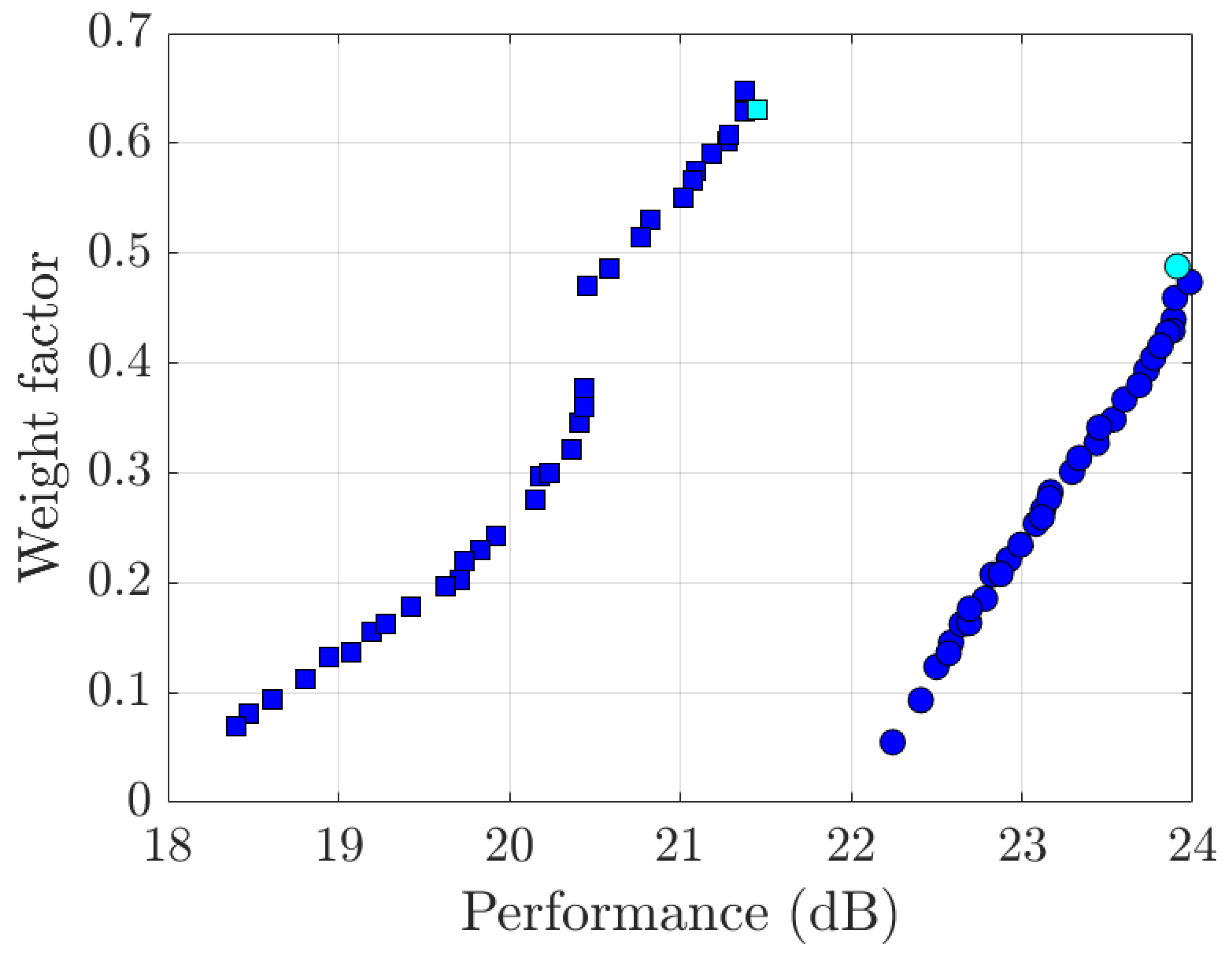

4.4. Bi-Objective Optimization of an RTS

Achieving a minimal weight factor while maximizing the performance of the RTS would be a key leverage to achieve industrial viability of the innovative mufflers.

Figure 10 compares the model-based and data-driven Pareto sets calculated from GA bi-objective optimization of three RTS parameters, assuming the same parameter bounds as in

Section 3.1 and the same GA hyperparameters as in

Table 1. Sole optimization of the RTS total dissipation (cyan markers) corresponds to the highest acoustical performance, but also produces the greatest weight penalty. Concerning model-based optimization, a linear trade-off exists between weight reduction and increase of the acoustic performance. The solutions are non-dominated and well-distributed along the front, offering a broad range of design choices. The leftmost point achieves a lightweight RTS with wider cavities, but with a non-flat dissipation curve between 1.4 and 2.2 kHz due to insufficient visco-thermal losses within the cavities. The rightmost solution is acoustically performant due to thin cavities that maximize the visco-thermal dissipation effect, but that increase the RTS filling fraction and weight.

As seen in

Section 3.1.2 and

Table 2, the mono-objective GA optimization from the BRNN predictor provides acceptable, but still lower acoustical performance (by 2.5 dB) with respect to model-based optimization. This is still the case under bi-objective GA optimization, as shown in

Figure 10, with a data-driven Pareto front downshifted with respect to the model-based Pareto front. Two sets of linear trade-off are obtained along the data-driven Pareto front: one with high acoustical performance (greater than 20.5 dB), but with a significant weight penalty (larger than 46%), and a second one with a lightweight RTS (filling fraction below 38%), but with a lower performance ranging between 18.4 dB and 20.5 dB. This noticeable gap in the central region of the Pareto front suggests that intermediate trade-offs are harder to achieve from the data-driven bi-objective GA optimization of the RTS. Therefore, the model-based bi-objective GA optimization yields a Pareto front that dominates that of the data-driven bi-objective GA optimization, indicating better compromise solutions across the RTS weight and performance objectives.
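The notion of non-dominated solutions used in this comparison can be sketched as a simple filter over candidate designs, where a lower weight factor and a higher acoustic performance are both preferred. The function name and the candidate values are made up for illustration and are not taken from Figure 10.

```python
def pareto_front(designs):
    """Keep the non-dominated (weight, performance) pairs.

    A design is dominated if another one is at least as light and at least
    as performant, and strictly better in one of the two objectives.
    """
    front = []
    for i, (w_i, p_i) in enumerate(designs):
        dominated = any(
            (w_j <= w_i and p_j >= p_i) and (w_j < w_i or p_j > p_i)
            for j, (w_j, p_j) in enumerate(designs) if j != i
        )
        if not dominated:
            front.append((w_i, p_i))
    return sorted(front)

# Made-up (filling fraction, performance in dB) pairs, for illustration only.
candidates = [(0.30, 18.0), (0.35, 19.5), (0.40, 19.0), (0.46, 21.0), (0.50, 20.5)]
front = pareto_front(candidates)
# → [(0.30, 18.0), (0.35, 19.5), (0.46, 21.0)]
```

Applying the same filter to the union of two fronts is one way to check whether one front dominates the other: every point of the dominated front then drops out.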

5. Conclusions

Model-based PSO outperforms other global optimization methods, such as SA, GA, and radial basis function surrogate (SUR) optimization, in maximizing total acoustic dissipation of the RTS, both for a standard 3-parameter configuration and for a 21-parameter design involving variable-width cavities. For the low-dimensional case, while SA, GA, and SUR yield acceptable dissipation due to the flatness of the cost function, they systematically overestimate the weight penalty, reflecting higher sensitivity in that metric. In high-dimensional optimization, PSO again proves superior, efficiently balancing exploration and exploitation so that particles are guided along the curved valley paths of the cost function landscape. Although computationally more efficient, SUR smooths out narrow features and introduces surrogate bias due to insufficient resolution.

BRNNs significantly reduce computational cost compared to the model-based approaches when optimizing the RTS total dissipation in high-dimensional design spaces, whereas the time savings are modest in low-dimensional problems. In low-dimensional cases, direct optimization on the BRNN output performs well, making global optimization unnecessary. However, in high-dimensional spaces, BRNNs require larger training datasets to capture meaningful patterns, as similar cost values may arise from different combinations of the cavity width variables. When trained on limited data, BRNNs tend to over-smooth the input space. However, suitable performance in dissipation, TL, and reflection can still be achieved due to the inherently smooth nature of the cost function. Meta-heuristic optimization with the BRNN predictor produces limited performance in the high-dimensional design space unless the network is trained on a sufficiently large dataset (at least 50× the number of parameters), however at the expense of an increased computational cost.

In low-dimensional optimization of the RTS, both the model-based (PSO, GA, SA) and the data-driven (BRNN-based) methods show good robustness to input errors, with less than 1.2 dB performance loss. However, performance significantly degrades with reduced signal-to-noise ratio (SNR), especially for the model-based methods (up to 18 dB loss). Data-driven approaches exhibit better robustness to output noise due to the generalization capability of the BRNN. In high-dimensional cases, the robustness decreases overall, with performance losses up to 7 dB, as BRNNs may amplify input noise, requiring larger training datasets for reliable prediction.

The model-based bi-objective optimization of the RTS yields a well-distributed Pareto front showing a linear trade-off between weight and acoustic performance, with superior results compared to the data-driven BRNN-based optimization. The latter produces a downshifted Pareto front with a performance gap in the mid-range, indicating difficulty in capturing intermediate trade-offs, and consistently underperforms by up to 2.5 dB in acoustic performance.

The performance limits of the model-based and data-driven global optimization methods have been assessed using datasets generated by the cost-efficient TMM, thus providing guidelines on the most appropriate optimization scheme with tractable CPU times. This choice is strategic when the datasets of the RTS dissipation properties are generated by a computationally demanding visco-thermal finite element model that requires a highly-resolved mesh near the walls in order to capture the visco-thermal dissipation effects. It is anticipated that a direct search of the RTS optimal solution using a BRNN trained on a sufficiently representative dataset will be cost-efficient to provide accurate dissipative properties. It should be accompanied by optimization of the attenuation or reflection properties to find the range of design parameters prone to enhance either dissipative or reactive effects.