Smart Textile Design: A Systematic Review of Materials and Technologies for Textile Interaction and User Experience Evaluation Methods

Abstract

1. Introduction

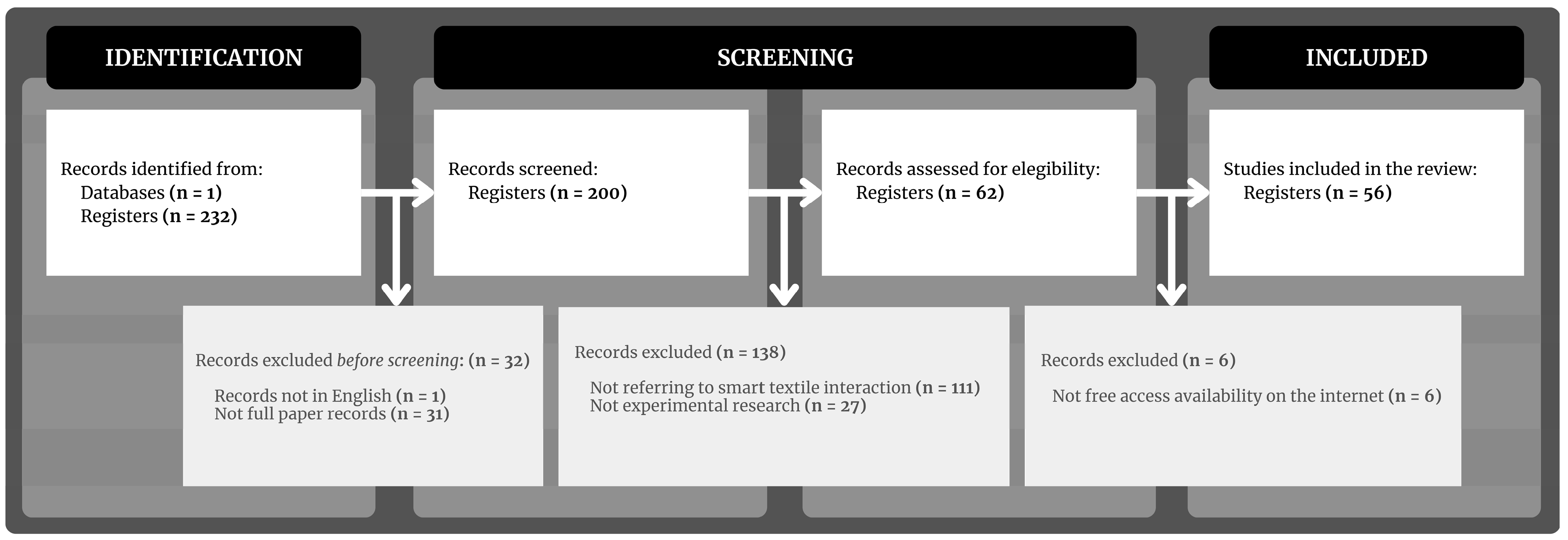

2. Materials and Methods

3. Results

- Single-sensory: audio (P39; P53); visual (P24; P32; P35; P50; P54); touch/haptic (P2; P14; P17; P23; P25; P31; P40; P41); gesture (P21; P55; P56);

- Multisensory (P1; P3; P4; P5; P6; P7; P8; P9; P10; P11; P12; P13; P15; P16; P18; P19; P20; P22; P26; P27; P28; P29; P30; P33; P34; P36; P37; P38; P42; P43; P44; P45; P46; P47; P51; P52);

- Technical performance (P1; P2; P8; P9; P12; P14; P15; P17; P23; P24; P28; P31; P36; P37; P42; P44; P48; P49; P55; P56).

- Feelings and emotions representation (P5; P19; P25; P32);

- Social engagement (P3; P9; P13; P16; P29; P38; P46; P50);

- Emotion and sensory regulation (P4; P26; P29);

- Self- and surrounding-perception alteration (P4; P5; P18; P35; P40; P41; P45);

- Identity and self-expression construction and communication (P51; P53);

- Emotional responses to interactive behavior (P4; P7; P11; P17; P20; P26; P29; P32; P40; P41).

3.1. Materials and Technologies

3.2. UX Evaluation Methods

4. Discussion

- Single and multisensory interaction: Experiences can be designed around individual human senses or for multisensory interaction. Haptic, visual, and auditory feedback enable the sensory interactions commonly explored with smart textiles, and these interactions directly influence emotional metrics such as valence (positive/pleasant or negative/unpleasant), arousal (emotion intensity), and stress level, which can be measured through self-assessment questionnaires or physiological measurements (GSR/EDA). Designers should therefore explore materials and technologies in light of the desired sensory interaction and its effect on UX. For example, technologies such as thermochromic threads, ultra-violet organic light-emitting cells (UV OLECs), and LED feedback systems can create visually dynamic interactions that support emotional engagement. Moreover, single-sensory interaction does not restrict the textile to a single output: vision, for instance, can be addressed through both textile color and light behavior, that is, a single sense with multiple outputs.

- Input and output system: Smart textiles can integrate sensors that detect and collect data from the user(s) and/or the environment, as well as actuators that respond to the input data. The sensed stimuli may be inputs that the user can control to some degree, such as predefined gestures in contactless detection, or uncontrolled data, such as physiological signals. Integrated sensors, such as proximity detectors and embroidered electrodes, allow textile artifacts to acquire signals even under dynamic conditions. On the output side, the actuator response can be direct or programmed, ranging from haptic (vibrotactile) to visual (LEDs, thermochromic threads) and auditory systems. These outputs can give users immediate feedback, enhancing both the functional and emotional dimensions of the interaction. To design effective input–output systems, designers need to understand how the sensing, processing, and actuating components of the smart textile relate to one another, and to explore design possibilities that enable a personalized and relevant UX addressing both functional and emotional user demands.

- UX evaluation: Since material experience extends beyond physical characteristics, it is crucial to assess the UX and the emotions evoked, and to feed the results back into the design process. The projects that reached the UX evaluation phase made valuable contributions to the HCI field, both by informing improvements to the project itself and by serving as references for other projects. Combining qualitative and quantitative UX evaluation methods provides a more comprehensive understanding of the interaction between the user and the textile interface in a specific context.
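The single and multisensory interaction point above refers to valence and arousal gathered through self-assessment questionnaires. As a minimal sketch, assuming Self-Assessment Manikin (SAM) ratings on a 9-point scale where 1 is the unpleasant/calm end and 9 the pleasant/excited end (orientation differs between SAM versions, so check the one actually used), ratings can be centred at zero and averaged per textile condition. The function names and example ratings are hypothetical, and physiological (GSR/EDA) data are not covered here.

```python
from statistics import mean

def center_rating(rating: int, points: int = 9) -> float:
    """Shift a 1..points rating so that the scale midpoint becomes 0 (9-point: -4..+4)."""
    if not 1 <= rating <= points:
        raise ValueError(f"rating must be between 1 and {points}, got {rating}")
    return rating - (points + 1) / 2

def summarize_sam(ratings: list[tuple[int, int]], points: int = 9) -> dict[str, float]:
    """Mean centred valence and arousal for a list of (valence, arousal) pairs."""
    return {
        "valence": mean(center_rating(v, points) for v, _ in ratings),
        "arousal": mean(center_rating(a, points) for _, a in ratings),
    }

def quadrant(valence: float, arousal: float) -> str:
    """Label the affective quadrant; a value of exactly 0 is reported as neutral."""
    def side(x: float, neg: str, pos: str) -> str:
        return "neutral" if x == 0 else (pos if x > 0 else neg)
    return f"{side(valence, 'unpleasant', 'pleasant')}/{side(arousal, 'calm', 'excited')}"

# Hypothetical ratings from three participants for one textile condition.
summary = summarize_sam([(7, 3), (8, 2), (6, 4)])
print(summary, quadrant(summary["valence"], summary["arousal"]))
```

The `points` parameter lets the same helper handle 7-point variants, such as the SAM-based scale used in P17.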
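The input and output system point above distinguishes direct from programmed actuator responses. The sketch below illustrates that distinction in plain Python, assuming a sensor reading already normalised to 0–1 (for instance from a textile proximity or pressure sensor) and hypothetical actuators: a direct response maps the reading continuously to an 8-bit LED level, while a programmed response fires a predefined vibrotactile pattern once the reading crosses a threshold. The thresholds, pattern, and names such as `ProgrammedHaptic` are illustrative assumptions, not values from the reviewed projects.

```python
from dataclasses import dataclass, field

def direct_led_level(reading: float) -> int:
    """Direct response: the LED level follows the normalised reading continuously."""
    clamped = min(max(reading, 0.0), 1.0)  # guard against out-of-range sensor values
    return round(clamped * 255)            # 8-bit PWM duty, 0..255

@dataclass
class ProgrammedHaptic:
    """Programmed response: play a fixed vibration pattern once when the reading
    rises to `on_level`; re-arm only after it falls to `off_level` (hysteresis,
    so a noisy signal hovering near the threshold does not retrigger)."""
    on_level: float = 0.6
    off_level: float = 0.4
    pattern_ms: tuple[int, ...] = (200, 100, 200)  # hypothetical on/off/on durations
    triggered: bool = field(default=False, init=False)

    def update(self, reading: float) -> tuple[int, ...]:
        if not self.triggered and reading >= self.on_level:
            self.triggered = True
            return self.pattern_ms
        if self.triggered and reading <= self.off_level:
            self.triggered = False
        return ()

# Simulated sensor stream standing in for real textile sensor samples.
haptic = ProgrammedHaptic()
for sample in [0.1, 0.5, 0.7, 0.8, 0.3, 0.65]:
    print(f"{sample:.2f} -> LED {direct_led_level(sample)}, haptic {haptic.update(sample)}")
```

Separating the mapping functions from the sensor source keeps the processing layer testable on its own before it is connected to real hardware.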
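The UX evaluation point above recommends combining quantitative and qualitative methods. As one hedged illustration, the System Usability Scale (used, e.g., in P7 in Appendix A) is scored by subtracting 1 from each odd-numbered 1–5 response, subtracting each even-numbered response from 5, and multiplying the sum by 2.5 to obtain a 0–100 score. The sketch pairs that standard scoring with a simple frequency count of coded interview themes; the theme codes and the `mixed_summary` helper are hypothetical, and counting themes is only a starting point for qualitative analysis, not a substitute for it.

```python
from collections import Counter
from statistics import mean

def sus_score(responses: list[int]) -> float:
    """Standard SUS scoring: 10 items rated 1-5, result on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS items are rated from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5

def mixed_summary(sus_sets: list[list[int]], theme_codes: list[str]) -> dict:
    """Hypothetical helper: mean SUS across participants plus theme frequencies
    from coded interview excerpts, ordered from most to least frequent."""
    return {
        "mean_sus": mean(sus_score(s) for s in sus_sets),
        "themes": Counter(theme_codes).most_common(),
    }

report = mixed_summary(
    [[4, 2, 4, 2, 4, 2, 4, 2, 4, 2], [5, 1, 5, 1, 5, 1, 5, 1, 5, 1]],
    ["comfort", "novelty", "comfort"],
)
print(report)
```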

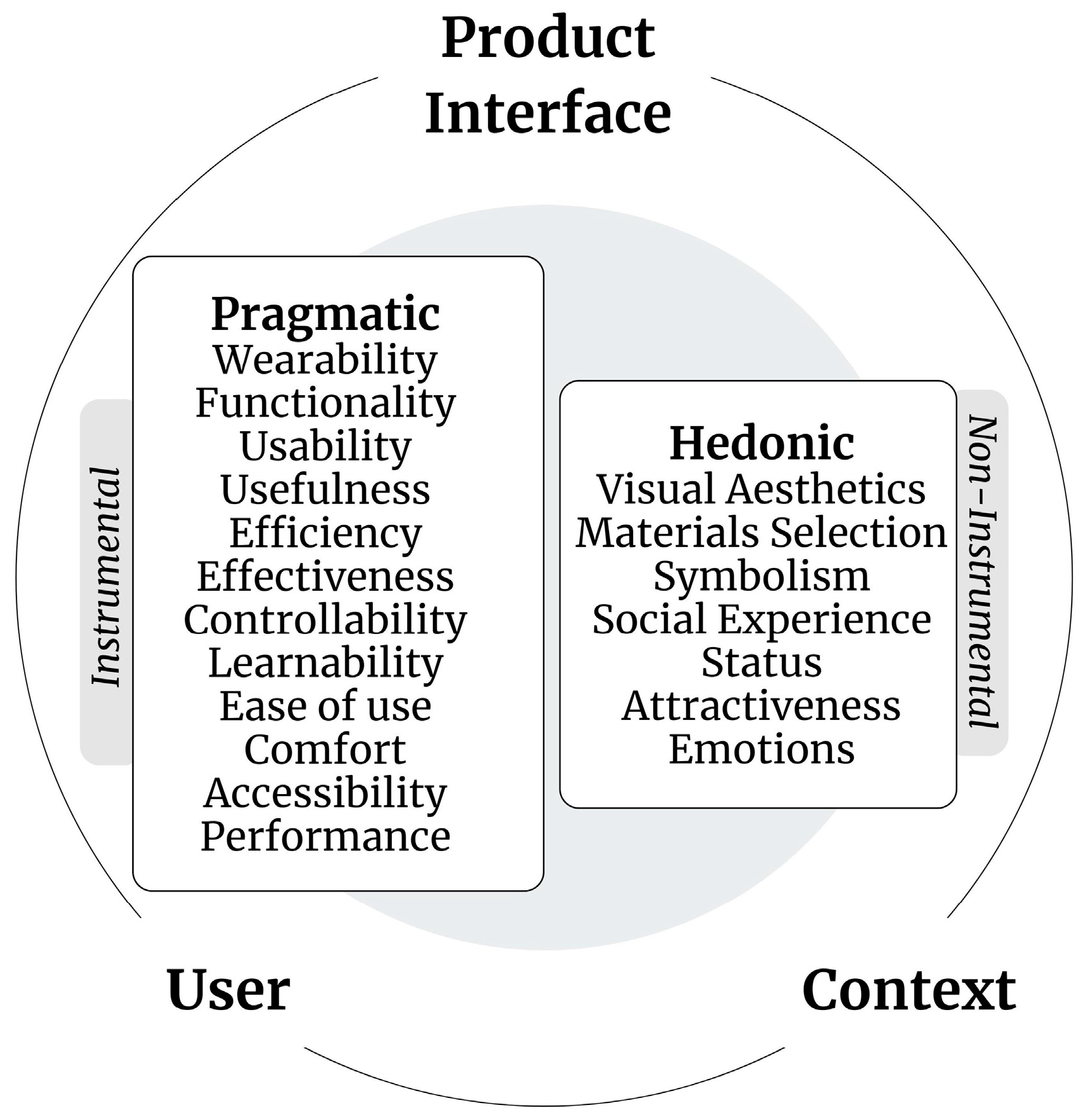

- Pragmatic and hedonic dimensions: The tangible and interactive features of smart textiles, shaped by the selected materials and technologies, affect both usability and the user’s emotional experience. For instance, integrating customizable features and multisensory feedback not only meets practical needs but also invites users to express their creativity and individuality, making the technology feel more personal and less intrusive. To capture these responses, UX evaluation must cover both the pragmatic and hedonic dimensions in a holistic approach. Furthermore, balancing pragmatic and hedonic attributes in both the ideation/prototyping and evaluation phases of the smart textile interaction design process can enhance the overall UX.
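The pragmatic and hedonic dimensions point above calls for evaluating both sides together. A minimal sketch, assuming a short questionnaire in the style of the UEQ-S with 7-point semantic-differential items recoded to −3..+3, averages the items per dimension and reports the gap between them. The item-to-dimension mapping (items 1–4 pragmatic, 5–8 hedonic, none reversed) is an assumption for illustration and should be checked against the official documentation of the instrument actually used.

```python
from statistics import mean

# Assumed mapping (0-based item indices) in the style of the UEQ-S; verify it
# against the documentation of the questionnaire version actually used.
ASSUMED_MAPPING = {"pragmatic": [0, 1, 2, 3], "hedonic": [4, 5, 6, 7]}

def dimension_scores(
    answers: list[int],
    mapping: dict[str, list[int]] = ASSUMED_MAPPING,
    reversed_items: frozenset[int] = frozenset(),
) -> dict[str, float]:
    """Recode 1..7 answers to -3..+3 (flipping reversed items) and average per dimension."""
    scores: dict[str, float] = {}
    for dimension, items in mapping.items():
        values = []
        for i in items:
            a = answers[i]
            if not 1 <= a <= 7:
                raise ValueError(f"item {i} must be rated 1-7, got {a}")
            values.append(4 - a if i in reversed_items else a - 4)
        scores[dimension] = float(mean(values))
    # A large gap flags an imbalance between usability and emotional appeal.
    if "pragmatic" in scores and "hedonic" in scores:
        scores["gap"] = scores["pragmatic"] - scores["hedonic"]
    return scores

# Hypothetical answers from one participant.
scores = dimension_scores([6, 6, 5, 7, 3, 4, 2, 3])
print(scores)
```

With these hypothetical answers the sketch returns a pragmatic score of 2.0, a hedonic score of −1.0, and a gap of 3.0, which would point toward strengthening hedonic attributes in the next prototype iteration.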

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 2DST | Two-dimensional signal transmission |
| AI | Artificial intelligence |
| AR | Augmented reality |
| ASMR | Autonomous Sensory Meridian Response |
| BCTB | Bare Conductive Touch Board |
| BLE | Bluetooth Low Energy |
| BMIS | Brief Mood Introspection Scale |
| CCM | Color change material |
| CEQ | Credibility and Expectancy Questionnaire |
| CMU | Capacitance measurement unit |
| CNTs | Carbon nanotubes |
| CO | Cotton |
| CPU | Central processing unit |
| CRS | Comfort Rating Scale |
| DE | Dielectric elastomer |
| DIY | Do-it-yourself |
| ECG | Electrocardiogram |
| EDA | Electrodermal activity |
| EEG | Electroencephalography |
| EM | Evaluation method |
| ERM | Eccentric rotating mass |
| FER | Facial emotion recognition |
| FES | Functional electrical stimulation |
| GQS | Godspeed Questionnaire Series |
| GSR | Galvanic skin response |
| GUI | Graphical User Interface |
| HCI | Human–computer interaction |
| HRV | Heart rate variability |
| IMI | Intrinsic Motivation Inventory |
| IMU | Inertial measurement unit |
| IoT | Internet of Things |
| IR | Infrared |
| ISO | International Organization for Standardization |
| LED | Light-emitting diode |
| Li-Po | Lithium polymer |
| M&T | Materials and technologies |
| MF | Main finding |
| MUX | Multiplexer |
| PCB | Printed circuit board |
| PDMS | Polydimethylsiloxane |
| PES | Polyester |
| PLA | Polylactic acid |
| PMMA | Polymethyl methacrylate |
| POF | Polymeric optical fiber |
| PSI | Perceived Social Intelligence Survey |
| PVA | Polyvinyl alcohol |
| RFID | Radio Frequency Identification |
| RGO | Reduced graphene oxide |
| SAM | Self-Assessment Manikin |
| SAP | Superabsorbent polymer |
| SBC | Single-board computer |
| SCERTS | Social Communication, Emotional Regulation, and Transactional Support |
| SDS | Semantic Differential Scale |
| SE | Sensory ethnography |
| SMM | Shape memory material |
| SPSS | Statistical Package for the Social Sciences |
| STQ | Social Touch Questionnaire |
| SUS | System Usability Scale |
| TAM | Technology Acceptance Model |
| TENS | Transcutaneous electrical nerve stimulation |
| TiO2/Ag | Silver-doped titanium dioxide |
| TMMS-24 | Trait Meta-Mood Scale |
| TPU | Thermoplastic polyurethane |
| UEQ | User Experience Questionnaire |
| UI | User interaction |
| USB | Universal Serial Bus |
| UTAUT | Unified Theory of Acceptance and Use of Technology |
| UV OLECs | Ultra-violet organic light-emitting cells |
| UX | User experience |
| VI | Visually impaired |
| VOC | Volatile organic compound |
| VR | Virtual reality |
| Wi-Fi | Wireless Fidelity |

Appendix A

| Code Reference | Main Focus | Summary |
|---|---|---|
| P1 [64,65] | Research a multimorphic textile artifact that reacts to water exposure by shrinking and dissolving fibers, designed for performativity to support open-ended interactions and use in multiple contexts in daily life. | M&T: Tea towel woven with PVA water-soluble yarn. The first prototype (AnimaTo V1.0) was single-layered and made with CO and PVA on a manual loom, and the second prototype (AnimaTo V2.0) was triple-layered and made with linen, CO, and PVA on an industrial TC2 digital Jacquard loom. EM: The AnimaTo V1.0 user test covered interactions between the first author and other people in personal contexts, documented through day-by-day photos, written accounts, and observations. No user tests were conducted with AnimaTo V2.0. MF: Following a material-driven design approach, material tinkering was carried out with a textile that reacts to water exposure through the shrinking and dissolving of its fibers, whose multimorphic qualities produced changes in the texture, size, and shape of the prototype. “Insights into how designers can tune material (textile), form, and temporal qualities of textile artefacts across scales towards multiplicity of use, recurring encounters and extended user-artefact relationships” were provided. |
| P2 [65] | Present the development of a flexible smart DE-based sensing array for user control inputs. | M&T: A wearable touchpad containing a silicone-encapsulated 3 × 3 DE pressure-sensor array with sputtered metal electrodes, connected by crimping (crimp connectors from Nicomatic and conductive adhesive fleece from Imbut GmbH) to custom electronics (multiplexers, a capacitance measurement unit, a microcontroller, and a power supply with a battery charging unit), which are implemented on a standard PCB in the presented prototype. EM: No UX tests performed. MF: The miniaturized, flexible, and self-standing sensing system demonstrated a reliable and safe electrical connection. The paper emphasizes the application potential of a highly integrated textile-based sensor system and its ability to offer an intuitive and unobtrusive UX for ongoing interactions. The authors suggest exploiting the actuation capability of DE systems to provide tactile and audio-tactile feedback and thereby enhance user interaction (UI). |
| P3 [66] | Present a modular play kit that connects children to nature through embodied play. | M&T: Modular play kit composed of a round textile carpet with detachable felt leaves, interaction cards, and wireless electronic modules attached to the textile structures with elastic bands and dedicated pockets. The presented prototype used SAM Labs modules that provide interactive features such as light, sound, and vibration, connected to each other and to a controller via Bluetooth. EM: No UX tests. However, prototype evaluation is proposed as future work, with children of different ages and backgrounds engaging with the play kit in various contexts. MF: It was presented as “a design example that connects open-ended play experiences with more-than-human perspectives”, focusing on “meaningful interactions with/for children engaging with non-humans and nature”. The project combined participatory and speculative design approaches, by including children and by empathizing with trees, respectively, in the design process. Through the shapes and functions of the materials designed for the modules, children can collaboratively create the story of a tree and its surroundings throughout the four seasons and learn together through collective interactions. The optional use of detachable electronic modules “allow the users to enrich the played scenario with interactive features”, letting them combine a variety of sensors (e.g., buttons, light, movement sensors) and actuators (e.g., sound, vibration, light) to create the desired scenarios. “To accommodate children of various developmental levels and knowledge backgrounds, Treesense provides three different difficulty levels for exploration”, which vary in their use of electronic modules. |
| P4 [67] | Explore how design choices and psychological values influence users’ emotions through a soft-robotic shape-changing textile installation designed for mindful emotion regulation. | M&T: Multilayered central ball with inflatable tubes, composed of two input–output systems: (1) a pulse sensor capturing the user’s real-time heart rate signals via conductive wires, which drives the “coral’s” illuminating color and volume changes through LEDs and an air control system (an air pump and a solenoid valve), respectively; and (2) touch sensors made of Velostat conductive fabric, as illustrated in previous work [68], triggering shape-changing movements of the inflatable tubes through a pneumatic actuation system (not described). EM: 55 participants (25 men and 30 women, aged 25–55) were assessed before, during, and after the interaction through: closed-ended questionnaires, namely (1) a background demographic questionnaire, (2) the TMMS-24, (3) the SAM, (4) the BMIS, (5) a 14-item adapted version of the STQ, and adapted versions of (6) the GQS and (7) the PSI, both limited to the dimensions relevant to the study; physiological measures, namely (8) facial expressions video-recorded by a mobile phone and analyzed with Morph Cast software; and (9) the think-aloud method. MF: A design and evaluation framework for the prototype was developed, and the results indicated that the study’s goals were achieved. The prototype was perceived as animated, likable, interesting, safe, and socially and emotionally intelligent, evoking positive emotions in a somaesthetic experience considered pleasant, calm, positive, and relaxed. Individual differences in human–robot interaction were shown to influence the interactive experience. Five prominent themes, generated from the verbal reports during the experience, were analyzed with respect to the somaesthetic experience and its relationships with the applied materials and interactive behavior. Guidelines were proposed for further studies regarding design (e.g., adding more sensors, exploring other textile shapes and properties, the materiality and morphology of the soft robot, material behavior and haptic action, and forms of customization/personalization) and user testing (e.g., exploring advanced AI models for emotion recognition, applying pre-interaction assessment, and combining accurate physiological sensors for interaction and for evaluation). |
| P5 [58] | Present the collaborative design process of an interactive textile musical instrument that expresses the experience of chronic pain. | M&T: Wearable artifact consisting of a large centerpiece joined with five interactive arms. The centerpiece is made of a felted PES–wool blend fabric and contains a Bela Mini SBC (with a multichannel expander and a battery power bank), which is linked through a mono jack cable to five piezoelectric contact microphones acting as sound and touch sensors (one per arm), which are in turn coupled with an envelope follower and synthesizers. For the output, the SBC can send signals to headphones through a stereo minijack cable or be connected to speakers through a wireless transmitter. EM: Self-reporting of an interactive dance performance with the prototype. Audience experience was not evaluated. MF: Contributions of the paper include (1) a description of the design process based on autobiographical somatic exploration and collaborative material exploration; (2) technical specifications of a new textile-embodied interface; and (3) reflections on the impact of interdisciplinary collaboration on the artifact design and its use in performance. Treating the arms as modules, each with its own interaction features, enabled a dialogue between textile and sound design materials and processes. “Sound designs have a complementary function to touch, inviting or discouraging certain ways of interacting with the artifact”. Assessing the audience’s experience of the performance, including research on interpretability through interviews, was planned as further study. |
| P6 [69] | Explore how to combine capacitive sensors with the tactile qualities and storytelling possibilities of handmade woven tapestries to design an interactive, media-immersed art installation that expresses the negative impact of noise pollution on marine ecosystems. | M&T: For tests: a BCTB microcontroller (with capacitive electrodes) integrated with copper tape, a metal clip, conductive thread (all from DFRobot), and conductive wool (dyed with Bare Conductive electric paint diluted in water-based ink). Woven samples with conductive threads and felt made of wool and conductive fiber were produced. For the final prototype: a woven textile structure joined with textile pieces made of conductive threads and felt acting as sensors, with two magnets on the back, connected to the microcontroller. EM: No UX tests performed. However, the paper presents a detailed overview of the user journey. MF: The paper emphasizes the importance of iterative prototyping, carried out in four cycles: (1) the overall design; (2) weaving techniques, textures, and smart materials; (3) narrative progression; and (4) user interaction, focusing on active participation. Combining conductive materials with woven samples, as well as with other textile techniques, was essential to test their conductivity in different structures. All materials were tested successfully except the electric paint, which did not achieve the desired conductivity. “Placing interaction areas as far apart as possible for the final piece design is crucial to prevent sensing issues”. Although audio media were used to communicate the narrative and provide cues about the interaction, the sound actuators are not described. Future explorations include assessing the UX through an in situ deployment study. |
| P7 [70] 1 | Report the usability evaluation of an illuminative contactless textile with gesture recognition based on computer vision. | M&T: Gesture-controlled illuminative textile system consisting of POF fabric and computer vision via an integrated camera and a minicomputer, described in previous work [18]. EM: (1) Observation of 25 participants performing seven hand number-gesture tasks, each timed with a stopwatch upon completion, in an enclosed room with subdued lighting. (2) Closed-ended questionnaires: a 10-point Likert-type learnability scale, a 6-point UEQ, and a 5-point SUS. MF: Participants enjoyed using natural number gestures to trigger color changes and reacted positively to the prototype’s attractiveness, perspicuity, and stimulation, despite lower efficiency ratings. Future research should involve left-handed gestures, more participants, and interviews for qualitative feedback. For further prototype development, the authors intend to explore the feasibility of the product and investigate alternative technologies to improve response stability. |
| P8 [71] | Explore the feasibility and design possibilities of integrating knit structures and pneumatic actuators in soft robots to enhance wearable user experiences. | M&T: Knit sleeves made of nylon and elastane yarns with inserted pneumatic actuators made of Ecoflex™ 00-10 silicone gel, cast in molds 3D-printed in polylactic acid (PLA). EM: No UX tests. MF: Knit structure, tension, and needle layout influence the inflation and deformation of the pneumatic actuators. “Practical solutions for integrating pneumatic actuators seamlessly into wearable textiles, thereby unlocking new possibilities for human-centered robotic systems” were provided. Other knitting techniques with different yarn properties should be considered in further exploration, as well as quantitative research methods to evaluate technical performance and the integration of the pneumatic actuators into a digital system. Although the paper states the aim of enhancing wearability, usability tests were not conducted and are left for future studies. |
| P9 [72] | “Explore the interplay between interactive textiles, dual-reality immersive technologies, and social engagement in both physical-digital architectural space”. | M&T: Conical structure coupled with modular textile panels knitted with silver conductive yarns (3 × 210D, from Weiwei Line Industry). Each panel embeds two antennas, which serve as sensors by “monitoring variations and distribution of the e-field” to detect body movements and locations, and is connected to a Teensy 3.1 microcontroller that sends signals to a computer. The computer is connected to a 4-channel distributed speaker system (playing AI-generated music and linked to an ambisonic spatialization module) and a robotic lighting system (a rotating-head light module composed of RGB beam lights and a motor) at the central meeting point, where a stainless-steel ornament also works as a field transmitter. The VR system was developed in the Unity environment, which provides a real-time, connectionless data transmission method. EM: Preliminary observation of UI with the installation in exhibitions. MF: The sensors allow users to be accurately located within the installation, so that they control sound characteristics and volume across panels for an immersive experience. Users could “control the movement and intensity of the lights as they walk (green), dance (blue), and interact (movement of the light) within the space and each fabric panel of the installation”. Future work foresees expanding both the physical and digital dimensions of the installation and exploring more in-depth interactions and UX. |
| P10 [73] | “Explore the relationship between gestural input and the output of a deformable interface for multi-gestural music interaction” from the user’s perspective. | M&T: A deformable TPU fabric interface with an array of five vertical lines, each with two circles at the end, inkjet-printed with Sicrys™ I60PM-116 conductive ink (based on single-crystal silver nanoparticles) and connected to an Arduino UNO microcontroller through crocodile clips and conductive wire. For UX tests, the prototype was connected to a laptop with a headset. EM: (1) Exploratory workshop. (2) Observation of user engagement with the probe, which recognizes four different gestures to control six sound-effect variations. The individual sessions with 12 participants were audio-recorded and included quantitative measures, in which participants (a) matched sound effects to gesture inputs and (b) indicated their confidence level on a 5-point scale, followed by (c) open-ended interviews regarding their choices. MF: The interplay between gestures and sound as input–output has significant effects on how people interact with the system. The TPU fabric was shown to elicit feelings toward sound and to evoke diverse, identifiable gestures; it enhanced the sensory experience by combining touch and hearing, was viewed by participants as attractive, easy, intuitive, playable, and memorable, and enabled the mapping of richer and more diverse sound parameters than rigid input methods. Limitations include the immaturity of printed sensor technology. Further studies include the use of (1) other deformable materials, (2) their combination to create more complex gestural interaction, (3) richer sound parameter control, (4) machine learning and other sensing technologies, and (5) deformable flat interfaces in other domains. |
| P11 [74] | Examine how auditory manipulation influences the user’s perception of digital fabric textures rendered through contactless mid-air haptic stimuli. | M&T: (1) Nine fabric samples and a mid-air haptic device (providing contactless haptic feedback) connected to a computer for single-sensory (touch) interaction. (2) One fabric sample and a mid-air haptic device connected to a computer with Sennheiser 400 s headphones for multisensory (touch and hearing) interaction. EM: (1) Closed-ended questionnaire (4 items: 3 × 7-point and 1 × 9-point Likert scale) with 37 participants regarding single-sense (touch) feedback. (2) Closed-ended questionnaire (3 items: 2 × 0–100 scale and 1 × 9-point Likert scale) and semi-structured interview with 18 participants assessing multisensory (touch and hearing) feedback. MF: Audio-tactile experiences with mid-air haptic textures modify touch perception and elicit a broader spectrum of emotional reactions, leading to enhanced experiences that can be deeply immersive and captivating for users. In the single-sensory evaluation, it was crucial to avoid influences from other senses; in the haptic test, for example, participants wore noise-canceling headphones. |
| P12 [75] | Introduce the “multiscale interaction” concept to facilitate the “enhanced delivery of information via textile-based haptic modules” through research on the depth and detail of users’ haptic experiences, considering the choice between body-scale and hand-scale interactions. | M&T: Two multiscale haptic textile bands (8- and 4-channel) whose pneumatic channels are each linked to an electronic solenoid valve (SYJ314M-6LOZ, from SMC Pneumatics), with air supplied by an air compressor tank (9461K21, from McMaster-Carr) through a pressure regulator (8083T1, from McMaster-Carr) and controlled by a computer. EM: Observation of three experiments conducted with 16 participants, evaluating interaction efficacy through identification tasks in a Graphical User Interface (GUI). MF: The multiscale paradigm matches the accuracy of body-scale and hand-scale interactions, giving users flexibility in their interaction preferences. To assess haptic interaction alone, users were prevented from receiving visual and auditory feedback during testing. Limitations included the encoding of information, the scalability of the paradigm, and minimal coverage. Future studies on fully textile-based haptic wearables are suggested. |
| P13 [56] | Present the design of a kinesthetic garment that transforms somaesthetic singing experiences into haptic gestures for intersubjective experiences, evaluated through a micro-phenomenological approach. | M&T: A corset made of six haptic textile modules with geometrically embroidered OmniFibers [48] for fluidic pneumatic haptic actuation, connected via polyurethane tubes to three FlowIO pneumatic controllers coupled with a pressure regulator and a compressed-air tank. The software stack ran on a laptop acting as the central controller, which transduced audio, pressure, and strain inputs via machine learning and was connected to each FlowIO controller through USB cables. EM: (1) Preliminary UX observation and think-aloud experiment with a singer. (2) Two sessions with 3-person audiences wearing the prototype (the first with HCI researchers and the second with musicians) during live performances, each followed by micro-phenomenological interviews. MF: By bridging internal and external bodily sensations, the prototype was found to dissolve the barriers between the singer, audience, and performance and to create a sense of shared intercorporeal experience. The interview results were thematically analyzed, generating 5 themes related to the somatic intra- and intersubjective UX: (1) somatic materialization of voices; (2) narrative dialogues and kinaesthetic sharing; (3) dissolving boundaries; (4) suspension of time and space; and (5) wearing the performance. These findings led to design contributions, which included “Designing for Intersubjective Experience”, introducing the concept of “intersubjective haptics”, and “A Somatically Grounded Design Process”. |
| P14 [76] | Introduce a design and fabrication pipeline “to integrate passive force feedback and binary sensing into fabrics via digital machine knitting.” | M&T: Textile unit with patches made of plated stainless-steel ferromagnetic yarns (from Filix). As a counterpart unit, the fabric has a split pocket made of PES (for passive haptic feedback) and conductive yarn (for binary sensing) containing a permanent magnet (Neodymium N52, from K&J). Except for the magnet, all items are fabricated on an industrial digital knitting machine. EM: Preliminary user studies involved observing (1) an identification task, with 8 participants using a glove containing the prototype, and (2) timed task completion, with 6 participants wearing a watch incorporating the prototype. MF: The sensing mechanism combined with passive force feedback enables interface designs with input and output functions. Participants demonstrated high accuracy in force feedback discrimination and rotation tasks, validating the prototype’s effectiveness. Contributions: (1) six passive haptic interaction designs; (2) parametric design templates; (3) material and fabrication process specifications; and (4) potential application scenarios and artifacts. Future research: (1) integration of programmable magnetic materials into fibers or textiles; and (2) user studies to validate users’ perception during interactions with various designs. |
| P15 [77] | Study heat- and movement-sensitive sonic textiles in order to promote users’ awareness of heat exchanges and enhance their felt experience of warmth. | M&T: A blanket and a sweater/wearable blanket made of self-fabricated wool textiles, each containing, on the inside, two piezoelectric microphones (as sound and touch/movement sensors) with an envelope follower and an LM35 temperature sensor, all connected to a Bela Mini SBC powered by a 3.7 V Li-Po battery (charged through a USB charger). For the output, fabric speakers composed of a copper-wire coil, plastified paper, a magnet, and an amplifier powered by a 9 V battery were built on the textile surface. Crochet patches covered the electronic components, which were insulated by a layer of synthetic fabric. EM: (1) Quantitative listening test, carried out with 30 participants on the PsyToolkit online platform, to compare sound models through a rating scale and validate the warmth sonification metaphor. (2) Qualitative physical UX test in which 10 participants wore the prototypes, which contained only the best-rated sound model from the listening test (5 participants tested each prototype in audio- and video-recorded individual sessions), followed by think-aloud methods and semi-structured interviews to understand how users relate to the prototypes. The first phase of the UX test was conducted indoors, and the second after a walk outdoors in wintertime, to evaluate different thermal conditions and (un)controlled sonic space. MF: Adding fabric speakers made it possible to reduce the bulkiness of hard components on the textiles and enhance users’ comfort. The sweater/wearable blanket shape gave the designer more control over the experience, whereas the UX with the blanket shape is “much more open since it can be used in very different ways and the position of sensors and speakers is unpredictable”. This influenced how users made sense of the relationship between inputs and outputs. “The distinction between heat and movement-sensitive aspects of the sound models was influenced by the different time-scales in which the temperature sensor and the piezo disks operate”, with the former being slower and the latter faster. The entanglement of multimodal sensing influenced users’ perception of the prototypes’ function. Users’ emotional reactions were also gathered, such as the positive effect of gaining self-awareness of body temperature. |
| P16 [60] | Examine how sensorial textile-based spatial objects, along with embedded technology, can be incorporated into dementia long-term care settings through a collaborative design approach. | M&T: Three interactive sensory spatial textile objects: (1) an armchair with press sensors (buttons and switches) connected to an Arduino UNO microcontroller that sends output signals to an aroma diffuser, a massage vibrator, a fan, and a speaker with an amplifier; (2) a wall picture frame with three ultrasonic proximity sensors linked to an Arduino Mega microcontroller that activates an LED cluster through an LED driver; and (3) a portable handrail cover integrated with three pushbuttons (as press sensors) connected to an Arduino UNO microcontroller that wirelessly sends signals, through RF transmitter and receiver modules, to a speaker with an amplifier. EM: No user tests conducted. MF: The prototypes were considered accessible, ergonomic, and safe, demonstrating the potential to transform dementia long-term care settings into comforting, playful environments that elicit narratives and communication and enhance well-being. Sensory ethnography (SE) is planned for later UX evaluation through observation, notes, video, and semi-structured interviews. |
| P17 [78] | Investigate how different textile materials on wristbands may influence the affective experience elicited by stroking and squeezing tactile feedback. | M&T: A wrist-worn haptic device that allows easy replacement of different textile materials. It is composed of an actuating unit (two servo motors individually linked through connectors to two cylindrical cores) mounted in a 3D-printed two-layer frame. The frame also holds four strain gauge load cells as pressure sensors, connected to an HX711 load-cell amplifier to modulate the pressure applied to the skin. A microcontroller connected to both servo motors receives signals from a computer through two SN74LS241N octal buffers via USB. Two elastic conventional PES bands fasten the device onto users’ wrists. EM: Two user experiments evaluated valence and arousal ratings for the two investigated feedback types, (1) stroking and (2) squeezing. Each test was subdivided into (1.a and 2.a) evaluation with a motionless prototype and each of the 5 fabrics and (1.b and 2.b) evaluation under parameterized stimulation of the prototype. Both used a 7-point Likert emotion rating scale based on the SAM and the SDS, followed by a semi-structured interview. Fifteen different participants took part in each test. MF: Five design considerations were proposed for touch and compression feedback on the wrist mediated by different textile materials. They include valence–arousal maps as affective design references, individual differences as a basis for personalized affective feedback, the correlation between the valence produced by the interaction and the comfort of the textile material, and emotional experience predominantly activated by movement factors. Limitations and future work include the exploration of (1) a multisensory interaction approach; (2) UX evaluation in diverse interaction scenarios; (3) hardware miniaturization; and (4) mechanical or chemical changes to the textile wristbands. |

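The valence–arousal maps proposed in P17 can be approximated by averaging each fabric's ratings and placing the means around the scale midpoint. The following Python sketch assumes 1–7 ratings centered on 4. The fabric names and ratings in the demo are hypothetical, and the aggregation is an illustration rather than the authors' analysis.

```python
from collections import defaultdict
from statistics import mean


def valence_arousal_map(ratings, midpoint=4):
    """Average 1-7 valence/arousal ratings per fabric, centred on the scale midpoint.

    ratings: iterable of (fabric, valence, arousal) tuples.
    Returns {fabric: (mean_valence, mean_arousal)}, where 0 means "neutral".
    """
    grouped = defaultdict(list)
    for fabric, valence, arousal in ratings:
        if not (1 <= valence <= 7 and 1 <= arousal <= 7):
            raise ValueError(f"rating out of 1-7 range for {fabric!r}")
        grouped[fabric].append((valence - midpoint, arousal - midpoint))
    return {
        fabric: (mean(v for v, _ in pairs), mean(a for _, a in pairs))
        for fabric, pairs in grouped.items()
    }


def quadrant(valence, arousal):
    """Label a centred point by quadrant (zero is counted as positive by convention)."""
    v = "pleasant" if valence >= 0 else "unpleasant"
    a = "high-arousal" if arousal >= 0 else "low-arousal"
    return f"{v}/{a}"


if __name__ == "__main__":
    # Hypothetical ratings for two hypothetical fabrics.
    demo = [("velvet", 6, 3), ("velvet", 7, 4), ("mesh", 2, 5)]
    for fabric, (v, a) in valence_arousal_map(demo).items():
        print(fabric, v, a, quadrant(v, a))
```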
| P18 [79] | Explore the intersection between wearable technology and environmental awareness by using haptic feedback to communicate a plant’s comfort level to the user. | M&T: A line of wearables that respond to atmospheric conditions collected by Airspec smart eyeglasses, whose SHT45 humidity and temperature sensors send data in real time via Bluetooth to a laptop. The wearables are controlled through FlowIO, whose pneumatic ports provide haptic feedback (shrinking and pushing) via tubular and circular inflatable silicone layers inserted between two non-elastic textile layers in an arm warmer and a neck warmer. EM: No UX tests. However, observation of interaction scenarios to evaluate the prototype’s functionality is planned. MF: The proposed wearables effectively communicate environmental conditions. The successful combination of sensors and actuators may allow a seamless user experience, promoting a deeper connection with nature through embodied interactions. |

| P19 [80] | Discuss how methodological tools can represent felt pain experience for somaesthetic interactions through a textile extension. | M&T: A soma extension made of conventional textiles. Its interactive properties were created via an Arduino microcontroller with a movement sensor module (MPU-6050) and an embedded music shield connected to headphones for sound feedback. EM: Video-recorded first-person (author) prototype tests, second-person (participant) testing of the prototype, self-interviews and in-depth semi-structured interviews, body map drawing, and data analysis. MF: It is important to combine visual, verbal, and textual tools to represent felt experience in interaction design. |

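P19 uses an MPU-6050 movement sensor to drive sound feedback. As a hedged illustration, the Python sketch below converts raw accelerometer counts to g using the sensor's sensitivity at its default ±2 g range (16384 LSB/g). It then removes gravity by subtracting 1 g from the acceleration magnitude and maps the remainder to a 0–1 playback volume. The volume mapping and its full-scale value are assumptions, because the source does not describe how movement was sonified.

```python
import math

# MPU-6050 accelerometer sensitivity at its default +/-2 g full-scale range.
LSB_PER_G = 16384.0


def raw_to_g(raw: int) -> float:
    """Convert a raw signed 16-bit accelerometer count to g."""
    return raw / LSB_PER_G


def movement_to_volume(ax_raw: int, ay_raw: int, az_raw: int, full_scale_g: float = 1.0) -> float:
    """Map dynamic acceleration to a 0-1 volume (hypothetical mapping).

    At rest the acceleration magnitude is about 1 g (gravity), so the
    deviation from 1 g is used as a simple movement-intensity measure.
    """
    ax, ay, az = (raw_to_g(v) for v in (ax_raw, ay_raw, az_raw))
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    dynamic_g = abs(magnitude - 1.0)
    return min(dynamic_g / full_scale_g, 1.0)
```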
| P20 [16] | Research gesture affordances when interacting with textile interfaces of different textures, and the respective emotional user experiences under four feedback modes. | M&T: Five interfaces with different textures obtained through fabric manipulation techniques on a scuba knit fabric. They integrate Adafruit stainless-steel conductive threads, Adafruit conductive silver fiber net fabric, pressure-sensitive fabric (Adafruit Velostat), LEDs, an MP3 module, a speaker, and an Arduino Mega microcontroller with a connected DIP switch, a read–write module (CH376S), and a TF memory card. EM: Video-recorded observation; a semi-structured interview; emotional valence, arousal, and stress–relaxation level assessed with a Likert-type questionnaire; HRV and GSR measurements; and audio-recorded user feedback. MF: The feedback modes (haptic, visual, audio, and combined visual and audio) had a more significant impact on emotional valence, arousal, and GSR. In contrast, stress–relaxation level and HRV were influenced by both the feedback modes and the textile-afforded gestures. Seven guidelines were proposed for “the design of textile interfaces for emotional interaction”. |

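P20 collects HRV, but the summary above does not specify which HRV index was computed. One common time-domain index is RMSSD, the root mean square of successive differences between RR intervals. The Python sketch below computes RMSSD and a mean heart rate. It is offered only as an example of how such data could be summarized, not as the metric used in the study.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("at least two RR intervals are required")
    diffs = [later - earlier for earlier, later in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def mean_heart_rate_bpm(rr_intervals_ms):
    """Average heart rate implied by the RR intervals (60000 ms per minute)."""
    return 60000.0 / (sum(rr_intervals_ms) / len(rr_intervals_ms))
```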
| P21 [81] | Discuss the integration of electronic textile (e-textile) sensors that enable hand interaction in car interiors to assist drivers and empower backseat passengers. | M&T: Two study prototypes developed as a proof of concept of an e-textile pressure-sensing system. They use conventional fabric and Adafruit silver-based knit Jersey conductive fabric, connected via soldered Karl Grimm silver-plated conductive threads stitched to a LilyPad microcontroller board with a Bluetooth module (Adafruit Bluefruit LE UART Friend). EM: No UX evaluation performed. MF: The authors noted the need for research on the UI and perception of tactile e-textile applications. Possible actuation targets were discussed: media player, windows, air conditioning, and visual feedback with thermochromic threads or LEDs/UV OLECs. |

| P22 [18] 1 | Design an interactive illuminating textile with a contactless number-gesture recognition function controlled by computer vision via an open-source AI model. | M&T: A striped double-layer woven Jacquard made of PES and PMMA POF, integrated with a single micro camera and an FPC interconnector connected to a Raspberry Pi 4B SBC. The SBC uses an open-source AI model (Baidu AI Cloud) for deep-learning gesture recognition, which is hosted on a cloud computing server and accessed via Wi-Fi. The SBC is linked to a 5 V power bank and to a drive circuit that connects RGB LEDs coupled with POF bundles. EM: Interaction observation in an indoor environment. UX tests for user satisfaction feedback are recommended for future studies. MF: Gesture-controlled contactless detection offers a novel input approach for interactive textiles, with potential for further research on designing “multi-sensory environments and smart wearables”. The open-source AI model provides continuous interaction between the physical textile and intangible technology, and its application in the early design process minimizes labor and cost. The highlighted limitations are the bulkiness of system components, the directionality of the camera, internet signal strength (weak signals cause detection delays and failures), and the exclusion of users with functional hand disabilities. |

| P23 [82] | Demonstrate a 3D textile electrode containing a water-retaining material for bioelectrical signal acquisition with low skin impedance. | M&T: A 3D textile electrode made of CO yarns dyed with a conductive ink containing a mixture of RGO, silk sericin, and SAP, fabricated by the towel embroidery technique. EM: EMG and ECG measurements as demonstrations of potential clinical applications, and ambulatory ECG monitoring for HRV on volunteers performing running/walking exercises. The prototype itself is used as test equipment for usability evaluation. MF: The proposed electrodes achieved high-fidelity signal acquisition during running and under sweating conditions. Because the embroidered integration technique is adaptable, the electrodes may be embedded into everyday clothing, illustrating their customizability. |

| P24 [83] | Explore destaining as a design tool for interactive systems by analyzing the relation between textile, stain, and light and studying a set of design parameters. | M&T: Four fabrics (100% CO, 100% PES, a 65% PES/35% CO blend, and 100% nylon) coated with TiO2/Ag nanoparticles by different methods. Light interaction with stained organic matter was studied in two ways. Passive interaction used sunlight exposure. Controlled interaction used Adafruit LEDs embedded into the fabrics and connected with conductive thread to an Adafruit Metro board and a 3.3 V battery in a holder with a switch. The conductive threads also acted as moisture sensors. EM: Observation of color and pattern change. UX evaluation was not performed. MF: Destaining is proposed as a creative tool for designing interactive textiles that allow users to personalize them or record memories and experiences within them. Design parameters for creating destaining textiles were classified, and potential applications were presented, namely a proof of concept of self-cleaning clothing. |

| P25 [14] | Explore the embodiment of negative feelings and emotions related to remote relationships in textile wearable artifacts. | M&T: Two pairs of wearable artifacts: (1) two vests made of conventional textiles with servo motors, conductive threads, and RFID sensors and tags placed on the chest; (2) a collar made of large draped cushions and a quilted fabric half-vest, both embedded with heat pads. The artifacts within each pair are connected to each other via Wi-Fi. Additional electronics (not specified) are hidden on the artifacts’ backside. EM: Observation of prototype use by the authors and colleagues to collect feedback. MF: As “discussion artifacts”, both wearables prompted reflections on embodied and experienceable negative emotions related to living apart. The visual interplay of the materials and components proved to be part of the experience, which became two-fold: the individual sensation of wearing and the shared experience of looking. Not explaining the artifacts’ behaviors to participants in detail allowed the researchers to collect deliberate responses without drawing attention away from focal points. Future work is suggested on how to extend interactivity when the wearers are apart. |

| P26 [15] | Examine the potential for emotion regulation via movement-based interaction assisted by smart textiles and identify important elements that affect their design. | M&T: A t-shirt with flexion/extension and movement sensors made of silver-based knitted elastic, two conductive fabrics, and conductive thread. Three feedback mechanisms were implemented: four LEDs of different colors and brightness for visual feedback, four motors for vibrotactile feedback, and an Arduino BLE Nano microcontroller that communicates with a mobile app via Bluetooth for audio feedback. EM: SAM and CRS questionnaires (CRS on a 0–20 range), a facial emotion capture app (AffdexMe), and an interview. MF: Audio and vibrotactile feedback encouraged emotional engagement more effectively. Interactive textile interfaces may lessen the obtrusiveness of wearing electronic equipment, increasing user acceptability. Five design guidelines were suggested for movement-based interactions for emotion regulation. |

| P27 [84] | Explore interaction possibilities of textiles with enhanced sensing capabilities through textile textures and sonic outputs. | M&T: Seven conductive textile samples were used as proximity and touch sensors. They were developed using in situ polymerization (2), embroidery with Madeira 40 silver-plated conductive yarn (1), knitting with the same yarn (1), sewn lines of Amman SilverTech 120 silver-coated conductive thread (1), LessEMF copper wire mesh fabric (1), and Sefar woven fabric of carbonized yarn (1). For sound output, the samples were connected to a Cypress PSoC4 microcontroller sending data to computer software. EM: Observation of, and discussion about, visitors’ interaction with an installation. MF: Visitors could act as creators of sonic landscapes. The textile installation provided an immersive and rich experience and showed that “sounds were inseparably intertwined with the textures and tactile sensations”. Future work is suggested on an even more immersive structure that would allow visitors to interact with other parts of their bodies. |

| P28 [85] | Discuss and evaluate the design and fabrication of digitally embroidered soft textile speakers and explore potential applications with maker-users to expand audio and haptic feedback on textile interfaces. | M&T: A digitally embroidered pattern made with Karl Grimm copper and silver-plated conductive threads and applied to a conventional surface (fabric or leather) with a magnet underneath. It is connected through an audio cable to a miniature 4–8 Ω amplifier and a digital media device and is powered by a 3.7 V Li-Po battery. Microcontrollers and 5 V DC power adapters are suggested as optional components. EM: Autobiographical design with inexperienced users. MF: The do-it-yourself (DIY) process developed and the design parameters evaluated can help makers integrate sound and haptic vibration into soft objects through additive and constructive methods. Seven technical and design parameters were presented. The prototypes demonstrate how interactive capabilities can be embedded into everyday textiles. |

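The embroidered speakers in P28 are driven by a miniature 4–8 Ω amplifier, so one practical check is whether an embroidered coil's resistance falls in that range. The Python sketch below approximates a flat spiral coil's length as the number of turns times its mean circumference, π(r_in + r_out), and multiplies that length by the thread's resistance per meter. It estimates DC resistance only and ignores the coil's inductance. The coil geometry and the 5 Ω/m thread value are hypothetical. Real conductive threads vary widely and should be measured.

```python
import math


def spiral_length_m(inner_radius_m: float, outer_radius_m: float, turns: int) -> float:
    """Approximate a flat spiral coil as `turns` circles of mean radius."""
    return turns * math.pi * (inner_radius_m + outer_radius_m)


def coil_resistance_ohm(inner_radius_m, outer_radius_m, turns, thread_ohm_per_m):
    """DC resistance of the embroidered coil from the thread's resistance per meter."""
    return spiral_length_m(inner_radius_m, outer_radius_m, turns) * thread_ohm_per_m


def fits_amplifier_load(resistance_ohm, low_ohm=4.0, high_ohm=8.0):
    """True if the coil lies within the 4-8 ohm load range cited for the amplifier."""
    return low_ohm <= resistance_ohm <= high_ohm


if __name__ == "__main__":
    # Hypothetical coil: 10 turns from 1 cm to 3 cm radius, thread at 5 ohm/m.
    r = coil_resistance_ohm(0.01, 0.03, 10, 5.0)
    print(f"{r:.2f} ohm, fits 4-8 ohm load: {fits_amplifier_load(r)}")
```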
| P29 [86] | Explore how tangible interactive technology can offer opportunities for socialization and sensory regulation for minimally verbal children with autism through a musical textile interface. | M&T: An inflatable ball wrapped in felt sheets topped with elastic Lycra ribbons. Each ribbon is attached to a stretch sensor connected to a central BCTB microcontroller via conductive threads. The system was powered by a 3.7 V lithium battery and linked to a Minirig speaker through an audio cable. EM: Observation to assess how children with autism interact with the prototype alone and with peers. The observation used a previously developed framework “combined with an adapted version of Parten’s play stages” and inspired by Social Communication, Emotional Regulation, Transactional Support (SCERTS). Sessions were video-recorded and annotated using ELAN software (an annotation tool for audio and video recordings), and thematic analysis was applied to the annotations. MF: The combination of conventional textiles with sonic outputs provided rich multisensory feedback and calming experiences that all participants enjoyed. Interaction with and around the prototype achieved positive results for social interaction, notably by motivating different types of play. Shareability and socialization were facilitated by the object’s properties (round shape and large-size design with different entries and access points) and by the semi-structured format of the evaluation sessions. Multifunctionality and multimodal interaction enabled creative technology use, freedom of expression, participation, and agency. |

| P30 [17] | Explore how a craft approach to interactive technologies can support tangible and multisensory experiences through the development of an interactive and tactile e-textile book. | M&T: An interactive book made of conventional fabrics and components (CO fabric, cardboard, felt, and plastic snaps), smart textile elements (optical fiber and thermochromic, hydrochromic, and photochromic pigments), and e-textile elements. The e-textile elements include the following:
- Sensors: a microphone, and textile-based pressure and stretch sensors made of a spun wool and silver blend and of an unspun wool and stainless-steel blend.
- Actuators: enameled copper wire wound into a coil with a magnetic bead, LEDs, speakers, and vibration motors.
- Processing units: an Arduino Nano board, a motor shield, and greeting-card recordable sound modules.
- Power supplies: a Li-Po battery with an integrated charger.
- Other components: a reed switch activated by a magnet and an on/off button.

Conductive connections were provided by silverized and gilded copper threads and metal snaps. EM: Observations of (1) two children engaging with the book and (2) a mixed group of adults and children interacting with it in an exhibition setting. MF: When working with technology to augment and potentially improve individual multisensory experiences, it is necessary to “couple it with materiality and storytelling”. Textile craft qualities were extended to electronic and computational interaction. Group tests provided insights into possibilities for developing shared experiences. Future work includes research on real-life applications, expanding the audience, exploring crafted interactions (storytelling) in other disciplines, and reflecting on the sustainability aspects of electronic crafts. |

| P31 [87] | Develop and test a pneumatically actuated soft biomimetic finger with texture discrimination capabilities to provide sensory feedback and create a more natural experience for prosthetic users. | M&T: A soft prosthetic finger fabricated mainly from silicone and fabric. For sensing, a flexible 3 × 3 textile tactile sensor array is made of conductive fabric traces (from LessEMF) and piezoresistive fabric (from Eeonyx) and encased in an elastic fabric. It is integrated into the fingertip and connected to an Arduino Mega 2560 microcontroller through resistors. The pneumatic actuator system consists of the prosthetic finger, an air compressor, and solenoid valves. The finger has three independently controllable joints made of Dragon Skin 10 Medium silicone rubber (from Smooth-On). Each valve is connected to an air channel’s inlet and a Honeywell ASDXACX100PAAA5 pressure sensor. EM: Observation of three healthy individuals receiving sensory feedback from the prototype via transcutaneous electrical nerve stimulation (TENS) while performing tasks to identify 13 standardized textured surfaces. MF: Participants successfully distinguished two or three textures with the applied stimuli. The results suggest that dynamic stimulation may be more effective than static stimulation for improving sensory perception in prosthetics and human–robot interactions. |

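For a small tactile array like the 3 × 3 textile sensor in P31, one simple way to summarize a frame of readings is total pressure plus the pressure-weighted centroid of contact. The Python sketch below assumes non-negative, already-calibrated readings stored row by row. It is a generic illustration, not the processing performed in the study, which the summary does not detail.

```python
def contact_summary(grid):
    """Total pressure and pressure-weighted (row, col) centroid of one frame.

    grid: list of rows of non-negative, calibrated readings (e.g., 3 x 3).
    Returns (total, None) when nothing is touching the array.
    """
    total = sum(sum(row) for row in grid)
    if total == 0:
        return total, None
    # Weight each cell's row/column index by the pressure it reports.
    row_c = sum(i * v for i, row in enumerate(grid) for v in row) / total
    col_c = sum(j * v for row in grid for j, v in enumerate(row)) / total
    return total, (row_c, col_c)
```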
| P32 [38] | Present a case study of a somatosensory hat to explore an interactive clothing design method that reflects human emotions. | M&T: Three winter hats made of conventional fabric (Berber fleece with sheepskin, partially sewn with alpaca wool) and components (PVC, zipper). They are integrated with LED lights, an LED strip, and a NeuroSky brainwave sensor system composed of a ThinkGear ASIC Module (TGAM), a Bluetooth module, a dry electrode, an ear clip, a 3.7 V lithium battery, and an embedded I3HGP motherboard processor. EM: SDS-based questionnaire with 7-point scales comparing the functional, aesthetic, fashion, interactive, and emotional attributes of the proposed and traditional winter hats based on sample pictures. MF: The results show that the proposed dynamic interactive hat can improve the match between product attributes and target users’ emotional responses. It does so by enhancing the visual appeal of fashion accessories and the humanistic emotional value of smart clothing. The Kansei engineering method provided a scientific basis for the emotional design and enabled the user experience to be quantified. |

| P33 [88] | Introduce the design and preliminary assessment of a textile-based biofeedback system supporting gait retraining after a fracture of the lower extremities. | M&T: Hallux valgus socks whose silver-plated conductive thread stitching connects two sensing configurations:
- Configuration 1: Interlink Electronics FSR 402 resistive pressure sensors connected in series with a 4.7 kΩ resistor, an Arduino UNO R3, an HC-05 Bluetooth module (zs-040 version), and a power bank.
- Configuration 2: five EeonTex (conductive pressure-sensing fabric) textile sensors, connected in series with a 56 kΩ resistor and stitched as opposing hook-shaped circuits, a power switch, and an Arduino Pro Mini 3.3 V microcontroller with a Bluetooth module transmitting data to a smartphone.

EM: Indirect observation, self-reporting, a semi-structured interview, and closed-ended questions based on the UEQ and the CEQ. MF: Multiple feedback modes were successfully combined and perceived by users as real-time and truthful: “graphical, verbal, and music feedback on gait quality during training (…) and verbal and vibrotactile feedback on gait tracking”. Evaluation by patients and therapists indicated “acceptance by targeted users, credibility as a rehabilitation tool, and a positive user experience”. The need for more flexible calibration or personalized design and for a larger quantitative demonstration was noted. |

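In P33, the FSR 402 and EeonTex sensors are each connected in series with a fixed resistor (4.7 kΩ and 56 kΩ), which forms a standard voltage divider. This sketch assumes three things. First, the sensor sits between the supply and the analog pin. Second, the fixed resistor runs from the pin to ground. Third, the ADC is referenced to the same supply (ratiometric). Under these assumptions, the sensor resistance follows from R_sensor = R_fixed × (ADC_max − ADC)/ADC. The Python sketch below encodes this relation. The wiring orientation and the 10-bit ADC range are assumptions, not details given in the source.

```python
def sensor_resistance_ohm(adc_reading: int, r_fixed_ohm: float, adc_max: int = 1023) -> float:
    """Estimate a resistive pressure sensor's resistance from a divider reading.

    Assumed wiring: supply -> sensor -> ADC pin -> fixed resistor -> ground,
    with the ADC referenced to the same supply, so V_out/V_supply = adc/adc_max
    and R_sensor = R_fixed * (adc_max - adc) / adc.
    """
    if adc_reading <= 0:
        return float("inf")  # no measurable current: sensor effectively open (no load)
    if adc_reading >= adc_max:
        return 0.0  # reading saturated at the supply rail
    return r_fixed_ohm * (adc_max - adc_reading) / adc_reading


if __name__ == "__main__":
    # The same hypothetical reading interpreted with each fixed resistor named in P33.
    for r_fixed in (4_700, 56_000):
        print(r_fixed, sensor_resistance_ohm(341, r_fixed))
```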
| P34 [89] | Explore the aesthetics and societal impacts of a hybrid textile that combines traditional handcrafts with digital technologies, chemical processes, and elements created by nature. | M&T: Feathers prepared with dyeing methods, traditional featherwork, and in situ polymerization with pyrrole and iron(III) chloride. They were hand-embroidered onto several pieces of silk chiffon with crimping beads and connected through electrical wires to an MPR121 capacitive touch controller board. The board is read by an Arduino Nano microcontroller with a Bluetooth module, which controls a DFPlayer Mini MP3 module. EM: No UX tests. However, prototype implementation in a curiosity cabinet for interaction observation is proposed. MF: By combining haptic interactions with feathers, textiles, and acoustic feedback, the project emphasizes a multisensory experience. The potential of hybrid textiles to foster new forms of artistic expression and societal engagement is highlighted. |

| P35 [90] | Describe a light-emitting textile project that aims to alter people’s self-perception of body image by creating a fictional spatial experience. | M&T: Three woven light-emitting interactive textile artifacts made of optical fiber cables, integrated with two Sharp infrared (IR) proximity sensors connected to an Arduino microcontroller. EM: Observation and image recording of the prototype experience in an installation (not described). MF: The project is “two-fold regarding who is perceiving and what is perceived” since the artifact gained sensory abilities. Because self-perception is subjective, the project showed that each person may be affected differently. Given the restricted detection range of the proximity sensors used (50–500 cm), image processing via a camera is recommended in future studies to improve location detection and movement range. |

| P36 [91] | Present “a design exploration of the fabrication methods and processes of interweaving mechanical pushbuttons into textiles” through digital embroidery and 3D printing. | M&T: A button layer with digitally embroidered and 3D-printed (Tronxy Flexible TPU Filament) star-like buttons on a pre-stretched fabric (Lycra). It is closely integrated with a circuit layer whose circuitry is made of Madeira HC 40 silver-based highly conductive embroidery thread and hand-sewn conductive pads (vinyl-cut thin copper). The double layer is connected via conductive yarn to an Arduino microcontroller for real-time signal processing displayed on a computer screen. Other components include a circuit board, pull-down resistors, and insulated copper wire with a small crimp. EM: User tests in an informal embodied ideation session to gather early UX feedback. MF: The tested fabrication processes proved versatile and repeatable. The tactile textile pushbuttons proved to be wearable (soft, flexible, highly stretchable, and comfortable), functional (clear tactile feedback and reliable signals), durable (withstanding 4000 presses), and useful for on-body interactions. For future iterations, alternative designs (such as diverse embroidered textures for eyes-free interaction) and reduced manual assembly were suggested. |

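Mechanical pushbuttons read through pull-down resistors, as in P36, typically bounce briefly when pressed. Firmware therefore usually debounces them before counting presses, for instance during a durability test. The Python sketch below accepts a state change only after the raw binary input has disagreed with the current state for a fixed number of consecutive samples. The three-sample threshold is an arbitrary assumption. This is a generic technique rather than the authors' code.

```python
def debounce(samples, stable_count=3):
    """Debounce a sampled binary input (1/True = pressed).

    A change of state is accepted only after the raw input has differed from
    the current debounced state for `stable_count` consecutive samples.
    """
    if not samples:
        return []
    state = samples[0]
    run = 0
    out = []
    for s in samples:
        if s != state:
            run += 1
            if run >= stable_count:
                state, run = s, 0
        else:
            run = 0  # a bounce back to the current state resets the count
        out.append(state)
    return out


def count_presses(states):
    """Count released-to-pressed transitions in a debounced state sequence."""
    return sum(1 for prev, cur in zip(states, states[1:]) if not prev and cur)
```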
| P37 [92] | Present an electronic khipu (an Andean knot-based code system) as a musical instrument from a decolonial perspective and report on its live experimental performance. | M&T: An electronic khipu consisting of a CO main rope and nine secondary strings made of conductive rubber cord stretch sensors. The strings are fixed with screws (at the top) and conductive metal fittings (at the bottom) onto a box with potentiometers and buttons. All of these are connected to a circuit board and to a Teensy 3.6 microcontroller that sends data to a computer via cable. For video projection, a USB camera captures hand movements and knotting gestures and sends live images to the computer, which sends video and sound signals via cable to unspecified devices. Other components include banana jack connectors, a knob, and a ring or bracelet (worn by the performer). EM: Informal observation of performance audiences. MF: Integrating the khipu into a digital music interface offers a novel way to engage users, combining tactile interaction with digital sound production. The project demonstrates the potential of cultural artifacts to inspire new technological applications and user experiences. The conductive rubber sensor strings act as flexible, reusable variable resistors whose values can be mapped. A wide range of sound textures results from the different signals produced by the cords, together with the performer’s skin conductivity. “The touch and force used to make the knots produce different intensities”. |

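P37 notes that the conductive rubber cords behave as variable resistors whose values can be mapped, without specifying a mapping. The Python sketch below shows one hypothetical mapping. A calibrated reading is normalized, clamped, and scaled linearly to a MIDI note range. The note is then converted to frequency with the equal-tempered formula f = 440 × 2^((n − 69)/12). The note range and the calibration bounds are illustrative assumptions.

```python
def reading_to_midi(reading, reading_min, reading_max, note_low=48, note_high=72):
    """Linearly map a calibrated sensor reading onto a MIDI note range (clamped)."""
    span = reading_max - reading_min
    if span <= 0:
        raise ValueError("reading_max must be greater than reading_min")
    t = min(max((reading - reading_min) / span, 0.0), 1.0)  # normalise to 0-1
    return round(note_low + t * (note_high - note_low))


def midi_to_hz(note):
    """Equal-tempered frequency, with MIDI note 69 (A4) at 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)
```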
| P38 [93] | Present an e-textile soft toy that explores haptic sensations and encourages physiological and social play experiences. | M&T: A soft toy with NFC tags (tracked by a mobile app), an Adafruit Flora microcontroller, five tiny vibration motors, batteries, and a battery holder. EM: Observation and image recording of an interaction scenario (not described). MF: The research “contributes to a new generation of toy design combining comfortable and tactile characteristics of textiles with digital technologies”. Five interaction scenarios (alone and with peers) were proposed. UX tests assessing intimate contact and multisensory stimulation are planned as future work. |

| P39 [61] | Explore Autonomous Sensory Meridian Response (ASMR) media to create new design opportunities for interactive textile-based wearables for enchanting everyday experiences. | M&T: Two garments. Each has a pair of Roland binaural recording headphones for 3D audio recording and playback, a Teensy 3.2 microcontroller (with “TouchRead” capacitive touch sensor pins) fitted with an audio shield, and garment-specific sensors. (1) A red-plaid jacket is connected to the microcontroller’s sensor pins through CO-wrapped copper wire. (2) A hand-woven cloak has a knitted i-cord (wool and conductive yarn) as a breath/stretch sensor, long capacitive sensors made of single CO-covered copper wires attached to the microcontroller’s sensor pins, and an SD memory card. EM: First-person autobiographical use of each garment by the authors. MF: The autobiographical approach enabled the design of sonic filters as wearable systems that are familiar and comfortable for designers as users. ASMR media is proposed to foster embodied, intimate, felt, and personal attention practices within everyday surroundings in wearable design, and its relevance for HCI is highlighted. |

| P40 [57] 2 | Discuss how a transdisciplinary collaborative design approach among e-textile design, cognitive neuroscience, and HCI can lead to the development of a textile garment that provides one’s body with perceptual changes and emotional responses. | M&T: A tubular jersey dress with tight sleeves, integrated with 38 vibration motors distributed along the body and controlled by an Arduino microcontroller board. Exploratory work preceded the presented prototype [9]. EM: Open-ended interview with a dancer, as a body-conscious person, about the full wearing experience. MF: Transdisciplinarity shifted perspectives among arts, neuroscience, and HCI researchers, and the importance of a shared language for communication was highlighted. The wearable “boundary object” opens new opportunities for the developing field of “sensorial clothing”. It demonstrates that e-textiles allow one to “wear” various experiences, since the vibrotactile patterns elicited a range of haptic metaphors in the wearer. Further research is necessary to fully comprehend the process of designing hidden body-altering experiences, as well as the emotional and social feedback. |

| P41 [9] 2 | Explore the potential for creating clothing that alters how people perceive their bodies by utilizing tactile feedback. | M&T: (1) A jersey textile with 21 vibration motors connected by Shieldex silver-based conductive thread to an Arduino UNO microcontroller linked to computer software. (2) 25 vibration motors distributed on felt material and connected through thin electric wires. Two types of conventional fabric (fluffy soft non-woven PES and structured woven PES) serve as surfaces, with additional components including three soft buttons. EM: (1) Questionnaire A, with 7-point Likert-type items administered before and after prototype experimentation, assessing “emotional state, body sensations and sensations of materiality”. After the prototype tests, Questionnaire B was also administered. It contained 9-point Likert-type questions about emotional, subjective reactions (valence, arousal, and dominance) and exploratory questions on material association. (2) After prototype experimentation, an adapted Questionnaire A with 5-point Likert-type questions and a check-box list to evaluate “emotional, bodily, and materiality sensations”. MF: The research demonstrated the “potential in considering materials as sensations to design for body perceptions and emotional responses”. Interaction effects between vibrotactile patterns and the textile’s surface elicit different associations and haptic metaphors, influencing emotional arousal and physical sensations. When developing haptic clothing, it is crucial to consider the textiles’ surface texture, and the design must be tailored to a specific use and user. |

| P42 [94] | Present a seamless textile-based interactive surface that combines electronics and digital knitting techniques for expressive and virtuosic sonic interactions. | M&T: A piano-pattern textile digitally knitted with silver-plated conductive (from Weiwei Line Industry), thermochromic (from Smarol Technology), and high-flex PES yarns combined with melting yarns. A fabric pressure sensor covers the entire back of the interactive interface. It consists of a piezoresistive knit fabric (LG-SLPA 20k, from Eeonyx) between two conductive knit fabrics (Stretch, from LessEMF). The five proximity sensing fields of the piano’s 60 keys are connected to PCB pads. These pads are interconnected to capacitive sensing chips (MPR121, from NXP Semiconductor) through highly conductive silver-coated fibers (Liberator 40). The fibers, together with the heating elements, are interconnected to a Teensy 4.0 microcontroller via insulated wires. The processing unit includes a voltage follower (TLV2374) and an N-channel power MOSFET (IRLB8721, from International Rectifier) with a resistor, powered by a 6 V external battery. It is connected via USB to a computer, which outputs the generated sound. EM: No UX tests performed. MF: The integration of electronics at the fiber level into fabrics made by digital knitting techniques “enables personalized, rapid fabrication, and mass-manufacturing” of smart textiles. It allows “performers to experience fabric-based multimodal interaction as they explore the seamless texture and materiality of the electronic textile” through visual and tactile properties. Future research includes “simultaneous knitting of textile heating and pressure-sensing layers on top of the conductive and thermochromic layers, the design of flexible PCB interface circuits for robust textile-hardware connection, and integration of an on-board audio generation system”. |

| P43 [55] | Explore “wearable sensing technology to support posture monitoring for the prevention of occupational low back pain” for nurses. | M&T: A T-shirt with an embroidered circuit of insulated high-conductivity silver-plated nylon thread. The circuit incorporates two IMUs (STMicroelectronics LSM9DS0 sensors combining accelerometer, gyroscope, and magnetometer) and a PCB connected to an Adafruit Flora microcontroller linked to an analog switch (NX3L1T3157) and a Li-Po battery. The microcontroller runs an AHRS sensor fusion algorithm and uses a BLE (HM10-UART) module to send data to a smartphone application. EM: The UX of the initial prototype was assessed over four days, with impressions and improvement suggestions recorded in a diary, followed by a final interview. The improved prototype was tested in a wearing trial followed by semi-structured interviews and three validated questionnaires:
- an adapted version of the UTAUT with additional key constructs, namely hedonic motivation and behavioral intention;
- the IMI;
- the CEQ.

MF: The need for accurate detection of low back posture data was explored through personalized sensor placement and a tight fit with elastic material. The importance of feedback advising how to improve posture was also highlighted. Smart garment design demands a holistic approach stressing the relationship between hedonic and intrinsic motivations. The study contributes to temporary behavior change, and further research aims to test long-term posture correction through smart garments. |

| P44 [95] | Explore the craft techniques of double weaving and yarn plying for creating smart textiles with touch sensing and color-change behavior. | M&T: The materials included conventional yarns (Pearl Cotton from Halcyon Yarn and Zephyr Wool) and conductive yarns and threads. The conductive materials were magnet wire, Litz wire, CO-covered non-insulated copper wire from wires.co.uk, and plied stainless-steel thread from Karlsson Robotics. Blue and red thermochromic pigments from Chromatic Technologies Incorporated were used, with activation temperatures of 28 °C, 43 °C, and 56 °C, along with Liquitex clear acrylic gel medium. The final prototype was a hand double-woven fabric made with Pearl Cotton, plied resistive-heating (stainless-steel) conductive thread, and CO-covered non-insulated copper wire painted with thermochromic pastes (blue 28 °C and red 43 °C). EM: No UX evaluation was performed or suggested for future studies. MF: Adapting traditional fiber art techniques to smart materials enabled the design of “richly crafted and technologically sophisticated fabrics”. Double-woven craft structures made it possible “to support interactivity while hiding circuitry from view,” and both techniques showed great potential for helping designers “discover new ways of realizing their smart textile concepts”. Designing creative custom yarns is proposed as future work. |

| P45 [96] | Present a full-body, customizable haptic textile interface to promote an untethered spatial computing experience. | M&T: Full-body clothing made of double-sided conductive textile (composed of “conductive mesh made of metal thread on the front side of each layer and an insulated fabric on the back side”), which powers and controls attached haptic modules via 2DST. Haptic modules include LEDs, a pin connector, and a haptic actuator. They are controlled by a master module that communicates wirelessly with a computer via Bluetooth. EM: No UX assessment. User experience tests demonstrating the spatial experience with a mixed-reality headset and an integrated motion-tracking system were described as future work. MF: Personal customizability was provided by haptic modules with a badge-like pin connector, which users could freely attach to the conductive textile. 2DST technology was used to enable garment flexibility, wireless connection, haptic feedback, and customizability. Modules could store both acoustic–tactile data and the visual expression of haptic feedback. |

| P46 [97] | Propose design recommendations for integrating meaningful technology into interactive textile books for infants and toddlers, aiming to promote developmentally appropriate sensory–motor and pre-cognitive interactions. | M&T: Three-page interactive book: (1) Resistive fabric (force-sensitive stretch sensor) connected to a Lilypad buzzer. (2) Velostat between two layers of conductive fabric (pressure-sensitive sensor), with a vibration motor sewn underneath. (3) Photoresistors (light sensors) connected to Lilypad LEDs and conductive fabric. Specifications reported by [98] refer to conventional components (mainly CO fabrics, Velcro, and foam) and general electronic circuitry. The circuitry consists of an Arduino Pro Mini microcontroller and batteries connected to conductive snaps through plastic-coated flexible cables. EM: Interaction observation and video recording of prototype experimentation during play sessions with infants and preschool children, both alone and with adults. Data were analyzed through an affinity diagram, open coding, and a tabulated timeline. The open coding focused on the child’s play behavior, length of interaction with the book, and interaction with the parent. This was followed by semi-structured interviews with parents and the older children, which assessed the children’s early perceptions of the interaction: usefulness, playfulness, appropriateness, and interest. User study results were discussed in semi-structured framing interviews with experts and in a focus group with parents. Both were video/audio-recorded and analyzed through thematic analysis. MF: The authors propose a set of design recommendations for interactive textile books focusing on featured interactions, digital effects, the general “story”, and book design. Several changes were mentioned for the next design cycle. These include reducing the book’s bulk and size and better hiding the electronics to prevent disassembly. They also include using more soft electronic materials (such as “replacing stranded wires with conductive thread without loss in robustness, fully textile-based sensors and actuators, and integrating flexible circuit boards”). Finally, a wider diversity of textile materials was suggested to provide an extended haptic and sensory experience. |

| P47 [62] | Explore a design framework for embodied design processes to improve the digital communication of the tactile properties of textiles. | M&T: Four tools were described and analyzed. Pocket tool: An Arduino board bridging force-sensitive resistors (pressure sensors) within six different textile pockets and a display. Haptic sleeve: A sleeve made of viscose fabric, driven by an Arduino UNO. It contains a grid of eccentric rotating mass (ERM) vibrotactile motors (connected to a regulator with three potentiometers), a DC-powered electric heating pad, and a temperature sensor (DS18B20). Conventional materials include Velcro and kinesiology elastic tape. Hyper textile: Three different fabric sheets (linen, silk, and a coated PES) linked to piezo sensors, audio cables, jumper wires, speakers, and an Arduino board. The recorders, wires, and sensors employed are not described. iShoogle: A digital application with interactive videos of digital textile manipulation. EM: All prototypes included user tests based on interaction observation. Previously developed models of textile experience based on touch behavior types and three tactile-based phases [99] guided the analysis. MF: The identified design strategies focused on “body part”, “textile interaction”, and “who is generating”. The identified digital feedback included visual, auditory, tactile, and kinaesthetic modalities. Immersing, mediating, augmenting, and replicating the experience were demonstrated as possible approaches for relational experiences, which are suggested for further study. The proposed framework supports design decisions for embodied textile experience by unifying information from the analyzed tools regarding these variables. Concrete applications of the framework were proposed. Future work was also proposed on employing haptic, virtual, and augmented reality technologies to research material interactions. |

| P48 [100] | Compare human intuition and technical knowledge in designing smart garments, using the case of sensor arrangements on a jacket to detect diverse situations. | M&T: A one-size jacket and acceleration sensors (accelerometers). Other components required for gathering the sensor data are not specified. EM: No UX tests. A sensor performance evaluation based on technical measurements was conducted. MF: The best-performing and most accurate sensor patterns were created intuitively by inexperienced test participants rather than by system design experts. Sensor placement is more relevant to accuracy than sensor quantity. The best-performing designs had symmetric layouts. Further studies are proposed to calculate the optimal sensor layout. Further work is also proposed on algorithms that improve the relationship between the number of sensors and accuracy. |

| P49 [59] | Present usability and maintenance improvements in the design of an interactive textile interface that translates musical scores into tactile sensations. | M&T: A full-body suit with 9 patterns, each integrated with control boards. The boards receive data via Wi-Fi from an ESP8266 microcontroller connected to a computer and send signals through conductive threads to ERM vibration motors. Modular connectors (nickel buttons and conductive hook-and-loop tape) link the patches containing boards and motors, respectively, and integrate LEDs into the system. EM: Observation of two musicians in different rooms performing a duet while communicating with each other only via the prototype. MF: The changes made to the prototype’s design and materials were shown to improve the prototype with respect to technical issues and system functionality. Rich experiential feedback about the prototype from musicians and wider audiences during performances was mentioned. However, these UX tests were not detailed, for example, how integrating LEDs augmented the audience’s understanding of how the suit works through multisensory interaction. Further studies include incorporating motion sensing into the system and exploring decentralized processing and power supply units. |

| P50 [101] | Explore user acceptance of a textile interface created for non-verbal communication in social spaces, which merges traditional design elements of Indian culture with smart materials. | M&T: Scarf made of poly-dupion fabric with hand-embroidered embellishments and micro RGB LEDs. The LEDs are connected through conductive thread to three switches controlling the colors and to a 3.7 V Li-Po battery. EM: Group sessions for brief prototype experimentation, followed by questionnaires. The questionnaires combined a 5-point Likert scale, a 5-point SDS (with seven variables of non-verbal communication), a 7-point adapted version of the TAM, and a 5-point scale based on the SUS. They also included binary and descriptive questions regarding acceptance, social intelligence, aesthetics, functionality, usability, and emotions evoked. The collected data were analyzed quantitatively in SPSS (Statistical Package for the Social Sciences), version 27. MF: Positive emotions and aesthetic attributes were positively correlated with perceived usefulness and ease of use. Aesthetics had a significant effect on technology acceptance. The color-changing functionality showed high acceptance for self-expression and daily interactions. Enhancing non-verbal communication through visual cues was shown to improve interpersonal interactions and to promote social and collective intelligence. Further research is recommended to correlate usability, acceptance, and cognitive load parameters using larger samples and more complex interactions. |

| P51 [102] | Present a “construction kit” for building interactive e-textile patches to introduce ideas around identity and self-expression to children as user-learners. | M&T: A storybook, magnetic patches, and electronic components (Lilypad LEDs, a switched coin cell battery holder, and temperature and light sensors) sewn into a fabric piece. The fabric piece has a snap for connecting to other e-textiles and a magnet on the back for attaching it to the patch. EM: Observation and image recording of an interaction scenario (not described, but available through a link). MF: The first stage of an interactive construction kit was presented through patches and electronic textile circuits working as building blocks. The magnetic patch surface facilitates sharing and the flexible integration of elements into dynamic patch creation. User tests with target users are mentioned as future work to measure and understand experience reflection, as well as subsequent module building. |

| P52 [103] | Discuss, from the user perspective, the development of a protective and interactive wearable system based on existing sensor technology to increase workers’ health awareness in small and medium-sized coating plants. | M&T: (1) A protective mask made of thermoformed spacer textile padded with soft foam, with temperature and humidity sensors. (2) An electronic nose (alert gateway) composed of volatile organic compound (VOC) sensors and LEDs. Both artifacts are connected to mobile applications via Bluetooth. EM: Voice- and image-recorded user sessions involving focus groups, interviews, and scale-based questionnaires to evaluate conceptual mockups, assessing aesthetics, function, comfort, mode of use, etc. MF: Following a Design Thinking process, the development of the wearables was guided by the results of user sessions (including the empathy phase). The real-time feedback and statistical data provided increased the visible benefits of using the wearable system. The system was created to promote a multisensory experience that enhances users’ perception and encourages individual and collective behavior change. User engagement helped highlight the system’s core and added values. These values are awareness (“by monitoring environment and personal indicators”) and comfort (by considering wearability, breathable material, and connection to the body/face). Functional prototype tests were proposed as further studies. Expected results concern comfort related to aesthetics (textile materials), perception benefits for motivating and educating users, and clarity of information transmission. |

| P53 [104] | Explore how blind and visually impaired (VI) people create personally meaningful objects using e-textile materials and hands-on techniques through making workshops. | M&T: A re-recordable device consisting of a microcontroller unit and an adapted soft playback button. The microcontroller unit comprises a PCB, microphone, speaker, and record button. The playback button was made of conventional textiles whose type, size, and shape were chosen by participants, and it was fixed with double-sided fabric tape and glue. The device is connected through snap fasteners to soft wires (insulated conductive thread inside a long fabric tube yarn) and a battery. Pockets were used to hold the electronics (board and battery). EM: Observation of pilot sessions for feedback on materials, a participatory approach in the e-textile-making sessions, workshops, and follow-up interviews. Data were collected through different media. MF: A modular approach enabled inclusive and accessible construction based on the form and function of affordable materials. Sharing experiences among participants fostered creativity and mutual learning. Insights were provided on how to make e-textile workshops more accessible and inclusive to a wider community while allowing for ownership, creativity, and self-expression. |