Abstract

Given the growth of unmanned aerial vehicles (UAVs), their detection has become a recent and complex problem. The literature has addressed this problem by applying traditional computer vision algorithms and, more recently, deep learning architectures, which, while proven more effective than previous ones, are computationally more expensive. In this paper, following the approach of applying deep learning architectures, we propose a simplified LSL-Net-based architecture for UAV detection. This architecture integrates the ability to track and detect UAVs using convolutional neural networks. The biggest challenge lies in creating a model that allows us to obtain good results without requiring considerable computational resources. To address this problem, we built on a recent successful LSL-Net architecture. We introduce a simplified LSL-Net architecture using dilated convolutions to achieve a lower-cost architecture with good detection capabilities. Experiments demonstrate that our architecture performs well with limited resources, reaching 98% accuracy in detecting UAVs.

1. Introduction

The growing number of unmanned aerial vehicles (UAVs) has put our privacy and public safety at risk. They may even represent a security problem. Therefore, their timely detection is vital. However, unfavorable environmental conditions such as the complexity of the environment, the presence of birds and kites, and the small size of UAVs represent a great challenge for their detection.

In the literature, there are two families of algorithms for UAV detection. The first comprises traditional algorithms, which achieve high accuracy in austere environments but are computationally expensive, so implementing them in real time is not feasible [1]. The second comprises deep learning algorithms, which are usually more effective, but their computational burden is enormous [2]. Detecting unmanned flying objects at low altitudes in real time is a major challenge. Although there are algorithms for object detection, they do not guarantee good accuracy in environments with low light, many objects at low altitudes, and various scales [3].

Moreover, to detect UAVs, CNN models need many images and videos for training. In [4], this problem is solved by generating synthetic images. In [5], the authors implement YOLO-based UAV detection using dilated CNNs on an embedded GPU. The problem can also be split into two stages: first performing image background subtraction to detect objects and subsequently using a CNN to classify them [6]. Using multiple streams helps to obtain the best scale for object detection since different kernels are applied to the images; this addresses the problem of variable scales and is the basis of the Faster R-CNN pipeline used to detect moving objects in [7]. LSL-Net is a YOLOv4-tiny-based system for real-time drone detection, which balances the computational load and detection accuracy, obtaining very good results [8]. All the works described above use many GPUs to perform UAV detection, making their implementation difficult when hardware resources are scarce.

Many applications combine detection and UAVs; here, we mention two that we consider important. In [9], the authors propose a system that helps in search and rescue missions by employing a UAV coupled with a CNN-based real-time people detection system running on a smartphone. They report an accuracy of 94.73% and a speed of 6.8 FPS when running on the smartphone. In [10], the authors propose a CNN-based system to identify structural damage in bridge elements, such as pillars or wooden beams, using UAVs that fly around the bridge to obtain images that the system can later analyze. The proposed system was able to identify and measure the damage in bridges with an average error of 9.12% compared to direct measurements.

In this work, we improve the computational efficiency and reduce the number of parameters of LSL-Net by replacing the activation function with a lightweight one and utilizing dilated convolutions for feature extraction. Additionally, we improve the detection speed of the network. These improvements allow the algorithm to continue recognizing UAVs even if the camera is in motion. Our work is based on the LSL-Net architecture and on two datasets: one of color images of drones and one of images with color filters, which covers cases where the drone is barely perceptible to the camera. For example, drones are difficult to detect against a dark background or when direct light enters the camera’s field of view. Training with these images is intended to give the neural network better precision in such cases.

The main contribution of this work is a simplified LSL-Net architecture for UAV detection, which is helpful in systems with limited resources. According to our experiments, the proposed architecture achieves an accuracy of 98% in drone detection.

This paper is organized as follows: Section 2 presents the work related to UAV detection. Section 3 introduces the proposed detection architecture based on LSL-Net. Section 4 details the experimentation, datasets, and tools used, together with the results of the experiments. Finally, Section 5 presents our conclusions and future work.

2. Related Work

We find that neural networks are the most used method in object detection. In [11], the visual processing mechanism of the human brain is leveraged to propose an effective framework for UAV detection in infrared images. The framework first determines the relevant parameters of the continuous coupled neural network (CCNN) through the standard deviation, mean, etc., of the image. Then, it inputs the image into the CCNN, clusters the pixels through iteration, obtains the segmentation result through dilation and erosion, and finally obtains the final result through the minimum bounding rectangle.

The literature contains some object detection methods used to detect unmanned aerial vehicles. In [12], a novel approach is proposed to detect and identify intruding UAVs using a hierarchical reinforcement learning technique based on their RF signals. They trained a hierarchical UAV agent with multiple policies using the REINFORCE algorithm with an entropy regularization term to improve the overall accuracy. The research focuses on utilizing features extracted from RF signals to detect intruding UAVs, contributing to the field of reinforcement learning by exploring a less-investigated UAV detection approach.

In [13], the development of a novel UAV detection technique based on YOLOv8 is proposed. The approach successfully detects UAVs in real-world tests using a drone equipped with a camera, highlighting its practical applicability. The research provides an efficient detection methodology that substantially advances aviation security.

In [14], a lightweight, real-time, and accurate anti-drone detection model (EDGS-YOLOv8) based on YOLOv8 is presented. This is achieved by improving the model structure, introducing ghost convolution in the neck to reduce the model size, adding efficient multi-scale attention (EMA), and enhancing the detection head using DCNv2 (deformable convolutional network v2).

In [15], the temporal information of objects in videos is presented, and a Spatio-Temporal Attention Module (STAM) is developed to efficiently enhance the feature map extraction for detecting micro-UAVs. STAM is then integrated into YOLOX to develop a video object detector for micro-UAVs.

In [16], the YOLOv7-GS model is proposed, designed explicitly to identify small UAVs in complex, low-altitude environments. Its objective is to improve the model’s detection capabilities for small UAVs in challenging environments. Enhancements were applied to the YOLOv7-tiny model, including adjustments to anchor box sizes, the incorporation of the InceptionNeXt module at the end of the neck section, and the introduction of the SPPFCSPC-SR and Get-and-Send modules.

Unfavorable environmental conditions such as rain and fog, among others, can impair the detection of unmanned aerial vehicles. The authors of [17] worked on strategies for improving detection under these conditions; they used DID-MDN (density-aware image de-raining using a multi-stream dense network), a convolutional network that estimates the rain density and removes the rain from images, thus allowing objects of interest to be detected. Dividing the problem into motion detection and classification is a good idea when working in real time with a static background. Ref. [6] subtracted the background through frame-to-frame differencing to detect moving objects, which are then passed to the MobileNetv2 architecture in charge of classification; however, this approach is limited in environments and situations without a static background. Learning algorithms are not the only ones that exist for UAV detection; segmentation algorithms based on MSERs (Maximally Stable Extremal Regions) [18] have been used to detect drones in video sequences in real time at a rate of 20 frames per second, obtaining an accuracy of 85%.

Recently, an architecture based on YOLOv3-tiny for real-time UAV detection was presented by the authors of [8]. It broadly consists of three modules. The LSM (Lightweight and Stable feature extraction Module) is in charge of reducing the input image and improving the information related to low-level features, the EFM (Enhanced Feature processing Module) enhances the feature extraction, and the ADM (Accurate Detection Module) finds a suitable scale to improve the detection accuracy, obtaining better results than different versions of YOLO and other architectures.

As mentioned, object detection has been addressed with good results, but the drawback is the need for significant computational resources, which in particular cases can be excessive; for example, ref. [8] uses four graphics cards of 11 GB each for training and validation. This paper introduces a deep architecture that does not require as many resources for training and execution and still reaches good detection results.

3. Proposed Architecture

Based on the LSL-Net architecture introduced in [8], we propose a series of modifications to LSL-Net for UAV detection. In our architecture, we use dilated convolutions, which, unlike traditional convolutions, introduce another parameter in the layers: the dilation rate, which defines the spacing between the kernel values. For example, to achieve the same field of view as a 5 × 5 kernel, a dilated convolution only needs a 3 × 3 kernel with a dilation rate of 2. Note that this dilated kernel uses only nine weights instead of 25, which helps reduce computational cost. Dilated convolutions are also very useful in real-time segmentation because they provide a wider receptive field than standard convolutions without using multiple convolutions or larger kernels [19]. This is achieved by inserting zeros between the kernel values, which allows for extracting more general features with fewer convolutions.
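As a hedged illustration of the kernel arithmetic above, the following sketch computes the span covered by a dilated kernel along one axis and the number of weights in one kernel slice. The helper names are hypothetical, and the relation k_eff = k + (k − 1)(d − 1) is the standard one for dilated convolutions; it is not taken from the implementation used in this paper.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span (in pixels) covered by a dilated kernel along one axis."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def weights_per_kernel_slice(kernel_size: int) -> int:
    """Learnable weights in one 2D kernel slice (bias excluded)."""
    return kernel_size * kernel_size


# A 3 x 3 kernel with dilation rate 2 spans the same area as a 5 x 5 kernel...
print(effective_kernel_size(3, 2))  # 5
# ...but stores 9 weights instead of 25.
print(weights_per_kernel_slice(3), weights_per_kernel_slice(5))  # 9 25
# With dilation rate 3, the same 3 x 3 kernel spans 7 x 7.
print(effective_kernel_size(3, 3))  # 7
```

A dilation rate of 1 reduces to a standard convolution, so `effective_kernel_size(3, 1)` returns 3.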

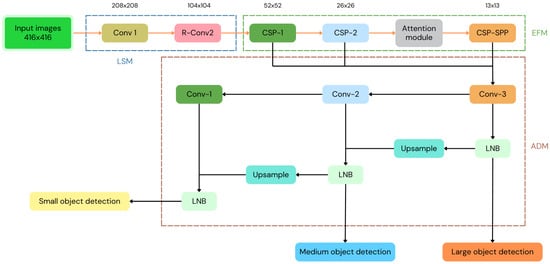

LSL-Net is depicted in Figure 1. Next, we briefly describe each of its modules. LSM is responsible for extracting low-level features through a multi-branch method. In this method, 1 × 1 convolutions reduce parameters and integrate information, while 3 × 3 convolutions also reduce the image size. EFM comprises two CSP-Nets (Cross Stage Partial Networks): an attention module and an SPP-Net (a network employing Spatial Pyramid Pooling). CSP-Net helps mitigate vanishing gradients by dividing the feature map into two parts: one part is input to the next convolutional layer, and the remaining part is concatenated to the output. The attention mechanism boosts the importance of relevant information and reduces the significance of irrelevant details, thereby enhancing UAV detection ability. Within EFM, the CSP-SPP further enhances feature extraction. ADM, in turn, is a multi-scale selection module that enhances UAV detection by incorporating an LNB (lightweight network block) after each scale. LNBs are modules within a neural network designed to be computationally efficient and to have a low number of parameters.

Figure 1.

LSL-Net architecture.
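The split-and-concatenate idea behind CSP-Net described above can be sketched with a toy example. This is only an illustration under stated assumptions: channels are represented as plain Python lists, the split is an even half-and-half split, and `toy_transform` is a hypothetical placeholder for the convolutional path, not the layers used in LSL-Net.

```python
from typing import Callable, List

Channel = List[float]  # toy stand-in for one feature-map channel


def csp_block(channels: List[Channel],
              transform: Callable[[List[Channel]], List[Channel]]) -> List[Channel]:
    """Split channels in two, transform one part, concatenate the other unchanged."""
    half = len(channels) // 2
    processed_part = transform(channels[:half])  # goes through the conv path
    bypass_part = channels[half:]                # skips the conv path
    return processed_part + bypass_part          # channel-wise concatenation


def toy_transform(part: List[Channel]) -> List[Channel]:
    # Hypothetical placeholder for the convolutional layers: doubles every value.
    return [[2.0 * v for v in ch] for ch in part]


features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]  # 4 toy channels
print(csp_block(features, toy_transform))
# [[2.0, 4.0], [6.0, 8.0], [5.0, 6.0], [7.0, 8.0]]
```

Because the bypassed half reaches the output untouched, gradients have a short path back to earlier layers, which is the property the text attributes to CSP-Net.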

Note that in LSL-Net, the EFM module has the highest computational requirements of the architecture due to its number of convolutional layers. Since this module is in charge of extracting features from the feature map, its operation requires a large number of FLOPs (floating-point operations), increasing the need for more hardware. Eliminating this module would decrease computational requirements, facilitating implementation in systems with limited hardware. However, eliminating the EFM would reduce the ability to detect UAVs. To address this problem, we propose replacing it with dilated convolutional layers in charge of extracting features while maintaining low computational requirements.
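To make the cost argument concrete, the sketch below estimates the parameters and multiply-accumulate operations (one common convention for approximating FLOPs) of a single convolutional layer. The channel counts and feature-map size are hypothetical values chosen only for illustration; they are not the layer sizes of LSL-Net or of our architecture.

```python
def conv2d_params(c_in: int, c_out: int, kernel_size: int, bias: bool = True) -> int:
    """Learnable parameters of a 2D convolution (dilation does not change this)."""
    return kernel_size * kernel_size * c_in * c_out + (c_out if bias else 0)


def conv2d_macs(c_in: int, c_out: int, kernel_size: int, out_h: int, out_w: int) -> int:
    """Multiply-accumulate operations for one forward pass (bias ignored)."""
    return kernel_size * kernel_size * c_in * c_out * out_h * out_w


# Hypothetical layer sizes, chosen only for illustration.
c_in, c_out, out_h, out_w = 64, 64, 90, 90

dense_5x5 = conv2d_macs(c_in, c_out, 5, out_h, out_w)
dilated_3x3 = conv2d_macs(c_in, c_out, 3, out_h, out_w)  # dilation 2 spans 5 x 5

print(conv2d_params(c_in, c_out, 5), conv2d_params(c_in, c_out, 3))  # 102464 36928
print(dilated_3x3 / dense_5x5)  # 0.36
```

Under these assumptions, a dilated 3 × 3 layer covers the same span as a 5 × 5 layer at 36% of the multiply-accumulate cost.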

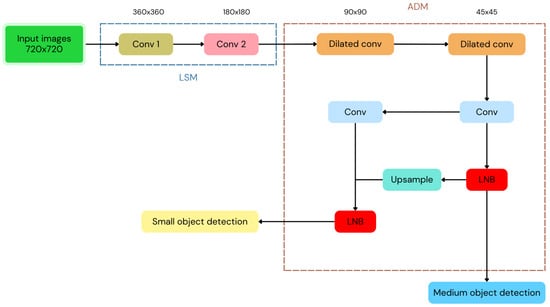

In Figure 2, our proposed architecture is shown. The first two convolutional layers extract low-level features and reduce the image using a kernel. Then, two dilated convolutional layers are responsible for feature extraction using a kernel with a dilation rate of 3; these two layers replace the function of the EFM module in LSL-Net. The wide field of view of the dilated kernel allows for better feature extraction without needing more convolutional layers, maintaining low computational requirements. It is important to highlight that the ADM module of LSL-Net in Figure 1 has three scales depending on the object’s size. Our proposed architecture eliminates the scale for large objects because we consider it unnecessary, as UAVs rarely appear that large in images. We retain the scales for small and medium objects, and for each of them, a convolutional layer followed by an LNB is used to determine the presence of the object of interest.

Figure 2.

Our proposed architecture for UAV detection.
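The effect of the two dilated layers on the field of view can be estimated with the standard receptive-field recurrence. The sketch below is illustrative only: the text does not state kernel sizes or strides, so 3 × 3 kernels, stride 2 for the two reducing layers, and stride 1 for the dilated layers are all assumptions.

```python
from typing import List, Tuple

# Each layer is (kernel_size, stride, dilation). All values are ASSUMED:
# the text does not state kernel sizes or strides.
Layer = Tuple[int, int, int]


def receptive_field(layers: List[Layer]) -> int:
    """Receptive field (pixels along one axis) of a stack of conv layers."""
    rf, jump = 1, 1  # jump = input-pixel distance between adjacent outputs
    for kernel_size, stride, dilation in layers:
        span = (kernel_size - 1) * dilation  # effective kernel size minus one
        rf += span * jump
        jump *= stride
    return rf


reducing_front = [(3, 2, 1), (3, 2, 1)]  # two downsampling convolutions
dilated_pair = [(3, 1, 3), (3, 1, 3)]    # two dilated convolutions, rate 3
standard_pair = [(3, 1, 1), (3, 1, 1)]   # same pair without dilation

print(receptive_field(reducing_front + dilated_pair))   # 55
print(receptive_field(reducing_front + standard_pair))  # 23
```

Under these assumptions, the dilated pair more than doubles the receptive field of the stack without adding layers or weights.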

Our architecture might be expected to have lower UAV detection performance due to the replacement of the EFM module with a simpler stack of layers. However, the series of experiments shown in the next section yields competitive accuracy in real-time UAV detection compared to LSL-Net.

4. Experiments and Results

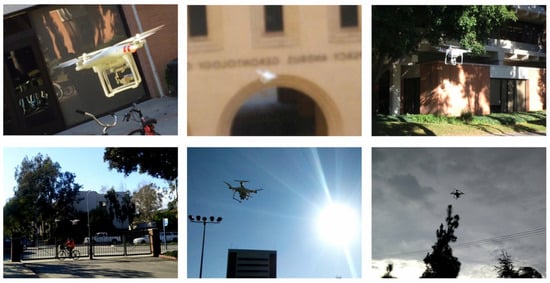

Our architecture and LSL-Net were implemented and trained using the union of the University of Southern California (USC) dataset [20], consisting of 29,000 UAV images, of which 27,000 are color images and 2000 are thermal images, and the dataset named “Detection” presented in [21], composed of 3263 thermal images. Figure 3 and Figure 4 show some examples of the images in these datasets, respectively. For our first experiment, 80% (25,810) of the images were used for training and 20% (6452) for testing; these datasets were selected because they consist exclusively of UAV images. Training and testing were performed using a computer with a Windows 10 operating system, a 2.40 GHz Intel Core i7-3630QM processor, 8 GB RAM, and an NVIDIA GeForce RTX 3060 GPU with 12 GB.

Figure 3.

Examples of the dataset taken from the University of Southern California (USC) by Wang, Chen, Choi, and Kuo (2019a) [20].

Figure 4.

Examples of the dataset Detection (2022).
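The 80/20 split reported above can be checked with a few lines of arithmetic. The image counts are those stated in the text; rounding down with integer division is an assumption, chosen because it reproduces the reported counts.

```python
# Image counts as stated in the text.
usc_color, usc_thermal = 27_000, 2_000
detection_thermal = 3_263

total = usc_color + usc_thermal + detection_thermal
train = total * 80 // 100  # integer arithmetic, rounds down (assumed rule)
test = total * 20 // 100

print(total, train, test)    # 32263 25810 6452
print(total - train - test)  # 1
```

With this rounding rule, one image of the 32,263 is left unassigned, which is consistent with the reported 25,810 and 6452 summing to 32,262.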

For the training stage, we used 250 training epochs, an image size of 720 × 720 pixels, and a batch size of 4. Adam was used as the optimizer with a learning rate of 0.01. These parameters were used to train both our architecture and LSL-Net in order to compare them; both were retrained with the concatenation of the datasets mentioned above, since LSL-Net was originally trained with COCO, which contains, besides UAVs, different objects that we consider not relevant for our purposes. In [8], LSL-Net is compared against other versions of YOLO. The authors show that LSL-Net outperforms these YOLO versions in detecting UAVs, so we compare our architecture directly only with LSL-Net. Table 1 shows, in the first row, the accuracy reported by the authors of [8], denoted as LSL-Net*. The second and third rows show the accuracy on the testing set of the LSL-Net we implemented and of our simplified LSL-Net architecture proposed in this paper. In this table, we can see that our proposed architecture was more accurate than LSL-Net.

Table 1.

The accuracy of the LSL-Net reported in [8] (denoted as LSL-Net*), the accuracy obtained by the LSL-Net we implemented, and the accuracy obtained by our simplified LSL-Net architecture.
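For reference, the training settings described above can be collected in a small configuration object. This is a minimal sketch: the class and field names are hypothetical, the values are the ones reported in the text, and the step count assumes the final partial batch of each epoch is kept.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as reported in the text; field names are hypothetical."""
    epochs: int = 250
    image_size: int = 720        # images resized to 720 x 720 pixels
    batch_size: int = 4
    optimizer: str = "adam"
    learning_rate: float = 0.01
    train_images: int = 25_810


cfg = TrainConfig()
# Assumes the last, smaller batch of each epoch is not dropped.
steps_per_epoch = math.ceil(cfg.train_images / cfg.batch_size)
total_steps = steps_per_epoch * cfg.epochs

print(steps_per_epoch)  # 6453
print(total_steps)      # 1613250
```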

It is important to highlight that the results reported included images in challenging scenarios, such as birds that could be misidentified, as the UAV training images included birds flying. Figure 5 shows examples of drones and birds in the same frame. In these examples, in the first frame (the one on the left side), our simplified LSL-Net architecture detects the bird as a drone due to the distance and the bird’s pose. Conversely, our model correctly detects the drone in the second frame (the one on the right side).

Figure 5.

Examples of UAV detection in images of UAVs flying with birds.

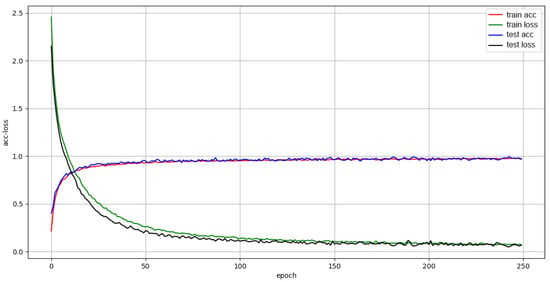

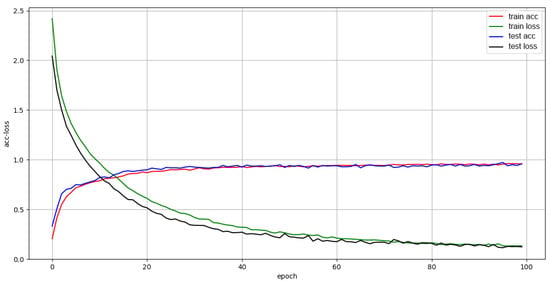

Figure 6 and Figure 7 show the loss and accuracy plots for our proposed simplified LSL-Net architecture and LSL-Net, respectively, showing how the loss decreases and the accuracy increases throughout the epochs without signs of overfitting. These figures show that our proposed simplified LSL-Net architecture behaves similarly to LSL-Net [8] regarding accuracy; however, the simplified LSL-Net architecture’s red and blue lines (in Figure 6) are closer to 1 than those of LSL-Net (see Figure 7). Thus, we can conclude that our proposed architecture achieves similar results to LSL-Net but is smaller, requiring fewer computational resources.

In a second experiment, the performance of both architectures is compared using a statistical analysis of their respective per-frame accuracies on a series of videos containing UAVs. Fifteen different videos were tested; for each video, the accuracy of each frame was obtained, and the mean and standard deviation of all frame accuracies of the video were directly compared. In addition, an ANOVA was performed per video to compare the accuracies of the proposed simplified LSL-Net and the LSL-Net architectures.

Figure 6.

Training results of our proposed simplified LSL-Net architecture.

Figure 7.

LSL-Net training results.
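The per-video statistical comparison used in the second experiment (mean, standard deviation, and one-way ANOVA on per-frame accuracies) can be sketched as follows. The accuracy values are made-up toy numbers for a single hypothetical video, not results from our experiments, and the function computes only the F statistic; converting it to a p-value requires the F distribution, which a statistics library such as SciPy (`scipy.stats.f_oneway`) provides.

```python
from statistics import mean, stdev
from typing import List, Sequence


def one_way_anova_f(groups: Sequence[List[float]]) -> float:
    """F statistic of a one-way ANOVA (between-group over within-group variance)."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Made-up per-frame accuracies for ONE video; not results from the paper.
simplified = [0.97, 0.98, 0.99, 0.98, 0.97, 0.99]
baseline = [0.96, 0.98, 0.97, 0.99, 0.98, 0.97]

print(round(mean(simplified), 4), round(stdev(simplified), 4))
print(round(mean(baseline), 4), round(stdev(baseline), 4))
print(round(one_way_anova_f([simplified, baseline]), 3))
```

A small F statistic (and correspondingly a p-value above 0.05) means the difference between the two group means is small relative to the frame-to-frame variation within each group.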

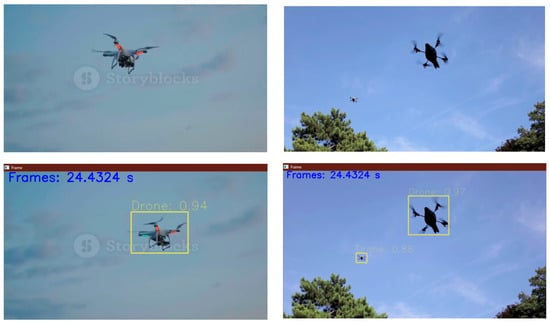

Table 2 shows the mean and standard deviation of the accuracy obtained over all the frames of the 15 videos; the average accuracies obtained by LSL-Net and our proposed architecture are very similar, which shows that our architecture is competitive with LSL-Net in detecting UAVs in real time. Also, the standard deviation obtained with our network is relatively small, so the accuracy is consistent. Table 2 also shows the p-value from the ANOVA performed between the accuracies of both networks; the p-values for all 15 videos are greater than 0.05, so the null hypothesis cannot be rejected, i.e., no statistically significant difference between the accuracies of both architectures was found. Figure 8 shows examples of detection results in videos where UAVs are flying; as seen in these figures, our architecture can detect UAVs well, regardless of whether the UAV is flying at low or medium altitudes. This experiment was performed on a laptop without a graphics card, with a Core i7-3630QM processor and 6 GB RAM. This was the least-resourced device available; nevertheless, a detection speed of up to 24 fps was achieved, which is reasonable in practice.

Table 2.

Mean, standard deviation of the accuracy, and p-value of the fifteen videos used in our experiments.

Figure 8.

Examples of UAV detection in images from videos where UAVs fly at low and medium altitudes.

Finally, we present an experiment comparing our proposed simplified LSL-Net architecture against two recent state-of-the-art architectures, YOLOv11 [22] and MobileNetV3 [23]. We retrained both networks (YOLOv11 and MobileNetV3) with the datasets we used to train our network for a fairer comparison. Table 3 shows the comparison of our work against YOLOv11 and MobileNetV3. Note that YOLOv11 is the best network in all metrics; however, our proposed simplified LSL-Net architecture is the second best, with results close to those of YOLOv11. The performance difference against YOLOv11 is minimal, even though our simplified LSL-Net architecture is a lighter network: YOLOv11 reports 2.6 million parameters in its lightest version, whereas our architecture uses only 1.9 million parameters, roughly 27% fewer. Therefore, our proposed simplified LSL-Net architecture is recommended for systems with low computational power, at only a small cost in accuracy.

Table 3.

Comparison with other models.

5. Conclusions

In this paper, we proposed a modification of the LSL-Net architecture that employs dilated convolutions and is capable of detecting UAVs in real time while maintaining low hardware requirements, so that it can be implemented in more systems without sacrificing detection performance. Our proposed architecture achieves competitive performance compared with LSL-Net in detecting UAVs in real time; after retraining LSL-Net with the dataset used in our experiments, it achieved an accuracy of 0.9678, while our proposal obtained an accuracy of 0.9847, an improvement of 1.69 percentage points, with lower hardware requirements.

This improvement could be obtained because our modification was designed to reduce the size of the architecture for UAV detection and to prioritize the number of frames per second at which detection is performed. In our experiments, on a laptop without a graphics card, with a Core i7-3630QM processor and 6 GB RAM, our architecture achieved 24 fps in detection. In addition, the use of dilated convolutions proved an effective choice to replace the EFM module of LSL-Net, which is in charge of high-level feature extraction, achieving a decrease in the computational requirements of the architecture. Finally, retraining using datasets consisting exclusively of UAV images allowed the network to adjust its weights only for the detection of UAVs, improving the accuracy of our architecture.

We also compared our work against YOLOv11 and MobileNetV3 on different performance metrics. The proposed architecture achieves detection results that are competitive with those of YOLOv11. However, it is a simpler architecture, making it well suited for applications with little computational power, while obtaining results similar to those of YOLOv11, which requires more computational resources.

While our proposed architecture achieves good results, the main limitation is the scarcity of training images for drone detection. In addition, evaluating the proposed architecture in a real-world environment is desirable since, as mentioned, the experiments and tests were conducted with pre-recorded videos of UAVs. Such an evaluation would provide more training images for drone detection and, based on the results achieved, eventually allow us to propose adjustments to the architecture to optimize it further. Another possible direction for future work is to replicate the work presented in this paper with other recent architectures like YOLOv11 and YOLOv12 [24].

Author Contributions

Conceptualization, J.F.M.-T. and J.A.C.-O.; methodology, J.F.M.-T. and J.A.C.-O.; software, N.A.G.-R. and F.D.C.-G.; validation, F.D.C.-G. and N.A.G.-R.; formal analysis, F.D.C.-G., N.A.G.-R., J.F.M.-T. and J.A.C.-O.; investigation, F.D.C.-G. and N.A.G.-R.; data curation, F.D.C.-G. and N.A.G.-R.; writing—original draft preparation, F.D.C.-G. and N.A.G.-R.; writing—review and editing, J.F.M.-T. and J.A.C.-O.; supervision, J.F.M.-T. and J.A.C.-O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

The authors thank SECIHTI and INAOE for the resources provided for the realization of this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fukui, S.; Nishiyama, R.; Iwahori, Y.; Bhuyan, M.K.; Woodham, R.J. Object tracking with improved detector of objects similar to target. Procedia Comput. Sci. 2015, 60, 740–749.

- Taha, B.; Shoufan, A. Machine Learning-Based Drone Detection and Classification: State-of-the-Art in Research. IEEE Access 2019, 7, 138669–138682.

- Al-Qubaydhi, N.; Alenezi, A.; Alanazi, T.; Senyor, A.; Alanezi, N.; Alotaibi, B.; Alotaibi, M.; Razaque, A.; Abdelhamid, A.A.; Alotaibi, A. Detection of Unauthorized Unmanned Aerial Vehicles Using YOLOv5 and Transfer Learning. Electronics 2022, 11, 2669.

- Öztürk, A.E.; Erçelebi, E. Real UAV-Bird Image Classification Using CNN with a Synthetic Dataset. Appl. Sci. 2021, 11, 3863.

- Yavariabdi, A.; Kusetogullari, H.; Cicek, H. UAV detection in airborne optic videos using dilated convolutions. J. Opt. 2021, 50, 569–582.

- Seidaliyeva, U.; Akhmetov, D.; Ilipbayeva, L.; Matson, E.T. Real-Time and Accurate Drone Detection in a Video with a Static Background. Sensors 2020, 20, 3856.

- Avola, D.; Cinque, L.; Diko, A.; Fagioli, A.; Foresti, G.L.; Mecca, A.; Pannone, D.; Piciarelli, C. MS-Faster R-CNN: Multi-Stream Backbone for Improved Faster R-CNN Object Detection and Aerial Tracking from UAV Images. Remote Sens. 2021, 13, 1670.

- Tao, Y.; Zongyang, Z.; Jun, Z.; Xinghua, C.; Fuqiang, Z. Low-altitude small-sized object detection using lightweight feature-enhanced convolutional neural network. J. Syst. Eng. Electron. 2021, 32, 841–853.

- Martinez-Alpiste, I.; Golcarenarenji, G.; Wang, Q.; Alcaraz-Calero, J.M. Search and rescue operation using UAVs: A case study. Expert Syst. Appl. 2021, 178, 114937.

- Jeong, E.; Seo, J.; Wacker, J.P. UAV-aided bridge inspection protocol through machine learning with improved visibility images. Expert Syst. Appl. 2022, 197, 116791.

- Yang, Z.; Lian, J.; Liu, J. Infrared UAV Target Detection Based on Continuous-Coupled Neural Network. Micromachines 2023, 14, 2113.

- AlKhonaini, A.; Sheltami, T.; Mahmoud, A.; Imam, M. UAV Detection Using Reinforcement Learning. Sensors 2024, 24, 1870.

- Sairam, D.; Bhuvaneswari, R.; Chokkalingam, S. UAV Detection for Aerial Vehicles using YOLOv8. In Proceedings of the 2024 International Conference on Integrated Circuits and Communication Systems (ICICACS), Raichur, India, 23–24 February 2024; pp. 1–6.

- Huang, M.; Mi, W.; Wang, Y. EDGS-YOLOv8: An Improved YOLOv8 Lightweight UAV Detection Model. Drones 2024, 8, 337.

- Xu, H.; Ling, Z.; Yuan, X.; Wang, Y. A video object detector with Spatio-Temporal Attention Module for micro UAV detection. Neurocomputing 2024, 597, 127973.

- Bo, C.; Wei, Y.; Wang, X.; Shi, Z.; Xiao, Y. Vision-Based Anti-UAV Detection Based on YOLOv7-GS in Complex Backgrounds. Drones 2024, 8, 331.

- Luo, K.; Luo, R.; Zhou, Y. UAV detection based on rainy environment. In Proceedings of the 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 18–20 June 2021; Volume 4, pp. 1207–1210.

- Zhang, Z.; Tang, L.; Tian, Y.; Pan, Y. A Real-time Visual UAV Detection Algorithm on Jetson TX2. In Proceedings of the 2019 IEEE International Conference on Signal, Information and Data Processing (ICSIDP), Chongqing, China, 11–13 December 2019; pp. 1–4.

- Wang, Y.; Wang, G.; Chen, C.; Pan, Z. Multi-scale dilated convolution of convolutional neural network for image denoising. Multimed. Tools Appl. 2019, 78, 19945–19960.

- Wang, Y.; Chen, Y.; Choi, J.; Kuo, C.C.J. Towards visible and thermal drone monitoring with convolutional neural networks. APSIPA Trans. Signal Inf. Process. 2019, 8, e5.

- Detection. Thermal Dataset. 2022. Available online: https://universe.roboflow.com/detection-0wlpq/thermal-pxgmo (accessed on 19 April 2023).

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

- Tian, Y.; Ye, Q.; Doermann, D. Yolov12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).