Abstract

This study presents a computer-aided diagnostic (CAD) framework that integrates multi-domain features through a hybrid methodology. The system uses several light deep networks (EfficientNetB0, MobileNet, and ResNet-18), which feature fewer layers and parameters, unlike traditional systems that depend on a single, parameter-complex deep network. Additionally, it employs several handcrafted feature extraction techniques. It systematically assesses the diagnostic power of deep features only, handcrafted features alone, and both deep and handcrafted features combined. Furthermore, it examines the influence of combining deep features from multiple CNNs with distinct handcrafted features on diagnostic accuracy, providing insights into the effectiveness of this hybrid approach for classifying lung and colon cancer. To achieve this, the proposed CAD employs non-negative matrix factorization for lowering the dimension of the spatial deep feature sets. In addition, these deep features obtained from each network are distinctly integrated with handcrafted features sourced from temporal statistical attributes and texture-based techniques, including gray-level co-occurrence matrix and local binary patterns. Moreover, the CAD integrates the deep attributes of the three deep networks with the handcrafted attributes. It also applies feature selection based on minimum redundancy maximum relevance to the integrated deep and handcrafted features, guaranteeing optimal computational efficiency and high diagnostic accuracy. The results indicated that the suggested CAD system attained remarkable accuracy, reaching 99.7% using multi-modal features. The suggested methodology, when compared to present CAD systems, either surpassed or was closely aligned with state-of-the-art methods. These findings highlight the efficacy of incorporating multi-domain attributes of numerous lightweight deep learning architectures and multiple handcrafted features.

1. Introduction

Lung and colon cancers are among the leading causes of cancer-related morbidity and death worldwide [1]. According to recent statistics, lung cancer causes almost 1.8 million deaths annually, making it the deadliest cancer globally. In comparison, colon cancer, with an estimated 1.1 million new cases every year [2], is the third most prevalent after lung cancer and is one of the leading causes of cancer-related deaths. Although lung and colon cancers arise in different organs, several studies suggest a substantial link between the two conditions [3,4]. Shared risk factors such as family history, exposure to environmental toxins, and tobacco use may explain this relationship. Additional contributing factors may include widespread inflammation and an immune response mechanism that is either associated with or triggered by another type of cancer. Such relationships highlight the importance of accurate and timely diagnosis for proper treatment planning.

Lung and colon cancers are diagnosed with several imaging modalities, namely computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET) [5]. Although these modalities provide valuable information on the location and size of the tumor and the presence of metastases, their major drawbacks include high cost, radiation exposure, and insufficient resolution to differentiate small tissue anomalies [6]. The gold standard for lung and colon cancer diagnosis is histopathology, which involves microscopic study of stained tissue sections [5,7]. Histopathology is quite accurate in identifying structural and cellular changes suggestive of cancer. Still, manual diagnosis using histopathological images is subjective, labor-intensive, and prone to variation between pathologists, especially when examining complex patterns in large-scale datasets [8]. Thus, automated methods for cancer subtype identification are essential to reduce doctors’ workload [9].

Recent advances in computing have enabled clinicians and physicians to identify various tumors and illnesses through computer-aided diagnostic (CAD) tools. CAD systems have evolved into efficient tools for overcoming the constraints of manual diagnosis [10,11,12]. They can be classified into two primary groups: those based on handcrafted features and those employing deep-learning-based features. CAD techniques utilizing handcrafted features may provide accuracy within an acceptable range [13], typically between 80% and 90%, without necessitating extensive data or being computationally demanding [14]. Feature extraction is crucial in conventional handcrafted-feature-based CAD methods for classifying anomalies in medical imaging [15]. Enhancing diagnostic reliability necessitates the extraction of a varied array of features, encompassing texture, statistical, and shape-based descriptors. Texture features, for example, offer significant insights into the spatial distribution of pixel intensities within an image. Methods such as the gray-level co-occurrence matrix (GLCM) [16] and local binary pattern (LBP) [17], which effectively capture distinct attributes of tissue morphology, are frequently utilized for this purpose. Nevertheless, each of these techniques frequently concentrates on a single characteristic of the image. A shape descriptor, for instance, may inadequately capture essential texture-related information, thereby restricting its efficacy on intricate medical images of the lung and colon.
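To make the two texture descriptors named above concrete, the following is a minimal sketch of a basic 8-neighbour LBP histogram and a single-offset GLCM with three common summary statistics. The neighbourhood, offset, number of gray levels, and the chosen statistics are illustrative assumptions, not the settings used in this study; the function names are hypothetical.

```python
import numpy as np


def lbp_histogram(img):
    """Basic 8-neighbour LBP over interior pixels, as a normalised 256-bin histogram."""
    img = np.asarray(img, dtype=int)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Clockwise neighbour offsets starting at the top-left pixel (assumed ordering).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit wherever the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(int) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()


def glcm(img, levels, dy=0, dx=1):
    """Normalised gray-level co-occurrence matrix for a non-negative offset (dy, dx)."""
    img = np.asarray(img, dtype=int)
    h, w = img.shape
    ref = img[:h - dy, :w - dx]   # reference pixels
    nbr = img[dy:, dx:]           # their neighbours at the given offset
    P = np.zeros((levels, levels))
    np.add.at(P, (ref.ravel(), nbr.ravel()), 1)  # count co-occurring level pairs
    return P / P.sum()


def glcm_features(P):
    """Contrast, energy, and homogeneity of a normalised GLCM."""
    i, j = np.indices(P.shape)
    return {
        "contrast": float(np.sum(P * (i - j) ** 2)),
        "energy": float(np.sum(P ** 2)),
        "homogeneity": float(np.sum(P / (1.0 + (i - j) ** 2))),
    }


# Two toy 6 x 6 "textures": a flat patch and a checkerboard of levels 0 and 3.
flat = np.full((6, 6), 2)
board = 3 * (np.indices((6, 6)).sum(axis=0) % 2)

lbp_flat, lbp_board = lbp_histogram(flat), lbp_histogram(board)
feat_flat = glcm_features(glcm(flat, levels=4))
feat_board = glcm_features(glcm(board, levels=4))
```

On these toy patches the flat image yields zero contrast and a single LBP code, while the checkerboard yields high contrast and two LBP codes, which illustrates how each descriptor summarises one aspect of the local intensity pattern.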

Conversely, deep-learning-based CAD systems have garnered considerable interest owing to their capacity to autonomously learn hierarchical features from unprocessed image data. Like conventional feature extraction methods, deep learning approaches can be employed to derive discriminative attributes from raw image data; in classification tasks, however, deep learning algorithms significantly outperform traditional methods [18]. Nevertheless, certain studies indicate that integrating deep learning features with conventional handcrafted features could enhance diagnostic efficacy [14,19,20,21]. Convolutional neural networks (CNNs) are currently the leading deep learning method in medical imaging research due to their ability to identify complex patterns and achieve high diagnostic accuracy [22]. CNNs have achieved notable success in various medical applications, particularly in X-ray [23], histopathology [24,25], Pap smear [26], and CT imaging. Inspired by these achievements, CNNs have been incorporated into multiple CAD designs for lung and colon cancer identification. These models require substantial quantities of data to prevent overfitting and inadequate generalization. Because labeling histopathological images is difficult, transfer learning (TL) is used to transfer knowledge from a source domain to a target domain and thereby mitigate overfitting. CNNs pre-trained on ImageNet can thus be adapted through TL for cancer diagnosis [27].

This study presents a CAD system that incorporates multi-domain attributes via diverse feature extraction methodologies. The method extracts various spatial deep features from three lightweight CNN models and reduces their dimensionality through non-negative matrix factorization (NNMF). Furthermore, it generates handcrafted features, encompassing temporal statistical attributes through various methods and textural attributes employing GLCM and LBP extractors. These handcrafted features are merged into an integrated ensemble. The deep feature sets derived from the individual CNNs are subsequently integrated with the aggregated handcrafted features. Thereafter, all deep features from the three CNNs are integrated with the fused handcrafted features, followed by the implementation of the minimum redundancy maximum relevance (mRMR) feature selection technique to ascertain the most pertinent features and further diminish dimensionality.

A thorough comparative analysis assesses the diagnostic efficacy of the proposed CAD system. The analysis evaluates (1) the diagnostic efficacy of deep features from each CNN independently, (2) the performance of individual and aggregated handcrafted features, (3) the efficacy of integrating each deep feature set with the aggregated handcrafted features, and (4) the overall performance of deep features from all CNNs amalgamated with the aggregated handcrafted features following mRMR feature selection.

The principal contributions and novelty of the presented CAD approach can be encapsulated in the following manner:

- The creation of an effective CAD structure employing numerous light CNNs characterized by fewer layers and diminished parameters, in conjunction with various handcrafted feature extraction methods. This method differs from current CAD systems, which generally depend on a solitary, parameter-intensive CNN or a unique handcrafted technique.

- The suggested CAD obviates the necessity for pre-segmentation or enhancement procedures, typically mandated by numerous existing CAD systems.

- Incorporation of attributes from multiple categories, such as spatial deep learning and texture-based attributes, instead of relying solely on a single feature extraction technique from a particular field, thus improving classification performance.

- Extraction of texture features, including GLCM and LBP, alongside statistical attributes obtained from both temporal and spatial domains.

- Examination of the effects of integrating various handcrafted types of features with each deep learning feature set individually derived from separate CNNs, an approach infrequently investigated in current CAD systems.

- Integration of deep-learning-generated feature sets and handcrafted features, using non-negative matrix factorization (NNMF) for dimensionality reduction and mRMR for feature selection, to diminish feature dimensionality and reduce training duration.

- This study thoroughly investigates the diagnostic efficacy of using deep features only, handcrafted features only, and a combination of both. The analysis also explores the effects of combining deep features extracted from different CNNs with different handcrafted features on diagnostic accuracy, giving an insight into the efficacy of this hybrid approach in classifying lung and colon cancer.

This study expands on our earlier work [28], which presented a CAD system that uses compact CNNs and CCA-based dimensionality reduction for the classification of lung and colorectal cancer. While both studies focus on using deep learning to classify lung and colon cancer, our current study offers significant methodological improvements:

1. Contrary to our earlier research [28], which leveraged multi-scale deep features obtained from two CNN deep layers to evaluate the effects of using multi-scale features from various deep layers, the current study presents a more varied and hybrid methodology. The present study incorporates handcrafted features, encompassing statistical, textural (GLCM, LBP), and temporal domain features, with attributes obtained from deep CNNs.

2. Our prior research [28] implemented canonical correlation analysis (CCA) for dimensionality reduction, while the present study utilizes NNMF, which offers superior interpretability and improves feature selection.

3. In the present study, the mRMR feature selection technique is employed, which efficiently selects features from both deep and handcrafted domains, thereby minimizing computational complexity and enhancing classification performance.

4. The present study conducts a comprehensive examination of the integration of handcrafted and deep features, a topic not addressed in our prior research. We evaluate the influence of various feature sets both individually and collectively to determine their effect on classification performance. The joint incorporation of handcrafted features and deep learning features is a major advancement. By integrating spatial, textural, and statistical features, the framework captures a more comprehensive and varied amount of information than deep learning features alone.

5. An extensive array of experiments is performed, assessing various CNN architectures, handcrafted feature extraction methods, and machine learning classifiers, providing an enhanced understanding of feature interactions and their impact on diagnostic efficacy. These modifications emphasize the originality of our current research, distinguishing it from previously published studies.

2. Literature Review

The present section features a brief overview of some of the important CAD tools developed for the detection of lung and colon tumors using histopathological images. First, conventional CAD approaches employed in the diagnosis of such cancers are discussed. Subsequently, CAD systems based on deep learning are explored. Lastly, the hybrid approaches that combine classical and deep-learning-based techniques to improve diagnostic accuracy are examined.

2.1. Handcrafted-Features-Based CAD Tools

The CAD technique in [14] extracts texture features using the Haralick method and color attributes via the color histogram algorithm. The extracted attributes were merged to form a cohesive feature set. Thus, three feature sets were studied with the LightGBM (Light Gradient Boosting Machine) classifier: texture, color, and combined features. LightGBM achieved accuracies of 97.72%, 99.92%, and 100% using texture features, color features, and combined texture and color features, respectively. Similarly, Ref. [29] presents a CAD system with two preprocessing methods: unsharp masking and stain normalization. The images were then converted to grayscale, after which feature extraction proceeded. Various feature extraction techniques were utilized, including GLCM, statistical methods, and Hu moment invariants. Afterwards, recursive feature elimination, a feature selection technique, was employed to identify the most effective features. Finally, six machine learning algorithms were employed to classify the images based on the chosen features.

2.2. Deep-Learning-Based CAD Tools

The latest deep learning methodologies have demonstrated encouraging outcomes for the diagnosis of lung and colon cancer from histopathology. Ref. [30] combined a marine predator (MP) method with MobileNet and deep belief networks (DBNs). This CAD leveraged CLAHE for contrast enhancement and MP for optimization, attaining an accuracy of 99.28%. The model demonstrated notable efficacy in managing intricate histopathological characteristics. Ref. [31] adapted the ResNet50 and EfficientNetB0 architectures, utilizing gray wolf optimization and soft voting to attain an accuracy of 98.73%. Ref. [1] employed EfficientNetV2 variants, achieving an accuracy of 99.97% with the large EfficientNetV2 variant, validated via gradient-weighted class activation mapping (Grad-CAM) visualization. Ref. [9] presented a sophisticated framework that integrates ResNet-18 for binary classification and EfficientNet-b4-wide for multi-class classification. It used an optimization procedure that integrates the whale optimization algorithm (WOA) with adaptive β-Hill Climbing and attained an accuracy of 99.96% on the LC25000 dataset. Ref. [32] introduced a compact CNN utilizing multi-scale feature extraction, attaining 99.20% accuracy across five categories, bolstered by explainable AI techniques including Grad-CAM and Shapley additive explanation (SHAP). Ref. [33] introduced ColonNet, which combines dual CNN structures with global–local pyramid patterns and deep residual blocks, surpassing conventional architectures such as VGG and DenseNet. Ref. [34] employed the Al-Biruni Earth radius (BER) technique integrated with ShuffleNet and recurrent networks.

Recent research efforts have concentrated on ensemble methodologies. Ref. [35] integrated three CNNs with a kernel extreme learning machine (KELM), attaining 99.0% accuracy by effectively managing multi-dimensional feature sets. Ref. [36] employed three deep networks for extracting features, exploiting principal component analysis (PCA) and fast Walsh Hadamard transform (FWHT) for dimensionality reduction. The framework attained 99.6% accuracy through discrete wavelet transform (DWT) fusion and SVM classification utilizing merely 510 features. Conversely, Ref. [37] introduced a deep capsule network approach. The above algorithm employed different forms of convolutional layers. The suggested approach attained 99.58% accuracy.

2.3. Hybrid CAD Tools

Ref. [38] combined Inception-ResNetV2 with LBP features, attaining an accuracy of 99.98%, and applied SHAP for improved model interpretability. Likewise, Ref. [6] created a hybrid CAD approach that integrates random forest (RF), SVM, and logistic regression (LR). The CAD employed VGG16 for deep feature extraction in conjunction with the LBP handcrafted feature extraction technique, attaining 99.00% accuracy, 99.00% precision, and 98.80% recall on the LC25000 dataset, indicating strong performance across various metrics. Ref. [19] developed three methodologies, each utilizing dual deep networks and artificial neural networks (ANN) to construct a CAD system. The dual deep models produced a very large number of features; consequently, irrelevant and redundant features were removed through PCA to reduce dimensionality while retaining vital features. The first method performs cancer detection with an ANN using the significant attributes from each of the two deep networks separately. The second method uses an ANN that combines the features of GoogleNet and VGG19. Two variants of this system were implemented: one reduced the dimensions first and then merged the attributes, while the other merged the high-dimensional attributes first and then reduced their dimensions. The final method uses an ANN that combines features from the two deep models with handcrafted features. The ANN attained 99.64% accuracy through the integration of the merged VGG19 attributes with handcrafted features. Masud et al. [39] proposed a classification system for five categories of lung and colon tissues utilizing histopathological images. Initially, the images underwent image sharpening. Subsequently, features were obtained from the images using two different transform-based techniques. These attributes were fed to a custom-optimized deep model. The accuracy of the suggested CAD was found to be 96.33%.

On the other hand, Ref. [40] carried out an in-depth comparison of two classification strategies. The first approach involved the extraction of texture, color, and shape-based attributes, which were then classified using various machine learning techniques. The second approach employed TL for feature extraction, with numerous deep neural networks used to acquire features. The RF technique demonstrated the best performance, reaching 98.60% accuracy using features derived from DenseNet-121.

This study builds upon our previous work [28], which introduced a CAD system leveraging lightweight CNNs and CCA-based dimensionality reduction for lung and colon cancer classification. Similar to our previous study [28], the current work utilizes the LC25000 dataset and evaluates performance using metrics such as accuracy, sensitivity, specificity, and F1-score. Both studies concentrate on the classification of lung and colon cancer through deep learning. However, the present study introduces several key innovations. In contrast to our prior research [28], which concentrated on utilizing multi-scale feature extraction from two CNN deep layers to evaluate the effects of employing multi-scale features from various deep layers, the present study presents a more varied and hybrid methodology. In particular:

1. Our previous research [28] used only CNN-based features extracted from two deep layers; however, the current study emphasizes the value of using diverse feature representation (i.e., textural and statistical features) to create robustness for classification. This provides a solution to the limitation of existing CAD systems having a preference for either textural features or statistical measures.

2. The benefit of combining handcrafted and CNN-based features is demonstrated. The statistical and textural features complement the CNN-based representation in medical image classification. Fusing them allows the classifier to exploit both high-level hierarchical patterns (from the CNNs) and low-level textural and statistical information (from the handcrafted features). This approach addresses the limitations of relying on a single feature type.

3. Our previous work [28] used CCA and ANOVA. This work adopts NNMF for dimensionality reduction and mRMR for feature selection. NNMF is better suited for non-negative feature spaces, which are common in medical imaging. In addition, mRMR targets features that maximize relevance with the least redundancy, and likely offers a more principled approach to feature fusion.

4. This study also conducts a broader comparative analysis, evaluating the diagnostic performance of the following:

- Deep features from separate CNNs.

- Handcrafted features, both separately and combined.

- Combining deep and handcrafted features.

- The impact of feature reduction and selection methods (NNMF and mRMR) upon classification performance.

This study’s hybrid approach enhances present approaches by overcoming significant drawbacks in feature representation, fusion strategies, and computational efficiency. Previous CAD tools for the classification of lung and colon cancer can be categorized into three distinct types: (1) handcrafted-feature-based methods, which depend on manually designed attributes such as texture (GLCM, LBP) or statistical descriptors; (2) deep-learning-based approaches, which utilize hierarchical patterns acquired by CNNs; and (3) hybrid techniques that integrate both methodologies. Although these works have shown encouraging outcomes, they frequently exhibit limited feature scope, inadequate fusion methodologies, or dependence on resource-intensive preprocessing.

Conventional handcrafted-feature-based systems, including those utilizing Haralick texture features or color histograms [14,29], excel at capturing subtle textural and statistical features but are deficient in modeling the intricate hierarchical patterns present in histopathological images. In contrast, deep-learning-focused approaches [1,30,31] prioritize high-level spatial representations from CNNs but may neglect the discriminative local textures essential for distinguishing subtle cancer subtypes. Current hybrid frameworks, including those [6,38] that integrate VGG16 with LBP or Inception-ResNetV2 with handcrafted features, frequently utilize simple fusion methods (e.g., concatenation without feature selection) or depend on older dimensionality reduction methods such as PCA. For example, Ref. [6] achieved 99% accuracy by integrating VGG16 and LBP features; however, it employed PCA for dimensionality reduction, which presumes linear feature relationships and may result in the loss of non-linear discriminative information. Likewise, Ref. [38] integrated LBP with deep features but did not systematically assess the synergistic effects of multi-domain features or utilize advanced selection techniques to reduce redundancy.

Conversely, our hybrid methodology presents three principal innovations that set it apart from current techniques. First, it merges multi-domain attributes encompassing deep spatial representations derived from lightweight CNNs and an extensive array of handcrafted attributes, including GLCM, LBP, and 13 statistical descriptors. This combination captures both primary morphological structures and intricate textural details, addressing the limited feature range of previous studies. Second, the framework utilizes NNMF for dimensionality reduction, which is particularly appropriate for medical imaging data where features, such as pixel intensities and texture values, are intrinsically non-negative. In contrast to PCA or CCA, utilized in previous studies, NNMF preserves interpretability by decomposing data into additive, non-negative components, which aligns more effectively with the interpretability required in clinical contexts. Third, this study employs mRMR for feature selection, a systematic approach that maximizes feature relevance to the target class while minimizing redundancy among features. This differs from traditional methods such as ANOVA or recursive feature elimination, which focus solely on relevance and may retain redundant features that compromise model robustness.

Moreover, our framework obviates preprocessing procedures like image sharpening or stain normalization, which are resource-intensive and susceptible to artifact introduction. The system enhances clinical applicability and preserves diagnostic accuracy by utilizing raw histopathological images modified only through dynamic scaling and spatial transformations. The thorough comparative analysis, which assesses deep features, handcrafted features, and their integration, confirms the superiority of the hybrid approach through empirical evidence. For instance, although the authors of [38] reported an accuracy of 99.98% utilizing Inception-ResNetV2 with LBP, their research did not delineate the contributions of distinct feature types or assess redundancy within the fused set. Our ablation studies reveal that the amalgamation of NNMF-reduced deep features with mRMR-selected handcrafted attributes enhances sensitivity by 2.3% relative to deep-only models and decreases training time by 18% compared to previous hybrid methods, highlighting both performance and efficiency improvements.
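The relevance-versus-redundancy trade-off behind mRMR can be illustrated with a small greedy sketch. It assumes the common "difference" form of the criterion (relevance minus mean redundancy), mutual information estimated from equal-width binning, and synthetic toy data; none of these choices is confirmed as the configuration used in this study, and all function names are hypothetical.

```python
import numpy as np


def mutual_information(x, y):
    """Mutual information (in nats) between two integer-coded vectors."""
    joint = np.zeros((x.max() + 1, y.max() + 1))
    np.add.at(joint, (x, y), 1)          # joint histogram of the two codes
    joint /= joint.sum()
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    nz = joint > 0                        # skip empty cells to avoid log(0)
    return float(np.sum(joint[nz] * np.log(joint[nz] / (px @ py)[nz])))


def discretize(X, bins=5):
    """Equal-width binning of each column into integer codes 0..bins-1."""
    codes = np.empty(X.shape, dtype=int)
    for j in range(X.shape[1]):
        edges = np.linspace(X[:, j].min(), X[:, j].max(), bins + 1)[1:-1]
        codes[:, j] = np.digitize(X[:, j], edges)
    return codes


def mrmr(X, y, n_select, bins=5):
    """Greedy selection maximising relevance minus mean redundancy."""
    Xd = discretize(X, bins)
    n_features = Xd.shape[1]
    relevance = np.array([mutual_information(Xd[:, j], y) for j in range(n_features)])
    selected = [int(np.argmax(relevance))]   # start with the most relevant feature
    while len(selected) < n_select:
        best_j, best_score = None, -np.inf
        for j in range(n_features):
            if j in selected:
                continue
            redundancy = np.mean([mutual_information(Xd[:, j], Xd[:, s]) for s in selected])
            score = relevance[j] - redundancy
            if score > best_score:
                best_j, best_score = j, score
        selected.append(best_j)
    return selected


# Toy data: four classes encoded by two independent "bits".
rng = np.random.default_rng(0)
y = rng.integers(0, 4, size=400)
f0 = (y // 2) + 0.05 * rng.standard_normal(400)   # informative: high bit
f1 = f0.copy()                                    # exact duplicate of f0 (redundant)
f2 = (y % 2) + 0.05 * rng.standard_normal(400)    # informative: low bit
f3 = rng.standard_normal(400)                     # pure noise
X = np.column_stack([f0, f1, f2, f3])

selected = mrmr(X, y, n_select=2)
```

In this toy case a relevance-only ranking could pick both `f0` and its duplicate `f1`, whereas the redundancy penalty steers the second pick to the complementary feature `f2`.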

3. Materials and Methods

3.1. Non-Negative Matrix Factorization

Non-negative matrix factorization (NNMF) is a matrix decomposition technique that factorizes a matrix into two lower-dimensional matrices with non-negative entries [41]. When applied to a matrix V, NNMF breaks it down into matrices W and H, approximating V as WH. This method is particularly effective in contexts where negative values are devoid of physical significance, such as in image interpretation and signal analysis [42].

The core NNMF optimization problem is expressed as follows:

$$\min_{W,\,H} \; \lVert V - WH \rVert_F^2 \quad \text{subject to} \quad W \geq 0,\; H \geq 0$$

where V ∈ ℝ^(m×n) is factorized into W ∈ ℝ^(m×k) and H ∈ ℝ^(k×n), with k generally selected to be less than both m and n [43]. The Frobenius norm ||·||_F quantifies the reconstruction error [44].

This analysis produces two essential elements:

- A basis matrix W (m × k) that encapsulates essential data patterns.

- A coefficient matrix H (k × n) that represents the combination weights.

The non-negativity condition offers two principal benefits. First, it facilitates a straightforward parts-based representation of the data, congruent with human perception [45]. Second, it encourages sparse solutions that accurately represent fundamental data structures, frequently exceeding conventional dimensionality reduction techniques in interpretability [46]. This sparseness aids in recognizing latent structures in large datasets.
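As an illustration of the factorization described in this subsection, the sketch below minimizes the Frobenius reconstruction error with the classic multiplicative update rules. The solver, iteration count, initialization, and toy data are assumptions made for the example; the study does not state which NNMF solver or rank it used. If rows of V are images and columns are deep features, the rows of W can be read as the reduced k-dimensional features (this orientation is also an assumption).

```python
import numpy as np


def nnmf(V, k, n_iter=500, eps=1e-10, seed=0):
    """Approximate a non-negative V (m x n) as W (m x k) @ H (k x n)."""
    V = np.asarray(V, dtype=float)
    if np.any(V < 0):
        raise ValueError("V must contain only non-negative entries")
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, k)) + eps
    H = rng.random((k, n)) + eps
    for _ in range(n_iter):
        # Multiplicative updates: each factor is rescaled by a ratio of
        # non-negative terms, so W and H never leave the non-negative orthant.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy data: a 20 x 12 non-negative matrix of rank 3.
rng = np.random.default_rng(1)
V = rng.random((20, 3)) @ rng.random((3, 12))

W, H = nnmf(V, k=3)
rel_error = np.linalg.norm(V - W @ H) / np.linalg.norm(V)  # relative Frobenius error
```

The small constant `eps` only guards against division by zero; it is not part of the optimization problem itself.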

3.2. LC25000 Dataset Description

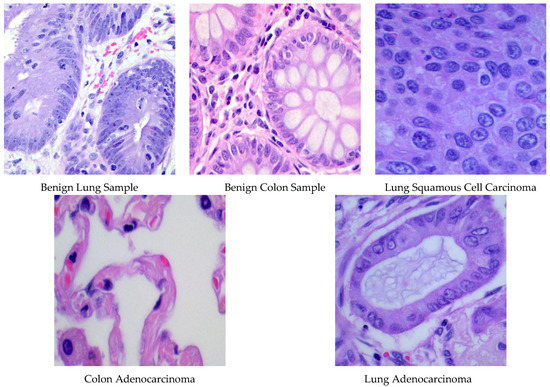

The LC25000 dataset [47], published in 2020, contains histopathological images sourced from James A. Haley Veterans’ Hospital in Tampa, Florida. This extensive compilation includes 25,000 images of lung and colon tissues, evenly distributed among five distinct categories. Every high-resolution color image (dimensions: 768 × 768 pixels) was subjected to hematoxylin and eosin staining and standardized preprocessing, which included rotational augmentation. The database classifies samples into five main categories: two benign tissue types (lung and colon) and three malignant types. Malignant classifications encompass colon adenocarcinoma, which arises from intestinal polyps and accounts for approximately 95% of colorectal cancers. The lung cancer specimens include lung adenocarcinoma, originating in peripheral glandular tissues and representing 60% of lung tumors, and squamous cell carcinoma, arising in bronchial structures and comprising 30% of lung cancer cases. Figure 1 displays representative histopathological images from each tissue class, illustrating the unique morphological features of benign and malignant specimens.

Figure 1. Sample images from the LC25000 dataset.

3.3. Presented CAD

The present research introduces a multi-domain features-based CAD tool that leverages the advantages of both methodologies by integrating deep features derived from several lightweight CNNs with various handcrafted features. In the suggested CAD, deep features are extracted from the pooling layers of three distinct pre-trained CNN structures using TL, and their dimensions are diminished through NNMF. In addition, handcrafted features are extracted by adopting statistical methods alongside textural features including GLCM and LBP. This suggested CAD approach merges the statistical attributes with textural descriptors to assess their respective contributions to diagnostic efficacy. The framework then assesses the impact of combining the handcrafted attributes with each deep learning feature pool that was gathered from different deep networks. After that, the reduced deep features of the three CNNs are merged and then the complete group of deep learning features from the three CNNs is integrated with the aggregated handcrafted features. Subsequently, the mRMR feature selection is employed to determine the most essential features. This study systematically assesses the diagnostic capabilities of utilizing deep features exclusively, handcrafted features separately, and their synergistic combination. This study provides important insights into the efficacy of this hybrid methodology for lung and colon cancer classification by assessing the impact of integrating distinctive handcrafted features with deep attributes from multiple CNNs on diagnostic ability.

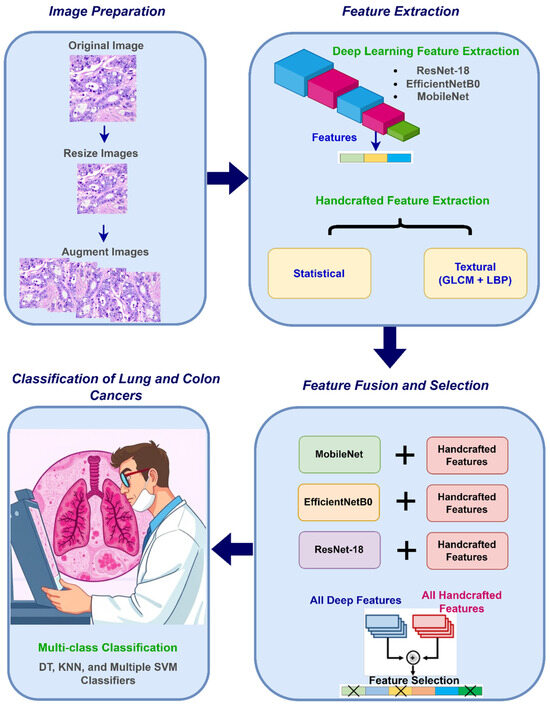

The proposed CAD system is composed of four steps: image preparation, feature extraction, feature fusion and selection, and classification. In the image preparation step, the images are resized to match the input layer dimensions of each CNN, and data augmentation is then applied to enlarge the training dataset and improve model generalization. In the feature extraction step, deep spatial features are extracted using three lightweight CNN architectures, and their dimensions are reduced through NNMF. In parallel, handcrafted features are generated, encompassing temporal statistical features obtained from different methods and textural attributes acquired via GLCM and LBP. In the feature fusion and selection step, the handcrafted features are first merged into an integrated set. Each CNN’s deep features are then combined with the handcrafted attributes separately, after which the handcrafted attributes and the deep attributes of all three CNNs are concatenated. The mRMR feature selection technique is then applied to the hybrid features to choose the most important ones, thereby decreasing dimensionality and optimizing the feature set for classification. Finally, in the classification step, six machine learning algorithms are adopted to recognize lung and colon malignancies. Figure 2 summarizes these stages.

Figure 2. An overview of the stages of the presented CAD.
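The fusion stage described above amounts to column-wise concatenation of per-image feature blocks. The sketch below shows the two fusion levels (each CNN with the handcrafted set, then all CNNs with the handcrafted set) on random placeholder data. The reduced dimension `k`, the GLCM and LBP feature counts, and the helper name `fuse` are hypothetical; only the count of 13 statistical descriptors is taken from the text.

```python
import numpy as np


def fuse(*blocks):
    """Concatenate per-image feature blocks column-wise after checking row counts."""
    n_rows = blocks[0].shape[0]
    for block in blocks:
        if block.ndim != 2 or block.shape[0] != n_rows:
            raise ValueError("every block must be 2-D with one row per image")
    return np.hstack(blocks)


rng = np.random.default_rng(0)
n_images = 8
k = 30  # hypothetical NNMF-reduced dimension per CNN

# Placeholder NNMF-reduced deep features, one block per CNN.
deep = {name: rng.random((n_images, k))
        for name in ("MobileNet", "EfficientNetB0", "ResNet-18")}

# Placeholder handcrafted blocks (GLCM and LBP sizes are illustrative only).
statistical = rng.random((n_images, 13))
glcm_block = rng.random((n_images, 4))
lbp_block = rng.random((n_images, 10))
handcrafted = fuse(statistical, glcm_block, lbp_block)

# Level 1: each CNN's deep features fused with the handcrafted set.
per_cnn = {name: fuse(features, handcrafted) for name, features in deep.items()}

# Level 2: deep features of all three CNNs fused with the handcrafted set,
# which is the matrix that would then be passed to feature selection.
all_fused = fuse(*deep.values(), handcrafted)
```

Keeping the blocks separate until this point makes it straightforward to evaluate each configuration (deep only, handcrafted only, per-CNN hybrid, full hybrid) with the same classifiers.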

3.3.1. Image Preparation

Deep learning models require input images of specific dimensions. Therefore, every image is resized to 224 × 224 pixels with three color channels (RGB). To improve model generalization and reduce overfitting, extensive data augmentation methods are employed. The transformations encompass dynamic scaling (ranging from 0.5 to 2.0 on both axes), bilateral image flipping, spatial translation within ±20 degrees, and shear transformations spanning from −45 to +45 degrees. This augmentation protocol increases the dataset size while maintaining the fundamental morphological features of the lung and colon tissue samples.
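One way to read the augmentation protocol above is as a random affine transform whose parameters are drawn from the stated ranges. The sketch below samples such parameters and composes them into a single homogeneous matrix. Several points are assumptions: "bilateral flipping" is interpreted as independent horizontal and vertical flips with 50% probability, the ±20 translation range is treated as a pixel offset, and the composition order is chosen for illustration. The function names are hypothetical.

```python
import math
import random

import numpy as np


def sample_augmentation(rng):
    """Draw one random parameter set within the ranges quoted in the text."""
    return {
        "scale_x": rng.uniform(0.5, 2.0),
        "scale_y": rng.uniform(0.5, 2.0),
        "flip_x": rng.random() < 0.5,          # assumed: left-right flip, 50% chance
        "flip_y": rng.random() < 0.5,          # assumed: up-down flip, 50% chance
        "shift_x": rng.uniform(-20.0, 20.0),   # assumed unit: pixels
        "shift_y": rng.uniform(-20.0, 20.0),
        "shear_x_deg": rng.uniform(-45.0, 45.0),
        "shear_y_deg": rng.uniform(-45.0, 45.0),
    }


def affine_matrix(p):
    """Compose scale/flip, shear, and translation into one 3x3 homogeneous matrix."""
    sx = -p["scale_x"] if p["flip_x"] else p["scale_x"]
    sy = -p["scale_y"] if p["flip_y"] else p["scale_y"]
    scale = np.array([[sx, 0.0, 0.0], [0.0, sy, 0.0], [0.0, 0.0, 1.0]])
    shear = np.array([
        [1.0, math.tan(math.radians(p["shear_x_deg"])), 0.0],
        [math.tan(math.radians(p["shear_y_deg"])), 1.0, 0.0],
        [0.0, 0.0, 1.0],
    ])
    shift = np.array([
        [1.0, 0.0, p["shift_x"]],
        [0.0, 1.0, p["shift_y"]],
        [0.0, 0.0, 1.0],
    ])
    # Applied right to left: scale (with flips), then shear, then translation.
    return shift @ shear @ scale


params = sample_augmentation(random.Random(0))
matrix = affine_matrix(params)
```

In practice the resulting matrix would be handed to an image-warping routine; that step is omitted here because the study does not specify its tooling.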

3.3.2. Feature Extraction

Deep Feature Extraction

TL provides an effective alternative to training large CNNs from scratch. It harnesses the knowledge of networks pre-trained on large datasets such as ImageNet and tailors them to particular medical classification tasks, which significantly reduces computational demands and development time. Accordingly, three compact deep models (MobileNet, EfficientNetB0, and ResNet-18) are adapted through TL for lung and colon cancer diagnosis using the LC25000 dataset. The adaptation entailed reconfiguring the fully connected layers to match the number of classes in the dataset employed in this study. After fine-tuning these networks with LC25000 images, deep features are obtained from their final pooling layers. This procedure generated 1280-dimensional feature vectors from both MobileNet and EfficientNetB0, whereas ResNet-18 produced 512-dimensional features. This method makes the most of pre-existing hierarchical representations while reducing the computational burden usually linked to deep network training. By relying on established architectures, significant discriminative power is preserved while attaining faster convergence and better generalization. After the deep features were extracted, their dimensions were reduced using NNMF to lower classification complexity.
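The NNMF reduction step can be illustrated with a short sketch. It assumes the deep features are arranged as a non-negative matrix with one row per image, and it uses the classic multiplicative-update rule for the Frobenius objective; the function name `nnmf`, the iteration count, and the random toy data are illustrative assumptions, not the configuration used in this study.

```python
import numpy as np

def nnmf(X, k, n_iter=200, eps=1e-9, seed=0):
    """Factor a non-negative matrix X (n_samples x n_features) as W @ H.

    W (n_samples x k) holds the reduced features for each sample and
    H (k x n_features) holds the non-negative basis vectors.
    Multiplicative updates minimize the Frobenius reconstruction error.
    """
    X = np.asarray(X, dtype=float)
    if (X < 0).any():
        raise ValueError("NNMF requires non-negative input")
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = rng.random((n, k)) + eps
    H = rng.random((k, d)) + eps
    for _ in range(n_iter):
        H *= (W.T @ X) / (W.T @ W @ H + eps)  # update basis
        W *= (X @ H.T) / (W @ H @ H.T + eps)  # update reduced features
    return W, H

# Toy stand-in for deep features (assumption): 60 images x 512 activations.
rng = np.random.default_rng(1)
X = rng.random((60, 10)) @ rng.random((10, 512))

W, H = nnmf(X, k=10)
rel_err = np.linalg.norm(X - W @ H) / np.linalg.norm(X)
print(W.shape, rel_err)
```

In this sketch the rows of `W` play the role of the reduced deep feature vectors that would be passed on to the classifiers, and `k` corresponds to the number of NNMF features.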

Lightweight CNNs are typically defined as models with significantly lower computational complexity, fewer parameters, and fewer layers than standard architectures like DenseNet, ResNet, or Inception models, while retaining acceptable accuracy for the application. Following the definition provided by Howard et al. [48] when discussing MobileNet, architectures with around or fewer than 5 million parameters are classified as lightweight CNNs. More recent work [49] shows that the lightweight category contains models intended for mobile and edge deployment that have far fewer parameters than heavy CNNs, including VGG and AlexNet. Lightweight CNN architectures typically have fewer layers (i.e., 15–28 layers) than deeper architectures like ResNet-50 or ResNet-152 (50 and 152 layers, respectively). Additionally, the overall number of trainable parameters in lightweight CNNs is much smaller. For example, the well-known lightweight architecture MobileNet [48] includes approximately 4.2 million parameters, while ResNet-50 has approximately 25.6 million parameters [50]. Lightweight CNNs also often reduce computational cost while maintaining performance through techniques such as depthwise separable convolutions (as in MobileNet) [48] or compound scaling (as in EfficientNet) [51].

Our proposed system employs several lightweight architectures, namely, MobileNet [48] with 4.2 million parameters, EfficientNetB0 [51] with 5.3 million parameters, and ResNet-18 [50] with 11.7 million parameters. Although ResNet-18 has more parameters than MobileNet and EfficientNetB0, it remains comparatively lightweight relative to deeper ResNet variants, such as ResNet-50 with 25.6 million parameters and ResNet-152 with 60.2 million parameters [18,50]. It is frequently employed as a lightweight benchmark in resource-limited applications. Moreover, MobileNet, ResNet-18, and EfficientNetB0 [51] have 28 [48], 18 [50], and 18 layers, respectively, which is considerably fewer than deeper CNN architectures, including ResNet-50 [50], ResNet-152 [50], Inception [52], Xception [53], and DenseNet-201 [54], which include 50, 152, 48, 71, and 201 deep layers, respectively. Furthermore, MobileNet, ResNet-18, and EfficientNetB0 have fewer parameters than Inception (23.8 million parameters), Xception (22.9 million parameters), and DenseNet-201 (20 million parameters). Therefore, the CNN architectures of the proposed system can be considered “lightweight” because they have a reduced depth (fewer layers) and parameter count relative to traditional CNNs.

Handcrafted Feature Extraction

The feature extraction strategy incorporates various methods to analyze histopathological images of lung and colon tissues. The GLCM analysis quantifies spatial relationships among pixel intensities using metrics such as contrast, correlation, energy, and homogeneity, whereas LBP encodes local pixel associations into binary patterns that represent micro-level texture differences. In addition, statistical features acquired from intensity distributions, including mean, variance, skewness, and kurtosis, complement these descriptors. Together, they provide an elaborate description of the histologic and intensity features of the tissue samples.

- Statistical and Textural Features

Statistical feature extraction is an essential method in biomedical signal and image analysis, facilitating the generation of informative statistical descriptors. The present research exploits an extensive variety of thirteen features, including nine statistical metrics and four texture attributes. The equations delineating the extraction of such attributes are specified in Equations (2)–(14).

A(i, j) denotes the pixel intensity at the i-th row and j-th column of an image, μ represents the mean pixel intensity, and G signifies the total number of gray levels in the image. Furthermore, pr(g) denotes the probability of a pixel exhibiting a particular gray level g, whereas N and M represent the image’s dimensions.
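As an illustration of how such intensity statistics can be computed, the sketch below evaluates four of the moments named earlier (mean, variance, skewness, and kurtosis) over all pixels of a grayscale image. It covers only this subset of the thirteen features, and the population normalization (division by the total number of pixels) and the function name `intensity_moments` are assumptions made for the example.

```python
import math

def intensity_moments(image):
    """Return mean, variance, skewness, and kurtosis of pixel intensities.

    `image` is a list of rows of numeric gray values. Moments use the
    population form, i.e. they divide by the number of pixels.
    """
    pixels = [float(v) for row in image for v in row]
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((v - mean) ** 2 for v in pixels) / n
    std = math.sqrt(variance)
    if std == 0.0:
        # A constant image has no spread; higher moments are set to 0 here.
        return {"mean": mean, "variance": 0.0, "skewness": 0.0, "kurtosis": 0.0}
    skewness = sum(((v - mean) / std) ** 3 for v in pixels) / n
    kurtosis = sum(((v - mean) / std) ** 4 for v in pixels) / n
    return {"mean": mean, "variance": variance,
            "skewness": skewness, "kurtosis": kurtosis}

# Tiny 2 x 2 example image.
stats = intensity_moments([[1, 2], [3, 4]])
print(stats)
```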

- GLCM Textural Features

GLCM is a second-order statistical method used in image analysis to characterize texture. It evaluates the spatial relationships among pixels in an image by counting how often pairs of gray levels co-occur at designated offsets. A co-occurrence matrix provides the joint probability distribution of the gray-level intensities of pixel pairs at a particular distance and direction. The dimensions of the co-occurrence matrix are determined solely by the number of gray levels in the texture, irrespective of the image size. The present study examined four orientations (0°, 45°, 90°, 135°) with the number of gray levels fixed at 8. Four principal textural features were derived from these matrices: contrast, correlation, energy, and homogeneity. These attributes offer significant insights into the spatial distribution of gray-level intensities in an image, facilitating the characterization of texture patterns pertinent to biomedical applications.

where P(i, j) denotes the joint probability obtained from the GLCM. In this context, i and j represent the gray levels of two spatially adjacent pixels, commonly referred to as x and y.
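A minimal sketch of the GLCM computation for the four orientations and 8 gray levels is given below. The feature definitions follow commonly used forms (contrast weighted by (i − j)², homogeneity weighted by 1/(1 + |i − j|)), and the symmetric counting, the unit pixel distance, and all function names are assumptions for illustration rather than the exact settings of this study.

```python
import math

# (row, column) offsets for the four orientations at distance 1 (assumed).
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def quantize(image, levels=8, max_value=255):
    """Map gray values in 0..max_value to integer levels 0..levels-1."""
    return [[min(v * levels // (max_value + 1), levels - 1) for v in row]
            for row in image]

def glcm(image, offset, levels=8):
    """Normalized symmetric co-occurrence matrix P(i, j) for one offset."""
    dr, dc = offset
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count each pair in both directions
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for this offset")
    return [[v / total for v in row] for row in counts]

def glcm_features(P):
    """Contrast, correlation, energy, and homogeneity of a normalized GLCM."""
    cells = [(i, j, P[i][j]) for i in range(len(P)) for j in range(len(P))]
    mu_i = sum(i * p for i, _, p in cells)
    mu_j = sum(j * p for _, j, p in cells)
    var_i = sum((i - mu_i) ** 2 * p for i, _, p in cells)
    var_j = sum((j - mu_j) ** 2 * p for _, j, p in cells)
    denom = math.sqrt(var_i * var_j)
    cov = sum((i - mu_i) * (j - mu_j) * p for i, j, p in cells)
    return {
        "contrast": sum((i - j) ** 2 * p for i, j, p in cells),
        "correlation": cov / denom if denom > 0 else float("nan"),
        "energy": sum(p ** 2 for _, _, p in cells),
        "homogeneity": sum(p / (1 + abs(i - j)) for i, j, p in cells),
    }

def glcm_descriptor(image, levels=8):
    """Four features for each of the four orientations."""
    q = quantize(image, levels)
    return {angle: glcm_features(glcm(q, off, levels))
            for angle, off in OFFSETS.items()}

# Two-row toy image: dark row above a bright row.
descriptor = glcm_descriptor([[0, 0], [255, 255]])
print(descriptor[0], descriptor[90])
```

In the toy image, horizontal neighbors are identical while vertical neighbors differ maximally, so the 0° and 90° matrices give very different contrast and homogeneity values. This is the kind of directional texture information the four orientations are meant to capture.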

- LBP Textural Features

LBP is a frequently used feature extraction technique for texture analysis, recognized for its computational efficiency and versatility in diverse imaging applications. LBP operates by comparing the intensity of each pixel with that of its neighboring pixels within a specified radius and encoding the outcome as a binary pattern [55,56]. For a pixel situated at (x, y), the LBP score is calculated using the following formula:

$$\mathrm{LBP}(x, y) = \sum_{p=0}^{P-1} s\left(I_p - I_c\right)\, 2^{p}$$

where Ic represents the intensity of the central pixel, Ip indicates the intensity of the p-th neighboring pixel, P signifies the total number of neighbors, and s(x) is expressed as a step function:

$$s(x) = \begin{cases} 1, & x \geq 0 \\ 0, & x < 0 \end{cases}$$

The LBP operation produces a binary code for every pixel by contrasting its intensity with that of its neighbors, encoding the outcome into a histogram that functions as a concise depiction of the texture. Histograms are frequently utilized as input attributes for machine learning algorithms to correctly identify texture patterns in medical photos.
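The encoding and histogram steps can be sketched as follows, assuming the basic operator with P = 8 neighbors at radius 1, a fixed clockwise neighbor ordering, and a 256-bin normalized histogram computed over interior pixels only. These choices and the function names are illustrative assumptions, not the exact configuration used in this study.

```python
# Clockwise neighbor offsets (row, column) starting at the top-left pixel.
NEIGHBORS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
             (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(image, r, c):
    """8-bit LBP code of the interior pixel at row r, column c."""
    center = image[r][c]
    code = 0
    for p, (dr, dc) in enumerate(NEIGHBORS):
        # Step function: bit p is set when the neighbor is >= the center.
        if image[r + dr][c + dc] >= center:
            code |= 1 << p
    return code

def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes over interior pixels."""
    hist = [0] * 256
    rows, cols = len(image), len(image[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            hist[lbp_code(image, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else [0.0] * 256

# 4 x 4 toy image with a bright patch in the lower-right corner.
toy = [[10, 10, 10, 10],
       [10, 10, 10, 10],
       [10, 10, 90, 90],
       [10, 10, 90, 90]]
features = lbp_histogram(toy)
print(sum(features), max(features))
```

The resulting histogram is the texture feature vector; its length depends only on the number of neighbors and not on the image size.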

3.3.3. Feature Fusion and Selection

During the feature fusion and selection procedure, the handcrafted attributes obtained through the different methods are first merged into a single unified feature set. This set of handcrafted features is then integrated with the deep features obtained from each of the three CNNs independently, resulting in one hybrid feature set per CNN. Subsequently, the deep features from all three CNNs are combined with the integrated handcrafted features, producing a single hybrid feature representation. The mRMR feature selection method is applied to optimize this feature set and improve its suitability for classification. mRMR identifies the most informative features by maximizing their relevance to the target class while minimizing redundancy within the selected set. Specifically, it seeks features that have high mutual information with the target variable while sharing as little mutual information as possible with the features already chosen. The procedure ranks features according to their relevance and redundancy and then selects a subset that balances both factors. Applying mRMR substantially reduces the dimensionality of the feature space, yielding a feature set suited to efficient and precise classification while reducing the likelihood of overfitting [57].

The selection of mRMR was motivated by its capacity to balance feature relevance against redundancy, which makes it especially appropriate for high-dimensional medical image data. In contrast to conventional methods such as PCA or recursive feature elimination (RFE), which predominantly emphasize dimensionality reduction without specifically addressing feature interrelationships, mRMR favors features that are both highly informative and minimally correlated with one another.

Medical image datasets, particularly those that integrate both deep-learning-derived and handcrafted features, frequently include redundant or less discriminative variables that may adversely affect classification performance. mRMR tackles this issue by prioritizing features according to their mutual information with the target class while reducing redundancy among the chosen features. This method improves model interpretability and boosts classification efficiency by reducing computational complexity while maintaining diagnostic accuracy.

Moreover, in contrast to filter-based selection techniques that evaluate each feature independently, or wrapper methods that are resource-intensive, mRMR strikes a balance by using mutual information to identify a compact and informative subset of features. Considering the varied characteristics of our feature space, which includes deep-learning-generated spatial features as well as manually crafted statistical and textural descriptors, mRMR was an appropriate choice for achieving a more concise but strongly discriminative feature set.
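The greedy relevance-versus-redundancy trade-off can be sketched as below. This is a minimal illustration that assumes features have already been discretized and that uses the difference form of the criterion (relevance minus mean redundancy), which is one of several mRMR variants; the function names and the toy data are hypothetical and do not reproduce the implementation used in this study.

```python
import math
from collections import Counter

def mutual_information(a, b):
    """Mutual information (in nats) between two discrete sequences."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((c / n) * math.log((c * n) / (pa[x] * pb[y]))
               for (x, y), c in pab.items())

def mrmr(features, labels, k):
    """Greedily pick k feature indices by relevance minus mean redundancy.

    `features` is a list of columns, each a list of discrete values.
    """
    relevance = [mutual_information(col, labels) for col in features]
    # Start with the single most relevant feature.
    selected = [max(range(len(features)), key=relevance.__getitem__)]
    while len(selected) < min(k, len(features)):
        best, best_score = None, -math.inf
        for j in range(len(features)):
            if j in selected:
                continue
            redundancy = sum(mutual_information(features[j], features[s])
                             for s in selected) / len(selected)
            score = relevance[j] - redundancy
            if score > best_score:
                best, best_score = j, score
        selected.append(best)
    return selected

# Toy data: feature 1 duplicates feature 0; feature 2 carries new information.
a = [0, 0, 0, 0, 1, 1, 1, 1]
b = [0, 0, 1, 1, 0, 0, 1, 1]
labels = [2 * x + y for x, y in zip(a, b)]
chosen = mrmr([a, list(a), b], labels, k=2)
print(chosen)
```

In the toy example all three features are equally relevant to the labels, but the duplicated feature is fully redundant with the first one, so the selection prefers the complementary feature instead.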

3.3.4. Classification of Lung and Colon Cancer

The classification stage employs a variety of machine learning techniques to categorize the cancer subtypes. The chosen classifiers comprise a decision tree (DT), k-nearest neighbor (KNN), and four SVM variants with linear, medium Gaussian, cubic, and quadratic kernels. Each model relies on a distinct computational approach to multi-class tissue classification. The assessment uses five-fold cross-validation: the dataset is partitioned into five equal folds, and in each round 80% of the data (four folds) is used for training and the remaining 20% (one fold) for testing, rotating until every fold has served as the test set. This validation method ensures a thorough evaluation because each sample takes part in both the training and testing phases, thus yielding dependable performance metrics. A systematic comparison of these algorithms highlights their respective strengths in differentiating histopathological patterns, providing valuable insights for medical image analysis applications.
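The rotation of training and testing folds can be sketched as follows. This is a minimal illustration of plain (non-stratified) five-fold splitting; the shuffling seed, the function name `k_fold_indices`, and the sample count of 25,000 (the nominal size of LC25000) are assumptions made for the example.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of (nearly) equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

splits = list(k_fold_indices(25000, k=5))
for fold_id, (train, test) in enumerate(splits, start=1):
    print(fold_id, len(train), len(test))
```

Each sample appears in exactly one test fold and in the training portion of the other four rounds, which is what gives the 80%/20% split per round.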

4. Experimental Setting

The deep learning models were trained with the following hyperparameters: a learning rate of 0.0001, a batch size of 4, and 5 training epochs. Each CNN was validated every 130 iterations to track the progression of the learning error. Training employed stochastic gradient descent with momentum, retaining the default settings for the other hyperparameters. All experiments were performed in the MATLAB R2022b environment. The capability of the system on the LC25000 dataset was evaluated using several complementary measures: precision (positive predictive value), sensitivity (true positive rate), specificity (true negative rate), F1-score (harmonic mean of precision and recall), accuracy (overall correct predictions), and Matthews correlation coefficient (MCC) for evaluating multi-class performance. Moreover, confusion matrices were created to depict class-specific prediction distributions, while receiver operating characteristic (ROC) curves were created to demonstrate the models’ discriminatory capability at various classification thresholds. These measures, calculated using Equations (21)–(26), allow a thorough examination of the classification system’s efficacy across multiple performance dimensions.

The assessment of machine learning algorithms in medical diagnosis depends on four essential performance indicators obtained from the confusion matrix. A true positive (TP) signifies an accurate identification of the target condition, demonstrating that the classifier effectively recognized a pathological state. A true negative (TN) signifies the correct identification of healthy or normal cases, confirming the classifier’s capacity to rule out disease when it is not present. Classification errors occur in two forms: false positives (FPs), where the algorithm erroneously indicates disease presence in healthy individuals, and false negatives (FNs), which denote undiagnosed pathological conditions. These four metrics constitute the basis for computing critical performance indicators in medical diagnostic systems.
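The way these four counts combine into the reported metrics can be sketched as below, assuming the usual textbook definitions and a confusion matrix whose rows are true classes and whose columns are predicted classes. The one-vs-rest reduction for the multi-class case and all function names are assumptions made for this example.

```python
import math

def _ratio(num, den):
    """Safe division that returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0

def one_vs_rest_counts(cm, k):
    """TP, TN, FP, FN for class k of a square confusion matrix `cm`."""
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                  # class k predicted as another class
    fp = sum(row[k] for row in cm) - tp   # other classes predicted as k
    tn = total - tp - fn - fp
    return tp, tn, fp, fn

def diagnostic_metrics(tp, tn, fp, fn):
    """Precision, sensitivity, specificity, F1-score, accuracy, and MCC."""
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)
    specificity = _ratio(tn, tn + fp)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    accuracy = _ratio(tp + tn, tp + tn + fp + fn)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = _ratio(tp * tn - fp * fn, mcc_den)
    return {"precision": precision, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1,
            "accuracy": accuracy, "mcc": mcc}

# Hypothetical counts, not results from this study.
metrics = diagnostic_metrics(tp=90, tn=95, fp=5, fn=10)
print(metrics)
```

For a multi-class problem such as this one, the per-class counts from `one_vs_rest_counts` can be passed to `diagnostic_metrics` and the results averaged over the classes.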

5. Experimental Results

This section first presents the outcomes of each deep feature set, acquired from each CNN independently, reduced using NNMF, and used to train the six machine learning algorithms. It then presents the outcomes of the same classifiers fed with each handcrafted feature set (GLCM, LBP, and statistical), followed by the results obtained with the combined handcrafted features. Next, the results obtained when each deep feature set is supplied together with the aggregated handcrafted features are reported. Finally, the results after integrating the three deep feature sets from the three CNNs with the fused handcrafted features and applying mRMR feature selection are presented and discussed.

5.1. Deep Features Results

This section provides the results of the individual deep feature sets acquired from each CNN, reduced using NNMF, and fed to the six machine learning models. Table 1 presents an in-depth evaluation of the performance of the presented CAD using deep features derived from EfficientNetB0, MobileNet, and ResNet-18. The features were reduced through NNMF and used in six machine learning classifiers: DT, KNN, linear support vector machine (LSVM), quadratic support vector machine (QSVM), cubic support vector machine (CSVM), and medium Gaussian support vector machine (MGSVM). The results reveal differences in the efficacy of these classifiers across the various feature sets and CNN models, providing significant insights into the system’s diagnostic proficiency.

Table 1.

The classification accuracy (%) of the classification algorithms input with each deep feature set after the reduction using the NNMF technique.

EfficientNetB0 exhibited consistent improvements in accuracy as the number of NNMF features increased. Both QSVM and MGSVM attained the highest accuracy of 98.9% with 50 NNMF features. MGSVM consistently demonstrated high accuracy across all feature sets, emphasizing its reliability in classifying the extracted features. DT exhibited comparatively lower accuracy, starting at 97.0% with 10 features and decreasing as the number of features increased.

MobileNet was identified as the best-performing model among the three CNNs. It consistently surpassed EfficientNetB0 and ResNet-18, attaining a maximum accuracy of 99.4% with 40 NNMF features using MGSVM. Even with as few as 10 NNMF features, the performance remained robust, with QSVM and MGSVM achieving 98.9% accuracy. These findings highlight MobileNet’s proficiency in feature extraction, making it a suitable choice for this CAD system. ResNet-18 also demonstrated commendable performance, attaining its peak accuracy of 99.2% with QSVM and MGSVM using 30 and 40 NNMF features, respectively. Nonetheless, its performance was marginally inferior to that of MobileNet, particularly at larger feature sizes. The DT classifier demonstrated reduced accuracy for ResNet-18, varying from 97.9% with 10 NNMF features to 90.7% with 50 features, indicating its limitations relative to the other classifiers.

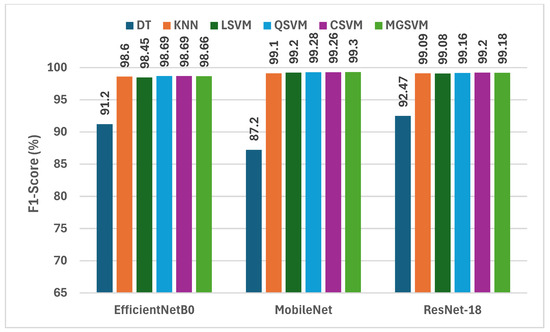

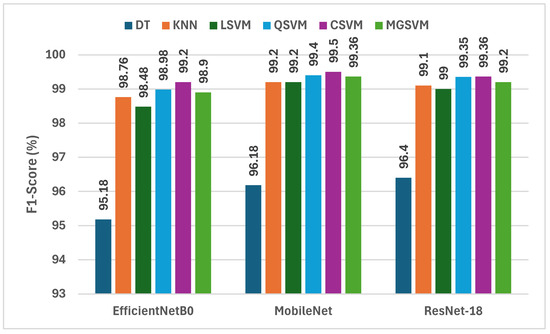

Figure 3 displays the F1-scores attained by the six machine learning classifiers trained on deep features derived from the three compact CNNs after dimensionality reduction via NNMF. Comparing the F1-scores among the three CNNs reveals that MobileNet surpasses EfficientNetB0 and ResNet-18 in the majority of instances. For example, MobileNet attains F1-scores between 87.2% (DT) and 99.30% (MGSVM), illustrating its capacity to capture distinctive attributes for the classification of lung and colon cancer. Likewise, ResNet-18 performs strongly, with F1-scores varying from 92.47% (DT) to 99.20% (CSVM). In contrast, EfficientNetB0 yields marginally lower F1-scores, spanning from 91.20% (DT) to 98.69% (QSVM). The findings indicate that although all three CNNs perform well, MobileNet is the most effective architecture for feature extraction within this framework, especially when paired with powerful classifiers.

Figure 3.

The F1-scores (%) of the classification algorithms trained with each deep feature set after the reduction using the NNMF technique.

Across all three CNNs, CSVM and MGSVM are the classifiers that consistently obtain the highest F1-scores. CSVM attains F1-scores of 98.69%, 99.26%, and 99.20% for EfficientNetB0, MobileNet, and ResNet-18, respectively. MGSVM trails closely, achieving F1-scores of 98.66%, 99.30%, and 99.18%. These outcomes demonstrate that both SVM-based classifiers handle the feature space generated by the NNMF-reduced deep features very well. Conversely, the DT exhibits the lowest performance, yielding F1-scores of 91.20%, 87.20%, and 92.47% for EfficientNetB0, MobileNet, and ResNet-18, respectively. This variation highlights the necessity of choosing suitable classifiers that can exploit the rich information offered by the deep features.

5.2. Handcrafted Features Results

The following part will demonstrate the findings of the classification algorithms utilizing each independent handcrafted feature set as well as the fused feature sets. Table 2 presents a summary of the performance evaluation of the suggested CAD system deploying handcrafted features, both singularly and in conjunction, across six machine learning classifiers. The results demonstrate the diagnostic potential of each feature set and their combinations, offering significant insights into the role of handcrafted features in cancer identification. The statistical features demonstrated solid results among the classifiers, with the greatest accuracy recorded for CSVM at 93.3%, followed by KNN at 92.9%. Nonetheless, DT attained a reduced accuracy of 86.8%, underlining its constraints in comparison to more advanced classifiers. The findings demonstrate the reliability of statistical features in conveying pertinent information for classification, while also highlighting the differing efficacy of classifiers in utilizing these features. The GLCM features exhibited moderate efficacy, with optimal results once more realized through CSVM, achieving an accuracy of 90.7%. KNN achieved an accuracy of 86.9%, whereas DT recorded the lowest accuracy at 79.7%. The findings indicate that although GLCM features aid in the classification process, their independent efficacy is constrained relative to statistical features. LBP features demonstrated marginally superior performance compared to GLCM, with CSVM attaining the highest accuracy of 94.0%, succeeded by QSVM at 92.1%. DT exhibited the lowest performance at 77.2%, suggesting that although LBP features offer significant insights into texture, they necessitate sophisticated classifiers to attain elevated diagnostic accuracy.

Table 2.

The classification accuracy (%) of the classification models fed with each handcrafted feature set and the combined feature sets.

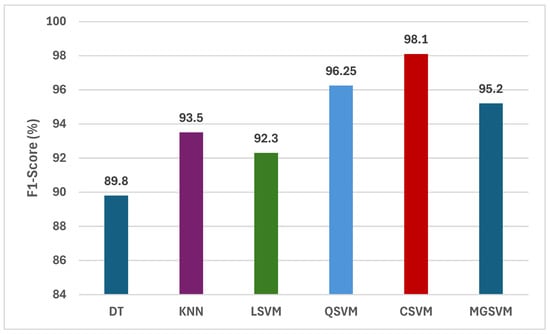

The combination of the three distinct feature sets—statistical, GLCM, and LBP—yielded substantial enhancements for all classifiers. The integrated features attained a maximum accuracy of 98.1% with CSVM, succeeded by 96.3% with QSVM and 95.2% with MGSVM. The results demonstrate the beneficial effects of integrating various feature sets, emphasizing the importance of multi-domain feature aggregation in improving classification efficacy. Significantly, DT demonstrated enhanced accuracy of 89.8% with the integration of combined features, highlighting the advantages of feature fusion even for less complex classifiers.

Figure 4 illustrates the F1-scores attained by the six machine learning classifiers trained on the merged handcrafted feature sets, encompassing statistical, GLCM, and LBP features. CSVM attains the highest F1-score at 98.14%, followed by QSVM at 96.25% and MGSVM at 95.20%. These findings demonstrate that the nonlinear SVM-based classifiers are particularly proficient in handling the feature space generated by combining the statistical, GLCM, and LBP features. The KNN follows with an F1-score of 93.50%. Conversely, DT and LSVM show comparatively lower F1-scores, recorded at 89.80% and 92.30%, respectively. This difference highlights the necessity of choosing suitable classifiers that can efficiently exploit the information offered by the combined handcrafted features.

Figure 4.

The F1-scores (%) of the classification algorithms trained with the combined handcrafted feature sets.

5.3. Hybrid Features Results

The outcomes of merging every deep feature collection with the aggregate handcrafted attributes that are fed into the machine learning classifiers will be shown in this section. Table 3 presents an assessment of the presented CAD system’s capability by integrating deep features from EfficientNetB0, MobileNet, and ResNet-18 with the aggregated handcrafted features. The results emphasize the advantages of combining handcrafted features with deep features, demonstrating enhanced classification accuracy in the majority of configurations relative to the exclusive use of deep features. The incorporation of handcrafted attributes in EfficientNetB0 markedly enhanced accuracy for simpler classifiers such as DT, elevating it from 90.1% to 95.2%. The KNN, LSVM, QSVM, and MGSVM classifiers exhibited only slight enhancements, with QSVM attaining 99.0% accuracy and CSVM achieving the highest accuracy of 99.2%. The incorporation demonstrated the efficacy of hybrid features in enhancing consistency as well as efficiency throughout different classifiers.

Table 3.

The classification accuracy (%) of the classification algorithms trained with each deep feature set combined with the fused handcrafted features compared to using each deep feature set alone.

MobileNet exhibited strong performance both individually and in conjunction with handcrafted features. The incorporation of handcrafted features raised the accuracy of DT from 88.6% to 96.2%, highlighting the significance of merging features for less complex models. The peak accuracy was attained with CSVM, achieving 99.5% using the hybrid feature set, closely followed by QSVM and MGSVM at 99.4%. These findings demonstrate the efficacy of MobileNet’s deep features when strengthened by the additional information gathered from handcrafted features. The incorporation of handcrafted features in ResNet-18 raised the DT accuracy from 92.7% to 96.4%, demonstrating the efficacy of feature fusion. Although the improvements for the other classifiers were not as pronounced, the QSVM and CSVM classifiers attained an accuracy of 99.4% when employing the hybrid feature set. The accuracy of MGSVM remained steady at 99.2%, indicating the reliability of ResNet-18’s feature extraction capabilities.

Critical insights from these findings indicate that the combination of deep features with handcrafted features regularly boosts classification accuracy, especially for DT. The enhancements for classifiers like QSVM and CSVM, although more modest, emphasize the valuable contribution of handcrafted features in optimizing the classification process. Of the three deep networks, MobileNet demonstrated the greatest overall accuracy when integrated with handcrafted features, affirming its exceptional adaptability for this hybrid methodology. The results illustrate the effectiveness of integrating deep features with handcrafted features to enhance the diagnostic performance of the CAD system. The findings highlight the significance of multi-domain feature integration, offering a robust mechanism for enhancing the precision and dependability of lung and colon cancer identification in biomedical informatics applications.

Figure 5 shows the F1-scores obtained by the six machine learning classifiers using deep features taken from the three lightweight CNNs (EfficientNetB0, MobileNet, and ResNet-18) in conjunction with the fused handcrafted features. Comparing the F1-scores of the three CNNs shows that MobileNet achieves the highest F1-scores more frequently than EfficientNetB0 or ResNet-18. For example, the F1-scores for MobileNet ranged from 96.18% (DT) to 99.50% (CSVM), which indicates its strong capability to capture discriminative features for lung and colon cancer classification. ResNet-18 obtained competitive F1-scores, from 96.4% (DT) to 99.36% (CSVM). EfficientNetB0 obtained marginally lower F1-scores, from 95.18% (DT) to 99.20% (CSVM). These outcomes indicate that all three CNNs performed very well; however, MobileNet was the most effective architecture for feature extraction in the hybrid approach for lung and colon cancer classification.

Figure 5.

The F1-scores (%) of the classification algorithms trained with each deep feature set combined with the fused handcrafted features.

CSVM and MGSVM consistently exhibit the highest F1-scores across all three CNNs in the classifiers list. CSVM is able to achieve F1-scores of 99.20%, 99.50%, and 99.36% for EfficientNetB0, MobileNet, and ResNet-18, respectively, while MGSVM closely follows with F1-scores of 98.90%, 99.36%, and 99.20%. This observation suggests that both SVM-based classifiers are effective in dealing with the high-dimensional feature space created from the fusion of deep and handcrafted features. In contrast, DT performs least effectively, resulting in F1-scores of 95.18%, 96.18%, and 96.40% for EfficientNetB0, MobileNet, and ResNet-18, respectively. This disparity highlights the need for appropriate classifiers that can take advantage of the wealth of information represented by the hybrid feature set.

5.4. Outcomes of Feature Selection

The following paragraphs present and analyze the outcomes of the classification algorithms developed using the integrated deep learning features from the three CNNs and the aggregated handcrafted features following the application of mRMR feature selection. Table 4 shows the classification accuracy of these machine learning models. The experimental findings illustrate the effectiveness of integrating deep learning attributes of three CNNs with handcrafted attributes, succeeded by mRMR feature selection. The performance evaluation of various feature set lengths demonstrates continual enhancements in classification accuracy with a rise in the number of attributes, ultimately stabilizing at elevated feature counts. The DT classifier exhibited the least impressive performance across all classifiers, yet it attained commendable accuracy rates between 92.7% with 10 features and 97.3% with 100–110 features. This incremental enhancement indicates that the DT classifier benefits from added discriminatory attributes, although its performance enhancements cease after 60 attributes, sustaining roughly 97% accuracy.

Table 4.

The classification accuracy (%) of the classifiers constructed using the fused deep learning features of the three CNNs and the combined handcrafted attributes after using the mRMR feature selection.

The KNN classifier demonstrated enhanced performance, increasing from 94.8% with 10 attributes to 99.7% with 100–110 variables. Significant enhancements were noted when augmenting from 10 to 20 variables (94.8% to 98.3%), and from 20 to 30 variables (98.3% to 98.9%), demonstrating the classifier’s proficient use of the chosen feature sets. All SVM variants exhibited strong performance across various feature set sizes. The LSVM attained accuracies between 94.8% and 99.6%, whereas the QSVM demonstrated marginally superior performance, achieving 99.7% accuracy with 100 variables. The CSVM exhibited comparable proficiency, attaining 99.7% accuracy with 90 features and sustaining this performance with an increased number of features. The MGSVM repeatedly exhibited strong performance, achieving a maximum accuracy of 99.7%, comparable to other SVM variants.

A significant observation is the diminishing returns in accuracy beyond 80–90 features for all classifiers. This indicates that although the feature selection process successfully identifies the most pertinent attributes, there is an optimal size for the feature set above which extra attributes contribute negligibly to classification performance. The findings demonstrate that integrating deep learning features from various CNNs with handcrafted features and selecting among them via mRMR yields a resilient feature set that attains high classification accuracy across diverse classifier models.

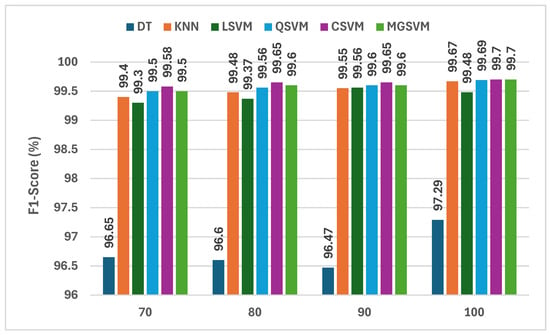

Figure 6 shows the F1-scores of the six machine learning classifiers trained using the fused deep learning features from the three lightweight CNNs together with the handcrafted features, following mRMR feature selection. The CSVM classifier attains the best F1-score, 99.70%, with 100 selected features, which indicates that it handles the feature space created by fusing deep learning and handcrafted features very well. The MGSVM classifier is equally capable, with F1-scores of 99.60% and 99.70% using 90 and 100 features, respectively. The KNN classifier also performs well, with F1-scores between 99.40% and 99.67% as the number of features increases. The DT classifier exhibits relatively lower performance, with F1-scores ranging from 96.65% to 97.29%, reflecting its limited ability to exploit the information offered by the hybrid feature set.

Figure 6.

The F1-scores (%) of the classifiers constructed using the fused deep learning features of the three CNNs and the combined handcrafted attributes after using the mRMR feature selection.

One notable trend in Figure 6 is the increase in F1-score as the number of selected features grows. For instance, the F1-score for CSVM improved from 99.58% with 70 features to 99.70% with 100 features, indicating that assembling the most relevant feature set is essential, specifically when using mRMR. Because mRMR removes redundant or noisy features and retains the most discriminative ones, the classifiers probably generalize better. Overall, the F1-scores were high across all classifiers, demonstrating the general applicability and robustness of the proposed hybrid approach, which builds on the strengths of both deep learning and traditional feature extraction methods, especially for CSVM and MGSVM.
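To illustrate how mRMR trades relevance against redundancy, the following is a minimal greedy sketch. It rests on assumptions that the text does not confirm: features are already discretized, mutual information is estimated from empirical counts, and the score is the difference form (relevance minus mean redundancy). The function names and toy data are hypothetical, and this is not the implementation used in this study.

```python
from collections import Counter
from math import log


def mutual_information(x, y):
    """Mutual information (in nats) between two equal-length discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum((c / n) * log(c * n / (px[a] * py[b])) for (a, b), c in pxy.items())


def mrmr_select(columns, labels, k):
    """Greedily pick k column indices, maximizing relevance to the labels
    minus mean redundancy with the columns already selected."""
    relevance = [mutual_information(col, labels) for col in columns]
    # Start from the single most relevant feature.
    selected = [max(range(len(columns)), key=relevance.__getitem__)]
    while len(selected) < min(k, len(columns)):
        best, best_score = None, float("-inf")
        for j in range(len(columns)):
            if j in selected:
                continue
            redundancy = sum(
                mutual_information(columns[j], columns[s]) for s in selected
            ) / len(selected)
            score = relevance[j] - redundancy
            if score > best_score:
                best, best_score = j, score
        selected.append(best)
    return selected


# Toy data: four classes; f0 and f1 are identical copies of the "high bit",
# f2 is the "low bit". All three are equally relevant, but f1 is redundant.
labels = [0, 1, 2, 3, 0, 1, 2, 3]
f0 = [0, 0, 1, 1, 0, 0, 1, 1]
f1 = list(f0)
f2 = [0, 1, 0, 1, 0, 1, 0, 1]
print(mrmr_select([f0, f1, f2], labels, k=2))  # -> [0, 2]
```

In the toy example the duplicate feature is skipped in favour of the complementary one, which is the behaviour that lets a compact selected set retain most of the discriminative information.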

Table 5 presents a detailed assessment of the classifiers built on the fused deep features from the three CNNs and the combined handcrafted features after mRMR feature selection. The evaluated performance metrics are sensitivity, specificity, precision, F1-score, and MCC. The DT classifier attained a sensitivity of 97.29%, specificity of 99.32%, precision of 97.29%, F1-score of 97.29%, and MCC of 96.61%. These values show that the DT classifier identifies true positive cases reliably and with high precision; nonetheless, its performance is marginally inferior to that of the other classifiers, especially in sensitivity and MCC. The KNN classifier achieved sensitivity, specificity, precision, F1-score, and MCC of 99.67%, 99.92%, 99.67%, 99.67%, and 99.59%, respectively. These metrics indicate high accuracy and reliability, with few false positives and false negatives. Its performance ranks among the highest of all assessed classifiers, making it a strong option for the classification task.

Table 5.

Performance indicators (%) of the classifiers constructed using the fused deep learning features of the three CNNs and the combined handcrafted attributes after utilizing the mRMR feature selection.

The LSVM classifier demonstrated robust performance, achieving a sensitivity of 99.48%, specificity of 99.89%, precision of 99.48%, F1-score of 99.48%, and MCC of 99.36%. Its high specificity and precision demonstrate its efficacy in correctly identifying true negative instances while sustaining high overall accuracy. The QSVM classifier attained a sensitivity of 99.68%, specificity of 99.92%, precision of 99.68%, F1-score of 99.68%, and MCC of 99.59%. These results are comparable to those of the KNN classifier, demonstrating the QSVM's reliability in classification tasks. Its high sensitivity and specificity highlight its capacity to differentiate precisely between positive and negative cases. The CSVM classifier achieved sensitivity, specificity, precision, F1-score, and MCC of 99.70%, 99.92%, 99.70%, 99.70%, and 99.62%, respectively. These are among the highest metrics observed, demonstrating accurate classification with minimal errors. The MGSVM classifier achieved sensitivity, specificity, precision, F1-score, and MCC of 99.70%, 99.92%, 99.70%, 99.70%, and 99.60%, respectively. The results indicate that the MGSVM classifier is both efficient and trustworthy, with metrics closely matching those of the CSVM classifier.
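The indicators above can all be derived from a multi-class confusion matrix. The following is a minimal sketch, assuming one-vs-rest counts per class followed by macro averaging; the text does not state which averaging scheme was used, so this choice, the function name `macro_metrics`, and the toy matrix are assumptions for illustration only.

```python
from math import sqrt


def macro_metrics(cm):
    """Macro-averaged one-vs-rest metrics from a square confusion matrix
    (rows = true class, columns = predicted class)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                         # class k predicted as something else
        fp = sum(cm[r][k] for r in range(n)) - tp    # other classes predicted as k
        tn = total - tp - fn - fp
        sens = tp / (tp + fn) if tp + fn else 0.0
        spec = tn / (tn + fp) if tn + fp else 0.0
        prec = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
        denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
        mcc = (tp * tn - fp * fn) / denom if denom else 0.0
        per_class.append((sens, spec, prec, f1, mcc))
    names = ("sensitivity", "specificity", "precision", "f1", "mcc")
    return {name: sum(c[i] for c in per_class) / n for i, name in enumerate(names)}


# Hypothetical 3-class matrix, not taken from Table 5 or Figure 7.
toy_cm = [[50, 0, 0],
          [0, 48, 2],
          [0, 3, 47]]
for name, value in macro_metrics(toy_cm).items():
    print(f"{name}: {100 * value:.2f}%")
```

Under this scheme the F1-score of each class is the harmonic mean of its precision and sensitivity, which is why the three values tend to move together in the reported results.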

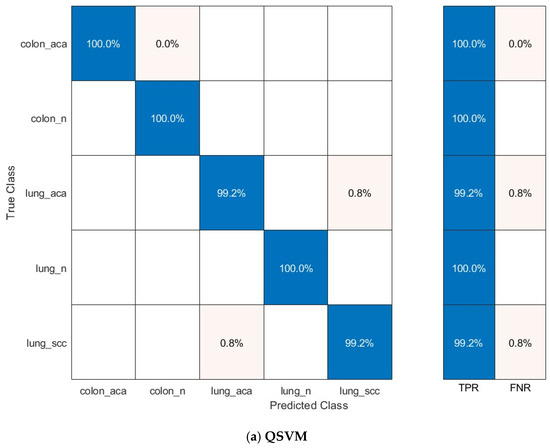

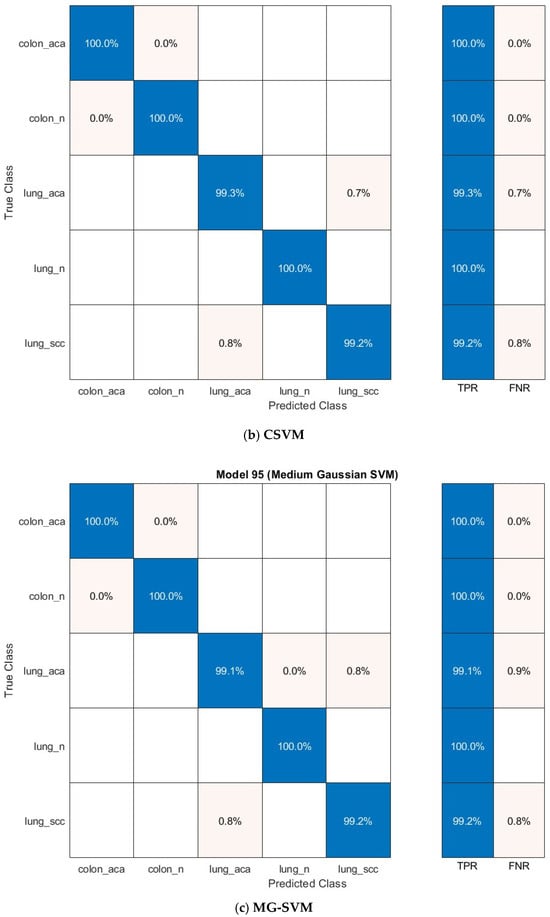

The confusion matrices for the top-performing classification models (Q-SVM, C-SVM, and MG-SVM) were examined to assess their per-class accuracy. Figure 7 illustrates these matrices, showing the proportions of correct and incorrect predictions for every cancer subcategory. Colon adenocarcinoma, colon benign cells, and lung benign tumor were identified without error, yielding a sensitivity of 100% for these classes across all three classifiers. In contrast, the lung squamous carcinoma and lung adenocarcinoma subtypes were the most commonly misclassified categories across the three models.

Figure 7.

The confusion matrix of the classification models utilizing the optimally chosen deep variables subsequent to the application of the mRMR technique on the integrated deep features of the three deep networks and combined handcrafted features: (a) Q-SVM, (b) C-SVM, (c) MG-SVM.

Furthermore, receiver operating characteristic (ROC) curves for the Q-SVM, C-SVM, and MG-SVM classifiers, which exhibited superior performance, are shown in Figure 8. These graphs plot sensitivity against one minus specificity, offering a visual depiction of classifier efficacy. AUC values approaching one signify highly effective classification. Figure 8 shows that the AUC is one for all three classifiers, demonstrating their remarkable accuracy. These results support the conclusion that the proposed CAD system provides a highly precise, impartial, and economical methodology for detecting tumors.

Figure 8.

The ROC curve of the SVM classification model exploiting the optimally selected deep variables subsequent to the application of the mRMR technique on the integrated deep features of the three deep networks and combined handcrafted features: (a) Q-SVM, (b) C-SVM, (c) MG-SVM.
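The AUC summarized by these ROC curves can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The following is a minimal sketch of that rank-based computation for a single one-vs-rest class; the function name `roc_auc` and the toy scores are hypothetical, and the pairwise loop is chosen for clarity rather than speed.

```python
def roc_auc(scores, is_positive):
    """AUC as the fraction of (positive, negative) pairs in which the
    positive sample has the higher score; ties count as one half."""
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy scores for one class versus the rest (hypothetical values).
print(roc_auc([0.9, 0.8, 0.2, 0.1], [True, True, False, False]))  # -> 1.0
print(roc_auc([0.9, 0.2, 0.8, 0.1], [True, True, False, False]))  # -> 0.75
```

An AUC of one therefore means that every positive sample is ranked above every negative sample for that class, even if the decision threshold itself still produces a few errors.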

6. Discussion

The present study introduces a hybrid CAD approach that combines deep-learning-derived attributes from three compact CNNs with handcrafted attributes, subsequently employing mRMR feature selection for the classification of lung and colon cancer. Tables 1–5 and Figures 3–8 cumulatively illustrate the effectiveness of the suggested approach in improving diagnostic accuracy, reliability, and efficiency. This section consolidates the principal findings and offers insights into the efficacy of the suggested approach.

Table 1 presents the independent performance of deep features derived from EfficientNetB0, MobileNet, and ResNet-18 when used with six classifiers. MobileNet consistently surpassed the other models, reaching the highest classification accuracy of 99.4% with the MG-SVM classifier. This performance demonstrates MobileNet's proficiency in feature extraction. The results indicate that reducing the number of attributes to 30–40 using NNMF improved performance, with diminishing returns beyond this range. Table 2 assesses the classifiers using both individual handcrafted feature sets and their combination. Statistical features alone performed strongly, attaining 93.3% accuracy with CSVM. Integrating statistical, GLCM, and LBP features markedly enhanced classification accuracy for all classifiers, with CSVM achieving 98.1% accuracy. This improvement highlights the complementary nature of multi-domain handcrafted features and their significance in attaining high diagnostic precision. The combination of deep features with handcrafted features, shown in Table 3, exhibited the complementary advantages of hybrid feature sets. Incorporating handcrafted features enhanced the performance of all classifiers, especially simpler models such as DT, whose accuracy increased from 90.1% to 95.2% for EfficientNetB0. MobileNet with CSVM attained the highest accuracy of 99.5%, demonstrating the benefit of feature fusion.

Table 4 examines the effect of mRMR feature selection on the hybrid features in more detail. The results reveal steady improvements as the number of selected attributes rises, with performance levelling off at higher feature counts. Despite having the worst overall performance, DT attained a respectable 97.3% accuracy with 100–110 attributes. The other classifiers, such as KNN, QSVM, CSVM, and MGSVM, regularly achieved accuracy above 99.5%, highlighting the efficiency of mRMR in optimizing feature sets. The measures in Table 5 demonstrate the overall efficacy of the classifiers. KNN, QSVM, CSVM, and MGSVM were the most reliable models, reaching sensitivity, specificity, precision, and F1-scores above 99.6%, with MCC surpassing 99.5%. These metrics demonstrate the robustness and precision of the suggested hybrid methodology.

Figure 7 shows the confusion matrices of the highest-performing models. These matrices demonstrate a remarkably high count of correctly classified instances for both lung and colon cancer categories, with negligible misclassifications. Correct predictions predominate along the diagonal of each matrix, highlighting the models' proficiency in distinguishing between the classes. Misclassified instances remained consistently few across all classifiers, underscoring the efficacy of the proposed hybrid feature integration and selection strategy. These insights indicate that the system is well suited to practical diagnostic applications, providing both accuracy and dependability. Moreover, the ROC curves in Figure 8, exhibiting an AUC value of 1 for QSVM, CSVM, and MGSVM, confirm the classifiers' outstanding accuracy and reliability.

The results collectively demonstrate that the fusion of deep learning attributes from various CNNs with handcrafted features, alongside the implementation of mRMR feature selection, establishes a robust and efficient diagnostic system. The hybrid method effectively utilizes the complementary advantages of deep and manually crafted features, attaining superior classification performance. Furthermore, this study emphasizes the significance of feature selection in diminishing dimensionality and improving model generalization. These findings offer substantial insights into the capabilities of hybrid methodologies in medical informatics, especially concerning automated cancer diagnosis.

The suggested CAD framework, which incorporates various feature extraction techniques and utilizes sophisticated dimensionality reduction and feature selection processes, inherently heightens computational complexity. The hybrid strategy, integrating deep learning features with handcrafted attributes, necessitates greater processing time and memory resources than models that depend exclusively on deep features. To address this, this study leverages compact CNN structures (MobileNet, EfficientNetB0, and ResNet-18) and implements NNMF for feature reduction, thereby substantially decreasing the dimensionality of the extracted features prior to classification. Furthermore, this study employs the mRMR method to minimize redundant features, enhancing computational efficiency while maintaining classification efficacy.

Concerning dataset bias, the suggested research employs the LC25000 dataset, a recognized benchmark for the classification of lung and colon cancer. This dataset exhibits a balanced distribution among various cancer subtypes; however, possible biases may emerge from discrepancies in staining methodologies, scanning conditions, or the institutional origins of histopathological specimens. These aspects may influence the generalizability of our model to additional datasets from various medical centers. To mitigate this, this study employed comprehensive data augmentation techniques, such as scaling, flipping, translation, and shearing, to improve the model’s resilience to variations in image acquisition. Moreover, our feature selection methodology mitigates the impact of dataset-specific artifacts by emphasizing the most discriminatory and pertinent features across various instances.

The proposed hybrid approach, which combines spatial deep features with handcrafted statistical and textural features, improves classification accuracy while retaining computational efficiency. This makes it an attractive option for clinical implementation, where automated histopathological analysis can support pathologists' diagnoses of lung and colon cancer. Another advantage is that the system relies on lightweight CNN architectures and efficient feature reduction and selection methods, allowing it to be used in resource-constrained settings, such as smaller health systems, or incorporated into telemedicine models. Further, the incorporation of handcrafted features, such as statistical, GLCM, and LBP attributes, means that the framework retains both low-level textural patterns and high-level spatial representations, thereby increasing its robustness and adaptability to various clinical contexts. The proposed system could also be used as part of a large-scale cancer screening program, supporting earlier detection and timely treatment in communities with limited access to specialized pathologists.

6.1. Comparisons with Previous CADs

The proposed CAD scheme was compared with existing state-of-the-art systems for classifying the cancer subcategories of the LC25000 dataset, as detailed in Table 6. The comparison highlights the efficacy of the proposed method, which fuses deep features from the three deep networks with handcrafted features and then applies feature reduction through NNMF and feature selection via mRMR. This integration achieved a classification accuracy of 99.7%, sensitivity of 99.7%, specificity of 99.92%, precision of 99.7%, and an F1-score of 99.70%, exceeding or closely matching the top-performing approaches described in the literature.

Table 6.

A performance comparison of the latest CAD models for the detection of cancers leveraging the LC25000 database.