Eyes of the Future: Decoding the World Through Machine Vision

Abstract

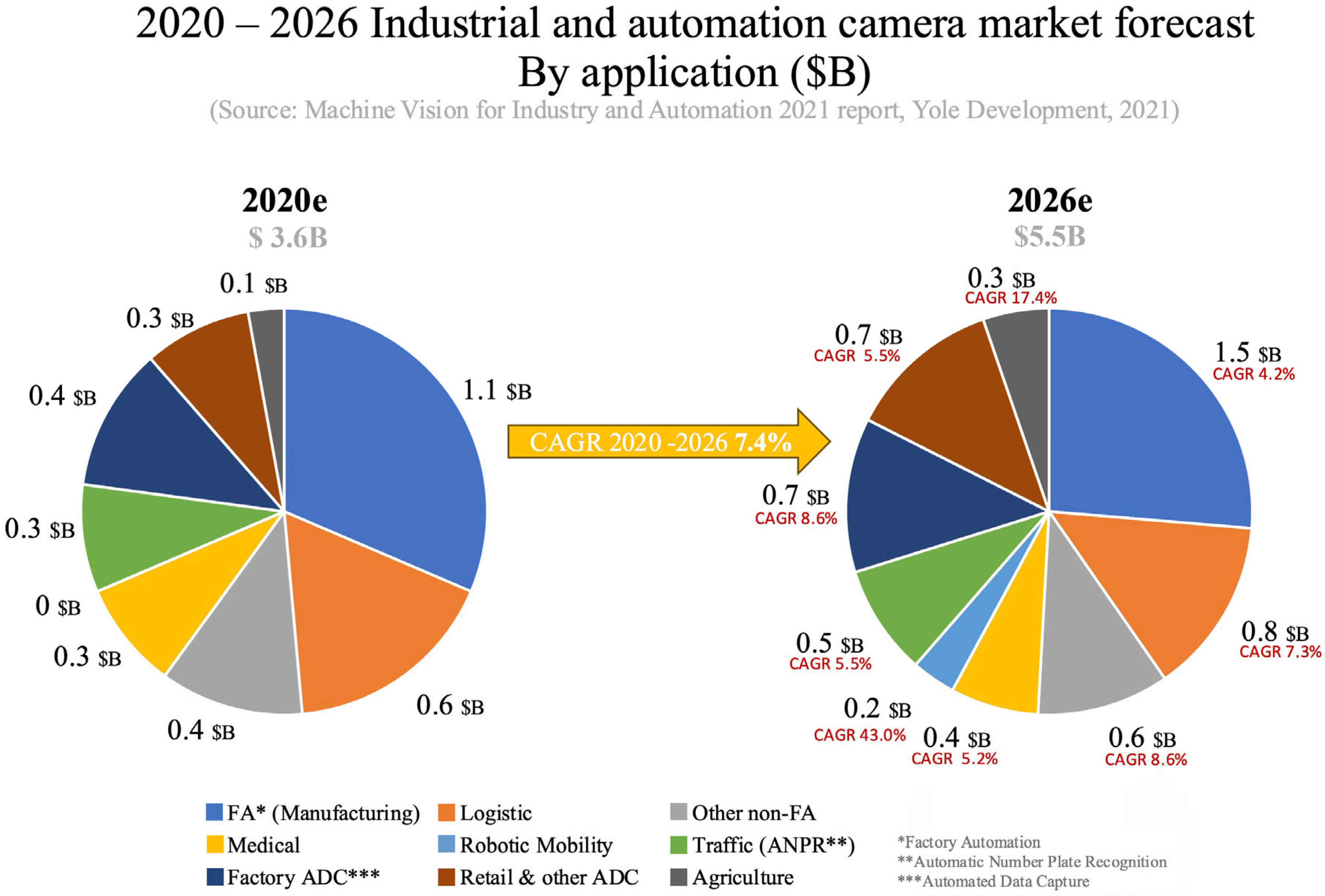

1. Introduction

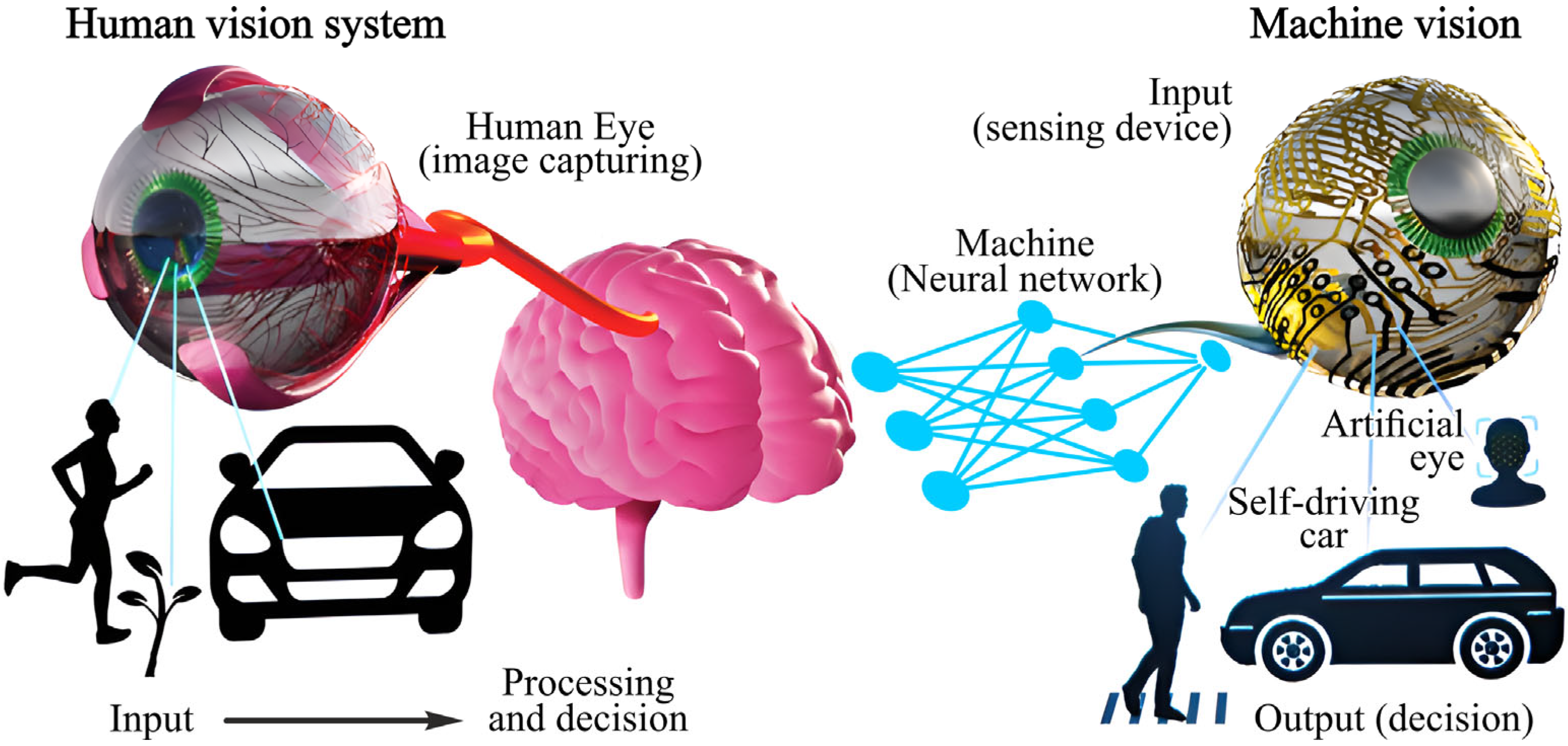

2. Fundamentals of MV

2.1. Basic Components of MV

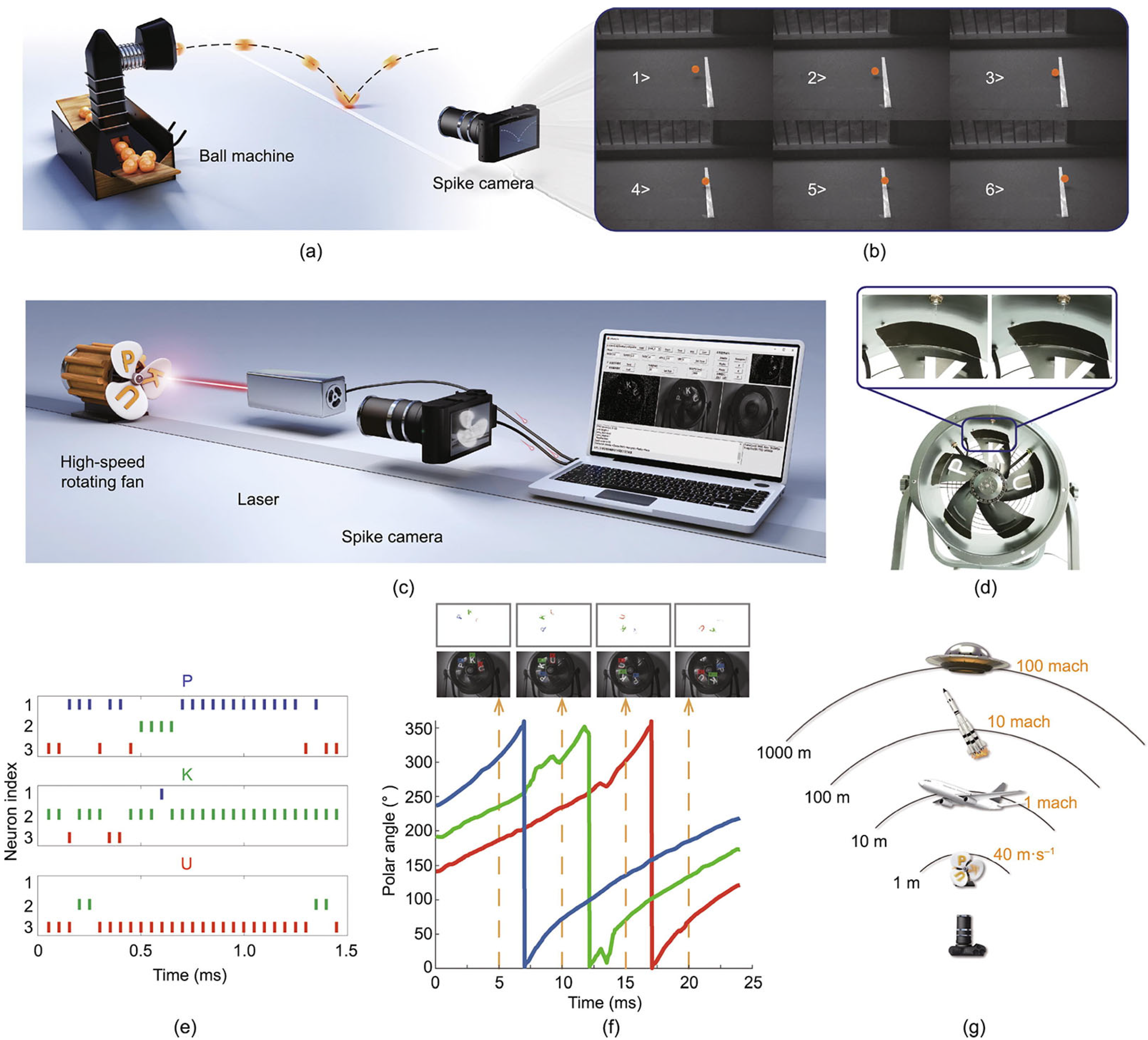

2.1.1. Cameras

2.1.2. Sensors

2.1.3. Lighting

2.1.4. Software

2.2. Comparison with Human Vision

2.3. Role of AI and DL in MV

2.3.1. Feature Extraction and Classification

2.3.2. Pattern Recognition and Anomaly Detection

2.3.3. Autonomous Decision-Making

2.3.4. Adaptive Learning

2.3.5. Edge Computing and Real-Time Processing

3. Applications of MV

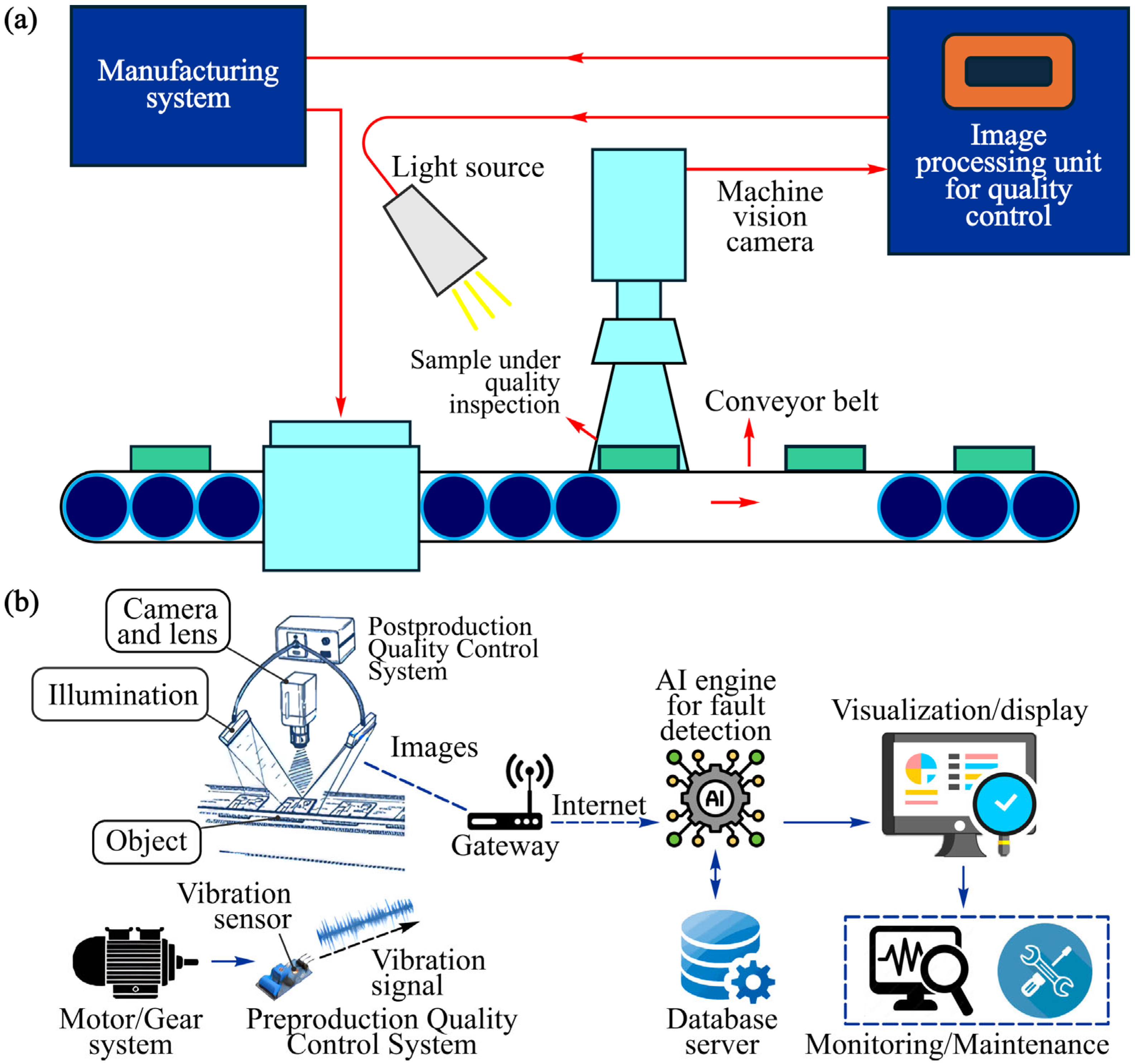

3.1. Industrial Automation and Quality Control

3.2. Medical Imaging and Diagnostics

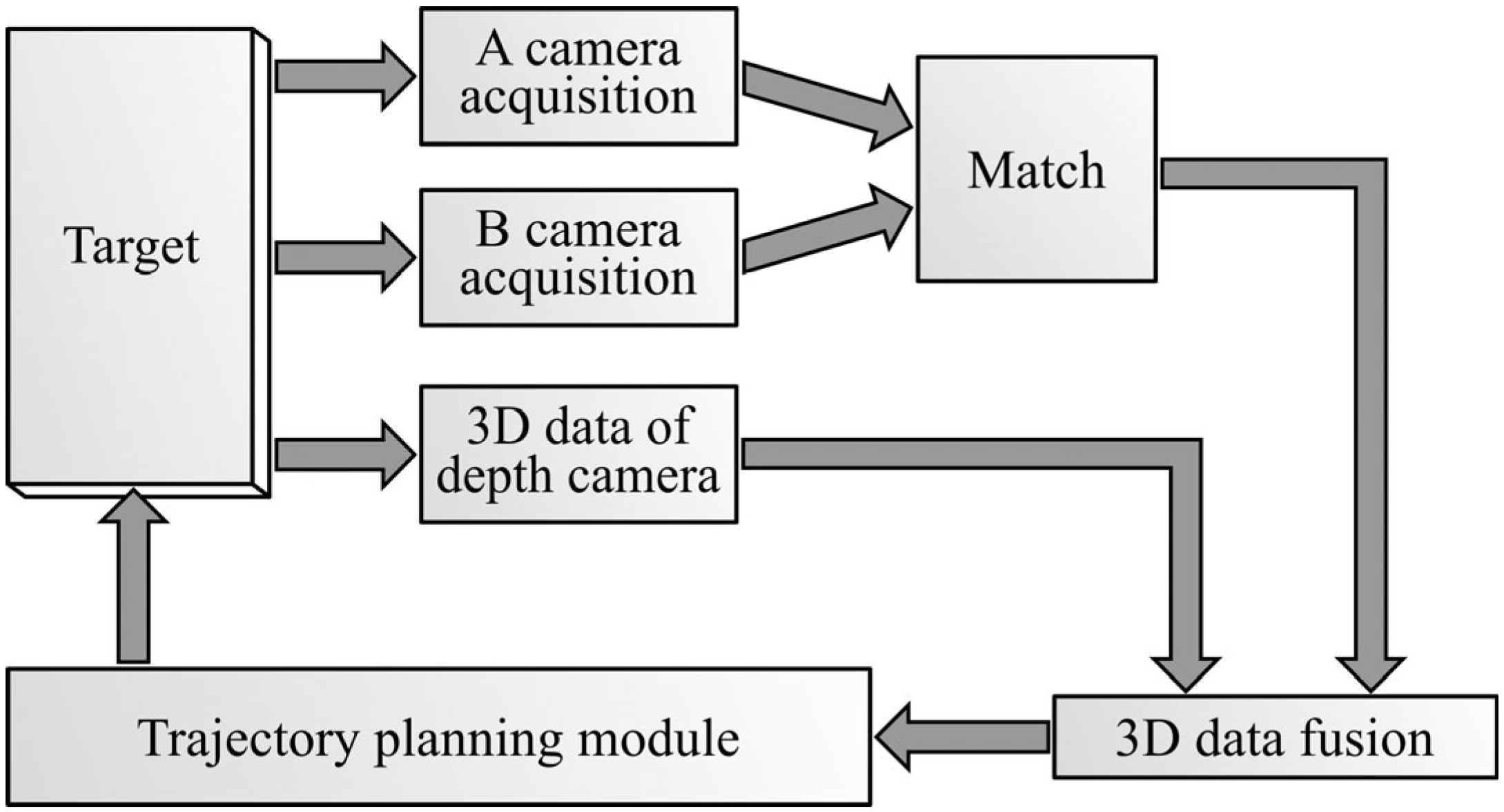

3.3. Autonomous Vehicles and Robotics

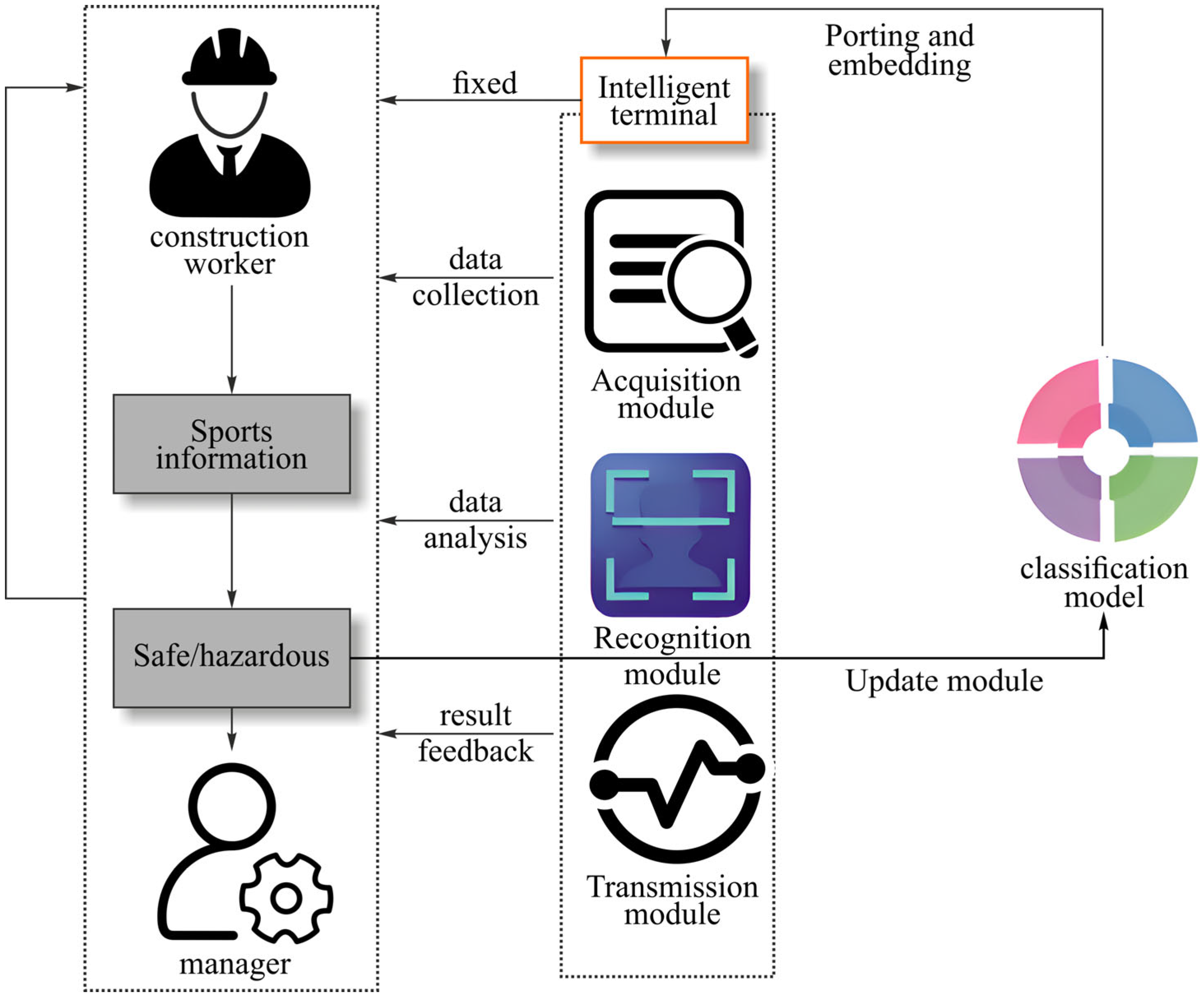

3.4. Security and Surveillance

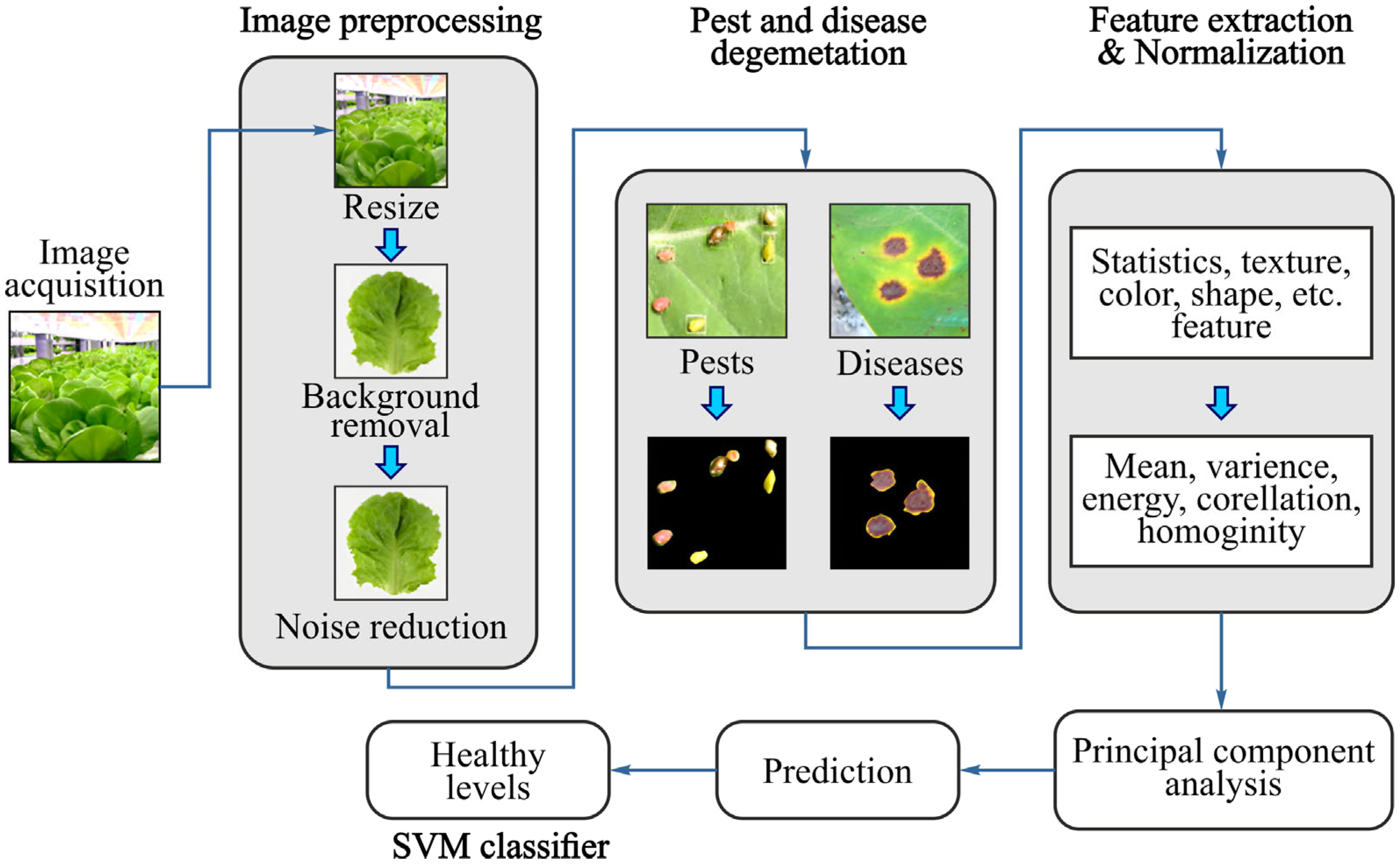

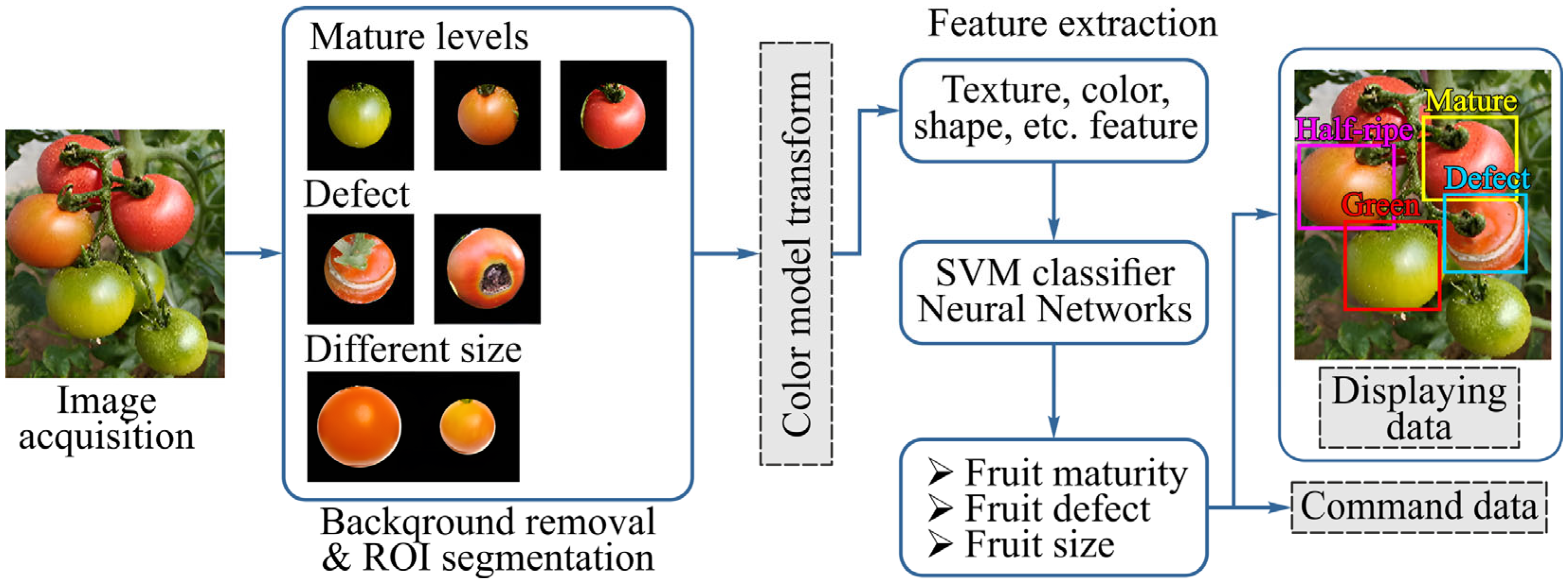

3.5. Agriculture and Environmental Monitoring

4. Future Trends and Research Directions

4.1. Short Term (1–3 Years): Explainability and Robustness

4.2. Mid Term (3–7 Years): Neuromorphic Computing and Efficient Learning

4.3. Long Term (7+ Years): Quantum and Hybrid Paradigms

4.4. Synthesis

5. Challenges and Limitations

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |

| CNNs | Convolutional neural networks |

| CV | Computer vision |

| DL | Deep learning |

| ML | Machine learning |

| MV | Machine vision |

References

- History of Machine Vision. Available online: https://mv-center.com/en/history-of-machine-vision/ (accessed on 15 February 2025).

- Zhao, R.; Yang, L. Research and Development of Machine Vision Algorithm Performance Evaluation System in Complex Scenes. J. Phys. Conf. Ser. 2023, 2562, 012022. [Google Scholar] [CrossRef]

- Heyrman, B.; Paindavoine, M.; Schmit, R.; Letellier, L.; Collette, T. Smart camera design for intensive embedded computing. Real-Time Imaging 2005, 11, 282–289. [Google Scholar] [CrossRef]

- Shi, Y.; Raniga, P.; Mohamed, I. A Smart Camera for Multimodal Human Computer Interaction. In Proceedings of the IEEE International Symposium on Consumer Electronics, St Petersburg, Russia, 28 June–1 July 2006. [Google Scholar]

- Lee, K.F.; Tang, B. Image Processing for In-vehicle Smart Cameras. In Proceedings of the IEEE International Symposium on Consumer Electronics, St Petersburg, Russia, 28 June–1 July 2006. [Google Scholar]

- Kitcher, P. Marr’s Computational Theory of Vision. Philos. Sci. 1988, 55, 1–24. [Google Scholar] [CrossRef]

- Machine Vision: 9 Important Aspects to See Beyond Human Limitations. Available online: https://julienflorkin.com/technology/computer-vision/machine-vision/ (accessed on 15 February 2025).

- Javaid, M.; Haleem, A.; Singh, R.P.; Ahmed, M. Computer vision to enhance healthcare domain: An overview of features, implementation, and opportunities. Intell. Pharm. 2024, 2, 792–803. [Google Scholar] [CrossRef]

- Palanikumar, K.; Natarajan, E.; Ponshanmugakumar, A. Chapter 6—Application of machine vision technology in manufacturing industries—A study. In Machine Intelligence in Mechanical Engineering, 1st ed.; Palanikumar, K., Natarajan, E., Ramesh, S., Paulo Davim, J., Eds.; Woodhead Publishing: Cambridge, UK, 2024; Volume 1, pp. 91–122. [Google Scholar]

- Is Machine Vision Surpassing the Human Eye for Accuracy? Available online: https://belmonteyecenter.com/is-machine-vision-surpassing-the-human-eye-for-accuracy/ (accessed on 2 April 2025).

- Kurada, S.; Bradley, C. A review of machine vision sensors for tool condition monitoring. Comput. Ind. 1997, 34, 55–72. [Google Scholar] [CrossRef]

- Charan, A.; Karthik Chowdary, C.; Komal, P. The Future of Machine Vision in Industries-A systematic review. In Proceedings of the IOP, Conf Ser: Mater Sci Eng, London, UK, 14 July 2022. [Google Scholar]

- Mascagni, P.; Alapatt, D.; Sestini, L.; Altieri, M.S.; Madani, A.; Watanabe, Y.; Alseidi, A.; Redan, J.A.; Alfieri, S.; Costamagna, G.; et al. Computer vision in surgery: From potential to clinical value. Npj Digit. Med. 2022, 5, 163. [Google Scholar] [CrossRef]

- Varoquaux, G.; Cheplygina, V. Machine learning for medical imaging: Methodological failures and recommendations for the future. Npj Digit. Med. 2022, 5, 48. [Google Scholar] [CrossRef]

- Esteva, A.; Chou, K.; Yeung, S.; Naik, N.; Madani, A.; Mottaghi, A.; Liu, Y.; Topol, E.; Dean, J.; Socher, R. Deep learning-enabled medical computer vision. Npj Digit. Med. 2021, 4, 5. [Google Scholar] [CrossRef]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef]

- Toolan, C.; Palmer, K.; Al-Rawi, O.; Ridgway, T.; Modi, P. Robotic mitral valve surgery: A review and tips for safely negotiating the learning curve. J. Thorac. Dis. 2021, 13, 1971–1981. [Google Scholar] [CrossRef]

- Gajjar, H.; Sanyal, S.; Shah, M. A comprehensive study on lane detecting autonomous car using computer vision. Expert. Syst. Appl. 2023, 233, 120929. [Google Scholar] [CrossRef]

- Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer Vision for Autonomous Vehicles: Problems, Datasets and State of the Art. Found. Trends ®Comput. Graph. Vis. 2020, 12, 1–308. [Google Scholar] [CrossRef]

- The Combined Power of Machine Vision Technology and Video Management Systems. Available online: https://www.computar.com/blog/the-combined-power-of-machine-vision-technology-and-video-management-systems (accessed on 2 April 2025).

- Karthikeyan, R.; Karthik, S.; Saurav Menon, M. Vision based Intelligent Smart Security System. In Proceedings of the International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA), Coimbatore, India, 8–9 October 2021. [Google Scholar]

- Sivarai, D.; Rathika, P.D.; Vaishnavee, K.R.; Easwar, K.G.; Saranyazowri, P.; Hariprakash, R. Machine Vision based Intelligent Surveillance System. In Proceedings of the International Conference on Intelligent Systems for Communication, IoT and Security (ICISCoIS), Coimbatore, India, 9–11 February 2023. [Google Scholar]

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. In Proceedings of the ICLR, San Diego, CA, USA, 7–9 May 2015. [Google Scholar]

- Machine Vision a Growing Market Driven by Industrial and Automation Applications. Available online: https://www.yolegroup.com/press-release/machine-vision-a-growing-market-driven-by-industrial-and-automation-applications/ (accessed on 15 February 2025).

- Javaid, M.; Haleem, A.; Singh, R.P.; Rab, S.; Suman, R. Exploring impact and features of machine vision for progressive industry 4.0 culture. Sens. Int. 2022, 3, 100132. [Google Scholar] [CrossRef]

- Wu, W.-Q.; Wang, C.-F.; Han, S.-T.; Pan, C.-F. Recent advances in imaging devices: Image sensors and neuromorphic vision sensors. Rare Met. 2024, 43, 5487–5515. [Google Scholar] [CrossRef]

- Sharma, I.; Vanshika. Evolution of Neuromorphic Computing with Machine Learning and Artificial Intelligence. In Proceedings of the IEEE 3rd Global Conference for Advancement in Technology (GCAT), Bangalore, India, 7–9 October 2022. [Google Scholar]

- Gill, S.S.; Buyya, R. Transforming Research with Quantum Computing. J. Econ. Technol. 2026, 4, 1–8. [Google Scholar] [CrossRef]

- Villalba-Diez, J.; Ordieres-Meré, J.; González-Marcos, A.; Larzabal, A.S. Quantum Deep Learning for Steel Industry Computer Vision Quality Control. IFAC-Pap. 2022, 55, 337–342. [Google Scholar] [CrossRef]

- Viéville, T.; Clergue, E.; Enciso, R.; Mathieu, H. Experimenting with 3D vision on a robotic head. Robot. Auton. Syst. 1995, 14, 1–27. [Google Scholar] [CrossRef]

- AI at the Edge: Transforming Machine Vision into Reality. Available online: https://www.intellectyx.com/ai-at-the-edge-transforming-machine-vision-into-reality/ (accessed on 14 February 2025).

- Zhong, S.; Liu, Y.; Chen, Q. Visual orientation inhomogeneity based scale-invariant feature transform. Expert. Syst. Appl. 2015, 42, 5658–5667. [Google Scholar] [CrossRef]

- Vardhan, A.H.; Verma, N.K.; Sevakula, R.K.; Salour, A. Unsupervised approach for object matching using Speeded Up Robust Features. In Proceedings of the Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 10–12 October 2015. [Google Scholar]

- Gao, Q.; Lim, S.; Jia, X. Hyperspectral Image Classification Using Convolutional Neural Networks and Multiple Feature Learning. Remote Sens. 2018, 10, 299. [Google Scholar] [CrossRef]

- Tempelaere, A.; De Ketelaere, B.; He, J.; Kalfas, I.; Pieters, M.; Saeys, W.; Van Belleghem, R.; Van Doorselaer, L.; Verboven, P.; Nicolaï, B.M. An introduction to artificial intelligence in machine vision for postharvest detection of disorders in horticultural products. Postharvest Biol. Technol. 2023, 206, 112576. [Google Scholar] [CrossRef]

- Matsuzaka, Y.; Yashiro, R. AI-Based Computer Vision Techniques and Expert Systems. AI 2023, 4, 289–302. [Google Scholar] [CrossRef]

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapakis, E. Deep Learning for Computer Vision: A Brief Review. Comput. Intell. Neurosci. 2018, 2018, 7068349. [Google Scholar] [CrossRef]

- Khan, A.I.; Al-Habsi, S. Machine Learning in Computer Vision. Procedia Comput. Sci. 2020, 167, 1444–1451. [Google Scholar] [CrossRef]

- Deng, F.; Huang, J.; Yuan, X.; Cheng, C.; Zhang, L. Performance and efficiency of machine learning algorithms for analyzing rectangular biomedical data. Lab. Investig. 2021, 101, 430–441. [Google Scholar] [CrossRef] [PubMed]

- Dhanush, G.; Khatri, N.; Kumar, S.; Shukla, P.K. A comprehensive review of machine vision systems and artificial intelligence algorithms for the detection and harvesting of agricultural produce. Sci. Afr. 2023, 21, e01798. [Google Scholar] [CrossRef]

- Kääriäinen, T.; Seppä, J. 3D camera based on laser light absorption by atmospheric oxygen at 761 nm. Opt. Express 2024, 32, 6342–6349. [Google Scholar] [CrossRef] [PubMed]

- Shults, R.; Levin, E.; Aukazhiyeva, Z.; Pavelka, K.; Kulichenko, N.; Kalabaev, N.; Sagyndyk, M.; Akhmetova, N. A Study of the Accuracy of a 3D Indoor Camera for Industrial Archaeology Applications. Heritage 2023, 6, 6240–6267. [Google Scholar] [CrossRef]

- Huang, T.; Zheng, Y.; Yu, Z.; Chen, R.; Li, Y.; Xiong, R.; Ma, L.; Zhao, J.; Dong, S.; Zhu, L.; et al. 1000× Faster Camera and Machine Vision with Ordinary Devices. Engineering 2023, 25, 110–119. [Google Scholar] [CrossRef]

- Yang, Y.; Meng, X.; Gao, M. Vision System of Mobile Robot Combining Binocular and Depth Cameras. J. Sens. 2017, 2017, 4562934. [Google Scholar] [CrossRef]

- Sergiyenko, O.; Tyrsa, V.; Flores-Fuentes, W.; Rodriguez-Quiñonez, J.; Mercorelli, P. Machine Vision Sensors. J. Sens. 2018, 2018, 3202761. [Google Scholar] [CrossRef]

- Silva, C.A.d.S.; Paladini, E.P. Smart Machine Vision System to Improve Decision-Making on the Assembly Line. Machines 2025, 13, 98. [Google Scholar] [CrossRef]

- Gierecker, J.; Schoepflin, D.; Schmedemann, O.; Schüppstuhl, T. Configuration and Enablement of Vision Sensor Solutions Through a Combined Simulation Based Process Chain. In Proceedings of the Annals of Scientific Society for Assembly, Handling and Industrial Robotics, Garbsen, Germany, 20 December 2021. [Google Scholar]

- Lim, S.-J.; Leem, D.-S.; Park, K.-B.; Kim, K.-S.; Sul, S.; Na, K.; Lee, G.H.; Heo, C.-J.; Lee, K.-H.; Bulliard, X.; et al. Organic-on-silicon complementary metal–oxide–semiconductor colour image sensors. Sci. Rep. 2015, 5, 7708. [Google Scholar] [CrossRef] [PubMed]

- Imanbekova, M.; Saridag, A.M.; Kahraman, M.; Liu, J.; Caglayan, H.; Wachsmann-Hogiu, S. Complementary Metal-Oxide-Semiconductor-Based Sensing Platform for Trapping, Imaging, and Chemical Characterization of Biological Samples. ACS Appl. Opt. Mater. 2023, 1, 329–339. [Google Scholar] [CrossRef]

- Lesser, M. 3-Charge coupled device (CCD) image sensors. In High Performance Silicon Imaging, 1st ed.; Durini, D., Ed.; Woodhead Publishing: Cambridge, UK, 2014; Volume 1, pp. 78–97. [Google Scholar]

- Chen, H.; Cui, W. A comparative analysis between active structured light and multi-view stereo vision technique for 3D reconstruction of face model surface. Optik 2020, 206, 164190. [Google Scholar] [CrossRef]

- Karim, A.; Andersson, J.Y. Infrared detectors: Advances, challenges and new technologies. In Proceedings of the IOP Conference Series: Materials Science Engineering, Bandung, Indonesia, 8–10 March 2013. [Google Scholar]

- Askar, C.; Sternberg, H. Use of Smartphone Lidar Technology for Low-Cost 3D Building Documentation with iPhone 13 Pro: A Comparative Analysis of Mobile Scanning Applications. Geomatics 2023, 3, 563–579. [Google Scholar] [CrossRef]

- Cremons, D.R. The future of lidar in planetary science. Front. Remote Sens. 2022, 3, 1042460. [Google Scholar] [CrossRef]

- Khonina, S.N.; Kazanskiy, N.L.; Oseledets, I.V.; Nikonorov, A.V.; Butt, M.A. Synergy between Artificial Intelligence and Hyperspectral Imagining—A Review. Technologies 2024, 12, 163. [Google Scholar] [CrossRef]

- Hou, B.; Chen, Q.; Yi, L.; Sellin, P.; Sun, H.-T.; Wong, L.J.; Lui, X. Materials innovation and electrical engineering in X-ray detection. Nat. Rev. Electr. Eng. 2024, 1, 639–655. [Google Scholar] [CrossRef]

- Bhargava, A.; Sachdeva, A.; Sharma, K.; Alsharif, M.H.; Uthansakul, P.; Uthansakul, M. Hyperspectral imaging and its applications: A review. Heliyon 2024, 10, e33208. [Google Scholar] [CrossRef]

- Khan, M.A.; Sun, J.; Li, B.; Przybysz, A.; Kosel, J. Magnetic sensors-A review and recent technologies. Eng. Res. Express 2021, 3, 022005. [Google Scholar] [CrossRef]

- Goodman, D.S. Illumination in machine vision. Opt. Soc. Am. Annu. Meet. 1991, 1, WB2. [Google Scholar]

- Yan, M.T.; Surgenor, B.W. A Quantitative Study of Illumination Techniques for Machine Vision Based Inspection. In Proceedings of the International Manufacturing Science and Engineering Conference (MSEC), Corvallis, OR, USA, 13–17 June 2011. [Google Scholar]

- Kumar, V.; Sudheesh Kumar, C.P. Investigation of the influence of coloured illumination on surface texture features: A Machine vision approach. Measurement 2020, 152, 107297. [Google Scholar] [CrossRef]

- Chen, J.; Wang, M.; Hsia, C.-H. Artificial Intelligence and Machine Learning in Sensing and Image Processing. Sensors 2025, 25, 1870. [Google Scholar] [CrossRef] [PubMed]

- Huang, L.; Yao, C.; Zhang, L.; Luo, S.; Ying, F.; Ying, W. Enhancing computer image recognition with improved image algorithms. Sci. Rep. 2024, 14, 13709. [Google Scholar] [CrossRef] [PubMed]

- Huang, C.; Lim, C.-C.; Ming, C. Comparison of image processing algorithms and neural networks in machine vision inspection. Comput. Ind. Eng. 1992, 23, 105–108. [Google Scholar] [CrossRef]

- Lu, Y.; Duanmu, L.; Zhai, Z.; Wang, Z. Application and improvement of Canny edge-detection algorithm for exterior wall hollowing detection using infrared thermal images. Energy Build. 2022, 274, 112421. [Google Scholar] [CrossRef]

- Lynn, N.D.; Sourav, A.I.; Santoso, A.J. Implementation of Real-Time Edge Detection Using Canny and Sobel Algorithms. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Bristol, UK, 13 November 2021. [Google Scholar]

- Feng, Y.; Zhao, H.; Li, X.; Zhang, X.; Li, H. A multi-scale 3D Otsu thresholding algorithm for medical image segmentation. Digit. Signal Process. 2017, 60, 186–199. [Google Scholar] [CrossRef]

- Zhu, N.; Wang, G.; Yang, G.; Dai, W. A Fast 2D Otsu Thresholding Algorithm Based on Improved Histogram. In Proceedings of the 2009 Chinese Conference on Pattern Recognition, Nanjing, China, 4–6 November 2009. [Google Scholar]

- Bansal, M.; Kumar, M.; Kumar, M. 2D object recognition: A comparative analysis of SIFT, SURF and ORB feature descriptors. Multimed. Tools Appl. 2021, 80, 18839–18857. [Google Scholar] [CrossRef]

- Tareen, S.A.K.; Saleem, Z. A comparative analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018. [Google Scholar]

- Van Droogenbroeck, M.; Talbot, H. Fast computation of morphological operations with arbitrary structuring elements. Pattern Recognit. Lett. 1996, 17, 1451–1460. [Google Scholar] [CrossRef]

- Lee, Y.H. Algorithms for Mathematical Morphological Operations with Flat Top Structuring Elements. In Proceedings of the Applications of Digital Image Processing VIII, SPIE, San Diego, CA, USA, 20–22 August 1985. [Google Scholar]

- Gupta, A.; Sintorn, I.-M. Efficient high-resolution template matching with vector quantized nearest neighbour fields. Pattern Recognit. 2024, 151, 110386. [Google Scholar] [CrossRef]

- Bergamini, L.; Sposato, M.; Peruzzini, M.; Vezzani, R.; Pellicciari, M. Deep Learning-Based Method for Vision-Guided Robotic Grasping of Unknown Objects. In Proceedings of the 25th ISPE Inc. International Conference on Transdisciplinary Engineering, Modena, Italy, 6–9 July 2018; Volume 1, pp. 281–290. [Google Scholar]

- Walia, S. Light-operated On-chip Autonomous Vision Using Low-dimensional Material Systems. Adv. Mater. Technol. 2022, 7, 2101494. [Google Scholar] [CrossRef]

- Hunter, D.B. Machine Vision Techniques for High Speed Videography. In Proceedings of the High-Speed Photography, Videography, and Photonics II, SPIE, Bellingham, WA, USA, 1–2 November 1984. [Google Scholar]

- Are High-Resolution Event Cameras Really Needed? Available online: https://arxiv.org/abs/2203.14672 (accessed on 29 March 2022).

- Kazanskiy, N.L.; Khonina, S.N.; Butt, M.A. Transforming high-resolution imaging: A comprehensive review of advances in metasurfaces and metalenses. Mater. Today Phys. 2025, 50, 101628. [Google Scholar] [CrossRef]

- Cherian, A.K.; Poovammal, E. Classification of remote sensing images using CNN. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Bristol, UK, 13 November 2021. [Google Scholar]

- Firsov, N.; Myasnikov, E.; Lobanov, V.; Khabibullin, R.; Kazanskiy, N.; Khonina, S.; Butt, M.A.; Nikonorov, A. HyperKAN: Kolmogorov–Arnold Networks Make Hyperspectral Image Classifiers Smarter. Sensors 2024, 24, 7683. [Google Scholar] [CrossRef]

- Krichen, M. Convolutional Neural Networks: A Survey. Computers 2023, 12, 151. [Google Scholar] [CrossRef]

- Tulbure, A.-A.; Tulbure, A.-A.; Dulf, E.-H. A review on modern defect detection models using DCNNs–Deep convolutional neural networks. J. Adv. Res. 2022, 35, 33–48. [Google Scholar] [CrossRef] [PubMed]

- Lawrence, S.; Giles, C.L.; Tsoi, A.C.; Back, A.D. Face recognition: A convolutional neural-network approach. IEEE Trans. Neural Netw. 1997, 8, 98–113. [Google Scholar] [CrossRef] [PubMed]

- Sharma, N.; Jain, V.; Mishra, A. An Analysis Of Convolutional Neural Networks For Image Classification. Procedia Comput. Sci. 2018, 132, 377–384. [Google Scholar] [CrossRef]

- Benefits and Applications of AI-Powered Machine Vision. Available online: https://www.micropsi-industries.com/blog/benefits-and-applications-of-ai-powered-machine-vision (accessed on 29 March 2025).

- Van der Velden, B.H.M.; Kuijf, H.J.; Gilhuijs, K.G.A.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470. [Google Scholar] [CrossRef]

- Li, J.Q.; Dukes, P.V.; Lee, W.; Sarkis, M.; Vo-Dinh, T. Machine learning using convolutional neural networks for SERS analysis of biomarkers in medical diagnostics. J. Raman Spectrosc. 2022, 53, 2044–2057. [Google Scholar] [CrossRef]

- Lodhi, S.K.; Gill, A.Y.; Hussain, I. AI-Powered Innovations in Contemporary Manufacturing Procedures: An Extensive Analysis. Int. J. Multidiscip. Sci. Arts 2024, 3, 15–25. [Google Scholar] [CrossRef]

- Rashid, A.B.; Kausik, M.A.K. AI revolutionizing industries worldwide: A comprehensive overview of its diverse applications. Hybrid. Adv. 2024, 7, 100277. [Google Scholar] [CrossRef]

- Aggarwal, C.C. Outlier Analysis, 2nd ed.; Springer International Publishing: Cham, Switzerland, 2017; p. 481. [Google Scholar]

- Khalifa, M.; Albadawy, M. AI in diagnostic imaging: Revolutionising accuracy and efficiency. Comput. Methods Programs Biomed. Update 2024, 5, 100146. [Google Scholar] [CrossRef]

- Al-Antari, M.A. Artificial Intelligence for Medical Diagnostics—Existing and Future AI Technology! Diagnostics 2023, 13, 688. [Google Scholar] [CrossRef] [PubMed]

- AI in Logistics: Uncovering More Major Benefits and Use Cases. Available online: https://litslink.com/blog/ai-in-logistics-uncovering-more-major-benefits-and-use-cases (accessed on 31 March 2025).

- Ghonasgi, K.; Kaveny, K.J.; Langlois, D.; Sigurðarson, L.D.; Swift, T.A.; Wheeler, J.; Young, A.J. The case against machine vision for the control of wearable robotics: Challenges for commercial adoption. Sci. Robot. 2025, 10, eadp5005. [Google Scholar] [CrossRef] [PubMed]

- Mercedes-Benz Accelerates AI and Robotics at Berlin-Marienfelde, Transforming Digital Production with Humanoid Robots and Next-Generation Automation Technologies. Available online: https://www.automotivemanufacturingsolutions.com/robotics/mercedes-benz-advances-ai-and-robotics-in-production/46909.article (accessed on 31 March 2025).

- BMW Taps Humanoid Startup Figure to Take on Tesla’s Robot. Available online: https://www.reuters.com/business/autos-transportation/bmw-taps-humanoid-startup-figure-take-teslas-robot-2024-01-18/ (accessed on 31 March 2025).

- How Autonomous Robots are Transforming Logistics. Available online: https://www.reisopack.com/en/how-autonomous-robots-are-transforming-logistics/ (accessed on 31 March 2025).

- Tan, H. Line inspection logistics robot delivery system based on machine vision and wireless communication. In Proceedings of the 2020 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC), Chongqing, China, 29–30 October 2020. [Google Scholar]

- Valero, S.; Martinez, J.C.; Montes, A.M.; Marin, C.; Bolanos, R.; Alvarez, D. Machine vision-assisted design of end effector pose in robotic mixed depalletizing of heterogeneous cargo. Sensors 2025, 25, 1137. [Google Scholar] [CrossRef] [PubMed]

- Fang, J.; Lu, X.; Feng, X.; Zhang, Y. Research into an intelligent logistics handling robot based on front-end machine vision. In Proceedings of the 2024 5th International conference on computer engineering and application (ICCEA), Hangzhou, China, 12–14 April 2024. [Google Scholar]

- Sharma, K.; Shivandu, S.K. Integrating artificial intelligence and Internet of Things (IoT) for enhanced crop monitoring and management in precision agriculture. Sens. Int. 2024, 5, 100292. [Google Scholar] [CrossRef]

- Guebsi, R.; Mami, S.; Chokmani, K. Drones in Precision Agriculture: A Comprehensive Review of Applications, Technologies, and Challenges. Drones 2024, 8, 686. [Google Scholar] [CrossRef]

- Profili, A.; Magherini, R.; Servi, M.; Spezia, F.; Gemmiti, D.; Volpe, Y. Machine vision system for automatic defect detection of ultrasound probes. Int. J. Adv. Manuf. Technol. 2024, 135, 3421–3435. [Google Scholar] [CrossRef]

- Li, L.; Li, S.; Wang, W.; Zhang, J.; Sun, Y.; Deng, Q.; Zheng, T.; Lu, J.; Gao, W.; Yang, M.; et al. Adaptative machine vision with microsecond-level accurate perception beyond human retina. Nat. Commun. 2024, 15, 6261. [Google Scholar] [CrossRef]

- Dodda, A.; Jayachandran, D.; Subbulakshmi Radhakrishnan, S.; Pannone, A.; Zhang, Y.; Trainor, N.; Redwing, J.M.; Das, S. Bioinspired and Low-Power 2D Machine Vision with Adaptive Machine Learning and Forgetting. ACS Nano 2022, 16, 20010–20020. [Google Scholar] [CrossRef]

- Ibn-Khedher, H.; Laroui, M.; Mabrouk, M.B.; Moungla, H.; Afifi, H.; Oleari, A.N. Edge Computing Assisted Autonomous Driving Using Artificial Intelligence. In Proceedings of the 2021 International Wireless Communications and Mobile Computing (IWCMC), Beijing, China, 28 June–2 July 2021. [Google Scholar]

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646. [Google Scholar] [CrossRef]

- Verde Romero, D.A.; Villalvazo Laureano, E.; Jiménez Betancourt, R.O.; Navarro Álvarez, E. An open source IoT edge-computing system for monitoring energy consumption in buildings. Results Eng. 2024, 21, 101875. [Google Scholar] [CrossRef]

- Manogaran, N.; Nandagopal, M.; Abi, N.E.; Seerangan, K.; Balusamy, B.; Selvarajan, S. Integrating meta-heuristic with named data networking for secure edge computing in IoT enabled healthcare monitoring system. Sci. Rep. 2024, 14, 21532. [Google Scholar] [CrossRef] [PubMed]

- Zacchigna, F.G. Methodology for CNN Implementation in FPGA-Based Embedded Systems. IEEE Embed. Syst. Lett. 2023, 15, 85–88. [Google Scholar] [CrossRef]

- Vasile, C.-E.; Ulmămei, A.-A.; Bîră, C. Image Processing Hardware Acceleration—A Review of Operations Involved and Current Hardware Approaches. J. Imaging 2024, 10, 298. [Google Scholar] [CrossRef]

- Sailesh, M.; Selvakumar, K.; Narayanan, P. A novel framework for deployment of CNN models using post-training quantization on microcontroller. Microprocess. Microsyst. 2022, 94, 104634. [Google Scholar]

- Canpolat Şahin, M.; Kolukısa Tarhan, A. Evaluation and Selection of Hardware and AI Models for Edge Applications: A Method and A Case Study on UAVs. Appl. Sci. 2025, 15, 1026. [Google Scholar] [CrossRef]

- Wu, L.; Xiao, G.; Huang, D.; Zhang, X.; Ye, D.; Weng, H. Edge Computing-Based Machine Vision for Non-Invasive and Rapid Soft Sensing of Mushroom Liquid Strain Biomass. Agronomy 2025, 15, 242. [Google Scholar] [CrossRef]

- Akundi, A.; Reyna, M. A Machine Vision Based Automated Quality Control System for Product Dimensional Analysis. Procedia Comput. Sci. 2021, 185, 127–134. [Google Scholar] [CrossRef]

- Sioma, A. Vision System in Product Quality Control Systems. Appl. Sci. 2023, 13, 751. [Google Scholar] [CrossRef]

- Ivaschenko, A.; Avsievich, V.; Reznikov, Y.; Belikov, A.; Turkova, V.; Sitnikov, P.; Surnin, O. Intelligent Machine Vision Implementation for Production Quality Control. In Proceedings of the 2023 34th Conference of Open Innovations Association (FRUCT), Riga, Latvia, 15–17 November 2023. [Google Scholar]

- Xiao, Z.; Wang, J.; Han, L.; Guo, S.; Cui, Q. Application of Machine Vision System in Food Detection. Front. Nutr. 2022, 9, 888245. [Google Scholar] [CrossRef] [PubMed]

- Zhao, Z.; Wang, R.; Liu, M.; Bai, L.; Sun, Y. Application of machine vision in food computing: A review. Food Chem. 2025, 463, 141238. [Google Scholar] [CrossRef] [PubMed]

- Tzampazaki, M.; Zografos, C.; Vrochidou, E.; Papakostas, G.A. Machine Vision—Moving from Industry 4.0 to Industry 5.0. Appl. Sci. 2024, 14, 1471. [Google Scholar] [CrossRef]

- Gao, X. Artificial intelligence applied to supermarket intelligent replenishment robot based on machine vision. In Proceedings of the 2023 Asia-Europe Conference on Electronics, Data Processing and Informatics (ACEDPI), Prague, Czech Republic, 17–19 April 2023. [Google Scholar]

- Yang, R.; Jiang, Q. Research on the application of machine vision technology in industrial automation assembly line. In Proceedings of the International Conference on Mechatronics and Intelligent Control (ICMIC 2024), Wuhan, China, 20–22 September 2024. [Google Scholar]

- Ali, Y.; Shah, S.W.; Arif, A.; Tlija, M.; Siddiqi, M.R. Intelligent Framework Design for Quality Control in Industry 4.0. Appl. Sci. 2024, 14, 7726. [Google Scholar] [CrossRef]

- Rana, M.; Bhushan, M. Machine learning and deep learning approach for medical image analysis: Diagnosis to detection. Multimed. Tools Appl. 2023, 82, 26731–26769. [Google Scholar] [CrossRef]

- Pinto-Coelho, L. How Artificial Intelligence Is Shaping Medical Imaging Technology: A Survey of Innovations and Applications. Bioengineering 2023, 10, 1435. [Google Scholar] [CrossRef]

- Hassan, C.; Spadaccini, M.; Iannone, A.; Maselli, R.; Jovani, M.; Chandrasekar, V.T.; Antonelli, G.; Yu, H.; Areia, M.; Dinis-Ribeiro, M.; et al. Performance of artificial intelligence in colonoscopy for adenoma and polyp detection: A systematic review and meta-analysis. Gastrointest. Endosc. 2021, 93, 77–85. [Google Scholar] [CrossRef]

- Van Leeuwen, K.G.; Schalekamp, S.; Rutten, M.J.C.M.; van Ginneken, B.; de Rooij, M. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur. Radiol. 2021, 31, 3797–3804. [Google Scholar] [CrossRef]

- Penza, V.; De Momi, E.; Enayati, N.; Chupin, T.; Ortiz, J.; Mattos, L.S. EnViSoRS: Enhanced Vision System for Robotic Surgery. A User-Defined Safety Volume Tracking to Minimize the Risk of Intraoperative Bleeding. Front. Robot. AI 2017, 4, 00015. [Google Scholar] [CrossRef]

- Rosen, C.A. Machine Vision and Robotics: Industrial Requirements. In Computer Vision and Sensor-Based Robots, 1st ed.; Doll, G.G., Rossol, L., Eds.; Springer: New York, NY, USA, 1979; Volume 1, pp. 3–22. [Google Scholar]

- The Use of Machine Vision for Robot Guidance Offers New Possibilities. Available online: https://www.qualitymag.com/articles/97057-the-use-of-machine-vision-for-robot-guidance-offers-new-possibilities (accessed on 1 April 2025).

- Das, S.; Das, I.; Shaw, R.N.; Ghosh, A. Chapter Seven-Advance machine learning and artificial intelligence applications in service robot. Artif. Intell. Future Gener. Robot. 2021, 1, 83–91. [Google Scholar]

- Do, Y.; Kim, G.; Kim, J. Omnidirectional vision system developed for a home service robot. In Proceedings of the 2007 14th International Conference on Mechatronics and Machine Vision in Practice, Xiamen, China, 4–6 December 2007. [Google Scholar]

- Grigorescu, S.M.; Prenzel, O.; Gräser, A. Model driven developed machine vision system for service robotics. In Proceedings of the 2010 12th International Conference on Optimization of Electrical and Electronic Equipment, Brasov, Romania, 20–22 May 2010. [Google Scholar]

- Wang, L.; Schmidt, B.; Nee, A.Y.C. Vision-guided active collision avoidance for human-robot collaborations. Manuf. Lett. 2013, 1, 5–8. [Google Scholar] [CrossRef]

- Wei, Z.; Tian, F.; Qiu, Z.; Yang, Z.; Zhan, R.; Zhan, J. Research on Machine Vision-Based Control System for Cold Storage Warehouse Robots. Actuators 2023, 12, 334. [Google Scholar] [CrossRef]

- Abba, S.; Bizi, A.M.; Lee, J.-A.; Bakouri, S.; Crespo, M.L. Real-time object detection, tracking, and monitoring framework for security surveillance systems. Heliyon 2024, 10, e34922. [Google Scholar] [CrossRef] [PubMed]

- Attard, L.; Farrugia, R.A. Vision based surveillance system. In Proceedings of the 2011 IEEE EUROCON-International Conference on Computer as a Tool, Lisbon, Portugal, 27–29 April 2011. [Google Scholar]

- Nurnoby, M.F.; Helmy, T. A Real-Time Deep Learning-based Smart Surveillance Using Fog Computing: A Complete Architecture. Procedia Comput. Sci. 2023, 218, 1102–1111. [Google Scholar] [CrossRef]

- Nigam, R.; Kundu, A.; Yu, X.; Saniie, J. Machine Vision Surveillance System-Artificial Intelligence For COVID-19 Norms. In Proceedings of the 2022 IEEE International Conference on Electro Information Technology (eIT), Mankato, MN, USA, 19–21 May 2022. [Google Scholar]

- Khan, H.; Thakur, J.S. Smart traffic control: Machine learning for dynamic road traffic management in urban environments. Multimed. Tools Appl. 2024, 84, 10321–10345. [Google Scholar] [CrossRef]

- Zhang, Y. Safety Management of Civil Engineering Construction Based on Artificial Intelligence and Machine Vision Technology. Adv. Civ. Eng. 2021, 2021, 1–14. [Google Scholar] [CrossRef]

- Ghazal, S.; Munir, A.; Qureshi, W.S. Computer vision in smart agriculture and precision farming: Techniques and applications. Artif. Intell. Agric. 2024, 13, 64–83. [Google Scholar] [CrossRef]

- Shin, J.; Mahmud, M.S.; Rehman, T.U.; Ravichandran, P.; Heung, B.; Chang, Y.K. Trends and Prospect of Machine Vision Technology for Stresses and Diseases Detection in Precision Agriculture. AgriEngineering 2023, 5, 20–39. [Google Scholar] [CrossRef]

- Kim, Y.; Glenn, D.M.; Park, J.; Ngugi, H.K.; Lehman, B.L. Hyperspectral image analysis for water stress detection of apple trees. Comput. Electron. Agric. 2011, 77, 155–160. [Google Scholar] [CrossRef]

- Paes de Melo, B.; Carpinetti, P.d.A.; Fraga, O.T.; Rodrigues-Silva, P.L.; Fioresi, V.S.; de Camargos, L.F.; Flores da Silva Ferreira, M. Abiotic Stresses in Plants and Their Markers: A Practice View of Plant Stress Responses and Programmed Cell Death Mechanisms. Plants 2022, 11, 1100. [Google Scholar] [CrossRef]

- Satheeshkumar, S.K.; Paolini, C.; Sarkar, M. Subsurface Heat stress detection in plants using machine learning regression models. In Proceedings of the 2023 International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS), Valencia, Spain, 19–22 June 2023. [Google Scholar]

- Tian, Z.; Ma, W.; Yang, Q.; Duan, F. Application status and challenges of machine vision in plant factory—A review. Inf. Process. Agric. 2022, 9, 195–211. [Google Scholar] [CrossRef]

- Walsh, J.J.; Mangina, E.; Negrão, S. Advancements in Imaging Sensors and AI for Plant Stress Detection: A Systematic Literature Review. Plant Phenomics 2024, 6, 0153. [Google Scholar] [CrossRef]

- Foucher, P.; Revollon, P.; Vigouroux, B.; Chassériaux, G. Morphological Image Analysis for the Detection of Water Stress in Potted Forsythia. Biosyst. Eng. 2004, 89, 131–138. [Google Scholar] [CrossRef]

- Chung, S.; Breshears, L.E.; Yoon, J.-Y. Smartphone near infrared monitoring of plant stress. Comput. Electron. Agric. 2018, 154, 93–98. [Google Scholar] [CrossRef]

- Ghosal, S.; Blystone, D.; Singh, A.K.; Ganapathysubramanian, B.; Singh, A.; Sarkar, S. An explainable deep machine vision framework for plant stress phenotyping. Proc. Natl. Acad. Sci. USA 2018, 115, 4613–4618. [Google Scholar] [CrossRef]

- Karthickmanoj, R.; Sasilatha, T.; Padmapriya, J. Automated machine learning based plant stress detection system. Mater. Today Proc. 2021, 47, 1887–1891. [Google Scholar] [CrossRef]

- De Lucia, G.; Lapegna, M.; Romano, D. Towards explainable AI for hyperspectral image classification in Edge Computing environments. Comput. Electr. Eng. 2022, 103, 108381. [Google Scholar] [CrossRef]

- Pfenning, A.; Yan, X.; Gitt, S.; Fabian, J.; Lin, B.; Witt, D.; Afifi, A.; Azem, A.; Darcie, A.; Wu, J.; et al. A perspective on silicon photonic quantum computing with spin qubits. In Proceedings of the Silicon Photonics XVII, San Francisco, CA, USA, 22–24 February 2022. [Google Scholar]

- El Srouji, L.; Krishnan, A.; Ravichandran, R.; Lee, Y.; On, M.; Xiao, X.; Ben Yoo, S.J. Photonic and optoelectronic neuromorphic computing. APL Photonics 2022, 7, 051101. [Google Scholar] [CrossRef]

- Yang, W.; Wei, Y.; Wei, H.; Chen, Y.; Huang, G.; Li, X.; Lo, R.; Yao, N.; Waang, X.; Gu, X.; et al. Survey on Explainable AI: From Approaches, Limitations and Applications Aspects. Hum.-Cent. Intell. Syst. 2023, 3, 161–188. [Google Scholar] [CrossRef]

- Przybył, K. Explainable AI: Machine Learning Interpretation in Blackcurrant Powders. Sensors 2024, 24, 3198. [Google Scholar] [CrossRef]

- Liu, G.; Zhang, J.; Chan, A.B.; Hsiao, J.H. Human attention guided explainable artificial intelligence for computer vision models. Neural Netw. 2024, 177, 106392. [Google Scholar] [CrossRef]

- Shchanikov, S.; Bordanov, I.; Kucherik, A.; Gryaznov, E.; Mikhaylov, A. Neuromorphic Analog Machine Vision Enabled by Nanoelectronic Memristive Devices. Appl. Sci. 2023, 13, 13309. [Google Scholar] [CrossRef]

- Wang, H.; Sun, B.; GE, S.S.; Su, J.; Jin, M.L. On non-von Neumann flexible neuromorphic vision sensors. Npj Flex. Electron. 2024, 8, 28. [Google Scholar] [CrossRef]

- Imran, A.; He, X.; Tabassum, H.; Zhu, Q.; Dastgeer, G.; Liu, J. Neuromorphic Vision Sensor driven by Ferroelectric HfAlO. Mater. Today Nano 2024, 26, 100473. [Google Scholar] [CrossRef]

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for neuromorphic computing algorithms and applications. Nat. Comput. Sci. 2022, 2, 10–19. [Google Scholar] [CrossRef] [PubMed]

- Subramaniam, A. A neuromorphic approach to image processing and machine vision. In Proceedings of the 2017 Fourth International Conference on Image Information Processing (ICIIP), Shimla, India, 21–23 December 2017. [Google Scholar]

- Kösters, D.J.; Kortman, B.A.; Boybat, I.; Ferro, E.; Dolas, S.; de Austri, R.R.; Kwisthout, J.; Hilgenkamp, H.; Rasing, T.; Riel, H.; et al. Benchmarking energy consumption and latency for neuromorphic computing in condensed matter and particle physics. APL Mach. Learn. 2023, 1, 016101. [Google Scholar] [CrossRef]

- Wang, Y.; Wen, W.; Song, L.; Li, H.H. Classification accuracy improvement for neuromorphic computing systems with one-level precision synapses. In Proceedings of the 2017 22nd Asia and South Pacific Design Automation Conference (ASP-DAC), Chiba, Japan, 16–19 January 2017. [Google Scholar]

- Ji, Y.; Zhang, Y.; Li, S.; Chi, P.; Jiang, C.; Qu, P. NEUTRAMS: Neural network transformation and co-design under neuromorphic hardware constraints. In Proceedings of the 2016 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), Taipei, Taiwan, 15–19 October 2016. [Google Scholar]

- Ji, Y.; Wang, L.; Long, Y.; Wang, J.; Zheng, H.; Yu, Z.G.; Zhang, Y.-W.; Ang, K.-W. Ultralow energy adaptive neuromorphic computing using reconfigurable zinc phosphorus trisulfide memristors. Nat. Commun. 2025, 16, 6899. [Google Scholar] [CrossRef]

- Bai, Q.; Hu, X. Superposition-enhanced quantum neural network for multi-class image classification. Chin. J. Phys. 2024, 89, 378–389. [Google Scholar] [CrossRef]

- Ruiz, F.J.R.; Laakkonen, T.; Bausch, J.; Balog, M.; Barekatain, M.; Heras, F.J.H.; Novikov, A.; Fitzpatrick, N.; Romera-Paredes, B.; van de Wetering, J.; et al. Quantum circuit optimization with AlphaTensor. Nat. Mach. Intell. 2025, 7, 374–385. [Google Scholar] [CrossRef]

- Sciorilli, M.; Borges, L.; Patti, T.L.; García-Martín, D.; Camilo, G.; Anandkumar, A.; Aolita, L. Towards large-scale quantum optimization solvers with few qubits. Nat. Commun. 2025, 16, 476. [Google Scholar] [CrossRef]

- Blekos, K.; Brand, D.; Ceschini, A.; Chou, C.-H.; Li, R.-H.; Pandya, K.; Summer, A. A review on Quantum Approximate Optimization Algorithm and its variants. Phys. Rep. 2024, 1068, 1–66. [Google Scholar] [CrossRef]

- Fernandes, A.O.; Moreira, L.F.E.; Mata, J.M. Machine vision applications and development aspects. In Proceedings of the 2011 9th IEEE International Conference on Control and Automation (ICCA), Santiago, Chile, 19–21 December 2011. [Google Scholar]

- Mohaideen Abdul Kadhar, K.; Anand, G. Challenges in Machine Vision System. In Industrial Vision Systems with Raspberry Pi, 1st ed.; Asadi, F., Ed.; Apress: New York, NY, USA, 2024; Volume 4, pp. 73–86. [Google Scholar]

- Waelen, R.A. The ethics of computer vision: An overview in terms of power. AI Ethics 2024, 4, 353–362. [Google Scholar] [CrossRef]

- Zhang, B.H.; Lemoine, B.; Mitchell, M. Mitigating unwanted biases with adversarial learning. In Proceedings of the AIES’ 18: 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 2–3 February 2018. [Google Scholar]

- Hardt, M.; Price, E.; Srebro, N. Equality of opportunity in supervised learning. In Proceedings of the 30th International conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 3323–3331. [Google Scholar]

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A survey on bias and fairness in machine learning. ACM Comput. Surv. 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Hanna, M.G.; Pantanowitz, L.; Jackson, B.; Palmer, O.; Visweswaran, S.; Pantanowitz, J.; Deebajah, M.; Rashidi, H.H. Ethical and Bias Considerations in Artificial Intelligence/Machine Learning. Mod. Pathol. 2025, 38, 100686. [Google Scholar] [CrossRef]

- Kashyapa, R. How Expensive Are Machine Vision Solutions? Available online: https://qualitastech.com/image-acquisition/how-expensive-is-machine-vision-solution/ (accessed on 28 March 2025).

- Würschinger, H.; Mühlbauer, M.; Winter, M.; Engelbrecht, M.; Hanenkamp, N. Implementation and potentials of a machine vision system in a series production using deep learning and low-cost hardware. Procedia CIRP 2020, 90, 611–616. [Google Scholar] [CrossRef]

- Using Artificial Intelligence in Machine Vision. Available online: https://www.cognex.com/what-is/edge-learning/using-ai-in-machine-vision (accessed on 28 March 2025).

- Malik, P.; Pathania, M.; Rathaur, V.K. Overview of artificial intelligence in medicine. J. Fam. Med. Prim. Care 2019, 8, 2328. [Google Scholar] [CrossRef]

- Kitaguchi, D.; Takeshita, N.; Hasegawa, H.; Ito, M. Artificial intelligence-based computer vision in surgery: Recent advances and future perspectives. Ann. Gastroenterol. Surg. 2022, 6, 29–36. [Google Scholar] [CrossRef]

- Sinha, S.; Lee, Y.M. Challenges with developing and deploying AI models and applications in industrial systems. Discov. Artif. Intell. 2024, 4, 55. [Google Scholar] [CrossRef]

- Christensen, D.V.; Dittmann, R.; Linares-Barranco, B.; Sebastian, A.; Gallo, M.L.; Redaelli, A.; Slesazeck, S.; Mikolajick, T.; Spiga, S.; Menzel, S.; et al. 2022 roadmap on neuromorphic computing and engineering. Neuromorph. Comput. Eng. 2022, 2, 022501. [Google Scholar] [CrossRef]

| Sensor Type | Function | Key Characteristics | Applications |

|---|---|---|---|

| CMOS (Complementary Metal-Oxide-Semiconductor) Sensor [48,49] | Converts light into electrical signals | Low power consumption, high-speed processing, cost-effective | General MV, industrial inspection, robotics |

| CCD (Charge-Coupled Device) Sensor [50] | Captures high-quality images with low noise | High image quality, low noise, higher power consumption | High-precision measurement, scientific imaging |

| 3D Sensors (Time-of-Flight, Structured Light, Stereo Vision) [51] | Captures depth information for 3D imaging | Measures object distance, accurate depth perception | Object recognition, bin picking, gesture recognition |

| Infrared (IR) Sensors [52] | Detects heat signatures and thermal variations | Works in low light, captures non-visible wavelengths | Night vision, defect detection, surveillance |

| LIDAR (Light Detection and Ranging) Sensors [53,54,55] | Measures distance using laser reflection | High accuracy, long-range detection | Autonomous vehicles, terrain mapping, 3D scanning |

| X-ray Sensors [56] | Penetrates objects to capture internal structure | Non-destructive testing, detects internal defects | Medical imaging, baggage scanning, industrial inspection |

| Hyperspectral Sensors [57] | Captures data across multiple wavelengths | Identifies materials, chemical composition analysis | Agriculture, pharmaceutical inspection, food quality control |

| Magnetic Sensors [58] | Detects metallic components in objects | Measures magnetic fields, high sensitivity | Industrial automation, position sensing, defect detection |

| Lighting Type | Description |

|---|---|

| Fibre Optic | Delivers light from halogen, tungsten-halogen, or xenon sources and provides bright, shapeable, and focused illumination. |

| LED Lights | Widely adopted for their fast response, ability to pulse or strobe (which freezes motion), flexible mounting options, long operational life, and consistent light output. |

| Dome Lights | Deliver omnidirectional illumination that minimizes glare and reflections, making them well suited for inspecting parts with complex or curved geometries. |

| Telecentric Lighting | Best suited for high-precision tasks like edge detection and flaw identification on shiny or reflective surfaces, where measurement accuracy is critical. |

| Diffused Light | Employs scattering filters to soften illumination, producing uniform lighting and reducing hotspots or uneven brightness on reflective materials. |

| Direct Light | Delivers light along the same optical path as the camera, ensuring direct illumination. |

| Characteristic | Image Processing Algorithms | AI Techniques (Neural Networks, DL) |

|---|---|---|

| Approach | Rule-based, deterministic processing | Data-driven, adaptive learning |

| Common Methods |

| |

| Development Time | Shorter, requires manual feature design | Longer, involves extensive training |

| Accuracy | Moderate, depends on predefined rules | Higher, learns complex patterns |

| Flexibility | Limited, needs manual adjustments | High, adapts to varying conditions |

| Computational Complexity | Lower, efficient for simple tasks | Higher, requires more processing power |

| Training Requirement | None, operates on fixed rules | Requires large annotated datasets |

| Performance in Complex Environments | Struggles with variations in lighting, noise, and occlusion | Robust against variations and distortions |

| Interpretability | High, decisions are explainable | Lower, functions as a “black box” |

| Adaptability | Low, requires reprogramming for new tasks | High, generalizes across tasks |

| Real-Time Processing | Faster, suitable for immediate analysis | Slower, depends on hardware optimization |

| Aspect | Classical CNNs | Neuromorphic (Spiking Neural Networks) | Trade-Off/Remarks |

|---|---|---|---|

| Latency | High (batch processing; ms–s scale) | Ultra-low (event-driven; µs–ms scale) [164] | Neuromorphic excels in real-time scenarios (e.g., drones, robotics). |

| Power consumption | Moderate–High (GPU/TPU intensive) | Very low (event-driven, sparse coding) [164] | Neuromorphic favored for IoT/edge devices with energy constraints. |

| Accuracy | Mature, state-of-the-art benchmarks | Emerging; often lags behind CNNs on complex datasets [165] | CNNs still outperform on accuracy, but gap is narrowing. |

| Hardware support | Widely available (GPU/TPU, CPUs) | Limited (Loihi, TrueNorth, SpiNNaker) [166] | Neuromorphic hardware is still niche and less accessible. |

| Adaptability | Strong with large training data | Good for temporal/event data, but less robust to large-scale supervised tasks [167] | Hybrid architectures may combine strengths. |

| Criterion | Edge Processing | Cloud Processing | Trade-Off/Remarks |

|---|---|---|---|

| Latency | Very low, real time on device | Higher, due to network delays | Edge essential for safety-critical tasks (e.g., autonomous driving). |

| Computational power | Limited (mobile CPUs, TPUs, FPGAs) | Virtually unlimited in datacenters | Cloud supports deep and complex models. |

| Energy use | Device power drain unless optimized | Energy offloaded to datacenters | Edge energy-efficient only with tailored hardware. |

| Privacy & security | Data remains local, higher privacy | Requires data transfer, higher risks | Edge aligns with GDPR/healthcare compliance. |

| Scalability | Limited by device capacity | Scales easily across many users/devices | Cloud better for global-scale analytics. |

| Cost | Higher upfront device hardware costs | Lower device cost, ongoing service fees | Best choice depends on deployment scale. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Khonina, S.N.; Kazanskiy, N.L.; Oseledets, I.V.; Khabibullin, R.M.; Nikonorov, A.V. Eyes of the Future: Decoding the World Through Machine Vision. Technologies 2025, 13, 507. https://doi.org/10.3390/technologies13110507

Khonina SN, Kazanskiy NL, Oseledets IV, Khabibullin RM, Nikonorov AV. Eyes of the Future: Decoding the World Through Machine Vision. Technologies. 2025; 13(11):507. https://doi.org/10.3390/technologies13110507

Chicago/Turabian StyleKhonina, Svetlana N., Nikolay L. Kazanskiy, Ivan V. Oseledets, Roman M. Khabibullin, and Artem V. Nikonorov. 2025. "Eyes of the Future: Decoding the World Through Machine Vision" Technologies 13, no. 11: 507. https://doi.org/10.3390/technologies13110507

APA StyleKhonina, S. N., Kazanskiy, N. L., Oseledets, I. V., Khabibullin, R. M., & Nikonorov, A. V. (2025). Eyes of the Future: Decoding the World Through Machine Vision. Technologies, 13(11), 507. https://doi.org/10.3390/technologies13110507